Abstract

Frame prediction in animated videos is challenging because animated scenes are complex and dynamic. To improve the playback frame rate of animated videos, a convolutional neural network (CNN) is combined with a convolutional gated recurrent unit (ConvGRU) to refine the synthesis flow used for frame prediction. The results showed that the average prediction accuracy of the proposed model was 99.64%, indicating a good training effect. The peak signal-to-noise ratios (PSNR) on the three datasets were 31.26, 36.63, and 22.15 dB, respectively, and the structural similarities (SSIM) were 0.958, 0.886, and 0.813, respectively. The maximum Learned Perceptual Image Patch Similarity (LPIPS) of the proposed model was 0.144. These results indicate that the model performs well in prediction accuracy and visual quality and can capture complex dynamics and fine details in animated scenes. The study provides technical support for improving the accuracy of frame prediction in animated videos, which will help promote the intelligent development of animation production.

1 Introduction

Video prediction uses a given sequence of consecutive frames to predict future video frames. Animation video frame prediction plays a crucial role in applications such as computer-generated animation, video game development, and virtual reality. The ability to accurately predict future frames enhances the visual quality and realism of animation content. However, due to the complexity and dynamism of animation, predicting accurate frames remains a challenging task [1]. The convolutional neural network (CNN), with its excellent feature extraction and pattern recognition capabilities, can effectively capture dynamic changes and temporal relationships in animation video recognition tasks. In deep learning, the gated recurrent unit (GRU) has achieved significant results in image processing and sequence data modeling. ConvGRU combines CNN and GRU and can effectively capture spatio-temporal dependencies and spatial information in video sequences, thereby generating more accurate and visually coherent animation frames [2]. For complex scenes, traditional video frame prediction methods may not accurately capture the details and complex actions in the scene [3]. For example, when a scene contains a large number of moving objects or a complex background, traditional methods may not be able to predict the next frame effectively [4]. Therefore, to avoid the need for too many reference frames, a motion estimation (ME) network is designed based on CNN, and a ConvGRU fine-grained synthesis flow algorithm is proposed. By combining the reverse distortion algorithm and the bi-linear interpolation algorithm, the specific pixel values in the extracted video frames are calculated to obtain high-precision predicted frames.

The research mainly includes four parts. The first part gives an overview of video frame prediction and ConvGRU. The second part presents animation video frame prediction based on the ConvGRU fine-grained synthesis flow algorithm. The third part reports the animation video frame prediction results based on ConvGRU fine-grained synthesis flow. The fourth part concludes the study.

2 Related works

Researchers have proposed various methods for video frame prediction and have achieved certain results. Xu et al. developed a motion-aware future frame prediction method for video anomaly detection that incorporated saliency perception to avoid an imbalanced distribution of information between the video foreground and background. This method improved the ability to represent moving targets. The results showed that the network had certain advantages [5]. Hassan et al. used long short-term memory (LSTM) and generative adversarial networks to locate subjects and analyze motion paths in order to predict future human motion trajectories. The results showed that the method reduced displacement error by 41% [6]. Aslam et al. developed an auto-encoder based on deep multiplicative attention to detect anomalies in videos. The context vector determined the output of the decoder, and the global attention mechanism participated in the weighted calculation. The results showed that the method improved the running time by 0.015 s [7]. RS combined LSTM and ResNeXt-50 in a deepfake detection algorithm to counter video morphing attacks, using the networks to extract complex features. The results showed that this method had high detection accuracy [8].

ConvGRU has been widely applied in fields such as image processing and natural language processing, and many scholars have studied it extensively. Sreeja and Kovoor focused on the precise detection of suspicious events in surveillance videos. A multi-layer CNN and a stacked bidirectional GRU extracted sequence-level and frame-level features, respectively, which improved the recognition rate. The results showed that the model was effective and generalized well [9]. To achieve multi-agent micro-grid energy management, Afrasiabi et al. used an accelerated alternating direction method of multipliers together with a ConvGRU-based forecaster to predict the parameters required by the intelligent agents, searching for the optimal working point and enhancing the convergence of the distributed algorithm. The results indicated that the method had good performance [10]. Xu et al. designed an AM-ConvGRU model that incorporated channel attention blocks for predicting typhoon paths, extracting nonlinear three-dimensional features of typhoons with complex high-dimensional attributes. The results showed that the model had good prediction accuracy [11]. Zhang et al. proposed a ConvGRU spatio-temporal prediction model to extract glacier velocity and analyze its past and present spatio-temporal variations. The fluctuations in the glacier velocity time series data were captured. The results showed that the model had high accuracy [12]. Tian et al. developed a GA-ConvGRU model for precipitation nowcasting that captured multi-modal and skewed intensity distributions and generated more realistic and accurate extrapolations. The results showed that the model had certain applicability [13].

In summary, current research on ConvGRU mainly focuses on the improvement methods of the model, and the modeling ability for long-term dependency relationships still needs to be improved. Given the advantages of ConvGRU in processing sequence data, its future application prospects are still very broad, especially in the practical application of video analysis. Therefore, further exploring the research potential and application prospects of ConvGRU has positive impacts on the field of deep learning.

3 Animation video frame prediction based on ConvGRU fine-grained synthesis flow

The research first designs an ME network based on CNN to address the problems of a single search direction and a limited reference range. Then, to ensure that high-precision prediction frames are obtained, the ConvGRU fine-grained synthesis flow algorithm is proposed. The reverse distortion algorithm and the bi-linear interpolation algorithm are combined with it to reduce the computational complexity.

3.1 CNN-based ME network

Animation video is a media form that simulates motion by playing static images quickly and continuously. However, producing high-quality animated videos requires a significant amount of time and resources, especially when drawing each frame. To improve the production efficiency and reduce the cost of animated videos, the efficiency of frame prediction for animated videos needs to be improved. Animation video frame prediction refers to predicting the next frame based on the preceding known frames [14]. To address the excessive number of reference frames required for video frame prediction and the ME process, a mathematical model of animation video frame prediction is constructed, as shown in Eq. (1).

where

Figure 1: Schematic diagram of the animation video frame prediction network flow.

In Figure 1, the ME network module extracts the inter-frame temporal content information of the reference frames. Motion residual calculation and synthesis flow calculation are then combined to complete the ConvGRU fine-grained synthesis flow algorithm. Finally, the predicted frame is obtained with the reverse distortion algorithm. Inter-frame prediction uses the correlation between video frames to remove temporal redundancy and generally includes two steps: ME and motion compensation. The main task of ME is to find, in a historical reference frame, the optimal matching block for the macro block currently being encoded. After finding the optimal matching block, ME outputs a motion vector, which is the position coordinate of the reference block relative to the current block [15]. The ME is shown in Figure 2.

Figure 2: ME principle diagram.
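The block-matching step described above can be illustrated with a minimal sketch. It assumes an exhaustive search with a sum-of-absolute-differences cost; the function name `block_match`, the block size, and the search range are hypothetical choices for illustration and are not taken from the paper, whose ME is performed by a CNN.

```python
import numpy as np


def block_match(cur, ref, top, left, block=8, search=4):
    """Exhaustive block matching (illustrative, not the paper's CNN-based ME).

    Returns the motion vector (dy, dx) of the best reference block relative
    to the current block at (top, left), plus its SAD cost.
    """
    target = cur[top:top + block, left:left + block].astype(np.float64)
    best_mv, best_cost = (0, 0), np.inf
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            # Skip candidate blocks that fall outside the reference frame.
            if y < 0 or x < 0 or y + block > ref.shape[0] or x + block > ref.shape[1]:
                continue
            candidate = ref[y:y + block, x:x + block].astype(np.float64)
            cost = np.abs(candidate - target).sum()  # sum of absolute differences
            if cost < best_cost:
                best_mv, best_cost = (dy, dx), cost
    return best_mv, best_cost
```

Motion compensation then copies the reconstructed reference block at the returned offset as the prediction of the current block.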

In Figure 2, current video coding standards mainly use block-based inter-frame coding. The principle is to use ME to find, in adjacent reconstructed reference frames, the reference block with the smallest difference from the current block. The reconstructed value of that block serves as the predicted value of the current block. The displacement from the reference block to the current block is the motion vector. The process of taking reconstructed values as predicted values is motion compensation. The ME network can extract motion and occlusion information between reference frames, and predict initial motion vectors, soft masks, and convolutional kernel weights [16,17]. Soft mask matrix is used to avoid occlusion issues, and

To avoid the information loss at the previous level and the impact of gradient vanishing on performance when the network depth gradually increases, a ME network is designed based on CNN. The schematic diagram of the ME network structure is shown in Figure 3.

Figure 3: Schematic diagram of ME network structure.

In Figure 3, the ME network structure consists of an encoding end and a decoding end. The encoding side ensures that the convolutional kernel can extract the content and difference information of two reference frames. Afterwards, the initial feature extraction is completed through a convolutional layer with a kernel size 7 × 7. To reduce the resolution, a convolutional block is formed by combining three convolutional layers with kernel size 3 × 3 and an average pooling layer. The encoding end utilizes skip connections to the decoding end. The decoding end replaces the pooling layer with a bi-linear up-sampling layer to improve the resolution of the feature map. Finally, it is output to the sub-network for predicting motion vectors, soft mask

3.2 Animation video frame prediction based on ConvGRU fine-grained flow algorithm and reverse distortion algorithm

In animation video frame prediction, if the predicted frame is obtained only by applying motion compensation to the reference frame, the absence of high-dimensional content features means that the detail loss caused by ME errors cannot be compensated, resulting in blurred prediction frames [19,20]. To avoid blurry prediction frames, a video frame content extractor with a feature pyramid is built on CNN to obtain multi-scale features with rich detail information from the reference frames [21]. By combining CNN for extracting spatial features with GRU for extracting temporal features, the encoded high-dimensional temporal motion information is processed to generate bias values that correct the errors in the synthesis flow, thereby achieving the correct pixel mapping relationship [22]. The internal structure of ConvGRU is shown in Figure 4.

Figure 4: Internal structure of ConvGRU.
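As a rough illustration of the gating structure, the following sketch implements one step of a ConvGRU cell in its commonly used form: convolutional update and reset gates followed by a candidate hidden state. It assumes the standard formulation rather than the exact equations of this study; the class name `ConvGRUCell`, the weight initialization, and the small channel counts are hypothetical, and the update convention (1 - z) * h + z * h_tilde is one of two equivalent conventions in the literature.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv2d_same(x, weight, bias):
    """Same-padded 2D cross-correlation.

    x: (C_in, H, W); weight: (C_out, C_in, k, k) with odd k; bias: (C_out,).
    """
    k = weight.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", windows, weight) + bias[:, None, None]


class ConvGRUCell:
    """Standard ConvGRU step (assumed form, not the paper's exact equations)."""

    def __init__(self, in_ch, hid_ch, k=3, seed=0):
        rng = np.random.default_rng(seed)
        shape = (hid_ch, in_ch + hid_ch, k, k)
        self.w_z = rng.normal(0.0, 0.1, shape)  # update gate weights
        self.w_r = rng.normal(0.0, 0.1, shape)  # reset gate weights
        self.w_h = rng.normal(0.0, 0.1, shape)  # candidate state weights
        self.b_z = np.zeros(hid_ch)
        self.b_r = np.zeros(hid_ch)
        self.b_h = np.zeros(hid_ch)

    def step(self, x, h):
        xh = np.concatenate([x, h], axis=0)           # channel-wise connection
        z = sigmoid(conv2d_same(xh, self.w_z, self.b_z))
        r = sigmoid(conv2d_same(xh, self.w_r, self.b_r))
        xrh = np.concatenate([x, r * h], axis=0)      # reset gate scales old state
        h_tilde = np.tanh(conv2d_same(xrh, self.w_h, self.b_h))
        return (1.0 - z) * h + z * h_tilde            # blend old and candidate state
```

Calling `step` once per frame and feeding the returned state back in carries temporal information across the sequence, while the convolutions preserve the spatial layout of the feature maps.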

In Figure 4, the reset gate, update gate, and hidden gate are all used for deep feature extraction. All consist of a convolutional layer. The connection operation

The update gate is shown in Eq. (4).

where

The current hidden state

To improve the accurate prediction ability of future frame movements, the ConvGRU fine-grained synthesis flow algorithm is proposed. This algorithm utilizes multi-level encoding of motion residuals and extracts high-dimensional temporal dependency information. Then, these pieces of information are decoded to obtain bias values with the same resolution. The bias value and

Figure 5: Schematic diagram of ConvGRU fine-grained synthesis flow network structure.

In Figure 5, the network consists of a pyramid structure, a motion residual encoder, and a ConvGRU module. The ConvGRU module consists of three levels. The channel dimension at the same level is 256. The motion residual encoder shares weights at the same level and remains independent of each other at different levels. The reference frame is the rough prediction frame

where

Figure 6: Schematic diagram of (a) reverse distortion algorithm and (b) bi-linear interpolation algorithm.

In Figure 6(a), the reverse distortion algorithm can calculate the optical flow

In the horizontal direction, the differentiation for pixels is shown in Eq. (9).

In the vertical direction, the differentiation for pixels is shown in Eq. (10).
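The combination of reverse distortion and bi-linear interpolation can be sketched as follows, assuming that "reverse distortion" corresponds to what is commonly called backward warping: each pixel of the predicted frame looks up a (generally non-integer) source position in the reference frame, and the value there is interpolated from the four surrounding pixels. The function name `backward_warp`, the single-channel input, and the border clamping are assumptions made for illustration.

```python
import numpy as np


def backward_warp(ref, flow):
    """Backward warping with bilinear interpolation (illustrative sketch).

    ref:  (H, W) reference frame.
    flow: (H, W, 2) offsets (dx, dy) from each output pixel to its source
          position in ref. Source positions are clamped to the image border.
    """
    h, w = ref.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    sx = np.clip(xs + flow[..., 0], 0, w - 1)
    sy = np.clip(ys + flow[..., 1], 0, h - 1)

    # Integer corners surrounding each source position.
    x0 = np.floor(sx).astype(int)
    y0 = np.floor(sy).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)

    # Fractional distances used as interpolation weights.
    ax = sx - x0
    ay = sy - y0

    # Interpolate horizontally on the two rows, then vertically between them.
    top = (1.0 - ax) * ref[y0, x0] + ax * ref[y0, x1]
    bottom = (1.0 - ax) * ref[y1, x0] + ax * ref[y1, x1]
    return (1.0 - ay) * top + ay * bottom
```

Because every output pixel reads from the reference frame, this direction of warping leaves no holes in the predicted frame, which is the usual reason for preferring it over forward mapping.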

4 Prediction results of animation video frame based on ConvGRU fine-grained synthesis flow

The influence of ME network and its time-domain motion vector parameters on predicting frames in animated videos is analyzed. The performance of ConvGRU fine-grained synthesis flow algorithm and its improved algorithm is verified.

4.1 ME network and parameter analysis of time-domain motion vectors

The experimental CPU is an Intel Core i7-9700 (3.00 GHz), and the operating system is Ubuntu 18.04. The graphics memory is 16 GB. The animation video datasets selected for the experiment are Creative Flow+ and AnimeRun. The basic principles of the proposed model parameters and their settings are shown in Table 1.

Table 1: Basic principles of model parameters and their settings

| Parameter | Numerical value | Basic principle |
|---|---|---|
| Exponential decay rates of momentum and RMSProp terms | 0.9, 0.999 | Update of equilibrium gradient |
| Initial learning rate | 0.001 | Ensure stable convergence of the model |
| Batch size | 8 | Balancing memory consumption and training speed |
| The number of convolutional layers | 32, 64, 96 | Improve the expressive power of the model |
| Convolutional kernel size | 5 × 5 | Capture features at different scales |
| The number of motion vectors in the time domain | 5 | Considering the time complexity of the model comprehensively |
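The decay rates of 0.9 and 0.999 for the momentum and RMSProp terms, together with the initial learning rate of 0.001, match the usual defaults of the Adam optimizer. The paper does not name the optimizer, so the following single-step sketch is an assumption; `adam_step` and the `eps` value are hypothetical.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (assumed optimizer; hyperparameters follow Table 1).

    m and v are the running first and second moment estimates, and t is the
    1-based step counter used for bias correction.
    """
    m = beta1 * m + (1.0 - beta1) * grad          # momentum term
    v = beta2 * v + (1.0 - beta2) * grad ** 2     # RMSProp-style term
    m_hat = m / (1.0 - beta1 ** t)                # bias-corrected first moment
    v_hat = v / (1.0 - beta2 ** t)                # bias-corrected second moment
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v
```

With these settings the first update moves each parameter by roughly the learning rate in the direction opposite to its gradient, regardless of the gradient's magnitude.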

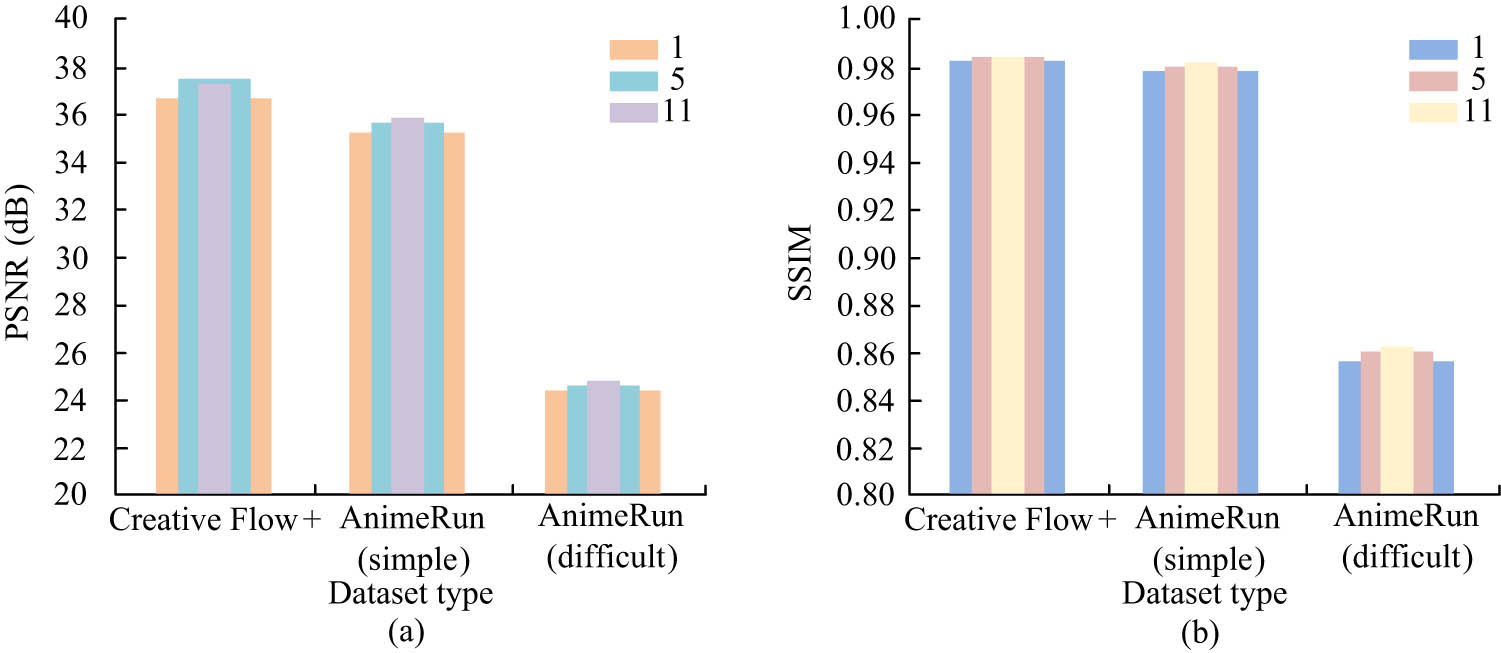

The experimental evaluation indicators are Learned Perceptual Image Patch Similarity (LPIPS), structural similarity (SSIM), and peak signal-to-noise ratio (PSNR). To investigate the impact of the number of time-domain motion vectors on algorithm performance, the convolution kernel size of the model is kept at 11 × 11. The PSNR and SSIM results of the model on the Creative Flow+, AnimeRun simple, and AnimeRun difficult datasets when the number of time-domain motion vectors is 1, 5, and 11 are shown in Figure 7.

Figure 7: Comparison of objective evaluation results of different time-domain motion vector numbers. (a) Comparison of PSNR results. (b) Comparison of SSIM results.
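For reference, the two pixel-level indicators can be computed as in the sketch below. PSNR follows its standard definition. The SSIM function is a simplified single-window (global) variant with the customary constants, whereas the usual SSIM averages the same expression over local windows, so its values will not match a windowed implementation exactly. The function names and the 8-bit `max_val` default are assumptions; LPIPS is omitted because it requires a pretrained network.

```python
import numpy as np


def psnr(pred, target, max_val=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    diff = pred.astype(np.float64) - target.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical frames
    return 10.0 * np.log10(max_val ** 2 / mse)


def global_ssim(x, y, max_val=255.0):
    """Single-window SSIM over the whole frame (simplified illustration)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * max_val) ** 2
    c2 = (0.03 * max_val) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x = np.mean((x - mu_x) ** 2)
    var_y = np.mean((y - mu_y) ** 2)
    cov = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Higher PSNR and SSIM indicate a predicted frame closer to the ground truth, whereas a lower LPIPS indicates closer perceptual similarity.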

In Figure 7(a), on the Creative Flow+ dataset, the model had the highest PSNR, 37.51 dB, when the number of time-domain motion vectors was 5. On the AnimeRun simple and difficult datasets, the PSNR was highest when the number of time-domain motion vectors was 11, at 35.83 and 24.62 dB, respectively. In Figure 7(b), on the Creative Flow+, AnimeRun simple, and AnimeRun difficult datasets, the SSIM was highest when the number of time-domain motion vectors was 11, with values of 0.984, 0.981, and 0.862, respectively. Increasing the number of estimated time-domain motion vectors allows animation video information to be searched more quickly, thereby improving the quality of the predicted frames. To analyze the impact of the convolution kernel size on the predicted frames of animated videos, the number of time-domain motion vectors is kept at 5. The PSNR and SSIM results of the predicted animation video frames on the Creative Flow+, AnimeRun simple, and AnimeRun difficult datasets for convolutional kernel sizes of 1 × 1, 5 × 5, and 11 × 11 are shown in Figure 8.

Figure 8: Comparison of objective evaluation results for different convolutional kernel sizes. (a) Comparison of PSNR results. (b) Comparison of SSIM results.

In Figure 8(a), when the convolution kernel was 11 × 11, the PSNR of the predicted animation video frames on the different datasets was 36.82, 37.08, and 37.51 dB, respectively. In Figure 8(b), when the convolution kernel was 11 × 11, the SSIM of the predicted animation video frames on the different datasets was 0.984, 0.980, and 0.860, respectively. To study the effect of the ME network module on prediction accuracy, the AnimeRun dataset is selected as a sample to train models with and without the ME network module. The PSNR results for the running and walking states of the characters are shown in Figure 9.

Figure 9: Comparison of PSNR results of different models in running and walking states of characters. (a) Run state. (b) Walk state.

In Figure 9(a), after 300 iterations, the PSNR with and without the ME module was 29.40 and 29.30 dB, respectively. In Figure 9(b), after 300 iterations on the walking state of the animated video character, the PSNR with and without the ME module was 26.78 and 26.58 dB, respectively, an increase of 0.2 dB with the ME module. To verify the impact of the learning rate on model training, the changes in total loss during training are compared for learning rates of 0.01 and 0.001, as shown in Figure 10.

Figure 10: Comparison of total loss values at learning rates of (a) 0.01 and (b) 0.001.

In Figure 10(a), when the learning rate was 0.001, the total loss curve gradually decreased with increasing iterations. At 50–75 cycles, the total loss value slightly increased. Afterwards, the total loss value fluctuated between 21.8 and 22.0. In Figure 10(b), when the learning rate was 0.01, the total training loss curve first increased and then decreased. The highest total loss value was about 26.58, which decreased by 8.1%.

4.2 Performance analysis of the improved algorithm

To verify the performance of the improved algorithm, the results of spatial information transfer and time backtracking (SITB) [26], Bayesian DeNet [27], and ConvGRU algorithms on the Creative Flow+ and AnimeRun datasets are compared, as shown in Table 2.

Table 2: Comparison of three algorithms on different datasets

| Algorithm type | Creative Flow+ LPIPS | Creative Flow+ SSIM | Creative Flow+ PSNR (dB) | AnimeRun LPIPS | AnimeRun SSIM | AnimeRun PSNR (dB) |
|---|---|---|---|---|---|---|
| Bayesian DeNet | 0.071 | 0.869 | 24.91 | 0.032 | 0.944 | 29.09 |
| SITB | 0.056 | 0.884 | 26.45 | 0.028 | 0.949 | 30.28 |
| ConvGRU | 0.073 | 0.886 | 26.63 | 0.033 | 0.958 | 31.26 |

On the Creative Flow+ dataset, the ConvGRU algorithm had the highest SSIM and PSNR of the three algorithms, at 0.886 and 26.63 dB, respectively. Its LPIPS of 0.073 was also the highest of the three; because a lower LPIPS indicates closer perceptual similarity, SITB (0.056) performed best on this indicator. On the AnimeRun dataset, the SSIM and PSNR of the ConvGRU algorithm were 0.958 and 31.26 dB, respectively, again the highest of the three. The ConvGRU algorithm ran nearly 50% longer than the SITB algorithm. However, compared with the Bayesian DeNet algorithm, it was 13.342 s faster. Experiments are also conducted on a self-made high-resolution animation video dataset, on which the three algorithms are compared under different motion modes and motion sizes. The results are shown in Figure 11.

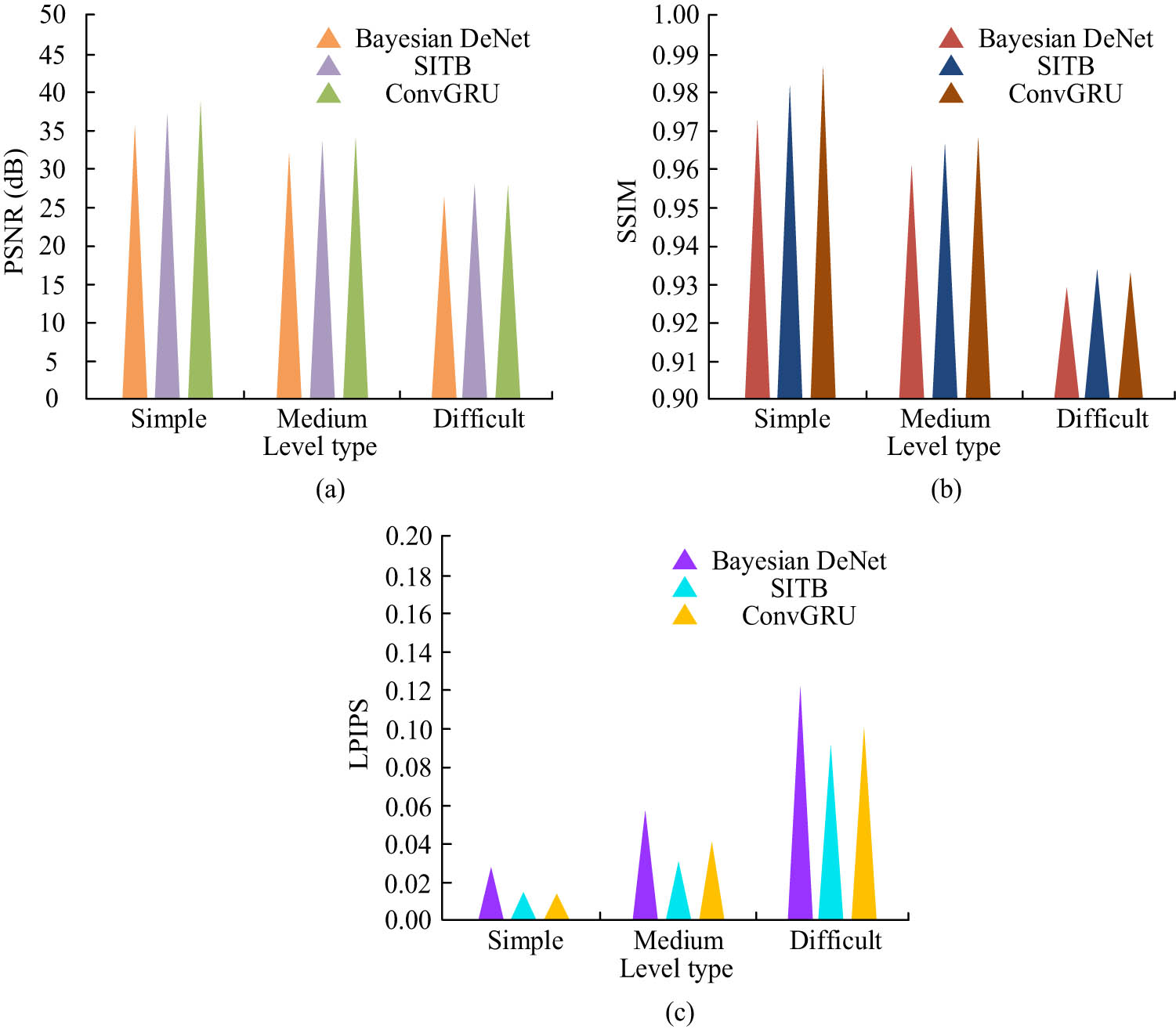

Figure 11: Comparison of PSNR, SSIM, and LPIPS results of different algorithms on datasets of different levels. (a) Comparison of PSNR results of different algorithms. (b) Comparison of SSIM results of different algorithms. (c) Comparison of LPIPS results of different algorithms.

In Figure 11(a), the PSNR values of the improved ConvGRU algorithm on the simple, medium, and difficult datasets were 38.96, 34.05, and 27.90 dB, respectively. In Figure 11(b), the SSIM values of the improved ConvGRU algorithm on the simple, medium, and difficult datasets were 0.987, 0.968, and 0.933, respectively. Compared with the SITB algorithm, the improved ConvGRU algorithm increased SSIM by 0.005 on the simple dataset. In Figure 11(c), the improved ConvGRU algorithm had the lowest LPIPS on the simple dataset, which was 0.014. To analyze the performance of the improved algorithm, the objective quality results of the model before and after the improvement are compared on the Creative Flow+, AnimeRun simple, and AnimeRun difficult datasets, as shown in Figure 12.

Figure 12: Comparison of PSNR, SSIM, and LPIPS results between unimproved and improved models on different datasets. (a) Comparison of PSNR results. (b) Comparison of SSIM results. (c) Comparison of LPIPS results.

In Figure 12(a), the PSNR values of the improved model on the three datasets were 31.26, 36.63, and 22.15 dB, respectively. The improved model showed the greatest quality improvement on the difficult dataset, indicating improved prediction accuracy. In Figure 12(b), the SSIM values of the improved model on the three datasets were 0.958, 0.886, and 0.813, respectively. In Figure 12(c), the LPIPS values of the improved model on the three datasets were 0.033, 0.073, and 0.144, respectively. The actual detection performance of the improved algorithm on the Creative Flow+, AnimeRun, and self-made datasets is shown in Figure 13.

Figure 13: The frequency of frame anomalies on different datasets. (a) Anomalous frequencies on the datasets Creative Flow+ and AnimeRun. (b) Frequency of anomalies on self-made datasets.

In Figure 13(a), at frames 0–55, the frame sequence frequency of the Creative Flow+ dataset was at a relatively low level, averaging 1.95 frames per second. The characters in the animated video sample were in a normal walking state. In the AnimeRun dataset, the characters in the animated video samples were in a normal walking state before frame 35. After frame 35, the frequency of screen changes remained at a high level. In Figure 13(b), at frame 50 of the self-made dataset, there was a running scene on the screen, with a frequency of 0.28 frames per second. At frame 950, the details of the character were magnified ten times compared with the previous frame sequence, with a frequency of 0.97 frames per second. The improved algorithm has good actual detection results and high accuracy. The training set, validation set, and testing set are divided in a ratio of 6:2:2. The improved model is trained 400 times. The model training results are shown in Table 3.

Table 3: Numerical comparison of improved model results

| Dataset | Indicator | Batch 1 | Batch 2 | Batch 3 | Batch 4 | Batch 5 | Average value |
|---|---|---|---|---|---|---|---|
| Training set | RMSE | 0.0423 | 0.0435 | 0.0447 | 0.0464 | 0.0476 | 0.0449 |
| Training set | Accuracy (%) | 99.72 | 99.72 | 99.69 | 99.67 | 99.64 | 99.69 |
| Validation set | RMSE | 0.0431 | 0.0407 | 0.0390 | 0.0446 | 0.0584 | 0.0452 |
| Validation set | Accuracy (%) | 99.64 | 99.74 | 99.70 | 99.68 | 99.42 | 99.64 |
| Testing set | RMSE | 0.0479 | 0.0483 | 0.0454 | 0.0488 | 0.0487 | 0.0478 |
| Testing set | Accuracy (%) | 99.65 | 99.66 | 99.59 | 99.50 | 99.59 | 99.60 |

The average accuracy of the improved model on the training set, validation set, and testing set was 99.69, 99.64, and 99.60%, respectively. The training results of the improved model are good, with an average prediction accuracy of 99.64%. The improved model has achieved excellent performance in prediction accuracy and visual quality.
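The reported averages can be reproduced from the per-batch accuracies in Table 3. The short check below assumes that the overall figure of 99.64% is the unweighted mean of the three split averages, which the paper does not state explicitly; the variable names are illustrative.

```python
# Per-batch accuracies (%) copied from Table 3.
table3_accuracy = {
    "training": [99.72, 99.72, 99.69, 99.67, 99.64],
    "validation": [99.64, 99.74, 99.70, 99.68, 99.42],
    "testing": [99.65, 99.66, 99.59, 99.50, 99.59],
}


def mean(values):
    return sum(values) / len(values)


# Average accuracy of each split, rounded to two decimals as in the table.
split_means = {name: round(mean(vals), 2) for name, vals in table3_accuracy.items()}

# Assumed: the overall accuracy is the unweighted mean of the split averages.
overall = round(mean(list(split_means.values())), 2)

print(split_means)
print(overall)
```

Under that assumption, the split averages round to 99.69, 99.64, and 99.60, and their mean rounds to 99.64, in agreement with the values quoted in the text.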

5 Conclusion

The study combined ConvGRU with a synthesis flow algorithm for animation video frame prediction, effectively capturing temporal correlation and spatial information and achieving accurate and realistic frame synthesis. The results showed that the improved model had the highest PSNR, 37.51 dB, on the Creative Flow+ dataset when the number of time-domain motion vectors was 5. Over 300 iterations, the PSNR for the running state of the animated video characters gradually increased. The PSNR with and without the ME module was 29.40 and 29.30 dB, respectively. Between iterations 128 and 142, the PSNR with the ME module decreased, which may be due to errors in the dataset sample videos. When the learning rate was 0.001, the total loss curve gradually decreased as the number of iterations increased. When the learning rate was 0.01, the total training loss curve first increased and then decreased, with the highest total loss value of about 26.58. The model accuracy decreased by 8.1%. On the Creative Flow+ dataset, the LPIPS, SSIM, and PSNR of the ConvGRU algorithm were 0.073, 0.886, and 26.63 dB, respectively. On the AnimeRun dataset, the SSIM and PSNR of the ConvGRU algorithm reached 0.958 and 31.26 dB, respectively. Compared with existing methods, the improved method improved the prediction accuracy. Accurate frame prediction can be used in animation production and video game development to reduce the workload of animators, improve production efficiency, and enhance the visual effects of animations and games. However, the model is sensitive to the ME parameters and the hyper-parameters of ConvGRU, and improper parameter settings may lead to significant performance degradation. In the future, search strategies such as grid search, random search, or Bayesian optimization can be used to determine the optimal parameter combination, thereby further improving the stability of model performance.

- Funding information: None.

- Author contributions: Xue Duan conducted the data collection and analysis and wrote the manuscript.

- Conflict of interest: The author declares no conflict of interest.

- Data availability statement: The dataset generated and analyzed in this study can be obtained from the Creative Flow+ and AnimeRun repositories.

References

[1] Li Y, Wang J, Sun X, Li Z, Liu M, Gui G. Smoothing-aided support vector machine based nonstationary video traffic prediction towards B5G networks. IEEE Trans Veh Technol. 2020;69(7):7493–502. doi:10.1109/TVT.2020.2993262

[2] Nsugbe E. Toward a self-supervised architecture for semen quality prediction using environmental and lifestyle factors. Artif Intell Appl. 2023;1(1):35–42. doi:10.47852/bonviewAIA2202303

[3] Zhao S, Zhao L. Forecasting long-term electric power demand by linear semiparametric regression. AIEM. 2022;11(1):29–31.

[4] Hilda. Make an impression in 60 seconds: Video on social media, the main weapon of professional marketers – Trends 2023. Acta Inform Malays. 2024;8(1):52–5. doi:10.26480/aim.02.2024.56.59

[5] Xu H, Liu W, Xing W, Wei X. Motion-aware future frame prediction for video anomaly detection based on saliency perception. Signal Image Video Process. 2022;16(8):2121–9. doi:10.1007/s11760-022-02174-7

[6] Hassan MA, Khan MUG, Iqbal R, Riaz O, Bashir AK, Tariq U. Predicting human’s future motion trajectories in video streams using generative adversarial network. Multimed Tools Appl. 2024;83(5):15289–311. doi:10.1007/s11042-021-11457-z

[7] Aslam N, Kolekar MH. DeMAAE: deep multiplicative attention-based autoencoder for identification of peculiarities in video sequences. Vis Comput. 2024;40(3):1729–43. doi:10.1007/s00371-023-02882-2

[8] Video RSSN. Morphing attack detection using convolutional neural networks on deep fake detection algorithm. Educ Admin Theory Pract. 2024;30(5):3589–603.

[9] Sreeja MU, Kovoor BC. An aggregated deep convolutional recurrent model for event-based surveillance video summarisation: a supervised approach. IET Comput. 2021;15(4):297–311. doi:10.1049/cvi2.12044

[10] Afrasiabi M, Mohammadi M, Rastegar M, Kargarian A. Multi-agent microgrid energy management based on deep learning forecaster. Energ J. 2019;186(1):115873.1–14. doi:10.1016/j.energy.2019.115873

[11] Xu G, Xian D, Fournier-Viger P, Li X, Ye Y, Hu X. AM-ConvGRU: a spatio-temporal model for typhoon path prediction. Neural Comput Appl. 2022;34(8):5905–21. doi:10.1007/s00521-021-06724-x

[12] Zhang Y, Zhang L, He Y, Yao S, Yang W, Cao S, et al. Analysis of the future trends of typical mountain glacier movements along the Sichuan-Tibet Railway based on ConvGRU network. Int J Digit Earth. 2023;16(1):762–80. doi:10.1080/17538947.2022.2152884

[13] Tian L, Li X, Ye Y, Xie P, Li Y. A generative adversarial gated recurrent unit model for precipitation nowcasting. IEEE Geosci Remote Sens Lett. 2019;17(4):601–5. doi:10.1109/LGRS.2019.2926776

[14] Li Y, Hou G, Zhang X. Deep convolutional LSTM for dynamic facial expression recognition. IEEE Trans Cybern. 2019;49(6):2150–63.

[15] Li T, Wang X, Liu H, Zhang L. Video prediction with appearance and motion conditions. Proc IEEE Conf Comput Vis Pattern Recognit (CVPR). 2019;6(2):11341–50.

[16] Kalchbrenner N, Oord A, Simonyan K, Danihelka I, Vinyals O, Graves A, et al. Video pixel networks. In Proceedings of the International Conference on Machine Learning (ICML). 2016. p. 1771–9.

[17] Liu Z, He Q, Peng Z. Interactive visual simulation modeling for structural response prediction and damage detection. IEEE Trans Ind Electron. 2021;69(1):868–78. doi:10.1109/TIE.2021.3050365

[18] Bao W, Lai WS, Zhang X, Gao Z, Yang MH. Memc-net: Motion estimation and motion compensation driven neural network for video interpolation and enhancement. IEEE Trans Pattern Anal Mach Intell. 2019;43(3):933–48. doi:10.1109/TPAMI.2019.2941941

[19] Gogoi S, Peesapati R. Design and implementation of an efficient multi-pattern motion estimation search algorithm for HEVC/H.265. IEEE Trans Consum Electron. 2021;67(4):319–28. doi:10.1109/TCE.2021.3126670

[20] Li T, Zhang M, Qi W, Asma E, Qi J. Deep learning-based joint PET image reconstruction and motion estimation. IEEE Trans Med Imaging. 2021;41(5):1230–41. doi:10.1109/TMI.2021.3136553

[21] Mohseni M, Santhanam S, Williams J, Thakker A, Nataraj C. Systematic fatigue spectrum editing by fast wavelet transform and genetic algorithm. Fatigue Fract Eng Mater Struct. 2022;45(1):69–83. doi:10.1111/ffe.13583

[22] Zhan Y, Guan J, Zhao Y. An adaptive second-order sliding-mode observer for permanent magnet synchronous motor with an improved phase-locked loop structure considering speed reverse. Trans Inst Meas Control. 2020;42(5):1008–21. doi:10.1177/0142331219880712

[23] Wang ZJ, Turko R, Shaikh O, Park H, Das N, Hohman F, et al. CNN explainer: learning convolutional neural networks with interactive visualization. IEEE Trans Vis Comput Graph. 2020;27(2):1396–406. doi:10.1109/TVCG.2020.3030418

[24] Mourot L, Hoyet L, Le Clerc F, Schnitzler F, Hellier P. A survey on deep learning for skeleton-based human animation. Comput Graph Forum. 2022;41(1):122–57. doi:10.1111/cgf.14426

[25] Yan B, Wang L, Zhang Y, Zhang Y, Zhang L. Video prediction with convolutional LSTM networks using temporal relation networks. IEEE Trans Circuits Syst Video Technol. 2020;30(2):450–63.

[26] Yuan P, Guan Y, Huang J. Video prediction based on spatial information transfer and time backtracking. Signal Image Video Process. 2022;16(3):825–33. doi:10.1007/s11760-021-02023-z

[27] Yang X, Gao Y, Luo H, Liao C, Cheng KT. Bayesian denet: Monocular depth prediction and frame-wise fusion with synchronized uncertainty. IEEE Trans Multimed. 2019;1(11):2701–13. doi:10.1109/TMM.2019.2912121

© 2025 the author(s), published by De Gruyter

This work is licensed under the Creative Commons Attribution 4.0 International License.

