Abstract

Traditional machine intelligence systems lack deep understanding and reasoning ability. Taking a multi-agent autonomous driving system as an example, this study uses knowledge intelligence to give autonomous driving higher-level intelligence and decision-making ability. Real-world geographic information data were obtained from OpenStreetMap and preprocessed to build a virtual environment. The Inception model was used to identify information in environmental images, and knowledge of traffic regulations, road signs, and traffic accidents was represented to build a knowledge graph. Knowledge related to autonomous driving was integrated, and autonomous driving training was carried out with a reward mechanism and a deep Q-network (DQN) model. Thirteen traffic situations were set up in the virtual environment, and traditional machine intelligence autonomous driving was compared with knowledge fusion autonomous driving multi-agents. The results show that the average number of accidents per 100,000 km was 3 for traditional machine intelligence autonomous driving and 1.4 for knowledge fusion autonomous driving multi-agents, and the average number of violations per 100,000 km was 4.3 and 1.8, respectively. Across the 13 virtual environments, knowledge fusion autonomous driving had an average graphics processing unit (GPU) utilization of 75.9% and an average peak GPU utilization of 96.1%. Knowledge fusion autonomous driving multi-agents can effectively improve the safety of autonomous driving and give autonomous driving multi-agents a higher level of decision-making ability.

1 Introduction

With the rapid development of science and technology, machine intelligence has made remarkable progress. However, traditional machine intelligence systems still have obvious shortcomings in deep understanding and reasoning. These shortcomings are particularly significant in complex interactive scenarios such as autonomous driving [1,2]. Traditional machine intelligence autonomous driving systems can perform basic tasks. They often perform poorly, however, in complex traffic situations, under changing road conditions, and when multiple decisions must be made. Autonomous driving is an inevitable trend in the development of the automobile industry, and overcoming these drawbacks requires a shift from machine intelligence to knowledge intelligence. This shift can enable the autonomous driving system to understand and respond to complex situations at a higher level, thereby improving road safety and traffic efficiency [3,4]. Through technology transfer, the multi-agent simulation method can be used to create an autonomous driving multi-agent system with knowledge intelligence that addresses the limitations of current machine intelligence systems. Introducing knowledge intelligence into the autonomous driving system realizes the transformation from traditional machine intelligence to knowledge intelligence. This gives the system a higher level of understanding and decision-making ability and thus improves its performance in complex traffic situations.

Machine intelligence is a computer system that performs tasks by simulating human intelligent behavior and decision-making. Many studies use machine intelligence to improve the perception and control ability of systems. Machine intelligence is widely used in medicine, where artificial intelligence (AI) and related technologies analyze medical data to support diagnostic decisions [5,6]. To improve non-invasive endocrine cancer diagnosis, Thomasian used AI to characterize adrenal, pancreatic, pituitary, and thyroid masses and constructed machine learning models for in-depth analysis of endocrine cancers [7]. To analyze high-dimensional clinical imaging data intelligently, Shad et al. designed a clinically translatable AI system that performs automatic medical image acquisition, data standardization, and translatable clinical decision support [8]. Miller applied machine learning and deep learning AI to cardiovascular medicine. By archiving raw cardiovascular data and training AI models on it, this work predicted clinical outcomes to enhance cardiologists' real-time performance in emergency rooms, catheterization laboratories, imaging rooms, and clinics [9]. Machine intelligence also plays a key role in autonomous driving, enabling autonomous vehicles to sense, learn, and make decisions so that they drive more safely and efficiently [10,11]. To reduce autonomous vehicle accidents and improve the safety of autonomous driving, Hong et al. used AI technology to make intelligent decisions in predefined driving scenarios and reduce autonomous driving failures [12]. Machine intelligence methods can improve intelligent analysis by training models. However, traditional systems lack deep understanding and reasoning, so their performance is limited in complex, ever-changing environments, especially in complex interactive scenarios such as autonomous driving.

A multi-agent system can sense changes in the environment and act in time through the cooperation of multiple individuals, and such systems have been widely studied. Multi-agent systems choose actions based on perceived information and goals, and they can cope with scene changes in complex, dynamic, and non-deterministic environments [13,14]. To ensure the desired performance of each agent and of the whole multi-agent system when faults occur, Gao et al. improved fault-tolerant consensus control of multi-agent systems from the perspectives of physical security and logical security [15]. To improve the consensus-tracking ability of nonlinear multi-agent systems, Bin et al. proposed an event-triggered adaptive control algorithm. The algorithm introduces a barrier Lyapunov function so that the tracking error converges within a specified time range [16]. Autonomous driving involves cooperative operation and interaction among multiple vehicles and other traffic participants, and multi-agent systems can coordinate autonomous vehicles to optimize traffic flow [17,18]. To improve multi-agent trajectory prediction in autonomous driving, Vishnu et al. used traffic states in interactive driving scenarios to represent the changing spatiotemporal interactions between adjacent vehicles [19]. However, existing multi-agent simulation research still has limitations in domains such as autonomous driving. It is difficult to introduce rich domain knowledge and advanced reasoning into the simulation environment so that the system can better understand and cope with complex real-world situations.

Autonomous driving systems based on traditional machine intelligence cannot accurately perceive and understand complex road environments. To address this problem, this study applies driving knowledge to autonomous driving multi-agents through technology transfer. By introducing knowledge intelligence, it integrates real-time geographic information, traffic regulations, road signs, and other knowledge into the autonomous driving system. This gives the system deeper understanding and decision-making ability, so that it drives more efficiently and safely in complex traffic environments. Geographic information data are acquired from OpenStreetMap, cleaned, and converted from real longitude and latitude coordinates to Cartesian coordinates. The virtual environment is then constructed by adding virtual driving elements. The car obtains information about the surrounding road environment with a camera and perceives it with the Inception model. Knowledge of traffic regulations, road signs, and traffic accidents is represented, and all driving-related knowledge is combined. Decisions are made through a reward mechanism and a deep Q-network (DQN) model, and the driving path is planned with the Rapidly-exploring Random Tree (RRT) algorithm. The experiment sets up 13 simulated driving environments. The results show that knowledge fusion autonomous driving multi-agents (1) improve the safety of autonomous driving, (2) drive more efficiently in a variety of driving environments, (3) recognize traffic signs and road obstacles more accurately, and (4) show excellent utilization of central processing unit (CPU) and graphics processing unit (GPU) resources.

2 Multi-agent simulation method

2.1 Building virtual environment

Road traffic accidents are among the major safety hazards worldwide, and automated driving technology is expected to reduce them significantly through route planning and intelligent cooperation [20,21]. In addition, cars consume a great deal of energy. Autonomous driving technology can improve energy and fuel efficiency and reduce emissions, thereby contributing to the sustainability of future transportation systems.

Autonomous driving aims to solve traffic problems such as safety, congestion, sustainability, and efficiency, and to improve the performance and quality of the transportation system. Through rational planning of the driving environment, it can have a positive impact on urban life and social development. To analyze autonomous driving intelligently and effectively, a virtual environment must be built that supplies the autonomous driving system with data on all relevant aspects of driving.

A highly realistic virtual road environment can be built, including road maps, road markings, and road obstacles. Real-world geographic information data can be obtained from OpenStreetMap, an open-source map database. The map data are preprocessed, and the real-world geographic data are applied to the virtual environment through data cleaning, coordinate system transformation, and road classification. Part of the real-world geographic information data obtained from OpenStreetMap is shown in Table 1.

Part of geographic information data

| Road type | City street | Highway | Backroad | Mountainous road |
|---|---|---|---|---|
| Longitude | −73.9877 | −118.2437 | −97.7431 | −118.8610 |
| Latitude | 40.7488 | 34.0522 | 30.2672 | 37.7749 |
| Road name | Broadway | Interstate 405 | County Road 101 | Mountain View Road |
| Speed limit (km/h) | 40 | 105 | 72 | 56 |
| Number of lanes | 2 | 4 | 2 | 2 |
| Intersection type | Crossroads | Roundabout | T intersection | Crossroads |
| Lane marking | Dotted line | Solid line | Dotted line | Solid line |

Table 1 lists part of the geographic information data, including road type, longitude, latitude, road name, speed limit, number of lanes, intersection type, and lane marking. For example, Interstate 405 has four lanes with solid lane markings and a roundabout intersection. Real geographic information data of this kind allow various road environments to be simulated accurately.

The collected real-world geographic data may contain noise, so missing values and outliers are handled by data cleaning. Records with missing data are deleted. Abnormally large or small values are verified and corrected manually, and outliers that cannot be explained or are unreasonable are excluded. To facilitate geographic data analysis, the original geographic data are converted to the Shapefile format supported by the virtual environment.
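The cleaning rules above can be sketched in plain Python. This is a minimal illustration, not the study's pipeline. The record field names and the plausibility ranges are hypothetical, because the text specifies neither. Out-of-range values are only flagged for the manual verification the text describes.

```python
# Hypothetical field names and plausibility ranges; the study states neither.
REQUIRED = ("road_name", "longitude", "latitude", "speed_limit_kmh", "lanes")
RANGES = {
    "longitude": (-180.0, 180.0),
    "latitude": (-90.0, 90.0),
    "speed_limit_kmh": (5, 140),
    "lanes": (1, 8),
}

def clean_records(records):
    """Split raw road records into (kept, flagged_for_manual_review)."""
    kept, flagged = [], []
    for rec in records:
        # Records with a missing required field are deleted outright.
        if any(rec.get(field) is None for field in REQUIRED):
            continue
        # Values outside the plausible range are set aside for manual checking.
        out_of_range = [
            field for field, (lo, hi) in RANGES.items()
            if not lo <= rec[field] <= hi
        ]
        if out_of_range:
            flagged.append((rec, out_of_range))
        else:
            kept.append(rec)
    return kept, flagged

raw = [
    {"road_name": "Broadway", "longitude": -73.9877, "latitude": 40.7488,
     "speed_limit_kmh": 40, "lanes": 2},
    {"road_name": "Interstate 405", "longitude": -118.2437, "latitude": 34.0522,
     "speed_limit_kmh": 1050, "lanes": 4},   # implausible value, likely a typo
    {"road_name": "County Road 101", "longitude": None, "latitude": 30.2672,
     "speed_limit_kmh": 72, "lanes": 2},     # missing coordinate
]
kept, flagged = clean_records(raw)
print(len(kept), len(flagged))  # 1 1
```

Records with a missing required field are dropped. Implausible values, such as the 1050 km/h speed limit here, are returned separately so that a person can check and correct them.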

The virtual environment uses a Cartesian coordinate system, whereas the real geographic data use longitude and latitude. Taking the curvature and projection of the Earth into account, the geographic data in the data set are converted to the Cartesian coordinate system. Let the longitude and latitude of a point in real geography be (b, c), and let the converted point in the Cartesian coordinate system be (x, y, z). The coordinate transformation is

$$x = R\cos c\cos b,\qquad y = R\cos c\sin b,\qquad z = R\sin c,$$

where R represents the radius of the Earth.

The Cartesian coordinate system provides a unified coordinate representation, and converting geographic data into the Cartesian coordinate system can achieve the consistency of geographic data from different sources and formats. This helps to simplify the data processing and analysis process, allowing different types of data to be compared and integrated in the same coordinate system.
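The conversion can be sketched as follows. The sketch assumes a spherical Earth with a mean radius of 6,371 km and uses the standard mapping x = R cos c cos b, y = R cos c sin b, z = R sin c for longitude b and latitude c. The constant and the function name are illustrative.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumed mean Earth radius (spherical model)

def geodetic_to_cartesian(lon_deg, lat_deg, radius=EARTH_RADIUS_M):
    """Map longitude b and latitude c (in degrees) to Cartesian (x, y, z) on a sphere."""
    b = math.radians(lon_deg)
    c = math.radians(lat_deg)
    x = radius * math.cos(c) * math.cos(b)
    y = radius * math.cos(c) * math.sin(b)
    z = radius * math.sin(c)
    return x, y, z

# Broadway sample point from Table 1
x, y, z = geodetic_to_cartesian(-73.9877, 40.7488)
print(round(math.sqrt(x * x + y * y + z * z)))  # 6371000: the point lies on the sphere
```

A spherical model ignores the Earth's flattening. A pipeline that needs higher accuracy would more typically use the WGS 84 ellipsoid or a local map projection.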

Weather simulators can model various weather conditions, including sunny, rainy, snowy, and foggy days. The appearance of the sky, raindrops, and snowflakes can be changed through the simulator's parameter settings. Different weather conditions change the road friction and the performance of the vehicle, so simulating them allows autonomous driving to be analyzed accurately under realistic conditions.
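To make the effect of weather on friction concrete, the sketch below computes the idealized braking distance v²/(2μg) for each weather label in Table 2. The friction coefficients are assumed ballpark values for illustration only. The study does not report the parameters of its weather simulator.

```python
# Assumed illustrative tire-road friction coefficients, not values from the study.
FRICTION = {
    "D1_sunny": 0.7,
    "D2_rainy": 0.4,
    "D3_ice_snow": 0.15,
    "D4_foggy": 0.7,   # assumption: fog mainly reduces visibility, not grip
}
G = 9.81  # gravitational acceleration, m/s^2

def braking_distance_m(speed_kmh, mu):
    """Idealized braking distance v^2 / (2 * mu * g), ignoring reaction time."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * v / (2 * mu * G)

# Urban speed limit from Table 1 (Broadway, 40 km/h)
for label, mu in FRICTION.items():
    print(label, round(braking_distance_m(40, mu), 1))
```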

Vehicle movement is also influenced by surrounding dynamic objects and by the complexity of the environment, so virtual motor vehicles, pedestrians, non-motor vehicles, and traffic lights are introduced. Realistic traffic scenes are created by increasing traffic density and adding complex routes. The virtual vehicles act as intelligent agents that follow traffic rules, and the simulated traffic lights behave like those in the real world.

Classifying, subdividing, and labeling the virtual environments facilitates the subsequent training of autonomous driving models. The classification of virtual environments is shown in Table 2.

Classification of virtual environments

| Environment | Category | Label |
|---|---|---|
| Road environment | Urban road | A1 |
| | Highway | A2 |
| | Backroad | A3 |
| | Mountainous road | A4 |
| Traffic environment | Congestion road | B1 |
| | Free circulation road | B2 |
| | Construction area | B3 |
| Topographic environment | Flat road | C1 |
| | Steep road | C2 |
| Weather environment | Sunny road | D1 |
| | Rainy road | D2 |
| | Ice and snow road | D3 |
| | Foggy road | D4 |
| Traffic sign environment | Clear sign | E1 |
| | Unclear sign | E2 |
| Intersection environment | Crossroads | F1 |
| | Roundabout | F2 |
| | T intersection | F3 |

Table 2 describes the classification of virtual environments. Six kinds of environments are subdivided into 18 types, and each type is labeled. In actual autonomous driving, the six kinds of environments are combined to form a complex virtual environment. These settings help the system learn and adapt to a wide range of complex road scenes and improve the robustness and safety of the autonomous driving system under different conditions.

2.2 Sensing the environment

As the number of vehicles increases, autonomous driving systems must coordinate vehicle actions effectively to reduce traffic congestion, improve traffic flow, and increase road utilization [22,23]. An autonomous driving system needs to perceive its surroundings accurately, make quick decisions based on them, and optimize driving efficiency while following traffic rules.

Machine intelligence is a computer system that enables machines to perceive, learn, understand, and make decisions and perform tasks by simulating human intelligent behavior [24,25]. The application of machine intelligence to the autonomous driving system can help the car intelligently perceive the road environment and carry out task planning.

During autonomous driving, the car obtains information about the surrounding road environment through its camera, which captures images containing road signs, vehicles, and pedestrians. Detecting features in these images and identifying the objects they contain is the basis of autonomous driving perception.

Inception is a deep convolutional neural network that processes input images in parallel with multiple convolution kernels of different sizes to capture image features at different scales [26,27]. The Inception model contains parallel convolution branches with kernels of different sizes, and their outputs are concatenated.

This multi-scale design lets the model capture features of different scales and complexity in the input image. It helps the model learn image information more comprehensively and perceive structures and patterns at different scales. It therefore gives Inception models an advantage in processing complex road environment images and can improve the accuracy of environmental perception. Although the Inception model reduces the number of parameters through dimensionality-reduction operations, the parallel branches and concatenation still impose a certain computational cost, especially in deep networks.
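The multi-scale idea can be illustrated with a small NumPy sketch of a GoogLeNet-style Inception module. It has four parallel branches: 1×1, 3×3, and 5×5 convolutions (the latter two preceded by 1×1 dimensionality-reduction layers) and a 3×3 max-pooling branch. The branch outputs are concatenated along the channel axis and then reduced by global max pooling. The study does not state which Inception version, channel counts, or input resolution it used. All sizes here are therefore hypothetical toy values, and the weights are random rather than trained.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def conv2d_same(x, w):
    """Stride-1 'same' convolution (cross-correlation).
    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k."""
    c_out, _, k, _ = w.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    h, wd = x.shape[1:]
    out = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            # Add the contribution of kernel tap (i, j) across all input channels.
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out

def maxpool3_same(x):
    """3x3 max pooling, stride 1, padded with -inf so the spatial size is kept."""
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)), constant_values=-np.inf)
    h, wd = x.shape[1:]
    windows = [xp[:, i:i + h, j:j + wd] for i in range(3) for j in range(3)]
    return np.max(np.stack(windows), axis=0)

def inception_block(x, p):
    """Four parallel branches whose outputs are concatenated along channels."""
    b1 = relu(conv2d_same(x, p["1x1"]))
    b2 = relu(conv2d_same(relu(conv2d_same(x, p["3x3_reduce"])), p["3x3"]))
    b3 = relu(conv2d_same(relu(conv2d_same(x, p["5x5_reduce"])), p["5x5"]))
    b4 = relu(conv2d_same(maxpool3_same(x), p["pool_proj"]))
    return np.concatenate([b1, b2, b3, b4], axis=0)

def global_max_pool(features):
    """Keep the strongest response of each channel: (C, H, W) -> (C,)."""
    return features.max(axis=(1, 2))

rng = np.random.default_rng(0)
shapes = {  # (C_out, C_in, k, k); hypothetical toy channel counts
    "1x1": (8, 3, 1, 1),
    "3x3_reduce": (4, 3, 1, 1), "3x3": (8, 4, 3, 3),
    "5x5_reduce": (2, 3, 1, 1), "5x5": (4, 2, 5, 5),
    "pool_proj": (4, 3, 1, 1),
}
params = {name: rng.normal(0, 0.1, s) for name, s in shapes.items()}
image = rng.random((3, 32, 32))          # stand-in for an RGB camera frame
features = inception_block(image, params)
print(features.shape)                    # (24, 32, 32)
print(global_max_pool(features).shape)   # (24,)
```

The 1×1 reduction layers in front of the 3×3 and 5×5 branches are what keep the parameter count down, which is the dimensionality-reduction trade-off mentioned above.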

The activation function used in the Inception model is the rectified linear unit (ReLU):

$$f(x) = \max(0, x),$$

where x is the input to the neuron.

The ReLU function is computationally simple: it only checks whether the input is greater than zero, so it is fast to compute. When the input is negative, however, the output of ReLU is zero. This may cause some neurons never to be activated during training, which is known as the “dying ReLU” problem.

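The global max pooling layer keeps the most salient feature information in each feature map. Global max pooling is computed as

$$y_k = \max_{1 \le i \le H,\; 1 \le j \le W} F_k(i, j),$$

where $F_k$ is the k-th feature map of size H × W and $y_k$ is the corresponding pooled output.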

After sensing the road environment, the system must understand and analyze the surrounding information. Road signs, vehicles, pedestrians, and other information collected by the sensors are integrated, and the road environment data are analyzed comprehensively with a backpropagation neural network model. Let the perceived road environment data set be

$$D = \{(x_i, t_i)\}_{i=1}^{m},$$

where $x_i$ is the feature vector of the i-th perceived sample, $t_i$ is its target output, and m is the number of samples.

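During model training, the backpropagation neural network adjusts the weights of its neurons according to the error until the error falls within an acceptable range [28,29]. Forward propagation of the data and backward propagation of the error improve the autonomous driving system's understanding of the road environment. The error is expressed as

$$E = \frac{1}{2}\sum_{i=1}^{m} \lVert t_i - o_i \rVert^2,$$

where $t_i$ is the target output of the i-th sample, $o_i$ is the network output for that sample, and m is the number of samples.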
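Forward propagation and backward error propagation can be illustrated with a minimal one-hidden-layer network in NumPy. Everything here is assumed for illustration: the synthetic data, the tanh hidden layer, the linear output, and the learning rate. The error is the squared error averaged over the samples, which only rescales the gradient.

```python
import numpy as np

rng = np.random.default_rng(1)
# Synthetic stand-in for fused perception features -> one target value per sample.
X = rng.normal(size=(200, 4))
t = np.tanh(X @ np.array([0.5, -1.0, 0.8, 0.3]))[:, None]

W1 = rng.normal(0, 0.5, (4, 8)); b1 = np.zeros(8)
W2 = rng.normal(0, 0.5, (8, 1)); b2 = np.zeros(1)
lr = 0.1  # assumed learning rate

def forward(X):
    h = np.tanh(X @ W1 + b1)        # hidden layer
    return h, h @ W2 + b2           # linear output o

def error(o, t):
    """Half squared error, averaged over samples."""
    return 0.5 * np.mean(np.sum((t - o) ** 2, axis=1))

losses = []
for epoch in range(500):
    h, o = forward(X)                          # forward pass
    losses.append(error(o, t))
    # Backward pass: propagate the output error layer by layer.
    d_o = (o - t) / len(X)                     # dE/do
    d_W2 = h.T @ d_o; d_b2 = d_o.sum(axis=0)
    d_h = (d_o @ W2.T) * (1 - h ** 2)          # tanh'(z) = 1 - tanh(z)^2
    d_W1 = X.T @ d_h; d_b1 = d_h.sum(axis=0)
    # Gradient-descent weight update (in place).
    W1 -= lr * d_W1; b1 -= lr * d_b1
    W2 -= lr * d_W2; b2 -= lr * d_b2

print(f"error: {losses[0]:.4f} -> {losses[-1]:.4f}")
```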

After comprehensively analyzing the perceived road environment, the autonomous driving system makes decisions based on its surroundings, such as accelerating, slowing down, braking, or turning, and plans its path. For path planning, it uses the RRT algorithm, which grows a tree rooted at the starting node. At each step, the algorithm randomly samples a point, extends the tree node nearest to that point toward it, and adds the new node if the connecting path is feasible. In the RRT algorithm, the distance between two points A and B is

$$d(A, B) = \sqrt{(x_A - x_B)^2 + (y_A - y_B)^2},$$

where $(x_A, y_A)$ and $(x_B, y_B)$ are the coordinates of A and B.
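A minimal 2-D RRT in plain Python follows the steps described above. It samples a point, finds the nearest tree node by Euclidean distance, steers toward the sample by a bounded step, and keeps the new node only if the segment is collision-free. The circular obstacles, step size, goal bias, and sampled collision check are simplifying assumptions, not details taken from the study.

```python
import math
import random

def rrt(start, goal, obstacles, bounds, step=1.0, goal_tol=1.0,
        max_iter=5000, goal_bias=0.1, seed=0):
    """Basic 2-D RRT. obstacles: list of (cx, cy, radius) circles.
    Returns the list of points from start to goal, or None if none is found."""
    rnd = random.Random(seed)
    (xmin, xmax), (ymin, ymax) = bounds

    def dist(a, b):  # Euclidean distance d(A, B)
        return math.hypot(a[0] - b[0], a[1] - b[1])

    def collision_free(a, b, checks=10):
        # Test evenly spaced points on segment a->b against every obstacle.
        for k in range(checks + 1):
            s = k / checks
            p = (a[0] + s * (b[0] - a[0]), a[1] + s * (b[1] - a[1]))
            if any(dist(p, (cx, cy)) <= r for cx, cy, r in obstacles):
                return False
        return True

    nodes, parent = [start], {0: None}
    for _ in range(max_iter):
        # 1. Sample a random point (occasionally the goal itself).
        if rnd.random() < goal_bias:
            sample = goal
        else:
            sample = (rnd.uniform(xmin, xmax), rnd.uniform(ymin, ymax))
        # 2. Find the nearest existing tree node.
        i_near = min(range(len(nodes)), key=lambda i: dist(nodes[i], sample))
        near = nodes[i_near]
        d = dist(near, sample)
        if d == 0:
            continue
        # 3. Steer from the nearest node toward the sample by at most `step`.
        s = min(step, d) / d
        new = (near[0] + s * (sample[0] - near[0]),
               near[1] + s * (sample[1] - near[1]))
        # 4. Add the new node only if the connecting segment is feasible.
        if not collision_free(near, new):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if dist(new, goal) <= goal_tol and collision_free(new, goal):
            nodes.append(goal)
            parent[len(nodes) - 1] = len(nodes) - 2
            # Walk back from the goal to the root to recover the path.
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None

path = rrt(start=(0.0, 0.0), goal=(9.0, 9.0),
           obstacles=[(5.0, 5.0, 2.0)], bounds=((0.0, 10.0), (0.0, 10.0)))
print(len(path) if path else "no path found")
```

RRT is randomized, so the returned path is feasible but generally not the shortest one. The fixed seed only makes this example reproducible.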

In the automatic driving system of machine intelligence, the road environment information can be sensed and intelligently understood. Intelligent decisions can be made based on real-time road environment conditions, and the vehicle can be physically controlled to achieve safe, efficient, and autonomous driving.

2.3 Knowledge representation and knowledge base

The automatic driving system of traditional machine intelligence still has certain technical defects, which makes it difficult to make accurate decisions in the face of complex road environment. Technology transfer is the transfer of a certain technology, knowledge, or innovation from one field or organization to another for wider application or commercialization [30,31]. Through technology transfer, the knowledge about vehicle driving can be applied to the automatic driving system, thus improving the performance of the automatic driving.

Autonomous driving should follow traffic rules, and driving safety can be improved by learning from past traffic accident cases. The knowledge base therefore consists of a traffic regulations manual, a road sign database, and a traffic accident case database. Natural language processing is used to extract text information from the knowledge base. The extracted text is then converted into first-order logic, which is easier for machines to understand.

For example, this study converts the text stating that the speed limit on Interstate 405 is 105 km/h into SpeedLimit(Interstate 405, 105 km/h). The knowledge base can be updated periodically to keep its information current, and domain knowledge related to autonomous driving is linked by building a knowledge graph.
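A toy encoding of such first-order facts, with one rule that uses them, might look like the following. Only the SpeedLimit predicate comes from the text. The Fact tuple, the LaneMarking fact, and the violation rule are hypothetical illustrations, and the Broadway value is taken from Table 1.

```python
from typing import NamedTuple

class Fact(NamedTuple):
    predicate: str
    args: tuple

# Ground facts in the style of SpeedLimit(Interstate 405, 105 km/h).
KB = {
    Fact("SpeedLimit", ("Interstate 405", 105)),
    Fact("SpeedLimit", ("Broadway", 40)),
    Fact("LaneMarking", ("Interstate 405", "solid")),   # hypothetical predicate
}

def speed_limit(road):
    """Look up SpeedLimit(road, v); return None if the KB has no such fact."""
    for fact in KB:
        if fact.predicate == "SpeedLimit" and fact.args[0] == road:
            return fact.args[1]
    return None

def violates_speed_limit(road, speed_kmh):
    """Hypothetical rule: SpeedLimit(road, v) and speed > v  =>  Violation."""
    limit = speed_limit(road)
    return limit is not None and speed_kmh > limit

print(violates_speed_limit("Interstate 405", 110))  # True
print(violates_speed_limit("Broadway", 35))         # False
```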

The Inception model processes the perceived environment images and extracts high-level features for understanding traffic signs, road conditions, and other information in the environment. Knowledge of traffic regulations, road signs, and traffic accidents obtained from the data is represented in a form the model can understand, and a knowledge graph is constructed from it.

2.4 Reinforcement learning and knowledge integration

To relate all knowledge relevant to autonomous driving, this knowledge must be integrated. This article creates an interface through which the agent can quickly query the knowledge base. Given a query, the interface returns all knowledge related to its content.
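One way to picture the query interface is as a small triple store indexed by entity, where a query returns every stored fact that mentions the queried entity. The class name, relation names, and facts below are hypothetical. The study does not describe how its interface or knowledge graph is implemented.

```python
from collections import defaultdict

class KnowledgeGraph:
    """Toy triple store of (subject, relation, object) facts."""

    def __init__(self):
        self._by_entity = defaultdict(set)

    def add(self, subject, relation, obj):
        triple = (subject, relation, obj)
        # Index the triple under both endpoints so either one can be queried.
        self._by_entity[subject].add(triple)
        self._by_entity[obj].add(triple)

    def query(self, entity):
        """Return every stored triple mentioning `entity`, sorted for stable output."""
        return sorted(self._by_entity.get(entity, set()), key=str)

kg = KnowledgeGraph()
kg.add("Interstate 405", "hasSpeedLimitKmh", 105)
kg.add("Interstate 405", "hasIntersectionType", "Roundabout")
kg.add("StopSign", "requires", "FullStop")
kg.add("RearEndCollision", "avoidedBy", "KeepSafeDistance")

for triple in kg.query("Interstate 405"):
    print(triple)
```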

The state of the environment around the car, including vehicle speed, the positions of surrounding vehicles, and road sign information, is expressed in a machine-readable form. Specific actions can then be taken based on this environmental information and the related knowledge.

Setting the action space of an autonomous driving agent defines all the actions the agent can take. These are accelerating, braking, steering, changing lanes, keeping the lane, stopping, avoiding obstacles, emergency braking, using the lane-change signal, turning on the wipers, and adjusting the lights.
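The action space listed above maps naturally onto an enumeration, and the state onto a fixed-length feature vector. The eleven actions follow the text. The state fields, scaling, and three-neighbor padding are assumptions, because the study does not specify its state encoding.

```python
from dataclasses import dataclass, field
from enum import Enum, auto

class Action(Enum):
    """The 11 agent actions listed in the text."""
    ACCELERATE = auto()
    BRAKE = auto()
    STEER = auto()
    CHANGE_LANE = auto()
    KEEP_LANE = auto()
    STOP = auto()
    AVOID_OBSTACLE = auto()
    EMERGENCY_BRAKE = auto()
    TURN_SIGNAL = auto()      # lane-change signal
    WIPERS_ON = auto()
    ADJUST_LIGHTS = auto()

@dataclass
class State:
    """Hypothetical state encoding; the study does not specify its features."""
    speed_kmh: float
    speed_limit_kmh: float
    # Relative (dx, dy) positions of nearby vehicles, in metres.
    neighbors: list = field(default_factory=list)
    red_light_ahead: bool = False

    def to_vector(self, max_neighbors=3):
        """Flatten to a fixed-length list, zero-padding absent neighbors."""
        vec = [self.speed_kmh / 100.0, self.speed_limit_kmh / 100.0,
               float(self.red_light_ahead)]
        padded = (self.neighbors + [(0.0, 0.0)] * max_neighbors)[:max_neighbors]
        for dx, dy in padded:
            vec.extend([dx, dy])
        return vec

s = State(speed_kmh=95, speed_limit_kmh=105, neighbors=[(12.0, 0.0)])
print(len(Action), len(s.to_vector()))  # 11 9
```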

Autonomous driving is a process of continuously learning, improving, and optimizing driving performance. A reward function applied to data collected while driving on the road can strengthen the learning ability of the autonomous driving system. In autonomous driving, the reward is usually based on the agent's behavior: whether it follows traffic rules, whether it conflicts with other vehicles, whether it keeps a proper distance, and so on. Behavior that improves driving performance is rewarded, and behavior that degrades it is penalized.

The reward mechanism covers driving safety, driving efficiency, and compliance with traffic rules. Safe behaviors such as avoiding collisions, not speeding, and not running red lights earn positive rewards. Following traffic rules, maintaining traffic flow, making efficient lane changes, parking, yielding to pedestrians, and not driving the wrong way also earn positive rewards. These incentives encourage agents to adopt safe behaviors and improve the safety of automated driving.
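A reward function along these lines can be sketched as a sum of event-based bonuses and penalties plus a small progress term. The event names and weights are hypothetical. The text says which behaviors are rewarded or penalized but gives no numeric values.

```python
# Hypothetical reward weights; the study publishes no numeric values.
WEIGHTS = {
    "collision": -100.0,
    "ran_red_light": -50.0,
    "wrong_way": -50.0,
    "speeding": -10.0,
    "yielded_to_pedestrian": 5.0,
    "efficient_lane_change": 2.0,
}
PROGRESS_PER_M = 0.01  # small bonus for keeping traffic flowing

def reward(events, metres_travelled):
    """Reward for one time step.

    events: set of event names (keys of WEIGHTS) observed during the step.
    """
    r = PROGRESS_PER_M * metres_travelled
    for name in events:
        r += WEIGHTS[name]
    return r

print(reward({"yielded_to_pedestrian"}, 10.0))
print(reward({"collision"}, 10.0))
```

Making the collision penalty much larger than any per-step bonus is a common way to keep an agent from trading safety for progress.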

The reward mechanism is designed around the safety, efficiency, and rule compliance of the autonomous driving system. Its goal is to guide autonomous driving agents toward safe, efficient, and compliant behavior through positive rewards and penalties.

The current environment state of the autonomous vehicle is input to the DQN model. The output layer has one unit per available action, so the network outputs the estimated Q value of each action.
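The relationship between the action space and the DQN output layer can be sketched with a small NumPy Q-network. The output has one unit per action, and the agent picks the action with the highest Q value. The layer sizes, ReLU hidden layer, random weights, and epsilon-greedy exploration are assumptions, because the text does not describe the network architecture or exploration policy.

```python
import numpy as np

N_STATE, N_HIDDEN, N_ACTIONS = 9, 32, 11   # 11 = size of the action space

rng = np.random.default_rng(0)
params = {
    "W1": rng.normal(0, 0.1, (N_STATE, N_HIDDEN)), "b1": np.zeros(N_HIDDEN),
    "W2": rng.normal(0, 0.1, (N_HIDDEN, N_ACTIONS)), "b2": np.zeros(N_ACTIONS),
}

def q_values(state, p=params):
    """Forward pass: one output unit per action, each an estimate of Q(s, a)."""
    h = np.maximum(0.0, state @ p["W1"] + p["b1"])   # ReLU hidden layer
    return h @ p["W2"] + p["b2"]

def select_action(state, epsilon, rng=rng):
    """Epsilon-greedy: explore with probability epsilon, otherwise take argmax Q."""
    if rng.random() < epsilon:
        return int(rng.integers(N_ACTIONS))
    return int(np.argmax(q_values(state)))

state = rng.normal(size=N_STATE)
print(q_values(state).shape, select_action(state, epsilon=0.1))
```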

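The mean squared error is selected as the loss function of the DQN model to measure the difference between the predicted Q value and the target Q value [32,33]. The mean squared error is expressed as

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2,$$

where n represents the total number of samples, $y_i$ is the target Q value, and $\hat{y}_i$ is the predicted Q value of the i-th sample.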
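In the standard DQN formulation, the target Q value for a transition is the reward plus the discounted maximum Q value of the next state, usually computed with a separate target network. The mean squared error then compares this target with the predicted Q value of the action taken. The study does not state its discount factor or whether it uses a target network, so gamma and the numbers below are illustrative.

```python
import numpy as np

def dqn_targets(rewards, next_q, dones, gamma=0.99):
    """y = r + gamma * max_a' Q_target(s', a'); no bootstrap term when done = 1."""
    return rewards + gamma * (1.0 - dones) * next_q.max(axis=1)

def mse(pred, target):
    """Mean squared error between predicted and target Q values."""
    return float(np.mean((target - pred) ** 2))

rewards = np.array([1.0, -100.0])
dones = np.array([0.0, 1.0])          # second transition ended in a collision
next_q = np.array([[2.0, 3.0],        # Q_target(s', a') for each next state
                   [5.0, 4.0]])
pred = np.array([3.5, -90.0])         # Q(s, a) for the actions actually taken
y = dqn_targets(rewards, next_q, dones, gamma=0.9)
print(y, mse(pred, y))
```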

In this study, the Adam optimizer is used to train the DQN model. This optimizes the autonomous driving system's ability to analyze driving-related knowledge together with the driving environment and gradually realizes the transformation from machine intelligence to knowledge intelligence.

2.5 Autonomous driving multi-agent model

The autonomous driving multi-agent model is a computational model used to simulate and optimize the driving of autonomous vehicles in multi-vehicle traffic environments, and comprehensively analyze the road environment and related driving knowledge. Vehicles can operate safely and efficiently in complex traffic scenarios through the collaboration of multiple agents.

The autonomous driving multi-agent model includes several virtual autonomous vehicle agents, each of which has the ability of perception, understanding, and decision-making. The autonomous driving system in the real world can be simulated through the cooperation of various agents, and the agents share information and cooperate through communication protocols [34,35].

3 Experimental evaluation

3.1 Setting traffic situation

Knowledge fusion autonomous driving multi-agents can quickly perceive the surrounding environment through multiple intelligent agents and combine this perception with relevant driving knowledge to choose feasible driving behaviors. To analyze these multi-agents effectively, the experiment simulates various traffic situations in the virtual environment and evaluates the multi-agents' performance in them.

The virtual environment admits too many possible traffic scenarios to test exhaustively. The baseline traffic situation P1 is therefore initialized with labels A1, B1, C1, D1, E1, and F1. Each further situation is generated by changing exactly one of the six environment categories (road, traffic, terrain, weather, traffic sign, and intersection) while keeping the others at their baseline values.

Starting from P1, changing the road environment to highway, backroad, and mountainous road yields situations PA2, PA3, and PA4, respectively. Changing the traffic environment to a free circulation road and a construction area yields PB2 and PB3. Analogously, situations PC2, PD2, PD3, PD4, PE2, PF2, and PF3 are generated, giving 13 situations in total including P1.
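The one-factor-at-a-time design can be written down directly from the labels in Table 2. Start from the baseline P1 and, for each category, substitute each non-baseline label in turn. The dictionary layout and function name are illustrative; only the labels and the naming scheme come from the text.

```python
# Environment catalog from Table 2: category -> labels, baseline label first.
CATALOG = {
    "A": ["A1", "A2", "A3", "A4"],  # road
    "B": ["B1", "B2", "B3"],        # traffic
    "C": ["C1", "C2"],              # terrain
    "D": ["D1", "D2", "D3", "D4"],  # weather
    "E": ["E1", "E2"],              # traffic sign
    "F": ["F1", "F2", "F3"],        # intersection
}

def one_factor_scenarios(catalog):
    """Baseline P1 plus one scenario per non-baseline label, one category at a time."""
    baseline = {cat: labels[0] for cat, labels in catalog.items()}
    scenarios = {"P1": baseline}
    for cat, labels in catalog.items():
        for label in labels[1:]:
            variant = dict(baseline)
            variant[cat] = label
            scenarios["P" + label] = variant   # e.g. "PA2", "PD3"
    return scenarios

scenarios = one_factor_scenarios(CATALOG)
print(len(scenarios))  # 13
print(scenarios["PD3"])
```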

The situational information about the road environment set in the virtual environment is shown in Table 3.

Contextual information about the road environment

| Scenario | Content | Label |
|---|---|---|
| P1 | Urban road | A1 |
| | Congestion road | B1 |
| | Flat road | C1 |
| | Sunny road | D1 |
| | Clear sign | E1 |
| | Crossroads | F1 |
| PA2 | Highway | A2 |
| | Congestion road | B1 |
| | Flat road | C1 |
| | Sunny road | D1 |
| | Clear sign | E1 |
| | Crossroads | F1 |
| PA3 | Backroad | A3 |
| | Congestion road | B1 |
| | Flat road | C1 |
| | Sunny road | D1 |
| | Clear sign | E1 |
| | Crossroads | F1 |
| PA4 | Mountainous road | A4 |
| | Congestion road | B1 |
| | Flat road | C1 |
| | Sunny road | D1 |
| | Clear sign | E1 |
| | Crossroads | F1 |

Table 3 lists the situational information for scenarios P1, PA2, PA3, and PA4. Observing autonomous driving performance in different situations shows how the transformation to knowledge intelligence affects autonomous driving performance.

3.2 Evaluation of automatic driving performance

To evaluate the knowledge fusion autonomous driving multi-agent system effectively, it is compared with a traditional machine intelligence autonomous driving system. The traditional system uses a backpropagation neural network model to analyze road environment data and determine the car's movement.

The knowledge fusion autonomous driving multi-agent system builds on traditional machine intelligence by introducing driving knowledge, integrating knowledge of traffic regulations, road signs, and traffic accident cases. Training with the reward mechanism and the DQN model improves the system's ability to analyze its surroundings comprehensively.

To analyze the performance of knowledge fusion autonomous driving multi-agents comprehensively, this article sets up a variety of driving scenarios. It then evaluates the safety, efficiency, feasibility, environmental perception accuracy, and resource utilization of autonomous driving in these simulated situations.

Safety is the first requirement of autonomous driving, so the numbers of accidents and violations per 100,000 km are recorded in simulated environment P1. Accidents are defined as vehicle collisions, including vehicle-to-vehicle, vehicle-to-guardrail, and vehicle-to-pedestrian collisions. Violations are breaches of traffic rules, including running red lights and not driving at the specified speed.
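The per-100,000 km metrics are normalizations of event counts by the distance driven. The sketch below assumes a simulation log of typed events. The event names and the example log are hypothetical.

```python
from collections import Counter

# Hypothetical event names for the collision and violation types listed above.
ACCIDENT_TYPES = ("vehicle_vehicle", "vehicle_guardrail", "vehicle_pedestrian")
VIOLATION_TYPES = ("red_light", "wrong_speed")

def safety_rates(events, distance_km):
    """Return (accidents, violations) per 100,000 km from a simulation event log."""
    if distance_km <= 0:
        raise ValueError("distance_km must be positive")
    counts = Counter(events)
    accidents = sum(counts[t] for t in ACCIDENT_TYPES)
    violations = sum(counts[t] for t in VIOLATION_TYPES)
    scale = 100_000 / distance_km
    return accidents * scale, violations * scale

log = ["vehicle_vehicle", "red_light", "wrong_speed", "vehicle_pedestrian", "red_light"]
print(safety_rates(log, distance_km=200_000))  # (1.0, 1.5)
```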

Traffic intersections are complex driving environments. This study therefore set up three intersection environments, P1, PF2, and PF3, and recorded the average driving speed of vehicles in each. Weather also affects driving efficiency, so virtual environments P1, PD2, PD3, and PD4 were set up to record the average driving speed of autonomous vehicles under different weather conditions.

An autonomous driving system relies on perceiving the external environment, and accurate identification of traffic signs and road obstacles provides the information needed for driving. Traditional machine intelligence autonomous driving perceives the environment with the Inception model. Knowledge fusion autonomous driving additionally builds a traffic knowledge base on top of the Inception model and perceives the environment by combining knowledge representation with image recognition.

Perception results can be divided into four categories by comparing predicted and actual labels. TP (true positive) means the environment is recognized as positive and is actually positive. FP (false positive) means it is recognized as positive but is actually negative. FN (false negative) means it is recognized as negative but is actually positive. TN (true negative) means it is recognized as negative and is actually negative.

Environmental perception performance is evaluated comprehensively by accuracy, precision, recall, and F1 value. Accuracy is the proportion of all samples that are classified correctly:

$$\mathrm{Accuracy} = \frac{TP + TN}{N}, \tag{10}$$

where N = TP + FP + FN + TN represents the total number of samples.

Precision measures the proportion of samples predicted as positive that are actually positive, i.e., the accuracy of the model's positive predictions:

$$\mathrm{Precision} = \frac{TP}{TP + FP}. \tag{11}$$

Recall measures the proportion of actual positive samples that the model predicts correctly, i.e., the model's ability to identify positive examples:

$$\mathrm{Recall} = \frac{TP}{TP + FN}. \tag{12}$$

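The F1 value is the harmonic mean of the precision and recall given by formulas (11) and (12):

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}. \tag{13}$$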
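The metric definitions translate directly into code. The sketch below is a plain-Python illustration with hypothetical function names; the `f1_from` helper can also be used to check that the F1 values in Table 4 follow from the reported precision and recall.

```python
def _ratio(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return _ratio(2 * precision * recall, precision + recall)


def perception_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    n = tp + fp + fn + tn
    precision = _ratio(tp, tp + fp)  # formula (11)
    recall = _ratio(tp, tp + fn)     # formula (12)
    return {
        "accuracy": _ratio(tp + tn, n),
        "precision": precision,
        "recall": recall,
        "f1": f1_from(precision, recall),
    }


metrics = perception_metrics(tp=2, fp=1, fn=1, tn=2)
print({name: round(value, 3) for name, value in metrics.items()})

# Cross-check against Table 4 (precision and recall as fractions).
print(round(f1_from(0.884, 0.855), 3), round(f1_from(0.982, 0.976), 3))  # 0.869 0.979
```

The zero-denominator guard is a design choice for the sketch: a model that never predicts a positive gets a precision of 0 rather than raising an error.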

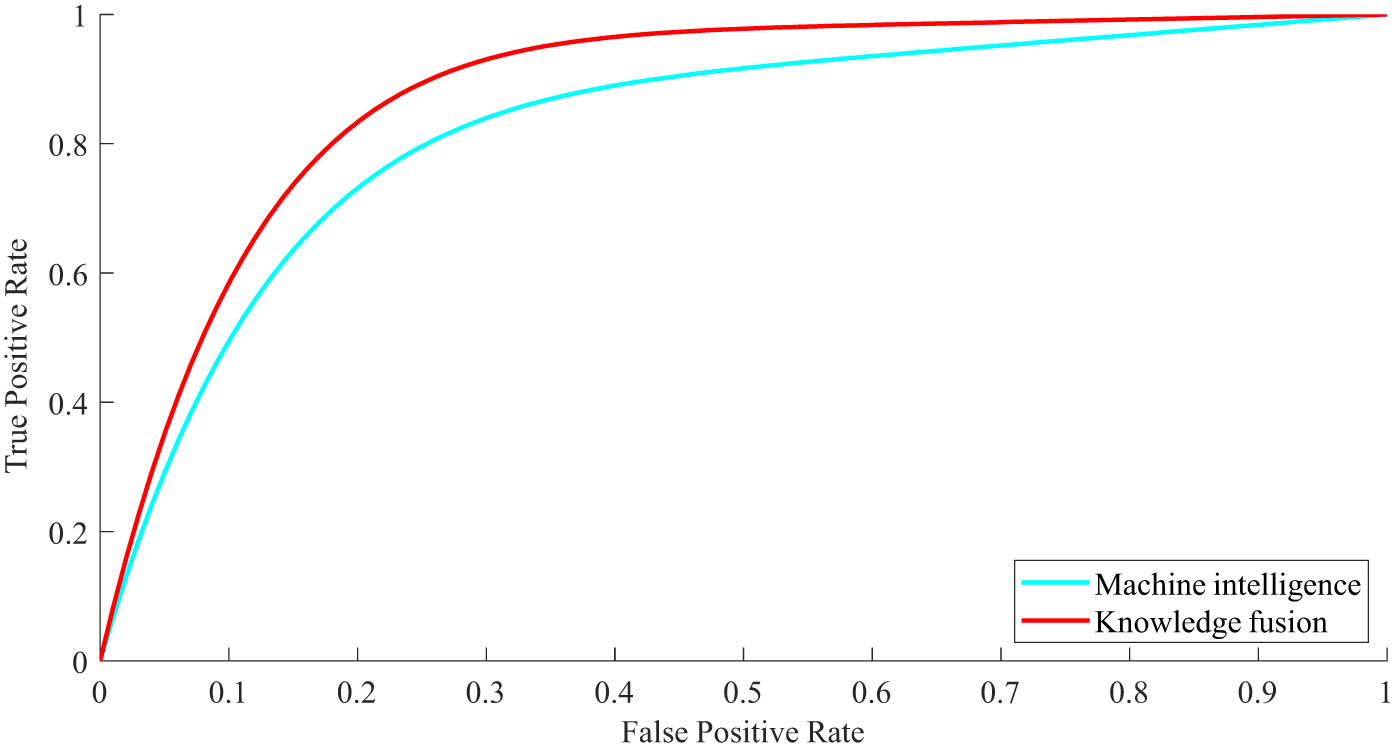

To show the environment perception ability of machine intelligence automatic driving and knowledge fusion automatic driving more intuitively, receiver operating characteristic (ROC) curves were drawn to compare the environment perception performance of the two systems.
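An ROC curve is obtained by sweeping a decision threshold over the classifier's confidence scores and recording the false-positive rate and true-positive rate at each step; the area under the curve (AUC) can then be computed with the trapezoidal rule. A minimal sketch, assuming each perception output carries a confidence score; the scores and labels below are made up for illustration.

```python
def roc_points(scores: list[float], labels: list[bool]) -> list[tuple[float, float]]:
    """(FPR, TPR) points from sweeping a threshold from high to low scores."""
    pos = sum(1 for y in labels if y)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points: list[tuple[float, float]]) -> float:
    """Area under a piecewise-linear curve via the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [True, True, False, True, False, False]
curve = roc_points(scores, labels)
print(f"AUC = {auc(curve):.3f}")
```

For this sample the area is 8/9, while a classifier that gives every sample the same score produces the diagonal from (0, 0) to (1, 1), with an area of 0.5.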

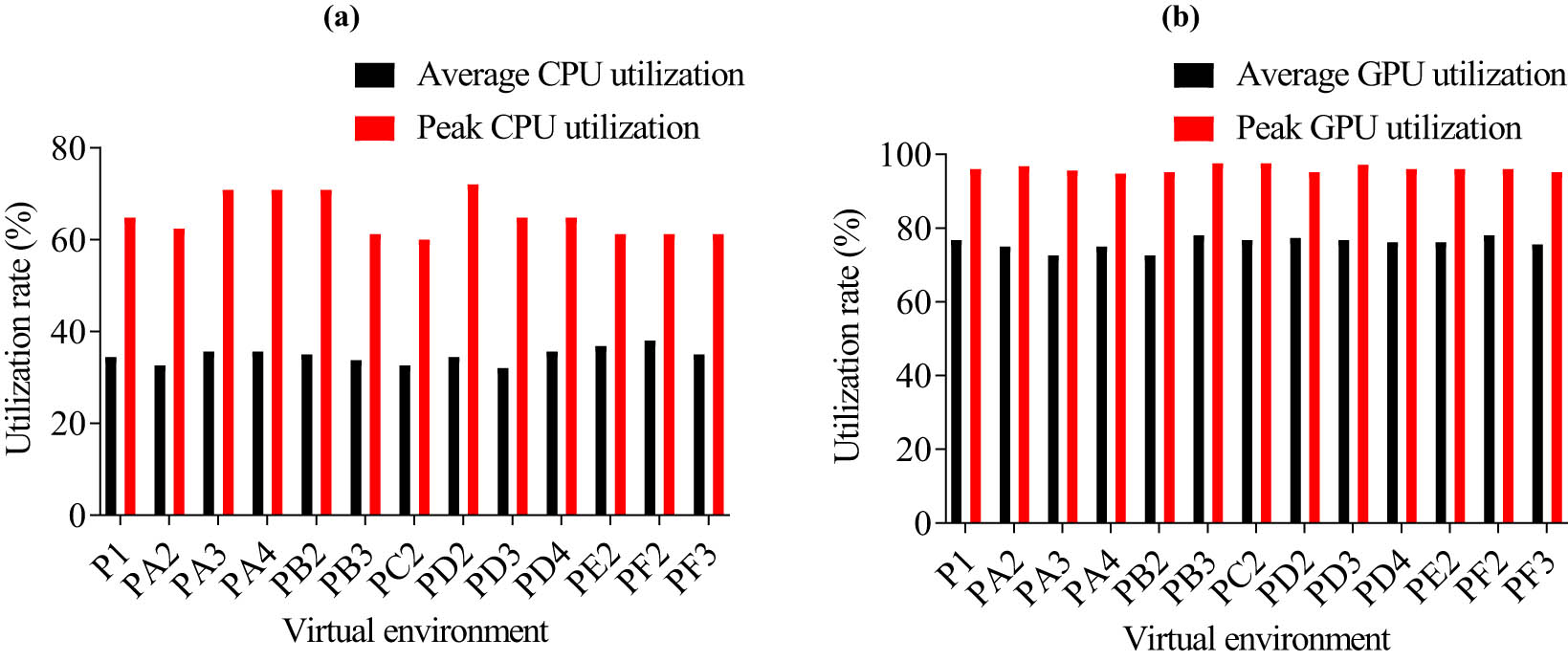

To analyze the performance of the knowledge fusion autonomous driving system fully, its resource utilization in the simulated environment was measured. The CPU is a key component that performs the various computing tasks of an autonomous driving system, and CPU utilization indicates the extent to which the CPU's computing power is used while the autonomous driving application runs. GPUs are commonly used in autonomous driving systems for image processing, deep learning inference, and other computationally intensive tasks, and GPU utilization indicates the extent to which the GPU is used while the application runs. Resource utilization was measured in 13 simulated environments: P1, PA2, PA3, PA4, PB2, PB3, PC2, PD2, PD3, PD4, PE2, PF2, and PF3.

4 Results

4.1 Safety

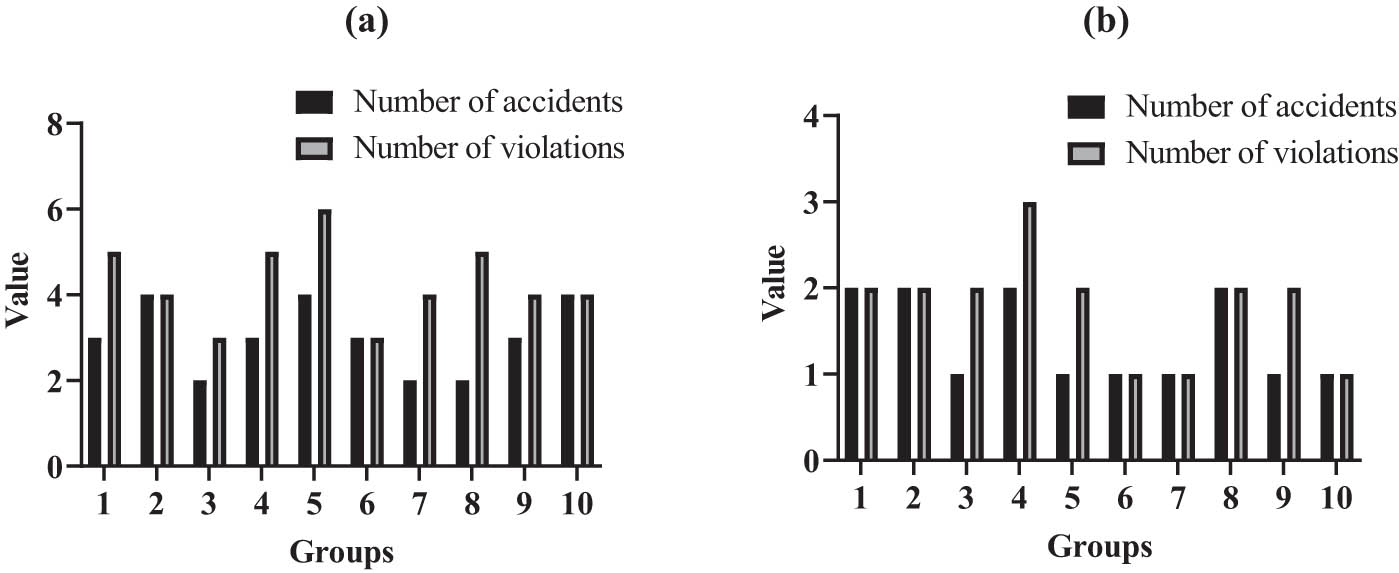

The simulated environment P1 combines urban, congested, flat, sunny, and clearly marked roads with an intersection. The safety performance of the autonomous vehicles was recorded in P1, and the numbers of accidents and violations per 100,000 km are shown in Figure 1.

Safety results: (a) safety of automated driving with traditional machine intelligence and (b) safety of knowledge-integrated autonomous driving.

Figure 1(a) shows the safety of automated driving with traditional machine intelligence. The numbers of accidents and violations per 100,000 km were measured in the P1 environment, and the horizontal axis indexes the 10 test groups. Traditional machine intelligence autonomous vehicles were involved in both accidents and violations over 100,000 km, averaging 3 accidents and 4.3 violations per 100,000 km. Figure 1(b) shows the safety of knowledge fusion autonomous driving, again over 10 test groups. In the 100,000-km tests in the P1 virtual environment, knowledge fusion autonomous driving had fewer accidents and violations than traditional machine intelligence autonomous driving, averaging 1.4 accidents and 1.8 violations per 100,000 km. When perceiving its surroundings, the knowledge fusion system combines the content of its driving knowledge base with the results of road environment analysis to make driving decisions. Knowledge fusion automatic driving can therefore effectively reduce the number of accidents and violations and improve the safety of automatic driving.
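The size of the improvement follows directly from the reported averages. This short check uses only the means stated above (3 vs 1.4 accidents and 4.3 vs 1.8 violations per 100,000 km); the helper name is illustrative.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction by which `improved` is lower than `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (baseline - improved) / baseline


# Mean counts per 100,000 km in P1, as reported in Section 4.1:
# (traditional machine intelligence, knowledge fusion).
reported = {"accidents": (3.0, 1.4), "violations": (4.3, 1.8)}

for name, (traditional, fusion) in reported.items():
    print(f"{name}: {relative_reduction(traditional, fusion):.1%} fewer")
```

On these averages, knowledge fusion corresponds to roughly 53% fewer accidents and 58% fewer violations per 100,000 km.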

4.2 Driving efficiency

Autonomous driving means navigating and driving without human intervention, and efficient driving planning can improve road capacity and reduce traffic congestion. This study analyzes the driving efficiency of automatic driving at different traffic intersections and under different weather conditions.

4.2.1 Traffic efficiency at different traffic intersections

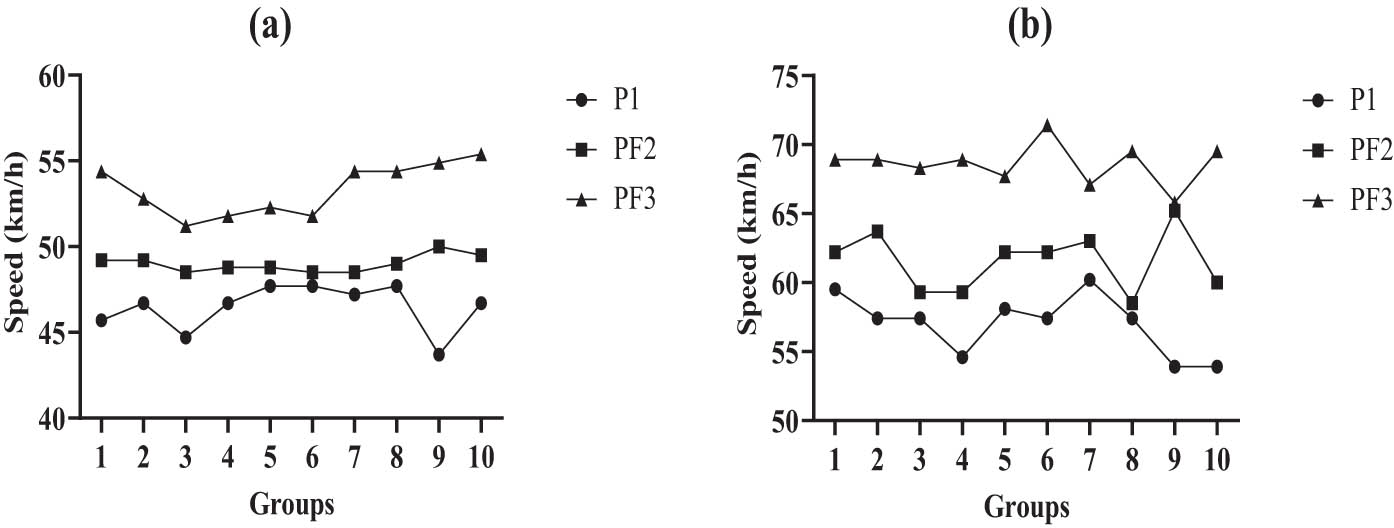

The virtual environment includes three intersection types: crossroads (P1), a roundabout (PF2), and a T-intersection (PF3). The average speed of the autonomous vehicle is used as the measure of driving efficiency, and the results for the different intersections are shown in Figure 2.

Traffic efficiency at different traffic intersections: (a) driving efficiency of traditional automatic driving at different traffic intersections and (b) driving efficiency of knowledge fusion automatic driving at different traffic intersections.

Figure 2(a) shows the driving efficiency of traditional machine intelligence autonomous driving at the three intersection types: crossroads, roundabout, and T-intersection. Because P1, PF2, and PF3 are all urban road environments, driving speeds are moderate. Speeds differ across the intersection environments, mainly because of their different traffic complexity. In group 10, the average driving speeds in P1, PF2, and PF3 were 46.7, 49.5, and 55.4 km/h, respectively. Figure 2(b) shows the driving efficiency of knowledge-integrated automatic driving at the same intersections; its driving speed is higher than that of traditional automatic driving at all three. This is mainly because knowledge fusion automatic driving can integrate a variety of driving knowledge to make more intelligent driving decisions and thus drive faster. In group 10, the average driving speeds in P1, PF2, and PF3 were 53.9, 60.0, and 69.5 km/h, respectively. Knowledge fusion automatic driving therefore improves driving efficiency at different types of traffic intersections.
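The group-10 speeds can be expressed as relative gains. This sketch uses only the values reported above; the dictionary layout and function name are illustrative choices.

```python
def percent_gain(baseline: float, improved: float) -> float:
    """Percentage increase of `improved` over `baseline`."""
    return 100.0 * (improved - baseline) / baseline


# Group-10 average speeds (km/h) from Section 4.2.1:
# (traditional machine intelligence, knowledge fusion).
speeds = {"P1": (46.7, 53.9), "PF2": (49.5, 60.0), "PF3": (55.4, 69.5)}

gains = {env: percent_gain(trad, fusion) for env, (trad, fusion) in speeds.items()}
for env, gain in gains.items():
    print(f"{env}: +{gain:.1f}%")
```

On these values the gain is about 15.4% in P1, 21.2% in PF2, and 25.5% in PF3.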

4.2.2 Driving efficiency under different weather conditions

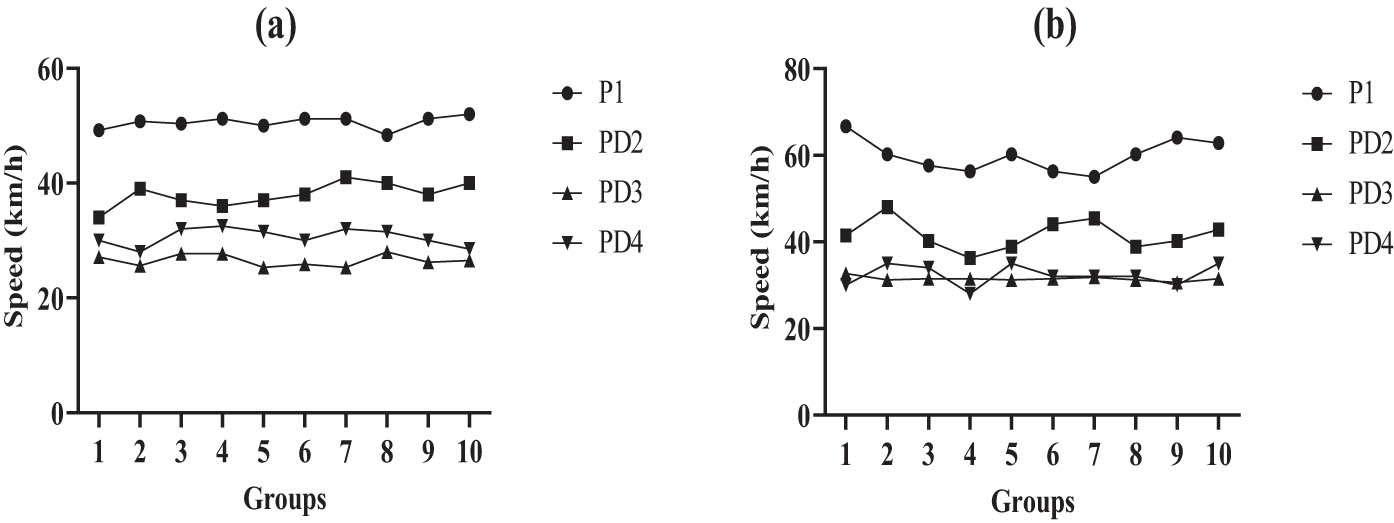

The virtual environments P1, PD2, PD3, and PD4 represent sunny, rainy, snowy and icy, and foggy roads, respectively. The driving efficiency results under these weather conditions are shown in Figure 3.

Driving efficiency under different weather conditions: (a) driving efficiency of traditional automatic driving under different weather conditions and (b) driving efficiency of knowledge fusion automatic driving under different weather conditions.

Figure 3(a) shows the driving efficiency of traditional machine intelligence autonomous driving under different weather conditions, with driving speed calculated on sunny, rainy, snowy and icy, and foggy roads. Road friction varies with the weather, so self-driving cars must adjust their speed to the conditions to stay safe. Driving speed is highest on sunny days, mainly because of the wide field of view and the high friction coefficient of the road surface. In group 10, the average driving speeds in P1, PD2, PD3, and PD4 were 52.0, 40.0, 26.5, and 28.5 km/h, respectively. Figure 3(b) shows the driving efficiency of knowledge fusion automatic driving under the same conditions; its driving speed is generally higher than that of traditional automatic driving. In group 10, the average driving speeds in P1, PD2, PD3, and PD4 were 62.8, 42.8, 31.5, and 35.0 km/h, respectively. Knowledge fusion automatic driving therefore improves driving efficiency under different weather conditions.
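The link between friction, visibility, and safe speed can be made concrete with a standard stopping-distance model, d = v t_r + v^2 / (2 μ g), where t_r is the reaction time and μ the tyre-road friction coefficient. This is an illustrative assumption, not the vehicle model used in the study: the friction coefficients, sight distances, and 0.5 s reaction time below are hypothetical values chosen only to show the trend.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance_m(speed_kmh: float, mu: float, reaction_s: float) -> float:
    """Reaction distance plus braking distance on a surface with friction mu."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_s + v * v / (2 * mu * G)


def max_safe_speed_kmh(sight_m: float, mu: float, reaction_s: float) -> float:
    """Largest speed whose stopping distance fits within the sight distance.

    Solves v*t + v^2 / (2*mu*g) = d for the positive root v.
    """
    a = mu * G  # maximum braking deceleration, m/s^2
    v = -a * reaction_s + math.sqrt((a * reaction_s) ** 2 + 2 * a * sight_m)
    return v * 3.6  # m/s -> km/h


# Hypothetical (friction coefficient, sight distance in m) per weather type.
conditions = {
    "sunny": (0.7, 80.0),
    "rain": (0.4, 60.0),
    "snow/ice": (0.15, 60.0),
    "fog": (0.6, 25.0),
}
for weather, (mu, sight) in conditions.items():
    print(f"{weather}: {max_safe_speed_kmh(sight, mu, reaction_s=0.5):.0f} km/h")
```

Lower friction lengthens the braking term and shorter visibility shrinks the available distance; either one lowers the speed at which the vehicle can still stop in time.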

4.3 Environmental perception performance

The environmental perception accuracy rate, precision rate, recall rate, and F1 value of traditional machine intelligence automatic driving and knowledge fusion automatic driving are shown in Table 4.

Environmental perception performance

| Metric | Machine intelligence | Knowledge fusion |
|---|---|---|
| Accuracy (%) | 87.2 | 96.8 |
| Precision (%) | 88.4 | 98.2 |
| Recall (%) | 85.5 | 97.6 |
| F1 value (%) | 86.9 | 97.9 |

Table 4 compares the environmental perception performance of traditional machine intelligence automatic driving and knowledge fusion automatic driving. Knowledge fusion automatic driving scores higher on accuracy, precision, recall, and F1 value, mainly because it can jointly analyze information on traffic regulations, road signs, and traffic accidents. The environmental perception accuracy of traditional machine intelligence and knowledge fusion autonomous driving was 87.2 and 96.8%, respectively. The shift from machine intelligence to knowledge intelligence can therefore effectively improve the environmental perception performance of autonomous driving systems.

To compare the environmental perception of the two automatic driving systems visually, their ROC curves are shown in Figure 4.

ROC curve of environment perception.

Figure 4 shows the ROC curves of environmental perception. The horizontal axis is the false-positive rate, FPR = FP/(FP + TN), and the vertical axis is the true-positive rate, TPR = TP/(TP + FN), which equals recall. The larger the area under the curve, the better the perception performance. The area under the ROC curve of knowledge fusion automatic driving is clearly larger than that of traditional machine intelligence automatic driving. Both curves lie above the diagonal of a random classifier, but knowledge fusion autonomous driving performs better: its curve lies closer to the point (0, 1), indicating better environmental perception and more accurate identification of traffic signs and road obstacles in complex driving environments.

4.4 Resource utilization results

The resource utilization of the knowledge fusion automatic driving system is analyzed, and the utilization of CPU and GPU is shown in Figure 5.

Resource utilization: (a) CPU utilization and (b) GPU utilization.

Figure 5(a) shows CPU utilization, with the 13 virtual environments on the horizontal axis; the average and peak CPU utilization were recorded for each environment. CPU utilization of the knowledge fusion autonomous driving system varies across the virtual driving environments, mainly because their computational complexity differs. Across the 13 virtual environments, the average CPU utilization was 34.7% and the average peak CPU utilization was 65.1%. Figure 5(b) shows GPU utilization, which is high: the system makes good use of GPU resources, which improves the computing efficiency of automatic driving. Across the 13 virtual environments, the average GPU utilization was 75.9% and the average peak GPU utilization was 96.1%. The knowledge fusion automatic driving system therefore makes effective use of both CPU and GPU resources.
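Average and peak utilization can be summarized from periodic utilization samples. A minimal sketch, assuming samples (in percent) were logged per environment and that "average peak" means the mean of the per-environment peaks; the three environments and their sample values are hypothetical.

```python
from statistics import mean


def summarize_utilization(samples_by_env: dict[str, list[float]]):
    """Per-environment (mean, peak) plus the average of each across environments."""
    per_env = {env: (mean(s), max(s)) for env, s in samples_by_env.items()}
    avg_utilization = mean(m for m, _ in per_env.values())
    avg_peak = mean(p for _, p in per_env.values())
    return per_env, avg_utilization, avg_peak


# Hypothetical GPU utilization samples (%) for three environments.
samples = {
    "P1": [70.0, 82.0, 95.0],
    "PD3": [65.0, 80.0, 97.0],
    "PF2": [72.0, 78.0, 90.0],
}
per_env, avg_util, avg_peak = summarize_utilization(samples)
print(f"average utilization: {avg_util:.1f}%, average peak: {avg_peak:.1f}%")
```

Averaging per environment first gives each environment equal weight, even if some runs were sampled more often than others.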

5 Conclusions

Autonomous driving is an important field of multi-agent research. It involves sensing the environment and making driving decisions based on it in order to cope with complex road conditions. This study constructed a virtual driving environment, used the Inception model to recognize the collected road images, represented information on traffic regulations, road signs, and traffic accidents, and constructed a knowledge map. Autonomous driving was then realized through knowledge fusion and reinforcement learning. A variety of virtual environments were set up to analyze the performance of knowledge fusion autonomous driving multi-agents. The experimental results show that knowledge fusion effectively reduces the number of accidents and violations, improves driving efficiency and environmental perception, and achieves high resource utilization. The shift from machine intelligence to knowledge intelligence can give multi-agents stronger logical reasoning, inference, and problem-solving abilities. However, this study analyzed only autonomous driving systems, so its analysis of multi-agent systems is limited; studying a broader range of knowledge-intelligent multi-agents is a direction for future research. This article emphasizes the importance of knowledge intelligence, and future research could extend it beyond autonomous driving to fields such as medicine, education, and finance to improve the understanding and decision-making ability of systems there.

Funding information: This study was supported by the Educational Science Planning Project of Guangdong Province in 2023 (Higher Education Special) (No. 2023GXJK882).

Author contribution: The author confirms the sole responsibility for the conception of the study, presented results and manuscript preparation.

Conflict of interest: The author declares that there is no conflict of interest regarding the publication of this article.

Data availability statement: The data used to support the findings of this study are available from the corresponding author upon request.

References

[1] Pavlo A, Butrovich M, Ma L, Menon P, Lim WS, Van Aken D, et al. Make your database system dream of electric sheep: Towards self-driving operation. Proc VLDB Endow. 2021;14(12):3211–21. 10.14778/3476311.3476411.

[2] Seifrid M, Pollice R, Aguilar-Granda A, Morgan Chan Z, Hotta K, Ser CT, et al. Autonomous chemical experiments: Challenges and perspectives on establishing a self-driving lab. Acc Chem Res. 2022;55(17):2454–66. 10.1021/acs.accounts.2c00220.

[3] Gwak J, Jung J, Oh R, Park M, Rakhimov MAK, Ahn J, et al. A review of intelligent self-driving vehicle software research. KSII Trans Internet Inf Syst (TIIS). 2019;13(11):5299–320. 10.3837/tiis.2019.11.002.

[4] Enrong P, Jiafan Y. The ethical framework of artificial intelligence for the technology itself-take the autonomous driving system as an example. Nat Dialectics Newsl. 2020;42(3):33–9.

[5] Goldenberg SL, Nir G, Salcudean SE. A new era: Artificial intelligence and machine learning in prostate cancer. Nat Rev Urol. 2019;16(7):391–403. 10.1038/s41585-019-0193-3.

[6] Amann J, Blasimme A, Vayena E, Frey D, Madai VI. Explainability for artificial intelligence in healthcare: a multidisciplinary perspective. BMC Med Inform Decis Mak. 2020;20(1):1–9. 10.1186/s12911-020-01332-6.

[7] Thomasian NM, Kamel IR, Bai HX. Machine intelligence in non-invasive endocrine cancer diagnostics. Nat Rev Endocrinol. 2022;18(2):81–95. 10.1038/s41574-021-00543-9.

[8] Shad R, Cunningham JP, Ashley EA, Langlotz CP, Hiesinger W. Designing clinically translatable artificial intelligence systems for high-dimensional medical imaging. Nat Mach Intell. 2021;3(11):929–35. 10.1038/s42256-021-00399-8.

[9] Miller DD. Machine intelligence in cardiovascular medicine. Cardiol Rev. 2020;28(2):53–64. 10.1097/CRD.0000000000000294.

[10] Manoharan S. An improved safety algorithm for artificial intelligence enabled processors in self driving cars. J Artif Intell Capsule Netw. 2019;1(2):95–104. 10.36548/jaicn.2019.2.005.

[11] Stilgoe J. Self-driving cars will take a while to get right. Nat Mach Intell. 2019;1(5):202–3. 10.1038/s42256-019-0046-z.

[12] Hong J-W, Wang Y, Lanz P. Why is artificial intelligence blamed more? Analysis of faulting artificial intelligence for self-driving car accidents in experimental settings. Int J Human–Computer Interact. 2020;36(18):1768–74. 10.1080/10447318.2020.1785693.

[13] Amirkhani A, Barshooi AH. Consensus in multi-agent systems: A review. Artif Intell Rev. 2022;55(5):3897–935. 10.1007/s10462-021-10097-x.

[14] Wang J, Hong Y, Wang J, Xu J, Tang Y, Han Q-L, et al. Cooperative and competitive multi-agent systems: From optimization to games. IEEE/CAA J Autom Sin. 2022;9(5):763–83. 10.1109/JAS.2022.105506.

[15] Gao C, He X, Dong H, Liu H, Lyu G. A survey on fault-tolerant consensus control of multi-agent systems: trends, methodologies and prospects. Int J Syst Sci. 2022;53(13):2800–13. 10.1080/00207721.2022.2056772.

[16] Bin Y, Qi Z, Liang C, Renquan L. Event trigger control of multi-intelligent system with specified performance and full state constraints. J Autom. 2019;45(8):1527–35.

[17] Jiayu C, Supeng L, Ke Z. Research on the collaborative decision-making mechanism of vehicle-linked multi-agent information fusion for autonomous driving applications. J Internet Things. 2020;4(3):69–77.

[18] Yu S, Lei C, Xiliang C, Zhixiong X, Jun L. Review of research on in-depth reinforcement learning of multi-agents. Computer Eng Appl. 2020;56(5):13–24. 10.1049/joe.2019.1200.

[19] Vishnu C, Abhinav V, Roy D, Mohan CK, Babu CS. Improving multi-agent trajectory prediction using traffic states on interactive driving scenarios. IEEE Robot Autom Lett. 2023;8(5):2708–15. 10.1109/LRA.2023.3258685.

[20] Grigorescu S, Trasnea B, Cocias T, Macesanu G. A survey of deep learning techniques for autonomous driving. J Field Robot. 2020;37(3):362–86. 10.1002/rob.21918.

[21] Fujiyoshi H, Hirakawa T, Yamashita T. Deep learning-based image recognition for autonomous driving. IATSS Res. 2019;43(4):244–52. 10.48550/arXiv.1811.11329.

[22] Montanaro U, Dixit S, Fallah S, Dianati M, Stevens A, Oxtoby D, et al. Towards connected autonomous driving: review of use-cases. Veh Syst Dyn. 2019;57(6):779–814. 10.1080/00423114.2018.1492142.

[23] Pisarov J. Autonomous driving. IPSI J IPSI BgD Trans Adv Res (TAR). 2021;17(2):19–27.

[24] Roy K, Jaiswal A, Panda P. Towards spike-based machine intelligence with neuromorphic computing. Nature. 2019;575(7784):607–17. 10.1038/s41586-019-1677-2.

[25] Rifaioglu AS, Atas H, Martin MJ, Cetin-Atalay R, Atalay V. Recent applications of deep learning and machine intelligence on in silico drug discovery: methods, tools and databases. Brief Bioinf. 2019;20(5):1878–912. 10.1093/bib/bby061.

[26] Fang C, Shang Y, Xu D. MUFOLD-SS: new deep inception-inside-inception networks for protein secondary structure prediction. Proteins: Struct Funct Bioinf. 2018;86(5):592–8. 10.1002/prot.25487.

[27] Stefenon SF, Yow K-C, Nied A, Meyer LH. Classification of distribution power grid structures using inception v3 deep neural network. Electr Eng. 2022;104(6):4557–69. 10.1007/s00202-022-01641-1.

[28] Jiang J, Hu G, Li X, Xu X, Zheng P, Stringer J. Analysis and prediction of printable bridge length in fused deposition modelling based on back propagation neural network. Virtual Phys Prototyp. 2019;14(3):253–66. 10.1080/17452759.2019.1576010.

[29] Rong X, Liu Y, Chen P, Lv X, Shen C, Yao B, et al. Prediction of creep of recycled aggregate concrete using back-propagation neural network and support vector machine. Struct Concr. 2023;24(2):2229–44. 10.1002/suco.202200469.

[30] Miller K, McAdam R, McAdam M. A systematic literature review of university technology transfer from a quadruple helix perspective: toward a research agenda. R&D Manag. 2018;48(1):7–24. 10.1111/radm.12228.

[31] Chais C, Patrícia Ganzer P, Munhoz Olea P. Technology transfer between universities and companies: Two cases of Brazilian universities. Innov Manag Rev. 2018;15(1):20–40. 10.1108/INMR-02-2018-002.

[32] Iqbal A, Tham M-L, Chang YC. Convolutional neural network-based deep Q-network (CNN-DQN) resource management in cloud radio access network. China Commun. 2022;19(10):129–42. 10.23919/JCC.2022.00.025.

[33] Gu Y, Zhu Z, Lv J, Shi L, Hou Z, Xu S, et al. DM-DQN: Dueling Munchausen deep Q network for robot path planning. Complex Intell Syst. 2023;9(4):4287–300. 10.1007/s40747-022-00948-7.

[34] Mascardi V, Weyns D, Ricci A, Earle CB, Casals A, Challenger M, et al. Engineering multi-agent systems: State of affairs and the road ahead. ACM SIGSOFT Softw Eng Notes. 2019;44(1):18–28. 10.1145/3310013.3322175.

[35] Changsheng Y, Ruopeng Y, Wei Z, Xiaofei Z, Feng L. Review of layered reinforcement learning of multi-agents. J Intell Syst. 2020;15(4):646–55.

© 2024 the author(s), published by De Gruyter

This work is licensed under the Creative Commons Attribution 4.0 International License.

