Abstract

In traditional landscape painting and art image restoration, conventional restoration methods have gradually revealed their limitations as technology has advanced. To improve the restoration of Chinese landscape paintings, this study designs an image restoration algorithm that combines edge restoration with generative adversarial networks (GANs). It also proposes an image restoration model with embedded multi-scale attention dilated convolution to better model the details and textures of landscape paintings, and it introduces a structural information-guided art image restoration model to better preserve the structural features of artistic images. Introducing adversarial networks into the restoration model improves the restoration results, and the multi-scale attention mechanism allows the model to handle more complex artworks. The results show that the proposed image quality detection model improves the Spearman rank-order correlation coefficient by 0.20, 0.07, and 0.06 compared with the JNB, CPBD, and MLV models, respectively. The proposed method outperforms mean filtering, wavelet denoising, and median filtering algorithms by 6.3, 9.1, and 15.8 dB in PSNR and by 0.06, 0.12, and 0.11 in the structural similarity index. In the landscape painting restoration task, the structural similarity and information entropy of the proposed model increase by approximately 9.3% and 3%, respectively. The proposed method helps preserve and restore precious cultural heritage, especially traditional Chinese landscape paintings, and provides new technical means for cultural relic restoration.

1 Introduction

As a precious heritage of traditional Chinese painting, landscape paintings have been passed down through generations and are regarded as pinnacle works of art. Over the years, however, time has inevitably caused these paintings to deteriorate and fade [1]. Art image restoration, as an essential means of art preservation and cultural inheritance, has therefore become a widely discussed topic [2]. Traditional restoration methods rely on the experience and skills of artists. When dealing with complex landscape paintings, however, these methods often struggle to meet restoration needs because of the complexity of the damage and the uniqueness of each artwork. Manual restoration is also susceptible to subjective factors, which can produce results inconsistent with the original style. Traditional methods further have difficulty handling the structural information of artworks, so the restored results may lack a deep understanding of the essence of the work, making it hard to recover the artistic value of the original piece [3]. Moreover, traditional restoration processes may introduce additional artifacts or traces, compromising the authenticity and credibility of the results. To address these issues, this study proposes an art image restoration method guided by structural information. By combining computer vision and image processing techniques with deep learning models of the intrinsic structure of artworks, the method aims to restore traditional landscape paintings while maintaining their original artistic styles. Accurate extraction and restoration of structural information is intended to make the restored results more faithful and authentic.
Additionally, this study explores how to integrate the experience of traditional artists with advanced technology so that the two reinforce each other in art image restoration, providing new perspectives and methods for the protection and inheritance of traditional artworks.

The research consists of four parts. The first part reviews related work on structural information guidance and image restoration methods. The second part designs an artistic image restoration model and algorithm that incorporate multi-scale attention dilated convolution and structural information guidance. The third part validates the multi-feature fusion image restoration technique and evaluates the performance of the Chinese landscape painting image restoration algorithms. The fourth part concludes the study.

2 Related works

Researchers have made significant progress in fields such as image restoration and multimodal image processing, providing robust technical support for improving accuracy and flexibility. To improve the accuracy of protein structure prediction, Baek et al. designed a three-track network that processes information at the one-dimensional sequence level, the two-dimensional distance map level, and the three-dimensional coordinate level, effectively integrating multi-level structural information [4]. To test whether Landsat-8 imagery and the weighted linear combination (WLC) method can effectively determine suitable planting areas for saffron, Zamani et al. used Landsat-8 imagery and WLC to weight the parameters and determine the importance of each region. They then analyzed the results to identify areas suitable for growing saffron and to classify and describe their suitability [5]. To establish a benchmark deep denoiser prior for plug-and-play image restoration, Zhang et al. trained a highly flexible and effective convolutional neural network (CNN) denoiser on a large dataset with appropriate loss functions. They embedded the deep denoiser prior as a module in a half-quadratic splitting-based iterative algorithm to solve various image restoration problems, such as deblurring, super-resolution, and demosaicking [6]. To tackle the general problems of multimodal image restoration and multimodal image fusion, Deng et al. proposed a deep CNN named CU-Net, which comprises a unique feature extraction module, a common feature preservation module, and an image reconstruction module; the structure of these modules is inspired by the MCSC model [7]. To apply a single method flexibly to multiple image restoration tasks, Jin et al. introduced a flexible deep CNN framework that fully exploits the frequency characteristics of different types of artifacts. A quality enhancement network uses residual and recursive learning to reduce artifacts with similar frequency characteristics, lateral connections transfer features between different frequency streams, and an aggregation network merges the outputs of these streams to further enhance the recovered image [8].

Other researchers have tackled image processing challenges by combining spectral and spatial characteristics in a unified paradigm and by introducing pyramid attention modules, offering diverse approaches to improving performance. Farmonov et al. used imagery from the new-generation DLR Earth Sensing Imaging Spectrometer (DESIS) to classify the main crop types in southeastern Hungary, applying factor analysis to reduce the dimensionality of the hyperspectral data cube. They then applied the wavelet transform to extract important features and combined it with a spectral attention CNN to improve the accuracy of crop type mapping [9]. To fully exploit self-similarity in images, Mei et al. introduced a pyramid attention module for image restoration that captures long-range feature correspondences from a multi-scale feature pyramid and serves as a versatile building block that can be flexibly integrated into various neural network architectures [10]. To address image noise, Smita et al. explored various CNN-based image denoising methods and evaluated different CNN models on datasets such as BSD-68 and Set-12 using the peak signal-to-noise ratio (PSNR); the PDNN model performed best, with higher PSNR values [11]. Wang et al. proposed an effective CNN for degraded underwater images, comprising two parallel branches: a transmission estimation network (T-network) and a global ambient light estimation network (A-network). They also developed an underwater image synthesis method to construct a training dataset simulating images captured in various underwater environments [12]. To reduce information redundancy in hyperspectral images, Esmaeili et al. adopted a supervised band selection method that uses a genetic algorithm (GA) with an embedded three-dimensional convolutional network (3D-CNN) to select the required number of bands. The embedded 3D-CNN (CNNeGA) serves as the fitness function of the GA, and a parent-checking step is used to improve the efficiency of the GA. They also compared, in terms of time complexity, the effects of adding attention layers to the 3D-CNN and of converting the model into a spiking neural network [13].

In summary, many researchers have studied image restoration, but shortcomings remain, such as insufficient image detection accuracy and low image fidelity, so restored images still fall short in fidelity and detail. This study therefore proposes a structural information-guided restoration method for traditional landscape paintings and art images. It aims to overcome current limitations in preserving both structural information and artistic character, enabling more refined and accurate restoration, and to provide new perspectives and methods for image restoration and related fields.

3 Artistic image restoration model integrating multi-scale attention dilated convolution and structure-guided information

This section covers three image restoration algorithms: an algorithm that merges edge restoration with GANs, a landscape painting image restoration model embedding multi-scale attention dilated convolution, and an artistic image restoration model guided by structural information. Together they bring edge restoration, GANs, and multi-scale attention to bear on improving the restoration of Chinese landscape painting images.

3.1 Design and process analysis of image restoration algorithm combining edge restoration and GANs

The motivation behind this research is to address two critical issues in image restoration. First, traditional structural models have achieved significant success in edge-assisted image restoration, but their simplified edge-shape assumptions limit the restoration effects on atypical edges. Second, GANs show strength in image restoration, but there are shortcomings in image structure restoration. To overcome these limitations, this study proposes an image restoration algorithm that combines traditional methods and GANs. By introducing GANs for image structure estimation and utilizing edge information for assistance, the algorithm emphasizes the crucial role of edges throughout the entire restoration process. Additionally, the algorithm integrates the ideas of traditional structural information guidance and artistic image restoration methods, aiming to provide a more comprehensive and effective solution for the field of image restoration.

The edge-assisted generative image inpainting algorithm is a conditional GAN model, as described in [14]. Conditioned on the damaged image and its mask, it comprises two fully convolutional generator networks: an edge restoration network and an edge-constrained image restoration network [15,16]. The edge restoration network repairs the edge information of the image, while the image restoration network combines the output of the edge restoration network with the mask information to generate the final restored image. A discriminator is trained to distinguish generated images from real ones, while the generators are trained to minimize the difference between generated and real images. Through this edge information protection mechanism, training teaches the model to restore missing or damaged regions while preserving edge features in the generated images. The output is a restored image that preserves edge information, exploiting the structural advantages of GANs to improve the recovery of damaged images [17,18].
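The adversarial game between the generators and the discriminator can be illustrated with the standard binary cross-entropy GAN losses. This is a minimal sketch, assuming the discriminator outputs probabilities in (0, 1) and the common non-saturating generator loss; the text does not state which GAN loss variant the edge-assisted model uses, and the function names are hypothetical.

```python
import math

EPS = 1e-12  # guards against log(0) for saturated discriminator outputs


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: probabilities D assigns to real samples (target label 1).
    d_fake: probabilities D assigns to generated samples (target label 0).
    """
    real_term = sum(-math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: pushes D(fake) toward 1."""
    return sum(-math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * ln 2, about 1.386
print(generator_loss([0.5]))                      # ln 2, about 0.693
```

The discriminator loss falls as it separates real from generated samples, while the generator loss falls as its samples are judged real; alternating updates on the two objectives is what drives the generated images toward the real distribution.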

To better train the GAN, the value range of the original image

Masks serve two main purposes in this study. First, a mask is a mathematical representation of the damaged region, which makes image restoration more user friendly by allowing selective restoration according to user preferences. Second, because CNNs require complete image signals as input, the damaged regions of an image are typically filled with pixel values so that the input meets the network's requirements [19]. The CNN architecture is described in [20].

In this process, the mask identifies the damaged areas. Padding the damaged areas with the constant value 1 (white fill) separates the known and damaged regions of the damaged image, and supplying the mask as an additional input makes this distinction explicit. With the mask as input, the algorithm can clearly identify and process the damaged parts and thus complete the restoration task, as shown in the following equation:

Here,
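Filling the damaged region with white and passing the mask as an extra input can be sketched as follows. This is a minimal sketch, assuming pixel values normalized to [0, 1], a binary mask M in which 1 marks damaged pixels, and the element-wise formulation Ĩ = I ⊙ (1 − M) + M; the function names and the plain-list, single-channel image representation are hypothetical.

```python
def apply_mask(image, mask):
    """Return I * (1 - M) + M: known pixels are kept, damaged pixels become 1.0 (white)."""
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same shape")
    return [
        [p * (1 - m) + m for p, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def network_input(image, mask):
    """Stack the damaged image and the mask as two channels (channel-first)."""
    return [apply_mask(image, mask), [list(row) for row in mask]]


img = [[0.2, 0.4], [0.6, 0.8]]
msk = [[0, 1], [1, 0]]
print(apply_mask(img, msk))  # [[0.2, 1.0], [1.0, 0.8]]
```

Keeping the mask as a separate channel matters because a genuinely white pixel in the painting and a white-filled hole look identical in the damaged image alone.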

For edge information, the holistically nested edge detector (HED) is selected as the edge extractor. Unlike traditional binary edge detectors, HED directly generates a real-valued edge map, with values mapped to the

The approach in equation (4) is used to obtain the damaged edge map during training. The damaged edge map E is then used to fill the damaged regions of the edge map fed to the edge restoration network, so that the network receives a complete input [21]. Concretely, a real-valued edge map produced by the edge extractor is combined with a mask that marks the damaged regions. The edge restoration network takes this edge map with damaged regions as input and, having learned the patterns of damage from the training dataset, predicts and fills in the missing edges. Let R_edg denote the edge restoration network; its output is given by the following equation:
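Preparing the input of the edge restoration network R_edg can be sketched as below. This is a minimal sketch under stated assumptions: edges inside the hole are zeroed out, and the network receives the grayscale damaged image, the damaged edge map, and the mask as three channels, following a common EdgeConnect-style convention that the text does not confirm. The function name is hypothetical.

```python
def masked_edge_input(gray, edge_map, mask):
    """Hypothetical 3-channel input for an edge restoration network R_edg.

    gray:     grayscale damaged image, values in [0, 1]
    edge_map: real-valued edge map (e.g. HED output), values in [0, 1]
    mask:     1 = damaged pixel, 0 = known pixel
    """
    rows, cols = len(mask), len(mask[0])
    for name, ch in (("gray", gray), ("edge_map", edge_map)):
        if len(ch) != rows or any(len(r) != cols for r in ch):
            raise ValueError(f"{name} does not match the mask shape")
    # Edges inside the hole are unknown, so they are zeroed out.
    damaged_edges = [
        [e * (1 - m) for e, m in zip(e_row, m_row)]
        for e_row, m_row in zip(edge_map, mask)
    ]
    return [gray, damaged_edges, mask]


edges = [[0.9, 0.1], [0.5, 0.7]]
holes = [[0, 1], [0, 1]]
print(masked_edge_input([[0.1, 0.2], [0.3, 0.4]], edges, holes)[1])  # [[0.9, 0.0], [0.5, 0.0]]
```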

In this process, the known condition

The repaired edge map serves as prior information in this algorithm and is fed as input to the edge-constrained image restoration network, as shown in the following equation:

Here, the known condition m is introduced to the image restoration network, assisting the network in accurately delineating the damaged and known areas in the damaged image

In the final stage, the repaired edge map and the restored image are concatenated along the channel dimension to form a four-channel tensor, which is input to the discriminator to judge its authenticity [26]. In terms of sample classification, the concatenation of the original edge map

3.2 Embedding multi-scale attention dilated convolution (MADC) in landscape painting image restoration model

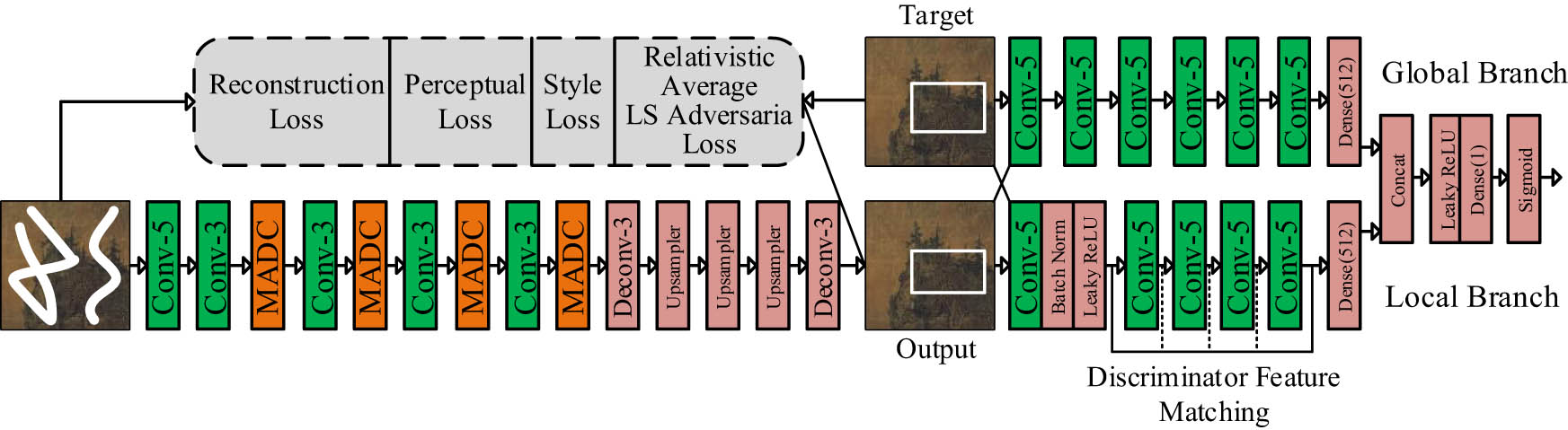

The image restoration algorithm that fuses edge restoration and GANs can accurately repair missing parts of an image by combining edge information with the strong generative capability of GANs: edge information makes the results more accurate and natural, while the adversarial network improves the robustness and generative capability of the model. This section proposes a landscape painting image restoration model that embeds multi-scale attention dilated convolution. The multi-scale attention mechanism captures important information at different scales of the image, making the restoration results more detailed and accurate, while dilated convolution enlarges the receptive field of the model, helping it better understand the semantics and structure of the image. The study therefore proposes an improved GAN model whose core feature is an embedded multi-scale attention dilated convolution structure [27,28]. The model consists of an encoder–decoder network as the generator and a discriminator with two branches, global and local. The generator produces naturally coherent restored images, and through adversarial training the discriminators push it toward more credible results. This design targets the specific requirements of artistic image restoration. Figure 1 shows the overall architecture of the model, highlighting the embedded MADC modules.

An artistic image restoration model framework embedding MADCs.

The generator of the proposed GAN uses an encoder–decoder architecture. Its feature encoding network consists of a series of 3 × 3 convolutional layers and multi-scale attention dilated convolutions [29]. The first convolutional layer uses a 5 × 5 kernel to extract latent features from the input image. The MADC module combines dilated convolution with an attention mechanism, enlarging the receptive field and effectively extracting the relevant features. As shown in Table 1, alternating 3 × 3 convolutional layers with MADC modules preserves more image detail during encoding.

Encoding network structure

| Layer number | Layer type | Number of output channels | Dilation rate | Convolution kernel size | Output size | Stride |
|---|---|---|---|---|---|---|
| 1 | Convolution layer | 64 | 2 | 5 × 5 | 256 × 256 | 1 |
| 2 | Convolution layer | 128 | 1 | 3 × 3 | 128 × 128 | 2 |
| 3 | MADC | - | - | - | - | - |
| 4 | Convolution layer | 128 | 1 | 3 × 3 | 128 × 128 | 1 |
| 5 | MADC | - | - | - | - | - |
| 6 | Convolution layer | 256 | 1 | 3 × 3 | 64 × 64 | 2 |
| 7 | MADC | - | - | - | - | - |
| 8 | Convolution layer | 512 | 1 | 3 × 3 | 32 × 32 | 2 |
| 9 | MADC | - | - | - | - | - |
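The output sizes in Table 1 follow from the standard convolution output-size formula, out = ⌊(in + 2p − d(k − 1) − 1) / s⌋ + 1. The sketch below checks the table under assumptions not stated in the text: a 256 × 256 input, "same"-style padding p = ⌊d(k − 1) / 2⌋, and MADC blocks that leave the spatial size unchanged.

```python
def conv_output_size(size, kernel, stride=1, dilation=1, padding=None):
    """Spatial output size of a convolution along one axis."""
    if padding is None:
        # Assumed "same"-style padding for odd kernels.
        padding = dilation * (kernel - 1) // 2
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# (kernel, stride, dilation) for convolution layers 1, 2, 4, 6 and 8 in Table 1;
# MADC layers 3, 5, 7 and 9 are assumed to keep the spatial size unchanged.
conv_layers = [(5, 1, 2), (3, 2, 1), (3, 1, 1), (3, 2, 1), (3, 2, 1)]

size = 256
sizes = []
for kernel, stride, dilation in conv_layers:
    size = conv_output_size(size, kernel, stride, dilation)
    sizes.append(size)
print(sizes)  # [256, 128, 128, 64, 32]
```

The computed sizes match the Output size column, which suggests that only the stride-2 layers downsample and that the dilation rate of 2 in layer 1 widens the 5 × 5 kernel's span to 9 pixels without shrinking the map.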

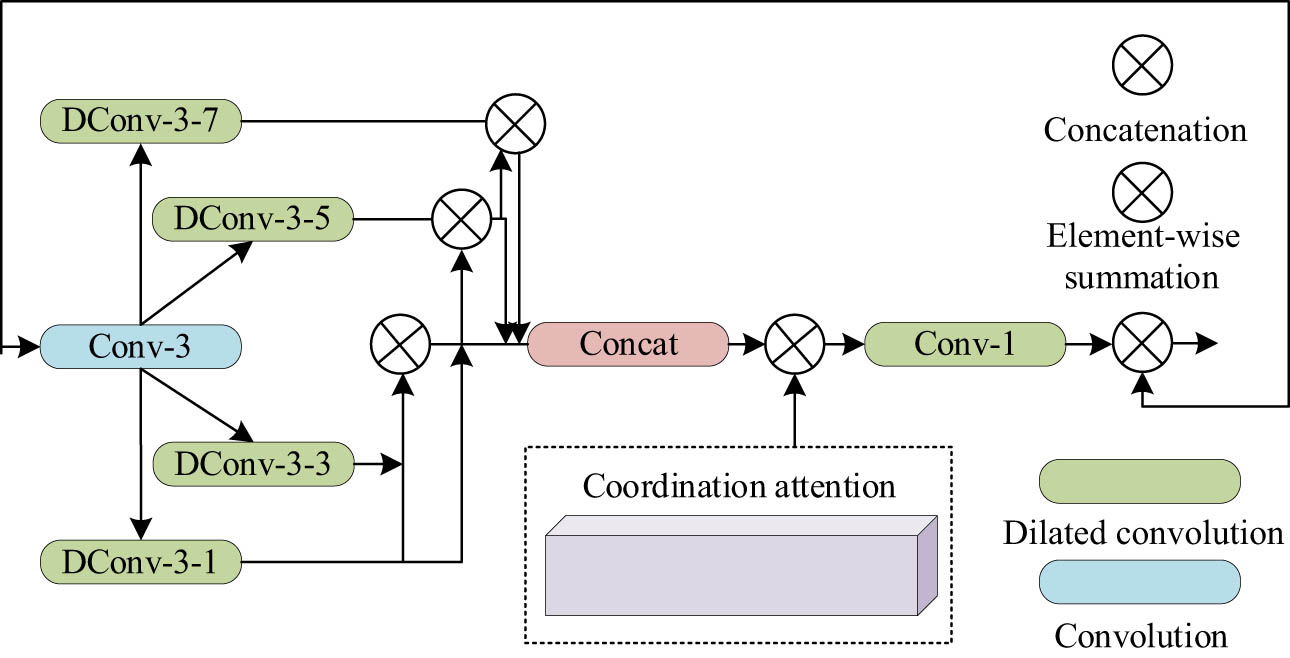

The decoding network consists of a 3 × 3 convolutional layer, three upsampling layers, and a final 3 × 3 convolutional layer. The last two upsampling layers use ReLU activation functions, and a Tanh activation function is applied after the final convolutional layer [30]. Together, these layers map the features extracted by the encoding network to the restored image. The MADC, a plug-and-play optimization module, combines hybrid dilated convolution blocks with a coordinate attention layer, as depicted in Figure 2. Its main advantages are that the dilated convolution blocks enlarge the receptive field without increasing computational complexity, and the coordinate attention layer effectively suppresses interference from irrelevant information.

MADC model.

The dilated convolution block, as the core component of the MADC module, draws inspiration from the aesthetic background of traditional landscape paintings and artistic image restoration. In traditional painting, artists achieve precise expression of depth and details through meticulous depiction and the use of perspective techniques. Similarly, in the field of artistic image restoration, special attention to microscopic details in the area of interest is required to restore the natural realism of the image while avoiding the introduction of additional noise or artifacts during the restoration process. The design of the dilated convolution block aims to expand the receptive field while maintaining computational efficiency. By employing dilated convolutions, the receptive field of the convolutional kernel is effectively expanded, inspired by traditional art techniques that create a sense of depth through the drawing of points, lines, and surfaces. In the context of the MADC module, the introduction of the dilated convolution block can be understood as mimicking the attention to detail in traditional art, allowing the network to observe and understand the image structure more comprehensively, thereby producing a more natural and artistically appealing restoration effect.
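The claim that dilation enlarges the receptive field without adding computation can be made concrete. A k × k kernel with dilation rate d spans k + (k − 1)(d − 1) pixels but still has only k² weights, and stacking stride-1 layers adds their spans. The sketch below assumes stride-1 layers; the rates [1, 2, 5] are an illustrative hybrid-dilated-convolution choice, not the rates used in the MADC module, which the text does not give.

```python
def effective_kernel(kernel, dilation):
    """Span covered by a kernel of size `kernel` with dilation rate `dilation`."""
    return kernel + (kernel - 1) * (dilation - 1)


def stacked_receptive_field(kernel, dilations):
    """Receptive field of stride-1 convolutions applied in sequence."""
    rf = 1
    for d in dilations:
        rf += effective_kernel(kernel, d) - 1
    return rf


# Each 3 x 3 layer always has 9 weights, whatever its dilation rate.
print(effective_kernel(3, 2))                 # 5
print(stacked_receptive_field(3, [1, 1, 1]))  # 7
print(stacked_receptive_field(3, [1, 2, 5]))  # 17
```

With the same three 3 × 3 layers and the same parameter count, mixing dilation rates grows the receptive field from 7 to 17 pixels, which is the effect the dilated convolution block relies on.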

The coordinate attention mechanism is particularly valuable for restoring traditional landscape paintings and other artistic images. Traditional landscape paintings express depth through specific painting techniques, and preserving the key details that convey this depth is equally important in artistic image restoration. Because coordinate attention is sensitive to positional information, it mimics the way traditional art focuses on particular details in a landscape. This enables the network to model the relationship between positions and channels more accurately and strengthens its ability to capture contextual information in the image.
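The direction-aware pooling at the heart of coordinate attention can be illustrated on a single channel. This is a simplified sketch, assuming one channel and omitting the learned 1 × 1 convolutions, normalization, and channel reduction of the full mechanism; the sigmoid is applied directly to the pooled descriptors, so it shows only how positional information along height and width is encoded, not a trained module. The function names are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def coordinate_attention(feature):
    """Simplified single-channel coordinate attention (no learned layers).

    feature: 2-D list of shape H x W.
    """
    h, w = len(feature), len(feature[0])
    # Pool along the width: one descriptor per row (encodes vertical position).
    row_desc = [sum(row) / w for row in feature]
    # Pool along the height: one descriptor per column (encodes horizontal position).
    col_desc = [sum(feature[i][j] for i in range(h)) / h for j in range(w)]
    a_h = [sigmoid(v) for v in row_desc]
    a_w = [sigmoid(v) for v in col_desc]
    # Re-weight every position by its row attention and its column attention.
    return [[feature[i][j] * a_h[i] * a_w[j] for j in range(w)] for i in range(h)]


out = coordinate_attention([[1.0, 1.0], [3.0, 3.0]])
print(out[1][0] / out[0][0])  # above 3: the stronger row also gets a larger weight
```

Unlike global average pooling, which collapses the map to one number per channel, the two one-dimensional poolings keep a separate weight for every row and every column, which is what makes the mechanism position-aware.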

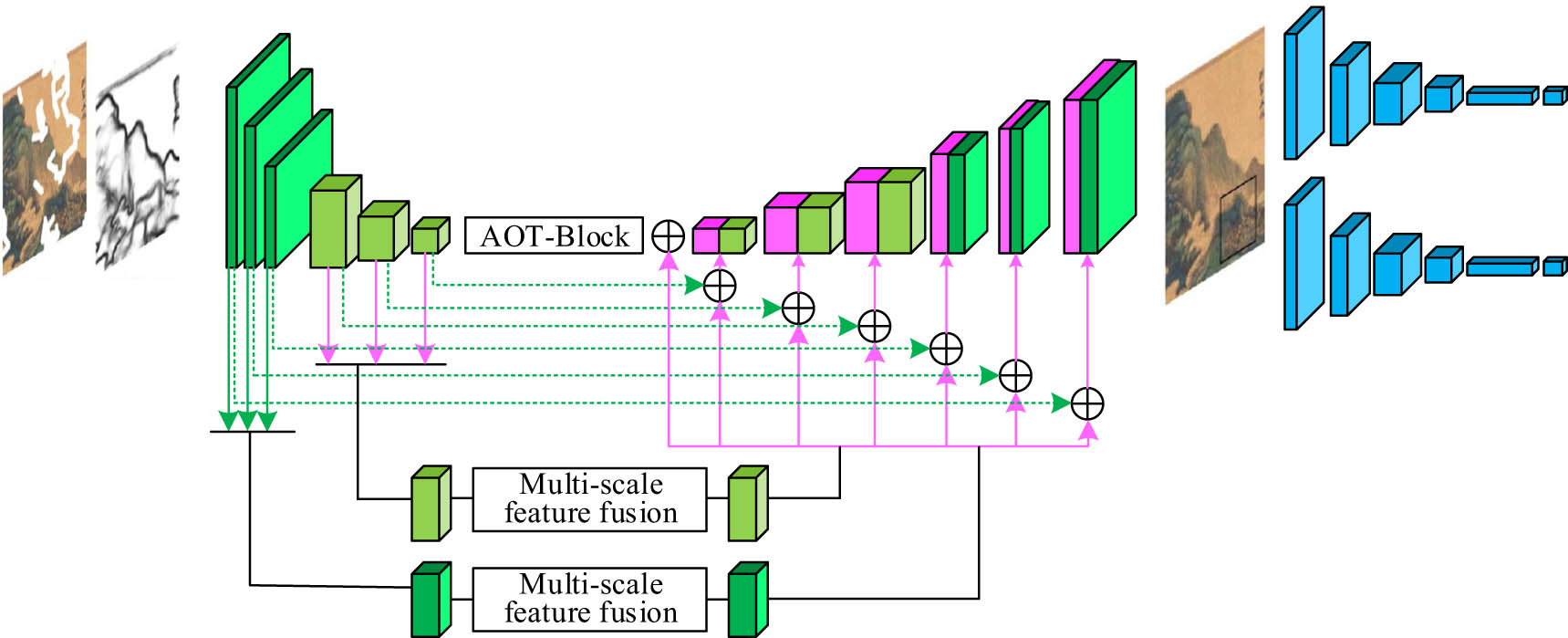

3.3 Design of the network structure and loss function for the artistic image restoration model guided by structural information

Compared with ordinary image restoration, artistic image restoration places greater emphasis on protecting the artwork itself and must account for its unique structure and style. With a purpose-designed network structure and loss function, the model can better retain the original artistic style and structural features when restoring artistic images, offering a more objective and reliable solution than manual restoration and improving restoration efficiency and accuracy. The proposed structural information-guided model consists of an encoder, an AOT module, a multi-scale feature fusion module, and a decoder. Its inputs are the image to be restored and the structural information of the artistic image generated by the CNN-based HED model. The encoder extracts texture features (F) and structural features (F′), while the multi-scale feature fusion module fills local damaged areas at multiple scales through dilated convolutional layers. The AOT block captures global features, allowing the generator to draw meaningful guidance from distant image contexts and patterns of interest. The decoder then generates naturally plausible restored images through multiple transposed convolution layers, combining global and local information to enhance the restoration, as shown in Figure 3.

Image repair structure diagram based on structure generation and texture filling.

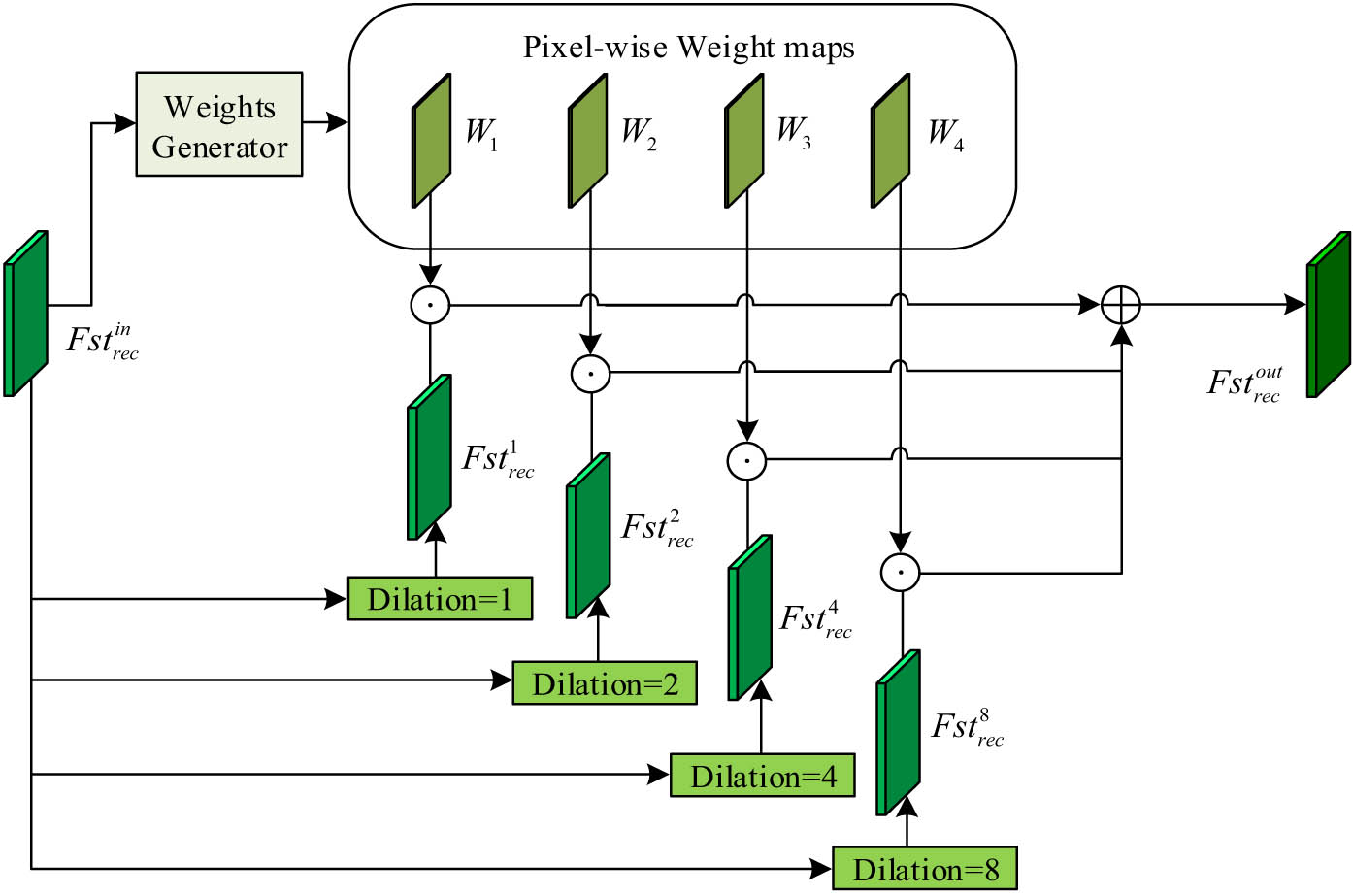

To better restore missing regions, the study introduces a multi-scale feature fusion module. This module considers missing areas in complex situations, drawing on existing regions at different scales and from different perspectives to determine which areas are useful for filling holes. Unlike the dilated convolution blocks in Section 3.2, the multi-scale feature fusion module captures features at different semantic levels by encoding two branches: texture features (F) and structural features (F′). It models long-range spatial dependencies by strengthening the correlation between features at different levels, ensuring the overall consistency of the restoration results. The module also handles more challenging scenarios, such as scale variations, well, balancing improved accuracy against reduced complexity (Figure 4).

Multi-scale feature fusion structure diagram.

To obtain multi-scale semantic information, the study introduces four sets of dilated convolution layers with different dilation rates. The purpose of this design is to capture semantic information at different scales for a more comprehensive understanding of the image structure and context. Through equation (9), these dilated convolution layers reconstruct feature maps, thus modeling long-range spatial dependencies at multiple scales.

Here, Conv_k represents a dilated convolution layer with a dilation rate of k, and

Softmax in equation (10) denotes the softmax operation along the channel dimension.

Slice in equation (11) denotes slicing along the channel dimension. Finally, the multi-scale semantic features are weighted and combined by element-wise addition to form a detailed feature map, as shown in the following equation:
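The fusion steps in equations (9) to (12), namely dilated branches, a softmax across channels, slicing into one weight map per branch, and weighted element-wise addition, can be sketched on a single-channel feature map. This is a minimal sketch under assumptions: the dilation rates (1, 2, 4, 8) are hypothetical because the text only says four different rates are used, all branches share one kernel, and the gate logits are the branch responses themselves, a stand-in for the learned layer that would produce them in a real model.

```python
import math


def dilated_conv2d(x, kernel, dilation):
    """Single-channel 2-D dilated convolution (cross-correlation), zero 'same' padding."""
    h, w, k = len(x), len(x[0]), len(kernel)
    c = k // 2  # index of the kernel centre (odd kernel assumed)
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(k):
                for b in range(k):
                    ii, jj = i + (a - c) * dilation, j + (b - c) * dilation
                    if 0 <= ii < h and 0 <= jj < w:  # out-of-range taps act as zeros
                        acc += kernel[a][b] * x[ii][jj]
            out[i][j] = acc
    return out


def softmax(values):
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    s = sum(exps)
    return [e / s for e in exps]


def multiscale_fuse(x, kernel, dilations=(1, 2, 4, 8)):
    """Fuse dilated-convolution branches with per-pixel softmax weights.

    The gate logits are the branch responses themselves, a hypothetical
    stand-in for the learned layer that would produce them in a real model.
    """
    branches = [dilated_conv2d(x, kernel, d) for d in dilations]
    h, w = len(x), len(x[0])
    fused = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # Softmax across the branch dimension, then "slice" one weight per branch.
            weights = softmax([br[i][j] for br in branches])
            # Weighted element-wise addition of the branch outputs.
            fused[i][j] = sum(wt * br[i][j] for wt, br in zip(weights, branches))
    return fused


identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
ones = [[1.0] * 5 for _ in range(5)]
print(multiscale_fuse(ones, identity)[2][2])  # 1.0, since the weights sum to one
```

Because the softmax weights at each pixel sum to one, the fused map is a convex combination of the branches: each position can lean toward a small or a large receptive field depending on which scale responds most strongly there.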

Here,

Here, L_rec, L_sty, L_cont, and L_tv denote the reconstruction, style, content, and total variation loss functions, respectively, and L_adv denotes the adversarial loss function. The weights λ_rec, λ_cont, λ_sty, λ_tv, and λ_adv are hyperparameters for the corresponding loss functions. The reconstruction loss function is defined in the following equation:

In equation (14), I_out denotes the restored image and I_gt the ground-truth image. The content loss function is computed as shown in the following equation:

Here,

Here,

Here,

Here, p_data(I_gt) denotes the distribution of real images, and p_data(I_out) denotes the distribution of restored images.
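The weighted total loss and two of its pixel-level terms can be sketched as below. This is a minimal sketch, assuming an L1 reconstruction loss, an anisotropic total variation loss averaged over neighbouring pixel pairs, and single-channel images; the content and style losses need a pretrained feature extractor and are omitted, and the weight values in the example are hypothetical, since the text does not report the λ settings.

```python
def l1_loss(output, target):
    """Mean absolute error between the restored image I_out and the ground truth I_gt."""
    diffs = [abs(o - t) for o_row, t_row in zip(output, target) for o, t in zip(o_row, t_row)]
    return sum(diffs) / len(diffs)


def tv_loss(image):
    """Anisotropic total variation: mean absolute difference between neighbours."""
    diffs = []
    for i, row in enumerate(image):
        for j, value in enumerate(row):
            if j + 1 < len(row):          # horizontal neighbour
                diffs.append(abs(row[j + 1] - value))
            if i + 1 < len(image):        # vertical neighbour
                diffs.append(abs(image[i + 1][j] - value))
    return sum(diffs) / len(diffs) if diffs else 0.0


def total_loss(terms, weights):
    """Weighted sum of loss terms, e.g. keys 'rec', 'cont', 'sty', 'tv', 'adv'."""
    return sum(weights[name] * value for name, value in terms.items())


i_out = [[0.0, 1.0], [1.0, 0.0]]
i_gt = [[0.0, 0.0], [0.0, 0.0]]
terms = {"rec": l1_loss(i_out, i_gt), "tv": tv_loss(i_out)}
print(terms)                                        # {'rec': 0.5, 'tv': 1.0}
print(total_loss(terms, {"rec": 1.0, "tv": 0.1}))  # hypothetical weights
```

The total variation term penalizes abrupt changes between neighbouring pixels, which discourages noisy artifacts in the filled region, while the reconstruction term anchors the output to the ground truth.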

4 Multi-feature fusion image restoration techniques and validation of algorithm performance for Chinese landscape painting images

This section covers two aspects. First, it evaluates the multi-feature fusion image restoration technique and validates the image quality detection model. Second, it comprehensively evaluates and compares Chinese landscape painting image restoration algorithms, examining the performance of different algorithms.

4.1 Validation of multi-feature fusion image restoration techniques and image quality detection model performance

The study evaluates model performance on the Chinese-Landscape-Painting dataset, which comprises 2,192 images of traditional landscape paintings. Because current art image datasets are limited, the dataset was augmented by splitting each landscape painting into multiple sub-images, yielding a more diverse set of training samples. This augmentation improves the model's generalization across scenarios and provides more comprehensive and specific artistic image information for training and validation.
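Splitting each painting into fixed-size sub-images can be sketched as below. The crop size of 256 × 256 pixels matches the image size reported for the final dataset, but whether the crops overlap is not stated, so the `stride` parameter, the handling of border strips, and the function name are assumptions.

```python
def tile_boxes(height, width, tile=256, stride=256):
    """(top, left, bottom, right) boxes of tile x tile crops inside an image.

    stride == tile gives non-overlapping crops; a smaller stride gives overlap.
    Border strips narrower than `tile` are discarded.
    """
    if tile <= 0 or stride <= 0:
        raise ValueError("tile and stride must be positive")
    return [
        (top, left, top + tile, left + tile)
        for top in range(0, height - tile + 1, stride)
        for left in range(0, width - tile + 1, stride)
    ]


boxes = tile_boxes(512, 768)
print(len(boxes), boxes[-1])  # 6 (256, 512, 512, 768)
```

Lowering the stride (for example to 128) yields overlapping crops and therefore more samples per painting, at the cost of correlated training images.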

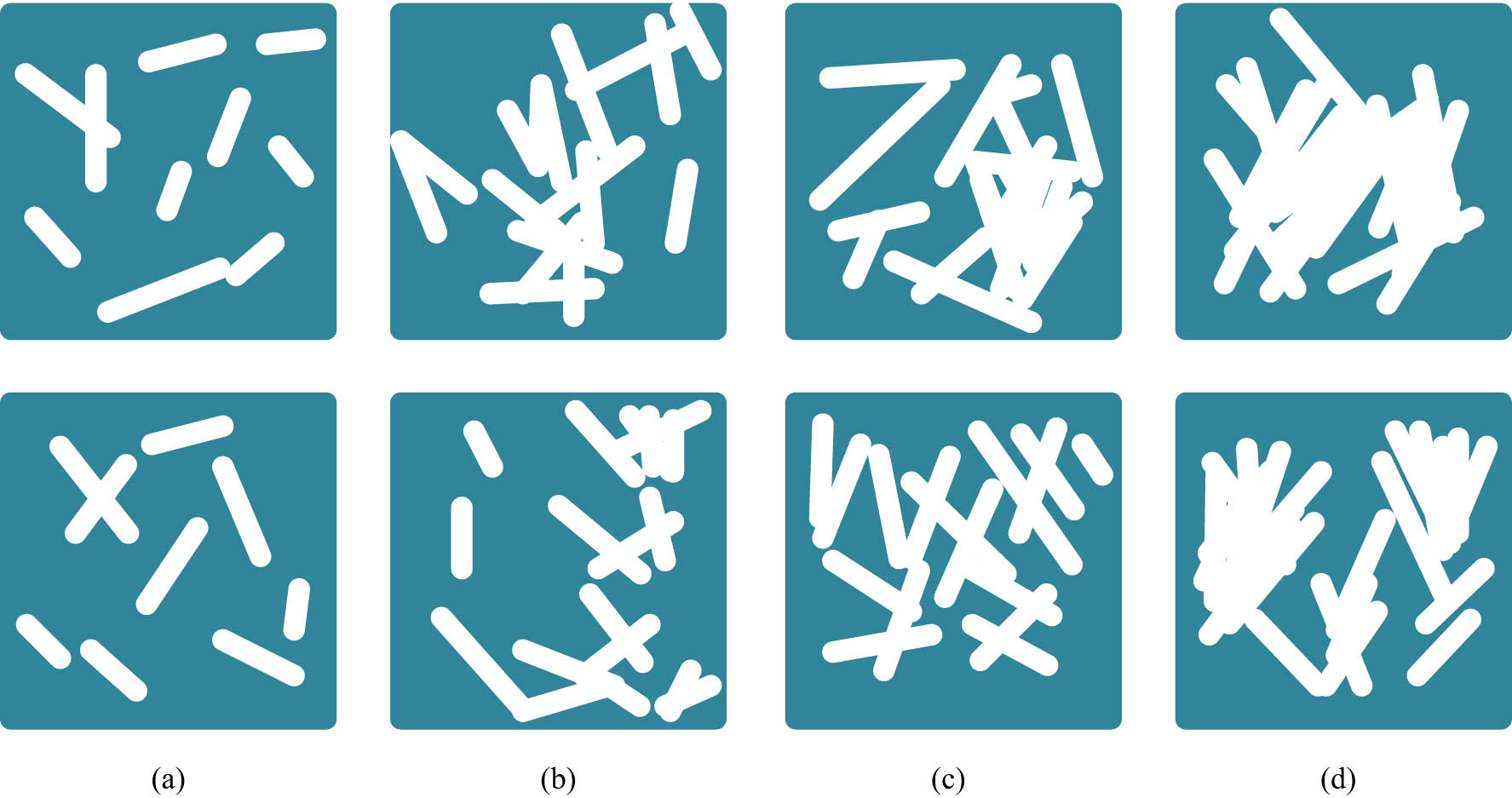

As shown in Figure 5, to expand the dataset and verify the effectiveness of the model, high-definition traditional Chinese landscape paintings were cut into multiple content-rich images. In total, 21,000 images of size 256 × 256 × 3 were collected. To increase the randomness of the damaged areas, both the position and the size of each mask in the image are random.

Masked data samples at different scales: (a) 0–20%, (b) 20–30%, (c) 30–40%, and (d) 40–50%.
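Generating masks with random position and size whose damaged area falls in one of the ratio bands above can be sketched with a random-walk brush. This is a minimal sketch, assuming irregular free-form masks drawn by a square brush; the brush size, the jump probability, and the function name are hypothetical, since the text does not describe how the masks were produced.

```python
import random


def random_irregular_mask(height, width, ratio_range, brush=2, seed=None):
    """Binary mask (1 = damaged) whose damaged-area ratio falls in ratio_range.

    A square brush performs a random walk, occasionally jumping to a new random
    start point, until the painted fraction reaches a target ratio drawn
    uniformly from ratio_range. The result may overshoot the target by at most
    one brush footprint.
    """
    low, high = ratio_range
    if not 0.0 <= low <= high < 1.0:
        raise ValueError("ratio_range must satisfy 0 <= low <= high < 1")
    rng = random.Random(seed)
    target = rng.uniform(low, high)
    mask = [[0] * width for _ in range(height)]
    total = height * width
    painted = 0
    y, x = rng.randrange(height), rng.randrange(width)
    while painted / total < target:
        # Stamp a (2 * brush + 1) x (2 * brush + 1) square at the current point.
        for dy in range(-brush, brush + 1):
            for dx in range(-brush, brush + 1):
                yy, xx = y + dy, x + dx
                if 0 <= yy < height and 0 <= xx < width and not mask[yy][xx]:
                    mask[yy][xx] = 1
                    painted += 1
        if rng.random() < 0.02:
            # Start a new stroke somewhere else in the image.
            y, x = rng.randrange(height), rng.randrange(width)
        else:
            # Continue the current stroke with a small clamped step.
            y = min(max(y + rng.randint(-brush, brush), 0), height - 1)
            x = min(max(x + rng.randint(-brush, brush), 0), width - 1)
    return mask


m = random_irregular_mask(64, 64, (0.2, 0.3), seed=0)
print(sum(map(sum, m)) / (64 * 64))  # a damaged-area ratio of roughly 0.2 to 0.3
```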

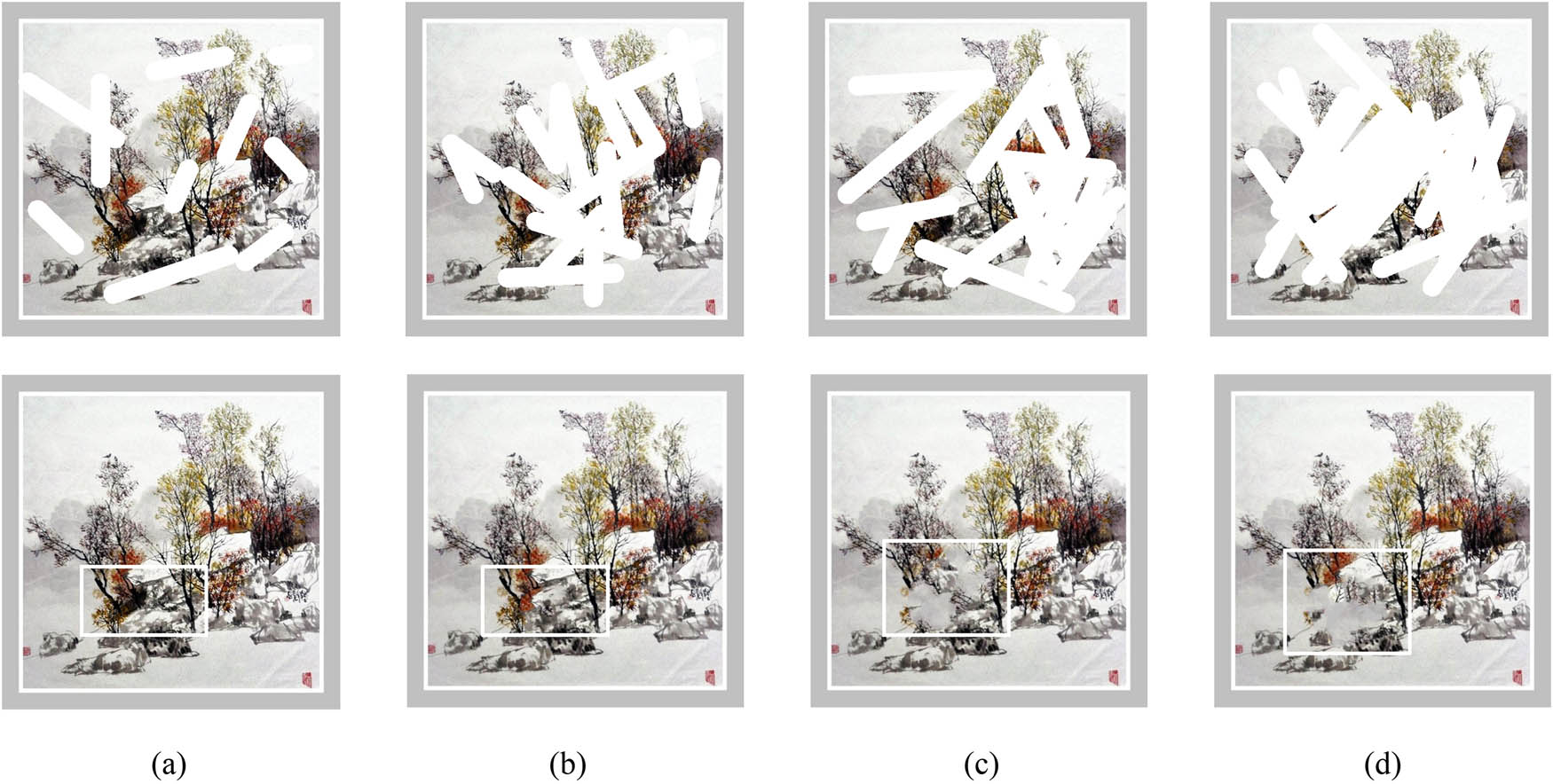

As shown in Figure 6, the study conducted image restoration under different mask proportions. Observations revealed that under mask proportions of 0–20%, the restored results were nearly indistinguishable from the real images, maintaining structural consistency and reasonable textures. However, as the damaged area increased, a noticeable loss of semantic information occurred, leading to discontinuous lines and blurred details, resulting in a decrease in consistency with real images. This trend is less pronounced in Figure 6(a) and (b) but becomes more evident in Figure 6(c) and (d).

Image repair results for irregular areas: (a) 0–20%, (b) 20–30%, (c) 30–40%, and (d) 40–50%.

As shown in Table 2, the proposed method achieves the highest PSNR at every mask ratio, the highest SSIM at mask ratios of 30–50%, and the lowest MAE at 40–50%, so its advantage over existing methods is most pronounced when filling large irregular holes. This result is attributed to the multi-feature fusion technique, which integrates local structure and texture information while efficiently accounting for the overall context of the image. The skip connections of the U-Net network and the deep semantic information in the decoder combine local and global features, resulting in higher-quality restored images.

Quantitative evaluation results of different algorithms on different scale masks

| Metric | Mask (%) | DMFB | MADC | Proposed algorithm |
|---|---|---|---|---|
| PSNR (dB) | 10–20 | 27.751 | 27.726 | 28.148 |
| | 20–30 | 27.305 | 25.284 | 28.016 |
| | 30–40 | 24.701 | 23.657 | 25.895 |
| | 40–50 | 23.148 | 20.935 | 24.583 |
| MAE | 10–20 | 0.015 | 0.012 | 0.014 |
| | 20–30 | 0.016 | 0.018 | 0.017 |
| | 30–40 | 0.026 | 0.026 | 0.027 |
| | 40–50 | 0.032 | 0.039 | 0.025 |
| SSIM | 10–20 | 0.911 | 0.926 | 0.903 |
| | 20–30 | 0.916 | 0.875 | 0.914 |
| | 30–40 | 0.829 | 0.789 | 0.852 |
| | 40–50 | 0.757 | 0.694 | 0.831 |

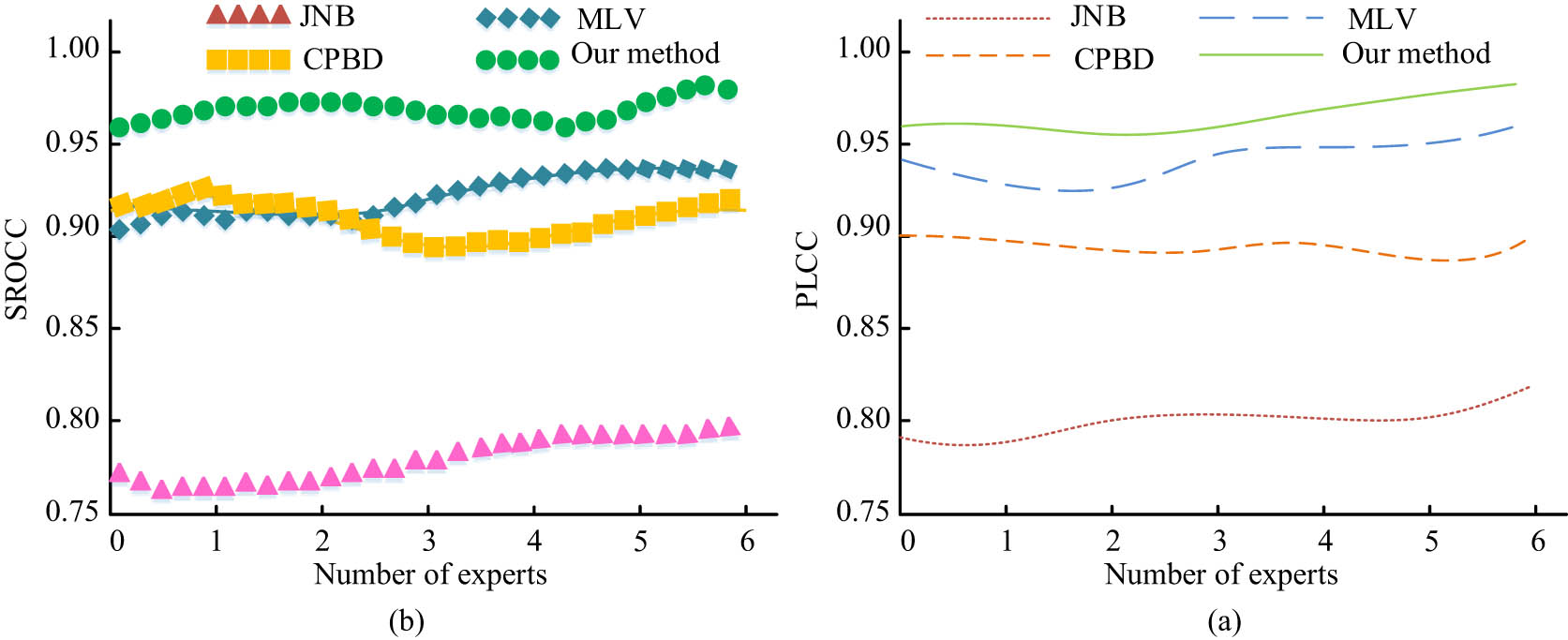

In Figure 7(a), the comparative results of SROCC for various models indicate that the proposed image detection model achieved an SROCC of 0.97. This is an improvement of 0.20 compared to the JNB model, 0.07 compared to the CPBD model, and 0.06 compared to the MLV model. Figure 7(b) displays the PLCC comparison results, with the proposed image detection model having a PLCC of 0.97. This is higher by 0.17 compared to the JNB model, 0.08 compared to the CPBD model, and 0.02 compared to the MLV model, suggesting superior detection performance of the model.

PLCC and SROCC comparison results of different algorithms: (a) PLCC of each method and (b) SROCC of each method.
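SROCC and PLCC measure how well a quality model's predicted scores agree with reference scores, typically subjective ratings: PLCC measures linear agreement, and SROCC measures rank (monotonic) agreement. A minimal sketch of both follows, assuming equal-length score lists with non-zero variance; in image quality assessment, PLCC is often computed after a nonlinear logistic mapping of the predictions, which is omitted here.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient (PLCC)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two items")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values receive the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank-order correlation coefficient (SROCC): Pearson on the ranks."""
    return pearson(ranks(x), ranks(y))


subjective = [1, 2, 3, 4, 5]
predicted = [1, 4, 9, 16, 25]  # monotonic but not linear in the subjective scores
print(spearman(subjective, predicted), pearson(subjective, predicted))
```

In the example, SROCC is 1 (up to rounding) because the predictions preserve the ordering perfectly, while PLCC is slightly below 1 because the relationship is not linear.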

4.2 Comprehensive evaluation and comparative analysis of performance for Chinese landscape painting image restoration algorithms

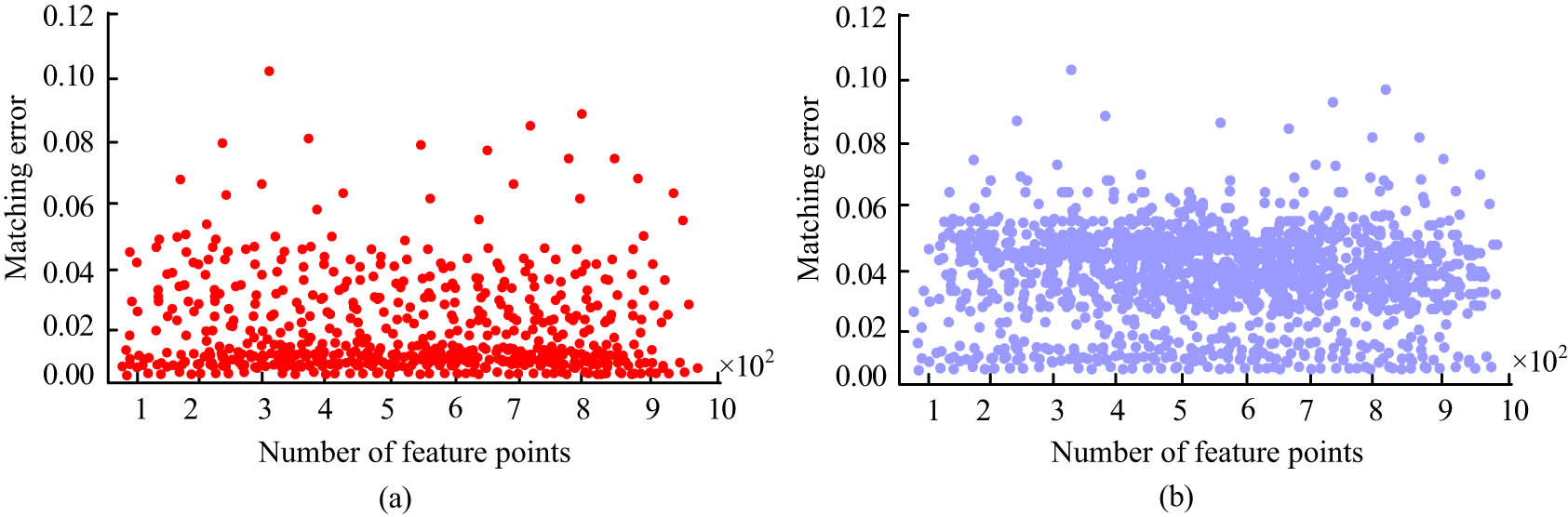

Figure 8 shows the error distribution in the edge restoration task for the proposed method and the Canny algorithm. As shown in Figure 8(a), the errors of the proposed method lie mainly within 0.000–0.015, whereas Figure 8(b) shows that the errors of the Canny algorithm are concentrated between 0.020 and 0.051. The proposed method therefore achieves clearly lower errors in edge restoration than the traditional Canny algorithm.

Scatter plot of matching errors between different methods: (a) our method and (b) Canny algorithm.

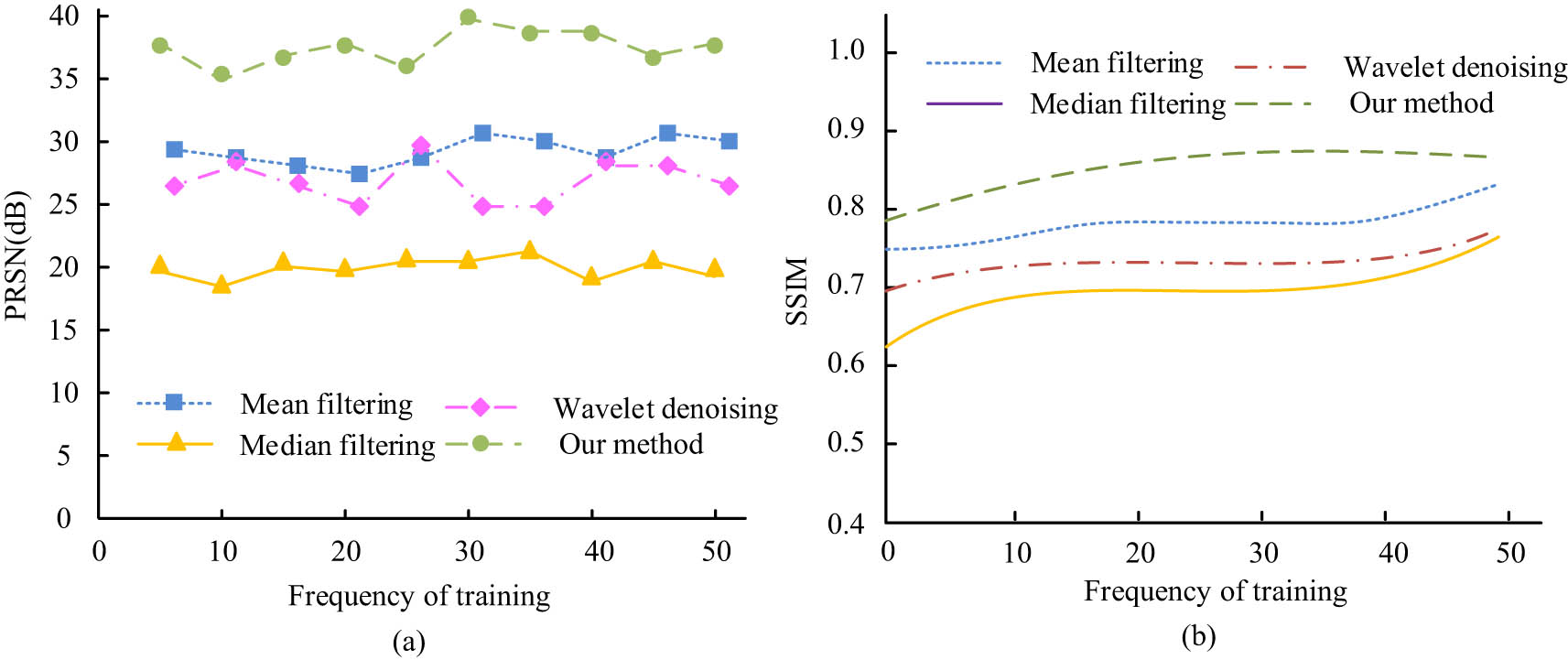

Figure 9(a) presents the PSNR values of the compared algorithms. The proposed algorithm achieves the highest PSNR, 36.7 dB, which is 6.3 dB higher than the mean-filter-based algorithm, 9.1 dB higher than the wavelet-denoising-based algorithm, and 15.8 dB higher than the median-filter-based algorithm. Figure 9(b) displays the SSIM values of the different algorithms. The proposed algorithm has the highest SSIM, 0.85, exceeding the mean-filter-based, wavelet-denoising-based, and median-filter-based algorithms by 0.06, 0.12, and 0.11, respectively.

Comparison of PSNR and SSIM values of image processing algorithms: (a) PSNR of each algorithm and (b) SSIM of each algorithm.

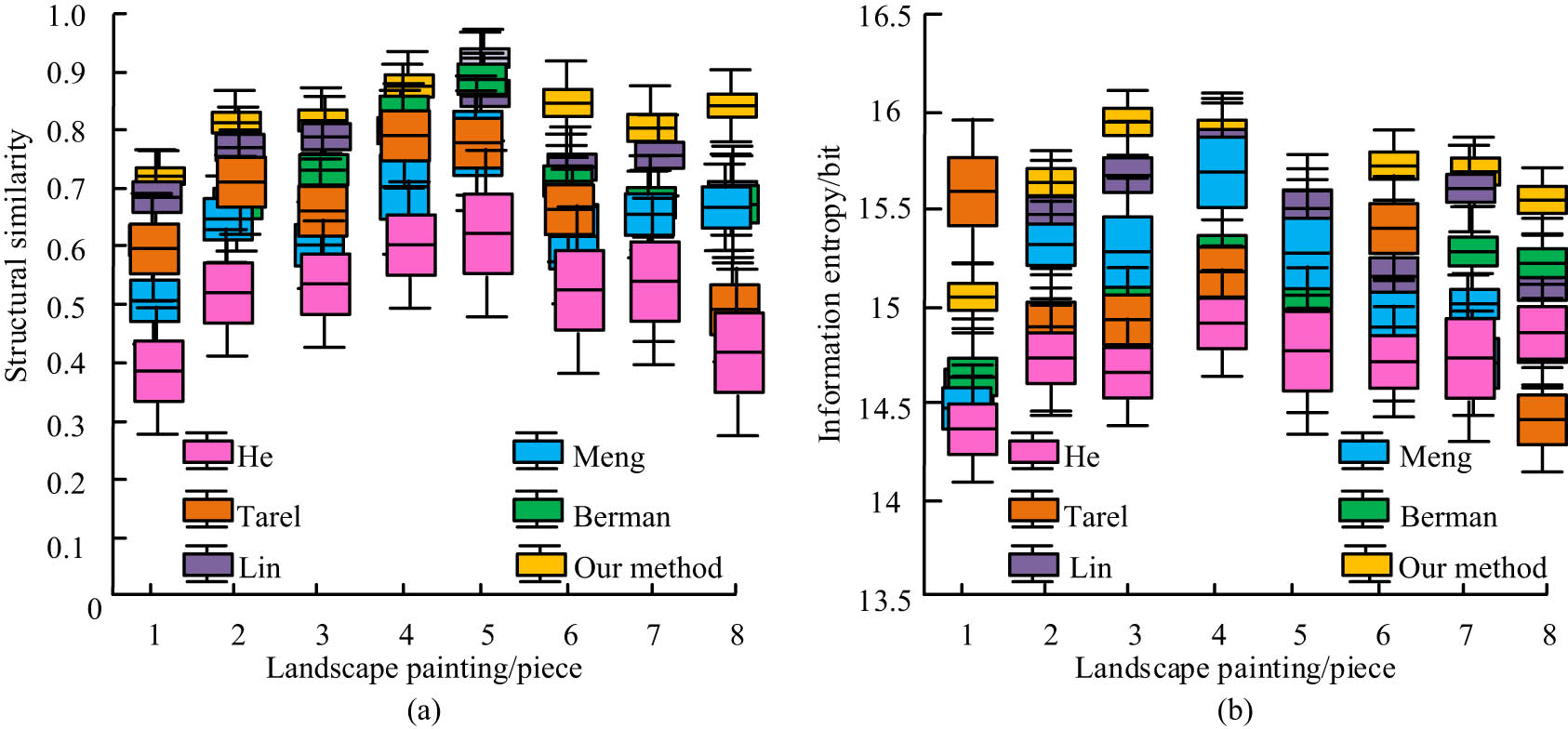

Figure 10(a) shows the structural similarity results of the algorithms in the landscape painting restoration task. The proposed model achieves an average structural similarity about 9.3% higher than those of the other algorithms. Its values also fluctuate relatively little, indicating that it preserves more detailed structural information and restores images stably and reliably. Figure 10(b) shows the information entropy results, where the proposed model again performs best, averaging about 3% higher than the other algorithms. This suggests that its restored landscape paintings retain richer information content.
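For readers who want to reproduce these two indicators, the sketch below gives simplified versions in pure Python. The standard SSIM of Wang et al. averages a local statistic over sliding Gaussian windows. `global_ssim` here computes the same formula over a single window covering the whole image, which is a simplification, with the usual constants C1 = (0.01L)^2 and C2 = (0.03L)^2 for L = 255. `shannon_entropy` is the entropy, in bits, of the grey-level histogram. For an 8-bit single-channel image this value cannot exceed log2(256) = 8 bits. The exact entropy definition behind the values above 15 bits reported for this study is not given in this section, so treat the function as one common definition only.

```python
import math
from collections import Counter


def global_ssim(x, y, max_value=255.0):
    """SSIM over one global window (simplification of the windowed SSIM)."""
    a = [v for row in x for v in row]
    b = [v for row in y for v in row]
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((v - mu_a) ** 2 for v in a) / (n - 1)
    var_b = sum((v - mu_b) ** 2 for v in b) / (n - 1)
    cov = sum((p - mu_a) * (q - mu_b) for p, q in zip(a, b)) / (n - 1)
    c1, c2 = (0.01 * max_value) ** 2, (0.03 * max_value) ** 2
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))


def shannon_entropy(image):
    """Shannon entropy (bits) of the grey-level histogram of a 2-D image."""
    counts = Counter(v for row in image for v in row)
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total) for c in counts.values())


# Hypothetical 4x4 "original" and a slightly perturbed "restoration".
original = [[10, 20, 30, 40], [50, 60, 70, 80],
            [90, 100, 110, 120], [130, 140, 150, 160]]
restored = [[12, 20, 29, 40], [50, 58, 70, 83],
            [90, 101, 110, 118], [131, 140, 150, 160]]
print(f"SSIM (global window): {global_ssim(original, restored):.4f}")
print(f"Entropy of restored image: {shannon_entropy(restored):.3f} bits")
```

For production use, a windowed SSIM implementation such as the one in scikit-image is preferable to this single-window form.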

Comparison of restoration results of different algorithms: (a) structural similarity and (b) information entropy.

Table 3 shows that the proposed image restoration algorithm outperforms the other algorithms on all four indicators (signal-to-noise ratio, average gradient, structural similarity, and information entropy) in every image group. Across the four groups of paintings, the signal-to-noise ratio of the proposed algorithm exceeds 20 dB, indicating that little noise remains relative to the signal and that the restored images are clear and of good quality. Its structural similarity is at least 0.91 in every group, showing that the restored images are structurally close to the originals and that the algorithm retains more of their structural features and detail. Its information entropy exceeds 15 bits in every group, indicating that the restored images carry more information than those produced by the competing methods.
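The two indicators in Table 3 that are not covered elsewhere in this section are the signal-to-noise ratio and the average gradient. The sketch below uses common definitions for both, which are assumptions because the section does not define them. SNR is taken as the energy of the reference image over the energy of the residual, in dB. Average gradient is the mean over pixels of sqrt((d_row^2 + d_col^2) / 2), computed from forward differences. A higher average gradient indicates sharper detail and texture.

```python
import math


def snr_db(reference, restored):
    """SNR in dB: reference energy over residual energy (one common definition)."""
    signal = sum(r * r for row in reference for r in row)
    noise = sum((r - t) ** 2
                for row_r, row_t in zip(reference, restored)
                for r, t in zip(row_r, row_t))
    return math.inf if noise == 0 else 10 * math.log10(signal / noise)


def average_gradient(image):
    """Mean of sqrt((d_row^2 + d_col^2) / 2) over forward differences."""
    h, w = len(image), len(image[0])
    total = 0.0
    for i in range(h - 1):
        for j in range(w - 1):
            d_row = image[i + 1][j] - image[i][j]  # vertical difference
            d_col = image[i][j + 1] - image[i][j]  # horizontal difference
            total += math.sqrt((d_row * d_row + d_col * d_col) / 2)
    return total / ((h - 1) * (w - 1))


# Hypothetical 3x3 horizontal ramp: intensity rises by 10 per column.
ramp = [[10 * j for j in range(3)] for _ in range(3)]
print(f"Average gradient of ramp: {average_gradient(ramp):.4f}")
print(f"SNR: {snr_db([[3, 4]], [[3, 3]]):.2f} dB")
```
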

Comparison of image restoration effects of different algorithms on four groups of landscape paintings

| Image group | Objective indicator | He | Cai | Ren | Li | Our method |
|---|---|---|---|---|---|---|
| Set 1 | Signal-to-noise ratio (dB) | 15.61 | 15.22 | 16.41 | 18.12 | 20.22 |
| | Average gradient | 9.42 | 8.41 | 9.73 | 9.61 | 10.61 |
| | Structural similarity | 0.76 | 0.84 | 0.86 | 0.88 | 0.92 |
| | Information entropy (bit) | 15.02 | 15.14 | 15.53 | 15.08 | 16.38 |
| Set 2 | Signal-to-noise ratio (dB) | 15.58 | 18.23 | 17.97 | 18.25 | 21.65 |
| | Average gradient | 8.82 | 9.41 | 10.42 | 9.87 | 10.87 |
| | Structural similarity | 0.78 | 0.79 | 0.82 | 0.85 | 0.91 |
| | Information entropy (bit) | 15.14 | 16.23 | 15.23 | 16.21 | 17.56 |
| Set 3 | Signal-to-noise ratio (dB) | 18.01 | 20.11 | 19.09 | 20.42 | 23.56 |
| | Average gradient | 8.82 | 9.52 | 11.02 | 10.61 | 11.54 |
| | Structural similarity | 0.83 | 0.82 | 0.88 | 0.86 | 0.92 |
| | Information entropy (bit) | 13.25 | 14.26 | 14.52 | 15.29 | 16.23 |
| Set 4 | Signal-to-noise ratio (dB) | 17.23 | 18.25 | 20.01 | 20.78 | 22.98 |
| | Average gradient | 8.08 | 7.51 | 8.99 | 8.25 | 9.62 |
| | Structural similarity | 0.71 | 0.77 | 0.84 | 0.81 | 0.91 |
| | Information entropy (bit) | 14.92 | 13.58 | 14.18 | 13.94 | 15.35 |
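To make the comparison in Table 3 easy to re-check, the values can be transcribed and the margin of the proposed method over the best of the four baselines computed for each group and indicator. The sketch below does this in plain Python. It assumes, as the text does, that higher is better for all four indicators. The names `TABLE3`, `METRICS`, and `margins_over_best_baseline` are illustrative.

```python
METHODS = ["He", "Cai", "Ren", "Li", "Our method"]
METRICS = ["SNR (dB)", "Average gradient", "SSIM", "Entropy (bit)"]

# Rows transcribed from Table 3; each inner list follows the METHODS order.
TABLE3 = {
    "Set 1": [[15.61, 15.22, 16.41, 18.12, 20.22], [9.42, 8.41, 9.73, 9.61, 10.61],
              [0.76, 0.84, 0.86, 0.88, 0.92], [15.02, 15.14, 15.53, 15.08, 16.38]],
    "Set 2": [[15.58, 18.23, 17.97, 18.25, 21.65], [8.82, 9.41, 10.42, 9.87, 10.87],
              [0.78, 0.79, 0.82, 0.85, 0.91], [15.14, 16.23, 15.23, 16.21, 17.56]],
    "Set 3": [[18.01, 20.11, 19.09, 20.42, 23.56], [8.82, 9.52, 11.02, 10.61, 11.54],
              [0.83, 0.82, 0.88, 0.86, 0.92], [13.25, 14.26, 14.52, 15.29, 16.23]],
    "Set 4": [[17.23, 18.25, 20.01, 20.78, 22.98], [8.08, 7.51, 8.99, 8.25, 9.62],
              [0.71, 0.77, 0.84, 0.81, 0.91], [14.92, 13.58, 14.18, 13.94, 15.35]],
}


def margins_over_best_baseline(table):
    """Our method's value minus the best of the four baselines, per group and metric."""
    result = {}
    for group, rows in table.items():
        for metric, values in zip(METRICS, rows):
            assert len(values) == len(METHODS), "one value per method expected"
            *baselines, ours = values
            result[(group, metric)] = round(ours - max(baselines), 2)
    return result


for (group, metric), margin in margins_over_best_baseline(TABLE3).items():
    print(f"{group:6s} {metric:17s} +{margin:.2f}")
```

Every margin is positive, which is consistent with the claim that the proposed method leads on all indicators in all four groups. The smallest lead is in structural similarity (0.04 in Sets 1 and 3).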

5 Conclusion

Traditional landscape paintings and artistic images play a crucial role in history and culture and carry profound artistic significance. To improve the restoration of Chinese landscape painting images, this study designed an image restoration algorithm that integrates edge restoration with a GAN. In addition, a restoration model embedding multi-scale attention dilated convolutions (MADCs) was employed to strengthen the modeling of details and textures in landscape paintings. The performance evaluations indicate that the image detection model outperformed the JNB, CPBD, and MLV models. It improved SROCC by 0.20, 0.07, and 0.06 and PLCC by 0.17, 0.08, and 0.02, respectively. In the edge restoration task, the errors of the proposed method fall mainly within 0.000–0.015, compared with 0.020–0.051 for the Canny algorithm. Among the image processing algorithms, the proposed method outperformed mean filtering, wavelet denoising, and median filtering by 6.3, 9.1, and 15.8 dB in PSNR and by 0.06, 0.12, and 0.11 in SSIM, respectively. In the image restoration task, the proposed model achieved average improvements of approximately 9.3% in structural similarity and 3% in information entropy. Overall, the proposed method outperforms the compared algorithms on all reported metrics, supporting its effectiveness for image processing and restoration. Future research could use more comprehensive datasets, refine the experimental design, and simplify and optimize the algorithm to further improve its practicality and generality.

- Funding information: The author states no funding involved.

- Author contributions: Zhimin Yao designed, wrote, and revised this paper.

- Conflict of interest: The author declares that there is no conflict of interest.

- Data availability statement: Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

References

[1] Dührkop K, Fleischauer M, Ludwig M, Alexander A, Alexey V. SIRIUS 4: A rapid tool for turning tandem mass spectra into metabolite structure information. Nat Methods. March 2019;16(4):299–302. 10.1038/s41592-019-0344-8.

[2] Ma Y, Gao H, Wang H, Cao X. Engineering topography: effects on nerve cell behaviors and applications in peripheral nerve repair. J Mater Chem B. July 2021;9(32):6310–25. 10.1039/D1TB00782C.

[3] Greener JG, Kandathil SM, Moffat L, Jones D. A guide to machine learning for biologists. Nat Rev Mol Cell Biol. September 2022;23(1):40–55. 10.1038/s41580-021-00407-0.

[4] Baek M, DiMaio F, Anishchenko I, Justas D. Accurate prediction of protein structures and interactions using a three-track neural network. Science. August 2021;373(6557):871–6. 10.1126/science.abj8754.

[5] Zamani A, Sharifi A, Felegari S, Tariq A, Zhao N. Agro climatic zoning of saffron culture in Miyaneh City by using WLC method and remote sensing data. Agriculture. January 2022;12(1):118–32. 10.3390/agriculture12010118.

[6] Zhang K, Li Y, Zuo W, Zhang L, Van Gool L. Plug-and-play image restoration with deep denoiser prior. IEEE Trans Pattern Anal Mach Intell. June 2021;44(10):6360–76. 10.1109/TPAMI.2021.3088914.

[7] Deng X, Dragotti PL. Deep convolutional neural network for multi-modal image restoration and fusion. IEEE Trans Pattern Anal Mach Intell. April 2020;43(10). 10.1109/TPAMI.2020.2984244.

[8] Jin Z, Iqbal MZ, Bobkov D, Zou W, Zhi X. A flexible deep CNN framework for image restoration. IEEE Trans Multimed. August 2019;22(4):1055–68. 10.1109/TMM.2019.2938340.

[9] Farmonov N, Amankulova K, Szatmári J, Sharifi A, Dariush A, Nejad S, et al. Crop type classification by DESIS hyperspectral imagery and machine learning algorithms. IEEE J-STARS. January 2023;16(30):1576–88. 10.1109/JSTARS.2023.3239756.

[10] Mei Y, Fan Y, Zhang Y, Yu J, Zhou Y, Liu D, et al. Pyramid attention network for image restoration. Int J Comput Vis. August 2023;131(12):3207–25. 10.1007/s11263-023-01843-5.

[11] Smita TR, Yadav RN, Gupta L. State‐of‐art analysis of image denoising methods using convolutional neural networks. IET Image Process. October 2019;13(13):2367–8. 10.1049/iet-ipr.2019.0157.

[12] Wang K, Hu Y, Chen J, Wu X, Zhao X, Li Y. Underwater image restoration based on a parallel convolutional neural network. Remote Sens. 2019;11(13):1591–611. 10.3390/rs11131591.

[13] Esmaeili M, Abbasi-Moghadam D, Sharifi A, Tariq A, Li Q. Hyperspectral image band selection based on CNN embedded GA (CNNeGA). IEEE J-STARS. February 2023;16(6):1927–50. 10.1109/JSTARS.2023.3242310.

[14] Alzubaidi L, Zhang J, Humaidi AJ, Dujaili A, Duan Y, Shamma O, et al. Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J Big Data. 2021;8(53):1–74. 10.1186/s40537-021-00444-8.

[15] Klausen MS, Jespersen MC, Nielsen H, Jensen K. NetSurfP‐2.0: Improved prediction of protein structural features by integrated deep learning. Proteins Struct Funct Bioinf. February 2019;87(6):520–7. 10.1002/prot.25674.

[16] Rasti B, Chang Y, Dalsasso E, Denis L, Ghamisi P. Image restoration for remote sensing: Overview and toolbox. IEEE Geosci Remote Sens Mag. July 2021;10(2):201–30. 10.1109/MGRS.2021.3121761.

[17] Dornelas RS, Lima DA. Correlation filters in machine learning algorithms to select demographic and individual features for autism spectrum disorder diagnosis. J Data Sci Intell Syst. June 2023;3(1):7–9. 10.47852/bonviewJDSIS32021027.

[18] Yu K, Wang X, Dong C, Tang X, Loy CC. Path-restore: Learning network path selection for image restoration. IEEE Trans Pattern Anal Mach Intell. July 2021;44(10):7078–92. 10.1109/TPAMI.2021.3096255.

[19] Pan J, Dong J, Liu Y, Zhang J, Ren J, Ta J. Physics-based generative adversarial models for image restoration and beyond. IEEE Trans Pattern Anal Mach Intell. 2020;43(7):2449–62. 10.1109/TPAMI.2020.2969348.

[20] Schafer B, Keuper M, Stuckenschmidt H. Arrow R-CNN for handwritten diagram recognition. Int J Doc Anal Recognit. 2021;24(1):3–17. 10.1007/s10032-020-00361-1.

[21] Guo Y, Mustafaoglu Z, Koundal D. Spam detection using bidirectional transformers and machine learning classifier algorithms. J Comput Cognit Eng. April 2022;2(1):5–9. 10.47852/bonviewJCCE2202192.

[22] An S, Huang X, Wang L, Zheng Z. Semi-Supervised image dehazing network. Vis Computer. November 2022;38(6):2041–23. 10.1049/ipr2.12679.

[23] Rafid Hashim A, Daway HG, Kareem HH. Single image dehazing by dark channel prior and luminance adjustment. Imaging Sci J. March 2020;68(5-8):278–87. 10.1080/13682199.2022.2141863.

[24] Long L, Fu Y, Xiao L. Tropical coastal climate change based on image defogging algorithm and swimming rehabilitation training. Arab J Geosci. July 2021;14(17):1806–21. 10.1007/s12517-021-08124-w.

[25] Xu Y, Xu F, Liu Q, Chen J. Improved first-order motion model of image animation with enhanced dense motion and repair ability. Appl Sci. March 2023;13(7):4137–51. 10.3390/app13074137.

[26] Pietroni E, Daniele F. Virtual restoration and virtual reconstruction in cultural heritage: Terminology, methodologies, visual representation techniques and cognitive models. Information. April 2021;12(4):167–96. 10.3390/info12040167.

[27] Li J, Wang H, Deng Z, Pan M, Chen H. Restoration of non-structural damaged murals in Shenzhen Bao’an based on a generator–discriminator network. Herit Sci. June 2021;9(1):1–14. 10.1186/s40494-020-00478-w.

[28] Lee B, Seo M, Kim D, Han SK. Dissecting landscape art history with information theory. Proc Natl Acad Sci USA. June 2020;117(43):26580–90. 10.1073/pnas.2011927117.

[29] Li M, Yun W, Xu YQ. Computing for Chinese cultural heritage. Vis Inform. March 2022;6(1):1–13. 10.1016/j.visinf.2021.12.006.

[30] Ciortan IM, George S, Hardeberg JY. Colour-balanced edge-guided digital inpainting: Applications on artworks. Sensors. March 2021;21(6):2091–111. 10.3390/s21062091.

© 2024 the author(s), published by De Gruyter

This work is licensed under the Creative Commons Attribution 4.0 International License.



- Systematic literature review on intrusion detection systems: Research trends, algorithms, methods, datasets, and limitations