Abstract

The aim of this paper is to present a new stable method for smoothing and differentiating noisy data defined on a bounded domain

1 Introduction

It is very common in many scientific applications, such as biological imaging, image processing and computer vision, inverse source and identification problems, to have to differentiate functions specified by data. In many cases, the data is experimental and so substantial noise or imprecision may be present. Since differentiation is an ill-posed problem [1], naive numerical differentiation techniques will amplify the noise resulting in inaccurate and mostly useless results. A concrete example is given by the function

where

Evidently, the maximum error in the data is

The last example showcases the instability associated with the differentiation process: small errors in the data might induce large errors in the computed derivative. To overcome the instability issue of the differentiation operator, several regularization techniques have been developed. In [2], a perturbation based method is used for single variable functions. In [3], a mollification method is given. Smoothing splines is adopted in [4], and results for single variable functions are given. Other methods such as the Lanczos’ generalized derivative [5], and the differentiation by integration using Jacobi polynomials [6], are known for single variable functions only. For a summary of other popular methods, see [7]. Despite its importance in real life applications, only few methods dealing with higher dimensional cases have reported [8,9, 10,11]. A side from limited applicability due to dimension restriction, the aforementioned approaches are relatively slow to suit real life applications, particularly with big data.

In this paper, the smoothing property of the classical diffusion equation is utilized to reconstruct the gradient

where

subject to the initial and boundary conditions:

where

Under reasonable assumptions on true data

The rest of this paper is composed of five sections. Section 2 recalls some preliminary and auxiliary results. In Sections 3, we state the precise formulation of the proposed method and give the motivation behind it, and then we prove the main convergence results in Section 4. We provide several numerical experiments and applications in Section 5. More detailed discussion on the general case is provided in the Appendix.

2 Preliminaries

First, we recall some definitions and results from the theory of Sobolev spaces. Readers may refer to [12,13] for more detailed discussions. Throughout the rest of the paper, we assume that

We use the notation

The induced norm will be denoted by

Let

where the partial derivatives are taken in the distributional sense. It is a Hilbert space under the inner product

and a Banach space under the the corresponding induced norm. In particular, the norm on

The Hilbert space

where the boundary value is understood in the trace sense.

By

From the Sturm-Liouville theory [14], the eigenvalues of the eigenvalue problem

form a nondecreasing sequence of positive numbers

Associated with the eigenvalue problem (3), the Hilbert space

The parameter

3 Motivation of the proposed algorithm

To motivate the proposed regularization approach, let

A standard result [14] is that

and so, due to the exponential term, the Fourier coefficients of

As a consequence of the aforementioned discussion, it would be reasonable to take

Step 1. Compute the smoothed version of the noisy data by

Step 2. Take the gradient

Few remarks are in order. First, from expansion (5), we see that the parameter

4 Stability and consistency analysis

Our goal next is to examine

that is,

We start off with the following stability result.

Lemma 4.1

We have

Proof

Set

By using the fact that

which concludes the proof.□

Throughout the sequel, we shall assume that

Lemma 4.2

If

where

Proof

Set

The last equality together with the fact that

Now, it is an easy matter to show that

for all

which ends the proof.□

Combining Lemmas 4.1, 4.2, and the triangle inequality, we obtain the main convergence result:

Theorem 4.1

Suppose that

Remark 4.1

If we choose the parameter

as

We obtain the fastest convergence when

provided that

5 Numerical experiments

Since data acquisition results in only discrete data in many scientific applications, we assume

where

where

Regarding the choice of the parameter

For such domain

Therefore, the Fourier discrete sine transform

Using the trapezoidal rule over the regular grid

where

where the symbol (

In the following examples, the derivatives of the smoothed data are computed via the central difference formula:

and similarly for the true and noisy data.

Now, we examine examples in several space dimensions. We point out that the computations are extremely quick owing to the fast Fourier transform (FFT) routine, which is available in many computer algebra systems.

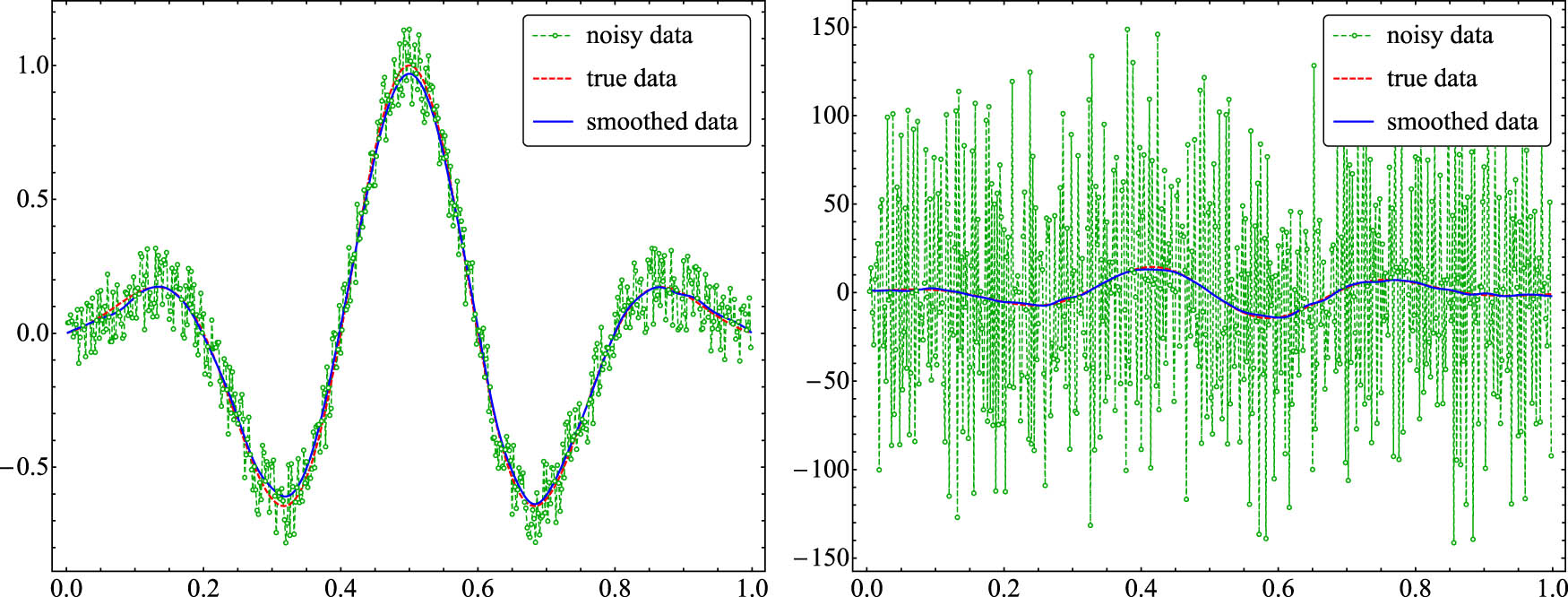

Example 5.1

The true data are given by the function

For noise level

Data (left) and the corresponding derivative (right) for Example 5.1.

|

|

50 | 500 | 5,000 |

|---|---|---|---|

| 0.2 | 0.190 (0.809) | 0.085 (9.084) | 0.043 (89.13) |

| 0.02 | 0.045 (0.080) | 0.030 (0.908) | 0.020 (8.913) |

| 0.002 | 0.007 (0.008) | 0.012 (0.090) | 0.008 (0.891) |

Remark 5.1

The results in Example 5.1 suggest a convergence rate of

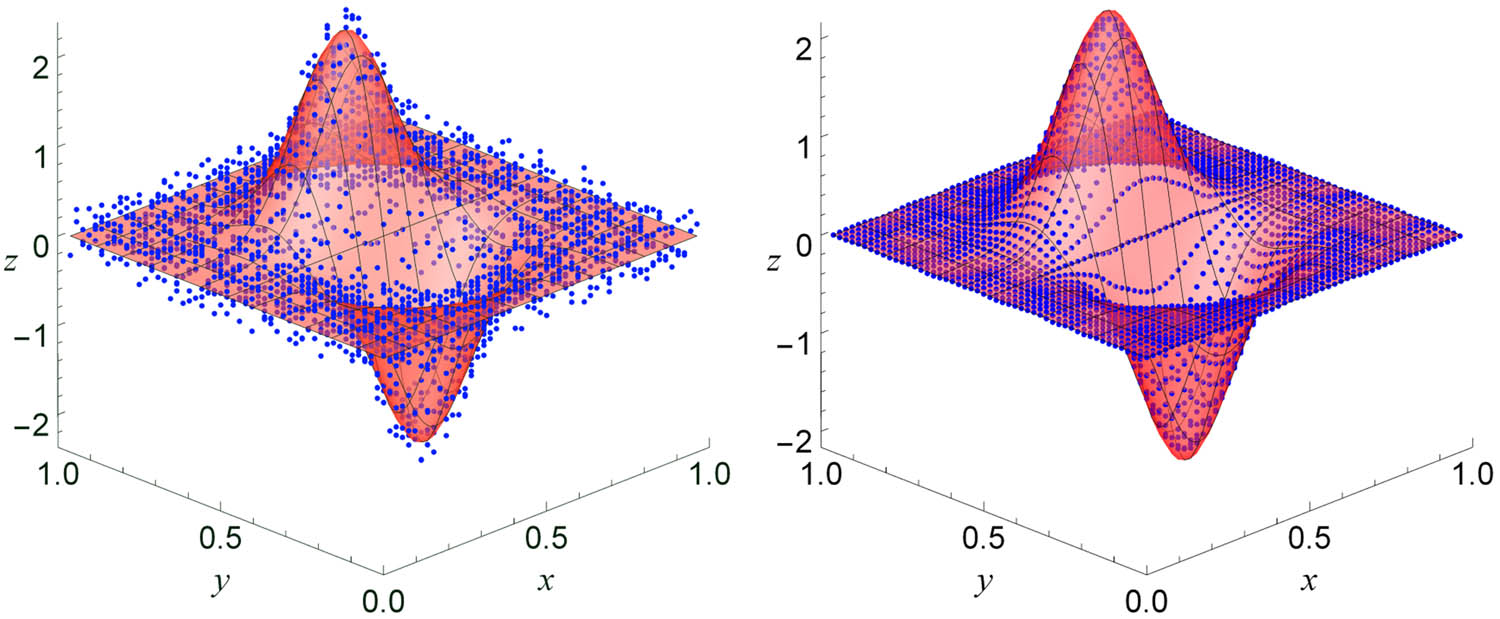

Example 5.2

We consider the recovery of the gradient of the function

where

True data surface along with the noisy data (left) and smoothed data (right) for Example 5.2.

|

|

|

|

|

|---|---|---|---|

| 0.2 | 0.138 (1.671) | 0.056 (16.64) | 0.047 (24.97) |

| 0.02 | 0.051 (0.167) | 0.023 (1.664) | 0.021 (2.497) |

| 0.002 | 0.013 (0.016) | 0.011 (0.166) | 0.009 (0.249) |

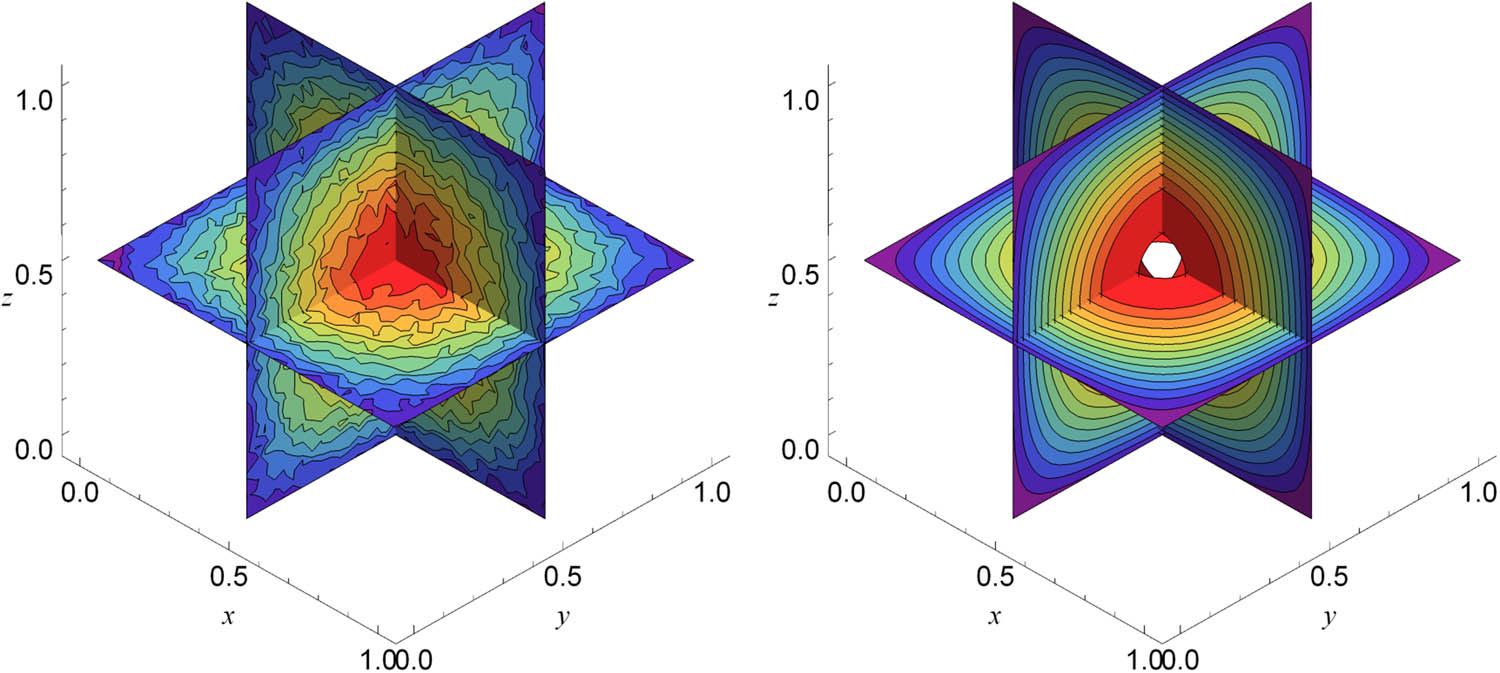

Example 5.3

The true data are given by the function

where

Sliced contours of noisy data (left) and smoothed data (right) for Example 5.3.

|

|

|

|

|

|---|---|---|---|

| 0.2 | 0.061 (4.705) | 0.038 (9.174) | 0.038 (13.64) |

| 0.02 | 0.030 (0.470) | 0.021 (0.917) | 0.020 (1.364) |

| 0.002 | 0.015 (0.047) | 0.011 (0.091) | 0.009 (0.136) |

Remark 5.2

Contrary to the naive method, the error results in Tables 1–3 show that the proposed method is stable with respect to the sample size

Now we present an application on edge detection (emphasizing) of digital images.

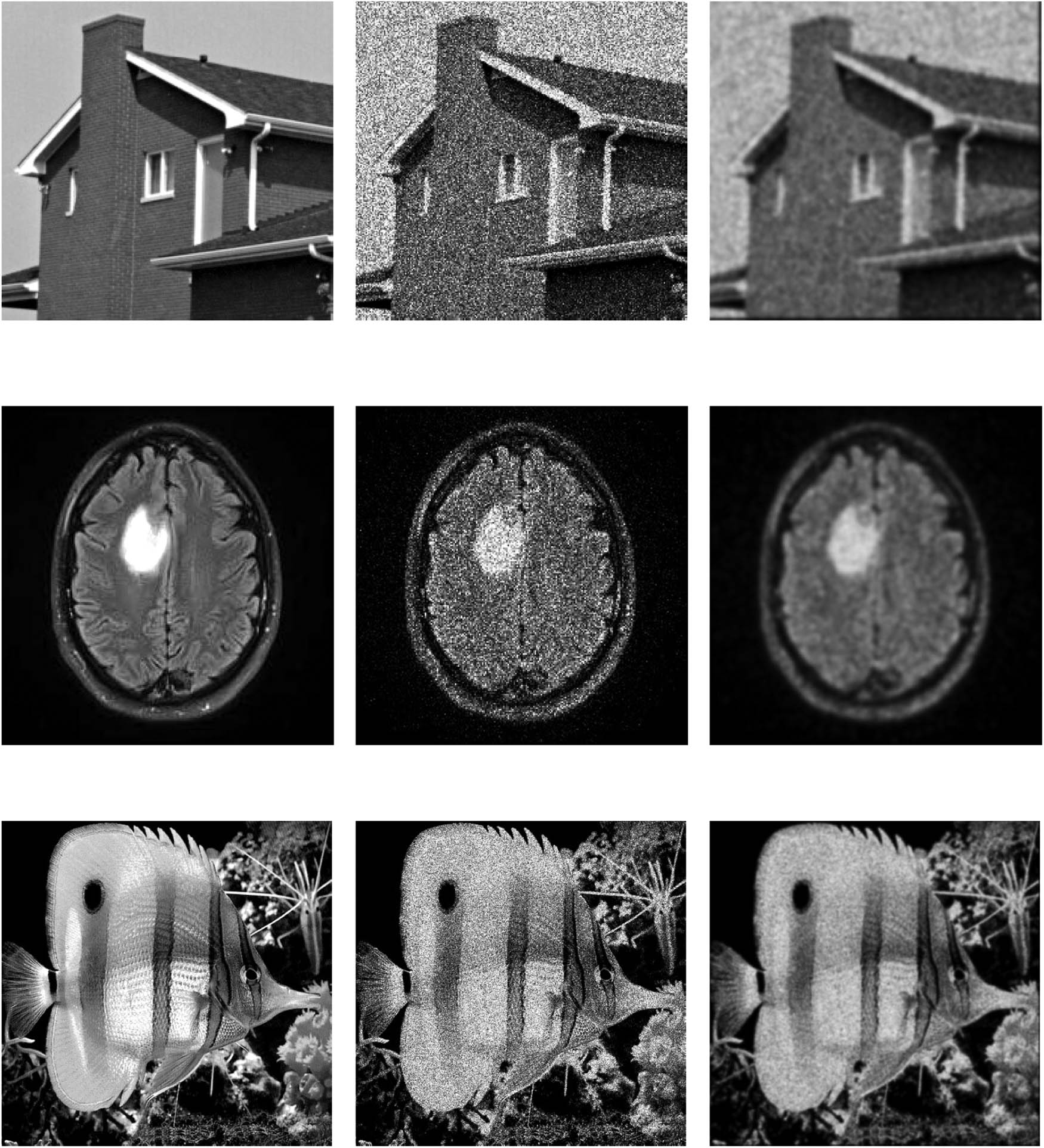

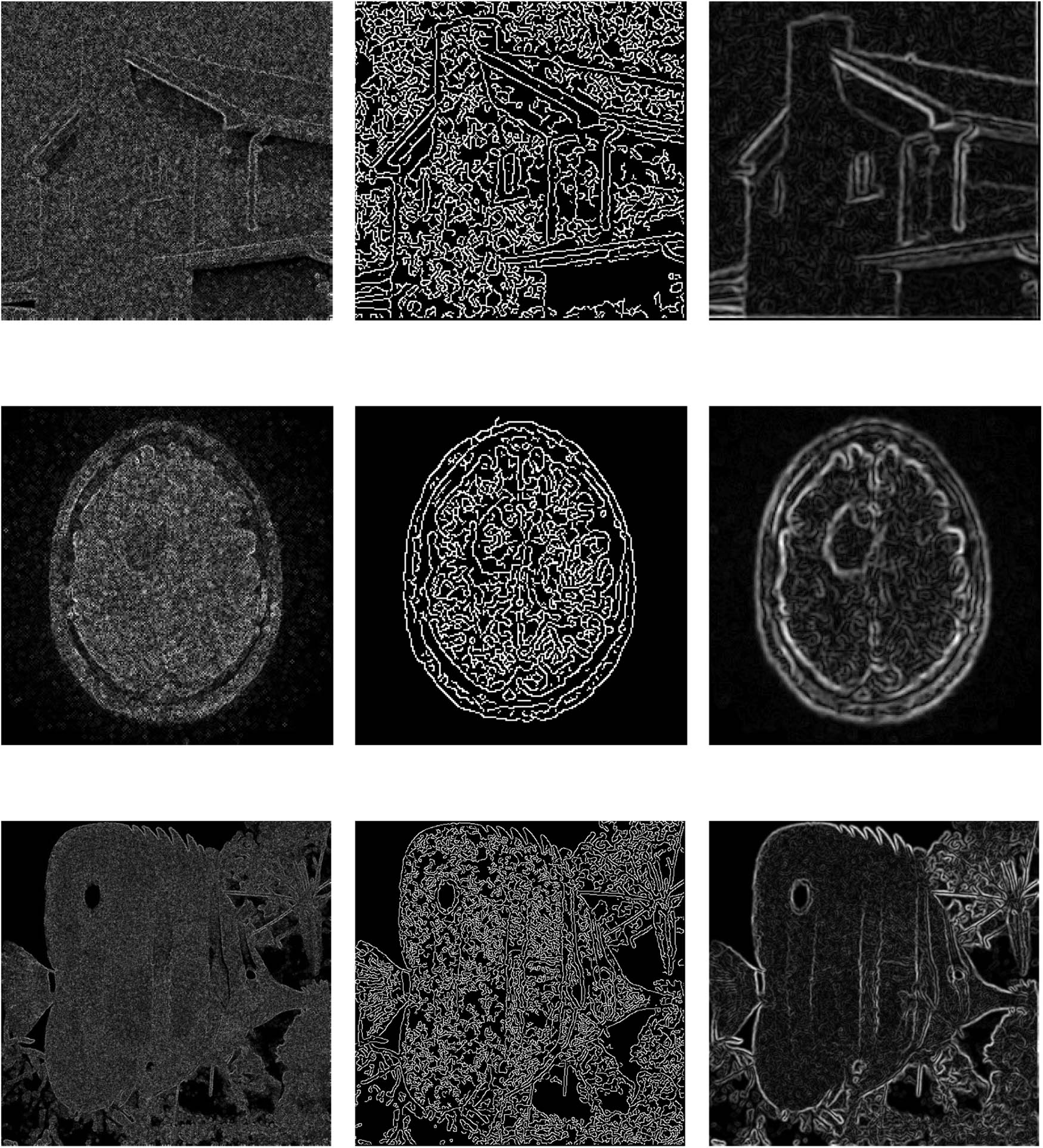

Example 5.4

Image gradient is an essential ingredient in common image processing and computer vision applications, particularly in edge detection algorithms. Since the gradient is sensitive to noise, noisy images must be denoised or smoothed prior to the gradient estimation. In common edge detection algorithms, such as the Canny method, a Gaussian filter is typically used for this purpose. In the following demonstration, we employ our method to smooth out the noisy images, and then the image gradient is approximated using the central difference formula. Consequently, the magnitude of the image gradient at pixel

where

In this demonstration, we consider three noisy images that are obtained by adding a Poisson noise to the noise free images. The original, noisy, and smoothed images by the diffusion method are shown in Figure 4. To assess the quality of the smoothed (denoised) images, we computed the peak signal-to-noise ratio (PSNR) of the noisy and the smoothed images, given by

where

The gradient magnitudes of the noisy and smoothed images are plotted in Figure 5. For the sake of comparison, we also plot the edges of the noisy images obtained by the popular Canny method [15], which consists of five main steps: Gaussian filtering, measuring intensity gradient, edge thinning, double thresholding to determine strong and weak edges, and edge tracking via blob analysis to extract weak edges.

From Table 4, we see that the PSNR of the denoised images are significantly higher than those of noisy images, which indicates the denoised images by the proposed smoothing method have better qualities than the noisy images. Moreover, as it can be observed from Figures 4 and 5, the proposed technique can significantly enhance the quality of the recovered edges, and it seems that the smoothing stage has no bias toward certain direction; this is a major advantage since edge orientation is mostly unknown in real-life applications. It is evident from this experiment that diffusion smoothing gives plausible results, and it can be well suited for such type of applications.

PSNR results for noisy and denoised images in Example 5.4

| Image | Noisy image | Denoised image |

|---|---|---|

| House | 15.129 | 21.530 |

| Brain | 18.092 | 25.216 |

| Fish | 15.568 | 21.164 |

Original images (left), noisy images (middle), and smoothed images (right).

Detected edges: from noisy images (left), by Canny method (middle), and from smoothed images (right).

From the presented examples, we see that the proposed diffusion technique is very suitable for data smoothing and numerical differentiation, and it can excel in various scientific applications due to its speed (in particular, when utilizing the fast Fourier transform), supporting theory behind it, and further, it does not pose any dimension limitations; the method can be extended easily to problems in any dimension, contrary to the most existing methods in which they are limited to one-dimensional domains.

6 Conclusion

The smoothing property of the diffusion equation is utilized to estimate the gradient of functions specified by noisy data. We proved stability and consistency results and carried out the convergence analysis for the proposed method. Several examples in one and higher dimensional domains demonstrate the feasibility and robustness of the proposed approach.

The main advantage of the method lies in multivariable problems, where some of the other smoothing approaches do not easily generalize. Moreover, the method shows decent results under mild assumptions on the true data even in the presence of a large amount of noise, while these could be limitations for other methods. We believe the method can excel in various scientific applications due to its speed, relative ease of implementation, and the supporting theory behind it; we look forward to extending this approach and the theoretical results for higher derivatives estimations. Furthermore, appropriate a priori and a posteriori parameter choice strategies for choosing

Acknowledgements

The authors would like to thank the Scientific Research and Graduate Studies at Yarmouk University for supporting this paper, and they would like express their sincere gratitude to the anonymous referees for their helpful comments that helped us to improve the quality of the manuscript.

-

Conflict of interest: The authors state no conflict of interest.

Appendix

If the true boundary data

then smoothed data can be defined as follows:

where the function

To derive stability and convergence results, we set

where

From the definition of

Using the aforementioned inequality and similar arguments as in the proofs of Lemmas 4.1 and 4.2, it is easy to conclude that

where

Moreover, if we let

as

We obtain the fastest convergence when

provided that

References

[1] H. W. Engl, M. Hanke, and A. Neubauer, Regularization of Inverse Problems, Kluwer Academic, Dordrecht, 1996. 10.1007/978-94-009-1740-8Search in Google Scholar

[2] L. Yang, A perturbation method for numerical differentiation, Appl. Math. Comput. 199 (2008), no. 1, 368–374, https://doi.org/10.1016/j.amc.2007.09.066. Search in Google Scholar

[3] D. A. Murio, C. E. Mejia, and S. Zhan, Discrete mollification and automatic numerical differentiation, Comput. Math. Appl. 35 (1998), no. 5, 1–16, DOI: https://doi.org/10.1016/S0898-1221(98)00001-7. 10.1016/S0898-1221(98)00001-7Search in Google Scholar

[4] M. Hanke and O. Scherzer, Inverse problems light: numerical differentiation, Amer. Math. Monthly 108 (2001), no. 6, 512–521, https://doi.org/10.2307/2695705. Search in Google Scholar

[5] C. W. Groetsch, Lanczos’ generalized derivative, Amer. Math. Monthly 105 (1998), no. 4, 320–326, DOI: https://doi.org/10.2307/2589707. 10.1080/00029890.1998.12004888Search in Google Scholar

[6] D. Y. Liu, O. Gibaru, and W. Perruquetti, Differentiation by integration with Jacobi polynomials, J. Comput. Appl. Math. 235 (2011), no. 9, 3015–3032, https://doi.org/10.1016/j.cam.2010.12.023. Search in Google Scholar

[7] I. Knowles and R. J. Renka, Methods for numerical differentiation of noisy data, Electron. J. Differ. Equ. Conf. 21 (2014), 235–246. Search in Google Scholar

[8] M. F. Al-Jamal, A. K. Alomari, and M. S. Gockenbach, Smoothing via elliptic operators with application to edge detection, Inverse Probl. Sci. Eng. 26 (2018), no. 5, 657–676, https://doi.org/10.1080/17415977.2017.1336552. Search in Google Scholar

[9] Z. Meng, Z. Zhao, D. Mei, and Y. Zhou, Numerical differentiation for two-dimensional functions by a Fourier extension method, Inverse Probl. Sci. Eng. 28 (2020), no. 1, 126–143, https://doi.org/10.1080/17415977.2019.1661410. Search in Google Scholar

[10] A. A. Yahya, J. Tan, B. Su, K. Liu, and A. N. Hadi, Image edge detection method based on anisotropic diffusion and total variation models, J. Eng. 2019 (2019), no. 2, 455–460, https://doi.org/10.1049/joe.2018.5345. Search in Google Scholar

[11] C. Brito-Loeza, R. Legarda-Saenz, and A. Martin-Gonzalez, A fast algorithm for a total variation based phase demodulation model, Numer. Methods Partial Differential Equations 36 (2020), no. 3, 617–636, DOI: https://doi.org/10.1002/num.22444. 10.1002/num.22444Search in Google Scholar

[12] K. Atkinson and W. Han, Theoretical Numerical Analysis: A Functional Analysis Framework, Springer-Verlag, New York, 2009. 10.1007/978-1-4419-0458-4Search in Google Scholar

[13] D. Braess, Finite Elements: Theory, Fast Solvers, and Applications in Solid Mechanics, Cambridge University Press, Cambridge, 2007. 10.1017/CBO9780511618635Search in Google Scholar

[14] R. C. McOwen, Partial Differential Equations: Methods and Applications, Prentice Hall, New Jersy, 2003. Search in Google Scholar

[15] J. Canny, A Computational Approach To Edge Detection, IEEE Tran. Pattern Anal. Machine Intell. 8 (1986), no. 6, 679–698, https://doi.org/10.1109/TPAMI.1986.4767851. Search in Google Scholar

© 2022 Mohammad F. Al-Jamal et al., published by De Gruyter

This work is licensed under the Creative Commons Attribution 4.0 International License.

Articles in the same Issue

- Regular Articles

- A random von Neumann theorem for uniformly distributed sequences of partitions

- Note on structural properties of graphs

- Mean-field formulation for mean-variance asset-liability management with cash flow under an uncertain exit time

- The family of random attractors for nonautonomous stochastic higher-order Kirchhoff equations with variable coefficients

- The intersection graph of graded submodules of a graded module

- Isoperimetric and Brunn-Minkowski inequalities for the (p, q)-mixed geominimal surface areas

- On second-order fuzzy discrete population model

- On certain functional equation in prime rings

- General complex Lp projection bodies and complex Lp mixed projection bodies

- Some results on the total proper k-connection number

- The stability with general decay rate of hybrid stochastic fractional differential equations driven by Lévy noise with impulsive effects

- Well posedness of magnetohydrodynamic equations in 3D mixed-norm Lebesgue space

- Strong convergence of a self-adaptive inertial Tseng's extragradient method for pseudomonotone variational inequalities and fixed point problems

- Generic uniqueness of saddle point for two-person zero-sum differential games

- Relational representations of algebraic lattices and their applications

- Explicit construction of mock modular forms from weakly holomorphic Hecke eigenforms

- The equivalent condition of G-asymptotic tracking property and G-Lipschitz tracking property

- Arithmetic convolution sums derived from eta quotients related to divisors of 6

- Dynamical behaviors of a k-order fuzzy difference equation

- The transfer ideal under the action of orthogonal group in modular case

- The multinomial convolution sum of a generalized divisor function

- Extensions of Gronwall-Bellman type integral inequalities with two independent variables

- Unicity of meromorphic functions concerning differences and small functions

- Solutions to problems about potentially Ks,t-bigraphic pair

- Monotonicity of solutions for fractional p-equations with a gradient term

- Data smoothing with applications to edge detection

- An ℋ-tensor-based criteria for testing the positive definiteness of multivariate homogeneous forms

- Characterizations of *-antiderivable mappings on operator algebras

- Initial-boundary value problem of fifth-order Korteweg-de Vries equation posed on half line with nonlinear boundary values

- On a more accurate half-discrete Hilbert-type inequality involving hyperbolic functions

- On split twisted inner derivation triple systems with no restrictions on their 0-root spaces

- Geometry of conformal η-Ricci solitons and conformal η-Ricci almost solitons on paracontact geometry

- Bifurcation and chaos in a discrete predator-prey system of Leslie type with Michaelis-Menten prey harvesting

- A posteriori error estimates of characteristic mixed finite elements for convection-diffusion control problems

- Dynamical analysis of a Lotka Volterra commensalism model with additive Allee effect

- An efficient finite element method based on dimension reduction scheme for a fourth-order Steklov eigenvalue problem

- Connectivity with respect to α-discrete closure operators

- Khasminskii-type theorem for a class of stochastic functional differential equations

- On some new Hermite-Hadamard and Ostrowski type inequalities for s-convex functions in (p, q)-calculus with applications

- New properties for the Ramanujan R-function

- Shooting method in the application of boundary value problems for differential equations with sign-changing weight function

- Ground state solution for some new Kirchhoff-type equations with Hartree-type nonlinearities and critical or supercritical growth

- Existence and uniqueness of solutions for the stochastic Volterra-Levin equation with variable delays

- Ambrosetti-Prodi-type results for a class of difference equations with nonlinearities indefinite in sign

- Research of cooperation strategy of government-enterprise digital transformation based on differential game

- Malmquist-type theorems on some complex differential-difference equations

- Disjoint diskcyclicity of weighted shifts

- Construction of special soliton solutions to the stochastic Riccati equation

- Remarks on the generalized interpolative contractions and some fixed-point theorems with application

- Analysis of a deteriorating system with delayed repair and unreliable repair equipment

- On the critical fractional Schrödinger-Kirchhoff-Poisson equations with electromagnetic fields

- The exact solutions of generalized Davey-Stewartson equations with arbitrary power nonlinearities using the dynamical system and the first integral methods

- Regularity of models associated with Markov jump processes

- Multiplicity solutions for a class of p-Laplacian fractional differential equations via variational methods

- Minimal period problem for second-order Hamiltonian systems with asymptotically linear nonlinearities

- Convergence rate of the modified Levenberg-Marquardt method under Hölderian local error bound

- Non-binary quantum codes from constacyclic codes over 𝔽q[u1, u2,…,uk]/⟨ui3 = ui, uiuj = ujui⟩

- On the general position number of two classes of graphs

- A posteriori regularization method for the two-dimensional inverse heat conduction problem

- Orbital stability and Zhukovskiǐ quasi-stability in impulsive dynamical systems

- Approximations related to the complete p-elliptic integrals

- A note on commutators of strongly singular Calderón-Zygmund operators

- Generalized Munn rings

- Double domination in maximal outerplanar graphs

- Existence and uniqueness of solutions to the norm minimum problem on digraphs

- On the p-integrable trajectories of the nonlinear control system described by the Urysohn-type integral equation

- Robust estimation for varying coefficient partially functional linear regression models based on exponential squared loss function

- Hessian equations of Krylov type on compact Hermitian manifolds

- Class fields generated by coordinates of elliptic curves

- The lattice of (2, 1)-congruences on a left restriction semigroup

- A numerical solution of problem for essentially loaded differential equations with an integro-multipoint condition

- On stochastic accelerated gradient with convergence rate

- Displacement structure of the DMP inverse

- Dependence of eigenvalues of Sturm-Liouville problems on time scales with eigenparameter-dependent boundary conditions

- Existence of positive solutions of discrete third-order three-point BVP with sign-changing Green's function

- Some new fixed point theorems for nonexpansive-type mappings in geodesic spaces

- Generalized 4-connectivity of hierarchical star networks

- Spectra and reticulation of semihoops

- Stein-Weiss inequality for local mixed radial-angular Morrey spaces

- Eigenvalues of transition weight matrix for a family of weighted networks

- A modified Tikhonov regularization for unknown source in space fractional diffusion equation

- Modular forms of half-integral weight on Γ0(4) with few nonvanishing coefficients modulo ℓ

- Some estimates for commutators of bilinear pseudo-differential operators

- Extension of isometries in real Hilbert spaces

- Existence of positive periodic solutions for first-order nonlinear differential equations with multiple time-varying delays

- B-Fredholm elements in primitive C*-algebras

- Unique solvability for an inverse problem of a nonlinear parabolic PDE with nonlocal integral overdetermination condition

- An algebraic semigroup method for discovering maximal frequent itemsets

- Class-preserving Coleman automorphisms of some classes of finite groups

- Exponential stability of traveling waves for a nonlocal dispersal SIR model with delay

- Existence and multiplicity of solutions for second-order Dirichlet problems with nonlinear impulses

- The transitivity of primary conjugacy in regular ω-semigroups

- Stability estimation of some Markov controlled processes

- On nonnil-coherent modules and nonnil-Noetherian modules

- N-Tuples of weighted noncommutative Orlicz space and some geometrical properties

- The dimension-free estimate for the truncated maximal operator

- A human error risk priority number calculation methodology using fuzzy and TOPSIS grey

- Compact mappings and s-mappings at subsets

- The structural properties of the Gompertz-two-parameter-Lindley distribution and associated inference

- A monotone iteration for a nonlinear Euler-Bernoulli beam equation with indefinite weight and Neumann boundary conditions

- Delta waves of the isentropic relativistic Euler system coupled with an advection equation for Chaplygin gas

- Multiplicity and minimality of periodic solutions to fourth-order super-quadratic difference systems

- On the reciprocal sum of the fourth power of Fibonacci numbers

- Averaging principle for two-time-scale stochastic differential equations with correlated noise

- Phragmén-Lindelöf alternative results and structural stability for Brinkman fluid in porous media in a semi-infinite cylinder

- Study on r-truncated degenerate Stirling numbers of the second kind

- On 7-valent symmetric graphs of order 2pq and 11-valent symmetric graphs of order 4pq

- Some new characterizations of finite p-nilpotent groups

- A Billingsley type theorem for Bowen topological entropy of nonautonomous dynamical systems

- F4 and PSp (8, ℂ)-Higgs pairs understood as fixed points of the moduli space of E6-Higgs bundles over a compact Riemann surface

- On modules related to McCoy modules

- On generalized extragradient implicit method for systems of variational inequalities with constraints of variational inclusion and fixed point problems

- Solvability for a nonlocal dispersal model governed by time and space integrals

- Finite groups whose maximal subgroups of even order are MSN-groups

- Symmetric results of a Hénon-type elliptic system with coupled linear part

- On the connection between Sp-almost periodic functions defined on time scales and ℝ

- On a class of Harada rings

- On regular subgroup functors of finite groups

- Fast iterative solutions of Riccati and Lyapunov equations

- Weak measure expansivity of C2 dynamics

- Admissible congruences on type B semigroups

- Generalized fractional Hermite-Hadamard type inclusions for co-ordinated convex interval-valued functions

- Inverse eigenvalue problems for rank one perturbations of the Sturm-Liouville operator

- Data transmission mechanism of vehicle networking based on fuzzy comprehensive evaluation

- Dual uniformities in function spaces over uniform continuity

- Review Article

- On Hahn-Banach theorem and some of its applications

- Rapid Communication

- Discussion of foundation of mathematics and quantum theory

- Special Issue on Boundary Value Problems and their Applications on Biosciences and Engineering (Part II)

- A study of minimax shrinkage estimators dominating the James-Stein estimator under the balanced loss function

- Representations by degenerate Daehee polynomials

- Multilevel MC method for weak approximation of stochastic differential equation with the exact coupling scheme

- Multiple periodic solutions for discrete boundary value problem involving the mean curvature operator

- Special Issue on Evolution Equations, Theory and Applications (Part II)

- Coupled measure of noncompactness and functional integral equations

- Existence results for neutral evolution equations with nonlocal conditions and delay via fractional operator

- Global weak solution of 3D-NSE with exponential damping

- Special Issue on Fractional Problems with Variable-Order or Variable Exponents (Part I)

- Ground state solutions of nonlinear Schrödinger equations involving the fractional p-Laplacian and potential wells

- A class of p1(x, ⋅) & p2(x, ⋅)-fractional Kirchhoff-type problem with variable s(x, ⋅)-order and without the Ambrosetti-Rabinowitz condition in ℝN

- Jensen-type inequalities for m-convex functions

- Special Issue on Problems, Methods and Applications of Nonlinear Analysis (Part III)

- The influence of the noise on the exact solutions of a Kuramoto-Sivashinsky equation

- Basic inequalities for statistical submanifolds in Golden-like statistical manifolds

- Global existence and blow up of the solution for nonlinear Klein-Gordon equation with variable coefficient nonlinear source term

- Hopf bifurcation and Turing instability in a diffusive predator-prey model with hunting cooperation

- Efficient fixed-point iteration for generalized nonexpansive mappings and its stability in Banach spaces

Articles in the same Issue

- Regular Articles

- A random von Neumann theorem for uniformly distributed sequences of partitions

- Note on structural properties of graphs

- Mean-field formulation for mean-variance asset-liability management with cash flow under an uncertain exit time

- The family of random attractors for nonautonomous stochastic higher-order Kirchhoff equations with variable coefficients

- The intersection graph of graded submodules of a graded module

- Isoperimetric and Brunn-Minkowski inequalities for the (p, q)-mixed geominimal surface areas

- On second-order fuzzy discrete population model

- On certain functional equation in prime rings

- General complex Lp projection bodies and complex Lp mixed projection bodies

- Some results on the total proper k-connection number

- The stability with general decay rate of hybrid stochastic fractional differential equations driven by Lévy noise with impulsive effects

- Well posedness of magnetohydrodynamic equations in 3D mixed-norm Lebesgue space

- Strong convergence of a self-adaptive inertial Tseng's extragradient method for pseudomonotone variational inequalities and fixed point problems

- Generic uniqueness of saddle point for two-person zero-sum differential games

- Relational representations of algebraic lattices and their applications

- Explicit construction of mock modular forms from weakly holomorphic Hecke eigenforms

- The equivalent condition of G-asymptotic tracking property and G-Lipschitz tracking property

- Arithmetic convolution sums derived from eta quotients related to divisors of 6

- Dynamical behaviors of a k-order fuzzy difference equation

- The transfer ideal under the action of orthogonal group in modular case

- The multinomial convolution sum of a generalized divisor function

- Extensions of Gronwall-Bellman type integral inequalities with two independent variables

- Unicity of meromorphic functions concerning differences and small functions

- Solutions to problems about potentially Ks,t-bigraphic pair

- Monotonicity of solutions for fractional p-equations with a gradient term

- Data smoothing with applications to edge detection

- An ℋ-tensor-based criteria for testing the positive definiteness of multivariate homogeneous forms

- Characterizations of *-antiderivable mappings on operator algebras

- Initial-boundary value problem of fifth-order Korteweg-de Vries equation posed on half line with nonlinear boundary values

- On a more accurate half-discrete Hilbert-type inequality involving hyperbolic functions

- On split twisted inner derivation triple systems with no restrictions on their 0-root spaces

- Geometry of conformal η-Ricci solitons and conformal η-Ricci almost solitons on paracontact geometry

- Bifurcation and chaos in a discrete predator-prey system of Leslie type with Michaelis-Menten prey harvesting

- A posteriori error estimates of characteristic mixed finite elements for convection-diffusion control problems

- Dynamical analysis of a Lotka Volterra commensalism model with additive Allee effect

- An efficient finite element method based on dimension reduction scheme for a fourth-order Steklov eigenvalue problem

- Connectivity with respect to α-discrete closure operators

- Khasminskii-type theorem for a class of stochastic functional differential equations

- On some new Hermite-Hadamard and Ostrowski type inequalities for s-convex functions in (p, q)-calculus with applications

- New properties for the Ramanujan R-function

- Shooting method in the application of boundary value problems for differential equations with sign-changing weight function

- Ground state solution for some new Kirchhoff-type equations with Hartree-type nonlinearities and critical or supercritical growth

- Existence and uniqueness of solutions for the stochastic Volterra-Levin equation with variable delays

- Ambrosetti-Prodi-type results for a class of difference equations with nonlinearities indefinite in sign

- Research of cooperation strategy of government-enterprise digital transformation based on differential game

- Malmquist-type theorems on some complex differential-difference equations

- Disjoint diskcyclicity of weighted shifts

- Construction of special soliton solutions to the stochastic Riccati equation

- Remarks on the generalized interpolative contractions and some fixed-point theorems with application

- Analysis of a deteriorating system with delayed repair and unreliable repair equipment

- On the critical fractional Schrödinger-Kirchhoff-Poisson equations with electromagnetic fields

- The exact solutions of generalized Davey-Stewartson equations with arbitrary power nonlinearities using the dynamical system and the first integral methods

- Regularity of models associated with Markov jump processes

- Multiplicity solutions for a class of p-Laplacian fractional differential equations via variational methods

- Minimal period problem for second-order Hamiltonian systems with asymptotically linear nonlinearities

- Convergence rate of the modified Levenberg-Marquardt method under Hölderian local error bound

- Non-binary quantum codes from constacyclic codes over 𝔽q[u1, u2,…,uk]/⟨ui3 = ui, uiuj = ujui⟩

- On the general position number of two classes of graphs

- A posteriori regularization method for the two-dimensional inverse heat conduction problem

- Orbital stability and Zhukovskiǐ quasi-stability in impulsive dynamical systems

- Approximations related to the complete p-elliptic integrals

- A note on commutators of strongly singular Calderón-Zygmund operators

- Generalized Munn rings

- Double domination in maximal outerplanar graphs

- Existence and uniqueness of solutions to the norm minimum problem on digraphs

- On the p-integrable trajectories of the nonlinear control system described by the Urysohn-type integral equation

- Robust estimation for varying coefficient partially functional linear regression models based on exponential squared loss function

- Hessian equations of Krylov type on compact Hermitian manifolds

- Class fields generated by coordinates of elliptic curves

- The lattice of (2, 1)-congruences on a left restriction semigroup

- A numerical solution of problem for essentially loaded differential equations with an integro-multipoint condition

- On stochastic accelerated gradient with convergence rate

- Displacement structure of the DMP inverse

- Dependence of eigenvalues of Sturm-Liouville problems on time scales with eigenparameter-dependent boundary conditions

- Existence of positive solutions of discrete third-order three-point BVP with sign-changing Green's function

- Some new fixed point theorems for nonexpansive-type mappings in geodesic spaces

- Generalized 4-connectivity of hierarchical star networks

- Spectra and reticulation of semihoops

- Stein-Weiss inequality for local mixed radial-angular Morrey spaces

- Eigenvalues of transition weight matrix for a family of weighted networks

- A modified Tikhonov regularization for unknown source in space fractional diffusion equation

- Modular forms of half-integral weight on Γ0(4) with few nonvanishing coefficients modulo ℓ

- Some estimates for commutators of bilinear pseudo-differential operators

- Extension of isometries in real Hilbert spaces

- Existence of positive periodic solutions for first-order nonlinear differential equations with multiple time-varying delays

- B-Fredholm elements in primitive C*-algebras

- Unique solvability for an inverse problem of a nonlinear parabolic PDE with nonlocal integral overdetermination condition

- An algebraic semigroup method for discovering maximal frequent itemsets

- Class-preserving Coleman automorphisms of some classes of finite groups

- Exponential stability of traveling waves for a nonlocal dispersal SIR model with delay

- Existence and multiplicity of solutions for second-order Dirichlet problems with nonlinear impulses

- The transitivity of primary conjugacy in regular ω-semigroups

- Stability estimation of some Markov controlled processes

- On nonnil-coherent modules and nonnil-Noetherian modules

- N-Tuples of weighted noncommutative Orlicz space and some geometrical properties

- The dimension-free estimate for the truncated maximal operator

- A human error risk priority number calculation methodology using fuzzy and TOPSIS grey

- Compact mappings and s-mappings at subsets

- The structural properties of the Gompertz-two-parameter-Lindley distribution and associated inference

- A monotone iteration for a nonlinear Euler-Bernoulli beam equation with indefinite weight and Neumann boundary conditions

- Delta waves of the isentropic relativistic Euler system coupled with an advection equation for Chaplygin gas

- Multiplicity and minimality of periodic solutions to fourth-order super-quadratic difference systems

- On the reciprocal sum of the fourth power of Fibonacci numbers

- Averaging principle for two-time-scale stochastic differential equations with correlated noise

- Phragmén-Lindelöf alternative results and structural stability for Brinkman fluid in porous media in a semi-infinite cylinder

- Study on r-truncated degenerate Stirling numbers of the second kind

- On 7-valent symmetric graphs of order 2pq and 11-valent symmetric graphs of order 4pq

- Some new characterizations of finite p-nilpotent groups

- A Billingsley type theorem for Bowen topological entropy of nonautonomous dynamical systems

- F4 and PSp (8, ℂ)-Higgs pairs understood as fixed points of the moduli space of E6-Higgs bundles over a compact Riemann surface

- On modules related to McCoy modules

- On generalized extragradient implicit method for systems of variational inequalities with constraints of variational inclusion and fixed point problems

- Solvability for a nonlocal dispersal model governed by time and space integrals

- Finite groups whose maximal subgroups of even order are MSN-groups

- Symmetric results of a Hénon-type elliptic system with coupled linear part

- On the connection between Sp-almost periodic functions defined on time scales and ℝ

- On a class of Harada rings

- On regular subgroup functors of finite groups

- Fast iterative solutions of Riccati and Lyapunov equations

- Weak measure expansivity of C2 dynamics

- Admissible congruences on type B semigroups

- Generalized fractional Hermite-Hadamard type inclusions for co-ordinated convex interval-valued functions

- Inverse eigenvalue problems for rank one perturbations of the Sturm-Liouville operator

- Data transmission mechanism of vehicle networking based on fuzzy comprehensive evaluation

- Dual uniformities in function spaces over uniform continuity

- Review Article

- On Hahn-Banach theorem and some of its applications

- Rapid Communication

- Discussion of foundation of mathematics and quantum theory

- Special Issue on Boundary Value Problems and their Applications on Biosciences and Engineering (Part II)

- A study of minimax shrinkage estimators dominating the James-Stein estimator under the balanced loss function

- Representations by degenerate Daehee polynomials

- Multilevel MC method for weak approximation of stochastic differential equation with the exact coupling scheme

- Multiple periodic solutions for discrete boundary value problem involving the mean curvature operator

- Special Issue on Evolution Equations, Theory and Applications (Part II)

- Coupled measure of noncompactness and functional integral equations

- Existence results for neutral evolution equations with nonlocal conditions and delay via fractional operator

- Global weak solution of 3D-NSE with exponential damping

- Special Issue on Fractional Problems with Variable-Order or Variable Exponents (Part I)

- Ground state solutions of nonlinear Schrödinger equations involving the fractional p-Laplacian and potential wells

- A class of p1(x, ⋅) & p2(x, ⋅)-fractional Kirchhoff-type problem with variable s(x, ⋅)-order and without the Ambrosetti-Rabinowitz condition in ℝN

- Jensen-type inequalities for m-convex functions

- Special Issue on Problems, Methods and Applications of Nonlinear Analysis (Part III)

- The influence of the noise on the exact solutions of a Kuramoto-Sivashinsky equation

- Basic inequalities for statistical submanifolds in Golden-like statistical manifolds

- Global existence and blow up of the solution for nonlinear Klein-Gordon equation with variable coefficient nonlinear source term

- Hopf bifurcation and Turing instability in a diffusive predator-prey model with hunting cooperation

- Efficient fixed-point iteration for generalized nonexpansive mappings and its stability in Banach spaces