Abstract

With the advent of the Internet of Things (IoT) era, intelligent devices are used ever more widely in networks, and monitoring technology is gradually developing towards intelligence and digitization. Face recognition, a hot topic in computer vision, still suffers from a low level of intelligence and long processing times. Therefore, with the technical support of the IoT, this study uses Internet Protocol (IP) cameras to collect face information, improves principal component analysis (PCA), proposes a PLV algorithm that combines PCA with linear discriminant analysis (LDA) and a support vector machine (SVM), and applies it to a face recognition system for remote monitoring. The results show that on the Olivetti Research Laboratory (ORL) face database, the accuracy of PLV is relatively stable, with a highest value of 98% and a lowest of 94%. On the Yale database, the accuracy of the algorithm is up to 15% higher than that of the PCA algorithm. On the Georgia Institute of Technology (GT) database, the recognition time of the PLV algorithm is 0.2–0.3 s, indicating high operational efficiency. On the selected remote monitoring face database, the accuracy of the method remains above 90%, with a highest value of 98%, indicating that it can effectively improve the accuracy of face recognition and provide a technical reference for further optimization of remote monitoring systems.

1 Introduction

Information technology has gone through three historic stages of development: the computer, the Internet, and the mobile Internet. The Internet of Things (IoT) is the next stage now under way and is an extension of the Internet. With the widespread use of intelligent devices in networks, the range of information applications continues to expand, making people's daily lives more convenient [1]. In the IoT era, monitoring technology is constantly developing towards digitization, intelligence, and networking. Remote network monitoring, which builds on network communication combined with machine learning algorithms, has become a new research direction in the IoT [2]. Remote network monitoring connects video monitoring devices to the Internet through Internet Protocol (IP) addresses, so that video images can be viewed on computers or mobile intelligent terminals. Research on facial expression recognition for remote network monitoring can use the Internet to obtain facial expressions and their dynamic changes quickly and from a wider range of regions, which has important theoretical significance and application value in combating extreme nationalism, multimedia information interaction, and other areas. Sharma designed a local binary pattern histogram technique for recognizing unique facial features, implemented in the Python programming language; the results show that the method performs well in visual qualitative analysis [3]. However, face images collected by current IP webcams suffer from information loss, and face recognition systems have difficulty achieving ideal results in remote monitoring, which restricts the further adoption of this technology.
Therefore, to further improve the effectiveness of remote network monitoring, this study builds on the IPv6 protocol technology of the IoT architecture, uses IP cameras to collect face information, and improves the principal component analysis (PCA) method for face recognition. Section 2 reviews the literature on face recognition and related IoT technologies. Section 3 describes the IPv6 protocol technology of the IoT architecture and the construction of a PCA-based face recognition system. Section 4 analyzes the effect of applying this technology. By combining IPv6 protocol technology with an improved PCA face recognition algorithm under the IoT architecture, the study aims to build a remote monitoring facial expression recognition system, improve face recognition in this scenario, and thereby further improve the security of remote monitoring.

2 Related work

Face recognition has received the attention of many researchers in recent years. Many methods, including PCA, neural network methods, and local feature methods, have achieved good results and offer strong reference value for identification. For unconstrained face recognition, Larrain et al. proposed a sparse fingerprint classification algorithm: patches are extracted from the subject's face images in the training phase and from the query image in the testing phase, and each patch is converted into a binary sparse representation. The results demonstrate that it can handle face recognition under facial changes such as occlusion and expression [4]. To help people with low vision and blindness, Neto et al. used a Microsoft Kinect sensor as a wearable device for face detection and used PCA dimensionality reduction and the K-means algorithm for face recognition; the results show that this reduces computing resources and improves recognition accuracy [5]. Building on hierarchical temporal memory, a newer machine learning algorithm, Ibrayev et al. proposed a face recognition system based on memristive hierarchical temporal memory, which builds its recognition model from the important and unimportant features of the images in the training set. On image sets of different categories, the results show that it reduces memory requirements and achieves an accuracy of 83.5% [6]. Chaudhry and Chandra designed a visual assistance system that recognizes moving faces under unconstrained conditions using convolutional neural networks, and it shows good recognition performance under both daylight and artificial lighting [7].
To deal with the interference of illumination, expression, and occlusion in face recognition systems, Okokpujie and John created an illumination-invariant face recognition system with a four-layer convolutional neural network that can recognize face images under different illumination levels. Its recognition accuracy reaches 99.22%, achieving robust and adaptive face recognition [8]. Rashid et al. used median filtering and histogram equalization for image preprocessing, then used the Gabor wavelet transform to extract the spatial frequency features, orientation selectivity, and spatial locality of the face. PCA was then used to reduce the dimensionality, and finally a support vector machine (SVM) was used for classification; the results demonstrated the robustness and accuracy of the system [9].

To recognize faces in unconstrained datasets, Tamilselvi and Karthikeyan created a hybrid method (HRPSM_CNN) that relies largely on point set matching and convolutional neural networks. The results demonstrate that its accuracy on the Labeled Faces in the Wild (LFW) database reaches 96%, and it still recognizes faces well under various weather and lighting conditions [10]. To reduce the implementation cost of existing pose-robust models, Gunawan and Halimawan designed a lightweight deep learning framework based on deep residual equivariant mapping; the results show that its accuracy increased by 0.07% with a verification speed difference of 0.17 ms, outperforming the naive model [11]. Abdurrahman et al. used the OpenCV library and the Haar cascade method to detect faces for automotive security, and implemented face recognition with local binary pattern histograms to distinguish car owners from thieves [12]. Liu et al. proposed an evaluation model based on continuous Bayesian networks to address the poor reliability of continuous-variable measurement in face recognition systems; the model uses a clustering tree propagation algorithm to infer the continuous variables of the face recognition system and solve for its reliability [13]. To address student impersonation in examinations and rigid costs, Nagajayanthi developed an object detection framework that recognizes students' faces and records class attendance in a secure and user-friendly way; its face detection accuracy was 78% and its face recognition accuracy 95% [14]. To reduce the influence of outdoor conditions on face recognition systems, Huu et al. designed a preprocessing method that removes haze from the input image and raises image quality, thereby improving the performance of convolutional neural networks in face recognition. The results demonstrate that it increases the accuracy from 90.53 to 98.14%, which greatly improves recognition performance [15].

To sum up, image preprocessing, feature extraction, and classification are the three basic steps of a face recognition system. Most researchers have proposed optimizations for these three aspects, including PCA, SVM, and histogram equalization, and have achieved interim results. Therefore, optimizing face recognition technology and applying it to remote network monitoring is expected to achieve higher accuracy and better recognition.

3 Remote monitoring of face recognition under the IPv6 protocol technology of the IoT architecture

3.1 IPv6 protocol technology of IoT architecture

The IoT uses equipment such as positioning systems, sensors, and radio frequency identification (RFID) to connect people and things to the network for communication and information exchange, enabling identification, positioning, monitoring, and intelligent management. In essence, the IoT is an extension of the Internet: on the basis of the Internet, it adds special functions such as remote control, intelligent identification, and real-time monitoring [16]. Under current network structure standards, the IoT is divided into a perception layer, a transport layer, and an application layer. Face information is collected by the IP camera in the perception layer, transmitted through the transport layer under the network protocol, and finally processed by the application layer to realize remote face recognition monitoring. The system architecture is shown in Figure 1.

System architecture diagram based on IoT.

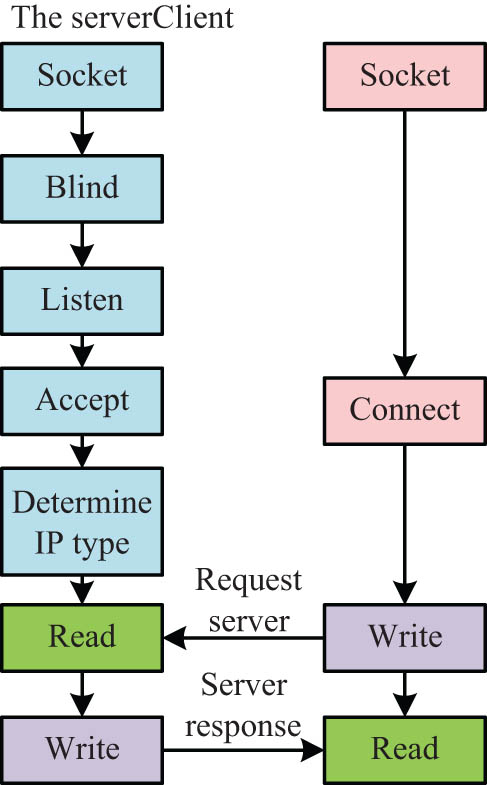

As the key protocol of the Transmission Control Protocol/Internet Protocol (TCP/IP) suite, IP is used for network communication in both wide area networks and local area networks. IPv4 is currently the most widely used version, but as the number of machines connected to the Internet grows, its 32-bit address space can no longer meet practical requirements. IPv6 is the new generation of the Internet Protocol and has three main advantages over IPv4 [17]. First, IPv6 addresses are 128 bits long, which supports more levels of address hierarchy, i.e., a much larger address space. Second, apart from the source and destination addresses, the IPv6 fixed header has only six fields, totaling 64 bits, with no options in the base header [18]. Finally, IPv6 adds support for automatic address configuration, which makes configuration more intelligent and flexible, and it also offers stronger confidentiality and security capabilities. These advantages make the transition from IPv4 to IPv6 necessary, and it can be realized through translation, tunneling, or dual-stack mechanisms [19]. The tunneling mechanism avoids dependencies when upgrading areas, routers, or nodes. The dual-stack mechanism not only enables free communication between IPv4 and IPv6 but also enables mapping between IPv4 and IPv6 addresses. In a mixed IPv4/IPv6 network, communication between IPv4 and IPv6 nodes, and among IPv6 nodes, is a difficult point to overcome. Therefore, the study develops the system in Java, a cross-platform, object-oriented language [20]. IPv6 addresses are divided into unicast, multicast, and anycast addresses. An anycast address reflects a routing policy and addressing scheme: the routing protocol calculates distances and delivers each packet to the nearest interface holding that address. A multicast address delivers packets to all interfaces identified by the address through a point-to-multipoint (one-to-many) connection, for example from one server to many clients [21]. A unicast address establishes a point-to-point connection and sends packets to a single interface only. The flow of server–client socket communication is shown in Figure 2.
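
The header and address-space figures above can be checked with a short sketch. It is written in Python rather than the Java used by the study and relies only on the standard `struct` and `ipaddress` modules. The field widths follow the IPv6 base-header layout in RFC 8200; the function names and default field values (for example `next_header=17`, i.e. UDP) are illustrative assumptions.

```python
import ipaddress
import struct

# Widths (in bits) of the six fixed IPv6 header fields other than the addresses (RFC 8200).
FIXED_FIELD_BITS = {
    "version": 4,
    "traffic_class": 8,
    "flow_label": 20,
    "payload_length": 16,
    "next_header": 8,
    "hop_limit": 8,
}
ADDRESS_BITS = 128  # each of the source and destination addresses


def pack_ipv6_header(src: str, dst: str, payload_length: int,
                     next_header: int = 17, hop_limit: int = 64,
                     traffic_class: int = 0, flow_label: int = 0) -> bytes:
    """Pack a 40-byte IPv6 base header (no extension headers)."""
    # First 32 bits: version (always 6), traffic class, flow label.
    first_word = (6 << 28) | (traffic_class << 20) | flow_label
    fixed = struct.pack("!IHBB", first_word, payload_length, next_header, hop_limit)
    return fixed + ipaddress.IPv6Address(src).packed + ipaddress.IPv6Address(dst).packed


def address_kind(addr: str) -> str:
    """Distinguish multicast from unicast IPv6 addresses.

    Anycast addresses are allocated from the unicast space, so their bits alone
    cannot distinguish them from unicast addresses.
    """
    return "multicast" if ipaddress.IPv6Address(addr).is_multicast else "unicast/anycast"


header = pack_ipv6_header("2001:db8::1", "2001:db8::2", payload_length=8)
print(sum(FIXED_FIELD_BITS.values()), len(header))  # 64 bits of fixed fields, 40-byte header
print(address_kind("ff02::1"), address_kind("2001:db8::1"))
```

The 40-byte total is the 64 bits of fixed fields plus two 128-bit addresses; options, when needed, travel in extension headers chained through `next_header`.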

Flow chart of server–client socket communication.

In a dual-stack system, the Java network stack creates IPv6 sockets first. In a mixed programming system, Java needs to create two sockets, one for IPv6 and one for IPv4. On the server side, both sockets remain open permanently [22]. On the client side, the protocol is chosen by resolving the IP address; once one IP protocol is adopted, the other socket is closed [23]. The most important step in Java socket programming is therefore obtaining and verifying the IP address. First, the server creates a ServerSocket instance and calls accept() to wait for a client to connect. After the connection is established, the server determines whether the client uses IPv6 or IPv4 and processes the corresponding data. In the perception layer, the IP network camera is controlled through the Common Gateway Interface (CGI), which executes compiled server-side programs [24]. The pan, tilt, and zoom (PTZ) camera used in this study supports multiple zoom levels and can be moved up, down, left, and right. In the face recognition system, Hypertext Transfer Protocol (HTTP) requests received by the web camera are handed over to the CGI program for processing, thereby realizing communication and interaction. Compression coding is an extremely important part of remote video surveillance: after the IP network camera collects video data, the data are compressed into Motion JPEG (M-JPEG) format and then transmitted to the face recognition system.
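
The accept-then-classify flow described above can be sketched as follows. The study's implementation uses Java's ServerSocket; this sketch instead uses Python's standard `socket.create_server` (Python 3.8+) with `dualstack_ipv6=True`, which assumes the operating system supports dual-stack sockets. On such a socket, IPv4 clients appear as IPv4-mapped IPv6 addresses (`::ffff:a.b.c.d`), so the protocol check has to unwrap them. The helper names `client_protocol` and `serve_once` are hypothetical.

```python
import ipaddress
import socket


def client_protocol(peer_host: str) -> str:
    """Return "IPv4" or "IPv6" for the host part of an accepted peer address."""
    # Drop an IPv6 zone index such as "%eth0" before parsing.
    ip = ipaddress.ip_address(peer_host.split("%", 1)[0])
    if ip.version == 6 and ip.ipv4_mapped is not None:
        return "IPv4"  # IPv4 client seen through a dual-stack IPv6 socket
    return "IPv4" if ip.version == 4 else "IPv6"


def serve_once(port: int = 0) -> None:
    """Accept one client on a dual-stack listener and report its protocol."""
    with socket.create_server(("", port), family=socket.AF_INET6,
                              dualstack_ipv6=True) as server:
        print("listening on port", server.getsockname()[1])
        conn, addr = server.accept()  # blocks until a client connects
        with conn:
            print(addr[0], "->", client_protocol(addr[0]))
            # Protocol-specific handling of the client's data would go here.
```

Using a single dual-stack listener, rather than one IPv4 and one IPv6 socket, is a design choice of this sketch; the two-socket arrangement described in the text works as well, with `client_protocol` applied to whichever socket accepted the connection.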

3.2 Face recognition system based on PCA

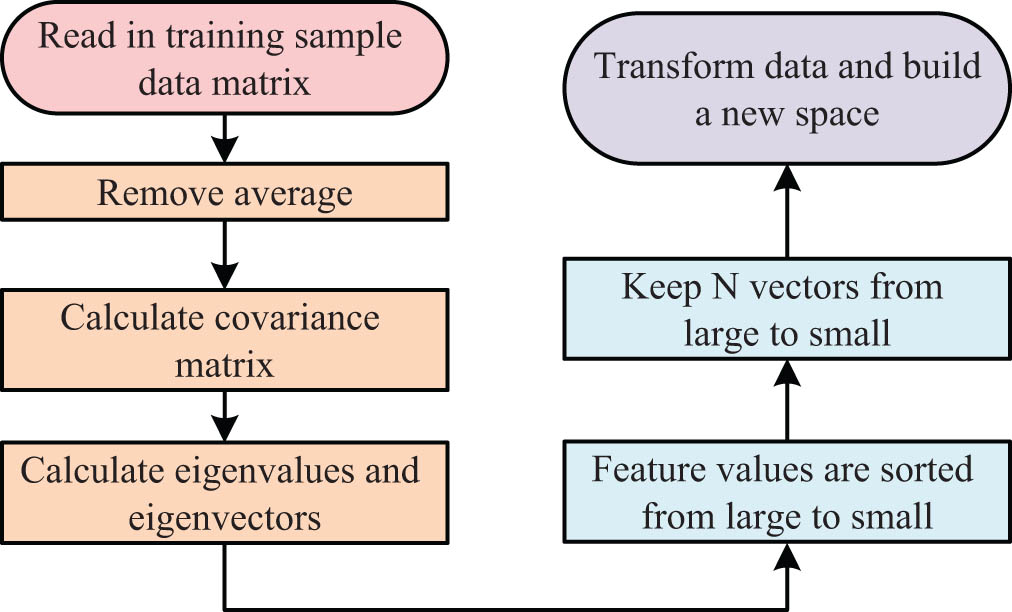

After the video data are collected by the IP network camera and compressed into pictures, a face recognition system is required for identification. Before recognition, the face images are geometrically normalized. The study uses a holistic method to extract face image features, because facial expressions are produced by facial muscle movements that change facial texture and shape. Viewed as a whole, this motion deforms the face significantly and strongly affects the global information of the static image, so facial expression features can be extracted holistically. Holistic feature extraction algorithms represent the face by extracting its macroscopic information. PCA is a linear feature extraction algorithm that can effectively extract the main features of high-dimensional data samples, and it is one of the main feature extraction and dimensionality reduction methods. As a fast and efficient linear algorithm, PCA has been successfully applied to facial feature extraction and data dimensionality reduction. However, PCA has three main deficiencies in face recognition [25]. First, its face recognition accuracy is not high. Second, because of the nonlinear characteristics of human faces, they are difficult for PCA to handle. Third, PCA has no classification ability of its own [26]. The existing PCA algorithm therefore needs to be optimized. The flow of the PCA algorithm is shown in Figure 3.
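
The text does not specify how geometric normalization is performed. A common approach, assumed here, aligns each face so that the line between the eye centres is horizontal and has a fixed length. The Python sketch below computes the rotation angle and scale from two eye positions and applies the resulting similarity transform to a point; the eye coordinates would come from a detector outside the scope of this example, and the function names are hypothetical.

```python
import math


def eye_alignment(left_eye, right_eye, target_distance):
    """Return (angle_deg, scale) that level the eye line and set its length."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    angle_deg = math.degrees(math.atan2(dy, dx))  # current tilt of the eye line
    scale = target_distance / math.hypot(dx, dy)
    return angle_deg, scale


def transform_point(point, center, angle_deg, scale):
    """Rotate point by -angle_deg about center, then scale its offset from center."""
    theta = math.radians(-angle_deg)
    x, y = point[0] - center[0], point[1] - center[1]
    xr = scale * (x * math.cos(theta) - y * math.sin(theta))
    yr = scale * (x * math.sin(theta) + y * math.cos(theta))
    return center[0] + xr, center[1] + yr


left, right = (10.0, 10.0), (20.0, 20.0)
angle, scale = eye_alignment(left, right, target_distance=30.0)
print(transform_point(right, left, angle, scale))  # right eye now level with the left eye
```

Applying the same transform to every pixel coordinate (in practice through an image library's affine warp) and cropping to a fixed size would yield faces of uniform orientation and scale before feature extraction.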

Flow chart of PCA algorithm.

PCA projects the data set from the high-dimensional space into a low-dimensional feature space, so that a small number of principal features represent most of the information in the original data set [27]. The projection of a vector onto a given direction is shown in equation (1).

where $x_j$ represents the vector.
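
As a sketch of the projection step and of the PCA flow in Figure 3, the code below assumes the standard PCA formulation: with sample mean $\bar{x}$ and unit principal directions $u_k$ (the leading eigenvectors of the sample covariance matrix), a vector $x_j$ is projected as $y_{jk} = u_k^\top (x_j - \bar{x})$. The eigenvectors are found by power iteration with deflation in plain Python, which is only practical for small feature vectors; for 112 × 92 face images one would normally use a numerical library and, as in the eigenfaces method, the smaller n × n Gram matrix instead of the d × d covariance. Function names are hypothetical.

```python
import math
import random


def pca_fit(samples, k, iters=500, seed=0):
    """Return (mean, components): the sample mean and top-k unit principal directions.

    samples: equal-length feature vectors (e.g. flattened, normalized face images).
    """
    n, d = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(d)]
    centered = [[s[j] - mean[j] for j in range(d)] for s in samples]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):  # power iteration: v <- Cv / ||Cv||
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
        components.append(v)
        # Deflation: remove the found direction so the next pass finds the next one.
        cov = [[cov[i][j] - eigenvalue * v[i] * v[j] for j in range(d)]
               for i in range(d)]
    return mean, components


def pca_project(x, mean, components):
    """Project x onto each component: y_k = u_k . (x - mean)."""
    return [sum(u[j] * (x[j] - mean[j]) for j in range(len(x))) for u in components]


# Toy 2-D data lying on the line y = 2x: one direction carries all the variance.
data = [(t, 2.0 * t) for t in range(-5, 6)]
mean, comps = pca_fit(data, k=1)
print(comps[0], pca_project((1.0, 2.0), mean, comps))
```

Eigenvectors are defined only up to sign, so the recovered direction may come out as either $(1, 2)/\sqrt{5}$ or its negative.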

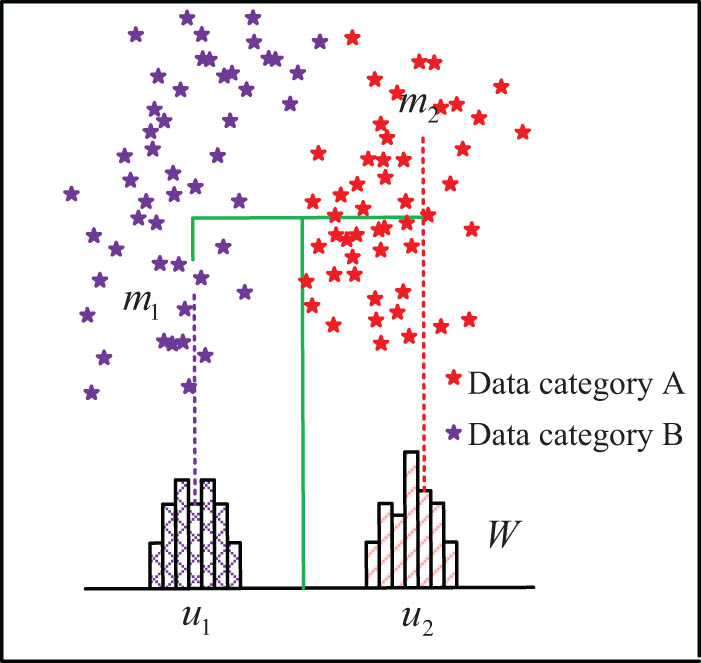

Schematic diagram of LDA algorithm projection.

In Figure 4, five-pointed stars of different colors represent different data categories.
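
The projection idea behind LDA can be illustrated with the two-class Fisher criterion, a simplification of the multi-class LDA applied to face identities: the direction $w \propto S_W^{-1}(m_a - m_b)$ maximizes the separation of the class means relative to the within-class scatter $S_W$. The sketch below is plain Python with a small Gauss-Jordan solver. The ridge term `reg`, added to keep $S_W$ invertible when there are too few samples per dimension, and all function names are assumptions rather than details from the study.

```python
import math


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fisher_direction(class_a, class_b, reg=1e-6):
    """Unit vector w proportional to S_W^-1 (m_a - m_b) for two classes."""
    d = len(class_a[0])
    means = [[sum(x[j] for x in cls) / len(cls) for j in range(d)]
             for cls in (class_a, class_b)]
    # Within-class scatter: sum over both classes of (x - m)(x - m)^T, plus a ridge.
    s_w = [[reg if i == j else 0.0 for j in range(d)] for i in range(d)]
    for cls, m in zip((class_a, class_b), means):
        for x in cls:
            for i in range(d):
                for j in range(d):
                    s_w[i][j] += (x[i] - m[i]) * (x[j] - m[j])
    w = solve(s_w, [means[0][j] - means[1][j] for j in range(d)])
    norm = math.sqrt(sum(v * v for v in w))
    return [v / norm for v in w]


def project(x, w):
    """Scalar projection of x onto the discriminant direction w."""
    return sum(xi * wi for xi, wi in zip(x, w))


a = [(0, 0), (1, 1), (-1, -1), (1, -1), (-1, 1)]
b = [(x + 5, y) for x, y in a]
w = fisher_direction(a, b)
print(w, [round(project(x, w), 2) for x in a + b])
```

After projection onto `w`, the two classes occupy disjoint intervals on a single axis, which is the separation Figure 4 illustrates.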

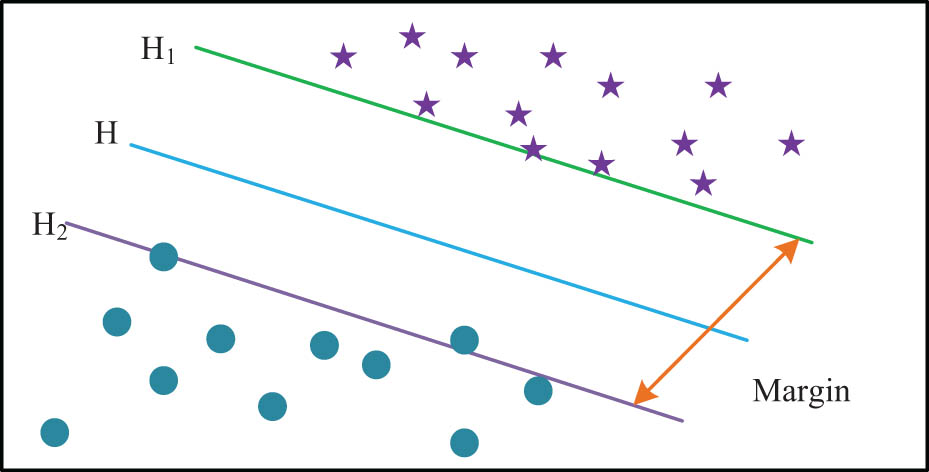

Classification diagram of SVM algorithm.

In Figure 5, different shapes represent different training samples; H1 and H2 are the planes parallel to the optimal hyperplane that pass through the training samples nearest to it, and the distance between them is the classification margin. The equations of H1 and H2 are given in equation (14).
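
The sketch below assumes the standard soft-margin linear SVM, in which the optimal hyperplane is $w^\top x + b = 0$, H1 and H2 are $w^\top x + b = +1$ and $w^\top x + b = -1$, and the margin between them is $2/\lVert w \rVert$. It trains the classifier by full-batch subgradient descent on the regularized hinge loss in plain Python; the step schedule, the regularization weight `lam`, and the function names are illustrative choices, not the study's settings. For the multi-class face identities in the experiments, such binary classifiers would typically be combined, for example one-versus-rest; that step is omitted here.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(xs, ys, lam=0.01, epochs=2000, lr=0.5):
    """Minimize lam/2*||w||^2 + mean(max(0, 1 - y*(w.x + b))) with labels y in {-1, +1}."""
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for t in range(1, epochs + 1):
        step = lr / math.sqrt(t)  # decaying step size
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for x, y in zip(xs, ys):
            if y * (dot(w, x) + b) < 1:  # sample lies inside the margin: hinge is active
                for j in range(d):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - step * gj for wj, gj in zip(w, grad_w)]
        b -= step * grad_b
    return w, b


def predict(x, w, b):
    """Which side of the hyperplane w.x + b = 0 the sample falls on."""
    return 1 if dot(w, x) + b >= 0 else -1


def margin(w):
    """Distance between H1 (w.x + b = 1) and H2 (w.x + b = -1)."""
    return 2.0 / math.sqrt(dot(w, w))


xs = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
ys = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(xs, ys)
print(w, b, margin(w), [predict(x, w, b) for x in xs])
```

Only samples with $y(w^\top x + b) < 1$ contribute to the hinge gradient; at convergence these are the support vectors lying on or inside H1 and H2.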

4 Analysis of the effect of remote monitoring face recognition under the IPv6 protocol technology of the IoT architecture

To verify the accuracy of the proposed PLV algorithm for remote monitoring face recognition, the algorithm was first applied to the Olivetti Research Laboratory (ORL) and Yale face databases. ORL contains images of 40 subjects, 10 per person and 400 in total, each 112 × 92 pixels. Yale contains 165 images, 11 per person, each 100 × 100 pixels. The experimental platform has a dual-core, four-thread Core i3-2370M CPU with a main frequency of 2.4 GHz and 8 GB of memory, runs Windows 7 Ultimate 64-bit, and uses MATLAB 2017a as the simulation software. In both face databases, the first 3, 4, 5, 6, or 7 images of each person are selected as the training set, and the remaining images are used as the test set. This gives five groups of experiments, recorded as samples 1, 2, 3, 4, and 5, respectively. The training set accounts for 70%, and the test set accounts for 30%. The numbers of test and training samples in the five groups of experiments are shown in Table 1.

Number of selected test and training samples (images)

| Face database | Sample 1: tests | Sample 1: training | Sample 2: tests | Sample 2: training | Sample 3: tests | Sample 3: training | Sample 4: tests | Sample 4: training | Sample 5: tests | Sample 5: training |
|---|---|---|---|---|---|---|---|---|---|---|
| Yale face database | 120 | 45 | 105 | 60 | 90 | 75 | 75 | 90 | 60 | 105 |
| ORL face database | 280 | 120 | 240 | 160 | 200 | 200 | 160 | 240 | 120 | 280 |
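
The sample counts in Table 1 follow directly from the per-person split. The Python sketch below reproduces them, assuming 15 subjects with 11 images each for Yale (165 images in total) and 40 subjects with 10 images each for ORL; the helper names are hypothetical, and `first_k_split` shows how the first k images of each person would be assigned to training.

```python
def split_counts(num_subjects, images_per_subject, train_per_subject):
    """Return (test, training) counts when the first k images per person are for training."""
    train = num_subjects * train_per_subject
    test = num_subjects * (images_per_subject - train_per_subject)
    return test, train


def first_k_split(images_by_subject, k):
    """Split {subject: [image ids in order]} into (train, test) lists of (subject, id)."""
    train, test = [], []
    for subject, ids in images_by_subject.items():
        train.extend((subject, i) for i in ids[:k])
        test.extend((subject, i) for i in ids[k:])
    return train, test


for name, subjects, per_subject in (("Yale", 15, 11), ("ORL", 40, 10)):
    row = [split_counts(subjects, per_subject, k) for k in range(3, 8)]
    print(name, row)  # (test, training) for samples 1-5
```

For Yale, samples 2 and 5 come out as (105, 60) and (60, 105), so each pair of counts adds up to the 165 images in the database.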

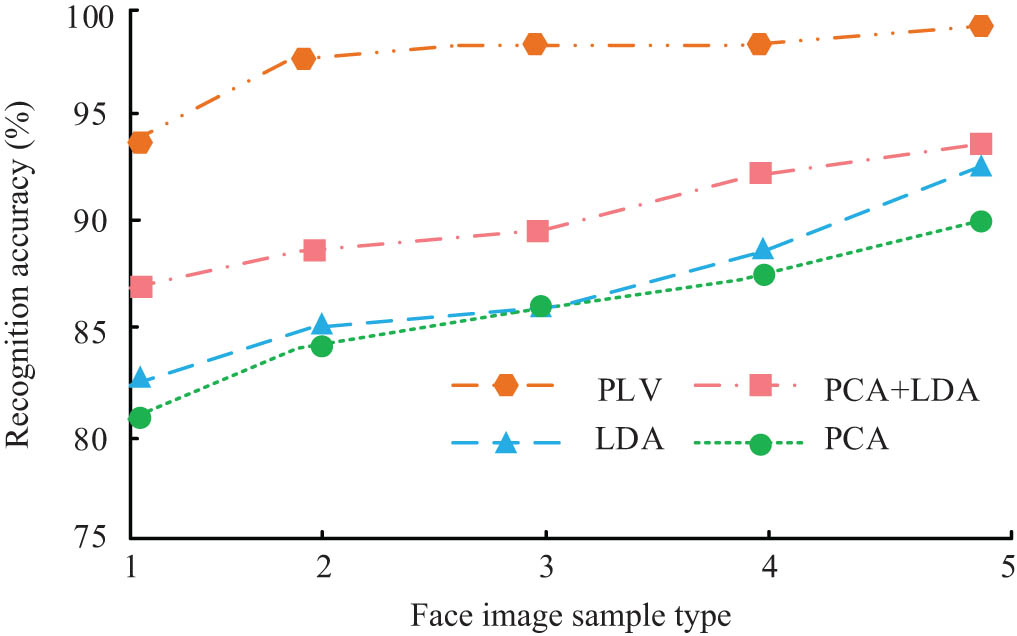

The algorithm is then compared with the PCA, LDA, and PCA + LDA algorithms. To ensure reliable results, each group of experiments is run six times. The experimental results on the ORL database are shown in Figure 6.

Recognition results of four algorithms in ORL database.

Figure 6 shows that the face recognition accuracy of all four algorithms rises across the five sample groups. The PCA algorithm has a highest accuracy of 87% and a lowest of 81%, the lowest among the four algorithms. The LDA and PCA + LDA algorithms have highest accuracies of 89 and 91% and lowest accuracies of 83 and 87%, respectively, while the accuracy of the PLV algorithm ranges from 94 to 98%. Across the five groups of samples, the accuracy of PLV remains stable and higher than that of the other three algorithms. The results on the Yale database are shown in Figure 7.

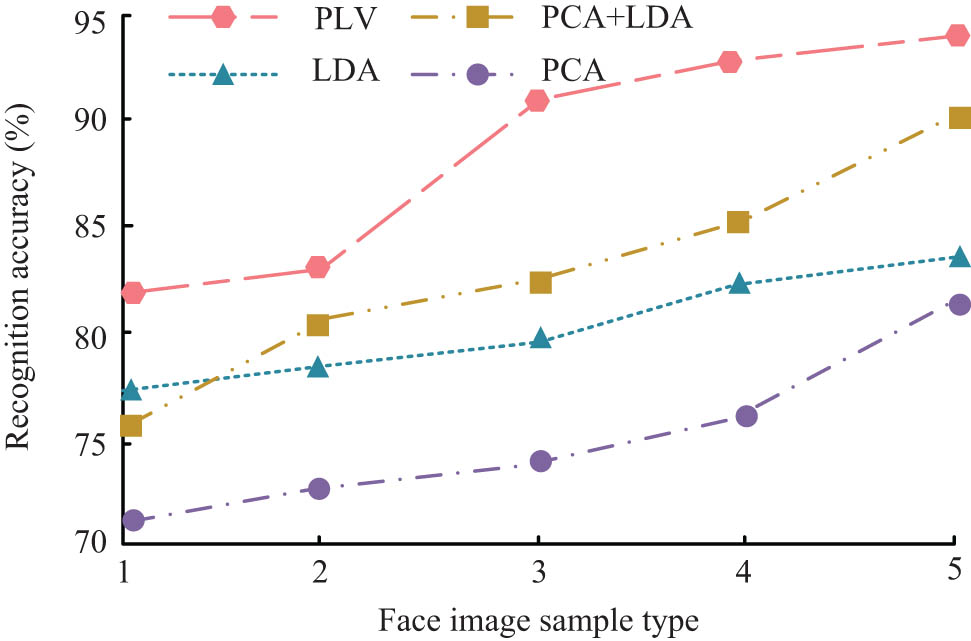

Recognition results of four algorithms in Yale database.

As Figure 7 shows, on the Yale database the face recognition accuracy of the PCA algorithm is at most 78% and at least 72%. The highest accuracies of the PCA + LDA and LDA algorithms are 89 and 81%, respectively, while the proposed PLV algorithm reaches 93%, up to 15, 12, and 15% higher than PCA, LDA, and PCA + LDA, respectively. Its recognition rate also gradually stabilizes, improving the accuracy of face recognition. To further compare the face recognition performance of the four algorithms, the Georgia Tech (GT) database is selected; it contains 750 images of 50 people, with 15 color images per person in different poses and expressions. The comparison follows the method described above, with recognition time added as a second metric, and the results are shown in Figure 8.

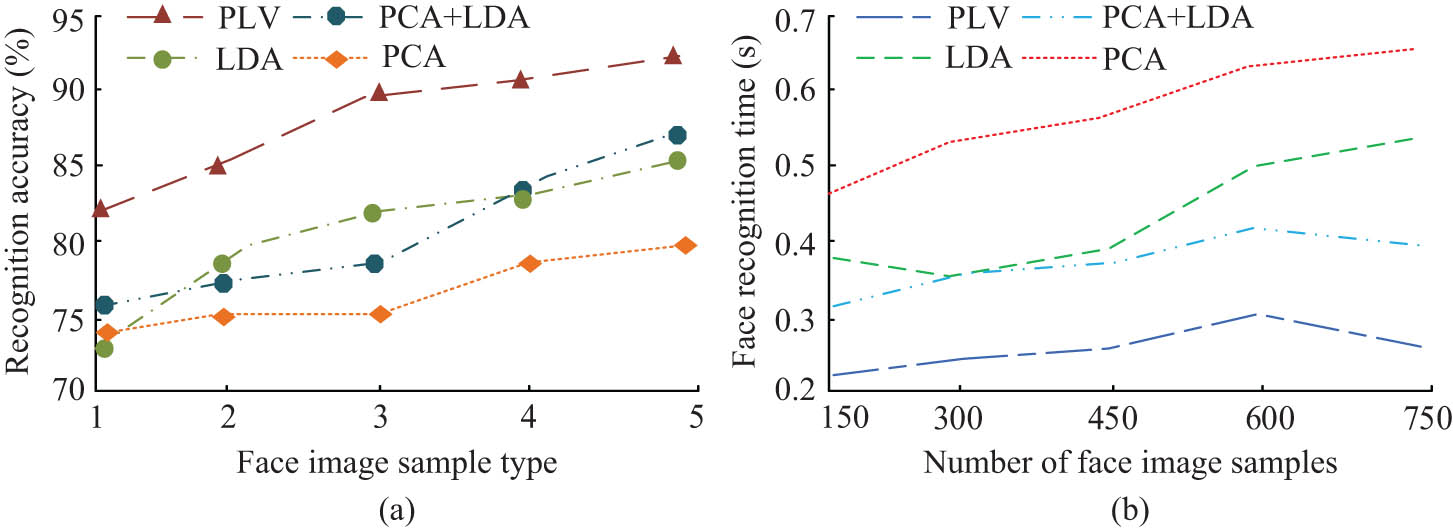

Recognition results of four algorithms in GT database. (a) Accuracy results under database GT. (b) Identification time change results under database GT.

Figure 8(a) shows the face recognition accuracy on the GT database, and Figure 8(b) shows the recognition time. As Figure 8(a) shows, among the three baseline algorithms PCA + LDA has the highest recognition accuracy: the highest accuracies of PCA, LDA, and PCA + LDA are 76, 81, and 83%, respectively, while PLV reaches 92% and its lowest value remains above 80%. As Figure 8(b) shows, the recognition time of the PCA algorithm gradually increases with the number of face image samples, ranging from 0.4 to 0.7 s, the longest among the four algorithms. The recognition time of the LDA algorithm generally shows an upward trend, with a maximum of 0.5 s. The time required by the PCA + LDA algorithm fluctuates between 0.3 and 0.4 s; the difference between it and LDA is small, and both are faster than PCA. The recognition time of the proposed PLV algorithm fluctuates the least, peaking at 0.3 s when the number of face samples is 600 with a minimum close to 0.2 s; it is lower than that of the other algorithms, giving PLV the highest efficiency. Finally, the four algorithms are applied to face recognition in remote monitoring. The selected sample data are 800 face images collected by remote monitoring, randomly divided into eight groups of 100 images each. The results are shown in Figure 9.

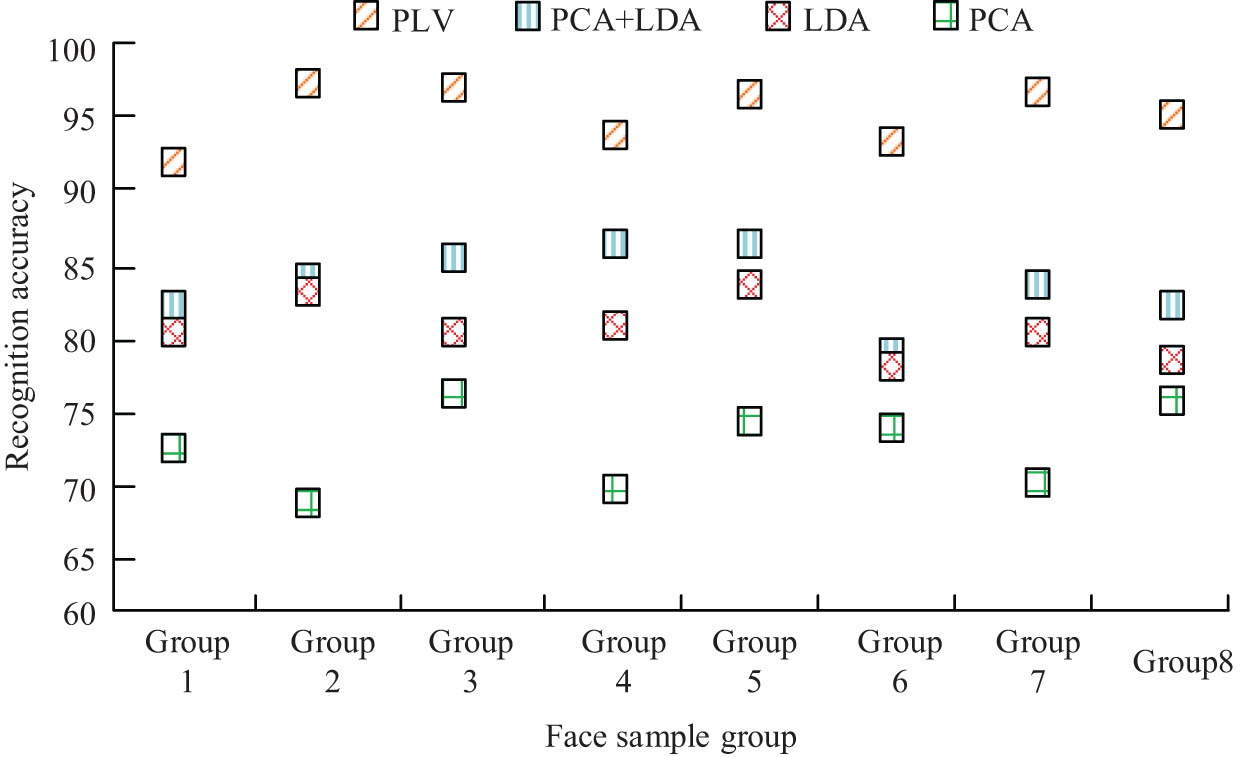

Recognition results in the selected face sample database.

As Figure 9 shows, on the selected sample database the accuracy of the PCA algorithm is low, with a peak of 76%, and the peak accuracies of the LDA and PCA + LDA algorithms are 84 and 85%, respectively. The main reason is that face images captured by remote monitoring suffer some information loss. The peak accuracy of the PLV algorithm reaches 98%, and its accuracy remains above 90% across all eight groups of face samples. This is mainly attributed to the added SVM classifier, which further improves the classification of face samples and the recognition accuracy.

5 Conclusions

In the IoT era, monitoring technology is developing towards networking and intelligence, and face recognition has become a key problem for further breakthroughs. Based on an analysis of the IoT architecture, this study applies IPv6 technology to video data collection, introduces the LDA algorithm to improve PCA, and combines the result with SVM to form the PLV algorithm, which is applied to face recognition in remote monitoring. The experimental results show that on the ORL data set, the highest accuracies of PCA, LDA, and PCA + LDA are 87, 89, and 91%, respectively, while the proposed PLV algorithm reaches 98%. On the Yale database, the PLV algorithm is 15, 2, and 12% higher than PCA, PCA + LDA, and LDA, respectively. On the GT face database, the recognition times of PCA and PCA + LDA fluctuate in the ranges of 0.4–0.7 s and 0.3–0.4 s, respectively, while the longest recognition time of the PLV algorithm is 0.3 s, at 600 face images, and its overall time requirement is lower than that of the other three methods. In face recognition under remote monitoring, the peak accuracies of PCA + LDA, LDA, and PCA are 85, 84, and 76%, respectively, while PLV reaches 98%, showing high accuracy and operational efficiency for face recognition under remote monitoring. However, the number of remote monitoring samples used in this study is still small, so the sample range needs to be expanded to further verify the accuracy of the results.

- Funding information: This article has not received funding.

- Author contributions: The author independently completed all versions of the manuscript, from the first draft to the revised draft.

- Conflicts of interest: The authors declare that this article is free of conflicts of interest.

- Data availability statement: The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

[1] Huang X. Intelligent remote monitoring and manufacturing system of production line based on industrial Internet of Things. Comput Commun. 2020;150(2):421–8. 10.1016/j.comcom.2019.12.011.

[2] Mellit A, Benghanem M, Herrak O, Messalaoui A. Design of a novel remote monitoring system for smart greenhouses using the Internet of Things and deep convolutional neural networks. Energies. 2021;14(16):5045–51. 10.3390/en14165045.

[3] Sharma L. An improved local binary patterns histograms technique for face recognition for real time applications. Int J Recent Technol Eng. 2019;8(27):524–9. 10.35940/ijrte.B1098.0782S719.

[4] Larrain T, Bernhard JS, Mery D, Bowyer KW. Face recognition using sparse fingerprint classification algorithm. IEEE Trans Inf Forensics Secur. 2019;12(7):1646–57. 10.1109/TIFS.2017.2680403.

[5] Neto LB, Grijalva F, Maike VR, Martini LC, Florencio D, Baranauskas MC, et al. A Kinect-based wearable face recognition system to aid visually impaired users. IEEE Trans Human-Machine Syst. 2017;47(1):52–64. 10.1109/THMS.2016.2604367.

[6] Ibrayev T, Myrzakhan U, Krestinskaya O, Irmanova A, James AP. On-chip face recognition system design with memristive hierarchical temporal memory. J Intell Fuzzy Syst. 2018;34(3):1393–402. 10.3233/JIFS-169434.

[7] Chaudhry S, Chandra R. Face detection and recognition in an unconstrained environment for mobile visual assistive system. Appl Soft Comput. 2018;53(1):168–80. 10.1016/j.asoc.2016.12.035.

[8] Okokpujie K, John SN. Development of an illumination invariant face recognition system. Int J Adv Trends Comput Sci Eng. 2021;9(5):9215–20. 10.30534/ijatcse/2020/331952020.

[9] Rashid SJ, Abdullah AI, Shihab MA. Face recognition system based on Gabor wavelets transform, principal component analysis and support vector machine. Int J Adv Sci Eng Inf Technol. 2020;10(3):959–63. 10.18517/ijaseit.10.3.8247.

[10] Tamilselvi M, Karthikeyan S. An ingenious face recognition system based on HRPSM_CNN under unrestrained environmental condition. Alex Eng J. 2022;61(6):4307–21. 10.1016/j.aej.2021.09.043.

[11] Gunawan KW, Halimawan N. Lightweight end to end pose-robust face recognition system with deep residual equivariant mapping. Procedia Comput Sci. 2021;179(2):648–55. 10.1016/j.procs.2021.01.051.

[12] Abdurrahman MH, Darwito HA, Saleh A. Face recognition system for prevention of car theft with Haar Cascade and Local Binary Pattern Histogram using Raspberry Pi. EMITTER Int J Eng Technol. 2020;8(2):407–25. 10.24003/emitter.v8i2.534.

[13] Liu Z, Zhang H, Wang S, Hong W, Ma J, He Y. Reliability evaluation of public security face recognition system based on continuous Bayesian network. Math Probl Eng. 2020;2020(3):1–9. 10.1155/2020/6287394.

[14] Nagajayanthi B. Decades of Internet of Things towards twenty-first century: A research-based introspective. Wirel Personal Commun. 2022;123(4):3661–97. 10.1007/s11277-021-09308-z.

[15] Huu PN, Bao LH, The HL. Proposing an image enhancement algorithm using CNN for applications of face recognition system. J Adv Math Comput Sci. 2020;6(6):1–14. 10.9734/jamcs/2019/v34i630230.

[16] Saini R, Kumar P, Kaur B, Roy PP, Dogra DP, Santosh KC. Kinect sensor-based interaction monitoring system using the BLSTM neural network in healthcare. Int J Mach Learn Cybern. 2018;10(9):2529–40. 10.1007/s13042-018-0887-5.

[17] Elngar AA, Kayed M. Vehicle security systems using face recognition based on Internet of Things. Open Comput Sci. 2020;10(1):17–29. 10.1515/comp-2020-0003.

[18] Kwon GB. Implementation of communication between controlLogix (PLC) and Raspberry Pi based on EtherNet/IP Protocol. Trans Korean Inst Electr Eng. 2020;69(8):1286–94. 10.5370/KIEE.2020.69.8.1286.

[19] Thubert P, Winter T, Brandt A, Hui JW. RPL: IPv6 routing protocol for low-power and lossy networks. Internet Req Comment. 2019;6550(5):853–61.

[20] AbualhajMosleh MM, Al-Khatib SN. A new method to boost VoIP performance over IPv6 networks. Transp Telecommun J. 23(1):62–72. 10.2478/ttj-2022-0006.

[21] Deng X. Multiconstraint fuzzy prediction analysis improved the algorithm in Internet of Things. Wirel Commun Mob Comput. 2021;2021(5):1–7. 10.1155/2021/5499173.

[22] Rossion B, Lochy A. Is human face recognition lateralized to the right hemisphere due to neural competition with left-lateralized visual word recognition? A critical review. Brain Struct Funct. 2022;227(2):599–629. 10.1007/s00429-021-02370-0.

[23] Vu HN, Nguyen MH, Pham C. Masked face recognition with convolutional neural networks and local binary patterns. Appl Intell. 2022;52(5):5497–512. 10.1007/s10489-021-02728-1.

[24] Sharma S, Verma VK. An integrated exploration on internet of things and wireless sensor networks. Wirel Personal Commun. 2022;124(3):2735–70. 10.1007/s11277-022-09487-3.

[25] Karanwal S. A comparative study of 14 state of art descriptors for face recognition. Multimed Tools Appl. 2021;80(8):12195–234. 10.1007/s11042-020-09833-2.

[26] Zhu YN, Zhu CY, Li XX. Improved principal component analysis and linear regression classification for face recognition. Signal Process: Off Publ Eur Assoc Signal Processing (EURASIP). 2018;145(3):175–82. 10.1016/j.sigpro.2017.11.018.

[27] Shen Y, Ke J. Sampling based tumor recognition in whole-slide histology image with deep learning approaches. IEEE/ACM Trans Comput Biol Bioinforma. 2021;19(4):2431–41. 10.1109/TCBB.2021.3062230.

[28] Khosravy M, Nakamura K, Hirose Y, Nitta N, Babaguchi N. Model inversion attack: analysis under gray-box scenario on deep learning based face recognition system. KSII Trans Internet Inf Syst (TIIS). 2021;15(3):1100–18. 10.3837/tiis.2021.03.015.

[29] Sanin C, Haoxi Z, Shafiq I, Waris MM, de Oliveira CS, Szczerbicki E. Experience based knowledge representation for Internet of Things and Cyber Physical Systems with case studies. Future Gener Computer Syst. 2019;92(8):604–16. 10.1016/j.future.2018.01.062.

© 2023 the author(s), published by De Gruyter

This work is licensed under the Creative Commons Attribution 4.0 International License.
