Abstract

Classifier predicates, also known as depicting verbs, are complex signs used to describe motion or localization events in sign languages. Every component of a classifier predicate bears its own meaning: the handshape refers to the semantic class of the referent (e.g., human, car, round object), while the trajectory of the motion, its manner, and the localization of the sign iconically represent the described event. In this exploratory study, we compare the factors influencing the choice between the classifier 1- and 2-handshapes for anthropomorphic referents in two sign languages, Russian Sign Language (RSL) and Sign Language of the Netherlands (NGT), using data from two parallel subcorpora of cartoon retellings. The findings reveal that both languages use both classifiers for human(-like) referents, but the proportions of 1-handshape and 2-handshape use differ. Additionally, we identified various morphological, syntactic, and semantic factors that might influence the choice between the two handshapes. Some of these factors have a similar effect in both sign languages, while others influence the choice between the handshapes in different, often contrasting ways. This observation highlights the linguistic status of whole-entity classifier handshapes for anthropomorphic referents despite the high level of iconicity of classifier constructions.

1 Introduction

Classifier predicates in sign languages are complex signs used to describe motion or localization events. Every component of a classifier predicate bears its own meaning: the handshape refers to the semantic class of the referent (e.g., human, car, round object), while the trajectory of the motion, its manner, and the localization of the sign iconically represent the described event. In this study, we focus on whole-entity classifiers, that is, classifier constructions in which the handshape as a whole refers to entities of a particular semantic class. The literature on sign language classifiers also covers other types of classifier constructions (e.g., size-and-shape specifiers and handling classifiers; Zwitserlood 2012), which are not discussed in the present study.

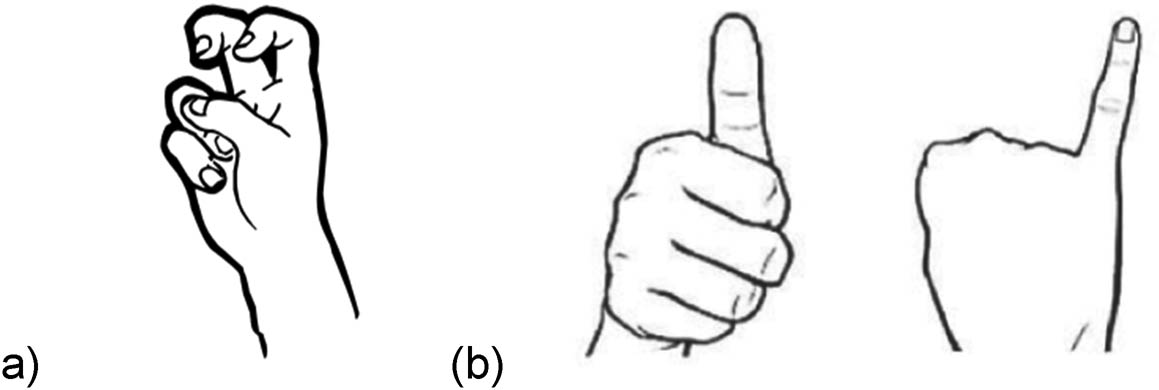

Research shows that the inventories of classifier handshapes differ across sign languages, both in the handshapes themselves and in the semantic classes of referents they distinguish (Zwitserlood 2012). In this article, we focus on yet another facet of classifier diversity, namely synonymous classifier handshapes, that is, different handshapes referring to a single semantic class of referents. Specifically, we investigate two classifier handshapes denoting anthropomorphic referents (e.g., humans, fictional human-like animals): the handshape with the extended index finger pointing upward, representing the upright human torso (henceforth 1-handshape; Figure 1a), and the handshape with the extended index and middle fingers pointing downward, representing legs (2-handshape; Figure 1b).

While many sign languages use both handshapes to encode anthropomorphic referents in classifier constructions, the factors influencing the choice between handshapes remain unclear.

In this study, we compare the factors influencing the choice between the 1-handshape and the 2-handshape for anthropomorphic referents in two sign languages, Russian Sign Language (RSL) and Sign Language of the Netherlands (NGT), using data from two parallel subcorpora of cartoon retellings. We find that both languages use both classifiers for human(-like) referents, but the proportions of 1-handshape and 2-handshape use differ. Additionally, we found that various morphological, syntactic, and semantic factors influence the choice between the two handshapes. Some of these factors have a similar effect in both sign languages, while others influence the choice between the handshapes in different, often contrasting ways. This observation highlights the linguistic status of classifier handshapes despite the high level of iconicity of classifier constructions.

2 Classifier handshapes in sign languages

The formal analysis of classifier constructions, and the very use of the term ‘classifier’ for this sign language phenomenon, have been at the center of a heated debate since the earliest stages of sign language research. Starting with the pioneering work by Frishberg (1975), followed by Supalla (1982, 1986), Meir (2001), and many others, sign language researchers have studied these highly iconic complex signs in terms of their similarities to and differences from spoken language phenomena. The term ‘classifier’ was initially inspired by spoken language classifier morphemes, which, like classifier handshapes in sign languages, refer to the semantic class of the referent.[1] Consider example (1) from Imonda (Papuan), where a classifier morpheme attached to the verb ‘give’ specifies the class of the object (a flat object vs a small animal).

(1) Imonda (Papuan; Seiler 1985, 120–1)

| | | | |
|---|---|---|---|
| a. | maluõ | ka-m | lëg-ai-h-u |
| | clothes | 1sg-obj | clf:flat.object-give-ben-imp |
| | “Give me a piece of clothing!” | | |
| b. | tõbtõ | ka-m | u-ai-h-u |
| | fish | 1sg-obj | clf:small.animal-give-ben-imp |
| | “Give me the fish!” | | |

Using typological spoken language data as a starting point, scholars of sign languages have been putting forward a variety of theories of how classifiers integrate into the grammar of sign language. Classifier handshapes have thus been analyzed as noun incorporation (Zwitserlood 2003), pronominal arguments (Baker 1996), argument-introducing functional heads (Benedicto and Brentari 2004), agreement markers (Kimmelman et al. 2019), and predicate modifiers (Kimmelman and Khristoforova 2018).

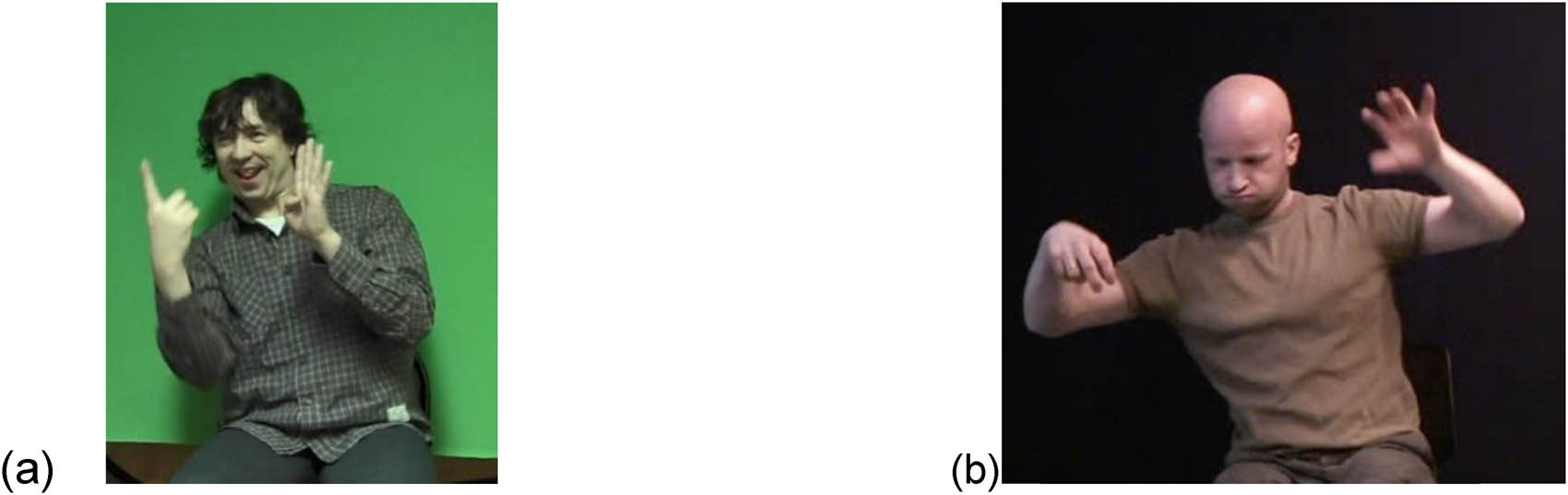

Importantly, classifier handshapes differ across sign languages both in their form and in the semantic classes they represent. For instance, the classifier handshapes for vehicles in RSL, NGT, and American Sign Language (ASL) have distinctly different forms, as shown in Figure 2 (Khristoforova 2017, Zwitserlood 2003, Supalla 1982).

ASL also has a distinct handshape for small animals, illustrated in Figure 3a, whereas RSL and NGT, for instance, do not treat small animals as a separate semantic class and use the 1- and 2-handshapes (Figure 1) for all animate referents. Japanese, Korean, and Taiwan Sign Languages famously employ gender classifiers, distinguishing between classifier handshapes for male referents (extended thumb) and female referents (extended pinky) (Figure 3b shows an example from Korean Sign Language; McBurney 2002, Fischer and Gong 2010, Nam and Byun 2022), while no sign language in Europe has been found to mark gender distinctions in its classifier system.

Figure 3: Classifier handshapes for (a) small animals in ASL and (b) male (left) and female (right) referents in Korean Sign Language (Nam and Byun 2022, 73).

In parallel with the studies building on the comparison between classifier constructions in spoken and signed languages, a different group of sign language researchers has argued against applying spoken language notions and terms to the construction under discussion (Cogill-Koez 2000, Liddell 2003, Schembri 2003, Johnston and Schembri 2007, Cormier et al. 2012, Beukeleers and Vermeerbergen 2022, Kurz et al. 2023). Instead, some scholars suggest terms such as ‘polycomponential verbs’ (Slobin et al. 2001, Schembri 2003) or ‘depicting verbs’ (Liddell 2003, Cormier et al. 2012), thereby emphasizing the need to analyze these constructions in their own right without alluding to spoken language phenomena, or at least to acknowledge the modality-specific and gestural features of such constructions. Observing similarities between classifier constructions and the gestures used by hearing speakers (Schembri et al. 2005, Quinto‐Pozos and Parrill 2015, Cormier et al. 2012), some of these researchers further conclude that classifier constructions can be analyzed as a combination of morphological and gestural elements. In this sense, they resemble multimodal (gesture + speech) constructions in spoken languages, with the difference that they are unimodal, produced entirely in the visual modality (see Schembri et al. 2018 for a similar proposal for analyzing agreeing/indicating verbs in sign languages).

Specifically, studies comparing the gestures of non-signers with classifier constructions produced by signers reveal considerable overlap in the movement patterns used by both groups. At the same time, the handshapes used by gesturers and by signers of different sign languages diverge significantly. This contrast between the uniformity of movement patterns and the diversity of handshapes tentatively suggests that different components of classifier constructions may be integrated into the grammatical system of the language to different degrees, and hence may call for different analyses in terms of gestural elements vs morphemes.

Alongside the typological diversity of classifier handshapes illustrated above, the current study thus provides yet another argument in favor of a morphological analysis of the handshape component of classifier constructions by zooming in on the synonymous handshapes for human referents in NGT and RSL (Figure 1), observing differences and similarities between the two sign languages, and examining the linguistic factors underlying the choice between the handshapes.

3 Methods

3.1 The data sets

To investigate the properties of the classifier 1-handshape and 2-handshape, we analyzed two parallel subcorpora from the online RSL corpus (Burkova 2015) and the Corpus NGT (Crasborn et al. 2008). Both subcorpora consist of retellings of eight short episodes of the ‘Canary Row’ cartoon (Freleng 1950), which often serves as elicitation material in sign language research (Kimmelman et al. 2019, Quadros et al. 2020, Loos and Napoli 2023) and in gesture studies (Kita and Özyürek 2003). This makes it convenient for comparative typological research across sign languages and beyond.

The Canary Row subcorpus of the online RSL corpus contains 49 retellings recorded from 13 native RSL signers (aged 29–60, 7 female), primarily residing in Moscow. Each signer retold at most four of the eight cartoons. Thus, the whole story (eight cartoons) was retold six times.

The Canary Row data set from the Corpus NGT analyzed for the current study contains 73 retellings recorded from 20 signers from the Amsterdam region (aged 17–81, 12 female). Each signer retold at most four of the eight cartoons. Thus, the whole story (eight cartoons) was retold ten times.

3.2 Annotation

For both languages, we followed the annotation guidelines jointly developed within the project ‘Whole-Entity Classifiers in Sign Languages: A Multiperspective Approach’. The project aimed to investigate how whole-entity classifier handshapes function across different sign languages, including the factors that influence classifier handshape choice. The members of the core working group developed the guidelines on the basis of existing research and a new exploration of corpus data; the guidelines were then used by the project members, as well as by other teams working on different sign languages, to ensure consistent cross-linguistic comparison.

The guidelines provide unified instructions on which classifier handshapes to annotate, how to distinguish classifier predicates from lexical signs, and how to annotate different variants of the forms of 1-handshape and 2-handshape. The guidelines also provide a list of factors that may influence the use of one of the handshapes. In what follows, we summarize the main points outlined in the guidelines. The full guidelines can be accessed at: https://osf.io/m7kt9/.

3.2.1 Exclusion criteria

For this study, we annotated only constructions involving the 1-handshape and the 2-handshape (including their variants; see Section 3.2.2) that refer to anthropomorphic referents, that is, humans and human-like creatures (e.g., animals endowed with human-like properties, usually in a fictional context). Note that both handshapes can also be used for non-anthropomorphic referents, specifically for thin oblong objects, but those cases are excluded from the current analysis.

As the study focuses on classifier constructions and their properties, it is important to distinguish classifier constructions from lexical signs. This is not a trivial task, since many lexical signs look superficially similar to classifier constructions, which may be attributed to the lexicalization of classifier constructions (Supalla 1982, Johnston and Schembri 1999; see Zwitserlood 2003 for an alternative analysis). Below we list the criteria for distinguishing lexical signs from classifier constructions, with examples.

Note that our criteria, while based on previous research, are primarily designed as a methodological tool for the practical task of selecting and annotating the relevant classifier predicates. For this reason, not all differences between lexical and productive signs are considered. For example, Johnston and Schembri (1999, 136) also discuss that productive signs may not obey all phonological constraints found in lexemes, that there is less dialectal variation in these signs, and that the signer is less likely to make eye contact with the addressee and more likely to look at the hands. These observations can be tested and analyzed using our data set in future studies.

![Figure 4: Lexical sign DIE in RSL](/document/doi/10.1515/opli-2025-0047/asset/graphic/j_opli-2025-0047_fig_004.jpg)

Figure 4: Lexical sign DIE in RSL. RSLM-s1-s13-a11 [00.03.15:100–00.03.16:450].

![Figure 5: Lexical sign FALL in RSL](/document/doi/10.1515/opli-2025-0047/asset/graphic/j_opli-2025-0047_fig_005.jpg)

Figure 5: Lexical sign FALL in RSL. RSLM-s1-s14-a15 [00.06.29:430–00.06.29:930].

First, the handshape must refer to the moving or localized referent. Consider the RSL verbal sign DIE (Figure 4), which resembles, and is potentially derived from, a classifier construction. The sign involves the 2-handshape with a downward hand motion starting at the shoulder on the side of the non-dominant hand, accompanied by a wrist rotation. An observer unfamiliar with RSL might interpret the sign as a classifier construction, given that a hand with the 2-handshape moves. However, the lexical meaning of this sign implies neither movement nor a specific location of the referent. Hence, the sign is excluded from the analysis.

Second, the movement must be interpretable depictively. If the sign in question, unlike DIE, does have a movement-related meaning, it can only be considered a classifier construction if the hand motion iconically represents the described motion event. Consider, for instance, the RSL sign FALL (Figure 5; a similar sign is also used in NGT), which shares several formal properties with DIE: it also involves the 2-handshape and a downward hand motion with wrist rotation. Unlike DIE, FALL is articulated in the neutral signing space (i.e., in front of the signer’s chest) and can optionally involve the second hand representing the ground. Although it denotes a motion event, FALL does not fulfill the iconicity criterion, because its wrist rotation need not represent the trajectory of the described movement: the sign is used in the same fixed form regardless of the actual trajectory. Like DIE, FALL is therefore not analyzed as a classifier construction.

The cases of FALL and DIE are fairly straightforward to analyze. Matters get more complicated when the described movement and the hand movement merely happen to coincide, rather than being deliberately mapped onto each other. Consider the RSL sign MEET (Figure 6; a similar sign is also used in NGT), in which both hands, each with a 1-handshape, approach each other in a straight line toward the center of the neutral signing space. This sign can have the generalized lexical meaning ‘to meet’, which applies to any event in which two anthropomorphic referents meet, regardless of the movement involved. If the meeting event involves a non-straight motion path or no motion at all, the sign violates the iconicity criterion and is excluded from the analysis.

![Figure 6: Lexical sign MEET in RSL](/document/doi/10.1515/opli-2025-0047/asset/graphic/j_opli-2025-0047_fig_006.jpg)

Figure 6: Lexical sign MEET in RSL. RSLM-s3-s15-a14 [00.00.44:450–00.44:460].

However, if two anthropomorphic referents do approach each other in a straight line, we cannot tell in that specific context whether the sign is used as a classifier construction or as a lexical sign. As specified in Section 3.2.3, such cases receive the label ‘congruent’ on the tier CLP congruence and are analyzed together with the rest of the data.

Upon application of the criteria, we identified a total of 286 classifier constructions for NGT and 330 for RSL. Below, we specify how different handshape variants were annotated (Section 3.2.2) and which factors were described for each annotation entry (Section 3.2.3) according to the guidelines.

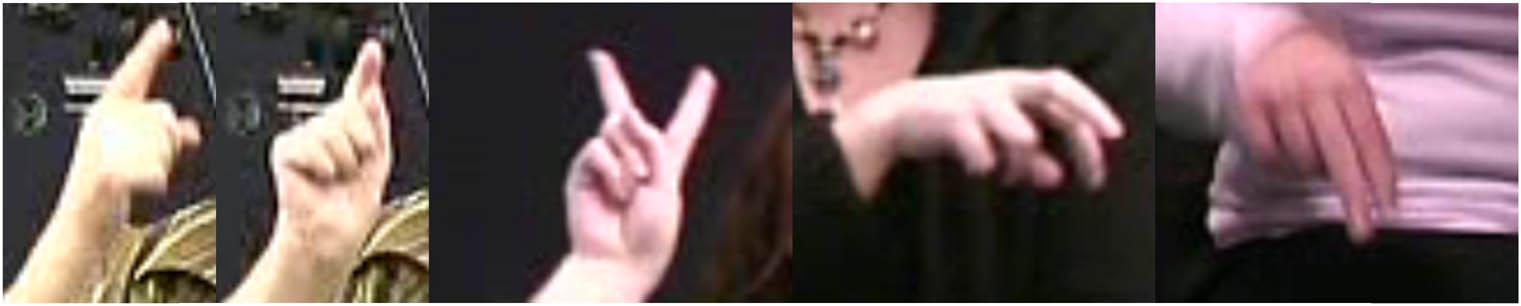

3.2.2 Variants

The 1- and 2-handshapes in our data sets come in slightly different forms, which do not always differ identifiably in meaning. We annotated these forms with different labels on the handshape tier (CLP handshape). For both the 1-handshape and the 2-handshape, the extended fingers can be slightly bent; such forms are annotated as ‘1b’ or ‘2b’, where b stands for bent. The ‘2’ handshape can also involve internal movement of the fingers, encoded as ‘2m’, and an extended thumb, encoded as ‘2t’. If the two extended fingers of the ‘2’ handshape are not spread, the form is annotated as ‘N’, with the possible variants ‘Nb’ and ‘Nm’. The modifiers ‘t’, ‘b’, and ‘m’ can be combined (e.g., ‘2bm’ or ‘Nbm’). Figure 7 shows some of the handshape variants (from NGT). For some parts of the statistical analysis, these form labels were collapsed into two groups: ‘1’ and ‘2’.

Figure 7: Handshape variants 1, 1b, 2, 2bm (movement not depicted), and N.
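Because the labeling scheme is compositional (a base form followed by optional modifiers), collapsing the labels into the ‘1’ and ‘2’ groups can be done mechanically. The Python sketch below is a hypothetical illustration, not the project's published code: the name `parse_handshape` is our own, and we assume labels are written exactly as in the guidelines (base 1, 2, or N followed by any of t, b, m, each at most once) and that only ‘b’ combines with the 1-handshape, as described above.

```python
import re

# Hypothetical parser for CLP handshape labels such as '1', '1b', '2bm', 'Nb'.
# Assumes: base form 1, 2 or N, followed by any combination of the modifiers
# t (thumb), b (bent), m (moving fingers), each at most once.
LABEL = re.compile(r"^(?P<base>[12N])(?P<mods>[tbm]*)$")


def parse_handshape(label: str) -> dict:
    match = LABEL.match(label.strip())
    if match is None:
        raise ValueError(f"unrecognised handshape label: {label!r}")
    base, mods = match.group("base"), match.group("mods")
    if len(set(mods)) != len(mods):
        raise ValueError(f"repeated modifier in {label!r}")
    # Assumption: the 1-handshape only occurs plain or bent ('1', '1b').
    if base == "1" and set(mods) - {"b"}:
        raise ValueError(f"modifier not attested with the 1-handshape: {label!r}")
    return {
        # Collapsed group: N is the non-spread variant of the 2-handshape.
        "group": "1" if base == "1" else "2",
        # Spreading is only defined for the 2-handshape group.
        "spread": None if base == "1" else base == "2",
        "bent": "b" in mods,
        "moving": "m" in mods,
        "thumb": "t" in mods,
    }


for label in ["1b", "2bm", "Nb"]:
    print(label, parse_handshape(label))
```

Collapsing then amounts to reading the `group` field, while the `spread` and `moving` fields correspond to the variant comparisons reported in Section 4.1.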

3.2.3 Annotated factors

Below we provide the factors that were annotated for each classifier construction. The name of the respective ELAN tier is provided in brackets for each factor.

As mentioned above, the two defining functions of classifier constructions in this study are the description of motion events and of localization events (tier CLP event). A motion classifier construction denotes the movement of the referent, iconically mapped onto the signing space. A localizing classifier construction positions the referent in the signing space, making it potentially available for subsequent reference and other manipulations.

For motion events, several movement features were annotated. The motion can be either controlled by the referent (e.g., running, walking, jumping) or uncontrolled, in which case the referent undergoes the motion without being able to affect it (e.g., falling) (tier CLP controlled). In addition, two basic features of motion can be encoded within the sign: the path of motion (e.g., straight line, spiral) and/or its manner (e.g., jumping, limping, running) (tier CLP manner). Manner is often encoded via the finger movement mentioned above or via a bouncing movement of the hand. A classifier construction can provide information on both features or on only one of them, and it seemed plausible that one of the handshapes might be more likely to occur with either path or manner. The CLP manner tier only records whether path, manner, or both are encoded in the sign. If the path is encoded, its shape is annotated separately, for instance as straight, spiral, arc, circular, or other (tier CLP movement). The final motion characteristic that we annotated was the direction of the motion with respect to the signer, for instance toward the signer, away from the signer, up, down, right, or left (tier CLP direction).

For both localization and motion events, we specified whether the second hand was used in the classifier construction and what kind of referent it represented (tier CLP second hand). In both two-handed and single-handed classifier constructions, we also annotated whether an interaction between referents is encoded within the sign. This can be either an interaction between the referents represented on the two hands or an interaction between a referent on one hand and the body of the signer enacting a second referent (tier CLP interaction).

Another factor taken into account was the discourse status of the handshape referent within a clause, i.e., whether the referent is mentioned for the first time, maintained throughout a stretch of discourse, or reintroduced after not being mentioned in the preceding chunk of the narrative (tier CLP DS [discourse status]).

Finally, for comparative purposes, we annotated the specific scenes described by the classifier constructions (tier CLP scene) and their generalized meaning (tier CLP meaning). For instance, in the first episode of the cartoon, one of the main characters, the cat Sylvester, descends the stairs. We wanted to be able to compare which signers, within each language and between languages, chose to describe this scene with the 1-handshape and which with the 2-handshape. We also assumed that similar situations throughout the episodes would elicit predicates with potentially similar meanings, and we therefore wanted to know whether those predicates would use the same classifier handshapes.

Table 1 provides the summary of the ELAN tiers implemented in the annotation process in accordance with the guidelines.

Table 1: Annotation scheme used in the current study

| ELAN tier | Values |
|---|---|
| CLP handshape | 1, 2, N + modifiers: b (bent fingers), m (moving fingers), t (thumb opposed) |
| CLP congruence | Congruent/depictive |
| CLP event | Motion/localization/unclear |
| CLP controlled | Controlled/uncontrolled/unclear |
| CLP manner | Manner/path/both/unclear |
| CLP movement | Open list. Possible values include straight, spiral, arc, circular, and complex (if the movement cannot be described by one of the categories because of turns, stops, etc.) |
| CLP direction | Open list. Possible values include toward the signer, away from the signer, up, down, right, left |
| CLP second hand | Human (including anthropomorphic animals)/animate/inanimate |
| CLP interaction | Yes(body)/yes(hand)/no/unclear |
| CLP DS | Introduced/reintroduced/maintained/unclear |
| CLP scene | See the guidelines for the full list of scenes |
| CLP meaning | Open list. Possible values include jump, sit, fall, arrive, appear, leave, bump into, approach, pass by |
| CLP comment | Any comments |
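Since several tiers in Table 1 have closed value sets, annotation entries can in principle be checked automatically before analysis. The Python sketch below is an illustration under stated assumptions, not part of the published guidelines: it hard-codes the closed value lists from Table 1 (lower-cased), skips the open-ended tiers, and adds one consistency check that we infer from Section 3.2.3, namely that movement-related tiers should stay empty for localization events. The names `CLOSED_TIERS`, `MOTION_ONLY_TIERS`, and `validate_entry` are hypothetical.

```python
# Closed value sets transcribed (lower-cased) from Table 1. Open-ended tiers
# (CLP movement, direction, scene, meaning, comment) are not checked.
CLOSED_TIERS = {
    "CLP congruence": {"congruent", "depictive"},
    "CLP event": {"motion", "localization", "unclear"},
    "CLP controlled": {"controlled", "uncontrolled", "unclear"},
    "CLP manner": {"manner", "path", "both", "unclear"},
    "CLP second hand": {"human", "animate", "inanimate"},
    "CLP interaction": {"yes(body)", "yes(hand)", "no", "unclear"},
    "CLP DS": {"introduced", "reintroduced", "maintained", "unclear"},
}

# Assumption: these tiers describe motion and should stay empty otherwise.
MOTION_ONLY_TIERS = ("CLP controlled", "CLP manner", "CLP movement", "CLP direction")


def validate_entry(entry: dict) -> list:
    """Return a list of problems found in one annotation entry (tier -> value)."""
    problems = []
    for tier, allowed in CLOSED_TIERS.items():
        value = entry.get(tier)
        if not value:
            continue  # an empty tier is allowed, e.g. no second hand
        if value.strip().lower() not in allowed:
            problems.append(f"{tier}: unexpected value {value!r}")
    if entry.get("CLP event", "").strip().lower() == "localization":
        for tier in MOTION_ONLY_TIERS:
            if entry.get(tier):
                problems.append(f"{tier}: filled for a localization event")
    return problems


print(validate_entry({"CLP event": "Motion", "CLP manner": "both", "CLP DS": "maintained"}))
print(validate_entry({"CLP event": "localization", "CLP manner": "path", "CLP DS": "new"}))
```

Returning a list of problems rather than raising on the first one makes it easier to review all inconsistencies in a file at once.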

3.3 Data processing and statistical analysis

Once the data were annotated according to the guidelines outlined above, we proceeded with data processing, followed by qualitative and statistical analysis. To represent and process the data, we used the signglossR package (Börstell 2022) in R (R Core Team 2022), which efficiently converts ELAN annotations into CSV files that can be fed into statistical models in R. We further used tidyverse (Wickham et al. 2019) and lme4 (Bates et al. 2015) for data processing and statistical analysis.
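In this study, signglossR performed the ELAN-to-CSV step in R, and its functions are not reproduced here. For readers working outside R, the sketch below shows the same idea in Python using only the standard library: ELAN .eaf files are XML, with time slots listed under TIME_ORDER and time-aligned annotations stored as ALIGNABLE_ANNOTATION elements inside each TIER. This is a simplified illustration, not the pipeline used in the study: it reads only time-aligned annotations (annotations on symbolically dependent tiers, stored as REF_ANNOTATION, are skipped), and the function names and the sample file are hypothetical.

```python
import csv
import io
import xml.etree.ElementTree as ET


def eaf_tier_to_rows(eaf_xml: str, tier_id: str) -> list:
    """Extract time-aligned annotations of one ELAN tier as dicts (times in ms)."""
    root = ET.fromstring(eaf_xml)
    # TIME_SLOT elements map slot IDs to milliseconds; unaligned slots lack TIME_VALUE.
    times = {
        slot.get("TIME_SLOT_ID"): int(slot.get("TIME_VALUE"))
        for slot in root.iter("TIME_SLOT")
        if slot.get("TIME_VALUE") is not None
    }
    rows = []
    for tier in root.iter("TIER"):
        if tier.get("TIER_ID") != tier_id:
            continue
        # Only ALIGNABLE_ANNOTATION is read; REF_ANNOTATION (dependent tiers) is skipped.
        for ann in tier.iter("ALIGNABLE_ANNOTATION"):
            rows.append({
                "tier": tier_id,
                "start_ms": times.get(ann.get("TIME_SLOT_REF1")),
                "end_ms": times.get(ann.get("TIME_SLOT_REF2")),
                "value": (ann.findtext("ANNOTATION_VALUE") or "").strip(),
            })
    return rows


def rows_to_csv(rows: list) -> str:
    """Serialise the extracted rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["tier", "start_ms", "end_ms", "value"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# A tiny hand-written EAF fragment for illustration (real files carry more metadata).
SAMPLE_EAF = """<ANNOTATION_DOCUMENT>
  <TIME_ORDER>
    <TIME_SLOT TIME_SLOT_ID="ts1" TIME_VALUE="1000"/>
    <TIME_SLOT TIME_SLOT_ID="ts2" TIME_VALUE="1450"/>
  </TIME_ORDER>
  <TIER TIER_ID="CLP handshape">
    <ANNOTATION>
      <ALIGNABLE_ANNOTATION ANNOTATION_ID="a1" TIME_SLOT_REF1="ts1" TIME_SLOT_REF2="ts2">
        <ANNOTATION_VALUE>2bm</ANNOTATION_VALUE>
      </ALIGNABLE_ANNOTATION>
    </ANNOTATION>
  </TIER>
</ANNOTATION_DOCUMENT>"""

print(rows_to_csv(eaf_tier_to_rows(SAMPLE_EAF, "CLP handshape")))
```

One row per annotation, with start and end times, is the shape of table that the cross-tabulations and regression models described below operate on.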

For most questions, we first used cross-tables to explore the distribution of categories (e.g., handshapes) and the relations between different factors (e.g., handshape and discourse status). Whenever possible, we used generalized linear mixed-effects regression models (the glmer function from the lme4 package; Bates et al. 2015) with signer as a random factor to assess the statistical significance of the difference in the proportions of the classifier handshapes between the two sign languages, of the effects of the abovementioned factors on the choice of a classifier handshape, and of the interactions between these effects. However, this was not always possible due to the relatively small data set, the large number of potential factors we coded for, and the large number of levels within some of the factors.
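For concreteness, one plausible form of such a model is given below, assuming a random intercept per signer and a binary outcome coding whether the 1-handshape is used. The symbols are our own, and the exact model specifications used in the study are those in the OSF code.

$$
\operatorname{logit}\Pr(y_{ij} = 1) = \beta_0 + \beta_1\,\mathrm{NGT}_j + \beta_2\,x_{ij} + \beta_3\,\mathrm{NGT}_j\,x_{ij} + u_j, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
$$

Here $y_{ij} = 1$ if classifier predicate $i$ produced by signer $j$ uses the 1-handshape, $\mathrm{NGT}_j$ is 1 for NGT signers and 0 for RSL signers, $x_{ij}$ is a dummy-coded level of the factor of interest, and $u_j$ is the signer random intercept. In lme4 notation this corresponds to a formula such as `handshape ~ language * factor + (1 | signer)` with a binomial family. Dropping the $x$ terms gives a model for the cross-language comparison of handshape proportions, in which $\beta_1$ is a log odds ratio between the languages; $\beta_3$ captures whether the effect of a factor differs between the languages.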

We want to emphasize that the study is by design exploratory (as opposed to hypothesis-testing). At the outset, we did not have a clear hypothesis of what factors would and would not affect handshape choice. Therefore, we decided to code for and then explore quantitatively a large number of factors, some with a large number of levels. With such a design, individual models for the effects of certain factors, and especially the significance levels produced by the models, should be treated with much caution. Whenever we find a clear and significant effect of a factor, we treat it as an indication that this effect should be further explored in future studies, with larger data sets and a smaller number of variables.

The data and the code for the data processing and statistical analysis are available on our OSF webpage: https://osf.io/fc25a/.

4 Results

In total, we found 330 relevant classifier predicates in RSL and 286 in NGT. In both languages, the 2-handshape variants are used considerably more often than the 1-handshape variants, but more so in RSL than in NGT (the 1-handshape accounts for 16% of classifier predicates in NGT and 10% in RSL). The difference between the languages is statistically significant (estimated log odds ratio 0.69, p = 0.03).
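The reported estimate comes from a mixed-effects model with signer as a random factor. As a rough cross-check, a log odds ratio can also be computed directly from the pooled percentages, as in the Python sketch below. This is an approximation we add for illustration only: it ignores the clustering of observations within signers and works from rounded percentages, so its value (about 0.54) need not match the model estimate of 0.69. The function name `log_odds_ratio` is our own.

```python
import math


def log_odds_ratio(p_a: float, p_b: float) -> float:
    """Log odds ratio of an outcome in group A relative to group B."""
    for p in (p_a, p_b):
        if not 0 < p < 1:
            raise ValueError("proportions must lie strictly between 0 and 1")
    return math.log(p_a / (1 - p_a)) - math.log(p_b / (1 - p_b))


# Pooled shares of the 1-handshape reported above: 16% (NGT) vs 10% (RSL).
print(round(log_odds_ratio(0.16, 0.10), 2))  # 0.54
```

A positive value means that the odds of the 1-handshape are higher in NGT than in RSL, which matches the direction of the reported model estimate.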

4.1 Handshape variants

A closer look at the specific handshape variants shows that the 1-handshape varies little in either language: in RSL, it occurs only in the plain variant 1, while in NGT there are three instances of the 1-handshape with a bent index finger (1b). In contrast, the 2-handshape is quite varied in both languages and includes variants with and without spreading (2 vs N), with and without bending (e.g., 2b vs 2, or Nb vs N), and with and without moving fingers.

We first looked at the distribution of spread vs non-spread handshapes. In both languages, the spread variant is more common (72% in NGT, 84% in RSL). The difference between the languages is statistically significant (estimated log odds ratio 0.91, p < 0.001). Based on our observations, the difference between spread and non-spread 2-handshapes does not carry meaning, but it is notable that the distributions differ between the languages.

Next, we also considered the distribution of handshapes with moving vs non-moving fingers. In both languages, the handshapes with non-moving fingers are more common (77% in NGT, 75% in RSL), and the difference between the languages is not significant. The difference between moving and non-moving fingers is meaningful and will be discussed in more detail below.

After exploring the variants, we grouped all the 1-variants in one single category, and all the 2-variants in another single category, and proceeded to explore how the various factors correlate with the choice between these two categories in the two sign languages.

4.2 Factors not correlated with handshape choice

We found that two factors, discourse status and congruence, do not correlate with the choice between the two handshape categories. With respect to congruence, we observed that in both languages there are barely any uses of the 1-handshape that can be classified as congruent (5 in NGT, 10%; 4 in RSL, 12%). This probably indicates that lexical signs with this handshape rarely describe motion or localization events, and therefore classifier predicates in our data can rarely be congruent with them under the exclusion criteria discussed above. For the 2-handshape, 14% of NGT and 12% of RSL classifier predicates are annotated as congruent. Due to the very small number of congruent classifier predicates involving the 1-handshape, it seems unreasonable to test the effect of congruence on the choice between the handshapes. The difference between the languages in the proportion of congruent entries is also not significant.

Concerning discourse status, the majority of cases in both languages are cases of maintained referents, which is natural given that the stories involve a small number of referents that engage in a variety of activities. The distributions of discourse types are similar for the 1 vs 2 handshapes (for both handshapes, maintained is the main type of discourse status), and also similar across the two languages. Note that from this we cannot conclude that discourse status does not correlate with handshape choice in these languages. A data set more varied in terms of discourse status of the referents would be required to further explore this issue.
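Comparisons of this kind rest on cross-tables normalized within each handshape group and language. The Python sketch below illustrates such a row-normalized cross-table; the records are invented toy data for illustration, not counts from either corpus, and the function and field names are hypothetical.

```python
from collections import Counter, defaultdict


def proportions_by(rows, group_keys, value_key):
    """Share of each value of value_key within every combination of group_keys."""
    counts = defaultdict(Counter)
    for row in rows:
        group = tuple(row[key] for key in group_keys)
        counts[group][row[value_key]] += 1
    return {
        group: {value: n / sum(counter.values()) for value, n in counter.items()}
        for group, counter in counts.items()
    }


# Invented toy records, not data from either corpus.
toy = [
    {"language": "NGT", "handshape": "1", "ds": "maintained"},
    {"language": "NGT", "handshape": "1", "ds": "introduced"},
    {"language": "NGT", "handshape": "2", "ds": "maintained"},
    {"language": "NGT", "handshape": "2", "ds": "maintained"},
    {"language": "NGT", "handshape": "2", "ds": "reintroduced"},
    {"language": "NGT", "handshape": "2", "ds": "maintained"},
]

for group, shares in sorted(proportions_by(toy, ("language", "handshape"), "ds").items()):
    print(group, shares)
```

Normalizing within each group makes handshapes with very different frequencies comparable. As noted above, however, similar distributions alone do not show that discourse status has no effect.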

4.3 Factors correlated with handshape choice qualitatively

A number of factors do appear to correlate with handshape choice in RSL and NGT, but due to the very skewed distributions (typically, the scarcity of 1-handshape entries), or due to the difficulty of annotating the factor (for instance, when the factor is hard to split into clear categories), we cannot analyze the effects quantitatively.

Specifically, in both data sets, localization events (unlike motion events) occur only with the 2-handshape. A brief corpus search beyond the selected subcorpora shows that, in fact, localization is compatible with the 1-handshape in both languages. However, when the 1-handshape is used to localize a referent, this typically expresses contrastive location between two or more referents (Figure 8). Such cases do not occur in the data sets analyzed for the current study.

Figure 8: Example of two instances of the 1-handshape, one on each hand, expressing contrastive localization of two referents. Source: Corpus NGT (NB: this example does not come from the data set analyzed for the current study).

Another factor that cannot be analyzed quantitatively in our data sets, because it is difficult to annotate, is the potential lexical meaning of the classifier predicate. As mentioned before, the meaning of a classifier construction can only be approximated by a semantically related lexical item in the annotation language. For example, a classifier predicate describing a falling event might convey much more information than the mere fact of falling, such as a specific trajectory or manner, so labeling its meaning as ‘to fall’ is questionable. Such an approach lacks systematicity, which may affect the quality of the annotation. Nevertheless, we can observe some tendencies. In both languages, jumping is almost exclusively conveyed by the 2-handshape, and approaching by the 1-handshape. In RSL, turning around a corner is associated with the 1-handshape (Figure 9).

![Figure 9: A classifier predicate meaning ‘turns around the corner’ in RSL](/document/doi/10.1515/opli-2025-0047/asset/graphic/j_opli-2025-0047_fig_009.jpg)

Figure 9: A classifier predicate meaning ‘turns around the corner’ in RSL. RSLM-m4-s57-d-std [00.00.33:345–00.00.34:532].

An alternative way of examining how meaning affects handshape choice is to look at the specific episodes of the cartoons. However, not all signers use a classifier construction to describe the same episode, which hinders quantitative analysis. We do observe some tendencies, though. For example, in NGT, some episodes show a preference for the 1-handshape (the cat climbing inside a pipe and the cat flying up), while others almost exclusively elicit the 2-handshape (the cat walking back and forth and the cat walking on electric cords). As we discuss below, this might be explained by a combination of other factors that influence handshape choice.

Another factor that can only be analyzed qualitatively is the shape of the movement. As with some of the previous factors, this factor is difficult to annotate because there is no clear list of pre-specified categories. One interesting pattern emerges, however. In NGT, some classifier predicates have a complex straight bouncy movement: the hand moves straight in some direction while also bouncing up and down slightly, presumably to refer to running or moving fast (Figure 10). In our data, this movement pattern only occurs with the 2-handshape. In RSL, the bouncy movement is almost never used (only 2 clear cases in the data set).

![Figure 10

A classifier predicate with straight bouncy movement, meaning ‘running on the electric cord’, NGT. CNGT1718. [00.00.31:875–00.00.32:825].](/document/doi/10.1515/opli-2025-0047/asset/graphic/j_opli-2025-0047_fig_010.jpg)

A final factor that possibly plays a role in handshape choice is movement direction. Here we observe the following trend: in NGT, the upward movement direction is frequently used with the 1-handshape. In RSL, by contrast, this movement direction is never used with the 1-handshape, and direction in general does not seem to correlate with handshape choice.

4.4 Factors correlated with handshape choice quantitatively

As discussed in Section 3, this research is exploratory by nature. We did not know in advance which factors might correlate with handshape choice, so we explored a large number of potential factors with a relatively small data set. Therefore, the results of the statistical analysis, and especially the reported statistical significance, should be treated with caution.

With this caveat in mind, some factors appear to significantly correlate with the choice of handshape in RSL and NGT, and there are differences between the languages in these correlations.

4.4.1 The role of the second hand

We start with two intertwined factors: interaction and the use of the second hand. Recall that we coded for interaction between the referent of the 1- or 2-handshape in the classifier predicate and another referent, expressed either by the body of the signer or by the second hand. However, interaction with the body of the signer turned out to be extremely rare (one case in RSL, four cases in NGT). Notably, all of these cases involve the 1-handshape. We therefore focused only on interaction with a referent on the second hand vs the absence of interaction.

Interaction with the second hand appears to have a different effect in RSL and NGT. In RSL, the 2-handshape co-occurs with another referent on the second hand in 75% of its instances, and the 1-handshape does so in 50% of its instances. In NGT, the distribution of the 1-handshape is similar (55% co-occurrence with another referent on the second hand), but the pattern for the 2-handshape is the opposite: it co-occurs with another referent on the second hand in only 32% of the cases. Note that the non-dominant-hand referents accompanying the 2-handshape in RSL are exclusively inanimate and often represent a background for the described event (e.g., a wall, a doorframe, the ground). Their single-handed NGT counterparts with the 2-handshape thus either omit the background or use lexical signs to ‘set the scene’ for the described movement. In a model with interaction with the second hand and language as fixed effects, the statistical interaction between these two factors is highly significant (estimated log odds ratio, 2.04; p < 0.001). In separate models for each language, interaction with the second hand correlates significantly with handshape choice in both RSL and NGT, but in opposite directions (RSL: estimated log odds ratio, 1.09; p = 0.008; NGT: estimated log odds ratio, −0.95; p = 0.007). Thus, the use of the second hand clearly correlates with the choice of the classifier on the dominant hand, but the effects differ greatly between the languages.

It is also worth noting that, overall, the second hand is used significantly more often in RSL than in NGT (72% vs 36%; estimated log odds ratio, 1.6; p < 0.0001). This finding agrees with previous research showing that weak hand holds are significantly more frequent in RSL than in NGT (Sáfár and Kimmelman 2016).
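The unadjusted log odds ratios can be computed directly from the percentages reported in this section. This gives a rough arithmetic check on the direction and size of the effects. The Python sketch below is only an illustration and not the analysis used in this study. The reported estimates come from regression models (the reference list cites R and lme4, so these are presumably mixed-effects logistic regressions that also account for variation between signers). The raw values here ignore signer-level variation and are computed from rounded percentages. The helper names `log_odds` and `log_odds_ratio` are hypothetical. A positive value means that the 2-handshape co-occurs with a referent on the second hand more often than the 1-handshape does. Because odds ratios are symmetric, the same value also describes how a referent on the second hand shifts the odds of choosing the 2-handshape.

```python
import math


def log_odds(p: float) -> float:
    """Natural-log odds of a proportion p, assuming 0 < p < 1."""
    return math.log(p / (1.0 - p))


def log_odds_ratio(p_a: float, p_b: float) -> float:
    """Unadjusted log odds ratio of group a relative to group b."""
    return log_odds(p_a) - log_odds(p_b)


# Proportion of predicates with another referent on the second hand,
# by handshape (rounded percentages reported in Section 4.4.1).
second_hand = {
    "RSL": {"2-handshape": 0.75, "1-handshape": 0.50},
    "NGT": {"2-handshape": 0.32, "1-handshape": 0.55},
}

effects = {}
for lang, props in second_hand.items():
    effects[lang] = log_odds_ratio(props["2-handshape"], props["1-handshape"])
    print(f"{lang}: log OR (2- vs 1-handshape) = {effects[lang]:.2f}")

# On the log-odds scale, a language-by-second-hand interaction corresponds
# to the difference between the two language-specific log odds ratios.
print(f"difference RSL - NGT = {effects['RSL'] - effects['NGT']:.2f}")

# Overall use of the second hand: RSL 72% vs NGT 36%.
print(f"RSL vs NGT overall: log OR = {log_odds_ratio(0.72, 0.36):.2f}")
```

Run as is, the sketch gives about 1.10 for RSL, −0.95 for NGT, a difference of about 2.05, and about 1.52 for the overall RSL vs NGT contrast in second-hand use. These values are close to the reported 1.09, −0.95, 2.04, and 1.6. This suggests that the model estimates are on the log-odds scale, although a match between unadjusted values and model estimates should not be over-interpreted.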

4.4.2 Control

We also observed that the agency of the referent, i.e., whether it has control over the motion, correlates with the choice of handshape differently in the two languages.

In NGT, the 1-handshape is used proportionally more often with uncontrolled events than the 2-handshape (39% vs 10% of all uses of each handshape; Figure 11). In RSL, the 1-handshape is never used with uncontrolled events, while 20% of the uses of the 2-handshape describe uncontrolled events (Figure 12).

![Figure 11

A classifier predicate with the 1-handshape, meaning ‘the cat flies up in the air’, NGT. CNGT0104. [00.00.25:760–00.00.26:480].](/document/doi/10.1515/opli-2025-0047/asset/graphic/j_opli-2025-0047_fig_011.jpg)

![Figure 12

A classifier predicate with the 2-handshape, meaning ‘the cat flies up in the air’, RSL. RSLM-m6-s40-d-std [00:00:48.171–00:00:49.041].](/document/doi/10.1515/opli-2025-0047/asset/graphic/j_opli-2025-0047_fig_012.jpg)

Because of the small number of cases, and the complete absence of the 1-handshape with uncontrolled events in RSL, a model with control and language as fixed factors does not converge. Neither does a separate model for RSL with control as a fixed factor. In NGT, the effect of control is highly significant (estimated log odds ratio = 1.8, p < 0.001).

While a full quantitative comparison between the languages is thus not possible, it is clear that control correlates with the handshape choice in both languages, but in opposite directions. In NGT, the 1-handshape is more commonly used to describe uncontrolled events, while in RSL, it is never used for such events (in our data).
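The convergence problem for RSL has a simple arithmetic counterpart. When one cell of the handshape-by-control table is empty, the log odds ratio is infinite. A logistic regression then has no finite maximum-likelihood estimate for that effect, a situation usually called (quasi-)complete separation. The Python sketch below illustrates this with the percentages reported in this section. It works from these rounded percentages only, ignores variation between signers, and is not the model used in the study. The helper names are hypothetical.

```python
import math


def log_odds(p: float) -> float:
    """Log odds of a proportion; infinite when p is exactly 0 or 1."""
    if p <= 0.0:
        return -math.inf
    if p >= 1.0:
        return math.inf
    return math.log(p / (1.0 - p))


def log_odds_ratio(p_a: float, p_b: float) -> float:
    """Unadjusted log odds ratio of group a relative to group b."""
    return log_odds(p_a) - log_odds(p_b)


# Share of uncontrolled events among all uses of each handshape (Section 4.4.2).
# Group a = 1-handshape, group b = 2-handshape.
ngt_control = log_odds_ratio(0.39, 0.10)
rsl_control = log_odds_ratio(0.00, 0.20)  # RSL: 1-handshape never used for uncontrolled events

print(f"NGT: {ngt_control:.2f}")  # finite and positive
print(f"RSL: {rsl_control}")      # -inf: an empty cell leaves no finite estimate

# A logistic-regression optimizer fitting such data keeps pushing the
# coefficient toward -inf, one reason such models fail to converge.
```

For NGT, the sketch gives about 1.75, in line with the reported model estimate of 1.8. For RSL, it returns -inf, matching the categorical pattern that prevented the model from converging.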

Note that there is a possible confound: many of the instances of uncontrolled movement concern the episodes where the cat flies up. As discussed earlier, NGT seems to use the 1-handshape proportionally more often with upward movement. With the limited data we have, it is not possible to determine whether movement direction or control is the primary factor here.

4.4.3 Manner vs path

When coding for manner and path, we distinguished three types of cases. In the first, only path is expressed within the sign (e.g., via the trajectory of the hand movement). In the second, only manner is expressed (e.g., via bouncing hand motion or moving fingers). In the third, both path and manner are expressed. However, we observed very few cases where only manner was expressed (3 in NGT, 11 in RSL). Interestingly, all of them use the 2-handshape (Figure 13).

![Figure 13

A classifier predicate with the 2-handshape (right hand), meaning ‘the cat runs (while holding the cage)’. The sign only has bouncy up and down movement, with no path movement, NGT. CNGT2079. [00.01.03:200–00.01.03:920].](/document/doi/10.1515/opli-2025-0047/asset/graphic/j_opli-2025-0047_fig_013.jpg)

We then compared path-only predicates with path + manner predicates. Recall that in NGT, unlike RSL, a substantial number of predicates contain bouncy movement expressing running. We consider this bouncy movement to express manner, and we therefore classify such predicates as path + manner. Bouncy movement is barely used in RSL, so we expected this to affect the overall distribution of event types between the languages. This is indeed the case: path + manner makes up 43% of the cases in NGT, but only 23% in RSL.

The two languages behave similarly with respect to the effect of this factor on handshape choice. In both, the 1-handshape is predominantly used with events expressing only path, while the 2-handshape is compatible with both path-only and path + manner events. In the model with language and manner/path as fixed factors, the effect of manner/path is significant (estimated log odds ratio 2.8, p < 0.0001). The effects of language and of the interaction between the fixed effects are not significant. The effect of manner/path is also significant in separate models for RSL and for NGT.

We can thus conclude that, in both languages, predicates expressing a combination of path and manner predominantly use the 2-handshape. This finding is not very surprising. The 2-handshape allows the fingers to move, which is one way to express manner in addition to path. A more interesting finding, mentioned above, concerns bouncy movement in NGT. Although it is physiologically compatible with both handshapes, it is only used with the 2-handshape. This may indicate that the near absence of the 1-handshape encoding both manner and path is not physiologically or phonologically conditioned, but reflects a more general phenomenon.

4.4.4 Larger models

We found that several factors correlate quantitatively with the choice of classifier handshape in RSL and NGT, in some cases with effects in opposite directions in the two languages. We therefore attempted to build a model that takes all of these factors into consideration: the role of the second hand, control, path/manner, language, and their interactions.

Not surprisingly, the largest possible model does not converge. The models with language + two other factors and their interactions do not converge either. This can be explained by the small amount of data relative to the number of factors, by the skewed distribution of the handshapes, and also by the fact that, for the factor of control, RSL cannot be explored quantitatively due to the absence of cases of uncontrolled events with the 1-handshape.

We thus tried to build the largest possible model for each language separately. For NGT, the largest model that converges includes control, second hand, and path/manner as fixed factors, without the interactions between them. In this model, the significant factors are manner/path (estimated log odds ratio = 2.6, p < 0.001) and control (estimated log odds ratio = 1.3, p = 0.004). The role of the second hand is not significant (p = 0.7). This diverges slightly from our findings above, where the role of the second hand also contributed significantly to the choice of handshape. It appears that, in our data, the other factors reduce or nullify the effect of the second hand.

For RSL, the biggest model that converges (albeit with a singular fit warning) is the model with manner/path and the second hand (but without their interaction). Both factors significantly correlate with the choice of classifier handshape, in the same direction as in the results above, even when considered together. Remember that control also correlates with handshape choice, but, in our data, the effect is categorical, and thus not quantifiable.

5 Discussion

5.1 Similarities and differences between RSL and NGT

An important finding of this study is that RSL and NGT, two unrelated sign languages without extensive contact, have both similarities and differences in how they use classifier handshapes for human(-like) referents.

A major similarity that we found is that, in the parallel data sets, the 2-handshape is used much more frequently than the 1-handshape in both languages. However, there is also a significant difference between the two languages, with RSL using the 1-handshape less frequently than NGT. We also observe that the N-handshape variant is relatively more frequent in NGT than in RSL (but it is less frequent than the 2-handshape variant in both languages).

Almost all factors we tested (except for discourse status and congruence) appear to correlate with the choice of handshape (between the 1-handshape and the 2-handshape). Some of these factors work similarly in the two languages, while the others work in opposite directions.

The factors similar between the two languages are summarized in Table 2. The factors with different effects are summarized in Table 3.

Table 2

Cross-linguistically similar factors of classifier handshape choice

| Factor | Effect | Type of evidence |
|---|---|---|
| Motion vs localization | The 1-handshape is never used with localization events, only with motion events | Qualitative |
| Meaning (jumping) | Only the 2-handshape is used for jumping | Qualitative |
| Manner and path | The combination of manner and path in one predicate is strongly associated with the 2-handshape | Quantitative |

Table 3

Cross-linguistically different factors of classifier handshape choice

| Factor | Effect in RSL | Effect in NGT | Type of evidence |
|---|---|---|---|
| Meaning (approach) | Approaching is expressed more by the 1-handshape | No preference | Qualitative |
| Meaning (walking back and forth) | No preference | Walking back and forth is expressed more by the 1-handshape | Qualitative |
| Direction (up) | Direction up is never used with the 1-handshape | Direction up is often used with the 1-handshape | Qualitative |
| The second hand | The second hand expressing another referent is strongly associated with the 2-handshape | The 2-handshape strongly disprefers the second hand | Quantitative |
| Control | The 1-handshape is never used with uncontrolled events | The 1-handshape is used more often with uncontrolled events | Quantitative |

These tables show no clear pattern explaining why some factors behave similarly in the two languages while others behave differently. It is therefore important to think about the nature of the differences and similarities, and about our expectations with respect to them.

The similarities are hard to systematize. It is tempting to suggest that they may indicate deep commonalities in the morphological makeup of classifier constructions in the two sign languages, and potentially beyond. However, the relationship between the similar factors remains opaque. We also cannot exclude the relevance of other factors that might govern the choice between the two handshapes but were overlooked in such a small data set with a highly limited variety of contexts. Ultimately, it should not come as a surprise that classifier predicates may describe similar events in similar ways. They are highly iconic and at least partially gestural in nature, even taking into account the conventionalization of handshapes. Finally, it is always possible that the signers of the two languages chose similar strategies by chance.

Given these potential explanations for the similarities between the two sign languages, the differences become all the more striking. The observed typological differences can be analyzed as an indication of grammaticalization of the classifier predicates in the two languages. At the very least, they show that the conventionalization of the classifier predicate systems has led to different results.

The strongest evidence for different grammatical properties of classifier predicates in RSL and NGT seems to concern control and the role of the second hand. In RSL, the 1-handshape is only used to describe controlled actions, and the 2-handshape is strongly associated with the presence of another referent on the second hand. In NGT, the 1-handshape is often used for uncontrolled actions, and the 2-handshape is strongly associated with the absence of another referent on the second hand.

5.2 Implications for theory of classifiers in sign languages

As should be clear from the previous section, the first major implication of the current study for the theory of classifiers in sign languages is that classifier handshapes (at least for whole-entity classifiers) are conventionalized or grammaticalized, and vary cross-linguistically in their semantic, morphological, and syntactic properties. While this has already been demonstrated generally (Section 2), in this study we show this in one specific case: the choice between partially synonymous classifier handshapes. We can see that the choice in such cases is not random, but governed by a variety of language-specific linguistic factors.

However, since we focused specifically on handshapes, this study does not provide any insight into the nature of the other components of classifier predicates (movement and location). Our findings are therefore compatible with a hybrid view of classifier predicates, in which handshapes are morphosyntactic units combined with gestural components (e.g., Davidson 2015; Schembri et al. 2018).

In our RSL and NGT data, the choice of classifier handshape appears to correlate with some factors related to the argument structure and event structure of the predicate. Specifically, the presence of another referent on the second hand and the controlled vs uncontrolled nature of the event influence the choice of classifier. This does not provide direct evidence in favor of a specific theory of classifiers (e.g., Benedicto and Brentari 2004 vs Kimmelman et al. 2019). It does, however, imply that classifier handshapes should be represented syntactically in some way as part of argument structure, and/or are active at the level of semantic representation.

Note, however, that any theory of classifiers must also account for another of our findings: variability. Most factors discussed in this study clearly do not categorically determine the choice of classifier. For example, in NGT, uncontrolled events are more likely to occur with the 1-handshape, but they also occur with the 2-handshape, and some controlled events occur with the 1-handshape. It is thus unlikely that the handshape directly encodes the semantic/syntactic feature of control.

5.3 Limitations

As discussed earlier, the study is limited considerably by the relatively small amount of data analyzed, as well as by the skewed distribution: the 1-handshape is used very infrequently in both languages. Obtaining a larger data set is necessary to confirm or disprove the findings of the current study.

In addition, the study is exploratory by design. We annotated a large number of factors that could correlate with classifier handshape choice. For some of these factors, we could not define a small, fixed set of categories, which made quantitative analysis impossible. Even for the factors that could potentially be analyzed quantitatively, the skewed distribution did not always allow for appropriate analysis. Finally, since we explored a large number of factors both in combination and in isolation, the reported p-values should be treated with extreme caution. Our results should be interpreted first and foremost as indications of where real effects may lie, and these effects should be independently verified in future studies.

6 Conclusions

In this article, we have analyzed classifier handshape choice in classifier predicates describing motion and location of human-like entities in two sign languages – RSL and NGT – based on a small parallel corpus of cartoon retellings. We looked specifically at the choice between the 1-handshape and the 2-handshape. The fact that different sign languages have synonymous classifier handshapes, that is, multiple handshapes that can be used to describe the same referent in some contexts, has been established in the literature. However, here (and in other articles in this volume), we investigate this issue in depth and with a (partially) quantitative approach for the first time.

In our data sets, produced by 13 RSL and 20 NGT signers, we found 330 relevant classifier predicates in RSL and 286 in NGT. We found that, in both languages, there is a strong preference for the 2-handshape, and especially so in RSL. We also found some differences in the distribution of variants of each handshape between the languages.

We annotated and analyzed a large number of factors that could potentially influence the choice of handshape in RSL and NGT. Due to the limitations of the data sets, only some factors could be investigated quantitatively. Importantly, we found both similarities and differences in how the classifier choice is realized in the two languages. For example, in both languages, the expression of location (vs motion) strongly favors the 2-handshape, as does the expression of the manner of motion (e.g., running, jumping). Note, however, that our results concerning these features are qualitative and preliminary. At the same time, the agency of the moving referent and the presence of another (inanimate) referent on the second hand correlate with handshape choice in opposite directions in the two languages.

While the similarities between the two languages could potentially be explained by iconicity and the spatial nature of the sign languages (as well as by random chance), the presence of clear differences based on grammatical factors is evidence of the conventionalized and linguistic status of the classifier handshapes, and potential evidence of grammaticalization.

In general, our findings are compatible with the hybrid view of classifier predicates, coming either from the cognitive linguistic tradition (Schembri et al. 2018) or from the formal tradition (Davidson 2015). In this view, the whole-entity classifier handshapes themselves are conventionalized and linguistic (more specifically, morphological), and they contribute semantic value to the predicate in a constrained and predictable manner. At the same time, movement and location are used depictively (we used this as a defining characteristic when selecting the relevant predicates in our data sets), and are possibly interpreted in a gradient manner. Note that, since we do not directly explore these depictive components but focus on the handshape, we do not provide direct evidence for the hybrid theory as a whole.

We acknowledge that the study is limited in terms of the absolute number of examples, by the very small number of languages analyzed, as well as by the specific set of stimuli used to elicit the signed narratives. However, it provides an incentive to further study the issue of classifier handshape variation and choice, in the two languages considered here, as well as in other languages, and going beyond the specific handshapes and specific narratives analyzed here. Future research in this direction is bound to provide a better understanding of the linguistic properties of classifier handshapes, classifier predicates and depiction in general.

Acknowledgments

We acknowledge the support of the Centre for Advanced Study in Oslo, Norway, which funded and hosted Vadim Kimmelman’s Young CAS Fellow research project ‘Whole-Entity Classifiers in Sign Languages: A Multiperspective Approach’ during the academic years of 2022–2024.

- Funding information: The study was funded by the Centre for Advanced Study in Oslo, Norway.
- Author contributions: Both authors have accepted responsibility for the entire content of this manuscript and approved its submission. Both authors have contributed to the study design. VK mainly carried out the annotation and analysis for NGT and EK mainly carried out the annotation and analysis for RSL. VK developed the code with contributions from EK. VK and EK jointly wrote the manuscript with equal contributions.
- Conflict of interest: The authors state no conflict of interest.
- Data availability statement: The data sets generated during and/or analyzed during the current study are available in the OSF repository, https://osf.io/m7kt9/.

References

Allan, Keith. 1977. “Classifiers.” Language 53 (2): 285–311. 10.1353/lan.1977.0043.Suche in Google Scholar

Baker, Mark C. 1996. The Polysynthesis Parameter. New York: Oxford University Press.10.1093/oso/9780195093070.001.0001Suche in Google Scholar

Bates, Douglas, Martin Mächler, Ben Bolker, Steve Walker. 2015. “Fitting Linear Mixed-Effects Models Using lme4.” Journal of Statistical Software 67 (1): 1–48. 10.18637/jss.v067.i01.Suche in Google Scholar

Benedicto, Elena and Diane Brentari. 2004. “Where did all the Arguments Go?: Argument-Changing Properties of Classifiers in ASL.” Natural Language & Linguistic Theory 22 (4): 743–810.10.1007/s11049-003-4698-2Suche in Google Scholar

Beukeleers, Inez and Myriam Vermeerbergen. 2022. “Show Me What You’ve B/Seen: A Brief History of Depiction.” Frontiers in Psychology 13: 808814. 10.3389/fpsyg.2022.808814.Suche in Google Scholar

Börstell, Carl. 2022. “Introducing the signglossR Package.” In Proceedings of the LREC2022 10th Workshop on the Representation and Processing of Sign Languages: Multilingual Sign Language Resources, edited by Eleni Efthimiou, Stavroula-Evita Fotinea, Thomas Hanke, Julie A. Hochgesang, Jette Kristoffersen, Johanna Mesch, and Marc Schulder, 16–23. Marseille, France: European Language Resources Association (ELRA). https://www.sign-lang.uni-hamburg.de/lrec/pub/22006.pdf.Suche in Google Scholar

Burkova, Svetlana. 2015. Russian Sign Language Corpus. http://rsl.nstu.ru/. (1 April, 2018).Suche in Google Scholar

Cogill-Koez, Dorothea. 2000. “A Model of Signed Language ‘Classifier Predicates’ as Templated Visual Representation.” Sign Language & Linguistics 3 (2): 209–36. 10.1075/sll.3.2.04cog.Suche in Google Scholar

Cormier, Kearsy, David Quinto-Pozos, Zed Sevcikova, and Adam Schembri. 2012. “Lexicalisation and De-Lexicalisation Processes in Sign Languages: Comparing Depicting Constructions and Viewpoint Gestures.” Language & Communication 32 (4): 329–48. 10.1016/j.langcom.2012.09.004.Suche in Google Scholar

Crasborn, Onno, Inge Zwitserlood, Johan Ros. 2008. Corpus NGT. An open access digital corpus of movies with annotations of Sign Language of the Netherlands. http://www.ru.nl/corpusngtuk/introduction/welcome/.Suche in Google Scholar

Davidson, Kathryn. 2015. “Quotation, Demonstration, and Iconicity.” Linguistics and Philosophy 38 (6): 477–520. 10.1007/s10988-015-9180-1.Suche in Google Scholar

Fischer, Susan and Qunhu Gong. 2010. “Variation in East Asian Sign Language Structures.” In Sign Languages, edited by Diane Brentari, 499–518. Cambridge: Cambridge University Press. 10.1017/CBO9780511712203.023.Suche in Google Scholar

Freleng, Friz. 1950. Canary Row. Animated Cartoon. Time Warner, New York.Suche in Google Scholar

Frishberg, Nancy. 1975. “Arbitrariness and Iconicity: Historical Change in American Sign Language.” Language 51 (3): 696. 10.2307/412894.Suche in Google Scholar

Johnston, Trevor A. and Adam Schembri. 2007. Australian Sign Language (Auslan): An Introduction to Sign Language Linguistics. Cambridge, UK; New York: Cambridge University Press.10.1017/CBO9780511607479Suche in Google Scholar

Johnston, Trevor and Adam C. Schembri. 1999. “On Defining Lexeme in a Signed Language.” Sign Language & Linguistics 2 (2): 115–85. 10.1075/sll.2.2.03joh.Suche in Google Scholar

Khristoforova, Evgeniia. 2017. Inventar’ semnaticheskih klassifikatorov v russkom zhestovom yazyke.(“Semantic classifiers in Russian Sign Language: an inventory”). Moscow: Russian State University for the Humanities.Suche in Google Scholar

Kimmelman, Vadim and Evgeniia Khristoforova. 2018. On the Nature of Classifiers in Russian Sign Language. GLOW 41, Hungarian Academy of Sciences, Budapest.Suche in Google Scholar

Kimmelman, Vadim, Roland Pfau, and Enoch O. Aboh. 2019. “Argument Structure of Classifier Predicates in Russian Sign Language.” Natural Language & Linguistic Theory 38: 539–79. 10.1007/s11049-019-09448-9.Suche in Google Scholar

Kita, Sotaro and Asli Özyürek. 2003. “What does Cross-Linguistic Variation in Semantic Coordination of Speech and Gesture Reveal?: Evidence for an Interface Representation of Spatial Thinking and Speaking.” Journal of Memory and Language 48 (1): 16–32. 10.1016/S0749-596X(02)00505-3.Suche in Google Scholar

Kurz, Kim B., Geo Kartheiser, Peter C. Hauser. 2023. “Second Language Learning of Depiction in a Different Modality: The Case of Sign Language Acquisition.” Frontiers in Communication 7: 896355. 10.3389/fcomm.2022.896355.Suche in Google Scholar

Liddell, Scott K. 2003. Grammar, Gesture, and Meaning in American Sign Language. Cambridge: Cambridge University Press.10.1017/CBO9780511615054Suche in Google Scholar

Loos, Cornelia and Donna Jo Napoli. 2023. “Depicting Translocating Motion in Sign Languages.” Sign Language Studies 24 (1): 5–45. 10.1353/sls.2023.a912329.Suche in Google Scholar

McBurney, Susan L. 2002. “Pronominal Reference in Signed and Spoken Language: Are Grammatical Categories Modality-Dependent.” In Modality and Structure in Signed and Spoken Languages, edited by Richard P. Meier, Kearsey Cormier, and David Quinto-Pozos, 329–69. Cambridge: Cambridge University Press.10.1017/CBO9780511486777.017Suche in Google Scholar

Meir, Irit. 2001. “Verb Classifiers as Noun Incorporation in Israeli Sign Language.” In Yearbook of Morphology 1999, edited by Gerard Booij and Jacob van Marle, 299–319. Dordrecht: Kluwer.10.1007/978-94-017-3722-7_11Suche in Google Scholar

Nam, Ki-Hyun and Kang-Suk Byun. 2022. “Classifiers and Gender in Korean Sign Language.” In East Asian Sign Linguistics, edited by Kazumi Matsuoka, Onno Crasborn, and Marie Coppola, 71–100. Berlin: De Gruyter. 10.1515/9781501510243-004.Suche in Google Scholar

Quadros, Ronice Müller De, Kathryn Davidson, Diane Lillo-Martin, and Karen Emmorey. 2020. “Code-Blending with Depicting Signs.” Linguistic Approaches to Bilingualism 10 (2): 290–308. 10.1075/lab.17043.qua.Suche in Google Scholar

Quinto‐Pozos, David and Fey Parrill. 2015. “Signers and Co‐speech Gesturers Adopt Similar Strategies for Portraying Viewpoint in Narratives.” Topics in Cognitive Science 7 (1): 12–35. 10.1111/tops.12120.Suche in Google Scholar

R Core Team. 2022. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing. https://www.R-project.org/.Suche in Google Scholar

Sáfár, Anna and Vadim Kimmelman. 2016. “Weak Hand Holds in Two Sign Languages and Two Genres.” Sign Language & Linguistics 18 (2): 205–37. 10.1075/sll.18.2.02saf.Suche in Google Scholar

Schembri, Adam C. 2003. “Rethinking “Classifiers” in Sign Languages.” In Perspectives on Classifier Constructions in Sign Languages, edited by Karen Emmorey, 3–34. Mahwah, NJ: Lawrence Erlbaum Associates.Suche in Google Scholar

Schembri, Adam, Kearsy Cormier, and Jordan Fenlon. 2018. “Indicating Verbs as Typologically Unique Constructions: Reconsidering Verb ‘Agreement’ in Sign Languages.” Glossa: A Journal of General Linguistics 3 (1): 89. 10.5334/gjgl.468.Suche in Google Scholar

Schembri, Adam, Caroline Jones, and Denis Burnham. 2005. “Comparing Action Gestures and Classifier Verbs of Motion: Evidence From Australian Sign Language, Taiwan Sign Language, and Nonsigners’ Gestures Without Speech.” Journal of Deaf Studies and Deaf Education 10 (3): 272–90. 10.1093/deafed/eni029.Suche in Google Scholar

Seiler, Walter. 1985. Imonda, a Papuan Language (Pacific Linguistics no. 93). Canberra, Australia: Dept. of Linguistics, Research School of Pacific Studies, Australian National University.Suche in Google Scholar

Slobin, Dan I., Nini Hoiting, Michelle Anthony, Yael Biederman, Marlon Kuntze, Reyna Lindert, Jennie Pyers, Helen Thumann, and Amy Weinberg. 2001. “Sign Language Transcription at the Level of Meaning Components: The Berkeley Transcription System (BTS).” Sign Language & Linguistics 4 (1–2): 63–104. 10.1075/sll.4.12.07slo.Suche in Google Scholar

Supalla, Ted. 1982. Structure and Acquisition of Verbs of Motion and Location in American Sign Language. PhD diss., University of California, San Diego.

Supalla, Ted. 1986. “The Classifier System in American Sign Language.” In Typological Studies in Language, edited by Colette G. Craig, vol. 7, 181. Amsterdam: John Benjamins Publishing Company. 10.1075/tsl.7.13sup.

Wickham, Hadley, Mara Averick, Jennifer Bryan, Winston Chang, Lucy D’Agostino McGowan, Romain François, Garrett Grolemund, et al. 2019. “Welcome to the Tidyverse.” Journal of Open Source Software 4 (43): 1686. 10.21105/joss.01686.

Zwitserlood, Inge. 2003. Classifying Hand Configurations in Nederlandse Gebarentaal (Sign Language of the Netherlands). Utrecht: LOT.

Zwitserlood, Inge. 2012. “Classifiers.” In Sign Language: An International Handbook, edited by Roland Pfau, Markus Steinbach, and Bencie Woll, 158–86. Berlin: De Gruyter Mouton.

© 2025 the author(s), published by De Gruyter

This work is licensed under the Creative Commons Attribution 4.0 International License.

Articles in this issue

- Research Articles

- No three productions alike: Lexical variability, situated dynamics, and path dependence in task-based corpora

- Individual differences in event experiences and psychosocial factors as drivers for perceived linguistic change following occupational major life events

- Is GIVE reliable for genealogical relatedness? A case study of extricable etyma of GIVE in Huī Chinese

- Borrowing or code-switching? Single-word English prepositions in Hong Kong Cantonese

- Stress and epenthesis in a Jordanian Arabic dialect: Opacity and Harmonic Serialism

- Can reading habits affect metaphor evaluation? Exploring key relations

- Acoustic properties of fricatives /s/ and /∫/ produced by speakers with apraxia of speech: Preliminary findings from Arabic

- Translation strategies for Arabic stylistic shifts of personal pronouns in Indonesian translation of the Quran

- Colour terms and bilingualism: An experimental study of Russian and Tatar

- Argumentation in recommender dialogue agents (ARDA): An unexpected journey from Pragmatics to conversational agents

- Toward a comprehensive framework for tonal analysis: Yangru tone in Southern Min

- Variation in the formant of ethno-regional varieties in Nigerian English vowels

- Cognitive effects of grammatical gender in L2 acquisition of Spanish: Replicability and reliability of object categorization

- Interaction of the differential object marker pam with other prominence hierarchies in syntax in German Sign Language (DGS)

- Modality in the Albanian language: A corpus-based analysis of administrative discourse

- Theory of ecology of pressures as a tool for classifying language shift in bilingual communities

- BSL signers combine different semiotic strategies to negate clauses

- Embodiment in colloquial Arabic proverbs: A cognitive linguistic perspective

- Voice quality has robust visual associations in English and Japanese speakers

- The cartographic syntax of Lai in Mandarin Chinese

- Rhetorical questions and epistemic stance in an Italian Facebook corpus during the COVID-19 pandemic

- Sentence compression using constituency analysis of sentence structure

- There are people who … existential-attributive constructions and positioning in Spoken Spanish and German

- The prosodic marking of discourse functions: German genau ‘exactly’ between confirming propositions and resuming actions

- Semantic features of case markings in Old English: a comparative analysis with Russian

- The influence of grammatical gender on cognition: the case of German and Farsi

- Special Issue: Request for confirmation sequences across ten languages, edited by Martin Pfeiffer & Katharina König - Part II

- Request for confirmation sequences in Castilian Spanish

- A coding scheme for request for confirmation sequences across languages

- Special Issue: Classifier Handshape Choice in Sign Languages of the World, coordinated by Vadim Kimmelman, Carl Börstell, Pia Simper-Allen, & Giorgia Zorzi

- Classifier handshape choice in Russian Sign Language and Sign Language of the Netherlands

- Formal and functional factors in classifier choice: Evidence from American Sign Language and Danish Sign Language

- Choice of handshape and classifier type in placement verbs in American Sign Language

- Somatosensory iconicity: Insights from sighted signers and blind gesturers

- Diachronic changes the Nicaraguan sign language classifier system: Semantic and phonological factors

- Depicting handshapes for animate referents in Swedish Sign Language

- A ministry of (not-so-silly) walks: Investigating classifier handshapes for animate referents in DGS

- Choice of classifier handshape in Catalan Sign Language: A corpus study
