Abstract

Cloud computing has fundamentally changed how computational resources are delivered and used by enabling on-demand access to shared computing resources over the internet. While this technological shift offers considerable flexibility, it also brings substantial challenges in scheduling and resource allocation, particularly when multiple objectives must be optimized in a dynamic environment. Efficient allocation and scheduling of resources are critical in cloud computing, as they directly impact system performance, resource utilization, and cost efficiency in dynamic and heterogeneous conditions. Existing approaches often struggle to balance conflicting objectives, such as reducing task completion time while staying within budget constraints or minimizing energy consumption while maximizing resource utilization. As a result, many solutions fall short of optimal performance, leading to increased costs and degraded service. This systematic literature review (SLR) focuses on research conducted between 2019 and 2023 on scheduling and resource allocation in cloud environments. Following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines, the review ensures a transparent and replicable process by employing systematic inclusion criteria and minimizing bias. The review explores key concepts in resource management and classifies existing strategies into mathematical, heuristic, and hyper-heuristic approaches. It evaluates popular algorithms designed to optimize key metrics such as energy consumption, resource utilization, cost reduction, makespan minimization, and performance satisfaction. Through a comparative analysis, the SLR discusses the strengths and limitations of various resource management schemes and identifies emerging trends. 
It underscores a steady growth in research within this field, emphasizing the importance of developing efficient allocation strategies to address the complexities of modern cloud systems. The findings provide a comprehensive overview of current methodologies and pave the way for future research aimed at tackling unresolved challenges in cloud computing resource management. This work serves as a valuable resource for practitioners and academics seeking to optimize scheduling and allocation in dynamic cloud environments, contributing to advancements in resource management strategies of cloud computing.

1 Introduction

In recent years, cloud computing has emerged as a crucial part of the information technology industry. It offers scalability, flexibility, and cost-effectiveness through on-demand computing services that let customers access resources online [1]. However, it faces significant challenges in resource allocation and scheduling that strongly affect its performance and efficiency [2]. One of the primary issues is the dynamic nature of user demands, which can fluctuate significantly over time. This variability necessitates advanced algorithms for effective resource management, ensuring that computational resources are allocated optimally to meet varying workloads. Failure to accurately predict and respond to these fluctuations can lead to underutilization or overloading of resources, resulting in performance degradation and increased operational costs. Effective resource allocation must account for these limitations while ensuring that tasks are completed efficiently and within the required timeframes [3].

To address these challenges, researchers have explored various approaches, ranging from traditional static heuristics to more advanced artificial intelligence (AI)-driven methods. Traditional methods often rely on static heuristics, where decisions are made based on predefined rules or empirical guidelines [4]. AI-driven methods, such as metaheuristic (MH) algorithms, have gained popularity due to their ability to adapt to dynamic conditions and optimize multiple objectives simultaneously [5]. Furthermore, the integration of machine learning techniques into scheduling and resource management has opened new avenues for enhancing efficiency and performance in cloud computing [6]. Machine learning models can analyze historical data to predict workloads and optimize resource allocation more accurately than traditional heuristics. This predictive capability allows for proactive adjustments in resource distribution, minimizing latency and maximizing throughput [7].

Recent studies show that reinforcement learning (RIL) is effective for task scheduling, with agents learning optimal policies through trial and error in a simulated cloud environment [8]. These adaptive strategies not only improve immediate task performance but also contribute to long-term resource optimization by learning from previous decisions and their outcomes. Moreover, the emergence of hybrid models, which combine the strengths of both traditional and AI-driven methodologies, has shown promising results [9]. By leveraging the simplicity of static heuristics alongside the adaptability of machine learning algorithms, these hybrid approaches can provide more robust solutions under varying conditions [10]. The shift from static heuristics to advanced AI-driven methods represents significant progress in managing the complexities of cloud computing. Ongoing research and development are essential for optimizing task scheduling and resource management, ultimately enhancing service quality and user satisfaction in cloud infrastructures [11].

Despite this progress, resource allocation and task scheduling methods still have significant gaps. First, static heuristics, while straightforward, are often inadequate for handling the dynamic and unpredictable nature of cloud workloads. They lack the flexibility to adapt to real-time changes in resource availability or user demands, leading to suboptimal performance in dynamic environments [5]. Second, while nature-inspired techniques such as genetic algorithms (GAs) and particle swarm optimization (PSO) show promise, their practical implementation faces challenges related to scalability and computational complexity. These methods often require significant computational resources and time, making them less feasible for large-scale cloud environments [10]. Third, many current studies have focused on single performance metrics, such as energy efficiency or execution speed, without providing a comprehensive evaluation of multiple dimensions, such as fairness, user-centric priorities, and adaptability [12]. This narrow focus makes these techniques less applicable in real-world situations, where several goals must be balanced at once. Fourth, there are insufficient standardized experimental platforms and simulation tools for evaluating task scheduling and resource allocation strategies, making it difficult to compare results across studies [13]. Finally, the rapid evolution of cloud computing technologies and workloads necessitates continuous updates to existing methodologies, yet many studies fail to address emerging trends or provide forward-looking insights [14].

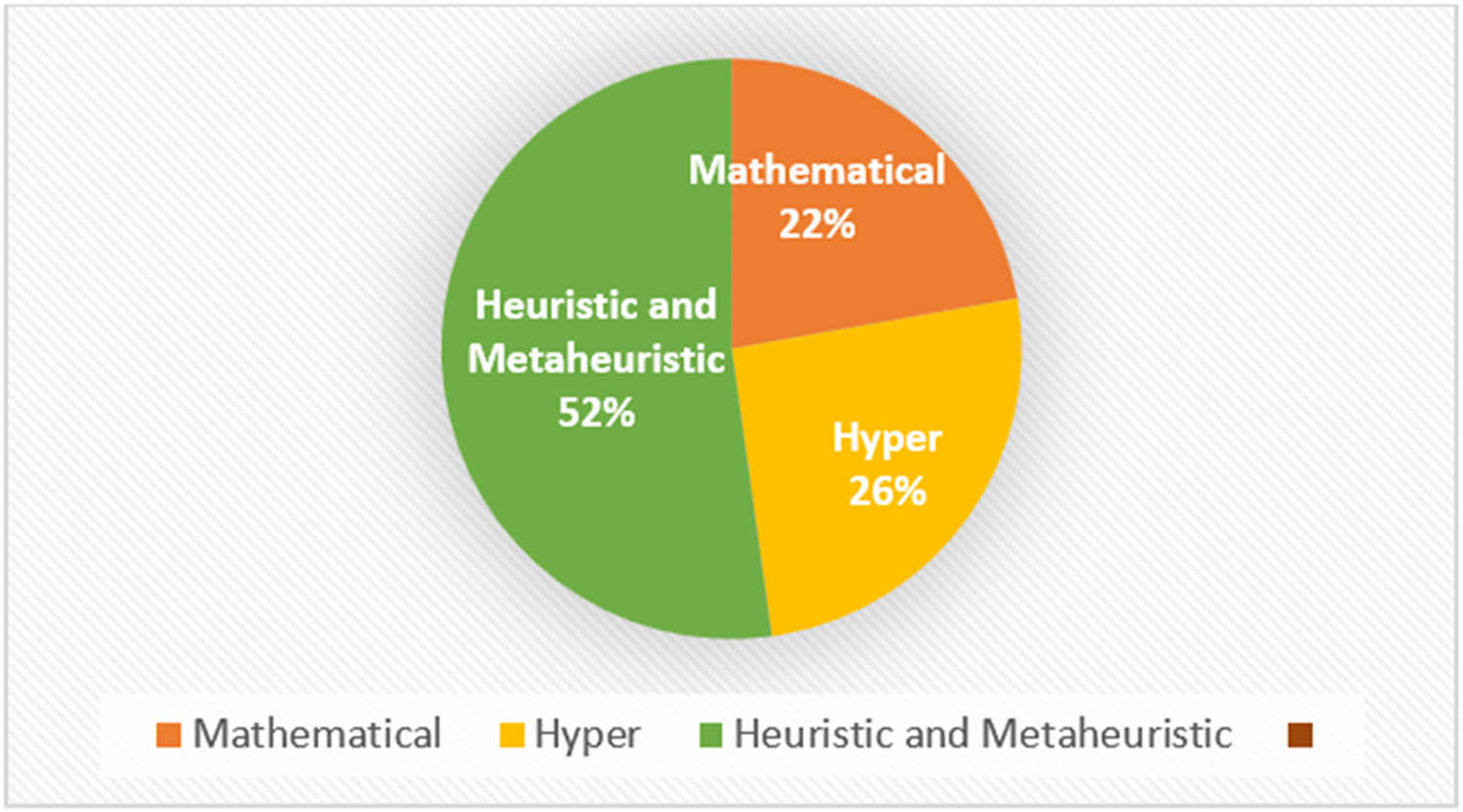

In this systematic literature review (SLR), the latest articles related to resource allocation and task scheduling in clouds have been reviewed. We have investigated and categorized a large number of related articles based on their objectives, characteristics, and simulation tools. By identifying and critically analyzing the shortcomings of current approaches, this SLR provides a roadmap for future research and development. Additionally, it highlights new trends and possible future paths in cloud computing resource management by synthesizing lessons from a variety of studies. By adhering to the preferred reporting items for systematic reviews and meta-analyses (PRISMA) guidelines and employing a rigorous selection process, this SLR aims to provide a high-quality, unbiased synthesis of the current state of research in this field. To guide this SLR and address key aspects of scheduling and allocation issues in cloud computing, the following research questions were formulated:

RQ1. What are the primary challenges of resource allocation and task scheduling in cloud computing environments?

RQ2. How do various optimization methods perform in dynamic cloud environments?

RQ3. What are the shortcomings and difficulties of the current task scheduling algorithms and resource allocation strategies?

RQ4. How do mathematical, heuristic, and hyper-heuristic methods compare in terms of efficiency and scalability for task scheduling in cloud environments?

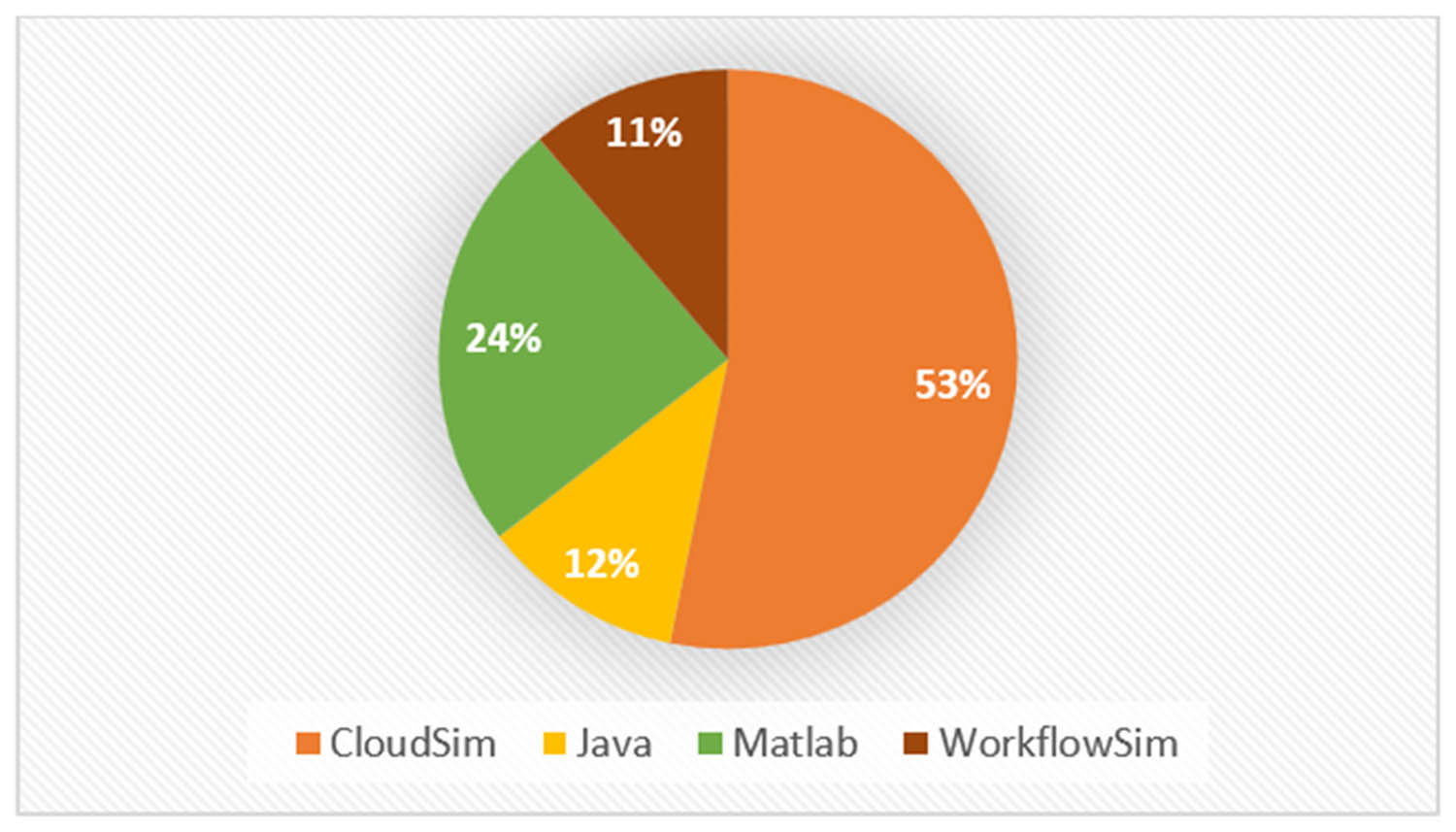

RQ5. Which experimental platforms or simulation tools are utilized to assess task scheduling and resource allocation strategies in cloud computing?

RQ6. What are the emerging trends and future directions in this area of research?

By addressing these questions, this SLR seeks to highlight key features and provide insights into future trends in resource allocation and task scheduling in cloud computing. It aims to offer a holistic overview of the most recent algorithms and approaches, which will aid future researchers in advancing work in this critical area of cloud computing.

This SLR seeks to tackle these significant gaps by providing a comprehensive and structured analysis of resource allocation and task scheduling strategies from 2019 to 2023. Specifically, this review contributes to the field in the following ways:

Conducting a structured review of resource allocation and task scheduling strategies from 2019 to 2023.

Introducing a new taxonomy that categorizes approaches into mathematical, heuristic, and hyper-heuristic methods.

Conducting an in-depth comparative analysis that evaluates methods across multiple performance dimensions, including efficiency, scalability, and adaptability.

Identifying and critically analyzing the shortcomings of current approaches, thus providing a roadmap for future research and development.

Synthesizing insights from a wide range of studies to highlight emerging trends and potential future directions in cloud computing resource management.

The study is structured as follows: Section 2 discusses related work. Section 3 outlines the research methodology. Section 4 discusses resource allocation and task scheduling in cloud computing. Section 5 reviews the current state of resource management approaches. Section 6 provides a discussion. Section 7 presents the study characteristics. Section 8 offers challenges and research gaps, and the final section concludes the study.

2 Related work

Resource allocation and task scheduling in cloud computing is a well-known NP-hard problem that has garnered significant attention from researchers. Despite extensive studies, it remains a compelling area of research due to the dynamic and complex nature of cloud environments [5]. Developing efficient scheduling solutions using existing optimization algorithms continues to be difficult, given the rapidly evolving demands and constraints of cloud computing [15]. This section evaluates the outcomes of previous reviews on optimization algorithms, emphasizing their strengths and weaknesses to provide a comprehensive understanding of their effectiveness in addressing this critical issue.

Jia et al. [16] conducted a systematic review of multi-tenancy scheduling approaches in cloud platforms, examining scheduling policies, cloud provisioning, and deployment. Their classification system encompasses static, complex, offline, online, preemptive, and non-preemptive scheduling algorithms. However, the study lacks specific details on the limitations of the reviewed scheduling approaches on multi-tenancy cloud platforms. Negi et al. [17] conducted a comprehensive analysis of cloud load-balancing techniques using computational paradigms. The study categorizes methods based on soft computing approaches including fuzzy systems, machine learning, neural networks, and bio-inspired computing, evaluating their effectiveness at both virtual machine (VM) and physical machine levels. However, the study lacks a detailed analysis of the performance metrics for evaluating the effectiveness of soft computing techniques in achieving dynamic load balancing.

A significant systematic review of MH algorithms for cloud task scheduling was presented by Houssein et al. [18]. The study addresses resource utilization challenges and offers a valuable categorization based on scheduling problem nature, objectives, task-resource mapping schemes, and constraints, while highlighting the importance of efficient task distribution across limited resources. Another significant survey on task scheduling techniques in cloud computing was presented by Panwar et al. [11]. The study explores energy-saving strategies for cloud data centers, addressing the pressing challenges of high electricity consumption and environmental impact. The researchers systematically evaluated various approaches, including machine learning, heuristics, MHs, and statistical methods, to enhance resource management and energy utilization. Their findings demonstrated notable energy savings compared to conventional techniques. However, while the study contributes value to energy efficiency research, it does not comprehensively address other crucial aspects of cloud data center operations, such as security measures, system scalability, and service reliability.

Zhou et al. [19] provide a comparative analysis of MH load-balancing algorithms in cloud computing, evaluating performance factors including makespan, degree of imbalance, data center processing time, flow time, response time, and resource utilization. The study examines challenges in integrating and improving MH methods for load balancing, such as reconfiguring transformation operators, extracting features from input workloads, and implementing hybrid models. However, the study lacks a detailed discussion of specific challenges and limitations for each analyzed algorithm. It also omits critical analysis of potential drawbacks in applying MH methods to load balancing, particularly regarding computational complexity and convergence issues.

Zhou et al. [19] highlighted the significance of task scheduling algorithms in cloud computing, with particular focus on the round robin (RR) algorithm and its enhancements. The RR algorithm is commonly used due to its simplicity and time-sharing capabilities. While it effectively addresses resource utilization and task scheduling challenges, the RR algorithm may still face performance optimization issues, especially in dynamic cloud computing environments. Arunarani et al. [20] presented a comprehensive review of nature-inspired scheduling approaches, evaluating their effectiveness based on qualitative Quality of Service (QoS) parameters and simulation tools in cloud environments. Algorithms such as Henry gas solubility optimization and cat swarm optimization have demonstrated effectiveness in balancing exploitation and exploration while avoiding local optima. These approaches optimize various criteria such as makespan and resource utilization, though certain limitations exist in specific cloud computing scenarios.

Subsequent research [19,21–24] covers various aspects of cloud computing, including priority-based scheduling, MH load balancing, job management techniques, and resource management. Significant contributions include comparative analyses of performance metrics, taxonomies of resource allocation approaches, and evaluations of hybrid algorithms. Collectively, these studies enhance our understanding of cloud computing optimization while highlighting areas needing further research, such as specific technical limitations, computational complexity considerations, and integration challenges.

This review is distinguished by its methodological rigor, time-bound focus, comprehensive classification, and attention to multi-objective optimization in current cloud computing challenges. The focused timeframe (2019–2023) provides current insights into contemporary cloud computing challenges, while the classification of methods into mathematical, heuristic, and hyper-heuristic categories provides a more complete theoretical framework than previous reviews, which often focus on single aspects such as nature-inspired approaches or energy efficiency. In contrast to previous studies that address single objectives, our focus on balancing conflicting objectives, such as energy efficiency and load balancing, better reflects the complexity of real-world scenarios. Furthermore, this review integrates modern challenges, emphasizing dynamic and heterogeneous conditions.

3 Research methodology

This SLR employs an exploratory and descriptive procedure to meet the research objectives and address the questions raised in the introduction. This approach facilitates an understanding of the state of the art in the field, identifies the limitations of previous studies, and highlights recommendations for future research. This SLR was systematically carried out in conformity with the PRISMA 2020 statement and established methodological guidelines [25,26]. Its reporting covers methods and materials, inclusion and exclusion criteria, search strategies, information sources, risk of bias assessment, data management, and the PRISMA flow chart that represents the overall methodological design.

3.1 Inclusion and exclusion criteria

In this step, a systematic and comprehensive review of the relevant literature was conducted to determine the inclusion and exclusion criteria for this study. The inclusion criteria define the articles selected for examination in the systematic review. This selection is based on a strategic relationship with keywords such as “cloud computing,” “resource allocation,” and “task scheduling.” The inclusion criteria encompass peer-reviewed journal articles published from 2019 to 2023 that focus on resource allocation and task scheduling in cloud computing, utilizing mathematical, heuristic, or hyper-heuristic methods.

The purpose of the exclusion criteria was to exclude studies misaligned with the research objectives during the SLR process, as guided by the PRISMA 2020 statement. The first step in the research selection process was to search for literature sources. After removing duplicates, a three-iteration screening and filtering process was conducted:

First iteration: Articles were excluded according to their titles and abstracts if they did not address the predefined research topics.

Second iteration: Articles were excluded if they were inaccessible due to restricted access, closed-access journals, or other barriers.

Third iteration: Articles were excluded if they did not focus on resource allocation and task scheduling in a cloud computing environment or did not adopt one of the specified approaches: mathematical, heuristic, or hyper-heuristic.

3.2 Data selection source

A search for articles was conducted in January 2024. Following the PRISMA statement for literature reviews, it is crucial to specify the information sources and the inclusion and exclusion criteria used for the analysis. This SLR examined all publications available in relevant databases from 2019 to 2023. A systematic and comprehensive search was performed in five academic databases: ScienceDirect, Web of Science, Springer, IEEE Xplore, and Scopus, focusing on research publications related to resource allocation and scheduling in cloud computing. Publications from 2019 to 2023 were reviewed and analyzed; 2024 was excluded because the year was incomplete at the time of the search. Accordingly, the filter “PUBYEAR > 2018 AND PUBYEAR < 2024” was used to restrict the results to the relevant publications. We also utilized search options to exclude book chapters, blogs, theses, and other non-article formats, prioritizing peer-reviewed journal articles in English to ensure the quality and relevance of our findings.

3.3 Data search strategy

We searched for resource allocation and scheduling methods in cloud environments. We created a consistent search strategy using predefined search terms based on the eligibility criteria to ensure that the studies retrieved from the five selected databases were relevant to the research objectives and that the queries were compatible with each database’s search interface. Searches were conducted across the five digital libraries using the search string “cloud computing” OR “cloud environment” together with the keywords “resource allocation” and “task scheduling.” We also used the keywords “Mathematical approach” OR “Heuristic approach” OR “Hyper Heuristic approach” to narrow the scope of the searches, as shown in Table 1.

Query in digital libraries used in this study

| Digital library | Query/search terms | No. of articles |
|---|---|---|
| ScienceDirect | (“cloud computing” OR “cloud environment”) AND ((“resource allocation” AND “task scheduling”) OR (“resource allocation” OR “task scheduling”)) AND (“Mathematical approach” OR “Heuristic approach” OR “Hyper Heuristic approach”) | 201 |
| Web of Science | TS = (“cloud computing” OR “cloud environment”) AND TS = ((“resource allocation” AND “task scheduling”) OR (“resource allocation” OR “task scheduling”)) AND TS = (“Mathematical approach” OR “Heuristic approach” OR “Hyper Heuristic approach”) | 21 |
| Springer | (“cloud computing” OR “cloud environment”) AND ((“resource allocation” AND “task scheduling”) OR (“resource allocation” OR “task scheduling”)) AND (“Mathematical approach” OR “Heuristic approach” OR “Hyper Heuristic approach”) | 127 |
| IEEE | (cloud computing) AND ((resource allocation AND task scheduling) OR (resource allocation OR task scheduling)) AND (Mathematical approach OR Heuristic approach OR Hyper Heuristic approach) | 205 |
| Scopus | (“cloud computing” OR “cloud environment”) AND ((“resource allocation” AND “task scheduling”) OR (“resource allocation” OR “task scheduling”)) AND (“Mathematical approach” OR “Heuristic approach” OR “Hyper Heuristic approach”) | 367 |
| Total number of articles collected in the initial search | | 921 |
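The queries in Table 1 share one structure: a context group, a topic group, and an approach group joined by AND. The following is a minimal sketch of how such a string could be assembled and how the reported hit counts add up. The helper names (`or_group`, `build_query`, `in_review_window`) are hypothetical, and the output uses plain ASCII quotes rather than any database's exact syntax. The sketch writes the topic group as a single OR, since (A AND B) OR (A OR B) is logically equivalent to A OR B.

```python
def or_group(terms):
    """Join quoted terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(context, topic, approach):
    """Combine the three term groups with AND (illustrative, not database-specific)."""
    return " AND ".join(or_group(group) for group in (context, topic, approach))


def in_review_window(year):
    """Mirror the year filter PUBYEAR > 2018 AND PUBYEAR < 2024."""
    return 2018 < year < 2024


CONTEXT = ["cloud computing", "cloud environment"]
TOPIC = ["resource allocation", "task scheduling"]
APPROACH = ["Mathematical approach", "Heuristic approach", "Hyper Heuristic approach"]

query = build_query(CONTEXT, TOPIC, APPROACH)
print(query)

# Hit counts per digital library, as reported in Table 1.
hits = {
    "ScienceDirect": 201,
    "Web of Science": 21,
    "Springer": 127,
    "IEEE": 205,
    "Scopus": 367,
}
total = sum(hits.values())
print(total)
```

Each database would still need its own field codes (for example, the `TS =` prefix shown for Web of Science), which this sketch does not attempt to reproduce.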

3.4 Risk of bias assessment

A well-defined and consistent procedure was implemented to evaluate the risk of bias in the studies included in this review. Each author contributed to the data collection and risk of bias assessment to ensure the accuracy and integrity of the results. An automated tool based on Microsoft Excel was used to facilitate an impartial and consistent evaluation. The authors independently evaluated each article and then worked together to resolve any difficulties or disagreements until consensus was reached.

To ensure the reliability of the results, transparent and consistent criteria were applied in assessing the risk of bias. However, it is important to note that the study was limited to articles retrieved from five databases: ScienceDirect, Web of Science, Springer, IEEE Xplore, and Scopus. This limitation may have introduced bias by potentially excluding relevant research available in other databases or sources related to task scheduling and resource allocation. Despite this constraint, efforts were made to minimize bias by adhering to strict inclusion and exclusion criteria and undertaking a comprehensive search across the selected databases.

3.5 Data management and quality control

Using the search functions available in each database, 921 empirical studies on scheduling and resource allocation in cloud computing were initially retrieved. Among these, 201 studies were from ScienceDirect, 21 from Web of Science, 127 from Springer, 205 from IEEE Xplore, and 367 from Scopus. Because the databases export records in different formats, a data homogenization procedure was performed on the studies in Microsoft Excel to unify the format. The same tool was then used to analyze the data and apply the exclusion criteria in order to address the research questions.

3.6 Data selection process

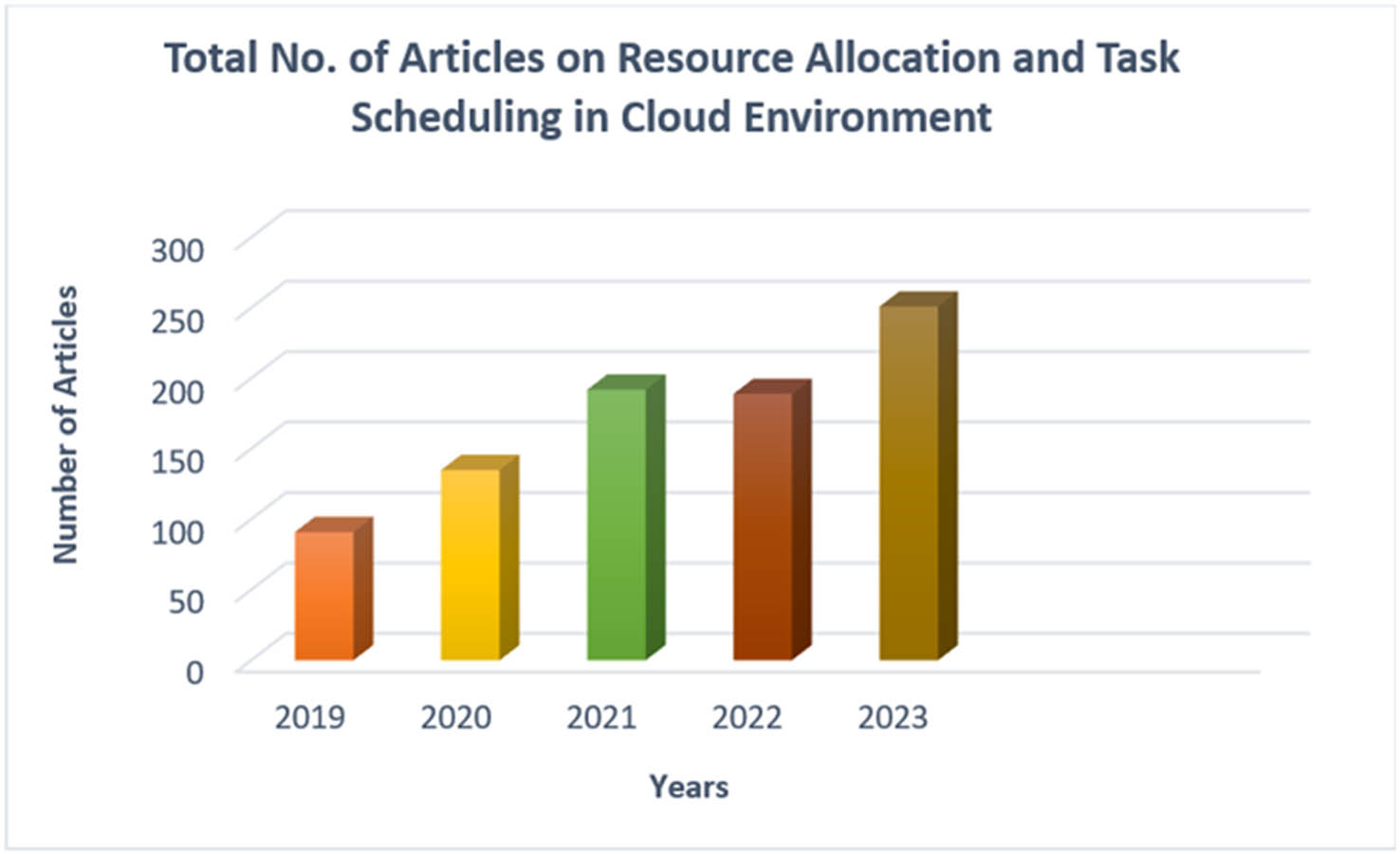

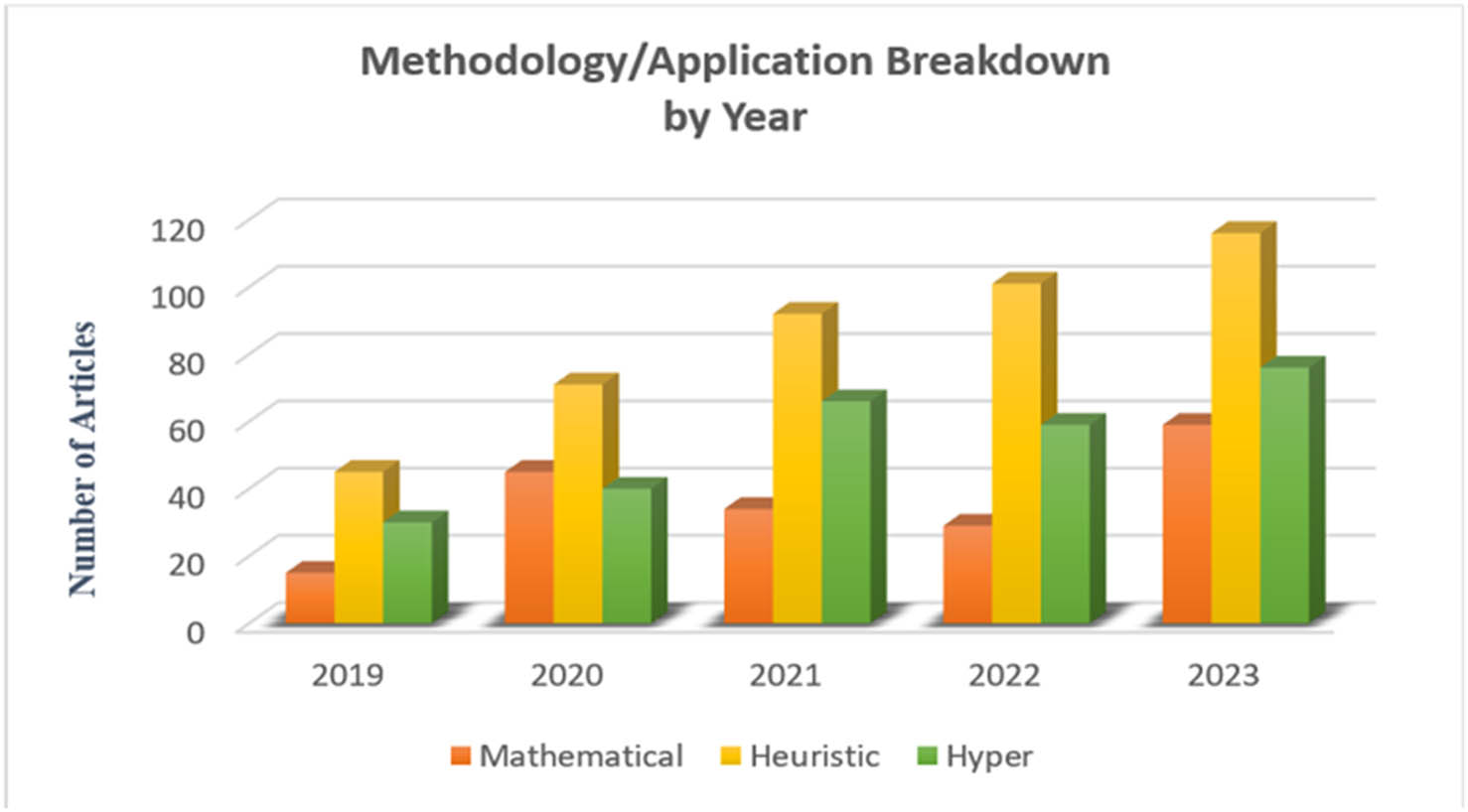

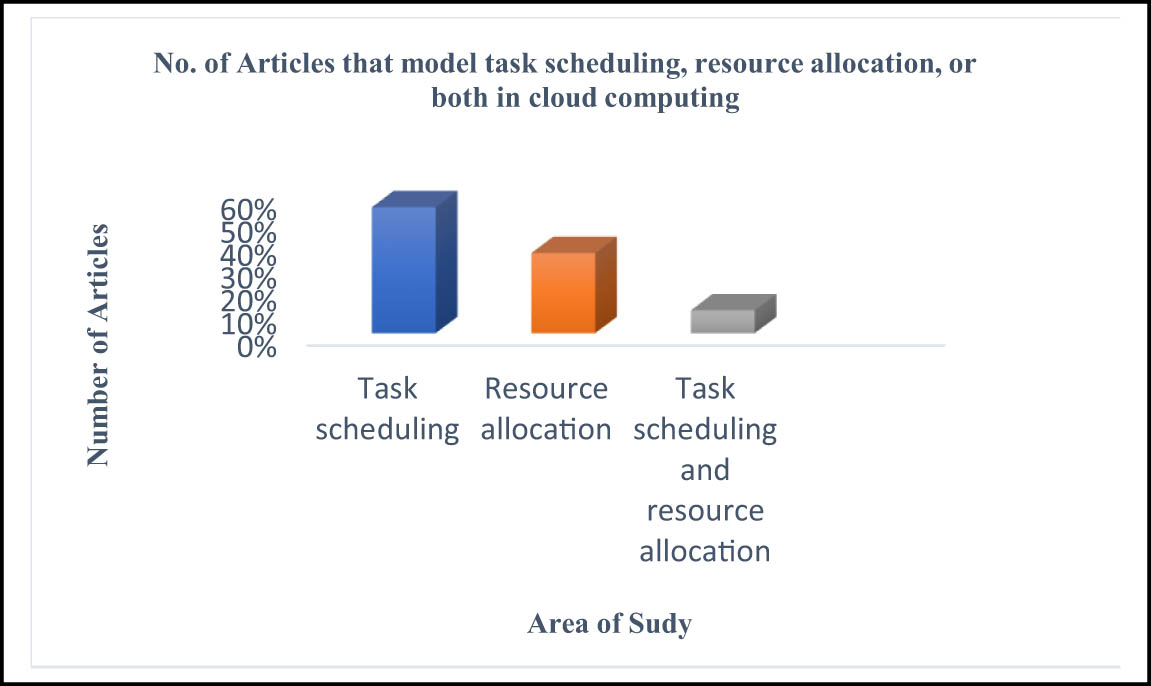

The initial search of the scientific digital libraries returned 921 articles. Eighty-seven duplicate articles were eliminated, reducing the number to 834. Afterward, 368 articles were excluded based on title and abstract criteria. Furthermore, 282 documents were inaccessible due to publisher restrictions, required institutional subscriptions, or limited online availability. Finally, after reviewing the remaining 184 articles, 84 were excluded based on full-text criteria, leaving 100 studies included in this review. Figure 1 shows the PRISMA flow diagram. Figure 2 shows the number of publications per year on resource allocation and task scheduling in cloud environments. Figure 3 illustrates the contribution of each methodology/application to the publications over time.
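The selection counts above can be checked with a few lines of arithmetic. The sketch below is only a bookkeeping aid; the function name `prisma_flow` and the stage labels are illustrative, while the numbers are those reported in this section.

```python
def prisma_flow(initial, stages):
    """Return (label, remaining) after each exclusion stage is applied in order."""
    remaining = initial
    trail = []
    for label, removed in stages:
        remaining -= removed
        trail.append((label, remaining))
    return trail


# Counts as reported in the selection process.
initial = 921
stages = [
    ("duplicates removed", 87),
    ("excluded on title and abstract", 368),
    ("inaccessible full text", 282),
    ("excluded on full-text criteria", 84),
]

trail = prisma_flow(initial, stages)
for label, remaining in trail:
    print(f"{label}: {remaining} remaining")
```

The intermediate value after title and abstract screening (466) is not stated in the text but follows from the reported figures, and the final value matches the 100 included studies.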

The PRISMA flow diagram (created by the authors).

Rate of publications on resource allocation and task scheduling from 2019 to 2023 according to our systematic review (created by the authors).

The contribution of each methodology/application to the publications over time (created by the authors).

3.7 Impact of study quality and bias on review results

The quality of the studies included in this SLR significantly impacts the reliability and validity of the review results. The reviewed studies exhibit variability in design and methodological rigor, with many relying heavily on simulation-based approaches such as CloudSim, which introduces simulation bias and limits the generalizability of the findings to real-world cloud computing environments. Additionally, the frequent use of small synthetic datasets, which may not reflect the diversity and variability of actual cloud workloads, results in dataset bias and potentially skews algorithm performance evaluations.

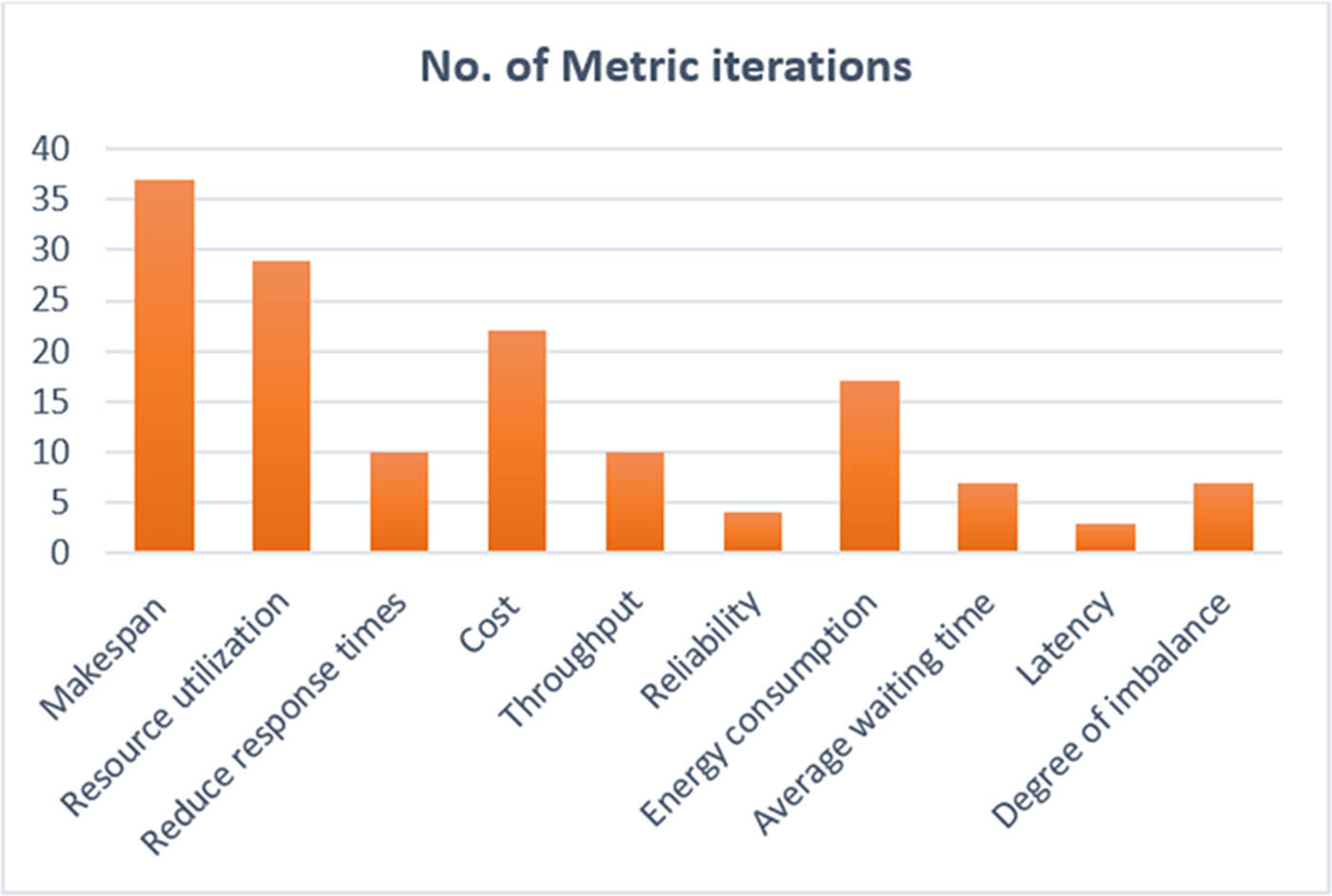

Metric selection bias is evident in the emphasis on traditional metrics such as makespan at the expense of other essential aspects such as user satisfaction, security, dependability, or energy efficiency, which restricts a thorough understanding of algorithm performance. The absence of common evaluation standards and benchmarks makes it difficult to compare and synthesize findings across studies, further complicating the process of reaching consistent conclusions or recommendations. Supportive tools such as Microsoft Excel and Cloude make this analysis easier, but the authors’ verification remains necessary to guarantee the integrity and quality of the data. Overall, the review underscores the need for more rigorous study designs, more representative datasets, and diverse evaluation metrics to improve the quality and relevance of studies on scheduling and allocation, ensuring that findings are reliable and applicable to real-world scenarios.

4 Resource allocation and task scheduling in cloud computing

In light of the rapid growth and evolution of cloud computing, the efficient allocation of resources and the judicious scheduling of tasks have become critical to improving performance and reducing costs. As organizations increasingly rely on cloud infrastructures to meet their computing requirements, understanding the intricacies of resource management has become essential [27]. These processes are fundamental to optimizing the utilization of cloud resources while simultaneously meeting user requirements and provider objectives [28].

4.1 Definitions and importance

Resource allocation denotes the distribution of computing resources (such as CPU, memory, and storage) among various tasks or applications in a cloud-based system [29]. Task scheduling involves the process of assigning these tasks to the allocated resources in an optimal manner. Effective resource allocation and task scheduling are essential for various reasons [30]:

Performance optimization: Proper resource allocation and task scheduling can significantly improve system performance and reduce task completion times.

Cost efficiency: Efficient resource utilization helps minimize operational costs for cloud providers and users.

QoS: It ensures adherence to service level agreements (SLAs), guaranteeing consistency, timeliness, and reliability.

Energy efficiency: Optimized resource usage can minimize energy consumption in cloud data centers.

4.2 Challenges in cloud resource management

The complexity of resource allocation and task scheduling in cloud environments stems from several factors [31]:

Heterogeneity: Cloud resources and user tasks are diverse, making finding optimal matches challenging.

Dynamism: Workloads and resource availability in cloud environments change constantly.

Conflicting objectives: Cloud providers aim to maximize profits and resource utilization, while users seek to minimize costs and execution times.

NP-hard nature: The resource allocation and scheduling problem in cloud computing is NP-hard, making it computationally intensive to find optimal solutions.
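To make the NP-hard point concrete, consider a deliberately simplified model that is an assumption of this sketch rather than a formulation taken from the reviewed studies: independent tasks with known lengths are mapped onto identical VMs, and the goal is to minimize makespan. With n tasks and m VMs there are m^n possible mappings, so exhaustive search is only feasible for toy instances. The sketch compares exhaustive search with a simple greedy rule (longest task first, placed on the least loaded VM); all function names are illustrative.

```python
from itertools import product


def makespan(assignment, lengths, n_vms):
    """Largest total load on any VM; assignment[i] is the VM index of task i."""
    loads = [0] * n_vms
    for task, vm in enumerate(assignment):
        loads[vm] += lengths[task]
    return max(loads)


def brute_force(lengths, n_vms):
    """Evaluate all n_vms ** len(lengths) mappings and return the best makespan."""
    mappings = product(range(n_vms), repeat=len(lengths))
    return min(makespan(a, lengths, n_vms) for a in mappings)


def greedy_lpt(lengths, n_vms):
    """Heuristic: take tasks longest first and place each on the least loaded VM."""
    loads = [0] * n_vms
    for length in sorted(lengths, reverse=True):
        loads[loads.index(min(loads))] += length
    return max(loads)


lengths = [3, 3, 2, 2, 2]   # task lengths in arbitrary time units
n_vms = 2

search_space = n_vms ** len(lengths)
optimal = brute_force(lengths, n_vms)
heuristic = greedy_lpt(lengths, n_vms)
print(search_space, optimal, heuristic)
```

On this five-task instance the search space has only 32 mappings, and exhaustive search finds a makespan of 6 while the greedy rule yields 7. The gap is small here, but the search space grows exponentially with the number of tasks, which is why the heuristic and hyper-heuristic methods surveyed in this review trade optimality for tractable running time.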

4.3 Key entities and their objectives

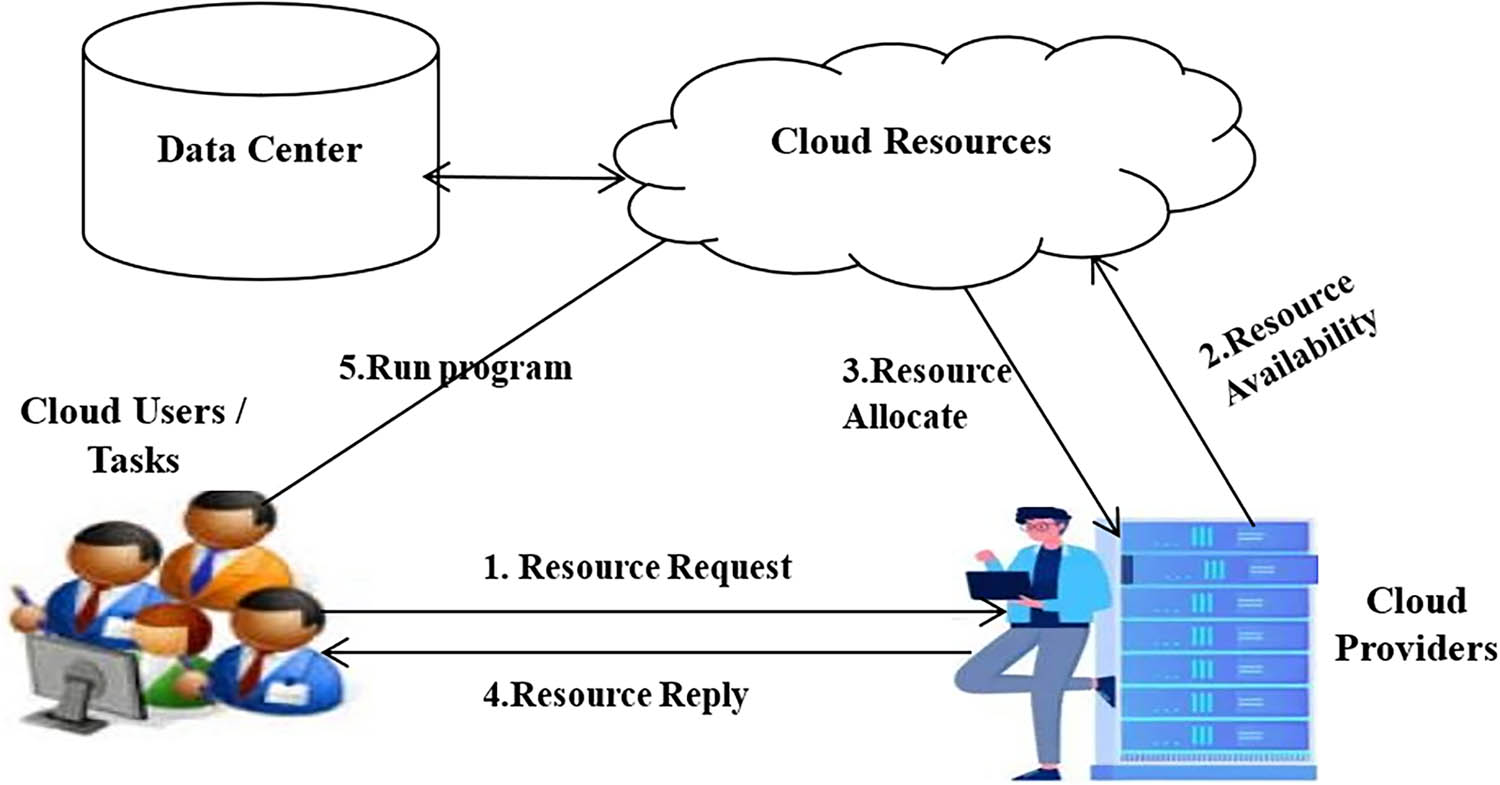

Cloud computing involves two primary entities [32], whose basic interaction is illustrated in Figure 4:

Cloud providers:

Establish and maintain cloud data centers

Provide computing resources on a rental basis

Aim to maximize profit through optimal resource utilization

Cloud users (consumers):

Utilize cloud resources for their applications

Seek to run applications within set time and budget constraints

The basic interaction flow between cloud entities (created by the authors).

The main interaction proceeds in the following steps [33,34]: A cloud user submits a request (task) for a specific resource to the cloud provider. Upon receiving the request, the cloud provider verifies resource availability. If resources are available, they are allocated to the requesting user, who then utilizes the resources to execute the desired activity or application. When users no longer require the resources, they release them [35]. The provider then schedules and allocates the resources to other requesting clients. Resource allocation and task scheduling in cloud computing is a widely studied optimization problem, making it a highly appealing research area. However, achieving efficient scheduling using state-of-the-art optimization algorithms remains challenging due to the inherent complexity and dynamic nature of cloud computing [5].
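The request, verify, allocate, and release steps described above can be sketched as a toy model. Everything in this sketch is an illustrative assumption: the `Provider` class, a single pool of identical resource units, and first-in-first-out handling of requests that cannot be served immediately. Real providers apply far richer policies.

```python
from collections import deque


class Provider:
    """Toy cloud provider with a fixed pool of identical resource units."""

    def __init__(self, capacity):
        self.free = capacity
        self.allocated = {}      # user -> units currently held
        self.waiting = deque()   # requests that could not be served yet

    def request(self, user, units):
        """Verify availability; allocate if possible, otherwise queue the request."""
        if units <= self.free:
            self.free -= units
            self.allocated[user] = self.allocated.get(user, 0) + units
            return True
        self.waiting.append((user, units))
        return False

    def release(self, user):
        """Return a user's units to the pool, then serve waiting requests in FIFO order."""
        self.free += self.allocated.pop(user, 0)
        while self.waiting and self.waiting[0][1] <= self.free:
            next_user, units = self.waiting.popleft()
            self.request(next_user, units)


provider = Provider(capacity=4)
first = provider.request("alice", 3)    # enough free units, so allocated
second = provider.request("bob", 2)     # only 1 unit free, so queued
provider.release("alice")               # frees 3 units; bob's request is then served
print(first, second, provider.allocated, provider.free)
```

The queueing policy is the part a scheduling algorithm would replace: the approaches reviewed in this SLR differ mainly in how they decide which waiting request receives which resource.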

Allocation strategies and scheduling methods in cloud computing environments typically optimize various parameters, which can be broadly categorized into consumer-centric and provider-centric goals [36]. Consumer-centric objectives focus on minimizing execution time (makespan), reducing costs, and meeting deadline constraints, all aimed at enhancing user satisfaction and operational efficiency [37]. On the other hand, provider-centric goals emphasize maximizing resource utilization, balancing workload across available resources, and minimizing energy consumption [38]. Achieving these goals is essential for ensuring operational efficiency and profitability. The primary challenge lies in balancing these often conflicting objectives to develop effective and holistic solutions [39].
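A small numerical sketch can illustrate how these objectives pull in different directions. The model below is an assumption made for illustration only: task lengths are given in million instructions (MI), VM speeds in MIPS, and each VM charges a flat price per second of busy time. The function name `schedule_metrics` and all values are hypothetical.

```python
def schedule_metrics(assignment, lengths, speeds, price_per_sec):
    """Compute makespan, cost, and average utilization for one schedule.

    assignment[i] is the VM index of task i. Assumes at least one task,
    so that the makespan is non-zero.
    """
    n_vms = len(speeds)
    busy = [0.0] * n_vms
    for task, vm in enumerate(assignment):
        busy[vm] += lengths[task] / speeds[vm]
    makespan = max(busy)                                   # consumer-centric: finish time
    cost = sum(b * p for b, p in zip(busy, price_per_sec))  # consumer-centric: money spent
    utilization = sum(busy) / (n_vms * makespan)           # provider-centric: busy fraction
    return makespan, cost, utilization


lengths = [400, 200, 200]   # task lengths in MI (illustrative)
speeds = [100, 50]          # VM 0 is fast, VM 1 is slow (MIPS)
price_per_sec = [3, 1]      # the fast VM costs more per second

all_on_slow = schedule_metrics([1, 1, 1], lengths, speeds, price_per_sec)
spread = schedule_metrics([0, 1, 0], lengths, speeds, price_per_sec)
print(all_on_slow)
print(spread)
```

In this example, placing every task on the slow VM gives the lowest cost (16) but the longest makespan (16 s) and only 50% utilization, whereas spreading the tasks cuts the makespan to 6 s and raises utilization at a higher cost (22). No single schedule is best on every metric, which is the balancing problem the paragraph above describes.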

The reviewed literature encompasses several critical subdomains and application scenarios within resource allocation and task scheduling in cloud computing. Edge computing, a prominent area, focuses on latency-sensitive applications such as Internet of Things (IoT) networks and real-time analytics, emphasizing reduced latency and efficient scheduling in resource-constrained environments [40]. Real-time applications, including video streaming and live data processing, require strategies that optimize response times and ensure consistent service under dynamic workloads [41]. Big data processing, essential for data mining and large-scale analytics, prioritizes fault tolerance and throughput across distributed systems [42]. Energy-efficient cloud systems target sustainability by minimizing energy consumption while balancing performance metrics such as makespan and resource utilization, especially in data centers and containerized environments [43]. Finally, hybrid and multi-cloud environments pose challenges in inter-cloud resource scheduling and load balancing, with strategies focusing on cost optimization, fault tolerance, and workload distribution across heterogeneous infrastructures [30]. These diverse subdomains highlight the breadth and complexity of resource management challenges in cloud computing, providing valuable insights for targeted improvements in future research.

4.4 Impact of IoT, AI, and quantum computing on resource allocation and scheduling

The integration of cloud computing with emerging technologies such as IoT, AI, and quantum computing is significantly influencing resource allocation and scheduling processes. IoT devices generate massive amounts of data that need to be processed and stored efficiently. Effective resource allocation and scheduling are crucial for handling this data deluge [5,40]. Techniques such as fog computing and edge computing are employed to process data closer to the source, emphasizing reduced latency and efficient scheduling in resource-constrained environments [44]. Research has shown that task scheduling mechanisms significantly influence resource allocation in IoT environments.

AI plays a significant role in optimizing resource allocation and scheduling. AI algorithms can predict workload patterns, automate resource provisioning, and optimize task scheduling to improve efficiency and reduce costs. For instance, AI can dynamically allocate resources based on real-time demand, ensuring optimal utilization. Furthermore, AI enhances automation in task scheduling, leading to more streamlined and cost-effective resource management [14].

Quantum computing introduces new paradigms in resource management, with the potential to solve NP-hard optimization problems in resource scheduling more efficiently than classical methods. Quantum cloud platforms allow users to access quantum resources without needing specialized hardware [41]. Quantum computing also enables advanced simulation models for demand prediction and motivates quantum-inspired approaches for near-optimal resource management. At the same time, efficient scheduling and resource management are essential to handle the unique characteristics of quantum computations, such as qubit fidelity and queuing times.

5 Current state of resource management approaches

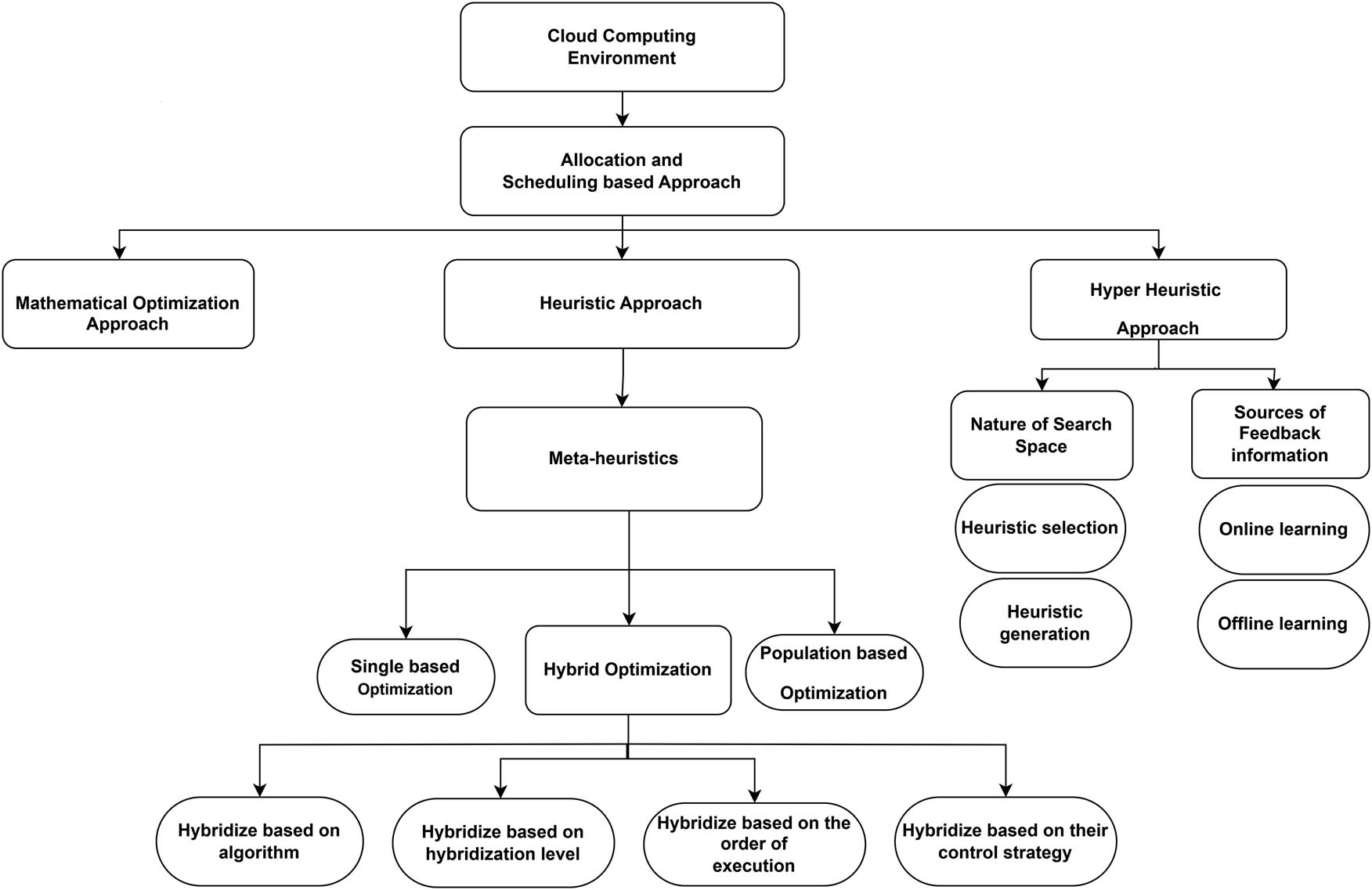

Allocation strategies and scheduling methods in cloud computing utilize a variety of approaches to meet consumer requirements effectively [45]. As the number of user requests increases, efficient scheduling becomes crucial, because poor scheduling can lead to significant performance degradation. An effective task scheduler must be capable of adapting to diverse scenarios and task types [46,47]. Existing optimization algorithms address the inherent complexity of these problems but often prioritize exploitation over exploration, limiting their ability to discover optimal solutions [48,49]. This section examines the performance of optimization algorithms, including mathematical, heuristic, and hyper-heuristic approaches, with a particular emphasis on the advantages and limitations of MHs. Figure 5 illustrates a new taxonomy of methods, categorizing them based on their underlying approaches.

A new taxonomy diagram representing categorization of approaches based on their methodologies (created by the authors).

5.1 Mathematical approach

The mathematical approach aims to find optimal or near-optimal solutions by formulating the problem as a mathematical model and solving it using optimization techniques, although such methods may require more computational resources and time [50]. The most popular examples are integer linear programming (ILP) [51], nonlinear programming [51], dynamic programming [52], and game theory-based approaches [52].

These techniques have been applied in various fields, such as AI, operations research, engineering, and astronomy, owing to their ability to provide structured solutions to many complex problems [53]. For example, ILP is effective in scenarios where decisions are discrete, such as in resource allocation and scheduling problems. Nonlinear programming, on the other hand, is used when the relationships between variables are not linear [54].
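To make the discrete-decision point concrete, the following is a minimal sketch, not taken from any of the reviewed studies, of task-to-VM assignment posed as a discrete optimization problem with makespan as the objective. It enumerates every assignment rather than calling an ILP solver, which is only feasible for toy instances; the function name `optimal_assignment`, the task lengths, and the VM speeds are illustrative assumptions.

```python
from itertools import product


def optimal_assignment(task_lengths, vm_speeds):
    """Return the task-to-VM assignment with minimum makespan by exhaustive search.

    Each assignment is a tuple in which position i holds the index of the VM
    that runs task i. This mirrors the binary decision variables of an ILP
    formulation, but explores them by brute force (exponential in task count).
    """
    n_vms = len(vm_speeds)
    best_assignment, best_makespan = None, float("inf")
    for assignment in product(range(n_vms), repeat=len(task_lengths)):
        finish = [0.0] * n_vms
        for task, vm in enumerate(assignment):
            # Execution time of a task is its length divided by the VM speed.
            finish[vm] += task_lengths[task] / vm_speeds[vm]
        makespan = max(finish)
        if makespan < best_makespan:
            best_assignment, best_makespan = assignment, makespan
    return best_assignment, best_makespan


# Hypothetical instance: four tasks on two identical VMs.
assignment, makespan = optimal_assignment([4, 3, 2, 1], [1, 1])
print(assignment, makespan)
```

Because the search space grows as the number of VMs raised to the number of tasks, realistic instances rely on a dedicated solver or on the heuristic methods discussed later in this section.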

Dynamic programming is particularly used in scenarios that involve sequential decision-making, such as inventory management and shortest path problems, because it is effective for problems that can be broken down into simpler subproblems. Game theory-based methods provide insights into competitive situations where the outcome depends on the actions of multiple agents, and thus provide strategic frameworks for negotiations, pricing strategies, and conflict resolution [51]. To strike a balance between computing efficiency and optimality for addressing real-world issues, researchers continue to explore hybrid approaches that blend heuristic strategies with mathematical rigor [55].

5.1.1 Review of mathematical approach

This section reviews articles on resource allocation and task scheduling algorithms that are based on the mathematical approach; Table 2 lists the algorithms used in cloud computing environments.

Mathematical algorithms used in resource allocation and task scheduling

| Algorithms/approaches | Simulation/tool | Objective(s)/key performance metrics | Quantitative results | Sample size (no. of cloud nodes, tasks, or datasets) | Main focus area | Limitations |
|---|---|---|---|---|---|---|
| Stackelberg game model for dynamic pricing [51] | CloudSim | Maximizes revenue and resource utilization | Surpassed fixed and auction-based pricing in resource utilization and revenue | Three SaaS cloud providers and one IaaS provider, with three service types, and one type of VM instance | Resource allocation | The study runs under a simplified scenario and does not accurately capture the complexity of real-world cloud computing infrastructures |
| MRFS [52] | CloudSim | Utility maximization, resource dominance minimization | Improved solution quality, maximized resource use, and reduced server count | Three users distributed across three servers, each with a varying VM request profile | Resource allocation | Specific data used not specified |
| ILP [57] | MATLAB | Task completion time and payoff level | The proposed method outperformed the RR method in task completion time and reward | IoTcloudServe@TEIN platform (two cloud scenarios) | Task scheduling | Time-series structure of requests not addressed |
| Enhanced ordinal optimization with linear regression [58] | CloudSim | Makespan minimization | Reached the target minimum makespan, and narrowed the search space | Not specified | Task scheduling | No comparison with existing approaches |
| Lagrangian relaxation, mathematical programming [59] | Not specified | Energy consumption, task execution time, and resource utilization | Reduced energy consumption and achieved high performance | Not specified | Resource allocation and task scheduling | Trade-offs between energy efficiency and additional metrics not addressed |
| DCRNN [60] | CloudSim | Root-mean-square error, mean absolute percentage error | Root-mean-square error: 2.40%, mean absolute percentage error: 0.18% | PlanetLab CPU usage data | Resource allocation | Focus only on CPU utilization, ignoring other metrics |
| Linear programming (LP-WSC) [61] | Not specified | QoS parameters, cost, availability, reliability | Significant cost reduction and improved reliability compared to traditional methods | Amazon EC2 big dataset taken from personal cloud datasets | Resource allocation | No comparison with state-of-the-art methods |
| BB-BC model [62] | CloudSim | Cost and time | Exceeded the performance of bio-inspired, static, and dynamic methods | Not specified | Resource allocation | Limitations not discussed, data source not mentioned |
| Mixed set programming [63] | CloudSim | Resource allocation efficiency, collaboration efficiency | Improved efficiency in inter-enterprise collaboration | Not specified | Resource allocation | No comparison with existing approaches, no large-scale experiments |

Zhu et al. [51] proposed a dynamic pricing mechanism based on a Stackelberg game model to increase revenue for cloud providers of Infrastructure as a Service (IaaS) and Software as a Service (SaaS). The simulation results show that the proposed mechanism outperforms auction-based and fixed pricing schemes in terms of revenue maximization and resource utilization.

A multi-resource fair scheduling (MRFS) algorithm for heterogeneous cloud computing environments was presented by Hamzeh et al. [52]. The study aims to maximize each user’s utility on a specific server by reducing the number of dominant resources. MRFS was introduced as a solution to the resource scheduling problem in cloud computing and considers the fair and efficient allocation of resources to users. However, the study did not specify the data used; it focuses on introducing the MRFS algorithm and assessing its effectiveness.

Researchers presented a method for allocating resources to meet growing needs in cloud computing [56]. The study introduced a multi-objective optimization technique aimed at aligning resource performance with percentage distances and minimizing the number of physical servers utilized. The proposed method, RAA-PI-NSGAII, not only maximizes resource utilization and reduces solving time but also improves the quality and uniformity of the solution set distribution. However, the study does not adequately address the specific challenges or limitations encountered during the implementation of the proposed resource allocation algorithm, particularly in the context of increasing cloud computing demands.

A mathematical model to enhance the performance and efficiency of cloud computing by scheduling tasks within containers was introduced by Swatthong and Aswakul [57]. The model employs ILP to increase the average compensation of tasks while adhering to resource and demand constraints. The study evaluated the model using two cloud scenarios: an edge-core cluster and a peer-to-peer federated cloud. Results demonstrated that the proposed model outperformed the standard RR scheduling method in terms of task completion time and reward level. Additionally, the study underscores the importance of flexible and fine-grained task separation in cloud architecture. However, it does not account for the time-series nature of the requests, which could impact the model’s ability to handle maximum load efficiently.

Yadav and Mishra [58] proposed an enhanced ordinal optimization method paired with linear regression to improve task scheduling in cloud computing and reduce the makespan. This method narrows down the search space for scheduling and efficiently assigns the workload to the best schedule. Additionally, it forecasts future dynamic workload to achieve a target minimum makespan. However, there is no comparison with existing approaches to validate the effectiveness of the proposed technique.

Tai et al. [59] proposed an enhanced algorithm for managing computing resources and energy consumption in heterogeneous cloud computing centers. The algorithm considers factors such as energy usage, task scheduling, and execution time, and employs Lagrangian relaxation and mathematical programming to support high-performance and energy-efficient cloud computing facilities. However, the study does not address the theoretical trade-offs between energy efficiency and other performance metrics, such as task execution time or resource utilization.

Al-Asaly et al. [60] presented a self-sufficient, intelligent workload prediction technique employing a diffusion convolutional recurrent neural network (DCRNN) model for cloud resource allocation. The objective is to enhance prediction precision and reduce the gap between forecasted and real workloads in cloud computing setups. Tested on real PlanetLab CPU usage data, the model surpassed other deep learning models, achieving a root-mean-square error of 2.40% and a mean absolute percentage error of 0.18%. However, the study uses only CPU utilization as the input to the prediction model and ignores other metrics or factors such as memory consumption or network traffic.
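The two error measures reported above can be computed as in the following sketch. It uses the standard textbook definitions of root-mean-square error and mean absolute percentage error, which may differ in normalization from the exact variants used in [60]; the function names and the sample values are illustrative assumptions, not data from that study.

```python
import math


def rmse(actual, predicted):
    """Root-mean-square error between two equal-length sequences."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Assumes every actual value is nonzero; otherwise the ratio is undefined.
    """
    ratios = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(ratios) / len(ratios)


# Hypothetical CPU-utilization readings (percent) and model forecasts.
actual_cpu = [10.0, 20.0, 40.0]
forecast_cpu = [12.0, 18.0, 40.0]
print(rmse(actual_cpu, forecast_cpu), mape(actual_cpu, forecast_cpu))
```

RMSE penalizes large individual errors more heavily, while MAPE expresses the error relative to the observed load, so reporting both gives complementary views of forecast quality.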

Ghobaei-Arani and Souri [61] introduced a linear programming method called LP-WSC for web service composition in geographically distributed cloud environments, intending to improve QoS parameters. This approach selects the most efficient service for each request and significantly reduces the cost of choosing and configuring services, while increasing the availability of services and reliability of servers compared to other methods. However, the suggested LP-WSC strategy is not compared to other cutting-edge methods for web service orchestration in geographically dispersed cloud environments in this study.

To provide variable job assignments on VMs, Rawat et al. [62] proposed a cost-effective Big-Bang Big-Crunch (BB-BC) model for resource allocation in cloud setups. It targets a globally optimal outcome through an objective function that considers metrics such as cost and time, surpassing traditional static, dynamic, and bio-inspired provisioning methods. However, the authors did not discuss the limitations of the model or mention the data source.

Shi et al. [63] proposed a uniform model description of manufacturing resources using ontology and metadata modeling methods. They also outline a strategy for collaborative scheduling of manufacturing resources between enterprises using Mixed Set Programming, which enhances resource collaboration and allocation efficiency. As future work, the authors suggest that the model and scheduling mechanism can be integrated with the study of inter-enterprise logistics to further improve the efficiency of inter-enterprise resource collaboration. However, the study does not compare the proposed model and scheduling strategy with existing approaches, nor does it conduct large-scale experiments.

5.2 Heuristic approach

The heuristic approach depends on empirical rules, guidelines, or experience-based decisions to assign resources and schedule workloads based on simple criteria without considering global optimization [64]. These methods remain popular for their computational efficiency and capacity to offer nearly optimal solutions. The two main parts of the heuristic-based techniques are specific heuristics and MHs. The most famous examples include first come first serve (FCFS) [65], shortest remaining time [65], RR [66], GAs [67], and PSO [67,68].

Specific heuristics work well in some scenarios but can be ineffective in others, particularly under heavy loads. These strategies take advantage of problem-specific knowledge to simplify complex decision-making processes, enabling faster responses in dynamic environments. For example, the “first come, first served” principle is commonly used in task scheduling, where the order of execution is determined by the arrival sequence. This makes it simple to implement, but it can be inefficient when dealing with large loads [64]. On the other hand, techniques such as RR distribute resources fairly by allocating a fixed time slot to each task in turn, which is especially useful in multi-user systems. This method mitigates the risk of starvation, ensuring that all processes get attention within a reasonable time frame [69].
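The contrast between the two policies can be illustrated with a small single-processor simulation. This is a minimal sketch under simplifying assumptions (all tasks arrive at time zero, context switches cost nothing, burst times are positive); the function names and the burst times are illustrative and are not drawn from the reviewed studies.

```python
from collections import deque


def fcfs_waiting_times(burst_times):
    """Waiting time of each task when tasks run to completion in arrival order."""
    waits, clock = [], 0
    for burst in burst_times:
        waits.append(clock)
        clock += burst
    return waits


def round_robin_waiting_times(burst_times, quantum):
    """Waiting time of each task when tasks take turns in fixed time slots."""
    remaining = list(burst_times)
    finish = [0] * len(burst_times)
    queue = deque(range(len(burst_times)))
    clock = 0
    while queue:
        task = queue.popleft()
        run = min(quantum, remaining[task])
        clock += run
        remaining[task] -= run
        if remaining[task] > 0:
            queue.append(task)  # not finished: go to the back of the queue
        else:
            finish[task] = clock
    # Waiting time is completion time minus the time actually spent running.
    return [finish[i] - burst_times[i] for i in range(len(burst_times))]


bursts = [24, 3, 3]  # one long task followed by two short ones
print(fcfs_waiting_times(bursts))            # [0, 24, 27]
print(round_robin_waiting_times(bursts, 4))  # [6, 4, 7]
```

In this instance the short tasks wait behind the long one under first come, first served, whereas the fixed time slots of RR let them finish early, which is the starvation-mitigating behavior described above.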

In contrast, MH techniques, such as GAs and PSO, introduce a higher level of complexity by exploring a larger solution space. These techniques iteratively improve solutions by utilizing mechanisms inspired by natural processes, such as social behavior in PSO or selection, crossover, and mutation in GAs [70].
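As an illustration of the selection, crossover, and mutation mechanisms mentioned above, the following is a minimal GA sketch for mapping tasks to identical VMs with makespan as the fitness value. It is a generic textbook-style design, not the algorithm of any reviewed study; the encoding, tournament size, elitism, parameter values, and function names are all assumptions chosen for brevity.

```python
import random


def makespan(assignment, task_lengths, n_vms):
    """Largest total load on any VM for a given task-to-VM assignment."""
    load = [0.0] * n_vms
    for task, vm in enumerate(assignment):
        load[vm] += task_lengths[task]
    return max(load)


def genetic_schedule(task_lengths, n_vms, pop_size=30, generations=100,
                     mutation_rate=0.1, seed=0):
    """Evolve task-to-VM assignments; needs at least two tasks and pop_size >= 3."""
    rng = random.Random(seed)  # seeded for reproducible runs
    n_tasks = len(task_lengths)

    def fitness(individual):
        return makespan(individual, task_lengths, n_vms)

    # Each individual is a list: gene i is the VM index assigned to task i.
    population = [[rng.randrange(n_vms) for _ in range(n_tasks)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness)
        next_gen = population[:2]  # elitism: keep the two best unchanged
        while len(next_gen) < pop_size:
            # Selection: best of three randomly drawn individuals, twice.
            parent_a = min(rng.sample(population, 3), key=fitness)
            parent_b = min(rng.sample(population, 3), key=fitness)
            # Crossover: single cut point.
            cut = rng.randrange(1, n_tasks)
            child = parent_a[:cut] + parent_b[cut:]
            # Mutation: reassign each gene to a random VM with small probability.
            child = [rng.randrange(n_vms) if rng.random() < mutation_rate else gene
                     for gene in child]
            next_gen.append(child)
        population = next_gen
    best = min(population, key=fitness)
    return best, fitness(best)


# Hypothetical workload: eight tasks on three VMs.
tasks = [5, 3, 8, 2, 7, 4, 6, 1]
best_assignment, best_makespan = genetic_schedule(tasks, n_vms=3)
print(best_assignment, best_makespan)
```

The result is not guaranteed to be optimal; the sketch only shows how the three operators iteratively improve a population of candidate schedules.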

Notwithstanding their advantages, heuristic-based approaches may produce suboptimal solutions, especially when the underlying assumptions do not hold true in practice. Therefore, even though they provide valuable insights and speedy fixes, these methods must be used in conjunction with more exacting optimization strategies when accuracy is crucial. As research continues to evolve, hybrid models that combine heuristics with exact algorithms are gaining traction, promising to harness the strengths of both paradigms to achieve superior outcomes in resource allocation and workload scheduling [43].

5.2.1 MH approach

MHs are strategies that provide efficient and optimized solutions, helping to solve complex optimization problems in various fields by providing acceptable answers in a reasonable amount of time. MH search techniques are general, sophisticated approaches that can be used to build basic heuristics to solve specific optimization problems [71]. Since scheduling is an NP-hard problem, most scheduling algorithms do not explore the entire problem space to find the optimal resource allocation; hence, MHs are a well-suited choice for this problem [72].

Single-based optimization algorithms: Generate a single random solution and strive to enhance it through optimization processes [73].

Population-based optimization algorithms (POAs): The solution quality of POAs relies heavily on factors such as the choice of algorithms, strategies, parameter combinations, constraint handling methods, local search methods, surrogate models, and niching methods [73].

Hybrid MH: Hybrid MHs are primarily distinguished according to four criteria [74]:

Hybridization based on algorithm: Hybridized algorithms include combinations such as MHs with MHs, MHs with Heuristics, MHs with Fuzzy logic techniques, or MHs with statistical techniques.

Hybridization based on hybridization level: Combine algorithms based on their level of coupling strength.

Hybridization based on the order of execution: The individual algorithms are executed either sequentially, intertwined, or even in parallel.

Hybridization based on their control strategy: Hybrids are classified based on their control strategy, which can be either integrative (coercive) or collaborative (cooperative).

5.2.2 Review of heuristic and MH algorithms

This subsection reviews articles on resource allocation and task scheduling algorithms based on heuristic and MH algorithms, including single-based, population-based, and hybrid algorithms. Table 3 lists the algorithms used in cloud computing environments.

Heuristic and MH algorithms used in resource allocation and task scheduling

| Algorithms/approaches | Simulation/tool | Objective(s)/key performance metrics | Quantitative results | Sample size (no. of cloud nodes, tasks, or datasets) | Main focus area | Limitations |
|---|---|---|---|---|---|---|
| OWPSO [29] | CloudSim | Makespan and energy consumption reduction | Near-optimal solutions and improved scheduling efficiency | 4,800 scheduling experiments on CEA-Curie and HPC2N workload | Task scheduling | Insufficient exploration-exploitation trade-off, inadequate exploration ability, high computational complexity, and slow convergence |
| N2TC and GATA [68] | Simulation-based experiment | System utilization, cost, response time, and execution time | Improvements of 3.2% in execution time, 13.3% in cost, and 12.1% in response time | 405,894 tasks from Google cluster-traces v3 dataset | Task scheduling and resource allocation | Limited to independent, non-preemptive tasks without deadlines |
| AC-CCTS (Actor-Critic Container Task Scheduling) [70] | CloudSim | RUR, RBD, and QoS | Improved utilization and reduced execution time via extensive simulations | Not specified | Resource allocation and task scheduling | Scheduling complexity due to dynamic workloads and environmental variability |
| LBIMM, Max-Avg, MCT, min-min, PSSLB, RASA, Sufferage, TASA [75] | CloudSim | ARUR, makespan, throughput, ART | PSSLB and TASA perform well across metrics | HCSP dataset (four instances), GoCJ dataset | Task scheduling | Restricted to static scheduling algorithms |
| FCFS, min-min, max-min, and RR [76] | WorkflowSim | Makespan | Max-min is best in Montage workflow; FCFS excels in CyberShake | CyberShake and Montage workflow datasets | Task scheduling | Makespan measurement not discussed for increased data centers/hosts |
| Improved backfilling algorithm [77] | MATLAB | Task acceptance ratio, task rejection ratio | 91.94% acceptance ratio, 8.05% rejection ratio | OpenNebula cloud platform | Task scheduling | Limited number of workloads and parameters used |
| Deep RIL [78] | CloudSim | Energy consumption, makespan, and SLA violation | Dynamic optimization of task execution | HPC2N and NASA worklogs | Task scheduling | Lack of detailed discussion on algorithm limitations |
| Dynamic resource-aware load balancing algorithm (DRALBA), Resource-aware load balancing algorithm (RALBA), Dynamic load balancing algorithm (DLBA), min-min, max-min, RR [79] | CloudSim | Makespan, ARUR, and throughput | DRALBA outperforms competitors in both synthetic and realistic workloads | Synthetic and realistic workloads | Task scheduling | Scalability and real-world performance not discussed |
| TDSA [80] | Not specified | Makespan and resource utilization | 17.4% improvement in makespan, 31.6% in utilization | Randomly generated and real workflows | Task scheduling | Running time and data transfer uncertainty not considered |
| Multiclass priority task scheduling (MCPTS), DE ELECTRE III [12] | CloudSim | Task priority, queueing priority, resource priority | Dynamic task priority adjustment enhances efficiency | (KTH) IBM SP2 log | Task scheduling | Insufficient consideration of dynamic request characteristics |
| DRRHA [81] | CloudSim | Turnaround time, average waiting time, and response time | Enhanced scheduling efficiency | Real dataset and a randomly generated dataset | Task scheduling | No comparison with state-of-the-art algorithms for resource usage or energy efficiency |
| Min-min and density-based spatial clustering of applications with noise (DBSCAN) [82] | CloudSim | Execution time, task completion rates, and defect reduction | Enhanced task completion rates and reduced defects | NASA iPSC workload log file | Task scheduling | Complex job scheduling procedure, limited comparison with existing algorithms |
| LJFP-PSO and MCT-PSO [84] | MATLAB | Makespan, execution time, degree of imbalance, and total energy consumption | Enhanced performance compared to traditional PSO | Not specified | Task scheduling | Specific dataset or real-world scenarios not detailed |
| MTWO [85] | CloudSim | Makespan, throughput, response time, degree of imbalance, power efficiency, and resource utilization | Improved task scheduling and resource allocation | Not specified | Task scheduling and resource allocation | Specific data used in simulation not mentioned |
| GWO with RIL [86] | CloudSim | Runtime | Substantial decrease in runtime | Not specified | Task allocation | Assumes prior knowledge of task computational time |
| ICOATS [88] | CloudSim | Makespan and resource utilization | Improved resource utilization and makespan | Real-time worklogs derived from NASA | Task scheduling | Limited consideration of QoS characteristics |
| IWC [89] | MATLAB | Convergence speed and accuracy | Faster and more accurate convergence | Small- and large-scale computing | Task scheduling | Parallel applications not investigated |
| Q-ACOA (improved Ant Colony Optimization) [90] | CloudSim | Task completion time, data migration time, and cost | Outperforms alternative algorithms | Not specified | Resource allocation and task scheduling | Dataset not specified |
| ANN-BPSO [91] | CloudSim | Resource utilization and response time | Enhanced scheduling and load balancing in cloud environments | Not specified | Resource allocation and task scheduling | Dataset not specified |
| HCSOA-TS [92] | CloudSim | Makespan, execution cost, and load balance | Outperforms GA, PSO, and GA-PSO | Four scenarios tested: (1) 25 tasks, 95 edges, (2) 50 tasks, 206 edges, (3) 100 tasks, 433 edges, (4) 1,000 tasks, 4,485 edges | Task scheduling | The study does not consider real-world cloud workloads or infrastructures |
| BSO-LB [93] | CloudSim | Resource utilization and makespan | Outperforms load balancing | GoCJ (Google cloud jobs) dataset | Task scheduling | The proposed algorithm is slower than other algorithms |
| EHJSO [94] | CloudSim | Makespan, computation time, fitness, iteration-based performance, success rate | Outperforms previous methods | Not specified | Task scheduling | The authors did not specify limitations of the proposed algorithm |
| Reference Vector Guided Evolutionary Algorithm (RVEA-NDAPD) [96] | CloudSim | Makespan, execution cost, VM load, and user expectations | Enhanced performance over MaOEAs | Not specified | Task scheduling | Lack of detailed data information |
| Total resource execution time aware algorithm (TRETA) [97] | CloudSim | Total execution time, and degree of imbalance | Improved resource usage and performance | Real-world workload traces of NASA Ames iPSC/860 | Task scheduling | Not evaluated with other tactics or measures |
| AMO-TLBO [100] | WorkflowSim | Utilization, space, and cost | Outperforms TLBO, MOPSO, and NSGA-II | Not specified | Resource allocation | Dataset source not specified |
| GAECS [105] | MATLAB | Makespan, energy consumption, load balancing, and task completion time | Enhanced allocation and reduced makespan | Stochastic datasets | Task scheduling | Complex parameter tuning required |

Chhabra et al. [29] addressed the optimization of bag-of-tasks scheduling in cloud data centers by developing the opposition learning enabled whale particle swarm optimization (OWPSO) algorithm, which combines the whale optimization algorithm (WOA) with PSO to improve scheduling efficiency. The study evaluates key performance metrics, including makespan and energy consumption, demonstrating that OWPSO significantly outperforms baseline algorithms in producing near-optimal scheduling solutions. However, the reliance on synthetic datasets for benchmarking and the need for thorough parameter tuning to achieve optimal performance limit the practical applicability of the suggested technique.

Mirmohseni et al. [39] presented the fuzzy PSO (FPSO)-GA approach, which combines GA and fuzzy PSO techniques to achieve effective load balancing in cloud networks. The proposed approach demonstrates significant improvements in load balancing and energy consumption, surpassing algorithms such as LBPSGORA, PSO, and GA in load balancing performance. While the authors highlight the effectiveness of the FPSO-GA algorithm in enhancing load distribution, the study lacks detailed numerical results or specific performance metrics to substantiate the claimed improvements.

Manavi et al. [68] proposed a novel hybrid approach for resource allocation and task scheduling in cloud computing, combining neural network classification with GA optimization. The method aims to improve execution time, cost, response time, and system utilization while considering fairness to prevent task starvation. Using a large Google dataset for simulation, the authors demonstrate improvements of 3.2% in execution time, 13.3% in cost, and 12.1% in response time compared to state-of-the-art methods. However, the study is limited to independent tasks without deadlines and uses a relatively small chromosome size in the GA, which may impact scalability for larger task sets.

Zhu et al. [70] proposed a heuristic multi-objective task scheduling framework for container-based clouds, exploiting actor-critic RIL to enhance task scheduling efficiency. The framework addresses the complexities of resource allocation and task management in dynamic environments, showing significant improvements in metrics such as resource utilization and execution time through extensive simulations. However, the study’s applicability is constrained by its focus on specific cloud environments, potentially limiting the generalizability of the findings. Additionally, the framework may face challenges with real-time adaptability in highly variable workloads.

Ibrahim et al. [75] presented a comparative evaluation of state-of-the-art static task scheduling algorithms in cloud computing, focusing on their performance in terms of resource utilization, makespan, throughput, and response time. The study compares methods such as PSSLB, MCT, min-min, max-avg, LBIMM, RASA, Sufferage, and TASA using CloudSim simulations with the HCSP and GoCJ datasets. The results show that MCT and Sufferage perform well in response time but poorly in resource utilization, whereas TASA and PSSLB perform better across various metrics and datasets. The study emphasizes how crucial it is for cloud service providers and end users to balance resource usage and execution time. Nevertheless, the study is constrained by its emphasis on static scheduling techniques and its dependence on simulated settings instead of actual cloud configurations.

Hamid et al. [76] compared different task scheduling algorithms for cloud computing using scientific workflows as datasets, with makespan serving as the primary performance metric. Experimental results indicate that the FCFS algorithm outperforms RR, min-min, and max-min in the CyberShake workflow, while max-min outperforms FCFS, RR, and min-min in the Montage workflow. However, the study does not explore how makespan is affected as the number of data centers or hosts increases, leaving a critical aspect of scalability unaddressed.
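The min-min and max-min heuristics compared in these studies can be sketched as follows, using their common textbook formulation for independent tasks: at each step the minimum completion time of every unscheduled task is computed, and the task with the smallest (min-min) or largest (max-min) such value is scheduled next. The sketch ignores workflow dependencies, so it is not a reproduction of the experiments in [75] or [76]; the function name `min_min_schedule`, the `use_max_min` flag, and the execution-time matrix are illustrative assumptions.

```python
def min_min_schedule(etc, use_max_min=False):
    """Schedule independent tasks with min-min (default) or max-min.

    etc[t][v] is the expected execution time of task t on VM v.
    Returns (assignment dict task -> VM, makespan).
    """
    n_vms = len(etc[0])
    ready = [0.0] * n_vms               # time at which each VM becomes free
    unscheduled = set(range(len(etc)))
    assignment = {}
    while unscheduled:
        # Minimum completion time and corresponding VM for each pending task.
        best = {}
        for t in unscheduled:
            vm = min(range(n_vms), key=lambda v: ready[v] + etc[t][v])
            best[t] = (ready[vm] + etc[t][vm], vm)
        # min-min picks the smallest of these values, max-min the largest.
        chooser = max if use_max_min else min
        task = chooser(sorted(best), key=lambda t: best[t][0])
        completion, vm = best[task]
        assignment[task] = vm
        ready[vm] = completion
        unscheduled.remove(task)
    return assignment, max(ready)


# Hypothetical workload: two short tasks and one long task on two identical VMs.
etc_matrix = [[2, 2], [3, 3], [10, 10]]
print(min_min_schedule(etc_matrix))                    # makespan 12.0
print(min_min_schedule(etc_matrix, use_max_min=True))  # makespan 10.0
```

Here max-min yields the shorter makespan because it places the long task first, whereas other workload shapes favor min-min; this sensitivity to workload structure is consistent with the workflow-dependent rankings reported above.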

Nayak et al. [77] aimed to minimize the task rejection ratio and maximize the task acceptance ratio in a cloud-based scheduling mechanism for deadline-sensitive tasks. The mechanism improves upon the existing backfilling algorithm by addressing conflicts among similar leases and allowing the scheduling of new deadline-sensitive tasks during execution. It attains an average lease acceptance ratio of 91.94% and an average lease rejection ratio of 8.05%. It considers the current time and gap time as scheduling parameters, which are not addressed in existing studies. It also allows a newly arriving lease to be scheduled and does not require a decision maker such as the analytic hierarchy process to resolve conflicts between similar leases. However, the performance analysis is based on limited workloads and parameters, which may not fully represent the diverse requirements of different cloud applications.

Mangalampalli et al. [78] proposed a deep RIL-based task scheduling algorithm in cloud computing, designed to improve important QoS parameters such as SLA violation, energy consumption, and makespan. The algorithm dynamically calculates task execution time based on a threshold value and the load on physical hosts, with host utilization determined using specific equations. While the approach effectively addresses key parameters such as makespan, SLA violations, and energy consumption, the study lacks a comprehensive discussion of the algorithm’s limitations. Including such insights could have highlighted potential drawbacks and opportunities for future refinement.

Mishra and Gupta [79] presented a study that compares heuristic algorithms for scheduling tasks in cloud computing, such as RALBA, DRALBA, DLBA, max-min, min-min, and RR, based on performance parameters such as throughput, makespan, and average resource utilization ratio (ARUR). The existing DRALBA approach outperforms the other approaches on these parameters for both realistic and synthetic workloads, making it an efficient and effective scheduling algorithm for cloud computing environments. However, there is no discussion regarding the algorithms’ scalability and performance with larger workloads or in real-world cloud computing environments.
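The three performance parameters named above can be derived from a finished schedule as in the sketch below. The definitions used here are common in the scheduling literature but are assumptions with respect to [79]: makespan is the largest per-VM busy time, throughput is the number of tasks completed per unit of makespan, and ARUR is the mean per-VM busy time divided by the makespan. The function name and sample values are illustrative.

```python
def scheduling_metrics(vm_busy_times, n_tasks):
    """Compute makespan, throughput, and ARUR from per-VM busy times.

    vm_busy_times[v] is the total time VM v spends executing tasks; at least
    one entry must be positive. ARUR lies in (0, 1], and 1 means all VMs
    are equally loaded.
    """
    makespan = max(vm_busy_times)
    throughput = n_tasks / makespan
    arur = (sum(vm_busy_times) / len(vm_busy_times)) / makespan
    return {"makespan": makespan, "throughput": throughput, "arur": arur}


# Hypothetical schedule: three tasks, one VM busy for 10 time units, one for 5.
print(scheduling_metrics([10.0, 5.0], n_tasks=3))
```

A low ARUR alongside a low makespan signals that the schedule is fast but leaves some VMs idle, which is the utilization-versus-time balance these comparative studies examine.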

Yao et al. [80] proposed a task duplication-based scheduling algorithm (TDSA) to improve the makespan of budget-limited workflows by utilizing idle slots on resources and reallocating the leftover budget. Experiments on randomly generated and real workflows show that TDSA improves workflow makespan by up to 17.4% and cloud resource utilization by up to 31.6% compared to four baseline algorithms. However, the study does not consider uncertainty in the running time of workflow tasks or in the amount of data transferred among them.

Ben Alla et al. [12] addressed the problem of user requests and supplier resources not being prioritized. They proposed an efficient priority task scheduling scheme called MCPTS, in which four task parameters (length, waiting time, deadline, and burst time) are used to determine priority. The MCPTS scheme consists of three sub-models: task priority, task queuing priority, and resource priority. To determine task priority, they suggest a new hybrid multi-criteria decision-making method that combines differential evolution (DE), an MH algorithm, with elimination and choice expressing reality version III (ELECTRE III). They also presented a dynamic priority-queue algorithm based on a queueing model. Moreover, they created a productive and adaptable relationship between resource and task classes by dynamically adjusting resource priority according to the task priority model. However, insufficient consideration of dynamic request characteristics and resource availability could affect scheduling choices.

To schedule tasks in cloud computing systems, Alhaidari and Balharith [81] proposed a novel method known as the dynamic RR heuristic algorithm (DRRHA). DRRHA builds on the RR algorithm to increase task scheduling efficiency, adjusting its time quantum based on the mean time quantum and the task’s remaining burst time. The method performs noticeably better than the previous algorithms it was evaluated against in terms of average waiting time, turnaround time, and response time. However, the proposed algorithm has not been compared with other state-of-the-art task scheduling algorithms in terms of resource usage or energy efficiency.
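
The general idea of a round robin with a dynamically adjusted time quantum can be sketched as follows. This is a simplified illustration under stated assumptions, not the exact DRRHA procedure of [81]: all tasks are assumed to arrive at time 0, the quantum is recomputed each round as the mean of the remaining burst times, and a task whose leftover work is no larger than the quantum is assumed to run to completion instead of being requeued. The function name `dynamic_rr` is hypothetical.

```python
from collections import deque


def dynamic_rr(bursts):
    """Round robin with a per-round dynamic quantum (illustrative sketch).

    bursts: list of task burst times; all tasks are assumed to arrive at t=0.
    Returns the waiting time of each task.
    """
    remaining = dict(enumerate(bursts))
    finish = {}
    clock = 0.0
    # Assumption: shortest tasks are served first within a round
    queue = deque(sorted(remaining, key=remaining.get))
    while queue:
        # Assumption: quantum = mean remaining burst time of queued tasks
        quantum = sum(remaining[t] for t in queue) / len(queue)
        for _ in range(len(queue)):
            t = queue.popleft()
            run = min(quantum, remaining[t])
            clock += run
            remaining[t] -= run
            # Assumed rule: a small leftover (<= quantum) runs to completion
            if 0 < remaining[t] <= quantum:
                clock += remaining[t]
                remaining[t] = 0
            if remaining[t] == 0:
                finish[t] = clock
            else:
                queue.append(t)
    # Waiting time = finish time - burst time (arrival time is 0)
    return [finish[t] - bursts[t] for t in range(len(bursts))]


waiting = dynamic_rr([2, 4, 12])
print(waiting, sum(waiting) / len(waiting))
```

Letting nearly finished tasks complete avoids an extra trip through the queue, which is one way an adaptive quantum can lower average waiting and turnaround times compared with a fixed quantum.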

Mustapha and Gupta [82] introduced a fault-aware task scheduling algorithm for cloud computing that uses min-min and DBSCAN to improve resource allocation efficiency, fault tolerance, and QoS in a dynamic and heterogeneous environment. The approach outperforms existing algorithms such as ant colony optimization (ACO), PSO, BB-BC, and whale harmony optimization (WHO) in terms of execution time, task completion rates, and defect reduction. However, using DBSCAN to cluster resources complicates the job scheduling procedure. Moreover, the comparison covers only a few existing algorithms; a more extensive comparison with a wider range of state-of-the-art methods would give a clearer picture of the algorithm’s performance.

Khan [83] proposed a power-efficient cloudlet scheduling (PACS) approach to reduce energy consumption, request processing time, and cloud computing setup costs. When compared to other popular cloudlet scheduling methods, PACS provides a noteworthy 3.80–23.82 speed boost. However, the study did not evaluate how well PACS scales to larger numbers of cloudlets and VMs.

Alsaidy et al. [84] addressed task scheduling in cloud computing, which is vital for cost-effective execution and resource utilization, by proposing the LJFP-PSO and MCT-PSO algorithms. These algorithms employ heuristic initialization to boost PSO performance, surpassing traditional PSO and other comparative techniques in makespan, execution time, degree of imbalance, and energy consumption. However, the study does not provide details about the specific dataset or real-world scenarios used to evaluate the proposed algorithms.

Prathiba and Sankar [85] presented the multi-task wolf optimizer (MTWO) method, which merges efficient task scheduling and secure resource allocation in cloud computing to tackle data and resource management challenges in a rapidly expanding cloud setting. The technique builds a compact task schedule using wolf optimization to minimize makespan and boost throughput. It further incorporates a deep neural network with cluster optimization to allocate resources efficiently within architectural limits, enhancing response time, power efficiency, and resource utilization. However, the study does not explicitly mention the specific data used in the simulation setup and analysis.

Yuvaraj et al. [86] introduced a machine-learning technique to optimize job allocation between the contributor and the event queue in the serverless framework. To increase the effectiveness of work distribution, they applied the gray wolf optimization (GWO) model. Moreover, the authors tuned the GWO settings and improved work allocation using an RIL technique. According to simulation experiments, the suggested GWO-RIL technique significantly reduces runtimes and adapts to shifting load situations. However, the study assumes that the computation time of each task and similar overheads are known before scheduling, which may not always be realistic in real-world scenarios.

Nanjappan et al. [87] proposed a technique that integrates the adaptive neuro fuzzy inference system (ANFIS) and black widow optimization (BWO) to enhance resource utilization and scheduling in cloud computing. The ANFIS-BWO method determines which VM is best suited for each task, with BWO identifying the optimal solution for the ANFIS scheme. The proposed approach aims to minimize computation time, cost, and energy consumption while optimizing resource utilization. Nevertheless, the study does not make clear which datasets were used.

Tamilarasu and Singaravel [88] proposed the improved Coati Optimization Algorithm-Based Task Scheduling (ICOATS) method to tackle challenges in cloud task scheduling, such as long scheduling times, increased costs, and VM workload optimization. ICOATS integrates a multi-objective fitness function that aims to reduce the makespan while simultaneously increasing the utilization of available resources. To achieve this, it incorporates an exploitation strategy that helps avoid premature convergence and improves the local search potential. ICOATS thus seeks efficient solutions for cloud task scheduling by striking a balance between exploitation and exploration. However, its effectiveness in achieving optimal solutions may be limited because the optimization process does not consider a comprehensive set of QoS characteristics.
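
A multi-objective fitness function of this kind is often expressed as a weighted sum. The sketch below is one possible form, given only as an illustration: the weight `w`, the normalization of makespan by the time needed to run all tasks on the fastest VM, and the function names are assumptions, and the actual fitness function used in [88] may differ.

```python
def vm_finish_times(assignment, lengths, mips):
    """Finish time of each VM when task i runs on VM assignment[i]."""
    finish = [0.0] * len(mips)
    for task, vm in enumerate(assignment):
        finish[vm] += lengths[task] / mips[vm]
    return finish


def fitness(assignment, lengths, mips, w=0.5):
    """Weighted-sum fitness combining makespan and utilization (lower is better).

    w is a hypothetical weight in [0, 1] trading makespan against utilization.
    """
    finish = vm_finish_times(assignment, lengths, mips)
    makespan = max(finish)
    # Utilization: fraction of the makespan during which VMs are busy, on average
    utilization = sum(finish) / (len(finish) * makespan)
    # Assumed normalization: time to run all tasks serially on the fastest VM
    reference = sum(lengths) / max(mips)
    return w * (makespan / reference) + (1 - w) * (1 - utilization)


lengths = [100, 100, 200]   # task lengths (e.g., million instructions)
mips = [100, 100]           # VM speeds
print(fitness([0, 0, 0], lengths, mips))  # all tasks on one VM
print(fitness([0, 0, 1], lengths, mips))  # balanced assignment
```

With equal weights, the balanced assignment scores lower (better) than placing all tasks on one VM, since it both halves the makespan and keeps both VMs busy.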

The researchers in [89] described an improved WOA-based algorithm for cloud task scheduling, called improved whale optimization (IWC), to improve task scheduling in cloud computing systems. A key function of IWC is to optimize task scheduling plans and resource utilization in order to increase cloud system performance. The proposed IWC algorithm shows superior convergence speed and accuracy when compared to existing MH algorithms. It is versatile and can be applied to both small- and large-scale problems. However, the authors did not investigate parallel implementations in cloud environments to reduce the scheduling overhead of the method when dealing with large workloads.

The researchers in [90] described an improved version of the ant colony optimization algorithm (ACOA), called Q-ACOA, designed to fulfill predetermined time and cost objectives when allocating resources and scheduling tasks in cloud computing. Results demonstrate that Q-ACOA outperforms alternative scheduling algorithms in job completion time, data migration time, and overall cost efficiency. Nonetheless, the study lacked explicit information regarding the dataset used in the research.

Alghamdi [91] introduced a novel resource allocation method for cloud computing environments using binary PSO (BPSO) and artificial neural networks (ANN). The proposed ANN-BPSO approach aims to improve particle placements and thus reduce task completion times across VMs. Its primary goals are to reduce response times, improve resource utilization, and ensure good QoS for cloud computing applications by improving load balancing and task scheduling in cloud environments.

Shrichandran et al. [92] introduced a hybrid competitive swarm optimization algorithm for task scheduling (HCSOA-TS) in cloud environments. The method incorporates a Cauchy mutation operator into the Competitive Swarm Optimization algorithm to improve performance. The researchers assessed the HCSOA-TS technique across four situations with different numbers of tasks and edges, comparing it to GA, PSO, and GA-PSO algorithms. Performance metrics included makespan, execution cost, and load balance. Results showed that HCSOA-TS outperformed the other algorithms across all scenarios. However, the study is limited by its simulation-based nature, relatively small-scale scenarios (maximum 1,000 tasks), and lack of consideration for real-world cloud workloads.
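
For readers unfamiliar with Cauchy mutation, the following sketch shows the general operator: a heavy-tailed Cauchy-distributed step is added to selected dimensions of a candidate solution, which occasionally produces large jumps that help escape local optima. How HCSOA-TS applies the operator is not detailed here, so the continuous position encoding (one VM index per task), the `scale` and `rate` parameters, and the clipping rule are all illustrative assumptions.

```python
import math
import random


def cauchy_mutate(position, n_vms, scale=1.0, rate=0.2, rng=random):
    """Apply a Cauchy mutation to a continuous position vector (sketch).

    position: list of floats, one per task, interpreted as a VM index in
              [0, n_vms - 1] (assumed encoding; round to decode).
    scale, rate: hypothetical step scale and per-dimension mutation probability.
    """
    mutated = []
    for x in position:
        if rng.random() < rate:
            # Standard Cauchy sample via the inverse CDF: tan(pi * (u - 0.5))
            step = scale * math.tan(math.pi * (rng.random() - 0.5))
            # Clip back into the valid VM index range
            x = min(max(x + step, 0.0), float(n_vms - 1))
        mutated.append(x)
    return mutated


rng = random.Random(0)  # seeded generator for reproducibility
particle = [0.0, 1.5, 3.0, 2.2]
print(cauchy_mutate(particle, n_vms=4, rate=0.5, rng=rng))
```

Compared with a Gaussian step, the Cauchy distribution has much heavier tails, so most mutations are small but a few are large, which is the usual motivation for adding it to swarm-based optimizers.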

Mishra and Majhi [93] proposed the bird swarm optimization load balancing (BSO-LB) algorithm. The algorithm views tasks as birds and VMs as destination food patches, and it pairs the load balancing technique with the binary variant of the BSO algorithm. The approach seeks to reduce response time and optimize load balancing in cloud systems. Experimental findings demonstrate that the suggested approach surpasses alternative algorithms. However, it is not as fast as other algorithms.

Paulraj et al. [94] discussed the significance of task scheduling in cloud computing and proposed a hybrid method called efficient hybrid job scheduling optimization (EHJSO), which combines Cuckoo Search Optimization and GWO. The method is compared with prior work on metrics such as makespan, computation time, fitness, iteration-based performance, and success rate, and is found to be superior. However, the authors do not specify the limitations of the proposed algorithm.

Hu et al. [95] presented an energy-efficient scheduling algorithm for processing real-time applications in cloud computing, aiming to reduce energy consumption while meeting real-time requirements. The method demonstrates a significant decrease in energy consumption compared to existing algorithms, with a higher success rate in finding feasible schedules and comparable computation time. However, the study lacks specific details about the nature or source of the real-case benchmarks and the data used in the synthetic application.

Xu et al. [96] presented a many-objective scheduling model for cloud environments and a reference vector-guided evolutionary algorithm (RVEA-NDAPD) to solve it. Compared with the traditional many-objective evolutionary algorithms (MaOEAs) currently in use, RVEA-NDAPD effectively improved the performance of the suggested model in a cloud computing environment. However, the study lacks detailed information on the specific data used, including task characteristics, system and user details, and the performance parameters of VMs and tasks.

Bandaranayake et al. [97] proposed the TRETA method for cloud task scheduling to optimize the total execution time of computing resources. By comparing it with alternative heuristics on real-world workloads, the study demonstrates that the suggested method enhances performance and resource usage for cloud jobs. However, the authors did not evaluate the algorithm in combination with other strategies or against other metrics.

Alsubai et al. [98] proposed a swarm-based task scheduling approach that integrates the moth swarm algorithm and the chameleon swarm algorithm to optimize cloud scheduling in terms of efficiency and security. The objective is to improve the distribution of tasks across available resources and to encode cloud information during task scheduling, with a focus on optimizing the available bandwidth. The evaluation demonstrates improvements in measures such as imbalance score, throughput, cost, average waiting time, response time, latency, execution time, speed, and bandwidth utilization. However, the specific details of the proposed algorithm and its technical implementation are not provided.

Kaur and Kaur [99] suggested a hybrid approach to improve load balancing in cloud systems by combining heuristic and MH methods. Within the HDD-PLB framework, two hybrid strategies were proposed and examined: hybrid heterogeneous earliest finish time (HEFT) with ACO (HHA) and hybrid predict earliest finish time with ACO (HPA). The authors assessed the efficiency of the suggested architecture using two key performance metrics: makespan and cost. Although the authors claim that the framework operates optimally in terms of cost and makespan, no experimental evaluation or findings were offered to support their assertions.

Moazeni et al. [100] presented a new approach for cloud computing resource allocation based on adaptive multi-objective teaching-learning-based optimization (AMO-TLBO). The AMO-TLBO algorithm introduces features such as multiple teachers, an adaptive teaching factor, and self-motivated learning to improve its exploration and exploitation capabilities. Its main goals are to increase utilization while reducing space and cost. Evaluation results show that the proposed method outperforms the TLBO, MOPSO, and NSGA-II algorithms on several performance metrics. However, the source of the dataset is not specified.