Abstract

The enhanced use of artificial intelligence (AI) in organizations has changed and revolutionized the approaches to solving problems, processing information, and decision making. While the algorithms turned out to be highly effective, AI systems faced adversarial attacks, which can be described as slight alterations of inputs that would fool an AI algorithm. These attacks remain major challenges to the dependability and protection of AI systems and thus the need to develop stable and flexible protection strategies and procedures. The aim of this article is to discuss the existing trends in adversarial attack techniques and protection mechanisms. To this end, papers, exact match, and systematically applied operative inclusion/exclusion criteria pertinent to protection strategies against adversarial attacks in multiple databases were incorporated and used. Specifically, 1988 papers were retrieved from Web of Science, IEEE Explore, and Science Direct, which were published between January 1, 2021, and July 1, 2024, where we used 51 of the identified journal articles for the quantitative synthesis in the final stage. Thus, the protection taxonomy, which resulted from our analysis, discusses the motivation, and best practices in relation to the threats in question. The taxonomy also describes challenges and suggests other ideas on how to improve the robustness of adversarial attack systems. Not only this study is a response to gaps in the literature but it also presents the reader with a map for further studies. It is necessary to draw attention to the fact that an objective criterion must be introduced to measure the degree of defense, collaboration with researchers of other fields, and the necessity to consider the ethical implications of the created defense mechanisms. Our results shall assist industry practitioners, researchers, and policymakers in designing an optimal AI security that can protect AI systems against dynamic adversary strategies. This review provides a reference of entry to the topic of AI security and the challenges that may be encountered together with the measures that can be taken to forward the studies.

1 Introduction

The ultimate goal of artificial intelligence (AI) is achieved through data and algorithm integration, with the results that the AI system produces being even more effective than the ones made by humans. Facial recognition, the voice of virtual assistance speech recognition, and self-driving cars are current examples of AI technology that we are accustomed to [1,2]. This type of human-less intelligence is called AI. The ability to recognize one’s surroundings and decide what to do independently or any device or machine that can replicate the human brain’s mental processes is referred to as AI. Together, machine learning (ML) and deep learning (DL) create a family of AI methods that can be called subsets of AI [3–5]. Therefore, ML is a part of the AI branch that focuses on enabling computations to self-learn, meaning that no direct programming is needed. The use of ML entails designing algorithms that can learn presumably from data and then predict the data [6,7]. As AI has dramatically progressed and spread in the past decade, the number of DL applications in industrial Internet-of-thing (IIoT) ecosystems will likely sharply increase over the current decade [8]. However, in recent years, the pervasive integration of ML and DL systems across various domains has revolutionized complex problem solving [9], decision making, and data analysis. As ML and DL algorithms continue to demonstrate remarkable capabilities, they have also become susceptible to adversarial attacks, a burgeoning concern that has captured the attention of researchers, practitioners, and industry experts alike [10,11]. Adversarial attacks are a serious risk to the resilience and dependability of ML and DL models, necessitating a profound exploration of protection strategies and methods [12–14].

This systematic review embarks on a comprehensive journey to dissect and analyze the multifaceted landscape of protection mechanisms deployed to safeguard ML and DL systems against adversarial attacks. Adversarial attacks, often subtle manipulations of input data with the intent of misleading or subverting ML models, have revealed vulnerabilities even in the most sophisticated algorithms. Such attacks have manifested in various domains, including computer vision [15], natural language processing (NLP) [16], and reinforcement learning [17], underscoring the urgency of robust defenses. Our primary objectives in this expansive study are to identify, categorize, and critically evaluate the diverse range of defense strategies and methods proposed in the existing body of literature. By synthesizing insights from a multitude of research papers, articles, and conference proceedings, the goal of this systematic review is to present a comprehensive and organized overview of the most recent state-of-the-art protection in ML and DL against adversarial attacks. The journey begins with an exploration of the fundamental principles underlying adversarial attacks, unraveling the intricate interplay between attackers and defenders in the ML and DL landscapes. We navigate through the taxonomy of adversarial attacks, ranging from evasion attacks [18] that manipulate input data to poisoning attacks [19] that compromise the integrity of training data. Understanding an adversary’s arsenal is pivotal for crafting effective protection mechanisms that can be resilient in the face of evolving threats.

Moving forward, the review systematically categorizes protection strategies on the basis of their methodologies, encompassing approaches such as adversarial training (AT), input preprocessing, robust optimization, and model ensembling. Each category is dissected to unravel the underlying mechanisms, strengths, and limitations, providing a nuanced perspective on the efficacy of various protection strategies. Furthermore, we examine the intersection of these protection strategies with explainable, scalable, and real-world applicability, acknowledging the multifaceted challenges that emerge when deploying protection in practical settings. Throughout this exploration, we emphasize the dynamic nature of the adversarial landscape, emphasizing the need for adaptive protection strategies that evolve in tandem with emerging attack methodologies. The insights garnered from this review not only contribute to a deeper understanding of protection mechanisms but also clarify the path for future research directions and the development of robust, secure, and resilient ML and DL systems. In conclusion, this systematic review serves as a comprehensive guide for researchers, practitioners, and policymakers engaged in the realm of ML and DL security. By synthesizing knowledge from diverse sources, we focus on fostering an understanding of the current state-of-the-art protection against adversarial attacks, catalyzing advancements in developing trustworthy and secure ML systems.

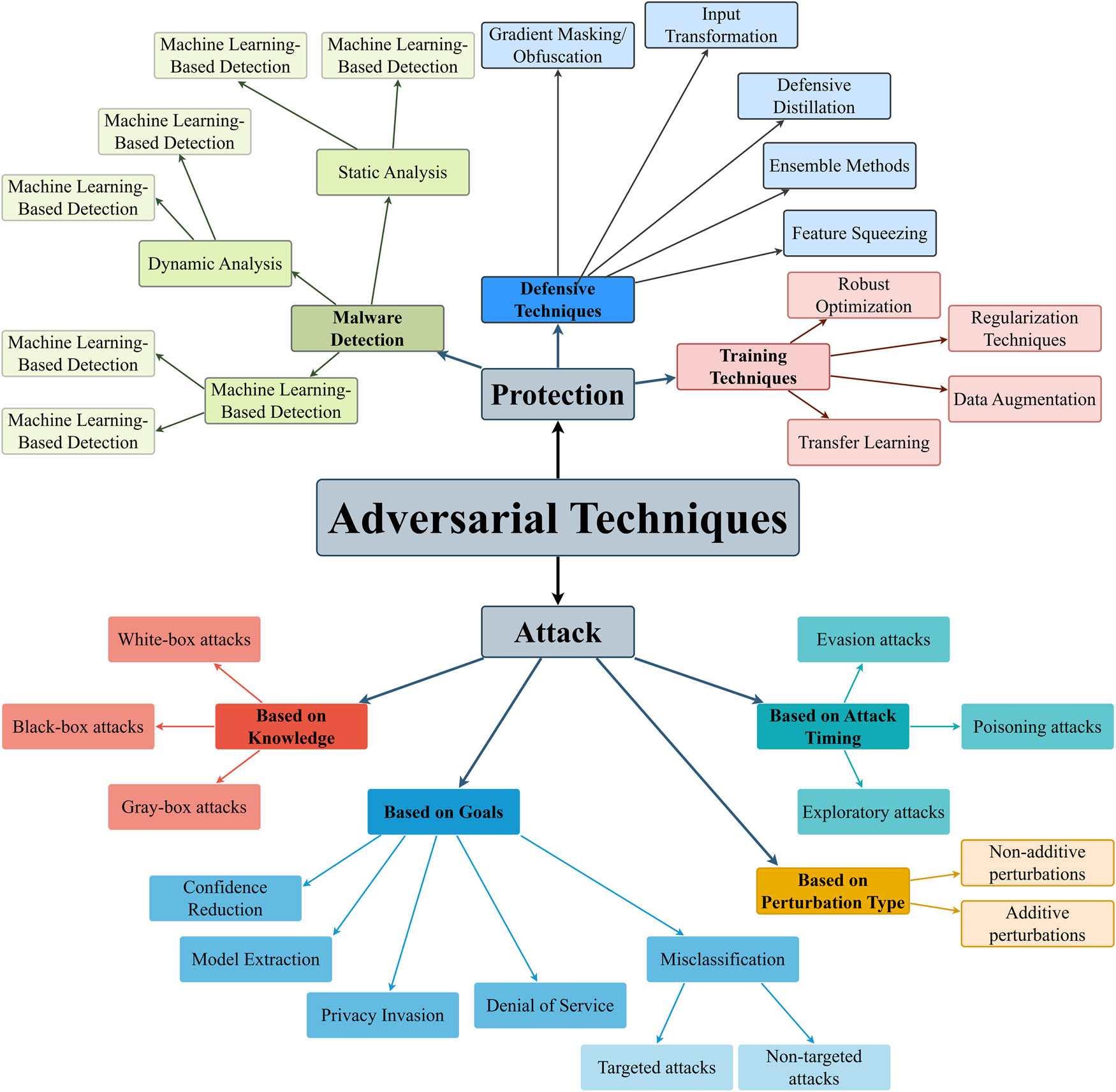

The primary axis, which is usually considered in the majority of studies concerned with adversarial attacks, is the protection aspect. In this systematic literature review, we aim to shed light upon all the dimensions of protection approaches and further evaluate their effectiveness in terms of protecting the ML/DL models. Thus, the mission of this work is to make a small but significant step toward enhancing the protection of AI systems and to support the constant development of effective and robust precautions and protections in the fields in which AI is used (Figure 1).

The divisions of protection systems in adversarial attacks. Source: Created by the authors.

Therefore, according to our opinion and analysis, protection systems include the following:

Defense methods: Advanced preventive actions, such as AT and robust optimization, are intended to identify possible attacks and safeguard against them.

Training on attacks: Introduction of “adversarial” patterns into the training procedure to increase model robustness.

Intrusion detection: Different strategies for distinguishing an attack through an examination of data and the use of smart algorithms.

The research objectives aligned with these motivations are articulated through the following questions:

Q1: What defines an appropriate taxonomy for incorporating protection strategies and methods in AI models against adversarial attacks?

Q2: How do studies address the integration of protection strategies concerning motivations, challenges, recommendations, and limitations?

Q3: What are the notable gaps in the current research on methods of protection versus adversarial attacks in AI methods?

The key contributions of this study are as follows:

Pioneering a thorough exploration of adversarial attacks in the innovation and optimization of protection methods, generation of adversarial attacks, protection strategies, protection robustness, and applications in malware, intrusion, and anomaly detection.

This article provides an extensive literature review that deeply examines prevailing research trends, challenges, motivations, limitations, and recommendations within the domain of protection strategies and methods against adversarial attacks in ML and DL.

Identifying research gaps, proposing future directions and outlining a roadmap to enhancing protection strategies across diverse scenarios of adversarial attacks.

The article’s structure unfolds as follows: Section 2 presents an analysis of adversarial attacks. Section 3 presents the methodology used in the study, and Section 4 outlines the bibliometric analysis of the literature as well as an in-depth analysis of the papers. In addition, the taxonomy results related to protection strategies are explored, and Section 5 discusses motivations, challenges, and recommendations. Section 6 provides a critical analysis, pinpointing gaps in current research and proposing distinct research avenues. Finally, Section 7 summarizes and concludes this study.

2 Adversarial attacks: An overview

The use of ML has been on the rise in the recent past, cutting across almost all disciplines. DL, in particular, has been actively used and continues to act as the basis for applications and services in areas such as computer vision, language processing, translation, and security, whose functions are sometimes better than those at the human level [20]. Nevertheless, the suggested systems are still vulnerable to adversarial attacks. By altering inputs with the goal of either evading detection or changing the model’s classification, adversarial attacks are a significant threat in many applications, such as self-driving cars and health care [21]. Indeed, security threats and their countermeasures from the aspect of adversarial attacks have attracted much attention in recent years. This is because these attacks can compromise the credibility and reliability of ML solutions. Therefore, the field has been oriented toward examining several aspects of these problems. Despite this, there remains a notable gap in the literature, which lacks a distinct classification that can generally describe the adversarial process. Such frameworks are necessary to have a systematic approach to studying adversarial attacks and, therefore, enable the scientific establishment of defenses against them. Therefore, it remains a constant necessity to develop and share reliable and easily discussed graphic classification and division methods on the basis of the forms of adversarial attacks and protection. Adversarial attacks can be classified into many forms, as in previous studies [22,23]. Thus, according to numerous previous studies, we have proposed a general diagram that explains the concept of adversarial techniques in our opinion. This diagram is useful in the literature review of other related research in the future and might help people better understand what adversarial is and categorize it accordingly to further develop the theoretical background of this field. The diagram is presented in Figure 2.

Adversarial techniques. Source: Created by the authors.

As we note in Figure 2, adversarial techniques can be classified into two basic categories: protection and attack, which are as follows:

Adversarial attacks

Based on Knowledge

White-box attacks: The attacker knows the architecture and parameters of the model as well as the data used during training [24].

Black-box attacks: When the model of the attacker is fully unknown, only the input and output [25] are known.

Gray-box attacks: The attacker can design attacks based on knowledge of the model’s architecture but without knowledge of the specific parameters [26].

Based on attack timing

Exploratory attacks: The attacker checks the model for weaknesses or any unusual response, which helps the attacker understand how it works [33].

Based on goals

Misclassification

Nontargeted attacks: The attacker wants to introduce any desired misclassification with no need to direct at a specific class [35].

Confidence reduction: The attacker focuses on confusing the model into being less sure of its classifications even if the classification made is correct [36].

Model extraction: The attacker tries to obtain the model parameters or architecture by querying [37].

Privacy invasion: The attacker attempts to learn the specifics concerning the training data that have informed the creation of the model [38].

Denial of service: The attacker aims to reduce the effectiveness of the model or complete failure of the given model [39].

Adversarial protection

Defensive techniques

Gradient masking/obfuscation: This prevents the attacker from computing good-quality gradients by either changing the model or the loss function used [40].

Input transformation: Cleans the input data to eliminate or reduce the effect of an adversarial perturbation on the given data [41].

Defensive distillation: The model is trained at a high temperature, and the output is suitably softened to be used to train the last model; thus, generating adversarial examples becomes difficult for an attacker [42].

Feature squeezing: This simplifies the input features (for instance, by lowering the number of color bits in images) to restrict the ability of the attacker to create efficient perturbations [45].

Training techniques

Robust optimization: This entails optimizing the model parameters with the inherent trait of being largely acceptable for adversarial perturbations [46].

Regularization techniques: Regularization is used for models to decrease the problem of overfitting, such as L2 regularization or dropout [47].

Data augmentation: Data diversification can be used to increase the robustness of the model by adding new, variable examples into the training dataset [48].

Transfer learning: Training on models that have prior knowledge that has been trained on large, diverse datasets that in itself may contain more robustness [49].

Malware detection

When these classifications are well understood, one can easily embrace the fact that adversarial attacks are quite diverse and complicated and be in a better position to design appropriate protection measures for ML systems against such threats. In this context, the focus of this work will be on protection against adversarial attacks on the basis of a discussion of relevant research contributions and possible advances. This study will seek to illustrate ways through which different measures have been utilized in protection against adversarial threats and how further development could be made in relation to the topic at hand with respect to ML. Thus, our systematic review aims to help advance research in this essential field and inspire innovative approaches.

3 Methodology

This study adhered to the methodology used in prior research [60,61] and included a systematic literature review using the preferred reporting items for systematic reviews and meta-analysis (PRISMA) statement. The analysis section followed the recommended reporting guidelines for systematic reviews and meta-analyses [60,61]. Various bibliographic citation databases covering a range of scientific and social science journals across different disciplines were used for the research. The quest for relevant papers included searching four widely recognized and reliable digital databases: Science Direct (SD), Scopus, IEEE Xplore (IEEE), and Web of Science (WoS) [62,63,64]. These databases are crucial for researchers, offering extensive coverage of scientific and technological research and supplying valuable insights for further analysis and investigation.

3.1 Search strategy

The search process involved comprehensively exploring the four selected databases to gather academic publications published in English. The search scope encompassed all scientific publications from 2021 to 1 July 2024. To conduct the search, a Boolean query was implemented, utilizing the “AND” operator to connect the keywords “adversarial attack,” “machine learning,” and “deep learning” (refer to Figure 5 for the detailed query). The selection of these keywords was based on recommendations provided by experts in AI, ML, DL, and decision making. This approach aimed to ensure a comprehensive and focused search strategy for identifying relevant literature.

3.2 Inclusion and exclusion criteria

The inclusion or selection of papers was based on the following criteria:

The papers had to be written in English and published in reputable journals or conference proceedings.

The papers should include adversarial attacks utilizing AI models (ML or DL).

The selected papers were required to address protection within the realm of adversarial attacks, as mentioned earlier.

The following exclusion criteria were applied:

Papers discussing adversarial attacks in areas unrelated to AI were not considered, and vice versa.

Studies focusing on adversarial attacks in ML and DL but lacking relevance to protection were excluded, and vice versa.

3.3 Study selection

This method comprises a series of structured steps, starting with the identification and elimination of papers in duplicate. The titles and abstracts of the selected articles were carefully evaluated via Mendeley software. This initial screening resulted in the exclusion of numerous unrelated works, ensuring a focus on relevant literature. In instances of differences or inconsistencies among authors’ assessments, the corresponding author played a crucial role in resolution and consensus. The subsequent step involved a thorough examination of the full texts of the articles, which were meticulously evaluated against the previously defined inclusion criteria in Section 3.2. This step aimed to refine the selection process by excluding articles that did not meet the predetermined criteria. The process and its outcomes are depicted in Figure 3, which provides an overview of the steps in filtering and selecting the final set of articles for analysis.

SLR protocol of protection strategies against adversarial attacks in AI models. Source: Created by the authors.

In this research, they focused on identifying and selecting those articles that met a set of specified criteria. First, a comprehensive search revealed 1988 entries comprising the articles from the SD, totaling 1,111; furthermore, 545 in Scopus, 130 in IEEE, and 202 in WoS. For the elimination of redundancy, 287 papers were found and removed, leaving no remaining number of papers at this number (1,701). Therefore, detailed scrutiny of titles and abstracts revealed that 1,073 articles were excluded because they did not comply with the predefined yardsticks. A comprehensive analysis was then performed for the subsequent 628 contributions. A total of 577 studies were excluded from the research because they failed to meet other inclusion criteria; 51 of these studies were excluded because they were determined to be relevant to basic inode requirements. In the end, 51 of these studies were included in the final collection of articles.

4 Finding analysis

By making a systematic attempt at categorization and analysis, this research aims to provide insightful knowledge regarding protection strategies and methods related to how they prevail during adversarial attacks. The conclusive set of findings in the articles is explained in Section 4.1, where a comprehensive analysis and segregation occur, dividing them into separate categories on the basis of their specific objectives as well as contributions that they make to this perspective piece. This section provides a summary of the key findings and insights gained from the selected articles, which helps to better comprehend protection strategies and methods in terms of adversarial attacks.

4.1 Bibliometric analysis

The large number of papers has made us struggle to understand classic writings in our previous studies. Currently, owing to the availability of thousands of guides and articles, a large amount of information is available, which is rather difficult to follow. Some scholars support the PRISMA framework, suggesting the replacement of previous ones with an elaboration of problems, identification of research gaps, and theory development. In addition, even though systematic reviews provide much evidence, they might also contribute to the emergence of research paradigms and literature products, but they still have reliability and objectivity issues. This occurs from the authors’ opinions, which they depend on to rephrase earlier knowledge. To add transparency to the treatment of summarizing past study results, many study projects have promoted holistic science mapping analysis via the RStudio package. The application of the bibliometric approach produces undeniable findings that reveal all the unfolding scenarios drawn from literary and scientific materials with high clarity and trustworthiness. In addition, the suggested tools are simple to use and free of charge, and no special competencies are needed. Consequently, this study makes use of one of the more elaborate bibliometric approaches that are illustrated in the following sections.

4.1.1 Most relevant sources

Figure 4 summarizes the most popular journals as sources of publications appending to the number of citations for each journal. The spot demonstrates the most influential and most often cited journals that were used for papers.

Most relevant sources. Source: Created by the authors.

4.1.2 Words’ frequency over time

The top words found in the titles of the research papers that have been used in this research or even in their abstracts are shown in Figure 5.

Words’ frequency over time. Source: Created by the authors.

4.1.3 Word cloud

The word cloud diagram shown in Figure 6 comprises the most commonly occurring words from the documents discussing protection strategies and methods of ML and DL, which work to fight off adversarial attacks [65]. This finding was expressed in the term “adversarial attack,” which appears most often in this context.

Word cloud. Source: Created by the authors.

4.1.4 Tree map

The depiction of hierarchical data in a conventional way is carried out via a directed tree structure. On the other hand, many trees cannot be displayed in small environments. In this fashion, the tree-map algorithm was conceived to render more than thousands of nodes in a more efficient manner [66]. Figure 7 shows the conceptual layout of the study.

Tree map. Source: Created by the authors.

4.1.5 Cooccurrence network

The fundamental research method within bibliometric analysis is co-occurrence networks which experts use to investigate phenomena. The wide network of important concepts emerges from the analysis through previous linked terms to deliver a conceptual framework to policy experts and professionals about the investigated field [62]. Figure 8 shows the co-occurrence network created from the articles of the study.

Co-occurrence network map. Source: Created by the authors.

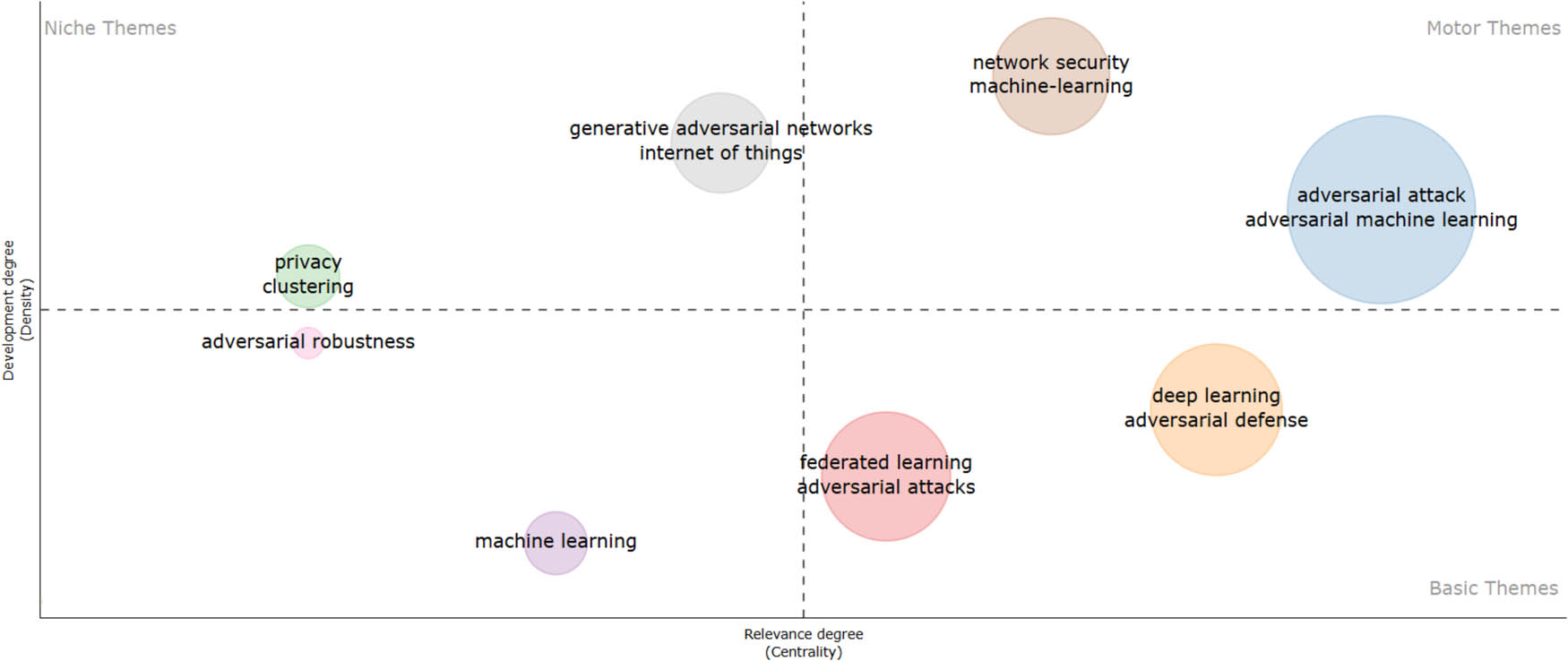

4.1.6 Thematic map

The density and centrality indices served as foundation to create a thematic map which divided into four topological regions (Figure 9). The analysis determined this conclusion through surveys of the abstracts and titles from all analyzed references along with supplementing relevant keywords.

Thematic map. Source: Created by the authors.

4.1.7 Factorial analysis

Factorial analysis provides the similarity standardization capability for bibliographic coupling and cocitation and co-occurrence measures. Researchers operate this method to visualize discipline conceptual frameworks through frequency evaluation of bibliographic clusters [67] (see Figure 10).

Factor analysis – three clusters. Source: Created by the authors.

4.2 Protection against adversarial attacks in AI models: Taxonomy

After the categorization of the 51 chosen articles, four groups were formulated to perform a systematic analysis on the basis of objective evidence and sourced from studies that fulfilled predetermined criteria. These study findings were primarily categorized into various subcategories to improve their organization and clarity during the presentation (Figure 11). These subdivisions allow the in-depth investigation of individual elements and the use of protection within adversarial attacks, thus enabling a holistic discussion of advancements and queasiness in this field. Furthermore, in the chosen articles, subcategories delve into other protection strategies and methods with respect to adversarial attacks that focus on what type of dataset has been used – whether text only – the image or a mix-up between both as far as this article is concerned. The established categories encompass n = 51 contributions, as outlined below:

Innovation and optimization of defense methods: including 27 of 51 contributions.

Malware, intrusion, and anomaly detection: 7 of 51 contributions.

Generate adversarial attack and defense strategy: 7 of 51 contributions.

Defense robustness: 10 of 51 contributions.

Taxonomy of protection strategies in AI models against adversarial attacks. Source: Created by the authors.

4.2.1 Innovation and optimization of defense methods

In the field of innovation and optimization of defense methods, a notable subset comprises 27 articles out of the 51 chosen, which explicitly delve into the implementation of defensive strategies and methodologies against adversarial attacks. These selected articles comprehensively investigate the approaches employed in various papers, analyzing how they leverage datasets, whether in the form of textual data, image data, or the integration of both modalities in their research endeavors.

Two studies delve into the innovation and optimization of defense methods in the text. Shi et al. [68] presented a new defense strategy termed adversarial supervised contrastive learning, which integrates AT with supervised contrastive learning. This approach aims to improve the resilience of deep neural network (DNN)-based models while maintaining their accuracy on clean data. Furthermore, Shao et al. [69] presented a two-step, effective protection mechanism for textual backdoors known as backdoor defense model based on detection and reconstruction: (1) recognizing suspicious terms in the sample and (2) recreating the original text by replacing or deleting words. However, given the complexity of cybersecurity, relying solely on these studies is not sufficient. Further research and collaboration are needed to address this pressing issue comprehensively.

In the realms of images and graphs, there has been a focus on the innovation and optimization of defense methods. The primary objective of Li et al. [70] was to create and assess the applicability of SecureNet, a key-based access license approach to shielding the DNN models’ IP. SecureNet utilized private keys in model access, employed a key recovery mechanism, and had countermeasures for adversarial and backdoor attacks. Al-Andoli et al. [71] suggested and tested an approach named AEDPL-DL, which stands for the AE detection-based protection layer in DL models to improve the DL models’ resilience against adversarial perturbations. The framework also encompasses a protection layer for adversarial examples, as it reduces the reliability, security, and efficiency of DL applications. Unmasking and purification-based methods are fast becoming popular techniques, and since adversarial patches work in different layers of a computer vision system, Yin et al. [72] integrated a modular defense system for easily addressing each layer and aimed to avoid the common problem of image destruction, reduce distribution shifts, and protect these systems against attacks of different kinds, making such systems more secure and trustworthy. Abdel-Basset et al. [73] proposed and assessed the proposed privacy protection-based federated DL (PP-FDL) framework to prevent privacy-related GAN attacks in non-i.i.d. data settings without compromising the classification accuracy in IoT applications such as smart cities. Zhang et al. [74] proposed the realistic-generation and balanced-utility GAN (RBGAN), which is a face deidentification model to address existing issues of privacy preservation and maintain data usefulness through the introduction of disentangled and symmetric-consistency-guided generation parts in the GAN structure. Luo et al. [75] focused on addressing the problem of achieving a natural accuracy‒robustness trade-off in federated learning (FL) when dealing with scenarios with skewed label distributions. To accomplish this, generative adversarial networks for federated adversarial training (GANFAT), which demonstrated better outcomes in terms of traffic security against adversarial attacks on the datasets used in the experiments, are suggested.

In addition, to improve the robustness of neural networks against adversarial attacks, attribution guided sharpening (AGS), which uses explainability approaches such as AGS, employs saliency maps derived from a nonrobust model to direct Choi and Hall’s sharpening technique, which diminishes noise in input images before classification [76]. Hwang et al. [77] are credited with this approach and introduced AID-Purifier to increase the resilience of adversarially trained networks by refining their inputs. This auxiliary network operates as an extension to an already trained primary classifier and is trained to use binary cross-entropy loss as a discriminator to preserve computational efficiency. The objective of the investigation conducted by Dai et al. [78] was to introduce an effective defense method named deep image prior-driven defense (DIPDefend) in opposition to adversarial examples. By using a DIP generator to match the target/adversarial input, they observed intriguing learning preferences in image reconstruction. Specifically, during the first stage, the main focus was on obtaining robust features that can withstand adversarial perturbations. This was accomplished by incorporating nonrobust features that are susceptible to such perturbations. In addition, they devised an adaptive stopping strategy tailored to diverse images. The goal of the research by Rodríguez-Barroso et al. [79] was to introduce a dynamic federated aggregation operator capable of dynamically excluding adversarial clients. This operator aims to safeguard the global learning model from corruption. Researchers have investigated its usefulness as a protection against adversarial attacks by incorporating a DL classification model into an FL framework. The Fed-EMNIST Digits, Fashion MNIST, and CIFAR-10 picture datasets were used in the evaluation. In addition, the goal of the research outlined by Choi et al. [80] is to present two simple yet effective mitigation techniques (parallelization and brightness modification) to show how to improve the robustness of cutting-edge defense strategies. Moreover, a unique protection strategy that makes use of perceptual hashing is proposed. This technique creates a hash sequence from a query image via the perceptual image hashing strategy known as PIHA. The text presents a plethora of defense methods against adversarial attacks but lacks coherence and conciseness. It suffers from verbosity and repetition, with an overwhelming amount of technical jargon, making it difficult to follow. In addition, critical analysis or comparisons between methods are lacking, leaving the reader unsure of their relative effectiveness or practicality. A more focused and streamlined presentation would enhance readability and comprehension. To detect adversarial attacks based on queries, the produced hash sequence is then compared with those from earlier questions. Moreover, the goal of the study described by Li et al. [81] was to introduce a new defensive strategy known as robust training (RT). This approach aims to minimize both the robust risk and standard risk simultaneously. Furthermore, the scope of RT was expanded to a semisupervised mode to bolster adversarial robustness. This extension, SRT, proved effective since the robust risk is unrelated to the true label, and the previously p-bounded neighborhood was broadened to encompass various perturbation types. The research outlined by Lu et al. [82] delved into common backdoor attacks within FL, encompassing model replacement, and adaptive backdoor attacks. On the basis of the initiation round, backdoor attacks were divided into convergence-round and early-round attacks. Researchers have proposed two different security strategies: one that uses backdoor neuron activation to address early-round attacks and the other that uses model preaggregation and similarity assessment to identify and remove backdoor models during convergence-round attacks. Li et al. [83] proposed an effective defense strategy by optimizing the kernels of support vector machines (SVMs) with a Gaussian kernel to counter evasion attacks. In addition, Hassanin et al. [84] aimed to develop an attack-agnostic defense method that integrates a defensive feature layer into a well-established DNN architecture. Through this integration, the effects of illegal perturbation samples in the feature space are lessened. Liu and Jin [85] explored the creation of deep neural architectures via a multiobjective evolutionary algorithm that is resistant to five common adversarial attacks. Furthermore, Pestana et al. [86] presented for the first time a class of robust images that are easy to defend against adversarial attacks and that recover better than random images. To improve the adversarial robustness of deep hashing models, the focus has been on investigating semantic-aware adversarial training (SAAT) [87]. Shi et al. [88] proposed an attack-invariant attention feature-based defense model (AIAF-Defense) in an effort to improve the defensive model’s capacity for generalization. In the study by Yamany et al. [89], in autonomous vehicle (AV) contexts, a unique optimized quantum-based federated learning (OQFL) framework was created to automatically update hyperparameters in FL via a variety of adversarial techniques. Lee and Han [90] suggested a causal attention graph convolutional network (GCN), and Zha et al. [91] presented a clear and innovative method for performing adversarial steganography while improving the opponents’ requirements. In addition, Rodríguez-Barroso et al. [92] discussed robust filtering of one-dimensional outliers (RFOut-1d), a novel federated aggregation operator, as a robust defense against backdoor attacks that poison models. In addition, in the study by Kanwal et al. [93], the aim was to present a feature fusion model that integrates a pretrained network model with manually created features. The objective is to obtain robust and discriminative characteristics. On the other hand, one paper used datasets of text and images; in the investigation by Nair et al. [94], a privacy-preserving framework named Fed_Select was introduced. This framework focuses on ensuring user anonymity in Internet of medical things (IoMT) environments when analyzing large amounts of data under the framework of FL. Fed_Select minimizes potential vulnerabilities during system training by reducing the gradients and participants through alternative minimization. The framework guarantees user anonymity by using hybrid encryption approaches and runs on an edge computing-based architecture. It also has the added benefit of lessening the strain on the central server. These studies present a vast array of defense strategies against adversarial attacks but suffer from several disadvantages. They lack coherence and clarity, overwhelming the reader with technical details. Moreover, these studies may overlook real-world applicability or scalability, hindering their practical utility.

4.2.2 Malware, intrusion, and anomaly detection

This section includes detecting malware, hacks, anomalies, or any threats through adversarial attacks, as this section consists of 7 contributions out of 51.

Numerous studies have been conducted using text datasets. Gungor et al. [95] presented RObust Layered DEFense against adversarial attacks toward IIoT ML-IDMs, which is based on the use of the denoising autoencoder for a better prediction result, as well as protection. In the study by Shaukat et al. [96], ten malware detectors based on neural networks were created. One of these detectors was trained without being exposed to adversarial attacks, but the other nine were trained via a specific adversarial approach. A novel technique is presented to account for the features of various adversarial attacks and leverage the effectiveness of these detectors against evasion tactics. Using a mix of various adversarial approaches, a neural network is trained in this manner, yielding the best performance out of all 11 detectors. Rathore et al. [97] developed a proactive adversary-aware framework to build Android malware detection models that are more effective when faced with hostile obstacles. In addition, Jia et al. [98] introduced the ERMDS, which uses a wide range of model-agnostic adversarial cases to attempt to provide a more realistic assessment of model performance. These studies have several drawbacks. These methods lack real-world applicability because they focus solely on limited datasets. Furthermore, while some techniques show promise, training methods may not adequately prepare models for diverse adversarial scenarios.

Moreover, Lin et al. [99] answered several important questions: (1) Which adversarial attack function is the most effective at eluding an ML-based network intrusion detection systems (NIDSs)? (2) Which ML algorithm is resilient to hostile attacks? (3) To what extent does the transferability property of an adversarial attack affect the performance of an ML-based NIDS? (4) How can different adversarial assaults be thwarted against an ML-based NIDS? (5) How can the particular ML model that an ML-based NIDS employs be found? However, in the fields of anomaly, intrusion, and malware detection, Xue et al. [100] aimed to provide an early warning system and line of defense against adversarial assaults for electromyography (EMG) signals by recommending a correlation feature on the basis of the Chebyshev distance between neighboring channels. Kopcan et al. [101] proposed systems for identifying anomalies in autonomous transport, encompassing roads, railways, and unmanned aerial vehicles. Two anomaly detection models, namely, an adversarial autoencoder (AAE) and a deep convolutional generative adversarial network (DCGAN), were developed on the basis of the frameworks introduced in Autoencoders (2020) and Deep (2020). The training process utilized image datasets, including the MNIST, Fashion-MNIST, and CIFAR10 datasets. This study has several limitations. They may not fully consider the diverse nature of real-world adversarial attacks or their potential impact on intrusion detection systems. In addition, the proposed solutions might not be sufficiently robust or scalable to handle evolving threats across various domains, potentially limiting their effectiveness in practical applications.

4.2.3 Generative adversarial attack and defense strategy

Within the generative adversarial attack and defense strategy category, 7 of the 51 papers focused on two subcategories: the papers that used text datasets and the papers that used image and graph datasets. These papers explore how to generate an adversarial attack and how to defend against this attack.

In the field of text datasets, two papers were published. Zhan et al. [102] presented MalPatch as a method of adversarial attack against DNN-based malware detection systems. MalPatch produces attack-independent adversarial patches to attack various types of detectors; it injects the patches into malware samples to show the evading results and discusses the countermeasures. In the study by Katebi et al. [103], a DL approach for clustering malware in sequential data was presented, and its vulnerability to adversarial attacks was investigated. The method is applied to Android application data streams via static features from the Drebin, Genome, and Contagio datasets. Postattack, deep clustering algorithms yield an FPR exceeding 60% and an accuracy below 83%. However, implementing the suggested defense method mitigates FPR and enhances accuracy on the basis of the findings. In the study by Zhuo et al. [104], a novel black-box attack approach was introduced, which imposes a stringent constraint on a safety-critical industrial fault classification system. This method limits perturbations to a single variable to create adversarial samples. In addition, variable selection is guided by a Jacobian matrix, which makes hostile samples invisible to the human eye in the dimensionality reduction space. By using AT, the research suggests a matching defense tactic that successfully thwarts one-variable attacks and improves classifier prediction accuracy. These studies present notable shortcomings. While the DL approach shows promise for clustering malware, its susceptibility to adversarial attacks raises concerns about its robustness in real-world scenarios. Despite the proposed defense methods, the postattack performance metrics indicate significant vulnerabilities, highlighting the need for more resilient solutions. Similarly, the introduction of a novel black-box attack underscores potential weaknesses in safety-critical systems. Although the research suggests effective defense tactics, the study’s focus on mitigating one-variable attacks may overlook broader security concerns and fail to address multifaceted adversarial threats.

Conversely, in the domain of image and graph datasets, Husnoo and Anwar [8] sought to develop a one-pixel danger model for an IIoT environment. It introduces an innovative image recovery defense mechanism using the accelerated proximal gradient method to identify and counteract one-pixel attacks. Using the CIFAR10 and MNIST datasets, the experimental results revealed the high efficacy and efficiency of the suggested solution in identifying and thwarting such attacks within advanced neural networks, notably LeNet and ResNet. Zhao et al. [105] created adversarial examples to trick image captioning models to protect private information contained within photos. With five versions, these user-oriented adversarial examples enable users to conceal or change important information in the text output, thus protecting personal information from picture captioning models. Xu et al. [106] created two cutting-edge defensive techniques to address hostile cases in deep diagnostic models: misclassification-aware hostile training (MAAdvT) and multPerturbation adversarial training (MPAdvT). They examined quantitative classification results, intermediate features, feature discriminability, and label correlation for both original and adversarially altered images, delving into the investigation of how adversarial cases affect models. Finally, Soremekun et al. [107] focused on strengthening robust models developed with projected gradient descent (PGD)-based robust optimization by introducing and thwarting backdoor assaults. These studies have several drawbacks. While the proposed defense mechanism shows promise against one-pixel attacks, its applicability to real-world scenarios beyond experimental datasets remains uncertain. In addition, the study may lack comprehensive evaluation across diverse neural network architectures and datasets, potentially limiting its generalizability.

4.2.4 Defense robustness

Within this category, 10 articles of the 51 selected papers were further divided into two distinct subcategories: text datasets and image and graph datasets. In the field of text datasets, Roshan et al. [52] discussed crucial NIDS topics, adversarial attacks, and defense mechanisms for strengthening ML- and DL-based NIDS. Charfeddine et al. [108] offered a detailed understanding of ChatGPT’s impact on cybersecurity, privacy, and enterprises concerning various adversarial and protective concepts, including the injection of malicious prompts and NIST security frameworks. First, it suggests secure practices for enterprises; second, it raises some ethical issues; and third, it analyzes potential threats in the near future and the solutions to them. Broadly, Khaleel [109] proposed a method to improve the defense mechanisms of AI learning models used in cybersecurity. On the basis of the Edge-IIoTset dataset for IoT and IIoT applications, techniques such as AT and input preprocessing were incorporated into the study.

In the context of defense robustness, which uses image and graph datasets, the research presented by Meng et al. [110] delves into various classical and cutting-edge adversarial defense strategies within electroencephalogram (EEG)-based brain‒computer interfaces (BCIs). In particular, the article evaluates nine defense strategies on two EEG datasets and three convolutional neural networks (CNNs), creating a thorough benchmark to determine each strategy’s efficacy in the context of BCIs. To establish a proper framework for systematically solving the facial image data privacy issue, Ul Ghani et al. [111] proposed the use of a privacy-preserving self-attention GAN in conjunction with clustering analysis and a blockchain. The CelebA outperformed the state-of-the-art methods in terms of image realism and privacy preservation for various use cases. Finally, the objectives of the study by Wei et al. [112] were to improve the robustness of DNNs against targeted bit-Flip attacks in security applications. The proposed ALERT defense mechanism incorporates source-target-aware searching and weight random switch strategies while achieving high network accuracy. The primary goal of Shehu et al. [113] was to present LEmo, a cutting-edge technique for classifying emotions that uses facial landmarks and is resistant to hostile attacks and distractions. To compare LEmo with these seven cutting-edge techniques, researchers have compared it with neural networks (ResNet, VGG, and Inception-ResNet), emotion categorization tools (Py-Feat, LightFace, and Adv-Network, DLP-CNN), and anti-attack techniques (Adv-Network, Ad-Network). To assess the robustness of the LEmo approach, three different adversarial attack types and a distractor attack were used. In the study by Nayak et al. [114], to address the problem of giving a pretrained classifier verifiable robustness assurances within the limitations of a small amount of training data, the authors developed the DE-CROP technique. To train the denoiser, this method consists of two main steps: (1) creating a variety of boundaries and interpolated samples and (2) effectively integrating these created samples with sparse training data. The proposed losses that guarantee feature similarity between the denoised output and clean data at both the instance and distribution levels are used to train the denoiser. Yin et al. [115] aimed to study the practical security risks of the IIoT, investigating transferable adversarial attacks and proposing methods to enhance transferability while providing deployment guidelines against such threats. In addition, in the mentioned study [116], for AT, the authors suggest two approaches called distribution normalization (DN) and margin balance (MB). By standardizing each class’s features, the DN guarantees uniform variance in all directions. This normalization aids in removing intraclass directions that are simple to manipulate. On the other hand, MB serves the purpose of equalizing the margins between various classes. By doing so, it becomes more challenging to identify directions associated with smaller margins, thus making it harder to launch attacks on confusing class directions. These studies have certain limitations. While crucial topics in NIDSs, EEG-based BCIs, and defense mechanisms are discussed, an in-depth evaluation or comparison of the proposed strategies may be lacking, potentially hindering their practical applicability. While the LEmo technique shows promise in emotion classification, evaluations against adversarial attacks may lack comprehensive testing across diverse scenarios or datasets and face challenges in ensuring the generalizability of the DE-CROP technique across different datasets or domains because of its reliance on specific training data characteristics. Finally, while the suggested approaches for AT, DN and MB, aim to increase model robustness, their effectiveness in mitigating attacks across diverse datasets or scenarios may require further validation.

4.3 Deep and scientific analysis

This review studies defense strategies and methods for ML adversarial attacks on diverse applications, showing the most efficient and effective choices. The articles of this study have made some relevant suggestions and offered significant ideas, although they may need a few improvements. There is no available systematic comparison or benchmarking of the proposed defense approaches with existing state-of-the-art methods, which makes it difficult to evaluate the relative merits of all approaches comprehensively. Furthermore, different sections of the system have different evaluation metrics, which makes it difficult to directly equate the performance level across different areas. Therefore, this article offers a deeper understanding of the practical applicability and limitations of these methods. Future work may include a broad review of adversarial attack transferability against different defense techniques and the possible trade-offs between model robustness and interpretability.

On the other hand, even though the protection of industrial systems and the level of efficiency of algorithms in the industrial field are important, of the 51 papers that we finalized, we note only four papers [8,94,104,115] that discussed the industrial field. One of the groups was an analysis of how the attack was carried out, the second was how to preserve privacy via FL, and the third was how to preserve privacy via unified learning. Several interesting studies [8] have discussed how to defend against one-variable attacks, and according to these studies, the DNN algorithm was used. However, there are no other studies in this field, and our questions include the following: What of the other attack types? And other types of algorithms in this concern? This area is very expansive, and much work is needed in the future. Moreover, numerous studies have shown that classical FL security is still at risk of privacy attacks caused by data leakage and the opportunity for an adversarial attack during gradient transfer operations. Nevertheless, we have only come across one study concerning this aspect and on FL in the industrial field. Owing to the rise in AI methods, understanding the adversarial attack process is now critically essential. Nevertheless, the 51 papers included in the study did not use or address the explainability of ML algorithms (XAIs) during and after the attack, despite their importance.

Similarly, IoT applications are very critical, and the adversarial attack exposure challenge is very high. However, there are no studies in this respect except for the study that established the connection between industrial applications and IoT applications [8]. In addition, the implementation of new defense techniques will likely lead to the formation of more computationally complicated and difficult-to-implement components, and thus, they will be disadvantageous with respect to operability and scalability. In addition, the articles could gain quality from a more detailed analysis of the limitations, assumptions, and vulnerabilities of the suggested methods, which would give them a greater picture of the operational potential of the methods in real-life situations. However, the data from these surveys have observable limitations, and as a result, the overall conclusion emerges to improve defense techniques in ML applications.

5 Discussion

This section focuses on three key aspects related to the defense strategies and methods used in adversarial attacks: motivations, challenges, and recommendations.

5.1 Motivations

This section addresses five main topics related to the motivation for defending against adversarial attacks: (1) malware detection and cybersecurity, (2) adversarial defense in specific domains, (3) ML vulnerabilities and AT, (4) privacy and ethical concerns in AI applications, and (5) FL and network intrusion detection. These groups help organize the paragraphs on the basis of common themes and topics related to defenses against adversarial attacks (Figure 12).

Motivations of protection against adversarial attacks in AI models. Source: Created by the authors.

5.1.1 Malware detection and cybersecurity

In the malware detection and cybersecurity roles, the dynamic evolution of malware and the vulnerability of adversarial attacks against DL-based detectors are discussed, emphasizing the importance of addressing these vulnerabilities. Numerous investigations have been carried out on this topic. Given the increasing concerns surrounding cybersecurity, the continuous evolution of malware remains a significant challenge. DL-based malware detectors are considered promising solutions, but their susceptibility to adversarial attacks underscores the importance of thorough validation. It is imperative to ensure the resilience of these detectors against such attacks [96]. In addition, AID-Purifier, introduced by Hwang et al. [77], is motivated by its role as a lightweight auxiliary network designed to purify adversarial examples. Notably, it introduces a unique purification approach using a straightforward discriminator, which distinguishes itself from previous purifiers by allowing purified images to exist in out-of-distribution regions. Antimalware learning (AML) is a new area of study that is crucial for understanding and defending against adversarial attacks to protect computer networks from various cybersecurity risks [52]. In the contemporary landscape, billions of users rely on Android smartphones, rendering them appealing targets for malware designers seeking lucrative opportunities [97].

5.1.2 Adversarial defense in specific domains

With respect to adversarial defenses in specific domains, the focus is on tailored defense strategies for specific areas, such as image recognition and industrial fault classification systems, which recognize the need for domain-specific protective measures. According to Meng et al. [110], their work represents a pioneering effort in the field of adversarial defense for EEG-based BCIs, which holds significant importance for the practical implementation of BCIs. Furthermore, by comparing nine contemporary and traditional defense strategies across three CNN models and two EEG datasets with different assault scenarios, they created a standard for adversarial protection in BCIs. Similarly, attribution guided sharpening (AGS) creatively uses attribution values produced by a nonrobust classifier to direct the adversarial noise denoising process, as proposed by Perez Tobia et al. [76]. This approach serves as a novel defense strategy for nonrobust models without the need for additional training. Research on emotion categorization has gained significance because of the proliferation of intelligent systems, including human–robot interactions. DL models, as highlighted in the study by Shehu et al. [113], have excelled in various classification tasks. However, their vulnerability to diverse attacks stems from their homogeneous representation of knowledge. Inspired by the ability of deep image priors to capture extensive image statistics from a single image, a robust defense method against adversarial examples is needed [78]. Furthermore, Zhuo et al. [104] were motivated by the absence, to date, of proposed and analyzed adversarial attack and defense methods specifically tailored for industrial fault classification systems. The rationale behind [112] was to meet the demand for large-scale usage of DNNs in security-sensitive domains that include self-driving cars and health care.

5.1.3 ML vulnerabilities and AT

The following sections discuss ML models’ susceptibility to adversarial attacks and how AT can be used to make ML models more resilient in the context of ML vulnerabilities and AT. The increasing dependence on ML within code-driven systems for smart devices and applications has become a crucial aspect of human reliance. While ML has been the subject of much research to address real-world problems such as image categorization and medical diagnosis, a significant gap exists in the understanding of adversarial attacks on safety-critical networked systems. This is critical because attackers can exploit adversarial samples to circumvent pretrained systems, resulting in heightened false positives in black-box attacks and finding patterns in data that diverge from a model of typical behavior, which is known as anomaly detection. Robust anomaly detection systems are essential across various domains [101]. The purpose of the study by Khaleel [109] was to contribute to filling this notable blind spot with respect to susceptibility to adversarial attacks in ML for cybersecurity use cases in the literature.

With the growing autonomy of vehicles on roads, railways, and unmanned aerial vehicles, there is an increasing need to address anomalous situations not covered by trained models, thereby increasing safety risks. Researchers have proposed AT, which involves adding adversarial samples to the training data, to improve the adversarial robustness of neural networks. This strategy, however, might cause overfitting to certain adversarial approaches, which would lower standard accuracy on clean images. In addition, there has been a notable surge in interest, both among academics and industry professionals, in defending DL systems against one-pixel attacks and guaranteeing their robustness and endurance [8]. AT has emerged as a potent defense method for minimizing adversarial risk, and accurate predictions for both benign examples and their perturbed counterparts within the ball have been proposed. The motivation behind the study by Li et al. [81] aimed to specifically and cooperatively increase adversarial robustness and accuracy.

As the complexity and abundance of malware threats continue to rise, traditional signature-based detection methods are experiencing diminished effectiveness [98]. Despite the commendable strides made in industrial applications through rapid ML development, these achievements coexist with notable security vulnerabilities [83]. Adversarial attacks, particularly data poisoning-enabled perturbation attacks in which false data are injected into models, profoundly affect the process of learning and degrade the accuracy and convergence rates without benefiting deeper networks [84]. While progress has been made in developing robust architectures, a common weakness persists in existing AT approaches, which focus on a singular type of attack during evaluation [85]. Even while DL offers state-of-the-art image detection solutions, vulnerabilities persist even against minor perturbations [86]. Deep hashing models are vulnerable to security threats when hostile cases are recognized, highlighting their susceptibility [87]. Current research highlights how ML algorithms are susceptible to transfer-based attacks in real-world black-box situations [115]. It is important to examine the resilience strategy that uses PGD as a universal first-order adversary, especially in light of how it behaves when facing attacks that are fundamentally different from backdoors [107]. Adversarial attacks, characterized by iterative sample movement, emphasize the importance of traversing decision boundaries for classification loss ascent [116]. The demonstrated vulnerabilities in GCNs further underscore the need for enhanced defense mechanisms [90]. In the pursuit of adversarial optimization, insufficient attention has been given to exploring the collaboration between cover enhancement and distortion adjustment, leading to potential local mode collapse [91]. Recognized as an effective strategy, AT involves considering benign and adversarial examples together in the training stage to bolster the robustness of DNNs [68]. The intricate interplay between adversarial attacks and model misbehavior through backdoors becomes apparent in the context of infected models performing well on benign testing samples [69]. In the domain of person reidentification (RE-ID) adversarial attacks, introducing noise or foggy material in images disrupts the model’s ability to recognize the similarity between gallery and probe images, resulting in drastic changes in recognition outcomes [93].

5.1.4 Privacy and ethical concerns in AI applications

The section highlights concerns about privacy breaches and ethical considerations in AI, particularly in image labeling, as well as potential misuse of AI in advertising, underscoring the ethical implications of AI applications. With respect to shifting focus, two studies explored privacy and ethical concerns in AI applications. The significance of the study by Katebi et al. [103] becomes evident in light of the escalating data streams within Android systems, underscoring the necessity for a fundamental analysis. The temporal nature of streaming data, which are available for a specific duration, poses a challenge because of the evolving data models. The proposed clustering methods should effectively handle this temporal characteristic.

Furthermore, adversaries creating malware strive to emulate benign samples, creating a challenge for precise clustering. To address this issue, static analysis of Android malware involves evaluating distinct features between benign and malicious samples. AI systems, which are utilized for the automatic labeling of images on social networks, hold potential for positive applications, such as providing text descriptions for visually impaired individuals. However, concerns arise with the use of cross-modal techniques, raising issues about potential unethical purposes, such as analyzing personal information for advertising. This emphasizes the crucial need to prevent privacy breaches [105]. Owing to the large-scale adoption of DNNs, the threat of privacy attacks against DNN models is always increasing, and hence, intellectual property (IP) protection is needed for such models [70]. The basis for the study by Zhang et al. [74] was the increase in the use of computer vision and surveillance technologies, which threatened privacy on the basis of facial identity information. This spurred the creation of the RBGAN, with the intent of providing optimal privacy while being able to maintain high data utility through the generation of realistic images that preserve attributes.

5.1.5 FL and network intrusion detection

FL and network intrusion detection are examined in a set of papers addressing challenges and vulnerabilities in FL, which encompasses challenges associated with hyperparameter optimization and the vulnerability of NIDSs to adversarial attacks, underscoring the necessity for improved security measures. In this domain, a multitude of articles predominantly rely on techniques such as differential privacy, secure multiparty computation, and homomorphic encryption to safeguard the security of models and data. Nevertheless, these approaches frequently demonstrate inefficiency, particularly in sensitive domains such as healthcare or finance [94]. This inefficiency gives rise to concerns about the suitability of the current literature for application in IoMT systems. Concerns over user privacy are further heightened by FL servers’ sincere yet inquisitive attitudes. Establishing hyperparameter settings is crucial for FL performance efficiency, and the automated refinement of these parameters has the potential to contribute to the creation of reliable FL models [89]. As a distributed ML paradigm, FL faces susceptibility to various adversarial attacks because of its distributed nature and the limited access of the central server to data [92]. While NIDSs have embraced ML for detecting a broad spectrum of attack variants, the inherent vulnerability of ML to adversarial attacks poses a risk, potentially compromising ML accuracy in the process [99].

5.2 Challenges

In every research work, there are some challenges and obstacles facing researchers. This section discusses these difficulties in four groups (Figure 13).

Challenge groups of protection against adversarial attacks in AI models. Source: Created by the authors.

5.2.1 General challenges in adversarial attacks

This section examines the general challenges of adversarial attacks on ML models, including their susceptibility to static malware, time complexity, and need for substantial processing power. A significant concern in the analysis discussed in the study by Katebi et al. [103] was the considerable time complexity, especially when handling high-dimensional data, which results in longer processing times. In addition, similar to previous DL techniques, this approach required a significant amount of computing power, which was not readily accessible on Android operating system host devices at that time. FL, operating across independent devices with heterogeneous and unbalanced data distributions, faces heightened vulnerability to adversarial attacks, particularly backdoor attacks [82]. In addition, FL, an adversarial attack known as Byzantine poisoning, can be used against a decentralized training strategy that is carried out locally on devices without direct access to training data. It is difficult to handle the defense against these threats in an efficient manner given the inadequacy of existing defenses, as highlighted by Rodríguez-Barroso et al. [79]. Furthermore, a noteworthy difficulty lies in the efficient extraction of dependable semantic representations for deep hashing, impeding progress in adversarial learning and hindering its improvement [87]. The reason for prioritizing the technique of creating highlighted adversarial examples in a black-box scenario is grounded in its noteworthy success rate and practical feasibility, as highlighted by Choi et al. [80]. Even with its broad application, conventional FL is still vulnerable to adversarial attacks during gradient transfer procedures and data leakage [94]. In contemporary society, individuals are heavily reliant on social networks such as Facebook and WeChat, which have profoundly transformed our lifestyles. When users engage in activities such as giving thumbs-up, sharing, and commenting, their personal information becomes susceptible to threats [105].

5.2.2 Specific application challenges and defense approaches

This group focuses on specific application challenges, such as issues in fault classification accuracy. Numerous investigations have been carried out on specific application challenges and defense approaches. The primary defense against malware attacks is provided by antimalware/antimalware software products. However, the current literature indicates that prevailing malware detection mechanisms, such as signature and heuristic methods, struggle to address contemporary malware challenges [97]. In addition, a dataset with a variety of traits not observed during LB-MDS training is the primary obstacle to evaluating the robustness of LB-MDS [98]. They reported that as the value of the kernel parameter increases, hostile samples become increasingly undetectable in their thorough testing on three datasets [83]. Furthermore, prevailing defense methods offer resilience against specific attacks, but developing a robust defense strategy against unknown adversarial examples remains a formidable task [88]. While it does not directly control the feature space during training, AT is acknowledged as the most efficient method for improving adversarial robustness [116]. Adversarial steganography has shown state-of-the-art results in the sequential min–max steganographic game. Its goals are to fool steganalysis algorithms and improve embedding security. However, the existing approaches suffer from high computing costs and limited convergence [91]. Addressing defense against model-poisoning backdoor attacks, a significant challenge in FL, was addressed in a previous study [92].

Moreover, it was noted in another study that, compared with the IMDB dataset, the average sentence length of the SST2 dataset is significantly shorter, making the addition of a trigger to the SST-2 dataset more prone to causing significant disruptions and compromising sentence naturalness [69]. Recently, subtle changes in clean images, known as adversarial examples, have demonstrated the high susceptibility of DNNs. Several strong defensive approaches, such as ComDefend, address this problem by focusing on correcting adversarial samples with well-trained models that are derived from large training datasets that contain pairs of adversarial and clean images. These methods, however, ignore the wealth of internal priors included in the input images themselves and instead mostly rely on external priors acquired from massive external training datasets. This constraint hinders defensive model generalization against adversary cases with skewed image statistics relative to the external training dataset [78]. The challenge that forms the theme of the study by Yin et al. [72] was to develop defense mechanisms against adversarial patches, which deceive DL models by applying stickers or particular patterns on objects. This vulnerability was highly dangerous and affected security-critical domains such as security vision systems and self-driving, which we are familiar with. Finally, the difficulty in reidentification (RE-ID) stems from the uncertain bounding boxes of individuals; significant variations in brightness, position, background clutter, and obstruction; and the ambiguous presence of graphics [93].

5.2.3 Security in network traffic and malware detection

This topic addresses security in network traffic and malware detection, highlighting challenges in intrusion detection. Given the difficulty of training generative models and their subpar performance in scenarios with limited data, it becomes crucial to adopt an approach, as suggested by Nayak et al. [114], that generates additional data to mitigate overfitting. The study by Yamany et al. [89] highlights the potential of FL for data privacy but underscores its vulnerability to adversarial attacks, with a specific emphasis on data poisoning attacks that entail injecting malicious vectors during the training phase. In addition, the effective establishment of a resilient FL model against adversarial attacks relies significantly on the appropriate tuning of hyperparameters. In the study by Soremekun et al. [107], the main task was to make it easier for software to automatically identify insecure (backdoor-infected) ML components. The weakness of ML-based intrusion detection systems in the IIoT environment necessitates robust defensive strategies [95]. The matter discussed in the study by Charfeddine et al. [108] was related to the steps transferring in the ethical and logistical aspects of implementing ChatGPT in cyber protection. Considering the possibilities of the proposed solution for improving security activities alongside threatful applications, attention to the ethical use of applications, as well as the problem of effective defense within the NIST framework, was discussed. The problem stated by Ul Ghani et al. [111] consisted of meeting strict privacy constraints when working with facial image data due to the increasing use of facial recognition technology.

5.2.4 ML model vulnerabilities and robustness

This group explores ML model vulnerabilities and robustness, covering issues such as data-driven algorithm vulnerabilities, adversarial security concerns, challenges in adversarial steganography, and problems in NLP tasks. In the study by Hassanin et al. [84], incorporating adversarial examples during classifier training presented two key challenges. First, the features extracted from the input instances, which are employed in the classification step, must be robustly guarded against various adversarial attacks. This guarantees that features from both benign and adversarial instances belonging to the same class are closely aligned in the feature space. Second, achieving a clear separation of classification boundaries between different classes becomes imperative. According to Liu and Jin [85], by predicting the specific types of attacks that a ML model might encounter is practically impossible. In addition, Yin et al. [115] identified security vulnerabilities in existing ML models, where the inclusion of carefully crafted perturbations to input samples could lead to erroneous decisions.

Moreover, attacks causing graph distortion introduce bias that misguides model predictions; addressing this bias is crucial for robust GCNs [90]. Furthermore, Lin et al. [99] outlined three objectives: (1) to assess several protection strategies against adversarial attack functions to ascertain which one is the most successful for NIDSs. (2) Considering the scarcity of publicly available datasets with adversarial samples, we create an adversarial dataset for NIDSs. (3) To forecast an ML-based NIDS model on the basis of the outcomes of an adversary assault, the utility and challenges of identifying the underlying ML method are highlighted. This challenge stems from the diverse ML algorithms used in creating ML-based NIDSs. The use of DL has yielded excellent results in different areas. However, DL models face major difficulties in identification and protection against adversarial samples (AEs) [71].

As indicated in the study by Meng et al. [110], the defense tactics covered are not exclusive of one another; a defense strategy may combine several ideas. Nevertheless, many defense strategies are vulnerable to fresh attacks and can only repel certain kinds of attacks. Furthermore, since black-box assaults involve less knowledge of the target model than white-box attacks do, protecting against them is typically easier. Despite significant strides in DL over the past decade, susceptibility to adversarial attacks has persisted. These attacks can cause neural networks to anticipate things incorrectly because they resemble clean data. Furthermore, DL models frequently serve as “black boxes,” lacking explanations for their outputs, as noted in the study by Perez Tobia et al. [76]. Even though one-pixel attacks are unnoticeable to the human eye, they can have a substantial influence on DNN accuracy. Such attacks can have severe consequences in important fields such as AVs and healthcare [8]. DNN models that incorporate many data patterns have significantly increased the accuracy of fault classification. Nevertheless, these models, which are based on data, are vulnerable to adversarial attacks, and minute variations in the samples might result in imprecise fault predictions. Finally, Shi et al. [68] highlighted that DNNs might suffer severe performance degradation from attacks utilizing adversarial samples.

5.3 Recommendations

This section discusses future directions and recommendations for researchers in the field of defense against adversarial attacks (Figure 14).

Recommendation categories of protection against adversarial attacks in AI models. Source: Created by the authors.

5.3.1 Future directions in adversarial attack and defense research

This section discusses potential future research directions for adversarial attack and defense. Several studies have focused on future directions in adversarial attack and defense research. However, Shaukat et al. [96] covered various adversarial attacks, exploring the robustness of evasion techniques beyond the specified ten remains an open research direction. In addition, a future focus should include assessing false-positive and false-negative rates by poisoning benign samples. Future advancements in the study by Katebi et al. [103] are anticipated to improve generative adversarial network (GAN)-based attack and defense techniques . It is expected that using GAN methods as a data-generating methodology will produce remarkable results, making them useful weapons for both attack and defense. Several suggestions for additional studies are listed in the study by Meng et al. [110]. These include the following: (1) Optimizing resilient training to increase model accuracy on adversarial instances while maintaining maximum accuracy on regular cases. (2) Improving reliable extrapolation from unknown hostile data is a crucial aspect of RT. Given the variability in EEGs, robust generalization is especially vital for BCIs. (3) Developing input transformations specific to EEGs to concurrently enhance both accuracy and robustness. (4) Investigating defensive strategies for traditional ML models in the context of BCIs.