Deep learning assisted intraoperative instrument cleaning station for robotic scrub nurse systems


Lars Wagner, Sven Kolb

Abstract

Due to the ongoing shortage of qualified surgical assistants and the drive for automation, the deployment of robotic scrub nurses (RSN) is being investigated. As such robotic systems are expected to fulfill all indirect and direct forms of surgical assistance currently provided by human operating room (OR) assistants, they must also be capable of intraoperatively cleaning laparoscopic instruments, which are prone to contamination when electrosurgical techniques are used during minimally invasive procedures. We present a cleaning station for robotic scrub nurse systems that provides this intraoperative cleaning. The system uses deep learning to decide autonomously on the need for intraoperative cleaning to preserve instrument function. We performed configuration and durability tests to determine an optimal set of system parameters and to verify the system performance in an application context. The results of the configuration tests indicate that hard brushes in combination with a sodium chloride cleaning solution and a sequence of 3 s cleaning intervals provide the best cleaning performance with a minimal total cleaning time. The results of the durability tests show that the cleaning function is in principle guaranteed for the duration of a surgical intervention. Our evaluation tests have shown that our deep learning assisted cleaning station for robotic scrub nurse systems is capable of autonomous intraoperative cleaning of laparoscopic instruments, providing a further step towards the integration of robotic scrub nurse systems into the OR.

Zusammenfassung (Summary)

Due to the ongoing shortage of qualified surgical technologists (operationstechnische Assistenten, OTA) and the drive for automation, the use of robotic scrub nurses (RSN) is currently being investigated. Since such robotic systems are expected to fulfill all indirect and direct forms of surgical assistance currently provided by human OTAs, they must be able to perform the intraoperative cleaning of instruments, which are prone to contamination when electrosurgical techniques are used in minimally invasive procedures. We present a deep learning assisted cleaning station for RSN systems that enables intraoperative cleaning of laparoscopic instruments during minimally invasive procedures and decides autonomously on the need for intraoperative cleaning in order to preserve instrument function. We performed configuration and durability tests to determine an optimal configuration of system parameters and to verify the system performance in an application context. The results of the configuration tests show that hard brushes in combination with a sodium chloride cleaning solution and a cleaning interval of 3 s provide the best cleaning performance with minimal total cleaning time. The results of the durability tests show that the cleaning function is in principle guaranteed for the duration of a surgical intervention. Our evaluation has shown that our deep learning assisted cleaning station for RSN systems is capable of autonomous intraoperative cleaning of laparoscopic instruments, which represents a further step towards the integration of RSN systems into the OR.

1 Introduction

In the past few years, the application of robotic scrub nurse systems (RSN) has been investigated in the scientific community [1] due to the ongoing shortage of qualified operating room (OR) assistants [2] and the drive for automation. Robotic scrub nurses assist surgeons in the selection and delivery of surgical instruments. In this regard, such robotic systems should fulfill all indirect and direct forms of surgical assistance that are currently provided by human OR assistants. This includes not only the provision of required instruments and the reception of instruments and surgical specimens that are no longer required, but also the intraoperative cleaning of instruments in order to ensure a complication-free surgical workflow. Current RSNs such as the well-known Gestonurse [3] and the 3D gaze-guided [4] systems do not address the possibility of intraoperative cleaning. To provide a fully supportive RSN system, we are developing the Situation Aware Sterile Handling Arm for the OR (SASHA-OR), a robotic platform that is context-sensitively integrated into the surgical workflow of minimally invasive interventions and can make autonomous decisions about the delivery and cleaning of laparoscopic instruments.

The cleaning aspect in particular has gained importance with the increasing use of electrosurgical techniques, which have become indispensable during minimally invasive procedures due to significant advantages compared to conventional cutting techniques using a scalpel, including time benefits [5] as well as minimization of patient blood loss [6]. Electrosurgery or high-frequency surgery refers to the application of high-frequency electric current to cut tissue (electrotomy) or seal bleeding vessels (electrocoagulation) [7]. Generally, a distinction is made between these two types of application. In electrotomy, when tissue is cut with electric current, the current heats the tissue very quickly so that the cell wall explodes due to the resulting pressure. In contrast, in coagulation, the current heats the tissue more slowly, so that the extracellular and intracellular fluids evaporate. As a result, the tissue shrinks and open blood vessels are sealed [5]. However, both monopolar and bipolar application techniques result in adhesions and contamination on the laparoscopic instrument end effectors [8].

Sterile OR assistants therefore perform intraoperative cleaning procedures to roughly remove residual blood and tissue that can interfere with the workflow of a surgery [5]. Inadequate removal of organic contaminants increases the risk of intraoperative complications. For example, blood adhering to the shaft of an instrument increases the resistance during insertion into the trocar shaft [9]. When a used instrument is handed back, the sterile OR assistant cleans it by hand with a suitable textile such as an abdominal linen. Alternatively, the instrument can be wiped with a soft, disposable cloth soaked in cleaning fluid [10] or with a sterile, lint-free sponge moistened with water [11]. This process is illustrated in Figure 1.

Figure 1: Sterile OR assistant cleans a laparoscopic instrument intraoperatively with a disposable cloth soaked in cleaning fluid.

To extend RSN systems with the capability of intraoperative cleaning, we present a deep learning assisted cleaning station for robotic scrub nurse systems. The cleaning station provides autonomous intraoperative cleaning of laparoscopic instruments during minimally invasive procedures. In addition, the system uses deep learning methods to decide on the need for intraoperative cleaning to preserve instrument function.

2 Methods

2.1 Workflow integration

For autonomous decision-making on the need for intraoperative cleaning, a camera mounted to the robot arm is used. In the first step, the camera detects the tip or end effector of the instrument after it is placed back on the instrument tray by the surgeon. The system then uses the image section of the instrument tip to decide whether contamination by residual blood or tissue is present and the instrument should be cleaned. If the system decides that cleaning is required, the RSN system picks up the laparoscopic instrument at the shaft and moves to the cleaning station attached to the robotic platform. At the cleaning station, the instrument is immersed in the cleaning container and cleaned. After the cleaning process, the robotic handling arm places the instrument back on the instrument tray.
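The sequence of steps described above can be read as a small state machine. The following is a minimal sketch of that reading, not the control software of the robotic platform; the step names and the `next_step` and `run_workflow` helpers are hypothetical and introduced only for illustration.

```python
from enum import Enum, auto
from typing import List


class Step(Enum):
    DETECT_TIP = auto()
    CLASSIFY_CONTAMINATION = auto()
    PICK_UP = auto()
    CLEAN = auto()
    RETURN_TO_TRAY = auto()
    DONE = auto()


def next_step(step: Step, needs_cleaning: bool) -> Step:
    """Return the step that follows `step` in the described workflow."""
    if step is Step.DETECT_TIP:
        return Step.CLASSIFY_CONTAMINATION
    if step is Step.CLASSIFY_CONTAMINATION:
        # A clean instrument simply stays on the tray.
        return Step.PICK_UP if needs_cleaning else Step.DONE
    if step is Step.PICK_UP:
        return Step.CLEAN
    if step is Step.CLEAN:
        return Step.RETURN_TO_TRAY
    return Step.DONE


def run_workflow(needs_cleaning: bool) -> List[Step]:
    """Trace all steps for one instrument placed back on the tray."""
    step = Step.DETECT_TIP
    trace = [step]
    while step is not Step.DONE:
        step = next_step(step, needs_cleaning)
        trace.append(step)
    return trace


print([s.name for s in run_workflow(needs_cleaning=True)])
```

In this sketch the only branching point is the contamination decision; every other transition is fixed.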

2.1.1 Autonomous cleaning decision

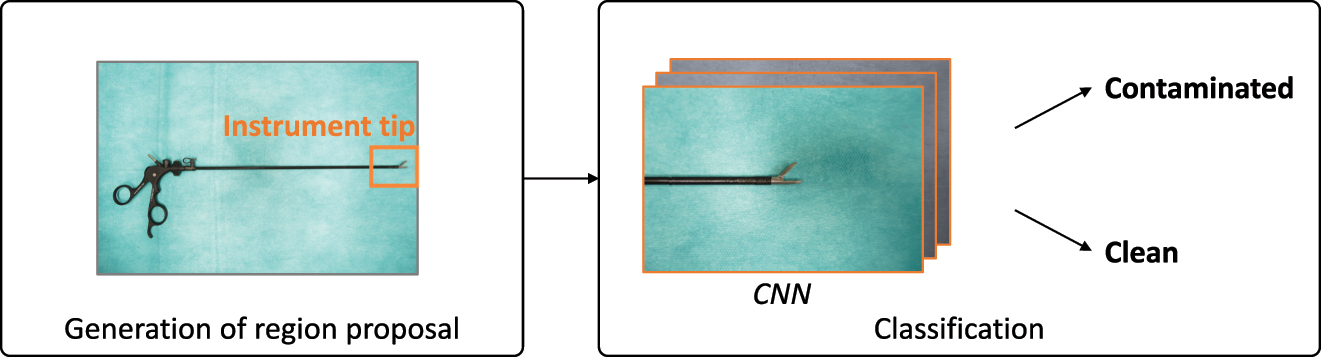

The computational pipeline of the autonomous cleaning decision can be divided into the detection of the instrument tip and the classification of its contamination. The proposed system, illustrated in Figure 2, is implemented in Python and deployed on the NVIDIA Clara AGX Dev Kit.

Figure 2: Computational pipeline of the two-stage algorithm.

2.1.1.1 Tip detection

For instrument tip detection, we use the YOLOv5 architecture [12]. To create a dataset for training and evaluation of the proposed method, 3694 images of laparoscopic instruments were acquired from different heights above the instrument tray used in our experimental setup. The images were captured with an Intel RealSense D435i depth camera. The laparoscopic instruments included those particularly prone to contamination during minimally invasive procedures, such as the scissors, the probe excision forceps, the grasping forceps and the bipolar grasping forceps. All images were annotated with bounding boxes on the instrument tip using the annotation tool CVAT [13]. The generated dataset was shuffled and split into a training (70 %), validation (20 %) and test (10 %) dataset. We trained the YOLOv5m model for a total of 30 epochs on the training set with a batch size of 16 and an initial learning rate of 0.01, which was reduced to 0.001 by the end of the 30 epochs. The hyperparameters, including bounding box size, batch size, and learning rate schedule, were tuned on the validation set. To avoid overfitting and improve overall performance, online data augmentation was applied during training. It did not change the number of available training images, but randomly altered the available images in each epoch.
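The shuffle-and-split step can be sketched as follows. This is a minimal illustration under stated assumptions: the file names, the fixed seed and the rounding of the split sizes (truncation, with the remainder going to the test set) are not given in the text and are chosen here only to make the example reproducible.

```python
import random
from typing import List, Sequence, Tuple


def shuffle_and_split(
    items: Sequence[str],
    train_frac: float = 0.7,
    val_frac: float = 0.2,
    seed: int = 0,
) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle `items` and split them into training, validation and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility (assumption)
    n_train = int(train_frac * len(shuffled))
    n_val = int(val_frac * len(shuffled))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder, roughly 10 %
    return train, val, test


# Hypothetical file names standing in for the 3694 annotated images.
image_ids = [f"img_{i:04d}.png" for i in range(3694)]
train, val, test = shuffle_and_split(image_ids)
print(len(train), len(val), len(test))
```

With these assumptions the three subsets are disjoint and together cover all 3694 images.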

2.1.1.2 Contamination level

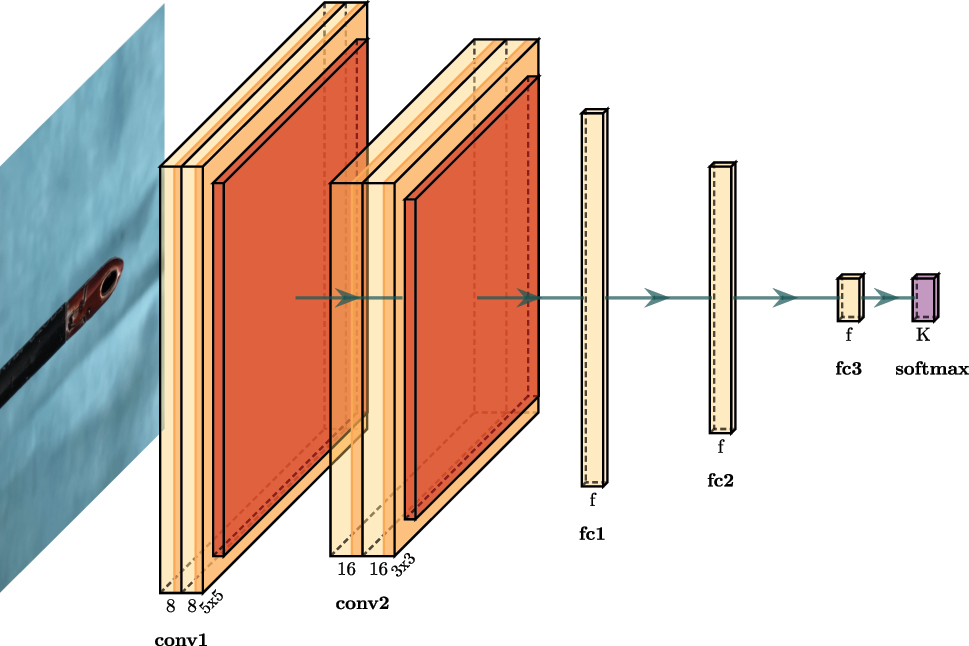

While the YOLOv5 network focuses only on instrument tip detection, we use a simple Convolutional Neural Network (CNN) at the second stage to decide on the need for cleaning an instrument. For this purpose, the region of interest generated by the YOLOv5 network is resized to 128 × 128 pixels. The CNN consists of two convolutional layers [5 × 5 conv, 8], a max pooling layer [5 × 5], two convolutional layers [3 × 3 conv, 16], a max pooling layer [3 × 3] and three fully connected layers. Each layer is activated by the ReLU function, as shown in Figure 3. For training the CNN, we acquired 3376 images of the above-mentioned laparoscopic instruments in clean and contaminated conditions. As in the tip detection pipeline, the generated dataset was shuffled and split into a training, validation, and test dataset. The CNN was trained for a total of 15 epochs on the training set with a batch size of 16 and an initial learning rate of 0.01. We also augmented and normalized the training dataset to avoid overfitting.

Figure 3: Convolutional neural network for contamination level detection.
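To make the layer stack more concrete, the following sketch walks a 128 × 128 input through the convolutional and pooling layers and reports the feature-map size after each one. The text does not state stride or padding, so the sketch assumes stride 1 and no padding for the convolutions and a pooling stride equal to the pooling kernel; with other settings the numbers differ.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int) -> int:
    """Output width of a convolution with stride 1 and no padding (assumed)."""
    return size - kernel + 1


def pool_out(size: int, kernel: int) -> int:
    """Output width of max pooling with stride equal to the kernel (assumed)."""
    return (size - kernel) // kernel + 1


def feature_map_sizes(input_size: int = 128) -> List[Tuple[str, int, int]]:
    """Return (layer, channels, width) after each layer of the described stack."""
    stack = [
        ("conv 5x5", 8, lambda s: conv_out(s, 5)),
        ("conv 5x5", 8, lambda s: conv_out(s, 5)),
        ("max pool 5x5", 8, lambda s: pool_out(s, 5)),
        ("conv 3x3", 16, lambda s: conv_out(s, 3)),
        ("conv 3x3", 16, lambda s: conv_out(s, 3)),
        ("max pool 3x3", 16, lambda s: pool_out(s, 3)),
    ]
    sizes = []
    width = input_size
    for name, channels, step in stack:
        width = step(width)
        sizes.append((name, channels, width))
    return sizes


sizes = feature_map_sizes()
_, channels, width = sizes[-1]
flattened = channels * width * width  # input size of the first fully connected layer
for name, ch, w in sizes:
    print(f"{name:<13} -> {ch} x {w} x {w}")
print("flattened features:", flattened)
```

Under these assumptions the first fully connected layer would receive a comparatively small feature vector, which is consistent with the description of the network as simple.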

The tip detection provides a potential bounding box of the instrument tip for each frame at time $t$, together with its output probability $p_{\mathrm{TD},t}$. The contamination level detection provides the class $c_{\mathrm{CL},t}$ (clean = 0, contaminated = 1) and its output probability $p_{\mathrm{CL},t}$. For robust detection, and to obtain a starting signal $s_t$ for the manipulation of an instrument, we aggregate the normalized information of the last $N = 24$ frames into $s_t$.

If $s_t$ exceeds 0.5, the cleaning task is initiated.
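The aggregation formula itself is not reproduced in the text, so the following sketch shows only one plausible reading and should not be taken as the method used in the system. It assumes that the per-frame evidence is the product of the tip detection probability, the contamination probability and the class label, averaged over a sliding window of 24 frames. The class name `CleaningTrigger` and its methods are hypothetical.

```python
from collections import deque


class CleaningTrigger:
    """Sliding-window aggregation of per-frame detections (assumed form)."""

    def __init__(self, window: int = 24, threshold: float = 0.5) -> None:
        self.window = window
        self.threshold = threshold
        self._evidence = deque(maxlen=window)  # keeps only the last `window` frames

    def update(self, p_td: float, c_cl: int, p_cl: float) -> float:
        """Add one frame and return the aggregated score in [0, 1]."""
        # ASSUMPTION: a frame counts as evidence only if it is classified as
        # contaminated (c_cl = 1), weighted by both output probabilities.
        self._evidence.append(p_td * p_cl * c_cl)
        # Dividing by the full window length keeps the score low until
        # enough frames have been observed.
        return sum(self._evidence) / self.window

    def should_clean(self, score: float) -> bool:
        return score > self.threshold


trigger = CleaningTrigger()
first_trigger_frame = None
for frame in range(1, 25):
    score = trigger.update(p_td=0.99, c_cl=1, p_cl=0.99)
    if first_trigger_frame is None and trigger.should_clean(score):
        first_trigger_frame = frame
print("cleaning triggered at frame", first_trigger_frame)
```

Averaging over a window in this way trades a short delay for robustness against single misclassified frames.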

2.1.2 Cleaning station

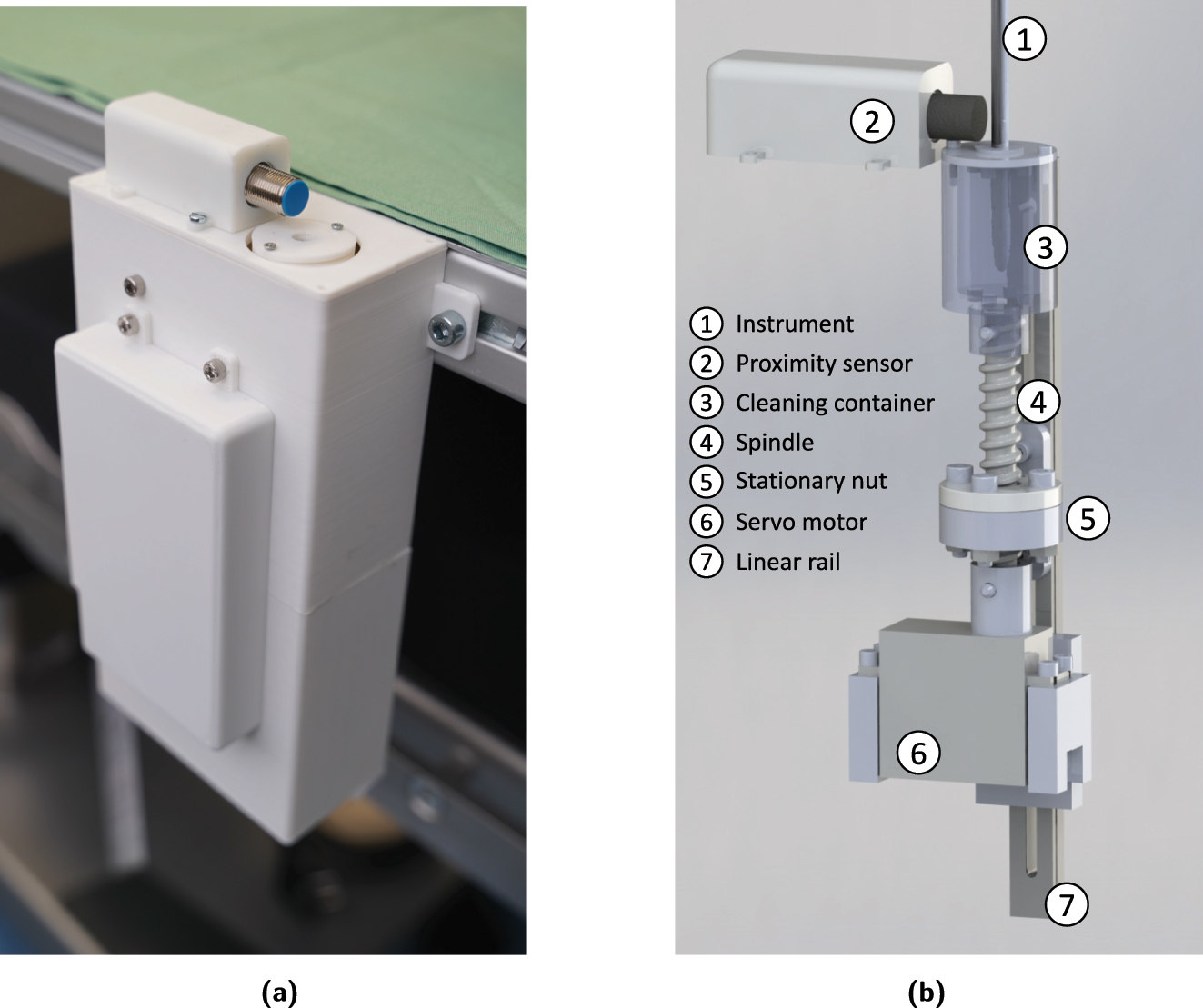

Once the cleaning task is initiated, the robotic scrub nurse system picks up the contaminated laparoscopic instrument at the shaft and moves it to the cleaning station. The cleaning station, which is shown in Figure 4, consists of an embedded cleaning container, a motion unit, an inductive proximity sensor and a power unit. The cleaning container is equipped with nylon cleaning brushes and a cleaning fluid and is 3D printed using Fused Deposition Modeling. This allows the container to be replaced after each operation to meet the required hygiene standards. The motion unit consists of a servo motor mounted on a linear rail, a stationary nut attached to the enclosure, as well as a spindle upon which the cleaning container is mounted. When the contaminated instrument is inserted into the cleaning container, the proximity sensor detects the metallic instrument tip and triggers the container motion sequence. The servo motor rotates the spindle, which in turn causes a simultaneous rotation and vertical translation of the cleaning container due to the stationary nut.

Figure 4: Hardware integration of cleaning station. (a) Cleaning station with enclosure attached to the robotic platform. (b) Actuator technology of the cleaning station.
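Because the spindle turns through a stationary nut, the rotation and the vertical translation of the cleaning container are coupled by the thread lead. The relation can be sketched as below; the lead of 4 mm per revolution is a hypothetical value chosen for illustration, since the thread geometry is not specified in the text.

```python
import math


def container_travel_mm(spindle_angle_rad: float, lead_mm: float) -> float:
    """Vertical travel of a spindle rotating through a stationary nut.

    `lead_mm` is the axial travel per full revolution (hypothetical value
    in the example below).
    """
    return lead_mm * spindle_angle_rad / (2.0 * math.pi)


# Three full revolutions with an assumed lead of 4 mm per revolution.
travel = container_travel_mm(3 * 2.0 * math.pi, lead_mm=4.0)
print(f"{travel:.1f} mm")
```

A single motor therefore produces both brush rotation and vertical brush motion along the instrument tip.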

2.2 Configuration and durability tests

To evaluate the main task of the cleaning station during operation, we performed configuration and durability tests that verify the system performance with respect to different components in an application context. Several variables of the cleaning station significantly affect the system performance. These include the total cleaning time $t_{\mathrm{clean}}$, the cleaning fluid $f_c$ and the type of cleaning brush $b_c$. We used a probe excision forceps as the test instrument, assuming that its challenging geometry is representative of a large number of laparoscopic instruments with a diameter of 5 mm.

To determine the best possible configuration of the cleaning station, we performed two test scenarios in which we contaminated a probe excision forceps by soaking it in corn syrup-based imitation blood (scenario $S_1$) and by coagulating animal tissue (scenario $S_2$). We changed the configuration of the cleaning station as shown in Table 1 by varying the cleaning solution (sodium chloride solution, NaCl, or distilled water, H2O) and the hardness of the nylon cleaning brushes (hard or soft). In addition, we examined different cleaning durations by using cleaning intervals of $t_{\mathrm{iv}} = 2$ s and $t_{\mathrm{iv}} = 3$ s.

Table 1: Configuration types.

| Configuration ID | Fluid type $f_c$ | Brush type $b_c$ |
|---|---|---|
| A | NaCl | Hard |
| B | H2O | Hard |
| C | NaCl | Soft |
| D | H2O | Soft |
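The full set of configuration test runs follows from combining the four configurations with the two cleaning intervals and the two contamination scenarios. A minimal sketch that enumerates this grid is given below; the dictionary layout and variable names are illustrative only.

```python
from itertools import product

# Configurations as listed in the table: ID -> (fluid, brush).
CONFIGS = {
    "A": ("NaCl", "Hard"),
    "B": ("H2O", "Hard"),
    "C": ("NaCl", "Soft"),
    "D": ("H2O", "Soft"),
}
INTERVALS_S = (2, 3)       # cleaning interval t_iv in seconds
SCENARIOS = ("S1", "S2")   # imitation blood, coagulated tissue

runs = [
    {
        "scenario": scenario,
        "config": config_id,
        "fluid": CONFIGS[config_id][0],
        "brush": CONFIGS[config_id][1],
        "t_iv_s": t_iv,
    }
    for scenario, config_id, t_iv in product(SCENARIOS, CONFIGS, INTERVALS_S)
]

print(len(runs), "test runs")
for run in runs[:4]:
    print(run)
```

Each of these runs corresponds to one complete cleaning process in the configuration tests.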

For each configuration, we conducted an entire cleaning process and checked the cleaning result after each cleaning interval $t_{\mathrm{iv}}$. The cleaning result was evaluated by a surgeon, who decided whether it was successful or not. If there was no improvement after $n = 5$ consecutive cleaning intervals, the cleaning process was aborted and evaluated as failed.

Since an instrument is cleaned several times during current minimally invasive interventions, the cleaning station should be able to perform the cleaning routine acceptably and repeatedly, at least as often as required to complete an entire surgical intervention. A change of cleaning fluid or brushes should not be necessary until after the intervention. To evaluate the durability, we again performed two tests in which we contaminated a probe excision forceps by soaking it in imitation blood (scenario $S_1$) and by coagulating animal tissue (scenario $S_2$). We chose the configuration of $t_{\mathrm{clean}}$, $t_{\mathrm{iv}}$, $f_c$ and $b_c$ that achieved the best results in the configuration tests and performed a sequence of $n$ cleaning procedures. After each cleaning procedure, the instrument was contaminated again. We stopped the test as soon as the surgeon noted an unsuccessful cleaning procedure.
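The evaluation procedure for a single cleaning process can be sketched as a loop over cleaning intervals. In this sketch the surgeon's assessment is replaced by a hypothetical callback `assess`, which returns "clean", "improved" or "unchanged" after each interval; the three labels, the function name and the `max_intervals` safety limit are assumptions made for illustration.

```python
from typing import Callable, Optional


def run_cleaning_process(
    assess: Callable[[int], str],
    t_iv_s: float,
    max_stalled: int = 5,
    max_intervals: int = 100,
) -> Optional[float]:
    """Return the total cleaning time in seconds, or None if cleaning failed.

    `assess(k)` stands in for the surgeon's rating after the k-th interval.
    """
    stalled = 0  # consecutive intervals without improvement
    for k in range(1, max_intervals + 1):
        verdict = assess(k)
        if verdict == "clean":
            return k * t_iv_s
        stalled = stalled + 1 if verdict == "unchanged" else 0
        if stalled >= max_stalled:
            return None  # aborted and evaluated as failed
    return None  # safety limit reached (not part of the described protocol)


# An instrument judged clean after the third 3 s interval.
print(run_cleaning_process(lambda k: "clean" if k >= 3 else "improved", t_iv_s=3))
# An instrument that never improves is aborted after five intervals.
print(run_cleaning_process(lambda k: "unchanged", t_iv_s=3))
```

The counter is reset whenever an improvement is observed, so only five consecutive intervals without improvement end the process as failed.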

3 Results

3.1 Autonomous cleaning decision

The results of the instrument tip detection with YOLOv5 are shown in Table 2. The trained model accurately detects the instrument tip, with a precision of 99.4 % and a recall of 98.8 % on the training set. The mean average precision at an intersection over union (IoU) threshold of 0.5 (mAP0.5) is 99.4 %. The performance difference between the test and training sets is small (at most 0.6 percentage points in any metric), indicating that there is no significant overfitting. The accuracy of the contamination level detection is 98.4 %. Both the precision of 97.6 % and the recall of 99.1 % are high, indicating robust detection of the contamination level. During the cleaning tests, every contaminated instrument was detected as contaminated, and the starting signal $s_t$ for the manipulation of a contaminated instrument by the robot was triggered after 2.2 ± 0.4 s on average. The processing time per frame is approximately 16 ms with a GeForce RTX 6000 GPU. Given the time available to the robot for making a decision, we consider this processing time sufficiently fast for use in a robotic application.

Table 2: YOLOv5 detection results on the dataset splits.

| Split | Precision | Recall | mAP0.5 |
|---|---|---|---|
| Training set | 0.994 | 0.988 | 0.994 |
| Validation set | 0.985 | 0.992 | 0.994 |
| Test set | 0.992 | 0.994 | 0.992 |
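Precision and recall can be combined into a single F1 score, which is sometimes easier to compare across splits. The F1 score is not reported in the text; the sketch below merely derives it from the reported precision and recall values.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)


# Reported (precision, recall) pairs.
reported = {
    "tip detection, training set": (0.994, 0.988),
    "tip detection, validation set": (0.985, 0.992),
    "tip detection, test set": (0.992, 0.994),
    "contamination level detection": (0.976, 0.991),
}

f1 = {name: f1_score(p, r) for name, (p, r) in reported.items()}
for name, value in f1.items():
    print(f"{name}: F1 = {value:.3f}")
```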

3.2 Configuration and durability tests

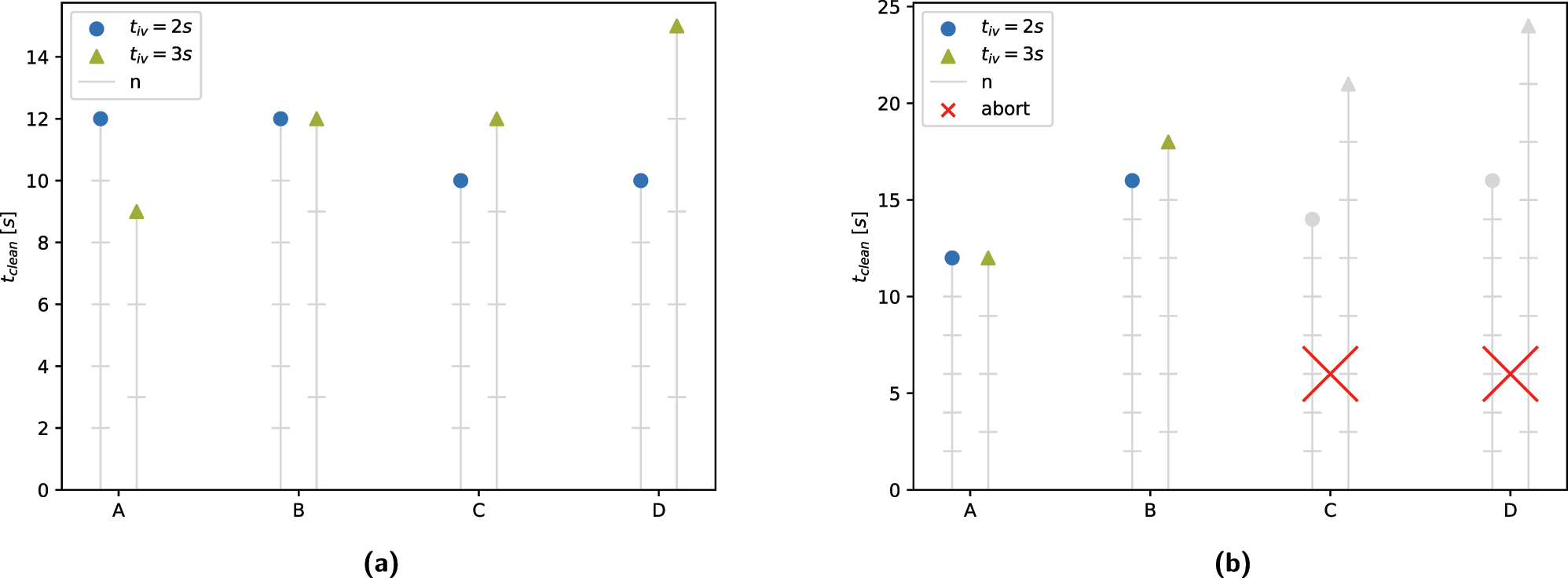

The aim of the configuration tests was to determine the ideal combination of system parameters for optimal system performance. The results of the tests, shown in Figure 5a and b, indicate that configuration A with a cleaning interval of $t_{\mathrm{iv}} = 3$ s resulted in a minimal total cleaning time of $t_{\mathrm{clean},1} = 9$ s in contamination scenario $S_1$ and $t_{\mathrm{clean},2} = 12$ s in $S_2$. Examining the cleaning intervals shows that the longer cleaning interval of $t_{\mathrm{iv}} = 3$ s yielded better cleaning results per interval than the shorter interval of $t_{\mathrm{iv}} = 2$ s, so that fewer cleaning intervals were required overall. Comparing the different combinations of cleaning brush $b_c$ and cleaning fluid $f_c$ reveals that the hard brushes removed contamination more effectively than the soft brushes. This is especially apparent in $S_2$, where the soft brushes failed to clean the instrument to a sufficient degree, regardless of the cleaning fluid or cleaning interval. Moreover, the use of NaCl as a cleaning fluid appears to improve the system performance compared to H2O, as the configurations using NaCl performed better than the ones using H2O in all examined scenarios.

Figure 5: Results of configuration tests. (a) Contamination scenario $S_1$. (b) Contamination scenario $S_2$.

Using the optimal configuration and cleaning interval obtained through the configuration tests, the aim of the subsequent durability tests was to evaluate the durability of a cleaning container under repeated use. For this purpose, we measured the performance of the system in configuration A with a cleaning interval of $t_{\mathrm{iv}} = 3$ s. The results of the durability tests show that the evaluated cleaning container withstood $n = 5$ consecutive cleaning procedures when removing blood residue, and $n = 4$ consecutive procedures when removing coagulated coarse tissue residue.

4 Discussion

The results show that the computational pipeline for autonomous contamination level detection is sufficiently fast and robust for operation during robot-assisted minimally invasive procedures. The two-stage algorithm provides accurate detection of the instrument tip as well as of the degree of contamination. The speed with which the algorithm decides on the need for cleaning can be considered comparable to that of a human OR assistant. Nevertheless, the system uses a supervised learning approach, which may lead to imperfect predictions on unseen data. A possible alternative could be an unsupervised learning approach that is trained to detect anomalies and thus generalizes better.

The configuration tests show that our proposed cleaning system is capable of removing contaminations such as coagulated coarse tissue residue and blood residue without any problems in a configuration using hard brushes and NaCl as cleaning fluid, with a cleaning interval of $t_{\mathrm{iv}} = 3$ s.

The durability tests show that the cleaning function is in principle guaranteed for the duration of a surgical intervention. However, for a more valid statement regarding durability, the tests should be reproduced with a larger number of test runs. Furthermore, the failure condition used in the durability tests could be reconsidered, as a hard limit after a single unsuccessful cleaning procedure does not necessarily represent the process performed during a manual intraoperative cleaning task.

In the course of our experimental evaluation, we identified further limitations of our system and the corresponding test setup. One main limitation is that we implemented only one cleaning method, which uses moving brushes; it would be interesting to investigate other modalities such as ultrasound baths or pressurized jets.

A challenge during the configuration tests was the repeatability of initial instrument contamination levels, as it was impossible to reproduce the exact contamination pattern for each test run. While this factor may have influenced the results of the cleaning tests, we chose to use this method of evaluation regardless, as in a real-world scenario no two instrument contaminations are identical either.

5 Conclusions

In this paper, we presented our deep learning assisted intraoperative instrument cleaning station for robotic scrub nurse systems. We conclude that the system ensures fast and robust detection of the contamination level of laparoscopic instrument tips via a two-stage deep learning algorithm. Using a modular cleaning station, our robotic scrub nurse can clean contaminated instruments by simply immersing the instrument into the autonomously actuated cleaning container. While the performance of the computational pipeline was comparable to that of a human OR assistant, we identified some constraints of the mechanical system offering further potential for improvement. Overall, the proposed system supports the general practicability of robotic scrub nurses for minimally invasive interventions.

About the authors

Lars Wagner received his B.Sc. degree in 2019 and M.Sc. degree in 2021, both in Mechanical Engineering from the Technical University of Darmstadt. He is currently a research associate at the Research Group MITI of the University Hospital rechts der Isar and pursuing the doctoral degree at the Chair of Robotics, Artificial Intelligence and Real-Time Systems at the Technical University of Munich. His research interests include robotics and multimodal machine learning in surgery.

Sven Kolb is a research associate at the Research Group MITI of the University Hospital rechts der Isar. Following a degree in Engineering Science, he completed his master's studies in Mechanical Engineering at the Technical University of Munich. His current area of research focuses on the development of a robotic telemedical examination system.

Patrick Leuchtenberger is a Mechanical Engineering student at the Technical University of Munich. His research interests include robotics and assistance systems in the medical domain.

Lukas Bernhard is the scientific lead of the Research Group MITI. After receiving a B.Sc. in Mechatronics, he specialized in biomedical engineering and robotics during his master’s studies. While currently being a doctoral candidate at the Chair of Robotics, Artificial Intelligence and Real-Time Systems at the Technical University of Munich, he is involved in several research projects with a strong focus on assistive robotics and autonomy for different application scenarios within the healthcare domain.

Alissa Jell is a resident for abdominal surgery at the Department of Surgery at the University Hospital rechts der Isar/TUM. The focus of her research is the combination and fusion of multiple diagnostic means with the help of AI to enhance the diagnostic yield, thus reducing time and costs in daily medical practice.

After graduating with research on combined laparoscopic-endoscopic procedures and receiving the postgraduate lecture graduation on transluminal interventions, Dirk was recognized as professor in surgery in 2018. Currently, he is a consultant surgeon, assistant managing director and lead for robotic and colorectal surgery at the University Hospital rechts der Isar/TUM. Dirk leads the Research Group MITI, which fosters collaborative research in the field of high-tech medicine, and which is closely linked to many partners within the clinic, the Technical University and industry. Since 2020 he has been recognized as the director of the centre for medical robotics and machine intelligence (MRMI) at the University Hospital rechts der Isar/TUM.

- Author contributions: All the authors have accepted responsibility for the entire content of this submitted manuscript and approved submission.

- Research funding: The project was funded by the Bavarian Ministry of Economic Affairs, Regional Development and Energy (StMWi) (grant number: DIK0372).

- Conflict of interest statement: The authors declare no conflicts of interest regarding this article.

References

[1] X. Sun, J. Okamoto, K. Masamune, and Y. Muragaki, “Robotic technology in operating rooms: a review,” Curr. Robot. Rep., vol. 2, pp. 333–341, 2021. https://doi.org/10.1007/s43154-021-00055-4.

[2] World Health Organization, “Global strategy on human resources for health: workforce 2030,” 2016.

[3] M. Jacob, Y.-T. Li, G. Akingba, and J. P. Wachs, “Gestonurse: a robotic surgical nurse for handling surgical instruments in the operating room,” J. Robot. Surg., vol. 6, no. 1, pp. 53–63, 2012. https://doi.org/10.1007/s11701-011-0325-0.

[4] A. Kogkas, A. Ezzat, R. Thakkar, A. Darzi, and G. Mylonas, “Free-view, 3D gaze-guided robotic scrub nurse,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, 2019, pp. 164–172. https://doi.org/10.1007/978-3-030-32254-0_19.

[5] M. Liehn, B. Lengersdorf, L. Steinmüller, and R. Döhler, OP-Handbuch: Grundlagen, Instrumentarium, OP-Ablauf, Berlin, Heidelberg, Springer-Verlag, 2016. https://doi.org/10.1007/978-3-662-49281-9.

[6] R. Kramme, Medizintechnik: Verfahren-Systeme-Informationsverarbeitung, Berlin, Heidelberg, Springer-Verlag, 2016. https://doi.org/10.1007/978-3-662-45538-8.

[7] M. Liehn, J. Köpcke, H. Richter, and L. Kasakov, OTA-Lehrbuch: Ausbildung zur Operationstechnischen Assistenz, Berlin, Heidelberg, Springer-Verlag, 2018. https://doi.org/10.1007/978-3-662-56183-6.

[8] T. Carus, Operationsatlas Laparoskopische Chirurgie: Indikationen-Operationsablauf-Varianten-Komplikationen, Berlin, Heidelberg, Springer-Verlag, 2014. https://doi.org/10.1007/978-3-642-31246-5.

[9] M. Liehn and H. Schlautmann, 1 × 1 der chirurgischen Instrumente, Berlin, Heidelberg, Springer, 2011. https://doi.org/10.1007/978-3-642-16924-3.

[10] Robert Koch-Institut (RKI), “Anforderungen an die Hygiene bei der Aufbereitung flexibler Endoskope und endoskopischen Zusatzinstrumentariums,” 2002.

[11] L. Cowperthwaite and R. L. Holm, “Guideline implementation: surgical instrument cleaning,” AORN J., vol. 101, no. 5, pp. 542–552, 2015. https://doi.org/10.1016/j.aorn.2015.03.005.

[12] G. Jocher, A. Stoken, J. Borovec, et al., Ultralytics/Yolov5: v7.0 – YOLOv5 SOTA Realtime Instance Segmentation, Zenodo, 2022.

[13] CVAT.ai Corporation, Computer Vision Annotation Tool (CVAT), Zenodo, 2023.

© 2023 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.

Articles in this issue

- Frontmatter

- Editorial

- Special issue: Minimal-invasive robotics

- Methods

- Musculoskeletal model-based control strategy of an over-actuated glenohumeral simulator to assess joint biomechanics

- Qualitative and quantitative assessment of admittance controllers for hand-guiding surgical robots

- Continuum robot actuation by a single motor per antagonistic tendon pair: workspace and repeatability analysis

- Development of an AI-driven system for neurosurgery with a usability study: a step towards minimal invasive robotics

- Compact flexible actuator based on a shape memory alloy for shaping surgical instruments

- Shape-sensing by self-sensing of shape memory alloy instruments for minimal invasive surgery

- Minimally invasive in situ bioprinting using tube-based material transfer

- Applications

- Deep learning assisted intraoperative instrument cleaning station for robotic scrub nurse systems
