Abstract

This paper presents a metasurface-based light receiver tailored for compact off-axis light detection and ranging (LiDAR) systems, addressing the critical challenge of simultaneously enhancing the field of view (FOV) and effective signal reception while adhering to strict size and weight limitations. A general design principle for the metasurface-based light receiver with large FOV capability is proposed, leveraging mapping relations to achieve optimal performance. As a proof of concept, a 20-mm-diameter 4-region metasurface device was designed and fabricated by deep ultraviolet (DUV) projection stepper lithography on an 8-inch fused silica wafer. The metasurface-based light receiver achieves a large FOV of ±30° and demonstrates a significant power enhancement ranging from 1.5 to 3 times at 940 nm when coupled with a 3-mm-diameter avalanche photodiode (APD). The innovation not only establishes a new paradigm for compact, high-performance LiDAR systems but also enables deployment in advanced fields such as unmanned aerial vehicles (UAVs) and miniaturized robots.

1 Introduction

Light detection and ranging (LiDAR) is a high-speed and high-accuracy technology for acquiring three-dimensional information, widely applied in fields such as self-driving cars [1], unmanned aerial vehicles (UAVs) [2], environmental monitoring [3] and ocean exploration [4]. A typical LiDAR system comprises two main components: the transmitting system and the receiving system. The transmitting system scans the target by dynamically deflecting a laser beam. The receiving system, which integrates both optical elements and detectors, captures the echo light signals reflected by the target. To enable LiDAR systems to receive weak signals with small size and low power consumption, avalanche photodiodes (APDs) [5], [6], [7] and single-photon avalanche diodes (SPADs) [8], [9] with single-photon detection capability are commonly employed as detectors. These detectors rely on optical elements that provide a large field of view (FOV) and enhance the strength of the received signals, thereby increasing the detection range and signal-to-noise ratio (SNR). Conventional optical configurations, composed of lenses and auxiliary optical elements such as fiber optic tapers and immersion lenses, can expand the FOV while maintaining the focusing capability. However, these configurations are bulky, heavy, difficult to install and limited in how far they can increase the FOV, making them unsuitable for lightweight and compact LiDAR systems with large FOVs [10]. Although some LiDAR systems that use APD arrays to enlarge the FOV have been proposed [11], [12], [13], these arrays involve complex manufacturing processes and significant costs, and still result in relatively large system volumes. Therefore, there remains a strong demand for a compact light receiver with a large FOV using a single detector.

Metasurfaces, as a novel class of diffractive optical elements, use subwavelength nanopillars arranged periodically on a thin substrate to manipulate the phase, amplitude and polarization of incident light [14]. Compared to conventional optical elements, metasurfaces are lightweight and ultrathin, offer flexibility in design and are compatible with semiconductor processes [15]. Metasurfaces have been extensively studied for imaging and beamforming applications [16]. In LiDAR transmitting systems, for example, metasurfaces have been used for transmissive beam steering and for enlarging the deflection angle [17], [18], [19]. However, the exploration of metasurfaces in non-imaging scenarios, such as light receiving, remains limited [20], [21]. The light receiver of a LiDAR system requires the metasurface to achieve signal enhancement across a large FOV. While large FOV metasurfaces have been investigated using various methods, such as cascaded metasurfaces [22], metasurface arrays [23], and quadratic metasurfaces [24], [25], [26], almost all of them are utilized for imaging applications like fingerprint detection [27], palm vein imaging [28] and wide-angle stereo imaging [29].

Currently, the primary fabrication method for metasurfaces is electron-beam (e-beam) lithography [30], which provides nanoscale patterning resolution. However, this kind of point-by-point technique is time-consuming, expensive and not well suited to mass production. As the diameter increases, the number of nanopillars grows significantly, making it challenging to fabricate large-aperture metasurfaces. In recent years, several viable fabrication methods for large-aperture metasurfaces have been proposed, such as DUV projection lithography [31], [32], [33], nanoimprint lithography (NIL) [34] and e-beam lithography based on the variable shaped beam (VSB) and character projection (CP) writing principles [35]. The maximum exposure field of DUV projection lithography is approximately 20–30 mm across, allowing a metasurface with a 20 mm diameter to be conveniently fabricated in a single exposure.

In this study, a large FOV metasurface-based light receiver for LiDAR systems is systematically investigated. Compared to conventional receiving systems, the metasurface-based light receiver has the capacity to significantly reduce the weight and size of the LiDAR system, thereby attaining a compact footprint. This paper first elucidates the design principle for the metasurface-based light receiver with large FOV capability, which is grounded in mapping relationships. It then introduces a metasurface fabrication method based on the DUV projection stepper lithography technique to satisfy the requirement for batch production of large-aperture metasurfaces. Subsequently, a 20-mm-diameter 4-region metasurface device with a ±30° FOV at 940 nm was designed and fabricated. The metasurface device can converge the echo signal onto a 3-mm-diameter APD located 15 mm away from the metasurface plane. The performance of the metasurface was experimentally evaluated, confirming a power enhancement ranging from 1.5 to 3 times. Finally, through the 3D imaging experiment conducted with a microelectromechanical system (MEMS) LiDAR, the feasibility of metasurface in practical applications is demonstrated.

2 Design and fabrication of the metasurface

2.1 Design

A general design methodology of the non-imaging metasurface for large FOV light receiving is elaborated in this subsection, as shown in Figure 1. The light receiver consists of an optical metasurface and an APD detector with a diameter of b (Figure 1a). The metasurface device, placed in the xOy plane, ensures efficient light signal reception by the detector under various incident angles across the entire targeted FOV. To begin with, we assume that the metasurface converges a normally incident plane wave into a point with a focusing phase profile Φ(x, y). To maintain the focal position unchanged under oblique incidence compared to normal incidence, the metasurface is further imparted with a linear phase compensation, and the total phase profile can be expressed as:

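where $\lambda$ is the working wavelength, $\mathbf{r} = (x, y) = r(\cos\varphi_r, \sin\varphi_r)$ is the position vector on the metasurface plane, and $\theta_k$ and $\varphi_k$ are the polar and azimuthal angles of the incident direction.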

Figure 1: Working principles of the metasurface-based light receiver. (a) Schematic diagram of the metasurface-based light receiver with a large field of view (FOV). (b) Graph of the designed staircase function $g_1(r)$. (c) Graph of the designed function $g_2(\varphi_r)$. (d) Phase profile of the 20-mm-diameter 4-region metasurface device.

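Subsequently, we consider a light-receiving metasurface capable of directing beams from various directions to the original focal point simultaneously. In this scenario, $\theta_k$ can take any value within $[0, \theta_{\max}]$, and $\varphi_k$ can take any value within $[0, 2\pi]$, where $\pm\theta_{\max}$ represents the targeted FOV. Accordingly, $r \in [0, R]$ and $\varphi_r \in [0, 2\pi]$, where $R$ denotes the radius of the metasurface. The design strategy involves ensuring that each incident direction $(\theta_k, \varphi_k)$ is assigned to a position $(r, \varphi_r)$ on the metasurface through the mapping relations $\theta_k = g_1(r)$ and $\varphi_k = g_2(\varphi_r)$.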

As an example to demonstrate the proposed methodology, a quadratic phase profile is selected for focusing and $g_1(r)$ is chosen as a staircase function. The metasurface is designed as (Figure 1b and c):

where $f$ is the focal length, $n$ (= 1, 2, …, $N$) indexes the distinct segments of $g_1(r)$, $R_n$ represents the boundary of the segmented ring regions, and $\theta_{kn}$ is a pre-defined constant. By this design, the metasurface device is divided into $N$ regions and each ring region is responsible for receiving signals within a specific sub-FOV, denoted as FOV$_n$. The overall FOV is obtained by combining the individual FOV$_n$ of all regions.

To obtain appropriate $\theta_{kn}$ values, more properties of the design should be analyzed. The quadratic phase profile converts the change in the incident angle of the beam into a horizontal displacement of the focal point in the focal plane as follows:

where $\alpha$ is the incident angle of the beam and $\phi(x, y)$ is the phase distribution of the outgoing light. Since the last term is independent of the position variables and does not affect the wavefront, each incident angle corresponds to a focus in the focal plane with an in-plane translation of $f\sin\alpha$. For a detector with a diameter of $b$, the maximum incident angle that keeps the focal position within the photosensitive area is $\alpha_{\max} = \arcsin(b/2f)$. Hence, the staircase function $g_1(r)$ can be detailed as:

In this case, $\theta_{kn}$ is the center angle of the specific FOV$_n$.
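The angle-to-displacement property used above can be checked by completing the square. The following is a sketch under an assumed sign convention (a converging quadratic phase written with a negative sign, and a plane wave tilted by $\alpha$ in the $xz$-plane); the paper's own expression may use a different but equivalent convention.

$$
\phi(x, y) = -\frac{\pi}{\lambda f}\left(x^2 + y^2\right) + \frac{2\pi}{\lambda}\,x\sin\alpha
= -\frac{\pi}{\lambda f}\left[\left(x - f\sin\alpha\right)^2 + y^2\right] + \frac{\pi f\sin^2\alpha}{\lambda}
$$

The bracketed term is again a quadratic focusing phase, now centered at $x = f\sin\alpha$, and the last term is a constant. If a ring region additionally carries a linear term $-\frac{2\pi}{\lambda}\,x\sin\theta_{kn}$ (an assumed form of the compensation), the same algebra places the focus at $f(\sin\alpha - \sin\theta_{kn})$, so the focus stays on a detector of diameter $b$ when $|\sin\alpha - \sin\theta_{kn}| \le b/(2f)$.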

Finally, the metasurface phase profile can be expressed according to Eq. (1):

By this design, each region of the metasurface can receive the echo signal with an incident angle ranging from $(\theta_{kn} - \alpha_{\max})$ to $(\theta_{kn} + \alpha_{\max})$. Based on Eq. (3), the focus offset equals $f(\sin\theta_{kn} - \sin\alpha)$, so the focus lies at the center of the detector when $\alpha$ equals $\theta_{kn}$. Because the phase profile has rotational symmetry about point $O$, beams with incident angles ranging from $-(\theta_{kN} + \alpha_{\max})$ to $(\theta_{kN} + \alpha_{\max})$ can be received by the detector. Note that we chose the quadratic phase profile for the sake of simplicity, but a similar discussion can be conducted for other functional forms (a hyperbolic phase profile, for example) if a Taylor expansion is applied.

The specific phase profile is determined by the APD diameter $b$, the FOV of the light receiver, the distance $f$ between the metasurface and the detector, and the boundary radii $R_n$ according to Eq. (5). As a proof of concept, a 20-mm-diameter 4-region metasurface device was designed, and the phase profile is shown in Figure 1d. The device is expected to direct all echo light signals within a FOV of about ±30° onto the effective photosensitive area of a 3-mm-diameter APD. The APD is positioned 15 mm away from the plane of the metasurface device.
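To make the numbers concrete, the following minimal sketch evaluates $\alpha_{\max} = \arcsin(b/2f)$ for the stated 3 mm detector and 15 mm spacing, and the angular window of a ring region from the focus-offset condition $|f(\sin\theta_{kn} - \sin\alpha)| \le b/2$. The function names and the list of center angles are hypothetical and chosen only for illustration; they are not the $\theta_{kn}$ values of the fabricated device.

```python
import math


def alpha_max_deg(b_mm: float, f_mm: float) -> float:
    """Largest incident angle (deg) whose focus stays on a detector of diameter b."""
    return math.degrees(math.asin(b_mm / (2.0 * f_mm)))


def region_window_deg(theta_kn_deg: float, b_mm: float, f_mm: float):
    """Angular window (deg) accepted by one ring region.

    Applies |f * (sin(theta_kn) - sin(alpha))| <= b / 2 in sine space,
    clipping to the physically meaningful range [-1, 1].
    """
    half_width = b_mm / (2.0 * f_mm)
    s = math.sin(math.radians(theta_kn_deg))
    lo = max(-1.0, s - half_width)
    hi = min(1.0, s + half_width)
    return math.degrees(math.asin(lo)), math.degrees(math.asin(hi))


B_MM, F_MM = 3.0, 15.0  # detector diameter and spacing stated in the text
# Hypothetical center angles, for illustration only (not the authors' values).
HYPOTHETICAL_CENTERS_DEG = [0.0, 8.0, 16.0, 24.0]

print(f"alpha_max = {alpha_max_deg(B_MM, F_MM):.2f} deg")
for theta in HYPOTHETICAL_CENTERS_DEG:
    lo, hi = region_window_deg(theta, B_MM, F_MM)
    print(f"center {theta:5.1f} deg -> window {lo:6.2f} to {hi:6.2f} deg")
```

With these inputs $\alpha_{\max}$ is about 5.7°, so a single quadratic region covers only a small fraction of a ±30° FOV, which is why several regions with different center angles are needed.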

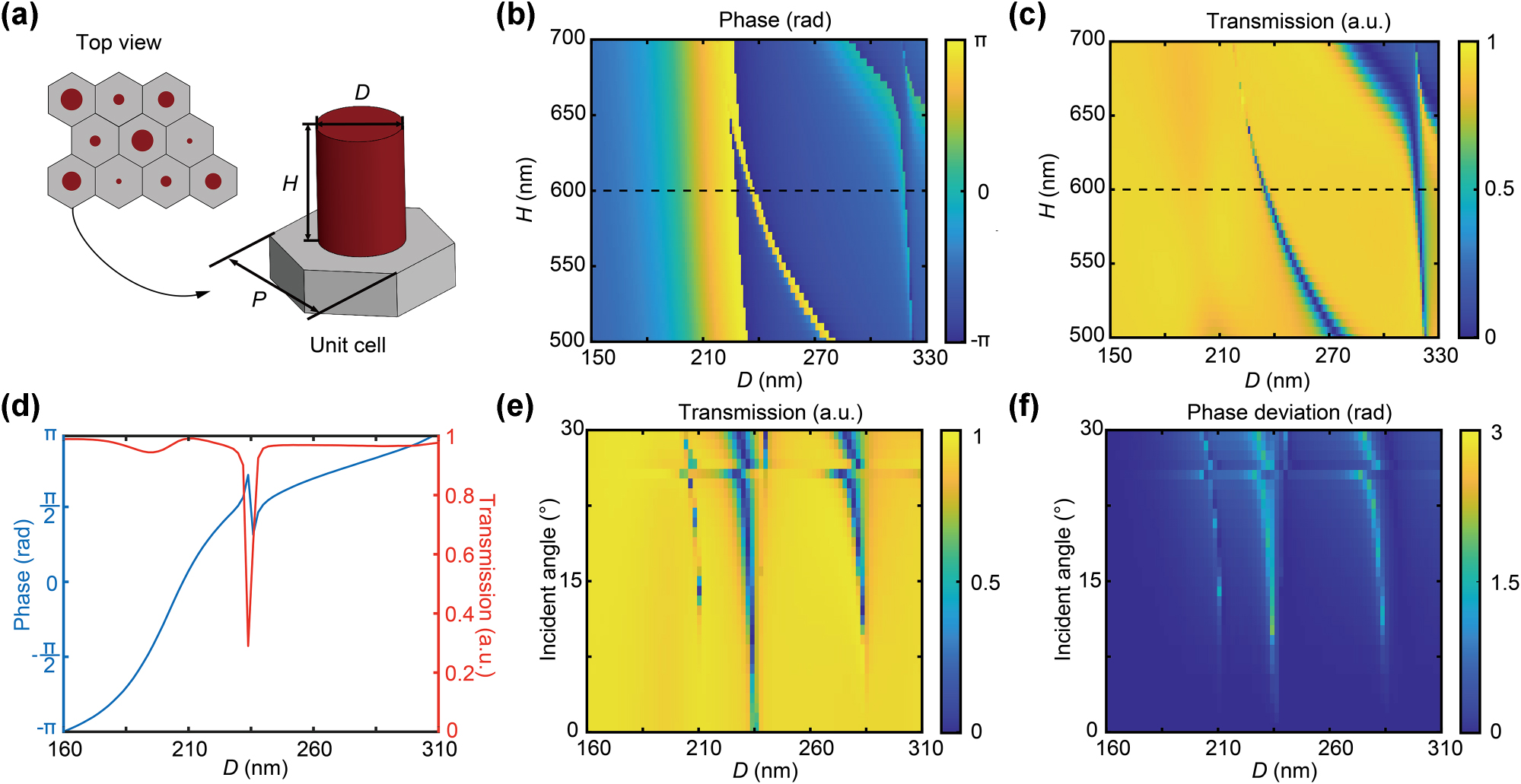

The designed metasurface phase profile was then implemented by periodically arranged subwavelength unit cells, as shown in Figure 2. Each unit cell comprises an amorphous silicon cylinder on a fused silica substrate (Figure 2a), a geometry that is insensitive to the polarization state of the incident light. A hexagonal lattice arrangement is adopted to enhance the sampling resolution of the phase profile. For the working wavelength of 940 nm, the period P is set to 450 nm according to the Nyquist sampling criterion. The transmission coefficients can be modulated by varying the diameter (D) and height (H) of the nanocylinder (Figure 2b and c). The transmissive phase and transmission values are calculated using the finite-difference time-domain (FDTD) method, with H ranging from 500 to 700 nm and D ranging from 150 to 330 nm. Considering both the fabrication feasibility and the transmission modulation capability, H is fixed at 600 nm, ensuring an easily attainable aspect ratio. With this height, the transmissive phase fully covers the range from −π to π while the transmission remains above 95 % as D is adjusted from 160 to 320 nm (Figure 2d). To verify the incident-angle insensitivity of the cylindrical unit cell design, additional numerical simulations were performed; the results are presented in Figure 2e and f and detailed in Section S1 (Supplementary Material).
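As a rough check of the sampling choice, the sketch below assumes the commonly used form of the Nyquist criterion for metalenses, P ≤ λ/(2·NA), and treats P as the nearest-neighbor spacing of the hexagonal lattice. Both are assumptions, since the text does not spell out either. It also estimates how many unit cells a 20-mm-diameter aperture contains, which illustrates why point-by-point writing is impractical at this size. The function names are hypothetical.

```python
import math

WAVELENGTH_NM = 940.0   # working wavelength from the text
PERIOD_NM = 450.0       # lattice period from the text
DIAMETER_MM = 20.0      # device diameter from the text


def max_na_for_period(wavelength_nm: float, period_nm: float) -> float:
    """Highest NA (capped at 1 in air) for which P <= lambda / (2 * NA) holds."""
    return min(1.0, wavelength_nm / (2.0 * period_nm))


def hex_lattice_cell_count(diameter_mm: float, period_nm: float) -> float:
    """Approximate number of unit cells inside a circular aperture.

    Assumes a hexagonal lattice whose primitive cell area is (sqrt(3) / 2) * P**2.
    """
    radius_nm = diameter_mm * 1e6 / 2.0
    aperture_area_nm2 = math.pi * radius_nm ** 2
    cell_area_nm2 = (math.sqrt(3.0) / 2.0) * period_nm ** 2
    return aperture_area_nm2 / cell_area_nm2


print(f"Nyquist-limited NA: {max_na_for_period(WAVELENGTH_NM, PERIOD_NM):.2f}")
print(f"Unit cells in aperture: {hex_lattice_cell_count(DIAMETER_MM, PERIOD_NM):.2e}")
```

Under these assumptions the 450 nm period satisfies the criterion for any NA up to 1, and the aperture holds on the order of 10^9 nanopillars.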

Figure 2: Design and simulation of the metasurface unit cell. (a) Schematic diagram of a single metasurface unit cell with period P, including an amorphous silicon cylinder of diameter D and height H on a fused silica substrate. (b) The transmissive phase and (c) transmission values of the unit cells as functions of D and H with a fixed period P = 450 nm at the wavelength of 940 nm. (d) The phase and transmission responses of the unit cell for H = 600 nm across varying D. (e) Angle-dependent optical power transmission of the cylindrical meta-atoms for different values of D and different oblique incident angles. (f) The deviation in the phase of the meta-atoms under oblique incidence compared to normal incidence.

2.2 Fabrication

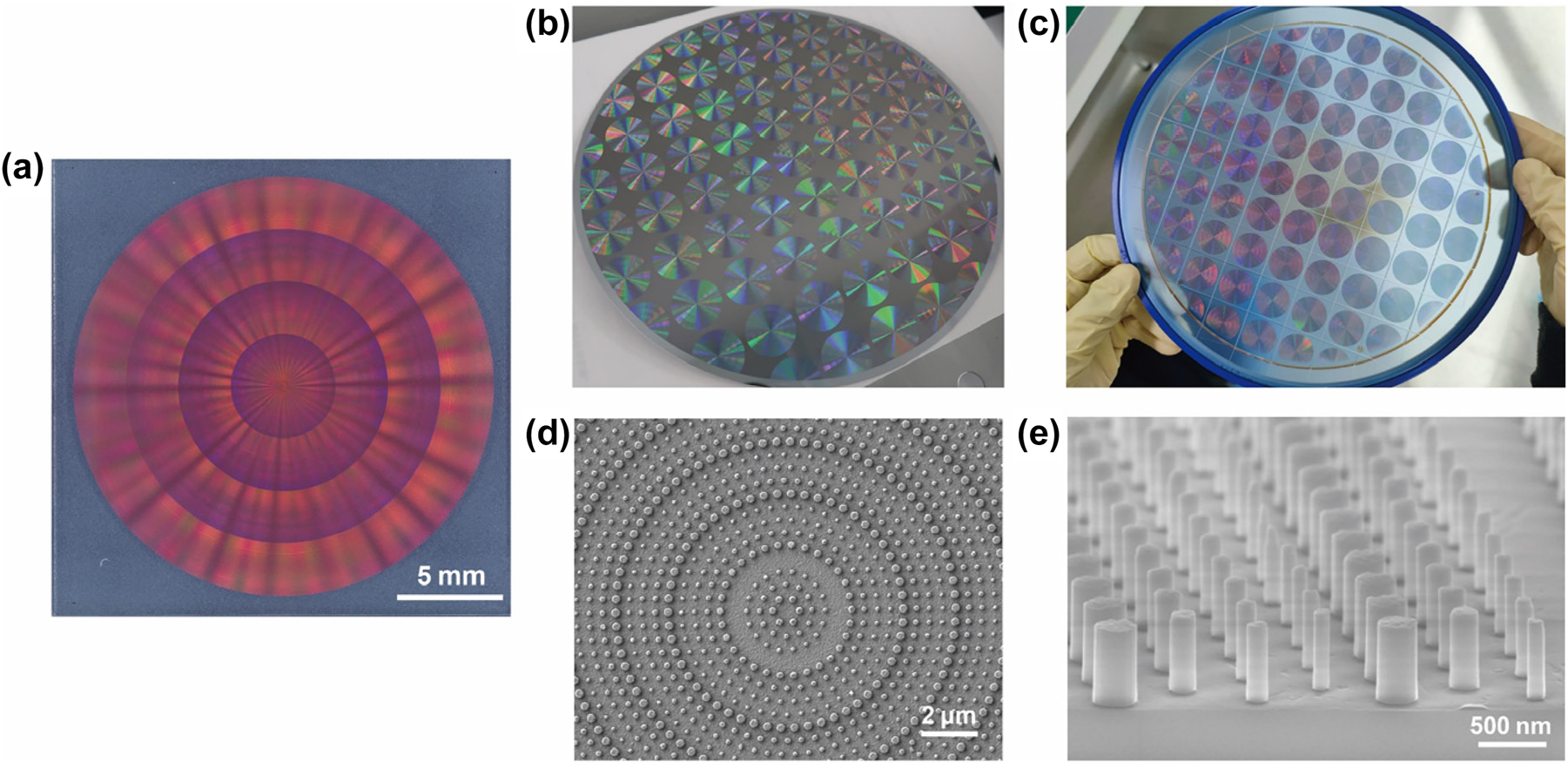

To meet the requirement for low-cost batch production, a high-throughput DUV stepper photolithography process was developed to fabricate 45 metasurface devices with a diameter of 20 mm on a single 8-inch fused silica wafer (Figure 3). Initially, a 600-nm-thick amorphous silicon layer was deposited on the substrate via plasma-enhanced chemical vapor deposition (PECVD). Subsequently, chromium (Cr) was deposited on the amorphous silicon layer to serve as a hard mask for etching the nanopillar arrays and to ensure compatibility with the DUV lithography system, which cannot recognize transparent substrates. Then, the intended metasurface patterns were transferred to a spin-coated photoresist layer using DUV projection lithography. Figure 3b presents a photograph of the wafer after photolithography. Next, nanopillars were formed by inductively coupled plasma (ICP) etching. Finally, the Cr hard mask was removed by wet etching and the wafer was diced into individual devices by blade dicing (Figure 3c). Scanning electron microscopy (SEM) characterization confirmed the effectiveness of the process (Figure 3d and e).

Figure 3: Images of the fabricated metasurface devices. (a) An optical microscopic image of one of the metasurface devices. (b) The entire 8-inch wafer before the etching process. (c) The entire 8-inch wafer after blade dicing. (d, e) Scanning electron microscopy (SEM) images of the amorphous silicon nanopillars constituting the metasurface.

3 Results and discussions

3.1 Performance of the metasurface-based light receiver

The light receiver in a LiDAR system is a typical non-imaging optical system, with the FOV and the capability to enhance the received signal strength as two critical parameters affecting the detection range and SNR. The FOV defines the angular extent over which the system can receive echo signals, while the capability to enhance the received signal strength refers to the increased SNR and intensity of the signal captured by the receiver relative to a mere detector or other configurations.

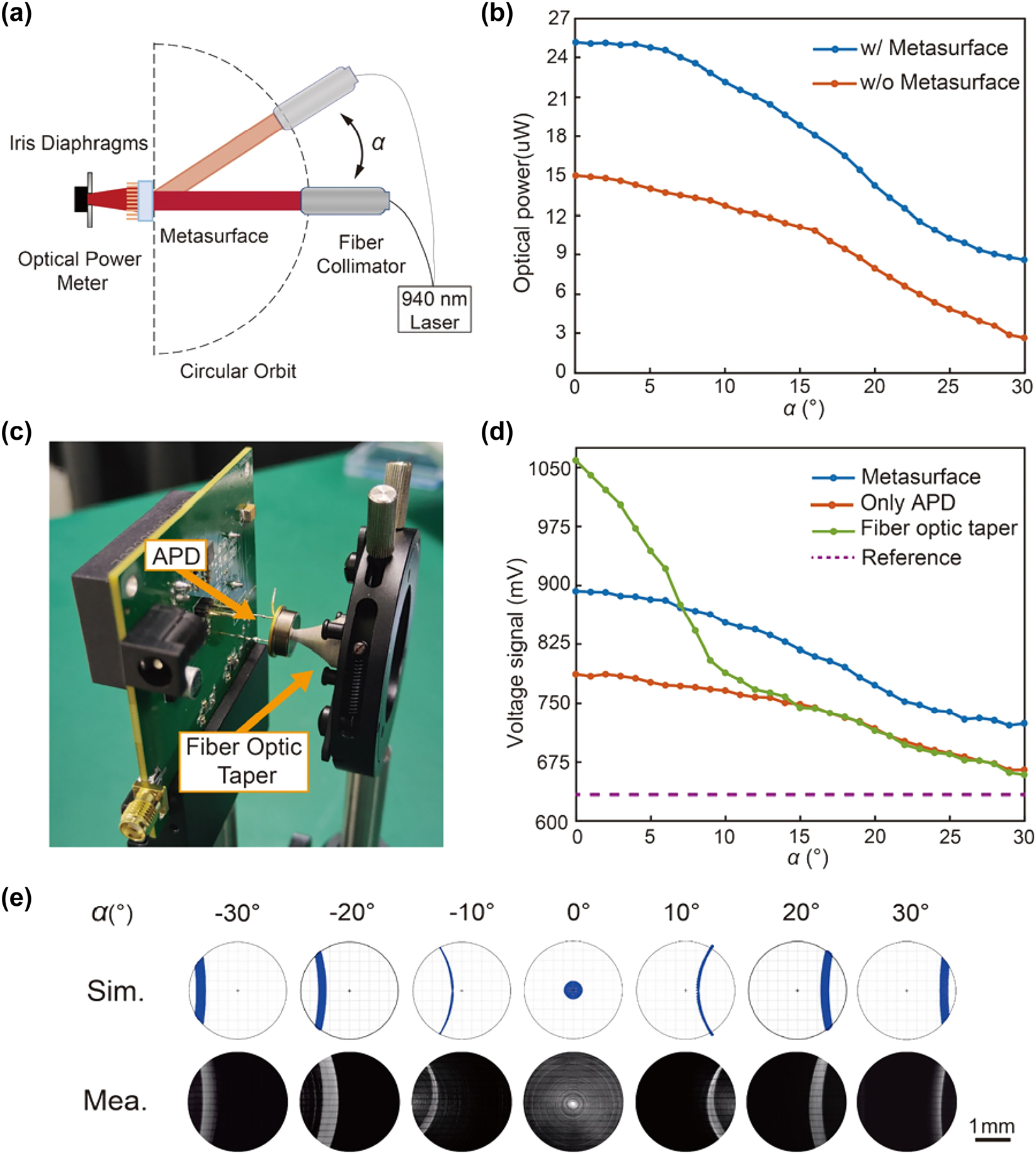

These two parameters were experimentally characterized to assess the light-gathering performance of the proposed metasurface device, as shown in Figure 4. The experimental setup is shown in Figure 4a. The fiber collimator includes a collimating lens that produces a 20-mm-diameter beam and a 0.1 % light attenuator. An iris diaphragm is positioned in front of the sensor of the optical power meter, with the aperture size set to 3 mm to emulate the effective photosensitive area of the APD. Given the symmetric configuration, we only considered incident angles from 0 to 30°. The measured results (Figure 4b) demonstrate a power enhancement ratio of approximately 1.5–3 times compared to the scenario without the metasurface. The non-uniformity of this enhancement across incident angles may be mitigated by a more rational segmentation of the metasurface area. The decreasing trend of the received optical power originates from the uneven intensity distribution of the Gaussian beam, as well as the reduced optical power per unit area at higher incident angles. Overall, the experimental results highlight the light-gathering ability of the fabricated metasurface across the targeted FOV of ±30°.

Figure 4: Experimental characterization of the metasurface-based light receiver. (a) Schematic of the experimental setup for assessing the light-gathering performance of the metasurface. α denotes the incidence angle. (b) Measured optical power of the light receiver at various incident angles with and without the metasurface device. (c) Photograph of a practical light receiver including the avalanche photodiode (APD) and the fiber optic taper. (d) Measured voltages of the light receiver at various incident angles for different configurations. (e) Measured (Mea.) and simulated (Sim.) light field intensity distributions at different incident angles.

Similar measurements were conducted to evaluate the light-gathering performance of a practical light receiver, with an APD (Hamamatsu, S8890-30) sensor replacing the optical power meter and the iris diaphragm in Figure 4a. The APD converts optical signals into voltage signals. In addition to the metasurface, a fiber optic taper with a large-end diameter of 18 mm and a small-end diameter of 3 mm was also investigated for comparison, as shown in Figure 4c. The dotted reference line in Figure 4d indicates the voltage signal of the APD under dark conditions, which is closely associated with the circuit design. The results (Figure 4d) demonstrate that the fiber optic taper exhibits superior signal strength enhancement within a narrow range of incident angles, but its performance degrades rapidly as the incident angle increases. In contrast, the metasurface-based light receiver displays a rather uniform performance across the entire FOV, resulting in a much larger detection range of the LiDAR system.

Furthermore, we used a CMOS image sensor to measure the light intensities on the receiving plane at various incident angles (Figure 4e). A certain amount of light energy is always distributed within the central 3-mm-diameter circular area, which enables the large FOV feature of the metasurface-based receiver. The intensity distributions agree well with the simulated results obtained through ray-tracing techniques, thereby validating the design and fabrication of the metasurface device.

3.2 Application in a MEMS LiDAR system

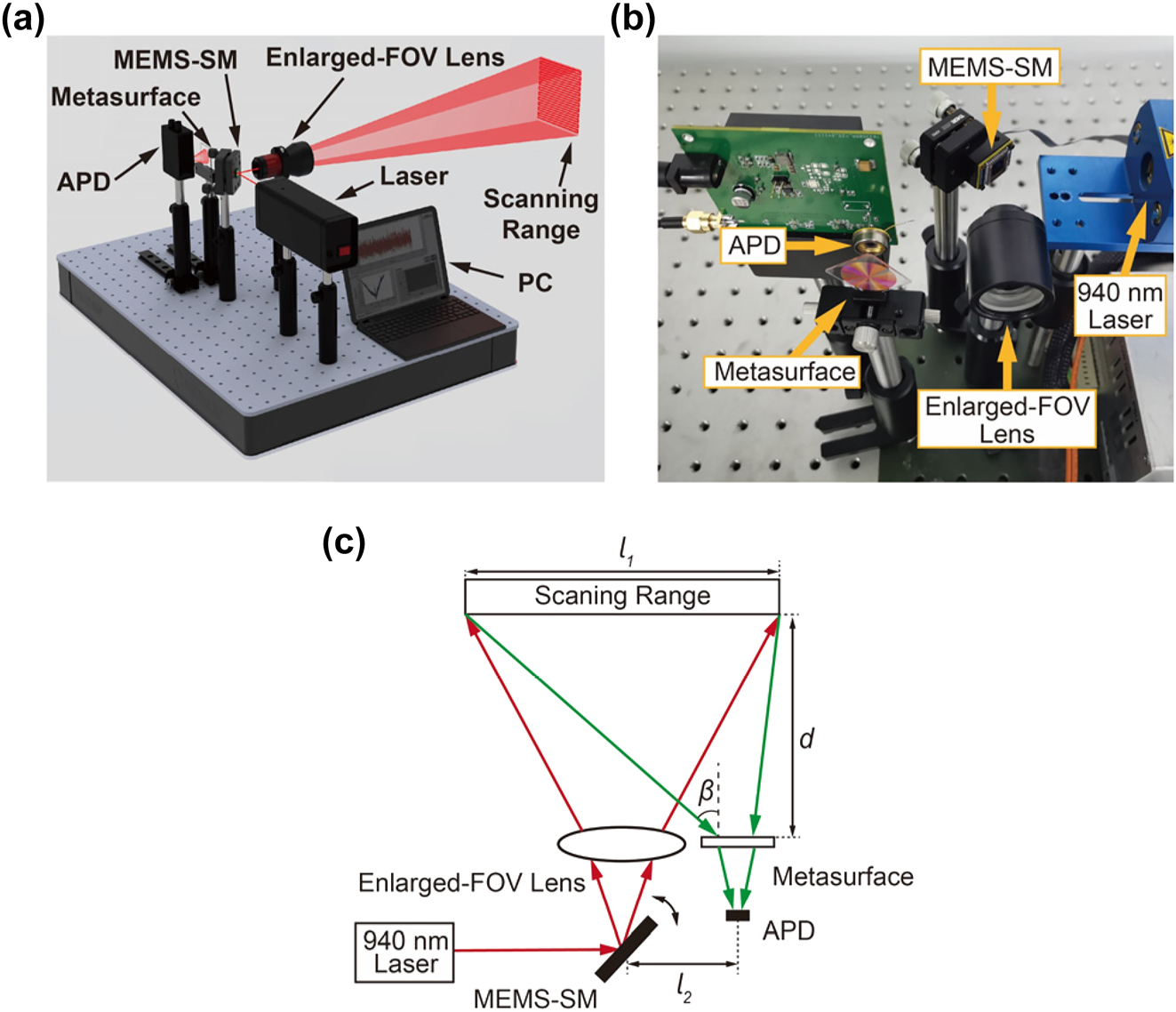

The proposed metasurface-based light receiver was subsequently integrated into a custom-built MEMS LiDAR system to demonstrate its applications in practical scenarios, as shown in Figure 5a and b. A modulated beam from a 940 nm laser source is incident on the MEMS scanning mirror (MEMS-SM) (Mirrorcle, S30348), which dynamically redirects the reflected beam towards an enlarged-FOV lens. By controlling the MEMS-SM, the output beam is deflected across a predefined scanning range. Then, the echo signals from the target are captured by a receiving system including the metasurface and the APD. The distance values are obtained by the time-of-flight (ToF) method, and the processed 3D images are displayed on a PC. Figure 5c illustrates the geometrical schematic of the MEMS LiDAR system. The maximum receiving angle β in the MEMS LiDAR system can be calculated as:

where $l_1$ denotes the length of the scanning range, including the target and background, $l_2$ represents the distance between the MEMS-SM and the APD, and $d$ is the distance between the target and the metasurface. When $l_1$ and $d$ are fixed, different values of $\beta$ can be obtained by varying $l_2$.

Figure 5: Experimental setup of the self-built LiDAR system. (a) Schematic diagram of the MEMS LiDAR system. (b) Photograph of the MEMS LiDAR system. (c) Geometrical illustration of the MEMS LiDAR system.

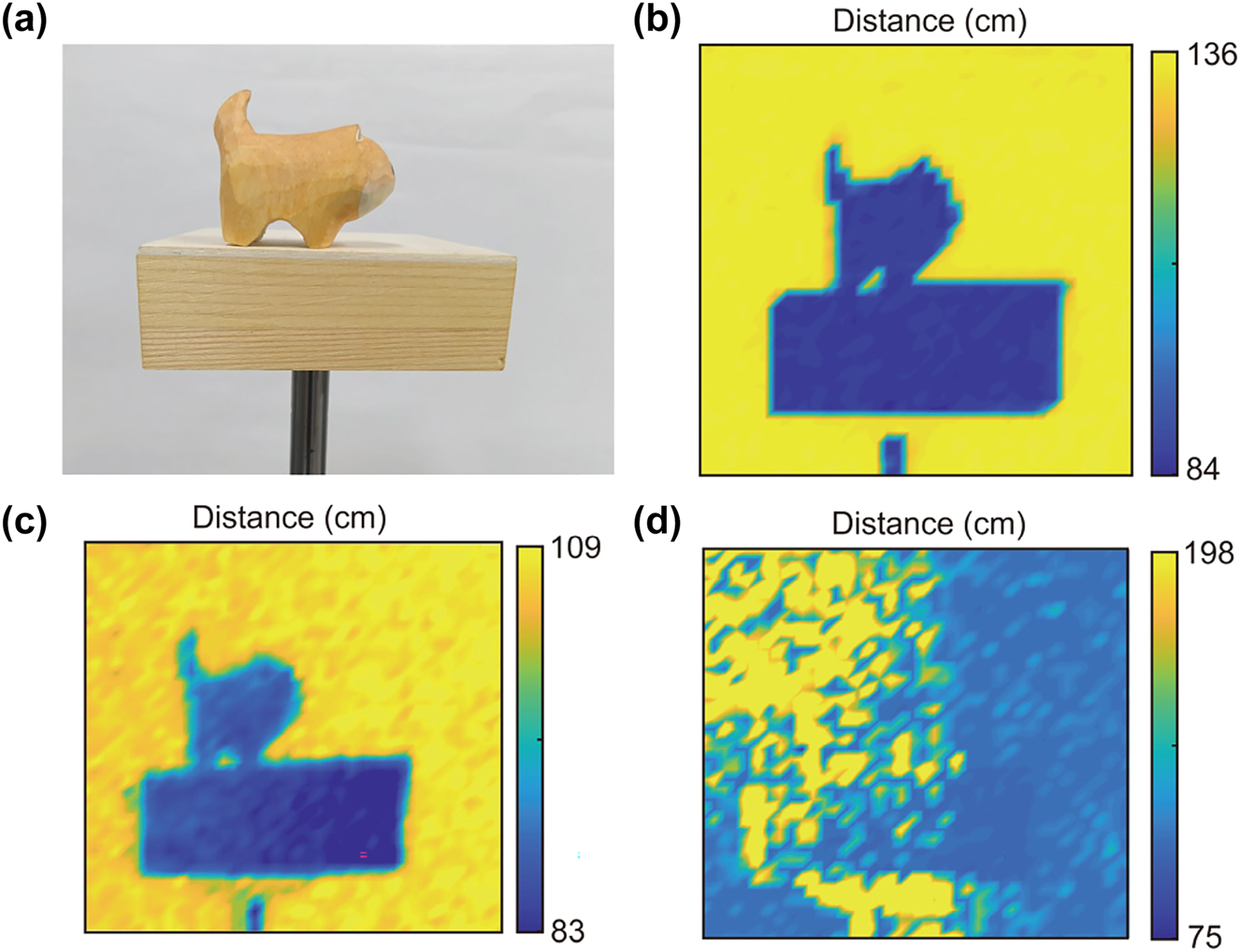

To investigate the large FOV 3D imaging capability of the MEMS LiDAR, a wooden dog model (45 × 25 × 35 mm) standing on a wooden pedestal (85 × 85 × 27 mm) was selected for depth profiling, and a paper baffle located at a certain distance from the scanning target was used as the background, as shown in Figure 6a. A grid of 40 × 40 equally distributed points along the scanning path was used for distance retrieval. The distance $d$ was fixed at 84 cm, the scanning range was about 13° × 13° and the length $l_1$ was 12 cm. The distance $l_2$ was first set to 3 cm, giving a maximum receiving angle $\beta$ of 7.5°. The obtained depth map of the target is shown in Figure 6b. The outlines of the wooden dog model and the wooden pedestal are clearly distinguished, and the distance precision is evaluated to be better than 2 cm. This experiment validates the light-gathering ability of the metasurface-based light receiver in a small FOV.
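To put the quoted distance and precision in timing terms, the sketch below applies the basic time-of-flight relation, distance = c·t/2. It is a generic illustration with hypothetical function names and does not represent the signal processing used in the authors' system.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_distance_m(round_trip_s: float) -> float:
    """Target distance from a measured round-trip time."""
    return C_M_PER_S * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Round-trip time for a target at the given distance."""
    return 2.0 * distance_m / C_M_PER_S


target_m = 0.84      # target distance d stated in the text
precision_m = 0.02   # distance precision bound stated in the text

print(f"Round trip at {target_m} m: {round_trip_time_s(target_m) * 1e9:.2f} ns")
print(f"Timing equivalent of {precision_m} m: {round_trip_time_s(precision_m) * 1e12:.0f} ps")
```

At 84 cm the round trip takes a few nanoseconds, and a 2 cm distance resolution corresponds to resolving timing differences on the order of a hundred picoseconds.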

Figure 6: 3D imaging performance of the MEMS LiDAR system. (a) Photograph of the target for imaging. (b) Depth map obtained by the system in a small FOV with the metasurface-based light receiver. (c, d) Depth maps obtained by the system in a large FOV with the metasurface-based receiver and fiber-optic-taper-based receiver, respectively.

Next, the distance $l_2$ was changed to 42 cm, resulting in a maximum receiving angle $\beta$ of 30°. 3D imaging using either the metasurface or the fiber optic taper as the receiving optics was conducted for comparison, and the results are displayed in Figure 6c and d, respectively. These experiments were conducted within a FOV ranging from 23° to 30°, as detailed in Section S2 (Supplementary Material). The former image retains the details of the objects and the background, whereas the latter image is severely blurred and fails to resolve most information about the objects. These experimental results agree well with Figure 4d and validate the metasurface's ability to effectively receive the echo light signals at incident angles as large as ±30°. The imaging experiment underscores the potential of the metasurface for use in compact and highly integrated LiDAR systems.
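The receiving angles quoted above can be compared with a simple geometric estimate. The expression for β is not reproduced in this text, so the sketch below assumes a flat geometry in which the scanned region of length l1 is centered a lateral distance l2 from the receiver axis at range d, giving angles arctan((l2 ± l1/2)/d). This relation and the function names are assumptions for illustration, not the authors' formula.

```python
import math


def receiving_angle_deg(lateral_offset_cm: float, d_cm: float) -> float:
    """Angle (deg) between the receiver axis and a point offset laterally at range d."""
    return math.degrees(math.atan2(lateral_offset_cm, d_cm))


def receiving_angle_range_deg(l1_cm: float, l2_cm: float, d_cm: float):
    """Angles of the near and far edges of the scanned region (assumed geometry)."""
    near = receiving_angle_deg(l2_cm - l1_cm / 2.0, d_cm)
    far = receiving_angle_deg(l2_cm + l1_cm / 2.0, d_cm)
    return near, far


D_CM, L1_CM = 84.0, 12.0  # values stated in the text
for l2_cm in (3.0, 42.0):
    near, far = receiving_angle_range_deg(L1_CM, l2_cm, D_CM)
    print(f"l2 = {l2_cm:4.1f} cm: {near:5.1f} deg to {far:5.1f} deg")
```

Under this assumed geometry, l2 = 42 cm gives roughly 23° to 30°, in line with the range quoted in the text. For l2 = 3 cm the far edge comes out near 6°, somewhat below the quoted 7.5°, so the authors' expression may include terms not captured here.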

4 Conclusions

This study systematically investigates the metasurface-based light receiver, establishes a general design methodology based on mapping relationships, and experimentally validates its performance in a MEMS LiDAR system. The methodology provides a design framework that correlates metasurface parameters with the APD diameter, system dimensions, and FOV requirements. By employing a segmented phase compensation strategy and DUV lithography-based mass production, we demonstrated a compact and cost-effective solution: a 20-mm-diameter 4-region metasurface device that achieves a signal enhancement of 1.5 to 3 times across a ±30° FOV. Furthermore, integration into a MEMS LiDAR system delivers clear 3D imaging even at wide angles, verifying system-level feasibility. This approach reduces the size and weight of the LiDAR system while maintaining high performance, making it a promising solution for applications such as UAVs, miniaturized robots, and self-driving cars. Future research may focus on refining the mapping relationships for metasurface devices to achieve higher enhancement and greater uniformity in performance.

Acknowledgments

The authors thank the Hubei Jiufengshan Laboratory for its support in fabrication technology.

- Research funding: National Key Research and Development Program of China (2022YFB3204202); Innovation Project of Optics Valley Laboratory (OVL2023ZD001).

- Author contributions: All authors have accepted responsibility for the entire content of this manuscript and consented to its submission to the journal, reviewed all the results and approved the final version of the manuscript. LZ and JW conceived the project. JW and ZY supervised the project. HG designed the samples and conducted performance tests. HG and BG conducted the MEMS LiDAR imaging experiment. HG, XZ and JY analysed data. HG, LZ and XZ cowrote the manuscript.

- Conflict of interest: Authors state no conflict of interest.

- Data availability: Data underlying the results presented in this paper are available from the authors upon reasonable request.

References

[1] K.-Y. Huang, et al., “Scheme of flash LiDAR employing glass aspherical microlens array with large field of illumination for autonomous vehicles,” Opt. Express, vol. 32, no. 20, pp. 35854–35870, 2024, https://doi.org/10.1364/oe.537170.

[2] T. T. Sankey, J. McVay, T. L. Swetnam, M. P. McClaran, P. Heilman, and M. Nichols, “UAV hyperspectral and lidar data and their fusion for arid and semi-arid land vegetation monitoring,” Remote Sens. Ecol. Conserv., vol. 4, no. 1, pp. 20–33, 2018, https://doi.org/10.1002/rse2.44.

[3] M. Michałowska and J. Rapiński, “A review of tree species classification based on airborne LiDAR data and applied classifiers,” Remote Sens., vol. 13, no. 3, p. 353, 2021, https://doi.org/10.3390/rs13030353.

[4] Y. Zhou, et al., “Shipborne oceanic high-spectral-resolution lidar for accurate estimation of seawater depth-resolved optical properties,” Light Sci. Appl., vol. 11, no. 1, p. 261, 2022, https://doi.org/10.1038/s41377-022-00951-0.

[5] L. Qian, et al., “Infrared detector module for airborne hyperspectral LiDAR: design and demonstration,” Appl. Opt., vol. 62, no. 8, pp. 2161–2167, 2023, https://doi.org/10.1364/ao.482626.

[6] Q. Hao, Y. Tao, J. Cao, and Y. Cheng, “Development of pulsed-laser three-dimensional imaging flash lidar using APD arrays,” Microw. Opt. Technol. Lett., vol. 63, no. 10, pp. 2492–2509, 2021, https://doi.org/10.1002/mop.32978.

[7] Q. Li, S. Wang, J. Wu, F. Chen, H. Gao, and H. Gong, “Design of lidar receiving optical system with large FoV and high concentration of light to resist background light interference,” Micromachines, vol. 15, no. 6, p. 712, 2024, https://doi.org/10.3390/mi15060712.

[8] S. W. Hutchings, et al., “A reconfigurable 3-D-Stacked SPAD imager with in-pixel histogramming for flash LIDAR or high-speed time-of-flight imaging,” IEEE J. Solid-State Circuits, vol. 54, no. 11, pp. 2947–2956, 2019, https://doi.org/10.1109/jssc.2019.2939083.

[9] F. Villa, F. Severini, F. Madonini, and F. Zappa, “SPADs and SiPMs arrays for long-range high-speed light detection and ranging (LiDAR),” Sensors, vol. 21, no. 11, p. 3839, 2021, https://doi.org/10.3390/s21113839.

[10] N. Li, et al., “Spectral imaging and spectral LIDAR systems: moving toward compact nanophotonics-based sensing,” Nanophotonics, vol. 10, no. 5, pp. 1437–1467, 2021, https://doi.org/10.1515/nanoph-2020-0625.

[11] Y. Cheng, J. Cao, F. Zhang, and Q. Hao, “Design and modeling of pulsed-laser three-dimensional imaging system inspired by compound and human hybrid eye,” Sci. Rep., vol. 8, no. 1, p. 17164, 2018, https://doi.org/10.1038/s41598-018-35098-9.

[12] Q. Li, et al., “Design of non-imaging receiving system for large field of view lidar,” Infrared Phys. Technol., vol. 133, p. 104802, 2023, https://doi.org/10.1016/j.infrared.2023.104802.

[13] X. Lee, et al., “Increasing the effective aperture of a detector and enlarging the receiving field of view in a 3D imaging lidar system through hexagonal prism beam splitting,” Opt. Express, vol. 24, no. 14, pp. 15222–15231, 2016, https://doi.org/10.1364/oe.24.015222.

[14] L. Zhang, et al., “A review of cascaded metasurfaces for advanced integrated devices,” Micromachines, vol. 15, no. 12, p. 1482, 2024, https://doi.org/10.3390/mi15121482.

[15] A. I. Kuznetsov, et al., “Roadmap for optical metasurfaces,” ACS Photonics, vol. 11, no. 3, pp. 816–865, 2024, https://doi.org/10.1021/acsphotonics.3c00457.

[16] L. Zhang, et al., “Four-dimensional imaging based on a binocular chiral metalens,” Opt. Lett., vol. 50, no. 3, pp. 1017–1020, 2025, https://doi.org/10.1364/ol.545263.

[17] L. Zhang, et al., “Highly tunable cascaded metasurfaces for continuous two-dimensional beam steering,” Adv. Sci., vol. 10, no. 24, p. 2300542, 2023, https://doi.org/10.1002/advs.202300542.

[18] X. Li, et al., “Cascaded metasurfaces enabling adaptive aberration corrections for focus scanning,” Opto-Electron. Adv., vol. 7, no. 10, p. 240085, 2024, https://doi.org/10.29026/oea.2024.240085.

[19] R. Juliano Martins, et al., “Metasurface-enhanced light detection and ranging technology,” Nat. Commun., vol. 13, no. 1, p. 5724, 2022, https://doi.org/10.1038/s41467-022-33450-2.

[20] Y. Yang, et al., “Integrated metasurfaces for re-envisioning a near-future disruptive optical platform,” Light Sci. Appl., vol. 12, no. 1, p. 152, 2023, https://doi.org/10.1038/s41377-023-01169-4.

[21] R. Guan, H. Xu, Z. Lou, Z. Zhao, and L. Wang, “Design and development of metasurface materials for enhancing photodetector properties,” Adv. Sci., vol. 11, no. 34, p. 2402530, 2024, https://doi.org/10.1063/5.0232606.

[22] B. Groever, W. T. Chen, and F. Capasso, “Meta-lens doublet in the visible region,” Nano Lett., vol. 17, no. 8, pp. 4902–4907, 2017, https://doi.org/10.1021/acs.nanolett.7b01888.

[23] J. Chen, et al., “Planar wide-angle-imaging camera enabled by metalens array,” Optica, vol. 9, no. 4, pp. 431–437, 2022, https://doi.org/10.1364/optica.446063.

[24] A. Martins, et al., “On metalenses with arbitrarily wide field of view,” ACS Photonics, vol. 7, no. 8, pp. 2073–2079, 2020, https://doi.org/10.1021/acsphotonics.0c00479.

[25] Y. Guo, X. Ma, M. Pu, X. Li, Z. Zhao, and X. Luo, “High-efficiency and wide-angle beam steering based on catenary optical fields in ultrathin metalens,” Adv. Opt. Mater., vol. 6, no. 19, p. 1800592, 2018, https://doi.org/10.1002/adom.201800592.

[26] M. Pu, X. Li, Y. Guo, X. Ma, and X. Luo, “Nanoapertures with ordered rotations: symmetry transformation and wide-angle flat lensing,” Opt. Express, vol. 25, no. 25, pp. 31471–31477, 2017, https://doi.org/10.1364/oe.25.031471.

[27] E. Lassalle, et al., “Imaging properties of large field-of-view quadratic metalenses and their applications to fingerprint detection,” ACS Photonics, vol. 8, no. 5, pp. 1457–1468, 2021, https://doi.org/10.1021/acsphotonics.1c00237.

[28] Y. Kuang, S. Wang, B. Mo, S. Sun, K. Xia, and Y. Yang, “Palm vein imaging using a polarization-selective metalens with wide field-of-view and extended depth-of-field,” npj Nanophoton., vol. 1, no. 1, p. 24, 2024, https://doi.org/10.1038/s44310-024-00027-4.

[29] D. K. Sharma, et al., “Stereo imaging with a hemispherical field-of-view metalens camera,” ACS Photonics, vol. 11, no. 5, pp. 2016–2021, 2024, https://doi.org/10.1021/acsphotonics.4c00087.

[30] M. Khorasaninejad, W. T. Chen, R. C. Devlin, J. Oh, A. Y. Zhu, and F. Capasso, “Metalenses at visible wavelengths: diffraction-limited focusing and subwavelength resolution imaging,” Science, vol. 352, no. 6290, pp. 1190–1194, 2016, https://doi.org/10.1126/science.aaf6644.

[31] T. Hu, et al., “Demonstration of color display metasurfaces via immersion lithography on a 12-inch silicon wafer,” Opt. Express, vol. 26, no. 15, pp. 19548–19554, 2018, https://doi.org/10.1364/oe.26.019548.

[32] L. Zhang, et al., “High-efficiency, 80 mm aperture metalens telescope,” Nano Lett., vol. 23, no. 1, pp. 51–57, 2023, https://doi.org/10.1021/acs.nanolett.2c03561.

[33] J.-S. Park, et al., “All-glass 100 mm diameter visible metalens for imaging the cosmos,” ACS Nano, vol. 18, no. 4, pp. 3187–3198, 2024, https://doi.org/10.1021/acsnano.3c09462.

[34] D. K. Oh, T. Lee, B. Ko, T. Badloe, J. G. Ok, and J. Rho, “Nanoimprint lithography for high-throughput fabrication of metasurfaces,” Front. Optoelectron., vol. 14, no. 2, pp. 229–251, 2021, https://doi.org/10.1007/s12200-021-1121-8.

[35] U. Zeitner, M. Banasch, and M. Trost, “Potential of E-beam lithography for micro- and nano-optics fabrication on large areas,” J. Micro/Nanopatterning, Mater., Metrol., vol. 22, no. 4, p. 041405, 2023, https://doi.org/10.1117/1.jmm.22.4.041405.

Supplementary Material

This article contains supplementary material (https://doi.org/10.1515/nanoph-2025-0161).

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.
