Abstract

In this paper, we describe the development of a self-test on content knowledge as one element of a digital learning environment. The self-test on prior knowledge consists of tasks in the categories Periodic Table of Elements, chemical bonding, chemical formulas, and chemical reactions (reaction equations and reaction mechanisms). For the study, tasks from all topics were used in a paper-and-pencil test with multiple-choice and multiple response items, each accompanied by a questionnaire with closed and open items on the task at hand. The results of the study show that the students rated the tasks as suitable. Comments for improving the wording or the design of the tasks were implemented. Because students did not understand some of the technical terms, a glossary and games will be added to the digital learning environment. Many students overestimated their knowledge and competences; therefore, the self-test in the learning environment will include feedback so that the students can improve their content knowledge and its application.

1 Introduction

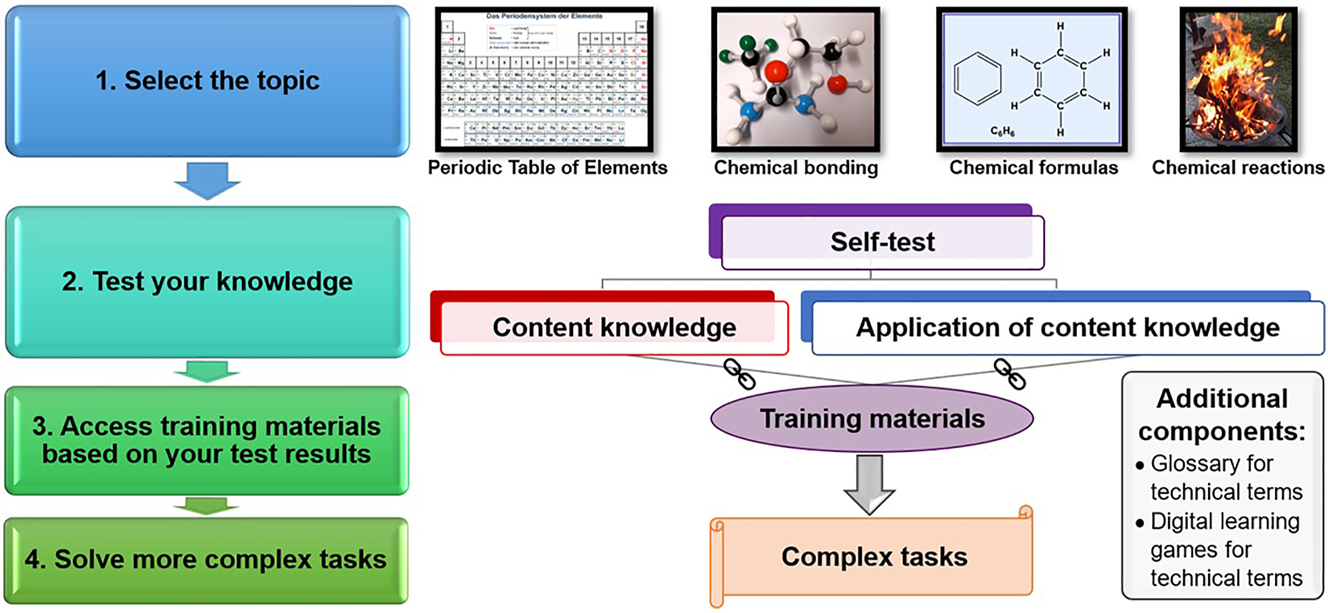

In all STEM subjects, dropout rates are very high. In the years 2018–2020, 43–51 % of the students in Germany dropped out of their bachelor's studies, which is higher than the general dropout rate (between 32 and 39 %) (Heublein et al., 2022). At the beginning of university studies in chemistry, insufficient prior knowledge in general chemistry is one of the main factors for dropout (Averbeck, 2021). For success in general chemistry, prior knowledge is one of the significant factors, amongst others such as cognitive abilities, interest, and course of study (Freyer et al., 2014). Therefore, diagnosing deficits in prior knowledge as soon as possible could prevent dropout (Averbeck, 2021). To be successful (and not drop out), students need deep-level prior knowledge (Hailikari & Nevgi, 2010). To prevent dropout, several universities organize bridging courses, for example in chemistry, before the students start their courses in the first semester. A study on the effects of such a bridging course on students’ success at the end of the semester shows that students with low prior knowledge only enhance their content knowledge for a short period of time. Only students with high prior knowledge seem to benefit from participating in the bridging courses; they achieve significantly better exam results than students who did not participate (Eitemüller & Habig, 2020). Therefore, to give all students the opportunity to close their gaps in content knowledge, new concepts and instruments should be developed. In this paper, the development of a self-test on prior knowledge of basic principles of chemistry will be described and discussed.
This self-test on content knowledge will be one part of a digital learning environment for testing and training basic principles of chemistry which consists of the topics Periodic Table of Elements, chemical bonding, chemical formulas, and chemical reactions (reaction equations and reaction mechanisms). Additionally, the self-test in the environment also consists of tasks on the application of the prior knowledge. Many tasks for training this application, combined with scaffolds and digital learning games to support students, are also included. At the end, the students can test their skills by solving more complex tasks. Figure 1 shows the design of the digital learning environment.

Figure 1: The digital learning environment on basic principles of chemistry.

2 Theoretical background

Students who want to use the self-test should be able to regulate their learning process independently. Therefore, an overview of literature on self-regulated learning will be given below. All other literature used for the development of the self-test as (self)-assessment and on the use of digital learning environments will also be discussed.

According to Zimmerman (2001), self-regulation is “the self-directive process through which learners transform their mental abilities into task-related skills”. This quote fits the goal of the learning environment; after closing gaps in prior knowledge in a self-regulatory way, the students apply this knowledge for solving the tasks. The self-test and the feedback students receive can also support the development of self-regulated learning (SRL), because students are then active learners who take control of their own learning process (Feldman-Maggor, 2023). SRL combines cognitive, meta-cognitive and resource management learning-strategy categories, where cognitive skills include, for example, problem-solving abilities and selecting and processing relevant information (Birenbaum, 1997). If SRL means that students approach tasks in a planned way to achieve their learning goal (Brandmo et al., 2020), then the learning environment should support the students not only in enhancing their prior knowledge and in solving tasks but also in developing their SRL. A positive relationship between SRL and learning outcomes has been found (Fazriah et al., 2021). Teachers’ feedback can also support SRL positively and directly if the students’ perception is germane (Zheng et al., 2023). Schraw et al. (2006) list six strategies for improving SRL: inquiry-based learning, collaborative support, strategy and problem-solving instruction, the construction of mental models, the use of technology to support learning, and the role of personal beliefs. The digital learning environment presented in this paper focusses mainly on the strategies “strategy and problem-solving instruction” and “the use of technology to support learning”. The support provided is individual and not collaborative because the students work independently with the digital environment. Students’ self-regulation and motivational beliefs influence science learning (Olakanmi & Mishack, 2017).
It is therefore not surprising that students who were trained in SRL performed better in class than those students who were not trained (Olakanmi & Mishack, 2017). Muhab et al. (2022) showed that SRL contributes positively to students’ SRL strategies in learning chemistry, especially if students planned their goals using their abilities and learning resources. Then, they tend to feel more confident in completing tasks.

Digital learning environments are described as “new possibilities and opportunities” by Peters (2000). Students are challenged to be more active and to interact with the information provided. The digital learning environment is suitable for autonomous learners because there is a great variability (Peters, 2000). A digital learning environment can be seen as “enabler of independent and self-determined learning” (Arnold, 1993). By using information and communication technology, digital learning environments enrich the traditional environment (Jansen et al., 2001). Therefore, digital learning environments are learning environments that use technology (Mirande et al., 1997). Here, the learner interacts with specific resources (Collis, 1996). Therefore, the digital environment integrates the possibilities of digital tools, for example by adding videos (Platanova et al., 2022). Learning environments can also be hybrid and then contain analog and digital elements, as for example ZuKon 2030, which can be used in face-to-face and in distance learning settings (Karayel et al., 2023). Designing digital learning environments should focus on tools that support the students’ characteristics and learning processes (Ifenthaler, 2012).

For the learning and teaching process, assessment is essential. It is used to get to know how students perform. There are three types of assessment: assessment of learning (summative), assessment for learning (formative) and assessment as learning (knowledge-based) (Opateye & Ewim, 2021). For the digital learning environment, the focus is on students’ self-assessment. Their ability to self-assess affects their learning and ultimately their academic performance (Wang et al., 1990). Self-assessment is an important aspect of metacognition (Tashiro et al., 2021). When comparing students’ self-assessment with their results in exams, Karatjas (2013) observed that students who performed well predicted their exam scores accurately and students who performed poorly did not. They overestimated their skills as already discussed by Kruger and Dunning (1999). To be accurate in their self-assessment students need higher-order cognitive skills (HOCS). To ensure that students can develop those skills, persistent and purposeful HOCS-oriented chemistry teaching and learning is necessary (Zoller et al., 1999). Wiediger and Hutchinson (2002) state: “Developing the skills of self-assessment, to know when one doesn’t know, is essential to making the transition from novice to expert”. Therefore, in the digital learning environment the students receive feedback on their work, and support by several scaffolds. Using the self-test and the other tasks as intended should therefore contribute to the development of their self-assessment skills. Several tests for chemistry education are known.

For this paper, some existing tests from chemical education literature for first- and second-year students will be summarized. In 1975, Blizzard et al. published the Chemistry Assessment Test, which determines the chemical knowledge of students at the beginning of their first-year course. Another test, also for first-year students, asks “what knowledge, skills, and abilities students must have in a traditional general chemistry course” (Russell, 1994). Nugent (1989) developed a multiple-choice test for students in their first semester of organic chemistry. An online self-diagnostic test for introductory general chemistry has been developed and used by Kennepohl et al. (2010). The students can use the tool individually and receive assessment of potential success in the course (test scores and overall grades correlate) as well as general advice. The observation that students struggle with course content led to the development of a one-page quiz with 14 brief questions about general chemistry topics necessary for learning organic chemistry: this assessment predicts students’ outcomes reliably (Jakobsche, 2023). A review on assessment in chemistry education is given by Stowe and Cooper (2019). In most assessments, the prior knowledge of students that is needed for the course is assessed. The development, use and evaluation of the self-test (as part of the digital learning environment) which focusses on prior knowledge and on basic knowledge in general will be described in detail below.

3 Design of the study

The self-test on prior knowledge is one element of the digital learning environment. Therefore, the items for this self-test have been evaluated in a study. For this study, items from all topics have been used in a paper-pencil test. This test consisted of the tasks (multiple-choice, multiple response, sequence, and allocation) and questionnaires with items on the wording of the tasks and open questions on technical terms and general remarks for improving the tasks (for an overview on the tasks and examples for all categories of tasks used in this study, see Supplementary Material).

3.1 Goal

For this study the following research questions will be investigated and answered:

How do the students rate the tasks?

How is the students’ content knowledge?

How do the students rate their own competences regarding the tasks?

3.2 Sample

The study was conducted in summer 2022 in the course “Organic Chemistry” for non-major chemistry students. These students study life sciences or nutritional science and are in their first year. The course consists of a lecture (3 h/week), a seminar (2 h/week), and a laboratory internship (one week). The testing of the tasks in preparation for the self-test has been done in the accompanying seminars with two groups of students (one Mondays and one Wednesdays). The tasks on reaction mechanisms were tested during the laboratory internship. In the seminars, depending on the group between 14 and 30 students (Mondays) or 12 and 35 students (Wednesdays) have actively taken part in the study. Because attendance of the seminar (and of this study) is voluntary, the students do not always attend regularly or do not stay till the end of the seminar (sometimes because of train or bus schedules). Therefore, during the semester the tasks have been solved at the beginning of the seminar instead of at the end, which led to better attendance in the evaluation. The students also switch between different groups (beside the two groups used for this study, other groups have been offered online). The students have been informed of the goal of the study and the use of the data. Ethical guidelines were followed. At this university, the approval of the Institutional Review Board is not required.

3.3 Design of the tasks

The tasks have been designed as multiple-choice or multiple response (Cook, 2023) questions because multiple-choice items are considered an effective form of educational assessment (Gierl et al., 2017). To choose the right answer option, the students must use their knowledge and problem-solving skills (Gierl et al., 2017). Butler (2018) lists several best practice tips for multiple-choice and multiple response items: they should not be too complex, they should engage specific cognitive processes, “none-of-the-above” and “all-of-the-above” as response options should be avoided, three plausible response options should be used, and the tests should be challenging but not too difficult. Therefore, the following design elements were applied to the multiple-choice and multiple response items in this study:

The verbs telling the students what to do were printed in bold to support the students.

If more than one answer is correct, the plural form is underlined (this design element has been added during the semester, because of students’ mistakes while solving the first tasks).

The questions were formulated as clearly and easily understandable as possible.

The answers are as short as possible.

It should be avoided that all statements are correct.

The answers were arranged alphabetically regarding the key terms as for example electronegativity.

For each task, a questionnaire with closed and open items has been designed and used. All elements of the task are shown in Table 1, using the task on chemical bonding as an example. For this paper, the task was translated from German to English.

Table 1: The task on chemical bonding (electron pair bonds) as multiple response question.

| Element | Example | | | |
|---|---|---|---|---|
| The task | Consider which statements about electron pair bonds are correct and then tick the correct answers | | | |
| Evaluation of the task | Strongly disagree | Disagree | Agree | Strongly agree |
| I have understood the task description | | | | |
| I knew, what I had to do | | | | |
| I could solve the task without problems | | | | |
| I knew all technical terms | | | | |
| I knew the meaning of the technical terms | | | | |
| I can explain all technical terms | | | | |
| Technical terms | For the following technical terms, I did not know what they mean: | | | |
| Improvement | For the task I have the following suggestions for improvement: | | | |

During the course, the questionnaires were evaluated after using the task at hand in the seminar. Then, as a formative assessment, the results were used for rephrasing the following tasks, especially if the tasks were very similar (for an example see Table 2). By using the original wording in task 3, the students did not understand that they had to tick only one box out of four; they did not identify the task as multiple-choice task, but often solved it as a multiple response task. The new task makes clear that only one box should be ticked, and that the description only fits for one answer.

Table 2: Rephrasing the text for tasks on trends in the periodic table of elements.

| Original (task 3) | New (task 3) |
|---|---|
| Assign the following description to the correct trend in the periodic table. Increase: within a group with rising ordinal number. Decrease: within a period with rising ordinal number. | Consider which trend of the PSE the description fits and then tick the appropriate one. Description: the trend decreases within a period of rising ordinal number (↘) and increases within a group of rising ordinal number (↗). |

3.4 The coding process

For evaluating the closed items, the statistical software SPSS has been used. The open items have been coded by both authors according to the work of Kuckartz (2016) using the method of qualitative content analysis. Both coders discussed and compared their assignments until 100 % inter-rater agreement was reached (Saldaña, 2013). For all codes and examples see Supplementary Material.

4 Results and discussion

4.1 Research question 1: how do the students rate the tasks?

Although literature-based design features for the tasks have been used, the students were asked to rate the tasks. Especially the items “I have understood the task description” and “I knew, what I had to do” were of interest, although other reasons than the wording and design of the task can influence their rating, for example their reading competences or distractions during the reading and solving process. Therefore, the open item “for the task I have the following suggestions for improvement:” was included in the evaluation.

For the item “I have understood the task description”, the arithmetic mean over all tasks ranged from 3.66 to 3.88 (i.e., between agree and strongly agree). For the item “I knew, what I had to do”, the results are quite similar: M = 3.61–3.88 (for all results, see Table 3). The correlation between both items is statistically significant (r_S = 0.749**), which is not surprising.
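The descriptive statistics reported for these items can be illustrated with a short sketch. It assumes that the four response options are coded from 1 (strongly disagree) to 4 (strongly agree), which is consistent with means between 3 and 4 being read as "between agree and strongly agree", and that the standard deviation is the sample standard deviation. The function name `summarize_item` and the example responses are hypothetical and are not data from the study.

```python
from statistics import mean, stdev

# Assumed numeric coding of the four response options (1 = strongly disagree).
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "agree": 3,
    "strongly agree": 4,
}


def summarize_item(responses):
    """Return (N, arithmetic mean, sample SD) for a list of response labels."""
    scores = [LIKERT_CODES[label.lower()] for label in responses]
    return len(scores), mean(scores), stdev(scores)


# Hypothetical responses to one questionnaire item, for illustration only.
example = ["agree", "strongly agree", "strongly agree", "agree", "strongly agree"]
n, m, sd = summarize_item(example)
print(f"N = {n}, M = {m:.2f} (SD = {sd:.3f})")
```

At least two responses are needed, because the sample standard deviation is undefined for a single value.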

Table 3: Results for all tasks (number of tasks, arithmetic means and standard deviations).

| Category/item | I have understood the task description | I knew, what I had to do |
|---|---|---|
| Periodic table of elements | N = 149, M = 3.66 (SD = 0.530) | N = 149, M = 3.61 (SD = 0.623) |
| Chemical bonding | N = 161, M = 3.73 (SD = 0.512) | N = 161, M = 3.75 (SD = 0.488) |
| Chemical formula | N = 75, M = 3.87 (SD = 0.380) | N = 75, M = 3.88 (SD = 0.327) |
| Chemical reaction equations | N = 183, M = 3.88 (SD = 0.358) | N = 183, M = 3.85 (SD = 0.356) |
| Reaction mechanisms | N = 190, M = 3.75 (SD = 0.456) | N = 190, M = 3.72 (SD = 0.494) |

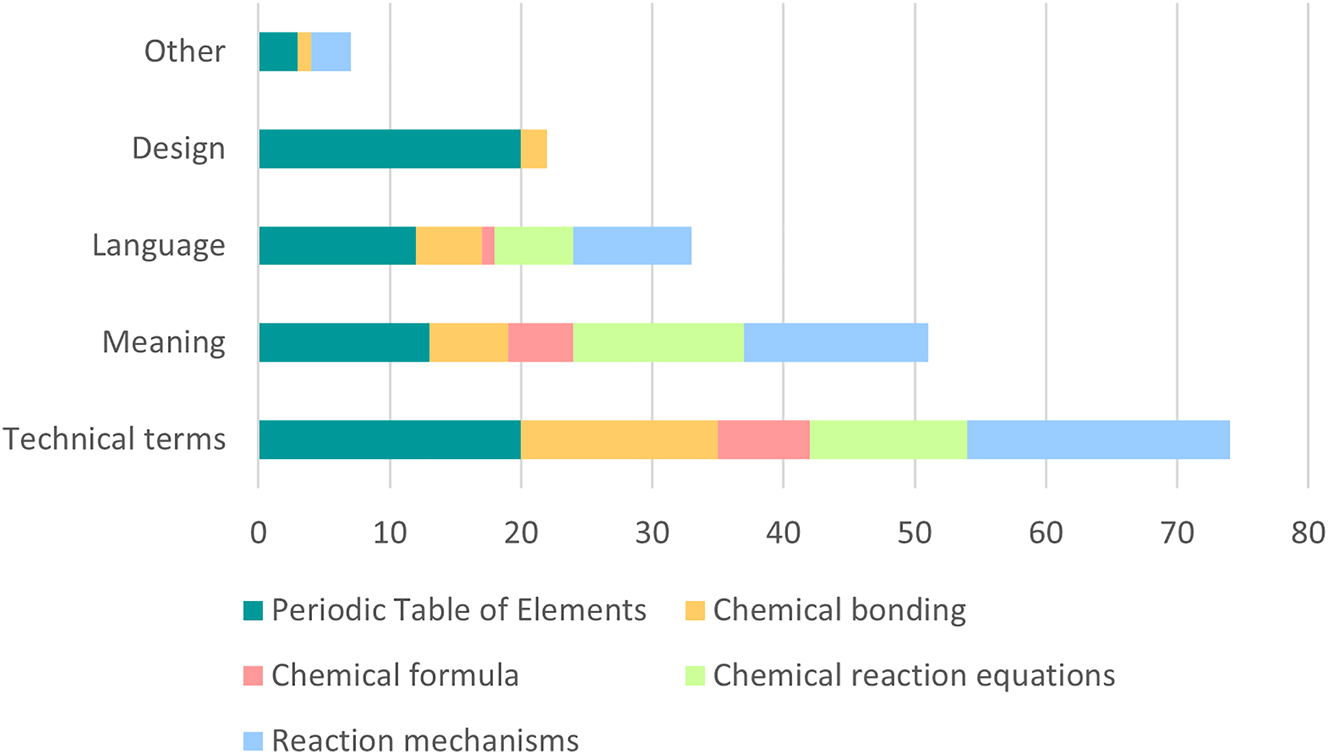

For the further development of the tasks, the results of these items alone are not sufficient. However, the evaluation of the open item “for the task I have the following suggestions for improvement:” leads to the required information (for a summary see Figure 2, for all results see Supplementary Material).

Figure 2: Summary of the codes for all categories.

Especially for the first category (Periodic Table of Elements), the students commented on the design of the task. As described above, those comments have been used for improving the design. Because comments on the design cannot be observed for the following categories it seems that students were more content with the design.

During the whole semester, students commented on the language of the tasks (also see Figure 2). Here an evaluation is more complex, because the reasons can be the formulations used in the task, but can also be of personal nature. Therefore, all statements coded with “language” have been evaluated anew. The statements were assigned to “task” or “personal” depending on the wording. For the first two categories (Periodic Table of Elements and Chemical bonding) the number of assignments to both codes are quite similar (10 for task and 9 for personal). For the last two categories more codes were assigned to task (7 versus 3). The changes in the formulations that have been made (for an example see Table 2) seem to result in a decreasing number of statements, especially for personal statements as for example “For me the third formulation was not clearly understandable”. Overall, the students’ ratings of the tasks support the intended use in the self-test; therefore, all rephrased and redesigned tasks will be used in the digital learning environment.

4.2 Research question 2: how is the students’ content knowledge?

For the development of the digital learning environment, information on the students’ content knowledge (knowledge of technical terms and concepts and their application) is of interest. It is shown in the literature that students need content knowledge and learning opportunities for its application (Carmel et al., 2015; Hermanns, 2021). If students have problems with certain technical terms, scaffolds can be used (Keller & Hermanns, 2023). For the digital learning environment, a glossary and learning games were planned. For all tasks of the self-test, the items “I knew all technical terms”, “I knew the meaning of the technical terms” and “I can explain all technical terms” were included in the questionnaire. Table 4 shows the highest and lowest ranking for each item (all other rankings of technical terms were in between).

Table 4: Rating of the items regarding the technical terms (task).

| Item | Highest ranking (influencing the chemical equilibrium) M (SD) | Lowest ranking (chemical bond models) M (SD) |
|---|---|---|
| I knew all technical terms | 3.88 (0.332) | 2.83 (0.857) |
| I knew the meaning of the technical terms | 3.94 (0.243) | 2.72 (0.752) |
| I can explain all technical terms | 3.88 (0.332) | 2.30 (0.609) |

All lowest ratings are between disagree and agree; the highest between agree and strongly agree. This means that for all tasks some of the technical terms are either unknown to the students or their meaning is not clear. To identify which terms are problematic, the questionnaires included the item: “For the following technical terms, I did not know what they mean”. For all tasks evaluated in the study, the students have written down technical terms (for an overview see Supplementary Material). They also often added the information that they had heard of the technical term but did not know exactly what it meant. However, students also added the information that they knew and understood all technical terms in the task at hand. Most terms were named twice, for example valence bond, electrophile and nucleophile, or resonance. The terms named most often are period and bond-line structure (5×), heteroatom and Lewis base (7×), and ionization energy (9×). For the task where the students rated the technical terms best, they did not write down any unknown term. This suggests that the students’ ratings are consistent with the unknown technical terms they named. Nevertheless, the digital learning environment should offer explanations for technical terms. As student groups are always heterogeneous, the explanations for the technical terms should not be visible for all students, because this would only unnecessarily enhance the cognitive load (Sweller, 1988) during the solving process. Therefore, the results of this evaluation are used for the development of a glossary and of suitable digital games. Students who need an explanation can use the glossary, and they can use the digital games to learn the terms and their explanations.

To assess the quality and the level of the tasks, the percentage of completely correct answers has been determined for all tasks. It varies between 0.0 and 88.2 %. For multiple-choice items (only one correct answer), the percentage varies between 11.5 and 81.0 %; for multiple response items (2–4 correct answers), between 0 and 71.4 %; and for the other tasks (sequence or allocation), between 44.4 and 88.2 % (for all results see Supplementary Material). The students solved the multiple response tasks less successfully than the multiple-choice tasks. Therefore, those tasks have been analyzed in more detail. First, both tasks with a success rate of zero percent will be discussed. For the task “octet rule”, three out of four answers were correct. No student chose all three of these answers. The answer “the octet rule is a special rule of the common noble gas rule” was chosen by few students. Because this knowledge is not necessary for using the octet rule correctly, the task has been changed accordingly: this answer has been removed and replaced by a wrong answer. Therefore, in the newly designed task, only two correct answers should be chosen. The task “correct statements regarding acid-base reactions” has also been redesigned. Because only two students chose the correct answer “reactions between Lewis bases and electron deficient compounds are acid base reactions”, this answer has been rephrased into “acid base reaction can also be described as reaction of a nucleophile with an electrophile”. All tasks of the self-test have been analyzed. For those tasks where the students did not choose the correct answers, the answers have been rephrased, or replaced if the answer was assessed as unnecessary.
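The "completely correct" criterion can be made concrete with a minimal sketch. It assumes that a response counts as completely correct only if the set of ticked options equals the answer key exactly, so that a multiple response answer with a missing or an additional option is scored as not correct. The function names, the option labels, and the example responses are hypothetical; the actual scoring was done by the authors and is not documented as code.

```python
def is_completely_correct(selected, answer_key):
    """A response is completely correct only if it matches the key exactly."""
    return set(selected) == set(answer_key)


def percent_completely_correct(responses, answer_key):
    """Percentage of responses that match the answer key exactly."""
    if not responses:
        raise ValueError("no responses to score")
    hits = sum(is_completely_correct(r, answer_key) for r in responses)
    return 100.0 * hits / len(responses)


# Hypothetical multiple response task with three correct options (A, B, D).
answer_key = {"A", "B", "D"}
student_responses = [
    {"A", "B"},            # two of the three correct options: not completely correct
    {"A", "B", "D"},       # exact match
    {"A", "B", "C", "D"},  # all correct options plus a wrong one
    {"B", "D"},
]
print(f"{percent_completely_correct(student_responses, answer_key):.1f} %")
```

Under this all-or-nothing rule, partially correct answers lower the success rate of multiple response tasks, which is one plausible reason why these tasks appear harder than multiple-choice tasks with a single correct option.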

However, the results show that the students’ prior knowledge shows gaps that can be closed by working with the learning environment. On the other hand, many answers the students have given are correct (very often, for multiple response tasks, they did not choose all correct answers). This is also important, because otherwise the students’ frustration with their own competences could be a hindrance for working with the learning environment. If there are too many gaps, their motivation most likely would decrease. The self-test seems to fit for the intended learning group which are students of the first two years of study. Therefore, the improved self-test will be evaluated as part of the complete learning environment by students in their first or second year in the winter term 2023–2024.

4.3 Research question 3: how do the students rate their own competences regarding the tasks?

As discussed for research question 1, the students rated the tasks. For this, a questionnaire with a four-point Likert scale (Allen & Seaman, 2007) has been designed and used (see Table 1). To compare the item “I could solve the task without problems” with the students’ solving of the tasks, the students’ answers have been coded as completely correct or not correct. For the multiple-choice tasks, the students’ estimation was fitting for 29.6–69.2 % of the tasks. For the multiple response tasks, the students predicted their solving of the task correctly for 21.4–85.7 % of the tasks. For the tasks where the students had to put items in a sequence or allocate meanings to terms, the concordance was best (between 50.0 and 82.4 %). The most homogeneous results could be observed for the tasks the students solved during their laboratory internship. Here, the concordance of the students’ assessment with their own solving shows an arithmetic mean of 58.6 with a standard deviation of 9.3. One reason can be that only those students attend the laboratory internship who are studying seriously or are still studying. During the second year, many students stop their studies. It can be assumed that students who have problems or who are not interested belong to the group that stops studying and not to the group that takes part in the laboratory internship.
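The comparison between self-rating and actual result can be sketched as follows. The sketch assumes that a rating of "agree" or "strongly agree" (coded 3 or 4) on the item “I could solve the task without problems” is read as a prediction of success; this threshold is an assumption for illustration and is not stated in the paper. The function names and the example records are hypothetical.

```python
from collections import Counter


def classify(self_rating, solved_correctly):
    """Compare a self-rating (1-4) with the coded result of the task."""
    predicted_success = self_rating >= 3  # assumption: agree or strongly agree
    if predicted_success == solved_correctly:
        return "concordant"
    return "overestimated" if predicted_success else "underestimated"


def concordance_summary(records):
    """Return the percentage of each outcome for (rating, correct) pairs."""
    if not records:
        raise ValueError("no records to evaluate")
    counts = Counter(classify(rating, correct) for rating, correct in records)
    return {
        label: 100.0 * counts[label] / len(records)
        for label in ("concordant", "overestimated", "underestimated")
    }


# Hypothetical records: (self-rating, task solved completely correctly?)
records = [(4, True), (3, False), (2, False), (4, False), (1, True)]
print(concordance_summary(records))
```

With such a classification, the share of "concordant" records corresponds to the concordance reported per task, and the share of "overestimated" records corresponds to students who expected to solve a task that they did not solve completely correctly.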

Overall, not all students estimate their own knowledge correctly. Depending on the task, between 14.3 and 78.6 % overestimate their knowledge, as observed repeatedly in other studies and known as the Dunning-Kruger effect (Kruger & Dunning, 1999). The students’ ratings of the technical terms in relation to their rating of the item “I could solve the task without problems” have also been evaluated by calculating Spearman correlations. For all three items regarding the technical terms, statistically significant correlations can be observed (r_S = 0.407**, 0.477** and 0.514**). The students’ overestimation of their knowledge is therefore consistent for those items that can be seen as relevant for the solving process. Therefore, the self-test includes feedback for all tasks. The students receive information about the results of the individual tasks and use this information for deciding which training materials to choose.
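For readers who want to reproduce this kind of analysis without SPSS, the following minimal sketch computes Spearman's rank correlation as the Pearson correlation of average ranks, which handles the many ties that occur with four-point ratings. It returns only the coefficient and does not include a significance test. The function names are hypothetical and the sketch is not the authors' procedure.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least 2 values")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical ratings of two items by the same six students (coded 1-4).
knew_terms = [4, 3, 3, 2, 4, 1]
could_solve = [4, 3, 2, 2, 3, 1]
print(round(spearman(knew_terms, could_solve), 3))
```

The coefficient is undefined if all values of one item are identical; in that case the sketch raises a `ZeroDivisionError`.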

5 Limitations

For the study in this paper, some limitations must be discussed. The self-test on prior knowledge has been evaluated in one learning group (all non-major chemistry students) and not all tasks could be evaluated because the number of tasks was too large to be evaluated completely during the seminar. Evaluating more than one task per seminar would have taken too much time from the available learning time of the students. Therefore, representative tasks for all categories have been chosen for the evaluation to ensure that the results could be used for redesigning or rephrasing similar tasks. Five tasks have been evaluated during breaks in the students’ laboratory internship. The number of students also varied during the course. This is a recurring problem in German universities because attendance is voluntary. Overall, the number of students for the evaluation was sufficient for answering the research questions and for the further development of the self-test and its intended use in the digital learning environment. Additionally, not all students used the opportunity for answering the open questions. Therefore, only statements the students have written down could be evaluated. However, the results of the study discussed here should be of interest especially for teachers who plan similar tests and/or the evaluation of such tests.

6 Conclusions and outlook

The study was conducted to test the suitability of the self-test as part of a digital learning environment on basic content knowledge. Table 5 lists the major results of the study and their implications.

Table 5: Results and implications from the study.

| Result | Implication |
|---|---|
| The students rate the tasks as suitable | The tasks will remain part of the self-test |
| The students gave tips for improving the tasks regarding wording and design | The tasks will be rephrased and redesigned using students’ tips |
| The students overestimated their own performance as is already known as Dunning-Kruger effect | The self-test will include feedback to make transparent to the students which knowledge and competences they already have or not |
| The meaning of some of the technical terms was not clear to the students | A glossary on the technical terms and digital games for training the technical terms will be included in the digital learning environment |

The revised version of the self-test will be evaluated as part of a study on the use of the whole digital learning environment in a mixed-methods study in the winter term 2023–2024. The results of this study will be published in due course.

Funding source: Bundesministerium für Bildung und Forschung

Award Identifier / Grant number: 01JA1816

Acknowledgment

We thank all students who participated in this study. We thank Helen Kunold for designing Figure 2. This project is part of the “Qualitätsoffensive Lehrerbildung”, a joint initiative of the Federal Government and the Länder which aims to improve the quality of teacher training. The program is funded by the Federal Ministry of Education and Research. The authors are responsible for the content of this publication.

- Research ethics: Not applicable.
- Author contributions: The authors have accepted responsibility for the entire content of this manuscript and approved its submission.
- Competing interests: The authors state no conflict of interest.
- Research funding: BMBF 01JA1816.
- Data availability: The raw data can be obtained on request from the corresponding author.

References

Allen, E., & Seaman, C. (2007). Likert scales and date analyses. Qual. Progress, 7, 64–65.Search in Google Scholar

Arnold, R. (1993). Natur als Vorbild. Selbstorganisation als Modell der Pädagogik [Nature as a role model. Self-organisation as a pedagogical model]. VAS-Verlag für akademische Schriften.

Averbeck, D. (2021). Zum Studienerfolg in der Studieneingangsphase des Chemiestudiums [On study success in the introductory phase of chemistry studies]. Logos-Verlag.

Birenbaum, M. (1997). Assessment preferences and their relationship to learning strategies and orientations. Higher Education, 33, 71–84. https://doi.org/10.1023/A:1002985613176

Blizzard, A. C., Humphreys, D. A., Srikameswaran, S., & Martin, R. R. (1975). A chemistry assessment test. Journal of Chemical Education, 52(12), 808. https://doi.org/10.1021/ed052p808

Brandmo, C., Panadero, E., & Hopfenbeck, T. E. (2020). Bridging classroom assessment and self-regulated learning. Assessment in Education: Principles, Policy & Practice, 27(4), 319–331. https://doi.org/10.1080/0969594x.2020.1803589

Butler, A. C. (2018). Multiple-choice testing in education: Are the best practices for assessment also good for learning? Journal of Applied Research in Memory and Cognition, 7, 323–331. https://doi.org/10.1016/j.jarmac.2018.07.002

Carmel, J. H., Jessa, Y., & Yezierski, E. J. (2015). Targeting the development of content knowledge and scientific reasoning: Reforming college-level chemistry for nonscience majors. Journal of Chemical Education, 92(1), 46–51. https://doi.org/10.1021/ed500207t

Collis, B. (1996). Tele-learning in a digital world. The future of distance learning. International Thomson Computer Press.

Cook, J. (2023). https://www.bristol.ac.uk/esu/media/e-learning/tutorials/writing_e-assessments/page_31.htm (accessed November 2023).

Eitemüller, C., & Habig, S. (2020). Enhancing the transition? – effects of a tertiary bridging course in chemistry. Chemistry Education: Research and Practice, 21, 561–569. https://doi.org/10.1039/c9rp00207c

Fazriah, S., Irwandi, D., & Fairusi, D. (2021). Relationship of self-regulated learning with student learning outcomes in chemistry study. Journal of Physics: Conference Series, 1836. https://doi.org/10.1088/1742-6596/1836/1/012075

Feldman-Maggor, Y. (2023). Identifying self-regulated learning in chemistry classes – a good practice report. Chemistry Teacher International, 5(2), 203–211. https://doi.org/10.1515/cti-2022-0036

Freyer, K., Epple, M., Brand, M., Schiebener, J., & Sumfleth, E. (2014). Studienerfolgsprognose bei Erstsemesterstudierenden in Chemie [Predicting student success of freshmen in chemistry]. Zeitschrift für Didaktik der Naturwissenschaften, 20, 129–142. https://doi.org/10.1007/s40573-014-0015-3

Gierl, M. J., Bulut, O., Guo, Q., & Zhang, X. (2017). Developing, analyzing, and using distractors for multiple-choice tests in education: A comprehensive review. Review of Educational Research, 87(6), 1082–1116. https://doi.org/10.3102/0034654317726529

Hailikari, T. K., & Nevgi, A. (2010). How to diagnose at-risk students in chemistry: The case of prior knowledge assessment. International Journal of Science Education, 32(15), 2079–2095. https://doi.org/10.1080/09500690903369654

Hermanns, J. (2021). Training OC - a new course concept for training the application of basic concepts in organic chemistry. Journal of Chemical Education, 98(2), 374–384. https://doi.org/10.1021/acs.jchemed.0c00567

Heublein, U., Hutzsch, C., & Schmelzer, R. (2022). Die Entwicklung der Studienabbruchquoten in Deutschland [The development of dropout rates in Germany]. (DZHW Brief 05|2022). DZHW. (accessed October 2023).

Ifenthaler, D. (2012). Design of learning environments. In N. M. Seel (Ed.), Encyclopedia of the sciences of learning (Vol. 4, pp. 929–931). Springer. https://doi.org/10.1007/978-1-4419-1428-6_186

Jakobsche, C. E. (2023). How to identify – with as little as one question – students who are likely to struggle in undergraduate organic chemistry. Journal of Chemical Education, 100(10), 3866–3972. https://doi.org/10.1021/acs.jchemed.3c00344

Jansen, P. A., Fisser, P., & Terlouw, C. (2001). Designing digital learning environments. In H. Taylor & P. Hogenbirk (Eds.), Information and Communication Technologies in Education. IFIP – The International Federation for Information Processing (Vol. 58, pp. 259–270). Springer. https://doi.org/10.1007/978-0-387-35403-3_21

Karatjas, A. G. (2013). Comparing college students’ self-assessment of knowledge in organic chemistry to their actual performance. Journal of Chemical Education, 90(8), 1096–1099. https://doi.org/10.1021/ed400037p

Karayel, C. E., Krug, M., Hoffmann, L., Kanbur, C., Barth, C., & Huwer, J. (2023). ZuKon 2030: An innovative learning environment focused on sustainable development goals. Journal of Chemical Education, 100(1), 102–111. https://doi.org/10.1021/acs.jchemed.2c00324

Keller, D., & Hermanns, J. (2023). The digital task navigator as scaffold for supporting higher education students while solving tasks in organic chemistry. Journal of Chemical Education, 100(10), 3818–3824. https://doi.org/10.1021/acs.jchemed.2c00518

Kennepohl, D., Guay, M., & Thomas, V. (2010). Using an online, self-diagnostic test for introductory general chemistry at an open university. Journal of Chemical Education, 87(11), 1273–1277. https://doi.org/10.1021/ed900031p

Kruger, J., & Dunning, D. (1999). Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. Journal of Personality and Social Psychology, 77(6), 1121–1134. https://doi.org/10.1037/0022-3514.77.6.1121

Kuckartz, U. (2016). Qualitative Inhaltsanalyse. Methoden, Praxis, Computerunterstützung [Qualitative content analysis. Methods, practice, computer assistance]. Beltz.

Mirande, M., Riemersma, J., & Veen, W. (1997). De digitale leeromgeving [The digital learning environment]. Wolters Noordhoff.

Muhab, S., Irwanto, I., Allans, E., & Yodela, E. (2022). Improving students’ self-regulation using online self-regulated learning in chemistry. Journal of Sustainability Science and Management, 17(10), 1–12. https://doi.org/10.46754/jssm.2022.10.001

Nugent, J. F. (1989). Multiple-choice self-test. Journal of Chemical Education, 66(8), 649–650. https://doi.org/10.1021/ed066p649

Olakanmi, E. E., & Mishack, T. G. (2017). The effects of self-regulated learning training on students’ metacognition and achievement in chemistry. International Journal of Innovation in Science and Mathematics Education, 25(2), 34–48.

Opateye, J. A., & Ewim, D. R. E. (2021). Assessment for learning and feedback in chemistry: A case for employing information and communication technology tools. International Journal of Rehabilitation and Special Education, 3(2), 18–27. https://doi.org/10.31098/ijrse.v3i2.660

Peters, O. (2000). Digital learning environments: New possibilities and opportunities. International Review of Research in Open and Distributed Learning, 1(1), 1–19. https://doi.org/10.19173/irrodl.v1i1.3

Platanova, R. I., Khuziakhmetov, A. N., Prokopyev, A. I., Rastorgueva, N. E., Rushina, M. A., & Chistyakov, A. A. (2022). Knowledge in digital environments: A systematic review of literature. Frontiers in Education, 7, 1060455. https://doi.org/10.3389/feduc.2022.1060455

Russell, A. A. (1994). A rationally designed general chemistry diagnostic test. Journal of Chemical Education, 71(4), 314–317. https://doi.org/10.1021/ed071p314

Saldaña, J. (2013). The Coding Manual for Qualitative Researchers. Sage Publications Inc.

Schraw, G., Crippen, K. J., & Hartley, K. (2006). Promoting self-regulation in science education: Metacognition as part of a broader perspective on learning. Research in Science Education, 36, 111–139. https://doi.org/10.1007/s11165-005-3917-8

Stowe, R. L., & Cooper, M. M. (2019). Assessment in chemistry education. Israel Journal of Chemistry, 59, 598–607. https://doi.org/10.1002/ijch.201900024

Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12, 257–285. https://doi.org/10.1207/s15516709cog1202_4

Tashiro, J., Parga, D., Pollard, J., & Talanquer, V. (2021). Characterizing change in students’ self-assessments of understanding when engaged in instructional activities. Chemistry Education: Research and Practice, 22, 662–682. https://doi.org/10.1039/d0rp00255k

Wang, M. C., Haertel, G. D., & Walberg, H. J. (1990). What influences learning? A content analysis of review literature. Journal of Educational Research, 84(1), 30–43. https://doi.org/10.1080/00220671.1990.10885988

Wiediger, S. D., & Hutchinson, J. S. (2002). The significance of accurate student self-assessment in understanding of chemical concepts. Journal of Chemical Education, 79(1), 120–124. https://doi.org/10.1021/ed079p120

Zheng, X., Luo, L., & Liu, C. (2023). Facilitating undergraduates’ online self-regulated learning: The role of teacher feedback. The Asia-Pacific Education Researcher, 32, 805–816. https://doi.org/10.1007/s40299-022-00697-8

Zimmerman, B. J. (2001). Theories of self-regulated learning and academic achievement: An overview and analysis. In B. J. Zimmerman & D. H. Schunk (Eds.), Self-regulated Learning and Academic Achievement: Theoretical Perspectives (pp. 1–65). Lawrence Erlbaum Associates.

Zoller, U., Fastow, M., Lubzky, A., & Lubezky, A. (1999). Students’ self-assessment in chemistry examinations requiring higher- and lower-order cognitive skills. Journal of Chemical Education, 76(1), 112–113. https://doi.org/10.1021/ed076p112

Supplementary Material

This article contains supplementary material (https://doi.org/10.1515/cti-2023-0068).

© 2023 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
