Abstract

Deep learning-based image super resolution (SR) is an image processing technique designed to enhance the resolution of digital images. With the continuous improvement of methods and the growing availability of large real-world datasets, this technology has gained significant importance in a wide variety of research fields in recent years. In this paper, we present a comprehensive review of promising developments in deep learning-based image super resolution. First, we give an overview of contributions outside the field of microscopy before focusing on the specific application areas of light optical microscopy, fluorescence microscopy and scanning electron microscopy. Using selected examples, we demonstrate how the application of deep learning-based image super resolution techniques has resulted in substantial improvements to specific use cases. Additionally, we provide a structured analysis of the architectures used, evaluation metrics, error functions, and more. Finally, we discuss current trends, existing challenges, and offer guidance for selecting suitable methods.

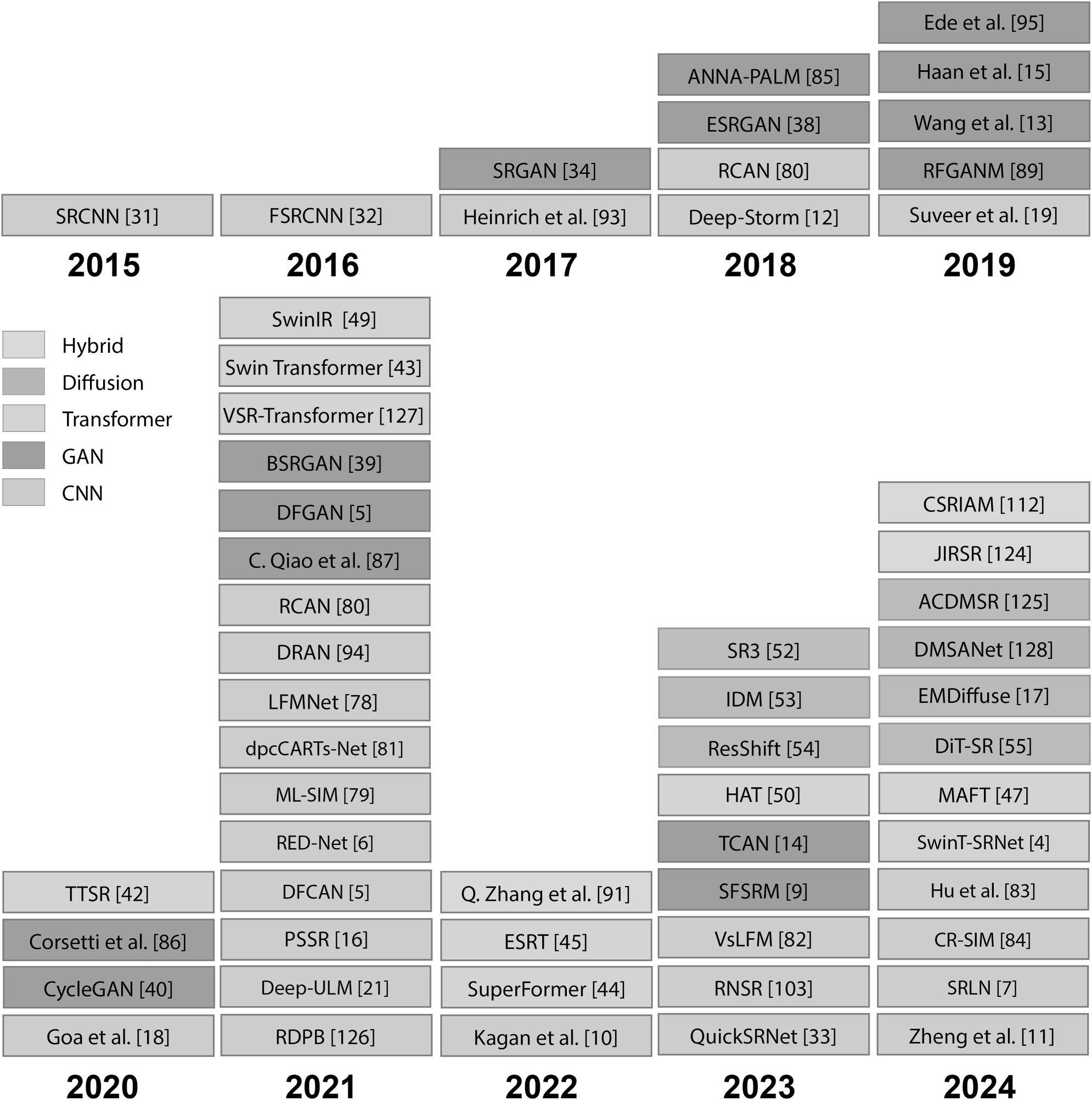

1 Introduction

From a conventional perspective, super resolution in microscopy refers to an imaging technique that achieves a higher resolution than traditional light optical microscopy. In particular, approaches such as stimulated emission depletion (STED) microscopy [1], structured illumination microscopy (SIM) [2] and stochastic optical reconstruction microscopy (STORM) [3] are well known. However, this review paper is dedicated to an alternative method for generating high-resolution images: deep learning-based image super resolution (SR). This method is independent of the acquisition technique and is therefore used in light optical microscopy [4], [5], [6], [7], [8] including fluorescence microscopy [9], [10], [11], [12], [13], [14] and other imaging techniques such as scanning electron microscopy [8], [15], [16], [17], [18], [19], [20] and ultrasound localization microscopy [21]. It is even possible to combine conventional imaging techniques for super resolution (like STED, SIM, STORM) with the deep learning-based image super resolution presented in this work [5], [6]. The approach is based on the digital upscaling of images by a trained deep learning model, which can be done either in situ during the acquisition or for already acquired images in a post-processing step. This makes image SR using deep learning an extremely flexible and versatile method for image quality enhancement in spatial resolutions (minimal separation distance between two objects with high contrast points), temporal resolutions (frequency at which images can be retrieved) and even for noise reduction [22].

Our focus in this review paper is on the application of deep learning-based image super resolution specifically in the field of microscopy. The aim is to provide a comprehensive overview of the scenarios in which this technology is used and the added value its application offers. Other super resolution review publications mainly focus on technical aspects, the improvement of conventional SR methods or other domains [23], [24], [25], [26], [27], [28], [29], [30]. In contrast, this review concentrates on a detailed consideration of microscopy-specific applications.

The main contributions of this work are as follows:

- We provide a comprehensive survey of image super resolution methods and their applications, and we show where super resolution has enabled significant improvements.
- We provide a systematic overview of the available methods, their areas of application, and the datasets and metrics used.
- We discuss current trends and existing challenges, and we provide guidance for the selection of suitable methods.

Furthermore, the review is structured as follows to provide a comprehensive understanding of both the basic methods and their specific applications.

- Fundamentals: This section explains the most important terms and concepts. It gives a general overview of different architectural designs for deep learning-based image super resolution and illustrates their successful application outside of microscopy.
- Deep learning-based image super resolution in microscopy: This section emphasizes the application of super resolution methods in microscopy, with a particular focus on light optical microscopy and scanning electron microscopy.
- Summary: This section summarizes the special characteristics of deep learning-based image super resolution methods derived from this literature review.
- Challenges and Opportunities: This section provides final thoughts on the challenges and opportunities of image super resolution using deep learning methods, focusing on real-world data, evaluation metrics, loss functions and architectures.

2 Fundamentals

2.1 Problem definition

The aim of deep learning-based image super resolution is to reconstruct a high-resolution image from a low-resolution image, which can be described by the formula in Equation (1).

Problem definition of image super resolution.

where I_HR represents the high-resolution image, I_LR the low-resolution image, N the noise factor introduced by the acquisition system and ↑(*) the upsampling method, which is the focus of this literature survey. Upsampling an image is an ill-posed problem, as there are theoretically infinitely many possible results for the interpolation and reconstruction of individual pixel values. In other words, image super resolution produces an estimate of what the original low-resolution image might look like in an enlarged version. The reconstruction result should therefore be interpreted with caution, and trained deep learning models must be carefully validated for their specific use case.
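The formula referred to as Equation (1) is not reproduced in this text. One form that is consistent with the symbols defined above is sketched below. It is an assumption, in particular the additive treatment of the noise term, and should not be read as the authors' exact notation:

$$
I_{HR} = \uparrow\!\left(I_{LR}\right) + N
$$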

2.2 Terminology and concepts

This chapter is intended to help introduce the topic of image super resolution by providing a brief summary of important keywords and their descriptions.

2.2.1 Convolutional Neural Networks (CNN)

A Convolutional Neural Network (CNN) uses filters that slide over an image as a window to extract local features such as edges and textures (Figure 1). During training, the filters are adjusted so that the network learns to recognize the features relevant to a specific task. A typical CNN building block consists of a convolutional layer, an activation function and an up- or downsampling layer. This modular structure makes it possible to implement a wide variety of architectures that can be adapted to specific requirements. A very common family of CNN architectures is the encoder-decoder network, such as U-Net. It consists of an encoder network that extracts and condenses relevant image features into a so-called latent space representation, and a decoder network (similar to the one shown in Figure 1, often a symmetrical inverse of the encoder) that reconstructs an image from this latent space representation.

Schematic illustration of a CNN architecture using the example of a decoder that generates a higher resolution from a lower resolution. In the figure, the size of the feature maps (colored) increases as the layers progress, while the number of feature maps decreases. The white square within the feature maps is the learned filter, with each feature map having its own filter.
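To make the sliding-window idea concrete, the following minimal sketch applies a single filter to a toy image in plain Python. The function name `conv2d`, the toy image and the hand-set filter are illustrative assumptions. In a real CNN the filter weights are learned during training and many filters are applied per layer.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (both lists of lists) without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the window
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


# Toy 4x5 image with a vertical edge between columns 1 and 2
image = [[0, 0, 1, 1, 1] for _ in range(4)]
# Hand-set horizontal difference filter (a learned filter would replace this)
kernel = [[-1, 1]]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The resulting feature map is non-zero only at the position of the edge, which is the kind of local feature the text describes.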

In the field of image super resolution, Dong et al. [31] presented SRCNN, one of the first CNN models, whose convolutional filters learn a function that maps an LR image to an HR image. Because an HR output is generated directly from an LR input, it is one of the first end-to-end models. To meet real-time requirements, the authors then optimized SRCNN for speed and named the result Fast Super-Resolution Convolutional Neural Network (FSRCNN) [32]. They achieved a speed improvement of roughly 40× without sacrificing quality and were able to upscale videos to 720p at 24 fps by using the LR image directly as input and reducing the filter sizes to 3×3. A further advance was achieved by Berger et al. [33] with QuickSRNet, which adopts architectural features from a VGG-like structure without input-to-output residual connections. The authors claimed that their architecture produces 1080p outputs via 2× upscaling in only 2.2 ms per frame on a modern smartphone, which makes it well suited to high-fps real-time applications.

2.2.2 Generative Adversarial Networks (GAN)

A Generative Adversarial Network (GAN) consists of two networks: a generator and a discriminator (Figure 2). These are involved in an adversarial learning process in which the generator attempts to deceive the discriminator, while the discriminator becomes continuously better at recognizing generated data. During training, the generator improves and produces more realistic images over time. During inference, the discriminator is no longer needed and only the generator is used. In image super resolution, the generator produces an HR image from an LR image.

Schematic illustration of the training process of a GAN architecture. The generator produces a new HR image from an LR image. The discriminator tries to distinguish between real and fake images. Eventually the generator learns to generate realistic images.
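The adversarial objective can be sketched with scalar discriminator outputs. The sketch below assumes the common binary cross-entropy formulation with a non-saturating generator loss. The function names are hypothetical, and the specific GAN models discussed in this section typically combine such a term with additional content or perceptual losses.

```python
import math


def bce(probability, target):
    """Binary cross-entropy for a single probability in (0, 1)."""
    eps = 1e-12  # guards against log(0)
    p = min(max(probability, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real, d_fake):
    """The discriminator should output 1 for real HR images and 0 for generated ones."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    """The generator is rewarded when the discriminator rates its output as real."""
    return bce(d_fake, 1.0)


# d_real / d_fake: discriminator probabilities for a real and a generated image
print(discriminator_loss(d_real=0.9, d_fake=0.1))  # confident, correct discriminator
print(generator_loss(d_fake=0.1))                  # generator is being detected
print(generator_loss(d_fake=0.9))                  # generator deceives the discriminator
```

At inference time none of these losses are evaluated, since only the trained generator is used.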

One of the first GAN architectures for image super resolution was the Super-Resolution GAN (SRGAN) proposed by Ledig et al. [34]. SRGAN comprises a generator based on residual networks and incorporates a perceptual loss for texture recovery in the generated HR images. SRGAN effectively upscales images by a factor of 4× while maintaining perceptual quality. The authors emphasized that the focus of their work was on perceptual quality rather than computational efficiency. Measured by the mean opinion score (MOS), SRGAN outperformed its predecessors on datasets such as Set5 [35], Set14 [36], and BSD 100 [37]. Wang et al. [38] proposed ESRGAN, an architecture based on SRGAN that uses residual-in-residual dense blocks (RRDB) without batch normalization. This allowed for better feature extraction and improved visual quality in the generated images. ESRGAN also utilizes a perceptual loss function that focuses on high-level features extracted from pre-trained networks, and it has been used in other works such as [8], [39]. CycleGAN [40], proposed by Zhu et al., is another example. Although it is primarily known for style transfer, it can also be adapted for super resolution tasks by learning to map low-resolution images to high-resolution counterparts, even without paired training data. In the landscape of GAN models, the Conditional GAN (CGAN) [41] is another variant that incorporates additional information (such as class labels) into the GAN framework. This can be beneficial for super resolution tasks where conditional information is available.

2.2.3 Transformer

Transformer models have overtaken CNNs and GANs and are now considered state-of-the-art in many areas of image processing. Their success is primarily based on self-attention layers and good scalability. In contrast to CNNs, whose filters capture only local dependencies, self-attention enables the capture of global dependencies. This means that information from different image areas can be processed together. Figure 3 shows a self-attention layer. The input is first transformed into query, key and value. The similarity between query and key is then calculated. Finally, the values are summed in a weighted manner to generate a new representation of the input. An extended concept is multi-head attention, in which several self-attention modules operate in parallel and their outputs are combined. Each head can focus on different features, allowing the model to perform a more comprehensive analysis of the input information.

![Figure 3](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_003.jpg)

Figure 3: Schematic illustration of a self-attention module, as is commonly used in a Transformer model. Key, query and value are labeled K, Q and V respectively, and []T indicates a transposed matrix.
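The three steps of a self-attention layer (projection to query, key and value, similarity computation, and weighted summation) can be sketched in plain Python as follows. The scaling by the square root of the key dimension and the softmax normalization follow the standard Transformer formulation and are assumptions here, as are the helper names and the toy input with identity projections.

```python
import math


def matmul(a, b):
    """Matrix product of two lists of lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Numerically stable softmax over one row of similarity scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    # 1) Transform the input into query, key and value
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    # 2) Similarity between every query and every key (Q K^T), scaled
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # 3) Weighted sum of the values
    return matmul(weights, v), weights


# Three tokens (e.g. image patches) with two features each
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projection matrices
output, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Every token receives a weight for every other token, which is how the layer relates distant image regions to one another.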

One of the first transformer models for image super resolution was introduced by Yang et al. with TTSR [42]. This model belongs to the field of reference-based super-resolution. During training, an HR image is used in addition to the LR image to transfer features. Even after training, the model requires an HR image that is similar to the input image in terms of colors and textures. The Swin Transformer, developed by Liu et al. [43], achieves state-of-the-art results without requiring an HR image as additional input. By applying local self-attention mechanisms in a hierarchical structure, the model reduces the computational complexity compared to vision transformer approaches. The linear computational complexity in relation to the image size makes the Swin Transformer very efficient. Forigua et al. [44] have adapted the Swin Transformer for 3D data and developed the SuperFormer model. This model was specifically designed for volumetric medical imaging, for example for MRI scans. SuperFormer integrates local self-attention mechanisms and 3D-relative position coding to enable high-resolution 3D reconstructions with fine details. In addition, the Efficient Super-Resolution Transformer (ESRT) by Lu et al. [45] combines a Lightweight Transformer Backbone (LTB) with a Lightweight CNN Backbone (LCB). As a result, long-range dependencies are captured while the computational effort remains low. The unique selling point of this work lies in lower memory requirements. Compared with the original Transformer [46] which occupies 16 GB GPU memory, ESRT needed 4 GB of GPU memory during training with a patch size of 48×48 and a batch size of 16. The Multi-Attention Fusion Transformer (MAFT) proposed by Li et al. [47] integrates multiple attention mechanisms for improved reconstruction of super-resolved images. By attempting to balance the dependency between short-term and long-term spatial information, shifted windows are introduced, leading to better image quality. 
Compared to state-of-the-art methods, MAFT achieved gains of 0.09 dB on the Urban100 [48] dataset for the 4× SR task while using 32.55 % fewer parameters and 38.01 % fewer floating-point operations (FLOPs). Most recently, Bommanapally et al. [8] used the popular state-of-the-art transformer-based super resolution techniques SwinIR [49] and HAT [50] to evaluate their performance under different deep learning approaches, namely supervised, contrastive and non-contrastive self-supervised learning. They found that the supervised and self-supervised approaches using SR images outperform those that do not use SR images by 2–6 %. This demonstrates how versatile transformer architectures can be in super resolution-related tasks.

2.2.4 Diffusion models

A diffusion model is a generative model that is divided into two phases. In the first phase, noise is gradually added to the LR image until no original image information is apparent. In the second phase, the first process is reversed. Deep learning models are used which gradually reduce the noise in order to produce an HR image. However, the calculation is very time-consuming as it consists of many individual steps. More recent models therefore use an encoder to transfer the input image into the latent space, which makes the process more efficient. The entire process is illustrated in Figure 4.

![Figure 4](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_004.jpg)

Figure 4: Schematic illustration of a diffusion model operating in latent space. Z represents a feature map in the latent space, T represents the number of steps in the diffusion process. The DL model (deep learning model, often a U-Net) gradually reduces the noise. The schematic is a simplified representation of the architecture from [51].
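The first phase, in which noise is added step by step, can be sketched as follows. The sketch assumes a DDPM-style formulation with a linear noise schedule. The schedule values, the number of steps and all function names are illustrative assumptions and are not taken from the models cited in this section. The second phase, the learned denoising model, is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """Running product of (1 - beta): fraction of signal variance kept after t steps."""
    products, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        products.append(running)
    return products


def add_noise(pixels, t, alpha_bars, rng):
    """Jump directly to diffusion step t by mixing the signal with Gaussian noise."""
    keep = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [keep * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
pixels = [0.2, 0.8, 0.5, 0.1]  # toy image, flattened

slightly_noisy = add_noise(pixels, 10, alpha_bars, rng)
almost_pure_noise = add_noise(pixels, 999, alpha_bars, rng)
print(alpha_bars[10], alpha_bars[999])
```

The printed values show that almost all of the signal survives after a few steps, while practically none remains at the final step, which corresponds to the state in which no original image information is apparent.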

The first diffusion models for image super resolution include SR3 [52], IDM [53] and ResShift [54]. SR3, proposed by Saharia et al., was developed for general image data and focuses particularly on the super resolution of faces and nature images. It uses a U-Net model for noise reduction and can upsample images from 64×64 to 256×256 and from 256×256 to 1024×1024. In a survey with participants, the generated images achieved a fool rate of 50 %. IDM, proposed by Gao et al., was tested on the same datasets as SR3 and showed improved performance. Unlike SR3, IDM uses only the encoder of the U-Net for noise reduction, while the decoder is replaced by a special architecture for an implicit neural representation. In addition, a coordinate-based multi-layer perceptron (MLP) is used to encode images as features in continuous space, enabling highly detailed reconstructions. Another advantage of IDM is its flexible scaling: while conventional methods often only support scaling factors that are powers of 2, for instance 4× or 16×, IDM also enables intermediate levels such as a 10× magnification. ResShift, proposed by Yue et al., takes a different approach by reducing the number of diffusion steps to make the super resolution process more efficient. It does this by using a Markov chain that shifts the residual between HR and LR images to improve transition efficiency. In addition, ResShift has a flexible noise scheduler that adjusts the shifting speed and noise strength during the diffusion process to achieve a better balance between image quality and computational effort. Recently, Cheng et al. [55] proposed DiT-SR, a diffusion transformer that adopts a U-shaped architecture for multi-scale hierarchical feature extraction. It uses a uniform isotropic design for the transformer blocks across different stages. A frequency-adaptive time-step conditioning module is also incorporated to improve the processing of distinct frequency information during the diffusion process.

2.2.5 Up- and downsampling

In the field of image super resolution, which aims to increase the resolution of an image, upsampling methods play a central role. These can be divided into two categories: Fixed methods and learnable methods. Fixed methods upsample an image or a feature map based on predefined calculation parameters. Well-known algorithms are bilinear and bicubic interpolation, resize convolution, unpooling (max unpooling, average unpooling), nearest-neighbor and sub-pixel convolution [56]. Compared to fixed methods, learnable methods such as deconvolution and Meta-Upscale Module [57] adapt dynamically during training. The downsampling of images and feature maps can also be divided into fixed methods such as pooling layers (max pooling, average pooling), wavelet transformations and anti-aliasing filters, as well as methods that can be learned such as strided convolutions.

Finally, a detailed analysis of the advantages and disadvantages of the algorithms is presented in the summary of this paper.
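Two of the upsampling operations named above can be sketched in plain Python: nearest-neighbor interpolation and the rearrangement step used in sub-pixel convolution. The function names are hypothetical. In an actual sub-pixel convolution layer, the feature maps passed to the rearrangement are produced by a preceding convolution, which is omitted here.

```python
def nearest_neighbor_upsample(image, scale):
    """Repeat every pixel `scale` times along both axes."""
    out = []
    for row in image:
        wide = [value for value in row for _ in range(scale)]
        out.extend([list(wide) for _ in range(scale)])
    return out


def pixel_shuffle(feature_maps, scale):
    """Interleave scale*scale feature maps of size HxW into one (H*scale)x(W*scale) map."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    out = [[0] * (w * scale) for _ in range(h * scale)]
    for c, fmap in enumerate(feature_maps):
        dy, dx = divmod(c, scale)  # sub-pixel position served by channel c
        for i in range(h):
            for j in range(w):
                out[i * scale + dy][j * scale + dx] = fmap[i][j]
    return out


lr = [[1, 2],
      [3, 4]]
print(nearest_neighbor_upsample(lr, 2))

# Four 1x1 feature maps become one 2x2 output
print(pixel_shuffle([[[1]], [[2]], [[3]], [[4]]], 2))
```

Nearest-neighbor upsampling only copies existing values, whereas the sub-pixel rearrangement places a separate value at every new pixel position.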

2.2.6 Loss functions

The loss function is one of the most important hyperparameters when training a model. It plays a central role in evaluating the deviation between the output generated by the model and the actual training labels. A high deviation is penalized by the loss function more than a low one, forcing the model to update its parameters to minimize errors and improve accuracy. There are two main categories of loss functions: pixel-based and perceptual-based loss functions.

Pixel-based loss functions calculate the direct deviation between the pixel values of the model prediction and those of the label. They are widely used and are almost always applied. Well-known pixel-based loss functions include the mean absolute error (MAE, L1), the mean squared error (MSE, L2), the root mean squared error (RMSE), the binary cross entropy loss (BCE) and the Dice coefficient. In addition to these common loss functions, there are also specialized variants such as the Charbonnier loss, the Barlow Twins loss and the cosine similarity, which can be preferred depending on the use case.
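A few of the pixel-based losses named above can be written in a handful of lines. The sketch below operates on flattened pixel lists. The function names, the toy values and the Charbonnier smoothing constant `eps` are assumptions chosen for illustration.

```python
import math


def mae(pred, target):
    """Mean absolute error (L1)."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def mse(pred, target):
    """Mean squared error (L2)."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def rmse(pred, target):
    """Root mean squared error."""
    return math.sqrt(mse(pred, target))


def charbonnier(pred, target, eps=1e-3):
    """Smooth approximation of L1; eps is an assumed smoothing constant."""
    return sum(math.sqrt((p - t) ** 2 + eps ** 2) for p, t in zip(pred, target)) / len(pred)


pred = [0.0, 0.5, 1.0, 0.0]    # flattened model output
target = [0.0, 0.5, 0.5, 0.0]  # flattened label

print(mae(pred, target), mse(pred, target), rmse(pred, target), charbonnier(pred, target))
```

With a single deviating pixel, MSE penalizes the error quadratically, while MAE and the Charbonnier loss grow roughly linearly with the deviation.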

However, pixel-based loss functions do not capture human perception very well, which is why perceptual loss functions have been developed specifically for this purpose. These compare features extracted from the model prediction with those extracted from the training label. As with pixel-based loss functions, larger deviations are penalized more heavily. One of the best-known perceptual loss functions is based on the VGG network, which was developed by K. Simonyan and A. Zisserman of the Visual Geometry Group (VGG) at Oxford University, hence the name VGG [58]. Originally designed as a convolutional network for object recognition, it has been widely used due to its high performance and free availability. The architecture of the VGG network enables the extraction of deep features, making it particularly well suited for perceptual loss functions.

The training of a model can be significantly improved by selecting the right loss function or rather combination of loss functions, since it has a direct influence on the adaptation of the model parameters and thus on the model performance.

2.2.7 Performance metrics

Metrics are used to evaluate the performance of a trained model. The literature offers a variety of such metrics for different application areas. These can be roughly divided into two categories: Reference-based and non-reference-based metrics. The difference is that reference-based metrics require a reference to evaluate a comparison or performance. In contrast, non-reference-based metrics do not require a reference; these metrics can be applied directly to the model output. The most well-known non-reference-based metrics include Perception-Based Image Quality Evaluator (PIQE) [59], Natural Image Quality Evaluator (NIQE) [60] and Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) [61].

Similar to the loss functions, reference-based metrics can be divided into two categories: distortion-based and perceptual-based metrics. It is noteworthy that the loss functions described above are also listed as reference-based metrics. In principle, metrics can be used as loss functions and vice versa; however, the model training could become unstable. Distortion-based metrics are often based on pixel or frequency-based methods. Well-known representatives are Peak Signal-to-Noise Ratio (PSNR), MAE, MSE, RMSE, Structural Similarity Index Measure (SSIM) and Multi-Scale Structural Similarity Index Measure (MS-SSIM). The perceptual-based metrics, which also include feature-based metrics, include Learned Perceptual Image Patch Similarity (LPIPS) [62], PieAPP [63], SAMScore [64], Inception Score (IS) and Fréchet Inception Distance (FID) [65].

A detailed description of the advantages and disadvantages of these metrics can be found in the Summary and in the Challenges and Opportunities section at the end of this paper.
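As an example of a distortion-based, reference-based metric, PSNR can be computed directly from the MSE between a reference image and a reconstruction. The sketch below uses flattened pixel lists. The function name and the toy values are assumptions, and `max_value` must match the intensity range of the data (for example 1.0 for normalized images or 255 for 8-bit images).

```python
import math


def psnr(reference, reconstruction, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    mse = sum((r - s) ** 2 for r, s in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [0.5, 0.5, 0.5, 0.5]
reconstruction = [0.6, 0.4, 0.6, 0.4]  # every pixel is off by 0.1

print(psnr(reference, reconstruction))
```

Because PSNR depends only on pixel-wise differences, two reconstructions with the same MSE receive the same score regardless of how the errors are perceived visually.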

2.2.8 Geometric registration approach

Geometric registration is a very important topic for image super resolution. The aim is to ensure that the positions of the structures in the low-resolution (LR) image match the positions of the structures in the high-resolution (HR) image. The better the match, the more promising the model training. Geometric registration is simplest with synthetic datasets. Here, the HR images are downsampled, which ensures that LR and HR match. Problems arise only with real datasets. Depending on the acquisition technique, achieving a match between LR and HR can involve extreme overhead. A one hundred percent match is often not feasible due to the tolerances of the acquisition system, which is why the training data already contains deviations. Fortunately, this does not mean that the model training is unsuccessful. However, the model performance decreases with increasing deviation in the dataset. The subject of geometric registration is addressed again in the summary section.
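The synthetic case can be illustrated with a short sketch in which an LR image is derived from an HR image by block averaging, so that every LR pixel corresponds exactly to a known block of HR pixels. Block averaging and the function name are assumptions made for illustration. Other downsampling kernels, such as bicubic interpolation, are equally possible.

```python
def average_pool_downsample(image, factor):
    """Create an LR image by averaging non-overlapping factor x factor blocks."""
    out_h, out_w = len(image) // factor, len(image[0]) // factor
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            block = [image[i * factor + di][j * factor + dj]
                     for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


hr = [[1, 3, 5, 7],
      [1, 3, 5, 7],
      [2, 2, 6, 6],
      [2, 2, 6, 6]]

# LR pixel (i, j) covers HR rows 2i..2i+1 and columns 2j..2j+1 by construction
lr = average_pool_downsample(hr, 2)
print(lr)
```

With real acquisitions no such construction exists, so the correspondence between LR and HR has to be established by registering separately acquired images.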

3 Deep learning-based image super resolution in microscopy

Deep learning-based super resolution has many potential applications in microscopy, such as the optimization of imaging parameters, image denoising, the acceleration of imaging speed and cross-modality super resolution reconstruction [66]. Beyond these applications and the factors that govern the performance of such methods, this section presents the different approaches that have been developed to apply deep learning-based image super resolution to the quality enhancement of microscopy images.

3.1 Light optical microscopy

In classical light optical microscopy, the optical resolution indicates the minimum distance at which two closely spaced points still appear as separate units in the image. The resolution is limited by the Abbe diffraction limit, which is around 200 nm for visible light [67]. This limit is determined by the wavelength of the light used and the numerical aperture (NA) of the objective. Fluorescence microscopy, a variant of light optical microscopy, is one of the most popular techniques in biology because of its high sensitivity, its specificity (the ability to specifically mark molecules and structures of interest) and its simplicity, which allow it to be used on living cells and organisms [68]. The technique has several modalities: widefield, where the entire sample is exposed to the light source whenever there is a change of position in the axial direction [69]; light-field, which uses light field theory to scan volumetric images of the sample at high speed [70]; bright-field, considered one of the simplest techniques, where the sample is illuminated throughout and visual contrast arises because light is absorbed in its denser areas [71]; and dark-field, which uses oblique illumination to enhance contrast in materials that are difficult to image under normal lighting conditions [72]. Although the limitations of these techniques can prevent the generation of images with appropriate contrast and high quality, modern deep learning-based image super resolution methods offer a way to help overcome both the Abbe diffraction limit and these limitations and thereby significantly improve the image [73].
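As a worked illustration of how wavelength and numerical aperture set this limit, the lateral Abbe limit is commonly written as the wavelength divided by twice the numerical aperture. The values used below (green light at 550 nm and an objective with NA = 1.4) are illustrative assumptions and are not taken from the cited works:

$$
d = \frac{\lambda}{2\,\mathrm{NA}} = \frac{550\ \mathrm{nm}}{2 \times 1.4} \approx 196\ \mathrm{nm}
$$

This is consistent with the value of around 200 nm quoted above for visible light.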

3.1.1 Convolutional neural networks

One successful implementation is described by Nehme et al. [12], who designed an approach based on a deep convolutional neural network that is trained and evaluated with both simulated and experimental data of blinking emitters. To generate the simulated data they used the ThunderSTORM plugin [74] for ImageJ, a popular digital image processing software [75], [76]. The experimental data was collected using the single-molecule localization microscopy (SMLM) technique with an inverted fluorescence microscope. Furthermore, in order to cover a variety of SNRs and emitter densities, images were taken at several laser power levels and combined in post-processing. The architecture first encodes the image into a dense, aggregated feature representation through three 3×3 convolutional layers with increasing depth, interleaved with 2×2 max-pooling layers. The output then passes through the decoding stage, which has three successive deconvolution layers, each consisting of 2×2 up-sampling modules, interleaved with 3×3 convolutional layers with decreasing depth. In this stage the spatial dimensions are restored so that the output has the same shape as the input image.

A representation of the model’s capabilities on image reconstruction can be seen in Figure 5(II). Results of this work report microtubule reconstructions obtained on a 12.5 nm pixel grid and QD reconstructions on a grid with a 13.75 nm pixel size. Furthermore, the authors reported an increment in the minimal resolvable distance between stripes behaving as a function of emitter density with values ranging from at least 19 nm for 1

![Figure 5](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_005.jpg)

Figure 5: The encoder-decoder CNN Deep-STORM. (I) Simple depiction of the Deep-STORM model for super resolution. (II) Examples of the diffraction limited input images (a) and a super-resolved result (b) [12].

Later in 2021, Vizcaíno et al. [78] presented a deep learning approach to reconstruct confocal microscopy 3D image stacks of mouse brain with a size of 1287×1287×64 voxels (112×112×57.6 µm³) from a single light field image of 1287×1287 pixels. Their network, called LFMNet, starts with a 4D convolutional layer whose output is converted to a 2D image through a mapping function. A U-Net then carries out convolutions as downscaling operators, completes the feature extraction and performs the 3D reconstruction. To assess the performance of their method, they compared two variants of the network architecture: a shallow U-Net composed of 2 down/up-sample operations with a receptive field of 19 pixels, and a full U-Net composed of 4 down/up-sample operations with a receptive field of 104 pixels. They then measured PSNR and SSIM to quantify the improvements and identify which variant best reconstructed an HR version of the ground truth. The shallow model in its final design outperformed the remaining methods with a PSNR value of 34.45, an SSIM index of 0.87 and a reconstruction time of 20 ms per super-resolved image. Based on these results, they demonstrated that an appropriate deep learning model can considerably reduce the image acquisition time and improve the capability for imaging highly dynamic and light-sensitive events, such as live cells and biology-related tasks in general.

Furthermore, transfer learning has been applied to super resolution enhancement in microscopy. Christensen et al. [79] created a method called ML-SIM that aims to overcome the drawbacks of the traditional SIM reconstruction process, namely that it is time consuming and tends to produce artefacts. This is especially the case for images with low signal-to-noise ratios, where traditional methods produce wrong reconstructions by confusing noise with signal. The proposed methodology uses transfer learning with a parameter-free model that aims to generalize further in the reconstruction of data recorded by a given imaging device and for a specific sample type. They selected RCAN [80] as the baseline for the ML-SIM architecture, which has around 100 convolutional layers and 10 residual groups with 3 residual blocks. The model was trained with the DIV2K image dataset, a popular repository used by other authors in the field. Additionally, they generated synthetic data to use as input for the supervised learning approach.

In the evaluation stage, they compared the wide-field image with the reconstructed version produced by their approach. They observed a noticeable resolution improvement, quantified by a PSNR increase of over 7 dB and an SSIM index that was 28.09 % higher. A visual representation of these improvements can be seen in Figure 6. These outcomes show that ML-SIM reconstructions contain less noise and considerably fewer artefacts than the other methods. ML-SIM also improves visual quality in terms of resolution, which benefits the structured illumination microscopy image acquisition process and can potentially be used in biology and cellular studies that involve SIM.

![Figure 6:

ML-SIM results compared to OpenSIM, CC-SIM and FairSIM, with the original wide-field input image on the left. Fluorescent beads, as well as the endoplasmic reticulum and cell membrane [79]. (Image cropped from the original version).](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_006.jpg)


Further work was carried out by Shah et al. [6], who proposed a residual convolutional neural network (RED-Net) designed to denoise and reconstruct data generated with SIM. In the first part of their work, they introduce an end-to-end architecture along with a baseline related to existing work combining SIM and deep learning. They proposed a network called super resolution residual encoder–decoder structured illumination microscopy (SR-REDSIM), which takes raw SIM images as input and generates a super-resolved image as output. In the second part, they presented residual encoder–decoder fairSIM (RED-fairSIM), which combines standard computational SIM reconstruction with a deep learning network. This method reconstructs images using typical frequency-domain algorithms and then applies a deep convolutional neural network for artifact reduction and denoising. In addition, they provide a workflow called preRED-fairSIM, in which deep learning is applied to the raw SIM images for denoising before SIM reconstruction. The model’s generalization capabilities were evaluated at different noise levels: level 0 for the highest SNR at timestamp 0, level 1 for timestamps 25–40, level 2 for timestamps 75–100, level 3 for timestamps 125–150 and level 4 for timestamps 175–200. The architecture shown in Figure 7 follows an encoding–decoding framework with symmetric convolutional–deconvolutional layers and skip-layer connections. They used an Adam optimizer and a least squares error loss.

![Figure 7:

SR-REDSIM vs. RED-Net. (I) SR-REDSIM model architecture for cell structure image enhancement, composed of three different blocks: convolutional layers, deconvolutional layers and upsampling layers. RED-Net (as used by RED-fairSIM) does not have the upsampling layers. (II) Qualitative evaluation of the proposed method at different noise levels [6].](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_007.jpg)


The dataset used to train the models comprised 101 different cell structures. Model performance was evaluated by measuring PSNR and SSIM at different noise levels and comparing the metrics against other state-of-the-art approaches, with all methods tested on 500 images. The results demonstrated that their networks are fairly robust and perform well in terms of image reconstruction quality. For instance, at noise level 4, SR-REDSIM achieved an 11.31 % improvement in PSNR and a 57.97 % improvement in SSIM compared to the fairSIM baseline. Similarly, U-Net-fairSIM showed an 11.90 % increase in PSNR and a 57.35 % increase in SSIM, while RED-fairSIM achieved the highest improvements, with a 15.59 % increase in PSNR and a 59.15 % increase in SSIM. These results show that image reconstruction followed by denoising via RED-Net produces outputs that are resilient to reconstruction artifacts. They also indicate that the approach generalizes across noise levels and complements structured illumination microscopy image acquisition, as it can be used both to denoise and to reconstruct high-quality images.

Additional work was carried out in 2021 by Wang et al. [81], who developed a method that combines deep learning with an external aperture modulation subsystem (EAMS). Their study aimed to overcome the diffraction limit, which is one of the most common image quality bottlenecks in light microscopy, particularly in wide-field microscopy. The proposed deep learning architecture, referred to as dpcCARTs-Net (depicted in Figure 8), is built upon deep pyramidal cascaded channel attention residual transmitting (CART) blocks. The architecture has three main components: primitive feature extraction, deep pyramidal cascaded CART blocks and residual reconstruction.

![Figure 8:

Training and inference workflow of dpcCARTs-Net. (I) Schematic of the proposed dpcCARTs-Net architecture. (II) Overview of the super resolution process as well as an inference example of randomly generated point sources, where m represents the resolution ratio between the LR and HR images [81].](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_008.jpg)


After training the model with 2-aperture and 3-aperture modulation strategies, the results (Figure 9c and d) were evaluated against a baseline consisting of a three-times interpolated and theoretically super-resolved image of a two-point source, as seen in Figure 9b. The visual results demonstrate that the model effectively reconstructs an HR version of the low-quality input. In addition, the dpcCARTs-Net version trained with the 3-aperture modulation strategy outperforms the version trained with the 2-aperture modulation strategy. Quantitative results are shown in Figure 9c and d, where the full widths at half maximum (FWHM) are 0.56 µm and 0.47 µm, respectively. These widths correspond to approximately 44.84 % and 37.59 % of the diffraction-limited resolution of 1.25 µm, i.e. resolution gains of roughly 2.2 and 2.7 times. These results show the benefits of this approach in surpassing the diffraction limit, overcoming time constraints in the data acquisition process and creating novel ways to go beyond traditional super resolution imaging of label-free moving objects, such as living cells.
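As an illustration of how such FWHM values are typically obtained from a cross-sectional intensity profile, the following sketch estimates the width of a single peak by linear interpolation at half maximum and relates a measured width to a diffraction-limited one. This is a generic procedure, not the evaluation code of Wang et al. [81]; the function names and the toy profile are hypothetical, while the widths 0.56 µm and 1.25 µm are the values quoted above.

```python
def fwhm(positions, intensities):
    """Full width at half maximum of a single-peaked 1D profile.

    Half-maximum crossings on both sides of the peak are located by
    linear interpolation between neighbouring samples.
    """
    peak = max(intensities)
    baseline = min(intensities)
    half = baseline + 0.5 * (peak - baseline)
    peak_index = intensities.index(peak)

    # Walk outwards from the peak while samples stay above half maximum.
    left = peak_index
    while left > 0 and intensities[left - 1] > half:
        left -= 1
    right = peak_index
    while right < len(intensities) - 1 and intensities[right + 1] > half:
        right += 1
    if left == 0 or right == len(intensities) - 1:
        raise ValueError("profile does not drop to half maximum on both sides")

    def crossing(i_a, i_b):
        # Position between samples i_a and i_b where the profile equals `half`.
        x_a, x_b = positions[i_a], positions[i_b]
        y_a, y_b = intensities[i_a], intensities[i_b]
        return x_a + (half - y_a) * (x_b - x_a) / (y_b - y_a)

    return crossing(right, right + 1) - crossing(left - 1, left)


def resolution_gain(diffraction_limited_width, measured_width):
    """Factor by which the measured width is narrower than the reference."""
    return diffraction_limited_width / measured_width


# Toy profile (hypothetical values) sampled at unit spacing.
print(fwhm([0, 1, 2, 3, 4], [0.0, 0.25, 1.0, 0.25, 0.0]))
# Widths quoted in the text, in micrometres.
print(round(resolution_gain(1.25, 0.56), 2))
```

Dividing the diffraction-limited width by the measured FWHM gives the resolution gain factor, which is the quantity usually reported alongside such profiles.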

![Figure 9:

Performance evaluation of the proposed dpcCARTs-Net on two-point source pairs. (a) Diffraction-limited input (b) 3 times theoretically super-resolved image (c) dpcCARTs-Net output trained with the 2-aperture modulation strategy and (d) dpcCARTs-Net output trained with the 3-aperture modulation strategy. Additionally, the cross-sectional profiles for the super resolution approach of (e) 2.2 times and (f) 2.7 times [81].](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_009.jpg)


In 2022, Kagan et al. [10] combined convolutional networks with the super resolution radial fluctuation (SRRF) algorithm, a fast, parameter-free computational method, for network training, in order to enhance the spatial resolution of near-infrared fluorescence microscopy images of single-walled carbon nanotubes (SWCNTs). The network is based on U-Net with encoder and decoder blocks. However, the proposed architecture is asymmetric, with two supplementary decoding blocks in addition to the 4 decoding blocks that follow the 4 encoding blocks of the network. The architecture can be seen in Figure 10(I).

![Figure 10:

Overview of the proposed network architecture with a U-Net shape and its two additional layers (I) and prediction examples (cropped from the original figure of Kagan et al.) after training (II) [10].](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_010.jpg)


The findings of this work demonstrated the advantages of applying deep learning to super resolution and fluorescence imaging. The authors validated the network in diverse scenarios, such as different SWCNT densities, and found an improvement in the average spatial resolution of 22 % compared to the original images, along with an improvement in image quality in terms of SNR relative to both the original and the SRRF images. The SNR gains reported for the predictions of the proposed model were higher than those of the SRRF benchmark output, averaging 47 % versus 24 %, respectively. These results create new opportunities for accessible and rapid super-resolution imaging of nIR fluorescent SWCNTs and demonstrate their possible use as nanoscale optical probes.

In 2023, Lu et al. [82] aimed to overcome the limitations in image quality caused by optical aberrations and artefacts in light-field microscopy (LFM) by implementing an approach called virtual-scanning LFM (VsLFM). Their approach is a physics-based deep learning framework that increases the resolution of LFM images beyond the diffraction limit. For their VsLFM model, they used both synthetic and experimental data of various transient 3D subcellular dynamics in cultured cells, a zebrafish embryo, zebrafish larvae, Drosophila and mice in several physiological scenarios at a frame rate of up to 500 volumes per second.

The model, shown in Figure 11, has three main components. The first component is feature extraction, which consists of three 2D convolution layers. The second component, feature interaction and fusion, includes several sub-modules: the spatial-angular interaction module, which has three 2D convolution layers, one concatenation layer and one Leaky ReLU activation layer; the first fusion module, which has one 2D convolution layer and one Leaky ReLU activation layer; the light field interaction module, which contains four 2D convolution layers and one Leaky ReLU activation layer; the angular-mixed interaction module, which comprises three 2D convolution layers and two Leaky ReLU activation layers; the second fusion module, which has two 2D convolution layers and one Leaky ReLU activation layer; and the final fusion module, which has one 2D convolution layer and one Leaky ReLU activation layer. The third component, feature upsampling, has two 2D convolution layers followed by bicubic interpolation. The authors evaluated the robustness of their approach by comparing the noise performance of VsLFM, VCD-Net and HyLFM-Net, all of which were trained under high signal-to-noise ratio conditions and tested under photon-limited imaging conditions.

![Figure 11:

Schematic of the proposed virtual-scanning light-field microscopy model architecture [82]. (Image cropped from the original version).](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_011.jpg)


Through this quantitative evaluation, the authors found that VsLFM learned the physical constraint between angular views and can precisely distinguish signals from strong noise, thus producing images with better resolution and contrast than the other methods. To further demonstrate this, the authors measured the FWHM and found that VsLFM shows an improvement of at least four-fold over LFM and two-fold over VCD-Net and HyLFM-Net. Furthermore, the authors applied their model to numerically simulated data of synthesized 3D-distributed tubulins and found that VsLFM outperformed LFM by recovering more high-frequency features in the Fourier spectrum. This was validated by improvements of 2 dB in SNR and 0.2 in SSIM in the spatial–angular domain after the reconstruction process. Figure 12 shows that the iterative tomography process, based on the Richardson–Lucy deconvolution framework, inherently provides denoising capabilities. These advances show the benefit of a physics-based virtual-scanning mechanism, as it can provide superior quality, extended generalization across datasets and the versatility needed for practical biological applications in which LFM struggles due to challenging conditions.

![Figure 12:

Overview of reported results by Lu et al. [82]. (a) Ground truth acquisition of 1 µm diameter synthetic tubulins, acquired by sLFM with a 63/1.4 NA oil-immersion objective. (b) Results of the proposed VsLFM model compared to VCD-Net and HyLFM-Net. (c) Pearson correlations of the obtained results. (d) Qualitative comparison within a single image. (e) Normalized intensity profiles along the blue line in d. (f) SSIM versus aberration levels of the compared methods.](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_012.jpg)


More recent works have proposed a different approach. In 2024, Zheng et al. [11] explored the advantages of using deep learning to extract scale-variant features and bypass abundant low-frequency data independently. The model architecture was inspired by the residual channel attention blocks (RCAB) of deep residual channel attention networks and the multi-scale residual blocks (MSRB) of the multi-scale residual network, and has 4 foundational modules: a shallow feature extraction module to extract low-level features from the input; a spatial extraction module to extract features at different scales; a frequency filter module to bypass the abundant low-frequency information and focus on the high-frequency information representing detailed structure; and an upsampling module to reconstruct the final high-resolution output at the desired size from a low-resolution feature map.

Figure 13 shows the modules described above that make up the network architecture. The model was trained with the widely used DIV2K dataset. To assess its performance, the authors followed the Crowther criterion, which relates the achievable resolution to the number of projections required for a valid tomographic reconstruction. Accordingly, a tomographic reconstruction from XRF projections with a pixel size of 30 nm was reprojected to 270 projections distributed over angles from 90° to −90°. These reprojections were then enhanced by a scale factor of 4, resulting in enhanced XRF images with a pixel size of 7.5 nm. In addition, a Fourier shell analysis showed that the resolution of the raw XRF improved from 60.8 nm to 14.8 nm. These results demonstrate the improvement in spatial resolution, which allows the detection of finer structures in batteries, such as cracks and pores, and offers valuable insights into the irreversible formation of Zn/Mn side products during cycling.
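The Crowther criterion is commonly written as m ≈ πD/d, where m is the number of projections evenly spaced over 180°, D is the diameter of the reconstructed object and d is the attainable resolution. The sketch below evaluates this relation together with the pixel-size arithmetic quoted above. The object diameter of 1000 nm is a hypothetical value chosen only for illustration, since it is not stated in the text, and the function names are likewise assumptions.

```python
import math


def crowther_resolution(diameter_nm, n_projections):
    """Attainable resolution d = pi * D / m according to the Crowther criterion."""
    return math.pi * diameter_nm / n_projections


def required_projections(diameter_nm, resolution_nm):
    """Smallest integer number of projections m with pi * D / m <= d."""
    return math.ceil(math.pi * diameter_nm / resolution_nm)


def upscaled_pixel_size(pixel_size_nm, scale_factor):
    """Pixel size after super resolution by an integer scale factor."""
    return pixel_size_nm / scale_factor


# Pixel size from the text: 30 nm enhanced by a factor of 4.
print(upscaled_pixel_size(30.0, 4))

# Hypothetical object diameter of 1000 nm (assumption, not from the paper).
print(round(crowther_resolution(1000.0, 270), 2))
print(required_projections(1000.0, 30.0))
```

The relation makes the motivation for reprojecting to a larger number of angles explicit: a finer target resolution requires proportionally more projections for the same object size.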

![Figure 13:

Overview of the proposed dual-branch model by Zheng et al. [11]. (a) schematic of the general architecture design (b) illustrates the architecture of the MSRB and (c) displays the residual group (RG).](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_013.jpg)


In 2024, Hu et al. [83] devised an approach to mitigate the resolution limits of conventional optical microscopy and enhance the quality of microscopy images of gold nanoparticles. The architecture is a deep non-local U-Net, which incorporates a non-local denoising module into the popular U-Net model. This integration allows the network to implicitly incorporate self-similarity priors across multiple scales within the U-Net structure and to explicitly exploit non-local self-similarity at a single scale via the non-local denoising module.

A visual representation of this architecture can be seen in Figure 14a. The performance of the method was evaluated using the correlation coefficient between the network outputs and the ground truth across five different multimers, which ranged from roughly 0.5 to 0.8 depending on the number of particles per cluster. The results demonstrate the capability of the model to generate super-resolved images of gold nanoparticle clusters, which supports the study of homogeneity in plasmonic sensors while preserving the integrity of sensing surface molecules. The method also improves the detection and reconstruction of important structural features such as size, shape and spatial arrangement. This creates novel alternatives for deep learning-based optical microscopy super resolution and will benefit different areas, especially bioimaging and nanoscale manufacturing.
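A correlation coefficient between a network output and its ground truth is usually computed as the Pearson correlation over all pixels. The sketch below shows this standard calculation in plain Python; it is assumed here that the Pearson coefficient is meant, the function name is hypothetical and the toy images are not data from Hu et al. [83].

```python
import math


def pearson_correlation(image_a, image_b):
    """Pearson correlation coefficient between two equally sized 2D images."""
    a = [value for row in image_a for value in row]
    b = [value for row in image_b for value in row]
    if len(a) != len(b):
        raise ValueError("images must contain the same number of pixels")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    covariance = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant image")
    return covariance / math.sqrt(var_a * var_b)


# Toy images (hypothetical values): the second is a scaled copy of the first.
prediction = [[1, 2], [3, 4]]
ground_truth = [[2, 4], [6, 8]]
print(pearson_correlation(prediction, ground_truth))
```

Since the coefficient is invariant to linear intensity scaling, it measures structural agreement rather than absolute intensity accuracy.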

![Figure 14:

Hu et al. developed an encoder-decoder network that incorporates a non-local denoising module. a) Model architecture for gold nanoparticle image enhancement for light optical microscopy. b) Input image example. c) Output image example for the given input [83].](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_014.jpg)


In the same year, Chen et al. [84] developed a method to overcome the demands on precision optics and light-source stability that limit the generation of high-contrast illumination stripe patterns in SIM. Their method, called contrast-robust structured illumination microscopy (CR-SIM), follows a classical encoder-decoder pipeline, which has been widely used in other research works, as evidenced in this review. The encoder extracts the most important image features from the raw SIM images, while the decoder refines the extracted features to produce an output of higher quality than the raw SIM images. The encoder consists of 4 downscaling modules, each including two 3×3 convolutions and a 2×2 maximum pooling layer. The decoder incorporates 4 upscaling modules, each containing two 3×3 convolutions designed to further optimize the features and create an output based on the encoder’s processing of the input data. Finally, each 3×3 convolution in the network is followed by a rectified linear unit (ReLU) activation function. The architecture is shown in Figure 15(I).
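To illustrate what the downscaling modules do to the spatial size of the feature maps, the following sketch implements a 2×2 maximum pooling step and tracks the feature-map size through four such modules. It assumes that the 3×3 convolutions are padded so that only the pooling changes the spatial size, and the input size of 256 pixels is a hypothetical choice; neither detail is stated in the text, and the function names are illustrative.

```python
def max_pool_2x2(image):
    """2x2 maximum pooling with stride 2 on a 2D image (list of rows)."""
    rows, cols = len(image), len(image[0])
    return [
        [
            max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


def encoder_sizes(input_size, n_modules=4):
    """Spatial size after each downscaling module (size-preserving convs assumed)."""
    sizes = [input_size]
    for _ in range(n_modules):
        sizes.append(sizes[-1] // 2)
    return sizes


feature_map = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(max_pool_2x2(feature_map))
# Hypothetical 256-pixel input passed through 4 downscaling modules.
print(encoder_sizes(256))
```

Each pooling step halves the width and height, so four modules reduce the spatial size by a factor of 16 before the decoder restores it.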

![Figure 15:

CR-SIM follows the widely used encoder-decoder scheme. (I) CR-SIM method architecture. (II) Quantitative evaluation of the proposed CR-SIM model based on SSIM and PSNR [84].](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_015.jpg)


The results demonstrated the feasibility of using the model on real experimental raw data containing low-contrast illumination stripes: it successfully reconstructed SIM images captured in low-contrast illumination stripe scenes, including polarization-unadapted SIM systems and projection DMD-SIM. The authors evaluated their method on clathrin-coated pits (CCPs) and microtubules (MTs), which are specimens of increasing structural complexity, and compared CR-SIM with other benchmark methods by calculating the peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM) against the ground truth HR images, as can be seen in Figure 15(II). CR-SIM outperformed the benchmark methods on both datasets, providing the highest PSNR and SSIM at varying contrast levels. These results show that CR-SIM enhances the SIM reconstruction process and produces samples with a higher structured illumination contrast, which enables more accurate calculation of phase, frequency and other relevant features within the image. The proposed CR-SIM method benefits fields such as biomedicine and chemistry through its ability to enhance image resolution and quality, enabling accurate and detailed information acquisition for research tasks.

Finally, Song et al. [7] proposed a super resolution SIM reconstruction approach based on a multi-scale neural network called Scale Richardson-Lucy Network (SRLN). This method uses a lightweight, multi-scale network to model the nonlinear relation between the low-resolution images obtained with wide-field microscopy and the super-resolved images generated with structured illumination microscopy. This nonlinear relationship is interpreted through Richardson-Lucy deconvolution, which is used to reduce the network’s reliance on training data and to align the reconstruction process with this iterative method. They evaluated their method by measuring the spatial resolution and comparing its performance with two other state-of-the-art models, ML-SIM and DFCAN. The authors found that this methodology makes it possible to reconstruct a super-resolved image with a 70 nm spatial resolution for different types of biological structures in cells, outperforming the spatial resolutions of the two benchmark models by 45.31 %. They also reported that the SRLN network promotes a dataset-independent process, provides interpretable reconstructions of the LR images and might reduce the reconstruction time by 90 %.
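For readers unfamiliar with the iterative method that SRLN builds on, the following sketch shows the classical Richardson-Lucy update for a 1D signal and a known point-spread function. This is the textbook algorithm, not the SRLN network of Song et al. [7]; the function names, the 3-tap PSF, the zero-padded boundary handling and the choice of the observation as initial estimate are all assumptions made for illustration.

```python
def convolve_same(signal, kernel):
    """1D convolution with zero padding, output the same length as `signal`.

    The kernel length is assumed to be odd.
    """
    half = len(kernel) // 2
    output = []
    for i in range(len(signal)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i + half - k  # index of the signal sample paired with kernel[k]
            if 0 <= j < len(signal):
                acc += weight * signal[j]
        output.append(acc)
    return output


def richardson_lucy(observed, psf, iterations=50, eps=1e-12):
    """Classical Richardson-Lucy deconvolution for non-negative 1D data."""
    psf_mirror = psf[::-1]
    # Start from the observation itself, kept strictly positive.
    estimate = [max(value, eps) for value in observed]
    for _ in range(iterations):
        blurred = convolve_same(estimate, psf)
        ratio = [o / max(b, eps) for o, b in zip(observed, blurred)]
        correction = convolve_same(ratio, psf_mirror)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate


# Toy example: a single point source blurred by a hypothetical 3-tap PSF.
psf = [0.25, 0.5, 0.25]
truth = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
observed = convolve_same(truth, psf)
restored = richardson_lucy(observed, psf, iterations=50)
print([round(value, 3) for value in restored])
```

Each iteration multiplies the current estimate by a correction derived from the ratio of observed to re-blurred data, which progressively concentrates intensity back onto the point source.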

3.1.2 Generative adversarial networks

In 2018, Ouyang et al. [85] developed an approach called ANNA-PALM that uses deep learning to generate super-resolved images from sparse, rapidly acquired localization images and low-resolution widefield microscopy images of microtubules, nuclear pores and mitochondria. Their experiments were carried out with a model comprising 25 convolutional layers and 42 million parameters, with an architecture based on U-Net and GAN. Both experimental and simulated data were used to train and validate the model. To generate the synthetic data, they used Brownian dynamics simulations, obtaining a total of 200 images of semiflexible filaments mimicking microtubules with a resolution close to 23 nm. For the experimental part, they used standard PALM imaging (acquiring long diffraction-limited image sequences) to obtain the images of microtubules, nuclear pores and mitochondria. With their approach, they were able to image more than 1000 fields of view, containing a similar number of cells, within a timeframe of 3 h. The approach produced an image spanning spatial scales from 20 nm to 2 mm. This shows that the method benefits live-cell super-resolution imaging and offers high throughput, making the process both simpler and faster.

In 2020, Corsetti et al. [86] proposed a deep learning-based approach for processing light sheet microscopy (LSM) images of cleared mouse brain, colorectal tissues and breast tissues. Their approach begins with a deconvolution step using a pre-calculated two-dimensional point-spread function (PSF). The super resolution step is then performed by a GAN architecture originally presented by Wang et al. [13], which they found to be suitable for the LSM system under both Airy and Gaussian illumination. To assess the network’s performance in resolving objects of known size and shape, they measured the FWHM of vertical line profiles: 5.4 µm for the low-resolution image, 4.6 µm for the high-resolution image and 4.7 µm for the network output. Similarly, for horizontal line profiles, the FWHM was 5.8 µm for the low-resolution image, 4.4 µm for the high-resolution image and 4.8 µm for the network output. These metrics illustrate what can be achieved with this approach. For instance, when applied to plaques in cleared mouse brain, the deep learning algorithm yields a sharper image with superior quality and considerably improved contrast, which opens opportunities for the study of Alzheimer’s disease, as it facilitates the analysis of several brain regions, such as the subiculum, the lateral septum and the neocortex. For colorectal studies, the method improves the contrast with respect to images acquired with the Gaussian beam and allows single nuclei to be seen even at a depth of 82 µm. This makes it possible to track structures in three dimensions under the epithelial surface and may offer vital insights into the direction and extent of cancer cell invasion. Likewise, for the breast tissue data, the method makes it possible to follow features, uneven topography and heterogeneity in breast cancer studies.
These results demonstrate that the deep learning method reconstructs images that closely match their high-resolution counterparts. The network output corresponded closely to the expected bead size of 4.8 µm, illustrating its effectiveness in light sheet microscopy in achieving a two-fold increase in the dynamic range of the imaged length scale.

Additional research on the topic was carried out by Qiao et al. [87], who used deep learning to improve the quality of 3D structured illumination microscopy images of microtubules and lysosomes. The architecture consists of a channel attention generative adversarial network (caGAN) that combines a pixel-wise loss, such as MAE or MSE, with a discriminative loss. This combination yields better performance, particularly when inferring intricate or fine structures of biological specimens. The extracted shallow features are passed through 16 cascaded residual channel attention blocks (RCAB), which have two Conv-LReLU modules and a channel attention module. The output of the final RCAB is processed by an upsampling layer that increases the spatial dimensions (X and Y) of the feature channels using bilinear interpolation. Finally, two Conv-LReLU modules merge the feature channels into one super-resolved image volume. To evaluate the performance of the caGAN-SIM model, the authors used the normalized root mean square error (NRMSE) to assess the quality of the output image volumes of biological specimens (microtubules, lysosomes and mitochondria). Based on the results, they concluded that conventional 3D-SIM produces reconstructions consistent with GT-SIM at relatively high SNR, but that its performance degrades under conditions that yield a lower SNR in microtubule and lysosome images. Specifically, conventional 3D-SIM achieved NRMSE values of 0.04 % for microtubules and 0.029 % for lysosomes, whereas caGAN-SIM outperformed it with NRMSE values of 0.025 % for microtubules and 0.022 % for lysosomes.
This demonstrates the superior performance of caGAN-SIM under challenging low-SNR conditions. Its primary advantage is that it allows 3D-SIM to be used for fast, continuous live imaging, because it enables a 7.5-fold reduction in the number of raw images acquired and a reduction of the overall photon budget by more than 15-fold without appreciably compromising reconstruction quality. This offers new opportunities for biological research and biology-related microscopy tasks at the cellular scale.
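The NRMSE used in this evaluation can be defined in several ways that differ in the normalization term. The sketch below uses one common convention, dividing the root mean square error by the intensity range of the ground truth. This convention is an assumption made for illustration and may differ from the exact normalization used by Qiao et al. [87]; the function name and toy values are hypothetical.

```python
import math


def nrmse(ground_truth, estimate):
    """Root mean square error normalized by the ground-truth intensity range."""
    gt = [value for row in ground_truth for value in row]
    est = [value for row in estimate for value in row]
    if len(gt) != len(est):
        raise ValueError("images must contain the same number of pixels")
    value_range = max(gt) - min(gt)
    if value_range == 0:
        raise ValueError("ground truth must not be constant")
    rmse = math.sqrt(sum((g - e) ** 2 for g, e in zip(gt, est)) / len(gt))
    return rmse / value_range


# Toy images (hypothetical values, not data from the paper).
reference = [[0, 10], [20, 30]]
prediction = [[3, 10], [20, 27]]
print(round(nrmse(reference, prediction), 4))
```

Lower values indicate a closer match to the ground truth, and a value of zero is obtained only for a perfect reconstruction.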

In 2021, Qiao et al. [5] further advanced deep learning super resolution (DLSR) imaging by developing two architectures: the deep Fourier channel attention network (DFCAN) and its GAN-based derivative (DFGAN). They trained their models with low-resolution inputs of clathrin-coated pits (CCPs), endoplasmic reticulum (ER), microtubules (MTs) and F-actin filaments obtained through widefield microscopy, paired with high-resolution ground truth images acquired via structured illumination microscopy (SIM). The DFCAN architecture begins with a convolutional layer and a Gaussian error linear unit (GELU) activation function. The output of the GELU function then feeds the residual groups, which consist of four Fourier channel attention blocks (FCAB). The DFGAN is based on a conditional generative adversarial network (cGAN) and uses a LeakyReLU activation function. The cGAN model has two parts: a generator model, which performs the image transformation, and a discriminator model, which distinguishes whether an image comes from the training data or was produced by the generator model. Their findings showed that this deep learning approach exploits the characteristics of the power spectrum of distinct feature maps in the Fourier domain and leverages the difference in frequency content across features to adaptively rescale their weightings when propagating them through the network. This strategy enables the network to learn precise hierarchical mappings from low-resolution to high-resolution images. However, despite their good results, they noted that, regardless of the DLSR model and its ability to leverage a large amount of well-registered data to learn a good statistical transformation, it is theoretically impossible for network inference to recover ground truth images in every detail.
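The interplay between generator and discriminator described above can be made concrete with the standard binary cross-entropy formulation of the adversarial objective. The sketch below is a generic illustration and not the DFGAN training code; the function names are hypothetical, and the inputs are assumed to be discriminator outputs interpreted as probabilities that an image is real.

```python
import math


def binary_cross_entropy(probability, label, eps=1e-12):
    """Cross-entropy between a predicted probability and a 0/1 label."""
    p = min(max(probability, eps), 1.0 - eps)  # avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def discriminator_loss(scores_real, scores_fake):
    """The discriminator is rewarded for scoring real images 1 and generated images 0."""
    loss_real = sum(binary_cross_entropy(s, 1) for s in scores_real) / len(scores_real)
    loss_fake = sum(binary_cross_entropy(s, 0) for s in scores_fake) / len(scores_fake)
    return loss_real + loss_fake


def generator_adversarial_loss(scores_fake):
    """The generator is rewarded when its outputs are scored as real."""
    return sum(binary_cross_entropy(s, 1) for s in scores_fake) / len(scores_fake)


# Hypothetical discriminator outputs for a small batch.
print(round(discriminator_loss([0.9, 0.8], [0.2, 0.1]), 4))
print(round(generator_adversarial_loss([0.2, 0.1]), 4))
```

In GAN-based SR models, this adversarial term is typically combined with a pixel-wise loss so that the generator stays faithful to the ground truth while producing realistic texture.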

Deep learning SR techniques are often used as a complementary quality enhancement in fluorescence microscopy imaging, including variants such as volumetric fluorescence microscopy, as reported by Park et al. [88]. In 2019, Zhang et al. [89] developed an approach called RFGANM, in which they implemented GAN models as a complement to light optical microscopy and used different sources of biological data for training and evaluation. They used bovine pulmonary artery endothelial cells (BPAEC), healthy human prostate tissue and human prostate cancer tissue as the benchmark dataset for the method. The images were acquired with dual-color fluorescence microscopy and bright-field microscopy, respectively. The model begins with a convolutional layer with a kernel size of 3 followed by a ReLU activation function. The output then passes through 16 residual blocks with identical layout. Each residual block contains two convolutional layers with small 3×3 kernels and 64 feature maps to extract the most important characteristics of the images, followed by batch normalization layers and a ReLU activation function. Finally, to increase the resolution of the image, they used two sub-pixel convolution layers as proposed by Shi et al. [56]. The architecture is depicted in Figure 16.
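The core of a sub-pixel convolution layer is a rearrangement step, often called pixel shuffle, that turns r² low-resolution feature channels into one channel that is r times larger in each spatial dimension. The sketch below shows only this rearrangement in plain Python, using the channel ordering commonly adopted by deep learning frameworks. The function name and toy input are hypothetical, and the preceding convolution that produces the r² channels is omitted.

```python
def pixel_shuffle(channels, r):
    """Rearrange C*r*r channels of size H x W into C channels of size rH x rW.

    `channels` is a list of 2D feature maps (lists of rows). Output pixel
    (h*r + i, w*r + j) of channel c is taken from input channel
    c*r*r + i*r + j at position (h, w).
    """
    n_in = len(channels)
    if n_in % (r * r) != 0:
        raise ValueError("number of channels must be divisible by r*r")
    height, width = len(channels[0]), len(channels[0][0])
    n_out = n_in // (r * r)
    output = [
        [[0] * (width * r) for _ in range(height * r)] for _ in range(n_out)
    ]
    for c in range(n_out):
        for i in range(r):
            for j in range(r):
                source = channels[c * r * r + i * r + j]
                for h in range(height):
                    for w in range(width):
                        output[c][h * r + i][w * r + j] = source[h][w]
    return output


# Four 1x2 feature maps become one 2x4 map for an upscaling factor of 2.
features = [[[1, 5]], [[2, 6]], [[3, 7]], [[4, 8]]]
print(pixel_shuffle(features, 2))
```

Stacking two such layers with r = 2 each would yield a four-fold upscaling, although the scale factor per layer used in RFGANM is not stated in the text above.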

![Figure 16](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_016.jpg)

Figure 16: The GAN-based RFGANM approach. (I) Schematic of the proposed RFGANM model architecture. (II) Qualitative evaluation of the trained model based on BPAE cells (cropped from the original figure) [89].
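The rearrangement step at the heart of sub-pixel convolution can be sketched in a few lines of Python. The sketch below only shows the periodic shuffling of channels into space; the convolution that produces the `C*r*r` input channels is left out, and the function name `pixel_shuffle` is chosen here for illustration rather than taken from [89] or [56].

```python
def pixel_shuffle(channels, r):
    """Rearrange C*r*r feature maps of size H x W into C maps of size rH x rW."""
    c_in = len(channels)
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    h, w = len(channels[0]), len(channels[0][0])
    c_out = c_in // (r * r)
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for y in range(h * r):
            for x in range(w * r):
                # Each output pixel is read from the channel selected by its
                # sub-pixel offset (y % r, x % r) at the coarse position.
                src = c * r * r + (y % r) * r + (x % r)
                out[c][y][x] = channels[src][y // r][x // r]
    return out


# Usage: four 1x1 channels become a single 2x2 map.
low_res_channels = [[[1]], [[2]], [[3]], [[4]]]
high_res = pixel_shuffle(low_res_channels, 2)  # [[[1, 2], [3, 4]]]
```

If each of the two layers upsamples by a factor of 2 (an assumption here), applying them in sequence gives an overall factor of 4.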

To improve the performance of the generator, they used a perceptual loss function, first introduced by Ledig et al. [34], which is the weighted sum of the MSE loss, the feature reconstruction loss and the adversarial loss, as shown in Equation (2).

Loss function of the RFGANM model.

where $G_{\theta_G}$ is the generator parameterized by $\theta_G$,
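The weighted-sum structure of such a generator loss can be sketched as follows. This is a generic illustration, not the exact loss of [89]: the weights `w_feat` and `w_adv` are hypothetical placeholders, the feature vectors stand in for the activations of some fixed feature extractor, and the adversarial term uses the common negative log-probability form as an assumption.

```python
import math


def mse(a, b):
    """Mean squared error between two equally sized flat sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def generator_loss(sr, hr, feat_sr, feat_hr, d_prob_sr, w_feat=1.0, w_adv=1.0):
    """Weighted sum of pixel MSE, feature reconstruction loss and adversarial loss.

    w_feat and w_adv are HYPOTHETICAL weights, not values from the paper.
    d_prob_sr is the discriminator's probability that `sr` is a real HR image.
    """
    pixel_term = mse(sr, hr)                # pixel-wise MSE loss
    feature_term = mse(feat_sr, feat_hr)    # feature reconstruction loss
    adversarial_term = -math.log(d_prob_sr)  # small when the discriminator is fooled
    return pixel_term + w_feat * feature_term + w_adv * adversarial_term


# Usage with toy numbers: a discriminator that is fully fooled adds no penalty.
loss = generator_loss([0, 0], [1, 1], [0], [2], d_prob_sr=1.0, w_feat=0.5)
```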

In the same year, Wang et al. [13] presented a GAN-based approach that aimed to transform diffraction-limited input images into super-resolved ones. The model was trained with images of endocytic clathrin-coated structures in SUM159 cells and Drosophila embryos; these datasets were acquired with stimulated emission depletion (STED), wide-field fluorescence, confocal, and total internal reflection fluorescence (TIRF) microscopy. The model architecture is a generative network that consists of four downsampling blocks and four upsampling blocks. The downsampling blocks contain three residual convolutional blocks, in which the input is zero-padded and added to the output of the same block; spatial downsampling is achieved by an average pooling layer. A convolutional layer at the bottom of the U-shaped structure links the downsampling and upsampling blocks. Finally, the last layer is another convolutional layer that maps the 32 channels into a single channel, corresponding to a monochrome grayscale image. The authors showed that their network generated high-quality images that matched those acquired with STED and TIRF, in some cases with sharper details than the ground truth images, especially for the F-actin structures. They also achieved a larger field of view, resulting from the low-numerical-aperture input image, and an increased depth of field, revealing finer features that could be out of focus in different color channels with a higher-numerical-aperture objective. They quantified this by measuring the full width at half maximum (FWHM), which went from 290 nm for the network input to 110 nm for the network output. This result showcases the improvement in resolution of images acquired with low-numerical-aperture objectives and the ability of the model to match the resolution of images acquired with high-numerical-aperture objectives. This is beneficial in biology-related tasks and other areas where light microscopy is used.
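As an illustration of how a full width at half maximum can be read off an intensity line profile, the following Python sketch locates the two half-maximum crossings by linear interpolation. It assumes a single peak on a zero baseline and is not the measurement procedure of [13]; the function name and the sample profiles are made up for the example.

```python
import math


def fwhm(positions, intensities):
    """Full width at half maximum of a single-peaked profile on a zero baseline."""
    peak = max(intensities)
    half = peak / 2.0
    i_peak = intensities.index(peak)

    def crossing(step):
        # Walk away from the peak until the next sample is at or below half.
        i = i_peak
        while 0 <= i + step < len(intensities) and intensities[i + step] > half:
            i += step
        j = i + step
        if not 0 <= j < len(intensities):
            raise ValueError("profile does not fall below half maximum")
        # Linear interpolation between sample i (above half) and j (at or below).
        t = (intensities[i] - half) / (intensities[i] - intensities[j])
        return positions[i] + t * (positions[j] - positions[i])

    return crossing(+1) - crossing(-1)


# Usage: a Gaussian profile with sigma = 50 nm sampled every 1 nm.
xs = list(range(-200, 201))
profile = [math.exp(-x * x / (2.0 * 50.0 ** 2)) for x in xs]
width_nm = fwhm(xs, profile)  # close to 2*sqrt(2*ln 2)*50, about 117.7 nm
```

With this definition, going from a FWHM of 290 nm to 110 nm corresponds to a narrowing by a factor of about 2.6.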

Huang et al. [14] developed a two-channel attention network (TCAN), trained with nano-bead and nucleus data generated via confocal fluorescence microscopy. The architecture is based on U-Net and the deep Fourier channel attention network (DFCAN), combined with a conditional generative adversarial network (cGAN) that has a generator and a discriminator model. The input image first goes through a convolutional block; the outputs of the U-Net and the DFCAN are then summed and passed through another convolutional block to form the network output. This architecture is illustrated in Figure 17.

![Figure 17](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_017.jpg)

Figure 17: Schematic of the proposed network architecture of TCAN. (a) visualizing the TCAN generator and (b) the discriminator of the cGAN approach [14].
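The two-branch data flow of the generator can be written down as a short Python sketch. All four callables are hypothetical placeholders for trained sub-networks, and feeding both branches from the output of the first convolutional block is an assumption based on the description of [14], not a confirmed implementation detail.

```python
def add_maps(a, b):
    """Element-wise sum of two equally sized 2D maps."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def tcan_generator(image, conv_in, unet_branch, dfcan_branch, conv_out):
    """Input block -> two parallel branches -> sum -> output block."""
    shared = conv_in(image)  # first convolutional block
    fused = add_maps(unet_branch(shared), dfcan_branch(shared))  # summed branches
    return conv_out(fused)   # final convolutional block


# Usage with identity placeholders, just to show the wiring.
identity = lambda m: m
output = tcan_generator([[1, 2], [3, 4]], identity, identity, identity, identity)
```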

The U-Net has four downsampling and four upsampling blocks and contains two convolutional layers, the last of which maps 32 channels into a single channel, corresponding to a monochrome grayscale high-resolution image. The DFCAN module is the part of the generator in charge of improving the ability to learn features in the frequency domain. It is composed of a convolutional layer that generates the feature maps, followed by a Gaussian error linear unit (GELU) function that helps the network learn these features. The output goes to five residual groups, each containing two Fourier channel attention blocks and a skip connection. Finally, the last residual group is followed by a convolutional layer, and nearest-neighbor interpolation is used again, this time to upscale the image to the same size as the ground truth.
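Nearest-neighbor interpolation, as used for the final upscaling step, simply repeats each pixel. A minimal Python sketch for integer factors follows; the function name and the factor of 2 are chosen for illustration only.

```python
def upscale_nearest(image, factor):
    """Upscale a 2D image (list of rows) by an integer factor using nearest neighbors."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    height, width = len(image), len(image[0])
    # Every output pixel copies the input pixel it falls into.
    return [[image[y // factor][x // factor] for x in range(width * factor)]
            for y in range(height * factor)]


# Usage: a 2x2 image becomes 4x4, each pixel repeated in a 2x2 block.
upscaled = upscale_nearest([[1, 2], [3, 4]], 2)
```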

They evaluated the performance of the model on images of nano-beads, nuclei and microtubules by measuring the FWHM of the PSF and the image quality estimated by the SNR. The FWHM of the confocal microscope PSF was 239 ± 25 nm, whereas the PSF of the network output reached 58 ± 1 nm, better even than the STED system at 83 ± 9 nm. The SNR values of the network output for nano-beads, nuclei and microtubules were 120 %, 233.33 % and 13.54 %, respectively, a clear gain over the confocal input. These results indicate that the approach succeeds in transforming low-resolution confocal images into super-resolved ones, drawing on both spatial and frequency-domain representations to carry out the mapping from low-resolution to high-resolution images accurately. They pave the way for using this model to assist the investigation of the dynamic instability of live-cell microtubules by capturing long-term time-lapse images, and offer the opportunity to carry out live-cell imaging with reduced photobleaching and phototoxicity.

3.1.3 Transformers

Zu et al. [4] proposed a classification framework with an image super resolution reconstruction network called SwinT-SRNet, shown in Figure 18(I). The method is based on the efficient super-resolution transformer (ESRT), whose main objective is to produce a high-quality image without the blurring that occurs when a low-resolution image is resized to fit the input shape of the SwinT model. The approach was trained and evaluated on a dataset of pollen images obtained with a bright-field microscope. The framework for pollen image classification consists of SwinT-SRNet, which performs the image resizing and super resolution task; a high-frequency (HF) module, which extracts the most important frequency-related features of the pollen images; and the Swin transformer model, which performs the pollen image classification. Special attention is placed on SwinT-SRNet, as it carries out the reconstruction to higher quality. The SR model is a CNN+Transformer architecture with four main blocks. First, the data passes through the extraction layer, where the shallow features of the LR input image are acquired by means of a convolutional layer. The data then passes through the LBC module, which dynamically adjusts the size of the feature map and extracts deep features using High Preserving Blocks (HPBs) [90]. The output is then fed to the LTB module, which captures long-term dependencies between similar patches using the Efficient Transformer (ET) and the Efficient Multi-Head Attention (EMHA) mechanism [90]. Finally, the outputs of the LTB module and of the extraction layer are fed to the reconstruction layer to generate the SR version of the image.
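How attention relates similar patches to one another can be illustrated with plain scaled dot-product attention. The Python sketch below is deliberately not the EMHA mechanism of [90], which adds further efficiency measures; it omits learned projections and multiple heads, and the patch embeddings are toy values.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over a list of patch embeddings."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query patch to every key patch.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the similarity-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Usage: two identical patches and one different patch (self-attention).
patches = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
outputs, attn = attention(patches, patches, patches)
```

In this toy case the first patch attends equally to itself and to its identical neighbor and less to the dissimilar third patch, regardless of where the patches sit in the sequence.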

They found that fusing the ESRT and HF modules into SwinT improves the reproduction of high-quality images, increasing the F1-score of the SwinT model by 0.007 and the accuracy by 0.63 %. After training and testing, SwinT-SRNet reached accuracies of 99.46 % and 98.98 % on the POLLEN8BJ and POLLEN20L-det datasets, respectively, which are 1.05 % and 1.19 % higher than the conventional SwinT. These results show that the approach can accurately classify pollen images and identify their relevant features, which benefits the effective monitoring and forecasting of airborne allergenic pollen. This in turn can improve the health and quality of life of citizens, as in certain areas pollen allergy can become a seasonal epidemic disease with a high incidence rate.
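For reference, the classification metrics named here can be computed from confusion-matrix counts as in the following Python sketch. It covers the binary case only; for a multi-class pollen dataset the per-class values would typically be averaged, and how [4] averaged them is not stated here. The counts in the usage example are invented.

```python
def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, specificity, F1-score and accuracy from binary counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
        "accuracy": accuracy,
    }


# Usage with made-up counts.
metrics = classification_metrics(tp=90, fp=10, fn=10, tn=90)
```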

3.1.4 Hybrid architectures

In 2022, Zhang et al. [91] proposed a deep learning-based method for structured illumination microscopy aimed at reconstructing SR images using only a single frame of SIM data. Their architecture combines five GANs with a modified U-Net, which they referred to as a “deformation” DU-Net. The five GANs generate raw SIM images with three phases in two perpendicular directions. These generated images, along with the original raw SIM image, are fed into the DU-Net. The DU-Net consists of six encoder channels and one decoder channel, where each encoder extracts feature information from the respective raw image, and the decoder integrates all features to generate the final SR image. A complete description of the architecture is shown in Figure 19.

![Figure 19](/document/doi/10.1515/mim-2024-0032/asset/graphic/j_mim-2024-0032_fig_019.jpg)

Figure 19: Schematic overview of the proposed deep learning-based single-shot structured illumination microscopy architecture, which illustrates the interaction between the five GAN architectures and the individual encoders and the single decoder of the DU-Net to generate the final SR image [91].
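The data flow of this single-shot pipeline (one acquired frame, five generated frames, six encoders, one decoder) can be sketched in Python as follows. The callables are hypothetical stand-ins for the trained networks, and the assumption that each GAN takes the acquired frame as its input is made here for illustration and is not confirmed by the description above.

```python
def single_shot_sim(raw_frame, generators, encoders, decoder):
    """1 acquired frame + 5 generated frames -> 6 encoders -> 1 decoder."""
    if len(generators) != 5 or len(encoders) != 6:
        raise ValueError("expected five generators and six encoders")
    # Three phases in two directions give six raw frames in total.
    frames = [raw_frame] + [g(raw_frame) for g in generators]
    # Each encoder processes exactly one raw frame.
    features = [enc(frame) for enc, frame in zip(encoders, frames)]
    # The single decoder integrates all six feature sets.
    return decoder(features)


# Usage with numeric placeholders instead of images and networks.
toy_generators = [lambda f, k=k: f + k for k in range(1, 6)]
toy_encoders = [lambda f: 2 * f] * 6
result = single_shot_sim(1, toy_generators, toy_encoders, sum)
```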

To train and validate their method, the authors used simulated data consisting of random binary images of points, lines and curves, superposed with illumination patterns and convolved with the point spread function (PSF), and compared the performance with an alternative super-resolution algorithm called OpenSIM. For a single signal point, the reported FWHM of the wide-field image was 423 nm, given a wavelength of 550 nm and a wide-field resolution of 420 nm. For the SR version of the same signal point, the FWHM values were 211 nm (OpenSIM 6), 213 nm (OpenSIM 1), and 213 nm (DU-Net), reported as a 50.35 % improvement of the proposed method with respect to the LR image (213 nm is 50.35 % of 423 nm). They also tested their model in a high-throughput gene sequencing scenario, where the imaging speed and the number of DNA nanoballs (DNBs) that can be detected by fluorescence microscopy within a given field of view define the throughput of the sequencing method. For this, they scanned the whole DNB array, imaging each field of view with an OpenSIM reconstruction using six exposures as well as a single-exposure reconstruction using their proposed method. This evaluation was carried out on the four bases A, C, G and T and concluded that the resolution produced by DU-Net improved by 68.27 %, 64.47 %, 60.92 % and 59.85 %, respectively, in comparison to the wide-field reference image. Their method also produced results of very similar quality to the OpenSIM reconstruction (SSIM of 0.82–0.92) while requiring only 1/6 of the imaging time. These results indicate that the method extracts the important features effectively and improves the SR reconstruction of the raw input images, enabling high-throughput gene sequencing by balancing spatial resolution and imaging speed appropriately.
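The relation between the two FWHM values quoted for the simulated signal point can be checked with a few lines of Python. The sketch reports both the ratio of the SR to the LR width and the relative reduction, since the two are easily confused; the function name is chosen for illustration.

```python
def fwhm_ratio_and_reduction(fwhm_lr_nm, fwhm_sr_nm):
    """Return (SR width as % of LR width, relative reduction in %), rounded to 2 digits."""
    ratio = 100.0 * fwhm_sr_nm / fwhm_lr_nm
    reduction = 100.0 - ratio
    return round(ratio, 2), round(reduction, 2)


# Usage with the wide-field (423 nm) and DU-Net (213 nm) values from the text.
ratio_pct, reduction_pct = fwhm_ratio_and_reduction(423, 213)
```

For these inputs the ratio is 50.35 % and the reduction is 49.65 %, so the quoted 50.35 % coincides with the ratio of the two widths.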

3.1.5 Tabular summary for light optical microscopy

Table 1 summarizes the literature sources together with the performance metrics, loss functions, microscopy methods, training data, and LR and HR image specifications used.

Table 1: Deep learning-based methods in super resolution: light optical microscopy comparison.

| Methods | Performance metric | Loss function | Microscopy method | Training data | LR input (image or pixel size) | HR output (image or pixel size) |
|---|---|---|---|---|---|---|
| Ouyang et al., ANNA-PALM [85] | MS-SSIM | MAE+MS-SSIM and MSE | Wide-field | Real+synthetic | Not specified | 2560×2560 px |
| Corsetti et al. [86] | FWHM | Adversarial loss regularized by MSE and structure-similarity estimators | Wide-field | Real | 2 µm/px | 0.5 µm/px |
| Qiao et al. [87] | SNR, SSIM | Customized version of MAE, MSE | SIM | Real | 64×64 px | 128×128 px |
| Qiao et al., DFCAN [5] | NRMSE, SSIM | MSE | WF and SIM | Real | 128×128 px | 256×256 px |
| Zhang et al., RFGANM [89] | PSNR and SSIM | MSE, feature reconstruction loss+adversarial loss | LSFM | Real | 100×100 px | 400×400 px |
| Wang et al. [13] | SSIM and SNR | MSE | STED, wide-field fluorescence, and TIRF | Real | 64×64 px | 1024×1024 px |
| Huang et al., TCAN [14] | SNR and resolution quality | MSE, binary cross-entropy (BCE), SSIM | STED, confocal microscopy | Real | 256×256 px | 512×512 px |
| Nehme et al., Deep-STORM [12] | NMSE and SNR | MAE and MSE | SMLM | Real+synthetic | 26×26 px | 208×208 px |
| Vizcaíno et al., LFMNet [78] | SSIM and SNR | MSE | Confocal microscopy | Real+synthetic | 112×112 px | 1287×1287 px |
| Christensen et al. [79] | PSNR, SSIM | Not specified | SIM | Real+synthetic | Not specified | Not specified |
| Shah et al., RED-Net [6] | PSNR, SSIM | MSE | SIM | Real | 512×512 px | 1024×1024 px |
| Wang et al., dpcCARTs-Net [81] | FWHM, SNR | MSE, MAE, SSIM | Wide-field | Real | 64×64 px | Not specified |
| Kagan et al. [10] | PSNR | MSE | TIRF | Real | 512×512 px | 2048×2048 px |
| Lu et al., VsLFM [82] | SNR, cut-off frequency, SSIM | MAE | Light-field | Real+synthetic | Variable | Variable |
| Zheng et al. [11] | FSC, Crowther criterion | MAE | XRF | Real | 140×140 px | 560×560 px |
| Hu et al. [83] | Correlation coefficient R | MAE | Dark-field | Real | 96×96 px | 400×400 px |
| Chen et al., CR-SIM [84] | PSNR, SSIM | Not specified | SIM | Real+synthetic | 65 nm | Not specified |
| Song et al., SRLN [7] | PSNR, SSIM | MAE | SIM | Real | 128×128 px | 256×256 px |
| Zhang et al., DL-based single-shot SIM [91] | FWHM, SSIM | RMSE | SIM | Synthetic | 256×256 px | Not specified |
| Zu et al., SwinT-SRNet [4] | Precision, recall, specificity, F1-score, accuracy | MAE | Bright-field | Real | Variable | 416×416 px |

3.2 Electron microscopy