Abstract

Background

The cognitive pathways that lead to an accurate diagnosis and efficient management plan can touch on various clinical reasoning tasks (1). These tasks can be employed at any point during the clinical reasoning process and though the four distinct categories of framing, diagnosis, management, and reflection provide some insight into how these tasks map onto clinical reasoning, much is still unknown about the task-based clinical reasoning process. For example, when and how are these tasks typically used? And more importantly, do these clinical reasoning task processes evolve when patient encounters become complex and/or challenging (i.e. with contextual factors)?

Methods

We examine these questions through the lens of situated cognition, context specificity, and cognitive load theory. Sixty think-aloud transcripts from 30 physicians who participated in two separate cases – one with a contextual factor and one without – were coded for 26 clinical reasoning tasks (1). These tasks were organized temporally, i.e. by when they emerged in each think-aloud. Frequencies of each of the 26 tasks were aggregated, categorized, and visualized in order to analyze task category sequences.

Results

We found that (a) as expected, clinical tasks follow a general sequence, (b) contextual factors can distort this emerging sequence, and (c) the presence of contextual factors prompts more experienced physicians to reason clinically in a manner similar to that of less experienced physicians.

Conclusions

These findings add to the existing literature on context specificity in clinical reasoning and can be used to strengthen teaching and assessment of clinical reasoning.

Introduction

Background

Clinical reasoning is a complex phenomenon that is associated with taking a history, performing a physical exam, ordering and interpreting laboratory and/or radiographic tests (at times) as well as designing a management plan that is appropriate for a patient’s circumstances and preferences [1], [2], [3]. It comprises processes that allow the clinician to properly diagnose and manage an illness, requiring wisdom, insight, and experience [4]. Deconstructing this inherent complexity somewhat, Juma and Goldszmidt delineated 26 tasks that physicians may implement when encountering patients [1]. The first three tasks (1–3) focus on framing the patient encounter, the next eight tasks (4–11) emphasize the diagnostic process, the following 13 tasks (12–24) address the management of care, and the remaining two tasks (25 and 26) entail self-reflection. Through these stages of framing, diagnosis, management, and reflection, they identified that physicians assess priorities, differentiate the most likely diagnosis, and establish management plans [1]. Similarly, McBee and colleagues studied diagnostic and therapeutic reasoning within the aforementioned framework using carefully constructed videotapes [5]. McBee et al. highlight the role of context and expert performance in driving variability across clinical encounters and how physicians employ a variety of clinical reasoning tasks [5]. Additionally, their study findings suggested that resident physicians used tasks in a varied and non-sequential manner [5]. Both of these sets of authors speculate about patterns that might emerge in these tasks to help us better understand the process of clinical reasoning; in this study, we begin this process.

Situated cognition and context specificity

Our investigation was informed by situated cognition [6]. Situated cognition is grounded in the notion that thinking (in this case clinical reasoning) is situated (or located) in experience, dividing the clinical experience into physician, patient, and environmental facets (factors) and their interactions [7]. Clinical reasoning is believed to emerge from these various interactions and thus, modification of or differences in these situation-specific elements would be expected to impact clinical reasoning. As such, there may be more than one path to diagnosis and this non-linearity can impact a physician’s diagnostic and management decisions.

Evidence for this situated cognition approach includes the finding of context specificity [8]. Context specificity is the phenomenon whereby a physician arrives at two different diagnoses for two patients with the same symptoms, findings, and underlying diagnosis, due to the patients’ differing situations. Durning et al. explored the interactions among the physician, patient, and environment in their study of contextual factors (alterations of context such as a patient suggesting a diagnosis, the electronic medical record improperly functioning, or the physician being sleep deprived – patient, environment, and physician contextual factors, respectively) and how they impact diagnostic reasoning of board-certified internists [7]. They found that experts’ performance was impacted by contextual factors and this observed impact was consistent with the tenets of situated cognition and cognitive load theories. Cognitive load theory pertains to our limited cognitive architecture and how we can only hold or process a limited number of pieces of information in our short-term (or working) memory [9]. High cognitive load is believed to be a potential mechanism underpinning context specificity [10].

Here, we investigate the presence of emerging patterns – both sequential (from case beginning to end across all participants) and comparative (comparing the aforementioned sequences in cases with and without contextual factors) – that we believe could yield valuable insights into context specificity and situated cognition. This investigation can reveal how clinical reasoning tasks unfold for physicians and how contextual factors may affect this process. First, we sought to determine if a discernable pattern emerges in physicians’ clinical reasoning. Second, we explored whether specific contextual factors influenced the observed patterns. And finally, we sought to delineate whether these situation-specific (contextual factors present or not) patterns provide additional evidence of the impact of contextual factors on clinical reasoning performance.

Building upon the work of McBee et al. [5], we provide a visual, sequential map that elucidates the patterns extant in clinical reasoning tasks and how they might be altered by contextual factors. Adding to earlier work on patterns in clinical reasoning [11], we examine the clinical reasoning process, or the tasks that physicians complete during a patient encounter. This process-oriented, sequence-based approach expands on previous work that examined the prevalence of epistemic activities in clinical reasoning [12]. Consistent with situated cognition, we hypothesize that this is not a linear process but rather one that is emergent, as clinical reasoning is iterative in nature. More specifically, we predicted that the sequence of task categories would unfold in a way similar to a typical clinical encounter. In other words, broadly, the clinical reasoning process begins with taking a patient’s history (framing), followed by performing a physical and generating a diagnosis (diagnosis), developing a management plan (management), and finally, reflecting on the overall reasoning process (reflection). Within this broad pattern, we predicted smaller iterative cycles of some of these task types. Such findings could help inform our understanding of clinical reasoning and context specificity and provide additional evidence for situated cognition as an appropriate theory for exploring clinical reasoning. Exploring seemingly varied and non-sequential patterns through the lens of context specificity may not only build on what script theory predicts but also allow us to better understand how clinical reasoning unfolds as contexts shift. Moreover, the emergence of a lucid pattern may provide a useful framework for teaching and assessing clinical reasoning processes.

Materials and methods

Sequence visualization of task categories

The sample included for this analysis was derived from a larger study focused on clinical reasoning performance [13]. Physicians either viewed a videotape or participated in a standardized patient encounter and then they completed a post-encounter form (PEF) [14]. Immediately following PEF completion, they were asked to think aloud about how they reached their diagnosis and treatment plan while either re-watching the videotape or watching a video of the standardized patient encounter. Physicians viewed two videotapes or participated in two standardized patient encounters. In short, 60 think-aloud transcripts from 30 physicians who participated in two separate cases – one with a contextual factor and one without – were coded for 26 clinical reasoning tasks [1]. Contrary to earlier work [15], rather than focusing solely on initial diagnostic hypotheses, we introduced contextual factors as distractors throughout the clinical reasoning process. For the purpose of this paper, we refer to the organic order in which tasks were demonstrated during a think-aloud as “steps”. For example, a participant may exhibit task 3 as their first step, task 8 as their fourth step, and task 24 as their final step and may repeat some tasks multiple times.

Frequencies of each of the 26 tasks were aggregated and categorized by framing the encounter (tasks 1–3), diagnosis (tasks 4–11), management (tasks 12–24), and reflection (tasks 25 and 26). These categorical counts were visualized using stacked area charting via Microsoft Excel to better understand the emerging, sequential nature of clinical reasoning tasks. Additionally, based on the frequencies, percentages were also calculated in order to numerically visualize the task category sequences.
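The aggregation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' actual workflow (the study used Microsoft Excel): the function names and the toy think-aloud sequences are hypothetical, and only the task-to-category ranges (1–3, 4–11, 12–24, 25–26) come from the text.

```python
from collections import Counter

CATEGORIES = ("framing", "diagnosis", "management", "reflection")


def task_category(task: int) -> str:
    """Map a task number (1-26) to its category, using the ranges given in the text."""
    if 1 <= task <= 3:
        return "framing"
    if 4 <= task <= 11:
        return "diagnosis"
    if 12 <= task <= 24:
        return "management"
    if 25 <= task <= 26:
        return "reflection"
    raise ValueError(f"task number out of range: {task}")


def category_counts_by_step(think_alouds):
    """Return one Counter per step, counting task categories across think-alouds."""
    n_steps = max((len(seq) for seq in think_alouds), default=0)
    counts = [Counter() for _ in range(n_steps)]
    for seq in think_alouds:
        for step, task in enumerate(seq):
            counts[step][task_category(task)] += 1
    return counts


def category_percentages(counts):
    """Convert per-step counts to percentages of the think-alouds still active at each step."""
    rows = []
    for c in counts:
        total = sum(c.values())  # at least 1 for every step up to the longest sequence
        rows.append({cat: 100.0 * c[cat] / total for cat in CATEGORIES})
    return rows


# Hypothetical toy data: each inner list is the ordered task numbers of one think-aloud.
toy_think_alouds = [
    [1, 2, 5, 8, 14, 25],
    [3, 4, 6, 13],
    [2, 9, 9, 20, 26],
    [25, 1, 7, 12],
]

counts = category_counts_by_step(toy_think_alouds)
percentages = category_percentages(counts)
for step, row in enumerate(percentages, start=1):
    print(step, row)
```

Each row of `percentages` corresponds to one step and could feed a stacked area chart. Because think-alouds differ in length, the denominator shrinks at later steps.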

“Crests” in clinical reasoning task categories

In addition to visualizing said data, based on percentages of task categories, we sought to identify “crests” in the demonstration of clinical reasoning tasks. For the purposes of this study, the highest crest, or surge in the percentage of tasks in a particular category, was identified in each task category. For example, the largest crest constitutes the highest average percentage of tasks during the think-alouds. We employed this crest visualization technique in an effort to better understand when particular task categories peaked in usage. We highlight each crest in our aggregate sample and compare crests between cases with and without contextual factors and participant clinical experience.
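One way to locate such a crest programmatically is sketched below. The text does not specify how crest boundaries were drawn, so this sketch assumes a fixed-width sliding window and reports the window with the highest mean percentage. The function name, the window width, and the percentage series are all hypothetical.

```python
def highest_crest(percentages, width=3):
    """Return (first_step, last_step, mean_pct) for the window with the highest mean.

    `percentages` holds one category's percentage at each step, in order.
    Steps are reported 1-indexed; ties resolve to the earliest window.
    """
    if width < 1 or width > len(percentages):
        raise ValueError("width must be between 1 and the number of steps")
    best_start, best_mean = 0, float("-inf")
    for start in range(len(percentages) - width + 1):
        mean = sum(percentages[start:start + width]) / width
        if mean > best_mean:
            best_start, best_mean = start, mean
    return best_start + 1, best_start + width, best_mean


# Hypothetical per-step percentages for two task categories.
framing_pct = [80.0, 75.0, 70.0, 40.0, 30.0, 25.0, 20.0]
diagnosis_pct = [18.0, 22.0, 28.0, 55.0, 65.0, 70.0, 60.0]

print("framing crest:", highest_crest(framing_pct))
print("diagnosis crest:", highest_crest(diagnosis_pct))
```

A fixed width is a simplification: the crests reported in this study span different numbers of steps, so a threshold-based or manually inspected boundary may be closer to what was actually done.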

Additionally, because each think-aloud accounts for one task count per step, a range of 0–60 task counts (representing 0–60 think-alouds) could be observed for each step. As such, any steps that amounted to fewer than 11 task counts (or 11 think-alouds) were removed from the dataset. For the purposes of our study, this ensured that all four task categories were consistently and adequately represented and prevented skewed percentages as a result of extended think-alouds that tapered off into a repetitive, single task count. This resulted in different step ranges across comparisons, but allowed for a cleaner and more accurate representation of the cresting within the task categories.
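The step-exclusion rule can be illustrated with a short sketch. The threshold of 11 comes from the text; the function names and the think-aloud lengths below are hypothetical and chosen only to show where the cutoff falls.

```python
MIN_THINK_ALOUDS = 11  # threshold stated in the text


def active_counts(step_lengths):
    """Number of think-alouds still contributing a task at each step (index 0 = step 1)."""
    n_steps = max(step_lengths, default=0)
    return [sum(1 for n in step_lengths if n > step) for step in range(n_steps)]


def retained_steps(step_lengths, minimum=MIN_THINK_ALOUDS):
    """1-indexed steps that keep at least `minimum` task counts."""
    return [
        step + 1
        for step, count in enumerate(active_counts(step_lengths))
        if count >= minimum
    ]


# Hypothetical lengths (in steps) of 12 think-alouds.
lengths = [15, 15, 20, 20, 20, 20, 20, 30, 30, 30, 30, 85]

kept = retained_steps(lengths)
print("retained steps:", kept[0], "to", kept[-1])
```

In this toy sample all 12 think-alouds are active through step 15. From step 16 only 10 remain, so every later step is dropped, including the long tail produced by the single 85-step think-aloud.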

Results

The range of steps per participant was 15–85 and the average number of steps for all physicians was 36.58 [standard deviation (SD)=13.39]. In other words, one physician required 15 steps in order to complete their think-aloud while another required 85, regardless of contextual factor presence. This range indicates the varying number of tasks that physicians completed during each case.

General sequence and pattern

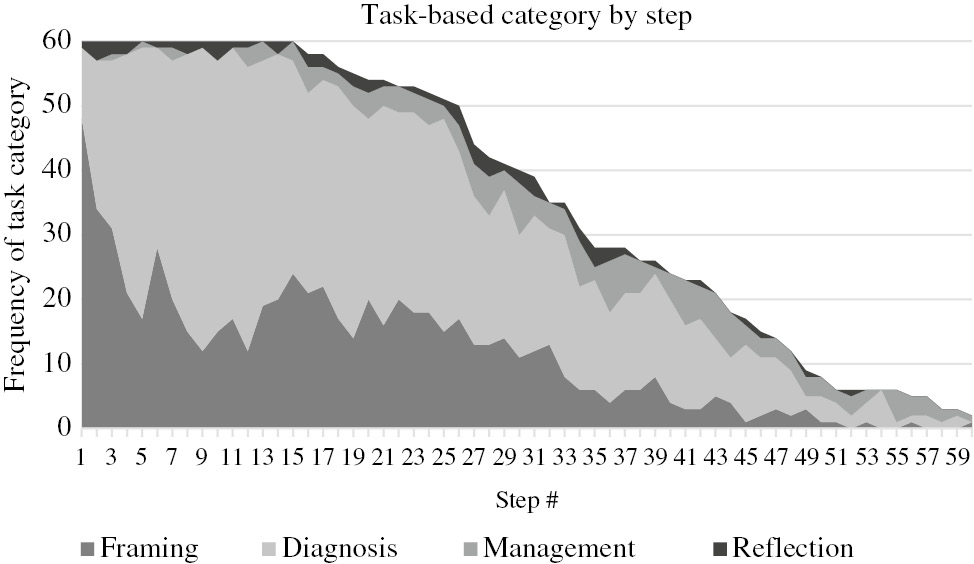

Our first visualization represents all 60 transcripts (or 30 physicians), i.e. think-alouds from all physicians regardless of contextual factor presence (Figure 1). Two outliers (think-alouds that ended with repeated identical tasks) were removed from our visual analysis, resulting in a maximum of 60 steps. This allowed us to view the temporal nature of clinical reasoning tasks as it unfolded during the think-alouds, in aggregate.

Task-based category by step (n=60).

During the first 15 steps, regardless of contextual factor presence, all participants are engaged in a clinical reasoning task. At step 15, our first participant concludes their think-aloud and, as a result, our total task count begins its decline. At step 1, 80% of participants are engaged in a framing task, 18% in a diagnosis task, 0% in a management task, and 2% in a reflection task. As the think-aloud progresses into steps 8–12, framing tasks decline sharply (~23%) and diagnosis tasks increase (~75%). Moving further along the think-aloud process, we see management tasks begin to pick up at step 36 and continue until the end of the think-aloud. Reflection seems to occur throughout the think-aloud process, though the two reflection tasks seem to peak at step 35.

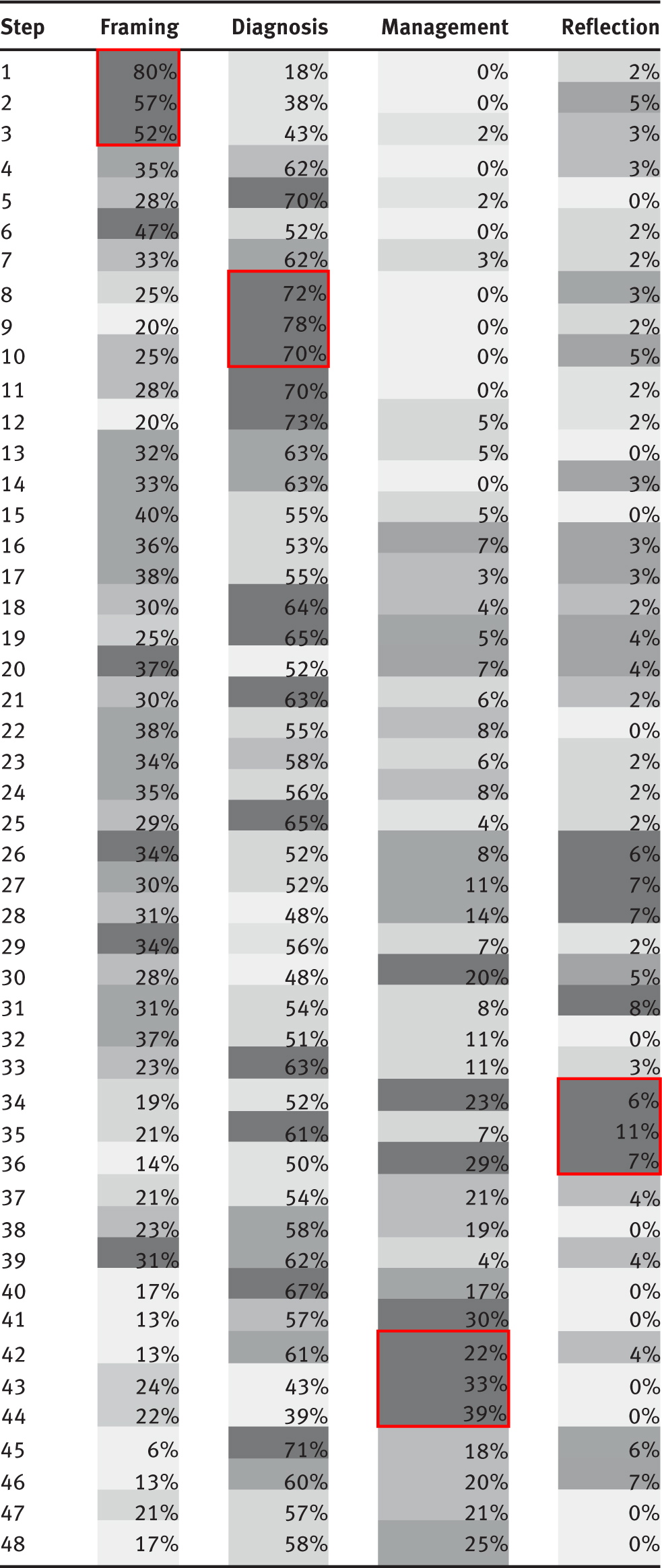

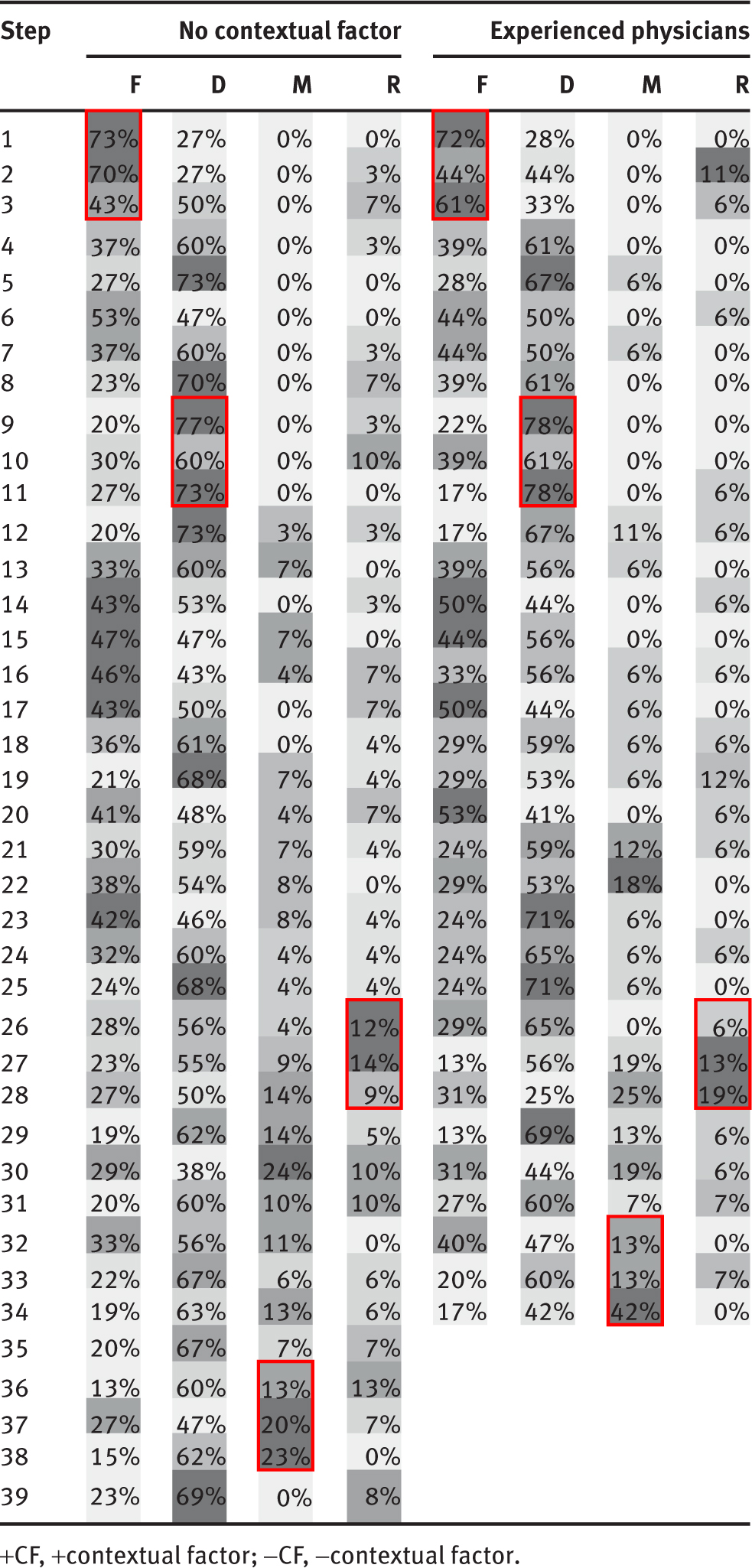

In order to further illustrate the sequential nature of the task-based categories, Table 1 showcases the percentage calculations as bands of intensity. In other words, the darker the band, the higher the frequency of a given task category (framing, diagnosis, management, or reflection). The steps within a crest are highlighted in red.

Crests in clinical reasoning task categories (n=60).

As Table 1 indicates, framing tasks peak during steps 1–3, diagnosis tasks peak during steps 8–12, management tasks peak during steps 36–44, and reflection tasks peak during steps 27–36.

Contextual factor patterns

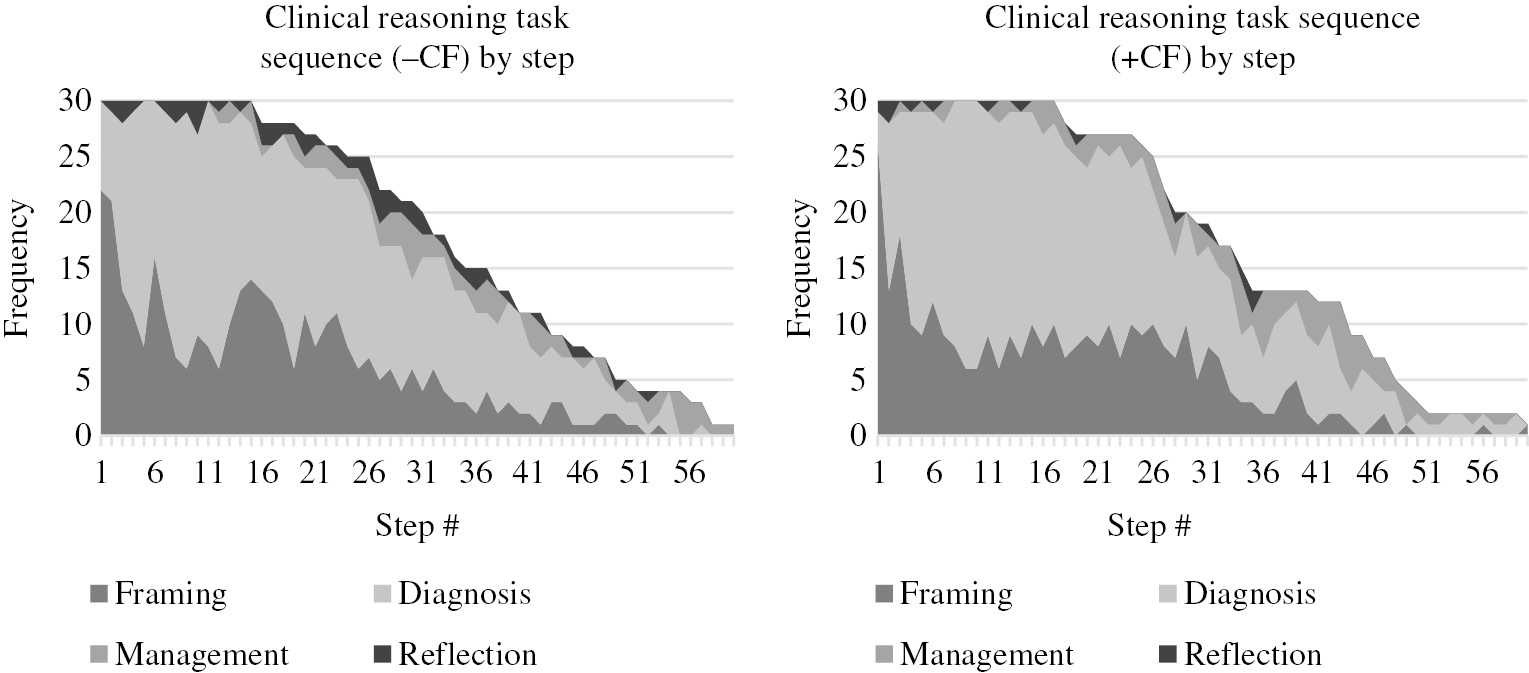

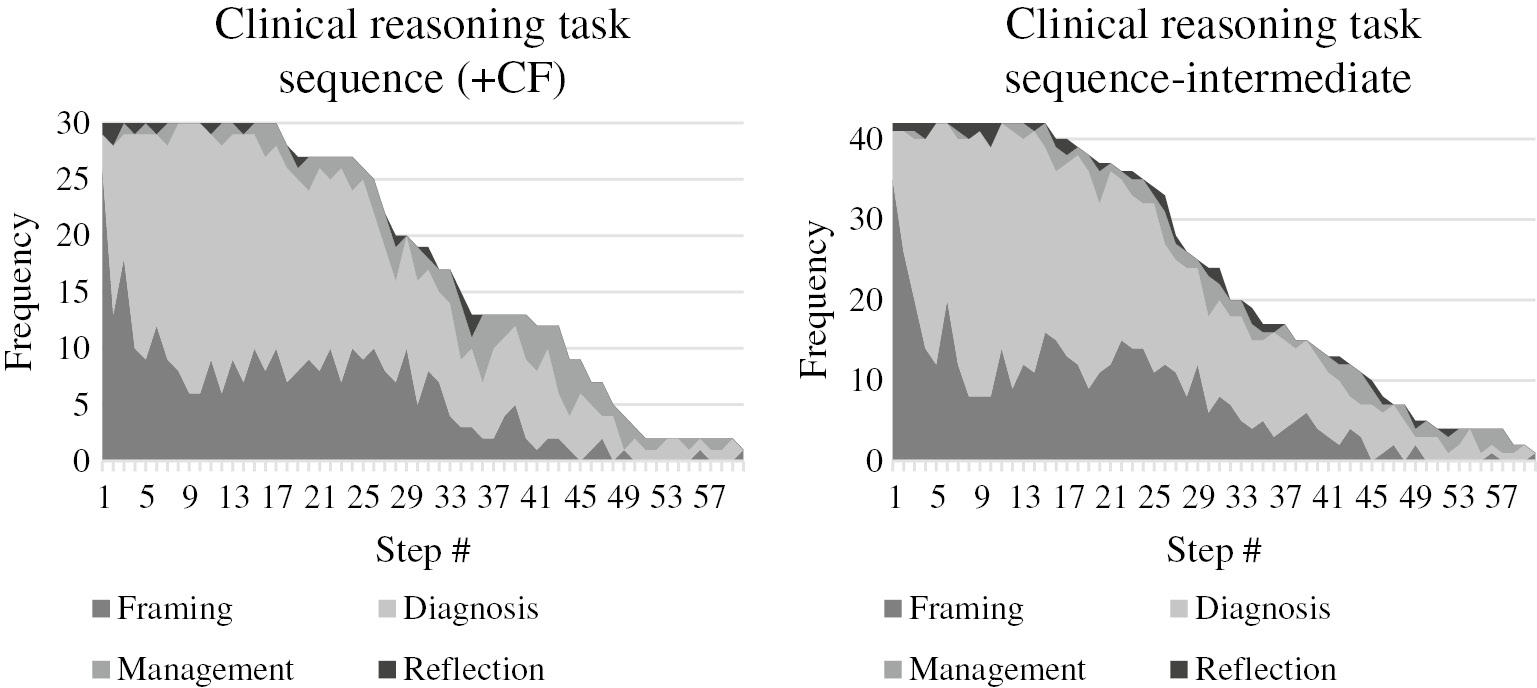

The difference between cases with and without contextual factors is shown in Figure 2. In the absence of contextual factors, the lines demonstrate larger increases and decreases of various task categories, primarily when framing the encounter. For instance, the “peaks” and “valleys” observed in the frequencies are much steeper as physicians progress through their early tasks (steps 1–16). This variability in amplitude continues throughout the think-aloud but is much more prominent through step 25. Conversely, in the presence of contextual factors, the lines appear less varied. This trend is consistent across the four clinical reasoning task categories. The visual representations depicted in Figure 2 capture this phenomenon.

Task-based category by step, without and with a contextual factor (n=30).

In the absence of a contextual factor, not only does there seem to be more variability while framing the encounter, but framing tasks also seem to be occurring at a higher rate. Also, in the absence of a contextual factor, management tasks do not occur until much later in the think-aloud process (step 12 vs. step 3 in the presence of a contextual factor). And finally, as documented by the thicker black band across the top of both charts, reflection seems to occur most consistently and at a much higher rate in the absence of a contextual factor.

Contextual factors and experience

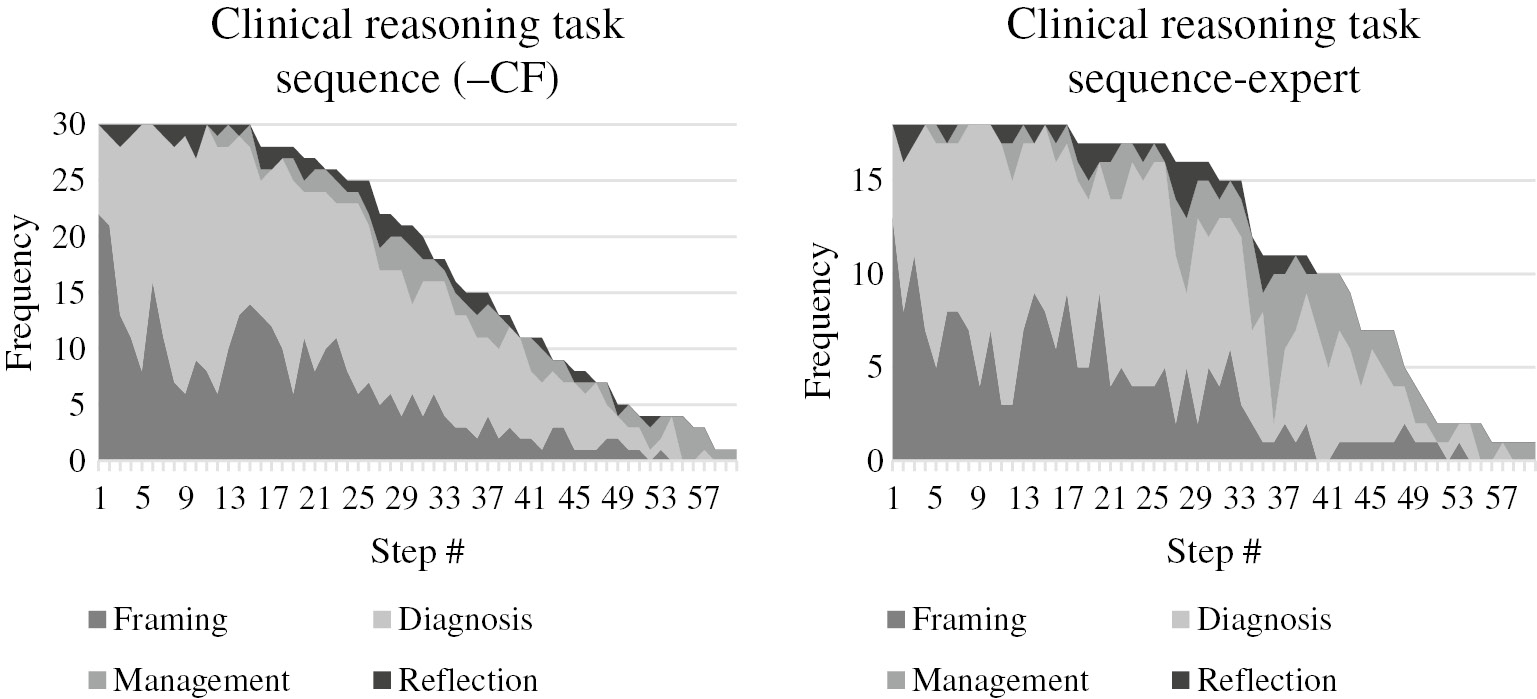

The differences in patterns in the absence and presence of contextual factors seem to resemble the differences in patterns between more and less experienced physicians [over a decade (practicing physicians) compared to less than a decade (residents)]. The fluid up and down movements are present in the reasoning tasks displayed by experienced physicians in the absence of contextual factors. Figures 3 and 4 and Tables 2 and 3 show how experienced physicians become more like less experienced physicians in the presence of contextual factors, which serve as cognitive “distractors”.

Task-based category by step, no contextual factor (n=30) vs. experienced physicians (n=18).

Comparison of crests in clinical reasoning task categories: no contextual factors vs. experienced physicians [all −CF think-alouds, n=30 vs. experienced think-alouds (+CF and −CF), n=18].

Table 2 further delineates the relationship between clinical reasoning task sequencing in the absence of a contextual factor and that of an experienced physician. The crests – highlighted in red and also indicated by darker shaded bands in Tables 2 and 3 – are occurring at nearly identical sequence points for experienced physicians (regardless of whether a contextual factor was present or not) and the whole group of physicians reasoning in the absence of a contextual factor. In other words, we are seeing the highest crest forming at steps 1–3 (framing), 9–11 (diagnosis), 32–38 (management), and 26–28 (reflection) in both think-aloud samples.

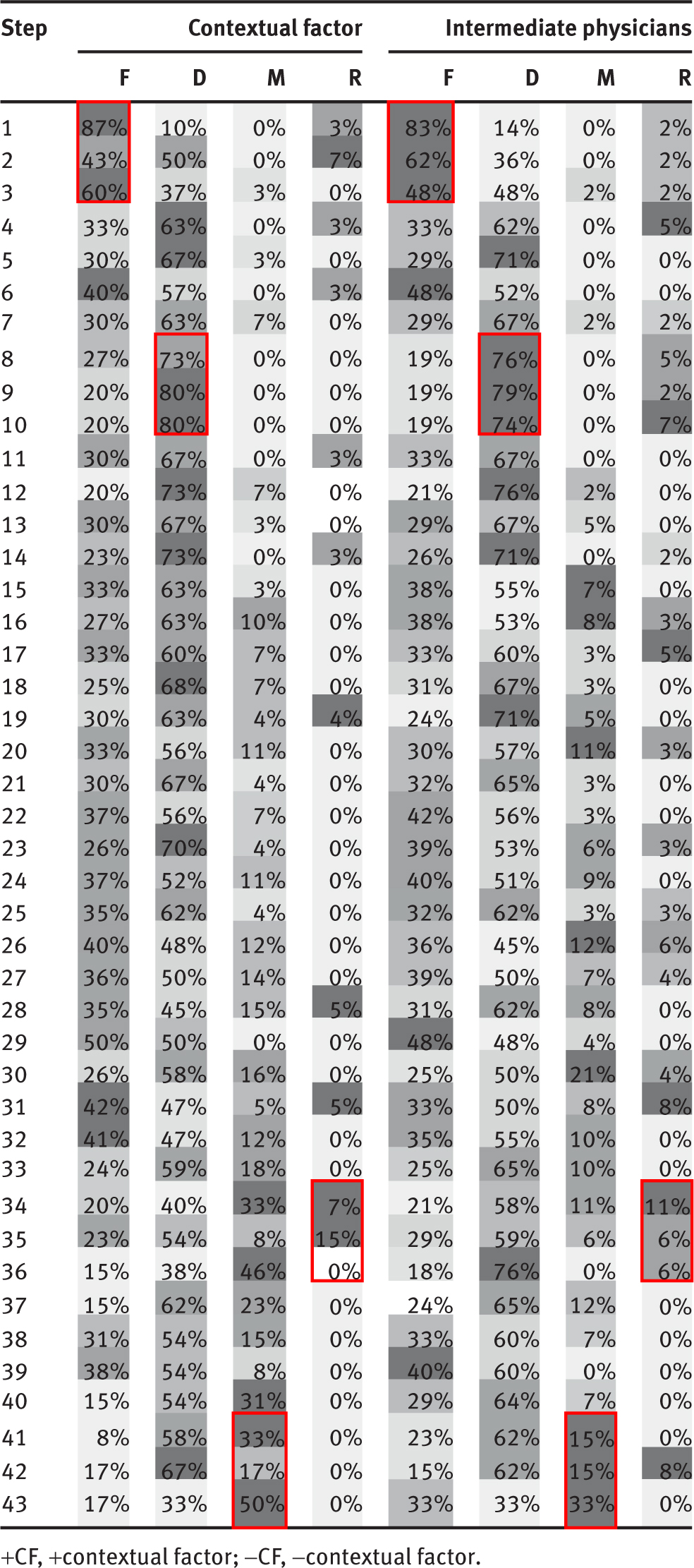

Comparison of crests in clinical reasoning task categories: contextual factors vs. intermediate physicians [all +CF think-alouds, n=30 vs. intermediate think-alouds (+CF and −CF), n=42].

Figure 4 offers a different glimpse into the patterns and sequences of intermediate physicians (in the presence and absence of a contextual factor) and all physicians performing in the presence of a contextual factor. The light gray band representing diagnosing tasks is much thicker for both samples and management and reflection tasks are exercised much less frequently (as noted by the thinner gray and black bands).

Task-based category by step, contextual factor (n=30) vs. intermediate physicians (n=42).

As shown in Table 3, the crests observed in contextual factor think-alouds and intermediate physician think-alouds are synchronous. The highest crest observed in framing occurs during steps 1–3, in diagnosis during steps 8–10, in management during steps 41–43, and in reflection during steps 34–36.

Discussion

This study investigated physicians’ clinical reasoning tasks in an effort to identify if discernable patterns exist. The sequential pattern of clinical reasoning tasks that emerged from this investigation demonstrates that overall, framing tasks are typically the first employed, followed by diagnostic, and then management. This is consistent with expectations given the sequential nature of taking a history followed by performing a physical and then generating diagnostic and management decisions. Overall, reflection, though occurring throughout all think-aloud processes, seems to take place closer to the end of the think-aloud process, when present. In fact, based on our analysis, we believe it may occur more frequently once management tasks begin to increase. This runs contrary to our initial hypothesis and may suggest that physicians begin to engage in a more thoughtful and effortful processing of reflection prior to establishing a management plan. That is, physicians may reflect on an established diagnostic label and then come up with a plan that is specific to a patient’s circumstances and preferences. Empirical evidence supports that this type of iterative reflection contributes to adopting a new perspective that may yield optimal clinical decisions [16]. This back and forth movement may also be indicative of physicians moving from higher- to lower-level cognitive reasoning activities and/or may reflect that management reasoning is less a matter of pattern recognition than arriving at a diagnostic label. Dewey’s seminal work characterized this back and forth, or double, movement as an essential component of reflective thinking; this important reasoning process depicts the evolution between a plausible conditional idea and conflicting details until a more coherent perspective is corroborated [17]. This sort of a fluid process, whereby individuals engage in deliberate “refining of cognitive mechanisms”, has been characterized as expert-level performance [18]. 
Similarly, Feltovich et al. [19] found that experienced physicians abandoned rigid scripts to flexibly adjust and arrive at accurate diagnosis compared to their novice counterparts. Overall, the steps that physicians take and their pathway to diagnosis have been identified as important elements in achieving an accurate and timely diagnosis [6]. Herein, as expected, physicians displayed a variety of paths (different steps) before arriving at diagnostic and management decisions in these cases.

While this analysis captures the general trends and patterns observed, it is important to highlight that this is not a linear process because all task types, in some magnitude, are in use throughout the think-alouds. This is consistent with situated cognition theory which maintains that clinical reasoning emerges from interactions among the physician, the patient, and the environment. For instance, a handful of physicians employed reflection as their first task, others diagnosis, others framing, and some management. This variability suggests the critical role context likely plays in driving clinical reasoning processes, such that each physician proceeds uniquely even when presented with an identical case.

Guided by situated cognition, we delved into our emerging pattern to examine the role of contextual factors on clinical reasoning task sequences. Our findings potentially demonstrate the constraints contextual factors pose on cognitive processes, such that the fluidity and flexibility of clinical reasoning, mimicking that of experienced physicians, is only evident in the absence of contextual factors. Perhaps this is an indication that the presence of a contextual factor thwarts both reflective and management activities. Our data suggest that this fluidity may allow for more cognitive flexibility, which leads to a higher rate of framing tasks, a delayed transition into management tasks, and more opportunities to reflect on their patient encounter. In other words, these non-linear movements indicate that physicians may more frequently attempt to re-frame their reasoning processes and as a result, begin their management tasks much later. Additionally, these back and forth movements may help physicians reflect more often during their clinical reasoning process. This suggests that clinical reasoning is a dynamic process that is context specific. Whether this process leads to a more accurate diagnosis deserves further examination. These patterns are in line with previous research findings that support the role of cognitive overload, as a result of contextual factors, and its potential to inhibit cognitive flexibility [4], [7].

Finally, we identified additional evidence that contextual factors disparately impact clinical reasoning performance. As shown in Figures 2–4 and Tables 2 and 3, the presence of contextual factors has the potential to interrupt the range of clinical reasoning processes. In particular, in the presence of contextual factors, experienced physician patterns are similar to the performance of less experienced physicians. A plethora of studies have examined the cognitive and metacognitive differences between experts and novices [20], [21], [22], [23]. Reingold and Sheridan [24] and Taylor [25] are among the many who have studied the differences in perceptions between novices and experts in the medical field. Similarly, Gobet et al. [26] assert that while medical students begin with a list of possible diagnoses, more experienced physicians analyze the symptoms to form a diagnosis. Future research could disaggregate task categories into their 26 distinct tasks to further investigate whether experienced physicians are focusing on more complex management tasks.

Gobet et al. [26] and Greeno and Simon [27] also argued that experts often rely on general heuristics (pattern recognition) and have the ability to successfully integrate their domain-specific knowledge to other areas. This may help explain the increase in amplitude, or likely cognitive flexibility, that emerged when examining sequence in experienced physicians’ think-alouds. The ability to apply patterns and loop in domain-specific knowledge may provide additional insight as to why more experienced physicians seem to “jump” back and forth between clinical reasoning tasks [28]. This is not to suggest that physicians deviate from “common clinical reasoning signposts” at all times; rather, under complex conditions, one such example being when contextual factors are present, “seasoned clinicians are attentive to a wide variety of dimensions of a clinical encounter” as Charlin et al. cogently presented [29]. Additionally, and consistent with the literature, when presented with a potentially more cognitively demanding task (presence of contextual factors), experts have a tendency to employ more robust problem representation where novices rely upon superficial features [30], [31]. This is corroborated by the comparative patterns observed herein. Based on our analysis, less experienced physicians seem to perform in manners that mimic a proclivity for “more superficial representations”. Conversely, experienced physicians seem to perform in ways that demonstrate that they have more robust representations of a clinical encounter.

Our study was limited by several factors. First, our sequential data were derived from a larger study on clinical reasoning tasks. As such, we employed secondary data that were not designed or collected with the sole purpose of examining patterns and sequences in clinical reasoning tasks. Second, the observed impact of a contextual factor on clinical reasoning task sequencing may be a result of the specific type of contextual factor chosen for the study. Third, the sample breakdown of physician experience was skewed toward the less experienced group (42 think-alouds vs. 18 from experienced physicians). A more balanced range of experience levels in participants may have yielded more discernible patterns in our comparative analysis. And fourth, the use of think-alouds presents unique limitations that prevent a more comprehensive and in-depth examination of participants’ thought processes. Participants may not have reflective awareness of, or insight into, their own clinical reasoning processes and thus may not intentionally verbalize these tasks when engaged in a think-aloud process.

These emerging sequences and patterns can be used to inform teaching and assessment of clinical reasoning. Though Juma and Goldszmidt disaggregated the clinical reasoning process into 26 separate teachable tasks, our findings provide further evidence that these tasks mirror the sequence of a traditional patient encounter. As such, instructors can target tasks within any of the four categories (framing, diagnosis, management, and reflection) based on a clinical encounter. This categorization and sequencing of clinical reasoning may help trainees navigate the complexities of a patient encounter. For example, if a trainee is having difficulty with the diagnosis of a patient, an instructor may want to consider focusing on any of the first three clinical reasoning tasks to help the trainee improve how they are framing the encounter. Extending this example further, if a trainee is having issues related to the patient’s management plan, an instructor may choose to initially encourage the trainee to practice tasks 1–11 in an effort to help them build a stronger foundation of evidence prior to establishing a management plan. While additional empirical investigation is needed, providing trainees with such a framework may provide a foundation for establishing more optimal clinical reasoning practices early on in medical education. Previous research demonstrates that cognitive flexibility and higher-level reflection skills are trainable and can be developed [6].

If, in fact, contextual factors cause clinical reasoning performance to regress, as our findings suggest, this may help instructors design educational experiences, such as simulations, that can be used in continuing medical education. Relying on the concept of deliberate practice [32], this consistent and continued practice in the presence of contextual factors may lead to improved diagnostic accuracy and error reduction.

Our findings provide further insight into the relationship between clinical reasoning and context specificity and how our observed patterns may serve as a useful tool for instruction and assessment of clinical reasoning. This research adds to the existing body of evidence for situated cognition as an appropriate theory for exploring clinical reasoning and informs how future studies might further inform diagnostic and management accuracy. Furthermore, establishing an awareness of the extant sequential patterns promotes considerable progress toward a more complete understanding of clinical reasoning steps. Future research may fruitfully be directed at exploring these task-based patterns and processes to reduce error and enhance diagnostic precision.

Author contributions: All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

Research funding: This study was supported by a grant from the JPC-1, CDMRP – Congressionally Directed Medical Research Program (No. NH83382416).

Competing interests: Authors state no conflict of interest.

Informed consent: Informed consent was obtained from all individuals included in this study.

Ethical approval: This study was approved by the Institutional Review Boards at all participating institutions.

Disclaimer: The views expressed in this article are those of the authors and do not necessarily reflect the official position or policy of the U.S. Government, Department of Defense, Department of the Navy, or the Uniformed Services University.

References

1. Juma S, Goldszmidt M. What physicians reason about during admission case review. Adv Health Sci Educ 2017;22:691–711. doi: 10.1007/s10459-016-9701-x

2. Schmidt HG, Norman GR, Boshuizen HP. A cognitive perspective on medical expertise: theory and implications. Acad Med 1990;65:611–21. doi: 10.1097/00001888-199010000-00001

3. Eva K. What every teacher needs to know about clinical reasoning. Med Educ 2005;39:98–106. doi: 10.1111/j.1365-2929.2004.01972.x

4. Durning S, Artino A, Pangaro L, van der Vleuten C, Schuwirth L. Context and clinical reasoning: understanding the perspective of the expert’s voice. Med Educ 2011;45:927–38. doi: 10.1111/j.1365-2923.2011.04053.x

5. McBee E, Ratcliffe T, Goldszmidt M, Schuwirth L, Picho K, Artino A, et al. Clinical reasoning tasks and resident physicians: what do they reason about? Acad Med 2016;91:1022–8. doi: 10.1097/ACM.0000000000001024

6. Durning S, Artino A. Situativity theory: a perspective on how participants and the environment can interact: AMEE Guide no. 52. Med Teach 2011;33:188–99. doi: 10.3109/0142159X.2011.550965

7. Durning S, Artino A, Boulet J, Dorrance K, van der Vleuten C, Schuwirth L. The impact of selected contextual factors on experts’ clinical reasoning performance (does context impact clinical reasoning performance in experts?). Adv Health Sci Educ 2012;17:65–79. doi: 10.1007/s10459-011-9294-3

8. ten Cate O, Durning S. Understanding clinical reasoning from multiple perspectives: a conceptual and theoretical overview. Principles and Practice of Case-based Clinical Reasoning Education. Springer, Cham; 2018:35–46. doi: 10.1007/978-3-319-64828-6_3

9. Sweller J. Cognitive load theory. In: Mestre JP, Ross BH, editors. The psychology of learning and motivation: cognition in education, Vol. 55. Cambridge, MA, USA: Elsevier Academic Press, 2011:37–76. doi: 10.1016/B978-0-12-387691-1.00002-8

10. McBee E, Ratcliffe T, Picho K, Artino A, Schuwirth L, Kelly W, et al. Consequences of contextual factors on clinical reasoning in resident physicians. Adv Health Sci Educ 2015;20:1225–36. doi: 10.1007/s10459-015-9597-x

11. Kiesewetter J, Ebersbach R, Tsalas N, Holzer M, Schmidmaier R, Fischer MR. Knowledge is not enough to solve the problems – the role of diagnostic knowledge in clinical reasoning activities. BMC Med Educ 2016;16:303. doi: 10.1186/s12909-016-0821-z

12. Lenzer B, Ghanem C, Weidenbusch M, Fischer M, Zottmann M, editors. Scientific reasoning in medical education: a novel approach for the analysis of epistemic activities in clinical case discussions. In: Proceedings of the 5th International Conference for Research in Medical Education; 2017. doi: 10.3205/17rime02

13. Konopasky A, Ramani D, Ohmer M, Battista A, Artino A, McBee E, et al. It totally possibly could be: how a group of military physicians reflect on their clinical reasoning in the presence of contextual factors. Mil Med 2020;185(Suppl 1):575–82. doi: 10.1093/milmed/usz250

14. Durning SJ, Artino A, Boulet J, La Rochelle J, Van der Vleuten C, Arze B, et al. The feasibility, reliability, and validity of a post-encounter form for evaluating clinical reasoning. Med Teach 2012;34:30–7. doi: 10.3109/0142159X.2011.590557

15. Hobus P, Schmidt H, Boshuizen H, Patel V. Contextual factors in the activation of first diagnostic hypotheses: expert-novice differences. Med Educ 1987;21:471–6. doi: 10.1111/j.1365-2923.1987.tb01405.x

16. Zelazo P. Executive function: reflection, iterative reprocessing, complexity, and the developing brain. Dev Rev 2015;38:55–68. doi: 10.1016/j.dr.2015.07.001

17. Dewey J. How we think. Mineola, NY, USA: Courier Corporation, 1997.

18. Ericsson K. Attaining excellence through deliberate practice: insights from the study of expert performance. In: Ferrari M, editor. The educational psychology series. The pursuit of excellence through education. Mahwah, NJ, USA: Lawrence Erlbaum Associates Publishers, 2002:21–55.

19. Feltovich P, Spiro R, Coulson R. Issues of expert flexibility in contexts characterized by complexity and change. Expertise in context: human and machine. 1997:125–46.

20. Ball L, Ormerod T, Morley N. Spontaneous analogising in engineering design: a comparative analysis of experts and novices. Design Studies 2004;25:495–508. doi: 10.1016/j.destud.2004.05.004

21. Bedard J, Chi M. Expertise. Curr Dir Psychol Sci 1992;14:135–9. doi: 10.1111/1467-8721.ep10769799

22. Cross N. Creative thinking by expert designers. J Design Res 2004;4:123–43. doi: 10.1504/JDR.2004.009839

23. Dixon R. Selected core thinking skills and cognitive strategy of an expert and novice engineer. J STEM Teacher Educ 2011;48:7. doi: 10.30707/JSTE48.1Dixon

24. Reingold E, Sheridan H. Eye movements and visual expertise in chess and medicine. In: Liversedge SP, Gilchrist I, Everling S, editors. Oxford handbook of eye movements. Oxford: Oxford University Press, 2011. doi: 10.1093/oxfordhb/9780199539789.013.0029

25. Taylor P. A review of research into the development of radiologic expertise: implications for computer-based training. Acad Radiol 2007;14:1252–63. doi: 10.1016/j.acra.2007.06.016

26. Gobet F, Johnston SJ, Ferrufino G, Johnston M, Jones MB, Molyneux A, et al. “No level up!”: no effects of video game specialization and expertise on cognitive performance. Front Psychol 2014;5:1337. doi: 10.3389/fpsyg.2014.01337

27. Greeno J, Simon H. Problem solving and reasoning, 2nd ed. Atkinson R, Hernstein R, Lindzey G, Luce R, editors. New York: Wiley; 1988.

28. Schuwirth L, Verheggen M, Van der Vleuten C, Boshuizen H, Dinant G. Do short cases elicit different thinking processes than factual knowledge questions do? Med Educ 2001;35:348–56. doi: 10.1046/j.1365-2923.2001.00771.x

29. Charlin B, Lubarsky S, Millette B, Crevier F, Audetat M, Charbonneau A, et al. Clinical reasoning processes: unravelling complexity through graphical representation. Med Educ 2012;46:454–63. doi: 10.1111/j.1365-2923.2012.04242.x

30. Hmelo-Silver C, Nagarajan A, Day R. “It’s harder than we thought it would be”: a comparative case study of expert–novice experimentation strategies. Sci Educ 2002;86:219–43. doi: 10.1002/sce.10002

31. Larkin J, McDermott J, Simon D, Simon H. Expert and novice performance in solving physics problems. Science 1980;208:1335–42. doi: 10.1126/science.208.4450.1335

32. Ericsson K. Deliberate practice and acquisition of expert performance: a general overview. Acad Emerg Med 2008;15:988–94. doi: 10.1111/j.1553-2712.2008.00227.x

©2020 Walter de Gruyter GmbH, Berlin/Boston

