Abstract

Structured light is a widely used 3D imaging method, but it typically requires a long baseline between the laser projector and the camera sensor, which hinders its use in space-constrained scenarios. On the other hand, passive 3D imaging methods, such as depth from depth-dependent point spread functions (PSFs), are impeded by the difficulty of measuring textureless scenes. Here, we combine the advantages of both structured light and depth-dependent PSFs and propose a baseline-free structured light 3D imaging system. A metasurface is designed to project a structured dot array and simultaneously encode depth information in the double-helix pattern of each dot. Combined with a straightforward and fast algorithm, we demonstrate accurate 3D point cloud acquisition for various real-world scenarios including multiple cardboard boxes and a living human face. Such a technique may find application in a broad range of areas including consumer electronics and precision metrology.

1 Introduction

Three-dimensional (3D) imaging technology, due to its pivotal role in enabling machines and artificial intelligence to perceive and interact with the world, has drawn enormous interest in recent years [1], [2], [3], [4], [5]. Structured light, as one of the most commonly adopted 3D imaging technologies [6], can allow highly reliable acquisition of 3D point clouds and has found extensive applications in emerging fields such as consumer electronics and robotics. Structured light technology relies on the triangulation principle to measure depth, which consequently necessitates a baseline length between the laser projector and the camera sensor and oftentimes requires a complicated image-matching algorithm [7], [8]. The baseline length between the projector and receiver leads to bulky hardware. For instance, smartphones equipped with structured light-based 3D imaging modules typically have multiple black openings or a long black stripe on their screens. Moreover, an extremely long baseline length is required for high-accuracy 3D imaging at long distances. The matching algorithm, typically including calibration, image correlation, cost aggregation, and depth calculation, imposes a high demand on computational resources [9], [10].

Depth-from-defocus (DfD) is another widely studied 3D imaging method, since it is not limited by the principle of triangulation and can obtain depth information from axial image blur using only a single camera [11], [12], [13], [14], [15]. More sophisticated depth-dependent point-spread functions (PSFs) have also been proposed to further improve the depth accuracy of DfD, such as the double-helix PSF, which features two foci rotating around a central point with the rotation angle depending on the axial depth of the object point [16], [17], [18], [19], [20], [21], [22]. Nevertheless, since its depth calculation relies on the texture of the target object, DfD often fails in measuring textureless scenes. In addition, DfD typically requires the use of relatively complex image feature extraction and matching algorithms, resulting in even higher computational costs. A 3D imaging system combining the high reliability of conventional structured light techniques and the compactness of the DfD method is highly desired.

Optical metasurfaces [23], [24], [25], [26], [27], [28], [29], [30], [31], [32], [33], [34], due to their versatility in tailoring the light field at subwavelength scale, have been widely adopted to enhance the performance and compactness of 3D imaging systems [19], [35], [36], [37], [38], [39], [40], [41], [42], [43]. Notably, Li et al. recently proposed a structured light-based 3D imaging method without triangulation based on 3D holograms using a metasurface [44]. By eliminating the need for a baseline length, the volume of structured light systems can be reduced. Nonetheless, since the light intensity distribution of 3D holograms is only considered at a few discrete distances, a clear and continuous correspondence between distance and hologram distribution is absent. In addition, the image correlation algorithm is still rather complicated. It remains a major challenge to build a compact structured light-based 3D imaging system that allows high-accuracy depth sensing for continuous depth values using a fast algorithm.

Here, we propose and experimentally demonstrate accurate 3D point cloud generation for various complex scenes using a compact baseline-free structured light system equipped with a metasurface double-helix dot projector. Leveraging the versatility of metasurfaces to manipulate the light field at subwavelength precision, we design and optimize a metasurface projector that projects a structured dot array and simultaneously encodes depth information in the double-helix pattern of each dot. The rotation angle of the double-helix pattern of each dot has a continuous, monotonic, one-to-one correspondence with depth. A beam splitter is employed to fold the projection and receiving light paths to enable a single-opening system configuration. Combined with a straightforward and fast algorithm to calculate depth from the rotation angle of the dot patterns, we demonstrate accurate 3D point cloud acquisition for scenes including multiple boxes and a living human face, towards applications including robotic operation and 3D face authentication.

2 Results and discussions

2.1 Framework of the baseline-free structured light 3D imaging system

The framework of the baseline-free structured light 3D imaging system is schematically illustrated in Figure 1. At the projection end, with a collimated 635-nm laser as the light source, a metasurface is designed and optimized to project a 64 × 64 dot array with a 60° diagonal field-of-view (FOV), while encoding depth information in the double-helix pattern of each dot. The receiving end consists of a camera with a sufficient FOV and high resolution. The optical axes of the dot projector and the camera are perpendicular to each other, with a beam splitter adopted to fold the projection and receiving light paths and align the center of the camera imaging plane with that of the projector. Consequently, the projector and receiver share a single opening. From a single image captured by the camera, based on the one-to-one correspondence between the rotation angle of the double-helix pattern and the object depth, an accurate 3D point cloud of the scene can be generated.

Schematic of the baseline-free structured light 3D imaging system using a metasurface double-helix dot projector. The light source of the projector is a collimated laser; after modulation by the metasurface, a dot array with depth-dependent double-helix patterns is projected. The rotation angle of each projected double-helix pattern can be mapped to the depth value z_obj, thus eliminating the baseline length requirement of a traditional structured light system. The receiver is a conventional camera that captures the structured light pattern. We use a beam splitter to fold the projection and receiving light paths and align the center of the camera imaging plane with the center of the projector. Therefore, the projector and receiver share a single opening. From a single image captured by the camera, one can reconstruct an accurate 3D point cloud of the scene.
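As a rough illustration of how a per-dot depth could be turned into a 3D point, the sketch below back-projects dot centres with an ideal pinhole camera model. The function name `dots_to_point_cloud`, the focal length in pixels `f_px`, and the principal point `(cx, cy)` are illustrative assumptions, not quantities given in the text; lens distortion and the actual calibration of the system are not modelled.

```python
import numpy as np

def dots_to_point_cloud(uv, depth, f_px, cx, cy):
    """Back-project dot centres (in pixels) with depths z into camera-frame XYZ.

    Assumes an ideal pinhole camera; `depth` is measured along the optical axis
    and the returned coordinates share its unit.
    """
    uv = np.asarray(uv, dtype=float)      # shape (N, 2): column u, row v
    z = np.asarray(depth, dtype=float)    # shape (N,)
    x = (uv[:, 0] - cx) * z / f_px        # lateral offset scales with depth
    y = (uv[:, 1] - cy) * z / f_px
    return np.column_stack([x, y, z])
```

Because the dot centres are fixed in the image for the folded, single-opening geometry, `uv` would only need to be determined once in such a scheme; only `depth` changes from frame to frame.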

2.2 Metasurface design and fabrication

To realize a dot projector with a depth-dependent double-helix pattern, we first need to determine the transmission phase distribution of the metasurface. The phase to generate a rotating double-helix pattern is initialized by arranging generalized Fresnel zones carrying spiral phase profiles with gradually increasing topological quantum numbers towards the outer rings of the zone plate [45], [46]. The phase term

where u is the normalized radial coordinate and φ_u is the azimuth angle in the aperture plane.
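Since the phase expression itself is not reproduced here, the following is only a minimal sketch of a zone-plate initialization of this kind, loosely following the generalized Fresnel-zone construction of refs. [45], [46]. The zone boundaries `((l-1)/L)**eps` and `(l/L)**eps`, the topological charge `(l-1)*n_lobes + 1` in the l-th zone, and all parameter values are assumptions made for illustration, not the parameters used by the authors.

```python
import numpy as np

def helix_zone_phase(n_pix=256, n_zones=8, n_lobes=2, eps=0.5):
    """Spiral-phase Fresnel zones on a unit-radius aperture (illustrative)."""
    x = np.linspace(-1.0, 1.0, n_pix)
    xx, yy = np.meshgrid(x, x)
    u = np.hypot(xx, yy)                  # normalized radial coordinate
    phi = np.arctan2(yy, xx)              # azimuth angle in the aperture plane
    phase = np.zeros_like(u)
    for l in range(1, n_zones + 1):
        inner = ((l - 1) / n_zones) ** eps
        outer = (l / n_zones) ** eps
        zone = (u >= inner) & (u < outer)
        charge = (l - 1) * n_lobes + 1    # charge grows towards the outer rings
        phase[zone] = charge * phi[zone]
    # Wrap to [0, 2*pi); pixels outside the aperture stay at zero.
    return np.mod(phase, 2.0 * np.pi), u
```

With `n_lobes=2` the charges are 1, 3, 5, ..., which is the assumed two-lobe (double-helix) case; the subsequent optimization would start from a phase map of this kind.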

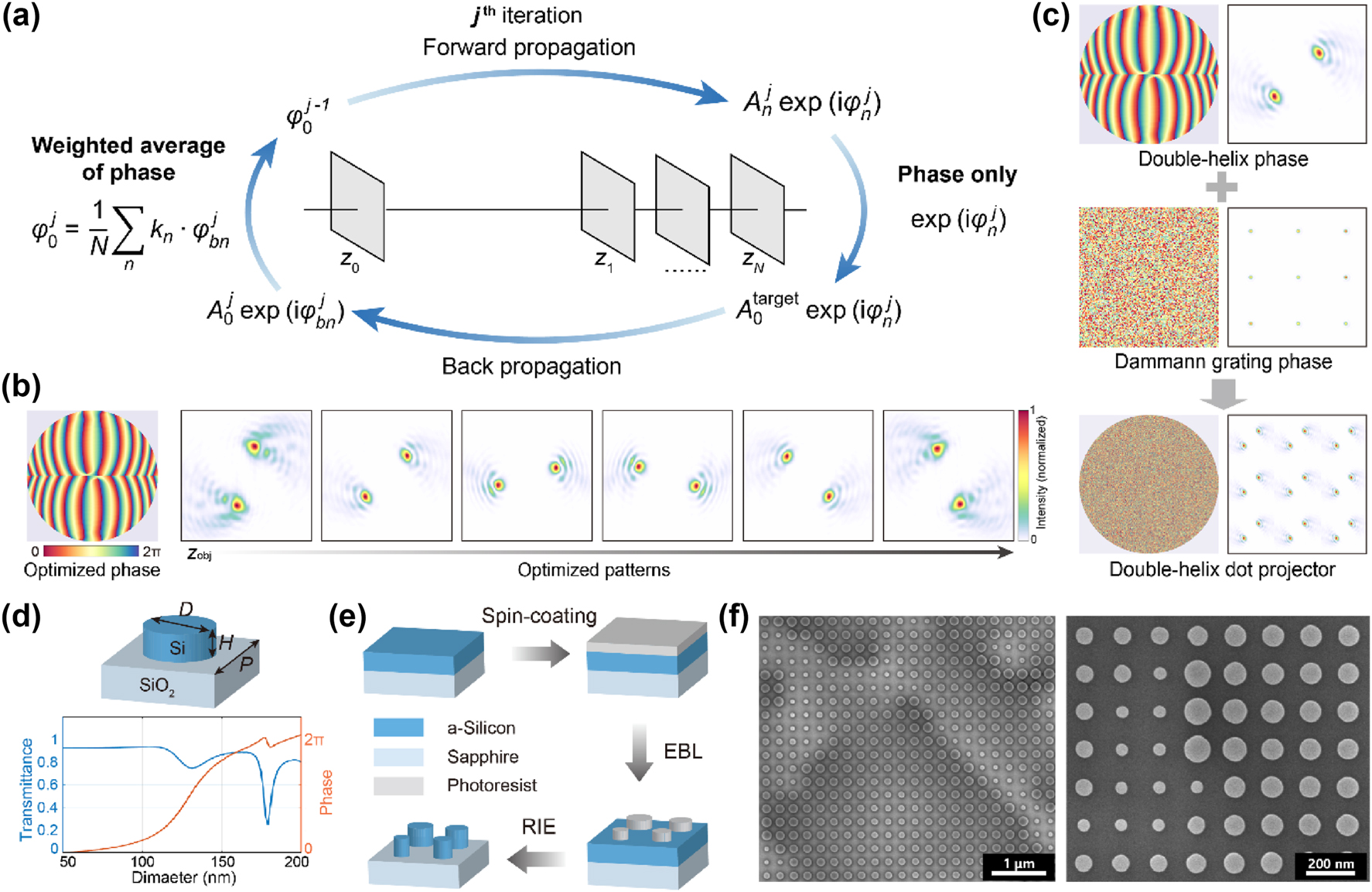

Subsequently, an iterative Fourier transform algorithm is employed to maximize the energy in the main lobe of the double-helix pattern within the 180° rotation range, as shown in Figure 2a. The iterative optimization process improves the peak intensity of the main lobe of the double-helix pattern by 31 % on average. The optimized phase to generate double-helix patterns and the corresponding patterns at different projection distances are shown in Figure 2b. To avoid ambiguities, the double-helix patterns beyond the 180° rotation range are designed to spread significantly, so that they can be easily distinguished from those within the 180° rotation range.

Metasurface design and fabrication. (a) Optimization process of the metasurface phase profile. The forward propagation and inverse propagation are calculated using the angular spectrum method. z_0 is the location of the metasurface plane, and z_1 ∼ z_N are different propagation distances.
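The forward and inverse propagation steps mentioned in the caption can be sketched with a textbook angular spectrum propagator. This is only the propagation building block, not the full iterative optimization; the function name `angular_spectrum`, the square sampling grid, and the choice to discard evanescent components are assumptions of this sketch.

```python
import numpy as np

def angular_spectrum(field, wavelength, dx, z):
    """Propagate a square complex field by a distance z (same length unit as dx).

    A negative z gives the inverse (back) propagation.
    """
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=dx)                    # spatial frequencies
    fxx, fyy = np.meshgrid(fx, fx)
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Transfer function; evanescent components (arg <= 0) are discarded.
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)
```

In an iterative scheme of the kind shown in panel (a), a call with `z = z_k` would give the pattern at the k-th plane, and a call with `z = -z_k` would return the constrained field to the metasurface plane, where only its phase is kept.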

Meanwhile, a Dammann grating phase that projects a highly uniform 64 × 64 normal structured light dot array over the 60° diagonal FOV is designed using the Gerchberg–Saxton algorithm [48]. Through the superposition of the double-helix pattern encoding phase profile and the Dammann grating phase profile, we obtain the target phase profile of the metasurface that projects a double-helix dot array, as shown in Figure 2c.
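A minimal Gerchberg-Saxton loop for a phase-only element that produces a regular dot array in the far field might look as follows. It uses a single FFT as the far-field model, which is a paraxial simplification that would not hold over a 60° FOV; the grid size, dot spacing, iteration count, and the helper `dot_efficiency` are illustrative assumptions rather than the authors' design parameters.

```python
import numpy as np

def gerchberg_saxton(target_amp, n_iter=50, seed=0):
    """Phase-only design whose far field (FFT) approximates target_amp."""
    rng = np.random.default_rng(seed)
    phase = rng.uniform(0.0, 2.0 * np.pi, size=target_amp.shape)
    for _ in range(n_iter):
        far = np.fft.fft2(np.exp(1j * phase))            # unit-amplitude element
        far = target_amp * np.exp(1j * np.angle(far))    # impose target amplitude
        phase = np.angle(np.fft.ifft2(far))              # keep only the phase
    return phase

def dot_efficiency(phase, target_amp):
    """Fraction of far-field energy that lands on the target dots."""
    intensity = np.abs(np.fft.fft2(np.exp(1j * phase))) ** 2
    return intensity[target_amp > 0].sum() / intensity.sum()

# Toy target: an 8 x 8 dot array on a 64 x 64 far-field grid.
target = np.zeros((64, 64))
target[::8, ::8] = 1.0
dammann_like_phase = gerchberg_saxton(target)
```

In practice the uniformity of the dot intensities, and not only the efficiency, would be monitored during the iterations.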

The unit cell of the metasurface is composed of a silicon nano-cylinder (top panel of Figure 2d) with a height H = 300 nm and a period U = 250 nm. The diameter D of the nano-cylinder is swept between 50 and 200 nm to achieve a full 2π phase modulation and a transmittance above 78 % (bottom panel of Figure 2d). As shown in Figure 2e, the fabrication of the metasurface starts with spin-coating of photoresist on a silicon-on-sapphire substrate, where the thickness of the monocrystalline silicon film is 300 nm. Electron beam lithography (EBL) is employed to write the metasurface pattern onto the photoresist. Subsequently, the pattern is transferred to the silicon layer via reactive ion etching (RIE). Finally, the photoresist layer is removed. The scanning electron microscopy images of the fabricated metasurface are shown in Figure 2f.

2.3 Experimental system setup

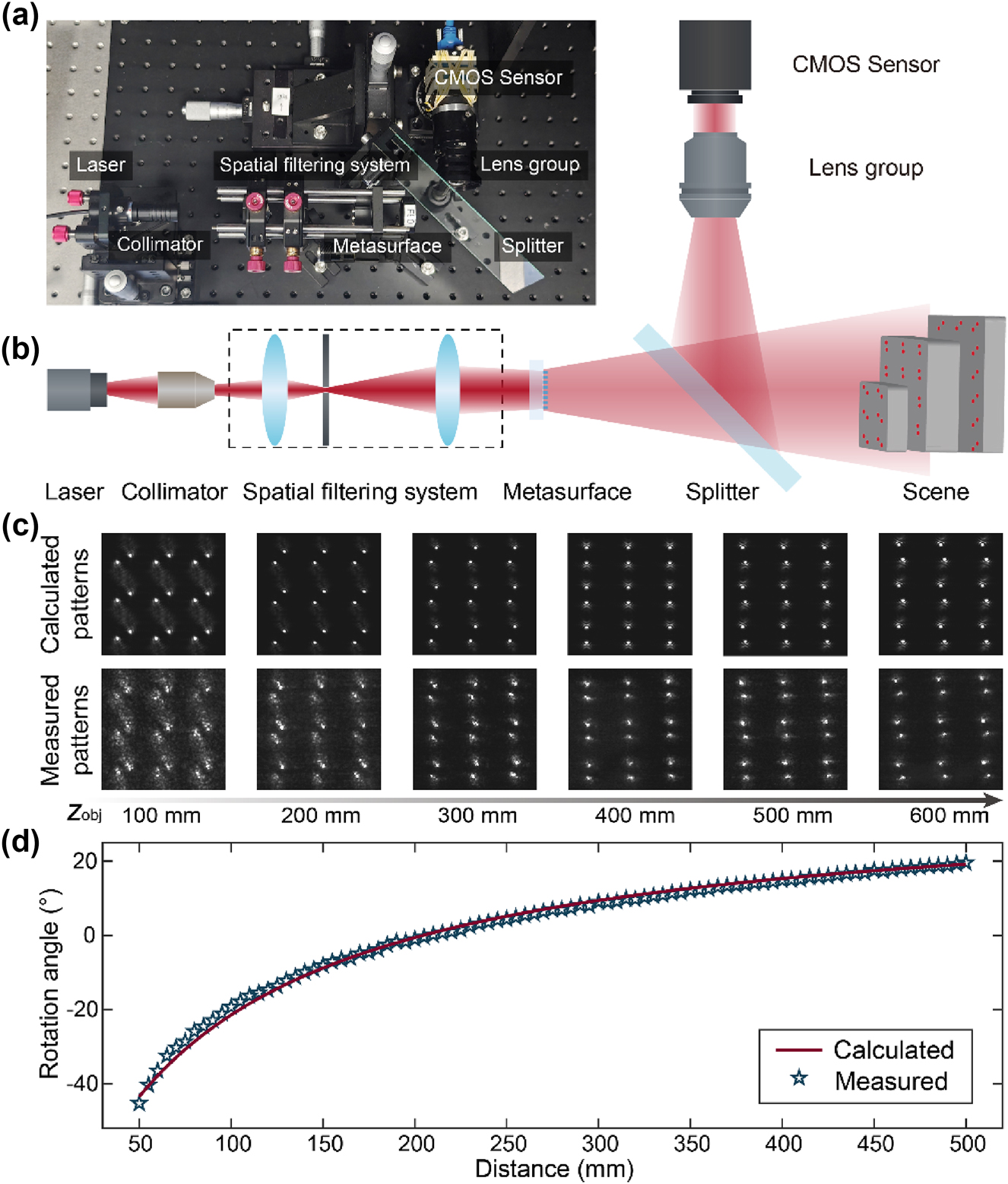

The photograph of the experimental setup is shown in Figure 3a. As schematically illustrated in Figure 3b, the light source of the experimental system is a 635-nm diode laser (SZ Laser ZLMAD635-16GD). After being collimated, the laser passes through a spatial filtering system consisting of two lenses and a pinhole, which is used to improve the laser beam quality, and is incident on the metasurface. Although the relative positions of the projector and the camera in the structured light system can be arbitrarily arranged, we use a beam splitter to fold the projection and receiving light paths and align the center of the camera’s imaging plane and the center of the metasurface at the projection end so that they completely overlap in their corresponding mirror space. Thereby, we can realize a single-opening system configuration. Moreover, since the central position of each dot is fixed in the captured image, the depth reconstruction process can be drastically simplified and accelerated. The camera is equipped with a CMOS image sensor (Daheng Imaging ME2P-2622-15U3M) with an active area of 12.8 × 12.8 mm² and a 12-mm focal length lens group with a low image distortion.

Experimental system and calibration. (a) Photograph of the experimental set-up. (b) Schematic of the experimental set-up. (c) Calculated (top panel) and experimentally measured (bottom panel) patterns of the central area of the dot array. (d) Calculated (red line) and experimentally measured (blue star) rotation angles of the projected pattern as a function of the distance.

The correspondence between distances and rotation angles of the double-helix pattern is calibrated before 3D imaging of actual scenes. We place a flat plate at different distances to measure the distributions of the projected double-helix dot array. The comparison between central areas of the measured and calculated double-helix dot arrays is shown in Figure 3c. Figure 3d shows that the calculated and experimentally measured relationships between distances and the rotation angles of the double-helix pattern are in close agreement.
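Given such a calibration, a measured rotation angle could be converted to depth by interpolating the calibrated pairs, which is valid because the relationship is monotonic. The sketch below assumes simple linear interpolation with `numpy.interp`; the function name `angle_to_depth` and its arguments are hypothetical, and the calibration arrays are expected to come from the flat-plate measurements rather than from any values shown here.

```python
import numpy as np

def angle_to_depth(angle_deg, calib_angles_deg, calib_depths_mm):
    """Map rotation angle(s) to depth by interpolating a monotonic calibration."""
    angles = np.asarray(calib_angles_deg, dtype=float)
    depths = np.asarray(calib_depths_mm, dtype=float)
    order = np.argsort(angles)            # np.interp needs increasing x values
    return np.interp(angle_deg, angles[order], depths[order])
```

Note that `numpy.interp` clamps to the end values outside the calibrated range, so angles beyond the calibrated span would need to be rejected separately.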

2.4 Experimental demonstration of 3D imaging

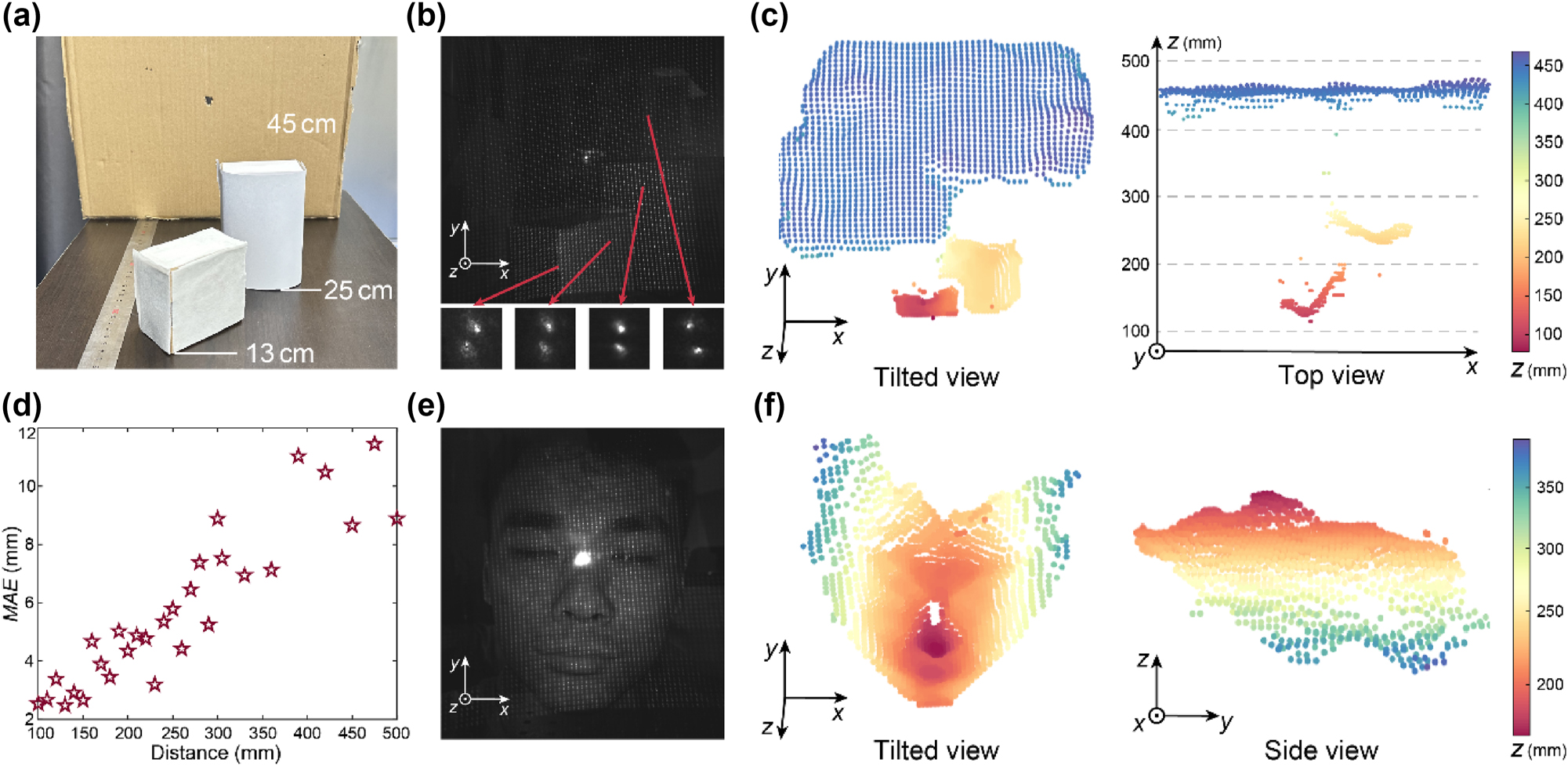

To demonstrate 3D imaging of real-world scenarios, we first set up a scene consisting of three cardboard boxes located at different distances, a scenario that may occur in industrial robotic operations, as shown in Figure 4a. Figure 4b shows the raw image captured by the proposed system. In the inset of Figure 4b, four double-helix patterns at different distances are magnified, showcasing differences in their rotation angles. Based on the experimentally calibrated relationship between the rotation angles of the double-helix patterns and distances, and a straightforward algorithm that calculates the rotation angle of the double-helix pattern of each dot, a 3D point cloud can be generated within 0.4 s from the raw measurement on a laptop computer with an Intel i7-10875H CPU and 16 GB RAM. As shown in Figure 4c, the calculated 3D point cloud accurately depicts the 3D distribution of the scene, including the individual shapes and precise absolute distances of each box. There are some less accurate stray points in the 3D point cloud, which could potentially be minimized by enhancing the signal-to-noise ratio of the imaging system and by optimizing the 3D reconstruction algorithm. The reconstruction can also be further accelerated when deploying to edge computation platforms, through operator optimization or hardware acceleration [49].
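The rotation-angle algorithm is not detailed in the text. One simple possibility, shown here purely as an assumption and not as the authors' method, is to take the principal-axis orientation of each dot's image patch from its intensity-weighted second moments. The function name `lobe_rotation_angle` is hypothetical, and background subtraction or thresholding of the patch is left out.

```python
import numpy as np

def lobe_rotation_angle(patch):
    """Orientation (degrees, in (-90, 90]) of a two-lobe pattern via image moments."""
    patch = np.asarray(patch, dtype=float)
    total = patch.sum()
    ys, xs = np.indices(patch.shape)
    x0 = (patch * xs).sum() / total       # intensity centroid
    y0 = (patch * ys).sum() / total
    mu20 = (patch * (xs - x0) ** 2).sum()
    mu02 = (patch * (ys - y0) ** 2).sum()
    mu11 = (patch * (xs - x0) * (ys - y0)).sum()
    # Principal-axis angle relative to the x (column) axis, rows as y.
    return np.degrees(0.5 * np.arctan2(2.0 * mu11, mu20 - mu02))
```

The returned angle covers a 180° span, matching the unambiguous rotation range of the pattern; because the dot centres are fixed in the image, each patch could be cropped at a precomputed location.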

Experimental demonstration of 3D imaging. (a) Photograph of a target scene consisting of three cardboard boxes. (b) The raw image captured by the proposed structured light system for the scene shown in panel (a). The red arrows point to the regions of the magnified dot patterns. (c) Tilted view (left panel) and top view (right panel) of the 3D point cloud of the scene shown in panel (a) generated by the baseline-free structured light 3D imaging system. (d) Quantitative test result of depth accuracy. The red stars are the measured mean absolute error (MAE) at each distance. (e) Raw image captured by our structured light system for a living human face (the face of the first author). (f) Tilted view (left panel) and side view (right panel) of the 3D point cloud of the scene shown in panel (e) generated by the baseline-free structured light 3D imaging system.

To quantitatively assess the depth measurement accuracy of the baseline-free structured light 3D imaging system, we place a flat cardboard plate at different distances and calculate the mean absolute error (MAE) between the measured depth value of each dot and the true depth value to characterize the distance measurement error. As shown in Figure 4d, the depth measurement errors are predominantly below 1 cm for all distances within the depth measuring range of 500 mm. We further calculate the relative depth measurement error of our system, defined as the distance measurement error Δz_obj divided by the true distance z_obj. The calculated relative depth measurement error is about 2.4 %, which is close to the within-2 % relative depth measurement error of typical commercial structured light products, such as the Intel® RealSense™ Depth Camera D435.
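The two error metrics defined above reduce to a few lines of arithmetic. In this sketch the function name `depth_errors` is hypothetical, and the inputs are assumed to be the per-dot depths measured on a flat plate together with the known plate distance.

```python
import numpy as np

def depth_errors(measured_mm, true_mm):
    """Return (MAE, relative error) for per-dot depths on a plate at true_mm."""
    measured = np.asarray(measured_mm, dtype=float)
    mae = np.mean(np.abs(measured - true_mm))   # mean absolute error, in mm
    return mae, mae / true_mm                   # relative error = MAE / distance
```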

A single-opening structured light system is ideally suited for space-constrained platforms. Here, 3D reconstruction of a living human face, which is a widely used function on smartphones, is demonstrated using the proposed system. We capture an image of a living human face located at distances between 20 and 40 cm, as shown in Figure 4e. The resulting 3D point cloud exhibits fine features of the human face, as shown in Figure 4f. Note that the zero-order light spot in Figure 4e has a measured power of less than 0.01 mW, which complies with the laser safety standard for human eyes [50], and could be substantially diminished by optimizing the fabrication process of the metasurface.

3 Conclusions

In summary, we have demonstrated a baseline-free structured light system for accurate and rapid 3D imaging of different real-world scenarios. By exploiting the ability of metasurfaces to manipulate the light field with subwavelength precision for the projection of a double-helix dot array, high-accuracy 3D imaging of complex scenes using a baseline-free structured light system is achieved.

The single-opening configuration enabled by the folded light path, which can be substantially shrunk in volume via the standard lens module assembly process [51], holds potential in various application scenarios that require miniaturization of the 3D imaging system, such as 3D face authentication of smartphones, robotic operations, and endoscopes. For application scenarios that are highly sensitive to the thickness of the 3D imaging system, such as smartphones, we envision that by adopting a 45° prism mirror that has been widely applied in periscope telephoto modules on smartphones [52] to rotate the optics by 90° in the housing, the thickness of the baseline-free single-opening structured light system may be further reduced.

The straightforward depth calculation algorithm, simpler than those of existing structured light techniques, may empower high frame-rate 3D imaging on various mobile platforms that can only carry limited computing resources. For applications requiring larger FOV, by using near-to-far field transformation methods that do not rely on the paraxial approximation in the Dammann grating phase design process, the FOV of our system may be expanded up to 180° [41], [53].

Similar to the effect of increasing the baseline length L in triangulation-based 3D structured light systems, increasing the diameter D of the metasurface projector in our baseline-free system can be used to increase the depth measurement accuracy and range. For instance, to maintain the same relative depth measurement error Δz_obj/z_obj at a distance 100 times greater, triangulation-based 3D structured light systems need a 100 times larger baseline length L, while our baseline-free system needs a 10 times larger diameter D of the metasurface projector [21]. Apart from the diameter D of the metasurface projector, the relatively low signal-to-noise ratio (SNR) of the captured image and the fabrication error of the metasurface are the other two factors that restrict the current accuracy of our system, and both can be improved in future engineering implementations. We anticipate that by applying a high-quality laser source with higher power to improve the SNR of our captured image, refining our metasurface fabrication process, and optimizing the metasurface design for better fabrication error tolerance, further improvement of our depth measurement accuracy can be realized. Such improvements in the 3D imaging range and accuracy of our system may help it to be adapted to an even broader range of application scenarios. Such a compact, accurate, and reliable 3D imaging solution may be useful for numerous application domains, including but not limited to consumer electronics, robot vision, autonomous driving, and biomedical imaging.
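The 100-fold versus 10-fold scaling quoted above is consistent with the following back-of-the-envelope argument, given here as a sketch under stated assumptions rather than the derivation of ref. [21]. For triangulation, the standard relation between depth error and disparity error Δd (camera focal length f) is used. For the double-helix projector, the rotation angle θ is assumed to be proportional to the defocus parameter, which scales as D²/λ times the change in inverse distance; Δθ denotes the angle measurement error.

```latex
\begin{align}
\text{triangulation:}\quad
  & \Delta z_{\mathrm{obj}} \approx \frac{z_{\mathrm{obj}}^{2}}{f L}\,\Delta d
  \;\Rightarrow\;
  \frac{\Delta z_{\mathrm{obj}}}{z_{\mathrm{obj}}} \propto \frac{z_{\mathrm{obj}}}{L}, \\
\text{double helix:}\quad
  & \left|\frac{\mathrm{d}\theta}{\mathrm{d}z_{\mathrm{obj}}}\right|
    \propto \frac{D^{2}}{\lambda z_{\mathrm{obj}}^{2}}
  \;\Rightarrow\;
  \frac{\Delta z_{\mathrm{obj}}}{z_{\mathrm{obj}}}
    \propto \frac{\lambda z_{\mathrm{obj}}}{D^{2}}\,\Delta\theta .
\end{align}
```

Holding the relative error fixed while the distance grows by a factor of 100 therefore requires L to grow by 100 in the first case, but D only by √100 = 10 in the second.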

- Research funding: National Key Research and Development Program of China (2023YFB2805800), Beijing Municipal Science & Technology Commission, the Administrative Commission of Zhongguancun Science Park (Z231100006023006), National Natural Science Foundation of China (62135008).

- Author contributions: All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

- Conflict of interest: Authors state no conflicts of interest.

- Informed consent: Informed consent was obtained from all individuals included in this study.

- Research ethics: The conducted research is not related to either human or animal use.

- Data availability: The simulated and experimental data that support this study are available from the corresponding author upon reasonable request.

References

[1] I. Kim, R. J. Martins, and J. Jang, “Nanophotonics for light detection and ranging technology,” Nat. Nanotechnol., vol. 16, no. 5, pp. 508–524, 2021, https://doi.org/10.1038/s41565-021-00895-3.

[2] C. Rogers, A. Y. Piggott, and D. J. Thomson, “A universal 3D imaging sensor on a silicon photonics platform,” Nature, vol. 590, no. 7845, pp. 256–261, 2021, https://doi.org/10.1038/s41586-021-03259-y.

[3] J. Wu, Y. Guo, and C. Deng, “An integrated imaging sensor for aberration-corrected 3D photography,” Nature, vol. 612, no. 7938, pp. 62–71, 2022, https://doi.org/10.1038/s41586-022-05306-8.

[4] X. Zhang, K. Kwon, J. Henriksson, J. Luo, and M. C. Wu, “A large-scale microelectromechanical-systems-based silicon photonics LiDAR,” Nature, vol. 603, no. 7900, pp. 253–258, 2022, https://doi.org/10.1038/s41586-022-04415-8.

[5] X. Huang, C. Wu, and X. Xu, “Polarization structured light 3D depth image sensor for scenes with reflective surfaces,” Nat. Commun., vol. 14, no. 1, 2023, https://doi.org/10.1038/s41467-023-42678-5.

[6] J. Geng, “Structured-light 3D surface imaging: a tutorial,” Adv. Opt. Photonics, vol. 3, no. 2, pp. 128–160, 2011, https://doi.org/10.1364/aop.3.000128.

[7] X. Huang, J. Bai, and K. Wang, “Target enhanced 3D reconstruction based on polarization-coded structured light,” Opt. Express, vol. 25, no. 2, pp. 1173–1184, 2017, https://doi.org/10.1364/oe.25.001173.

[8] T. Jia, X. Yuan, T. Gao, and D. Chen, “Depth perception based on monochromatic shape encode-decode structured light method,” Opt. Lasers Eng., vol. 134, p. 106259, 2020, https://doi.org/10.1016/j.optlaseng.2020.106259.

[9] Y. Boykov, O. Veksler, and R. Zabih, “Fast approximate energy minimization via graph cuts,” IEEE Trans. Pattern Anal. Machine Intel., vol. 23, no. 11, pp. 1222–1239, 2001, https://doi.org/10.1109/34.969114.

[10] B. Pan, H. Xie, Z. Wang, and K. Qian, “Study on subset size selection in digital image correlation for speckle patterns,” Opt. Express, vol. 16, no. 10, pp. 7037–7048, 2008, https://doi.org/10.1364/oe.16.007037.

[11] A. P. Pentland, “A new sense for depth of field,” IEEE Trans. Pattern Anal. Machine Intel., vol. 9, no. 4, pp. 523–531, 1987, https://doi.org/10.1109/tpami.1987.4767940.

[12] S. K. Nayar and Y. Nakagawa, “Shape from focus,” IEEE Trans. Pattern Anal. Machine Intel., vol. 16, no. 8, pp. 824–831, 1994, https://doi.org/10.1109/34.308479.

[13] S. Liu, F. Zhou, and Q. Liao, “Defocus map estimation from a single image based on two-parameter defocus model,” IEEE Trans. Image Process., vol. 25, no. 12, pp. 5943–5956, 2016, https://doi.org/10.1109/tip.2016.2617460.

[14] Q. Guo, Z. Shi, and Y.-W. Huang, “Compact single-shot metalens depth sensors inspired by eyes of jumping spiders,” Proc. Natl Acad. Sci. USA, vol. 116, no. 46, pp. 22959–22965, 2019, https://doi.org/10.1073/pnas.1912154116.

[15] H. Ikoma, C. M. Nguyen, and C. A. Metzler, “Depth from defocus with learned optics for imaging and occlusion-aware depth estimation,” in IEEE International Conference on Computational Photography, Haifa, Israel, IEEE, 2021, pp. 1–12, https://doi.org/10.1109/ICCP51581.2021.9466261.

[16] A. Greengard, Y. Y. Schechner, and R. Piestun, “Depth from diffracted rotation,” Opt. Lett., vol. 31, no. 2, pp. 181–183, 2006, https://doi.org/10.1364/ol.31.000181.

[17] S. R. P. Pavani, M. A. Thompson, and J. S. Biteen, “Three-dimensional, single-molecule fluorescence imaging beyond the diffraction limit by using a double-helix point spread function,” Proc. Natl Acad. Sci. USA, vol. 106, no. 9, pp. 2995–2999, 2009, https://doi.org/10.1073/pnas.0900245106.

[18] Y. Shechtman, L. E. Weiss, A. S. Backer, M. Y. Lee, and W. E. Moerner, “Multicolour localization microscopy by point-spread-function engineering,” Nat. Photonics, vol. 10, no. 9, pp. 590–594, 2016, https://doi.org/10.1038/nphoton.2016.137.

[19] S. Colburn and A. Majumdar, “Metasurface generation of paired accelerating and rotating optical beams for passive ranging and scene reconstruction,” ACS Photonics, vol. 7, no. 6, pp. 1529–1536, 2020, https://doi.org/10.1021/acsphotonics.0c00354.

[20] B. Ghanekar, V. Saragadam, and D. Mehra, “PS2F: polarized spiral point spread function for single-shot 3D sensing,” IEEE Trans. Pattern Anal. Machine Intel., pp. 1–12, 2022, https://doi.org/10.1109/TPAMI.2022.3202511.

[21] Z. Shen, F. Zhao, C. Jin, S. Wang, L. Cao, and Y. Yang, “Monocular metasurface camera for passive single-shot 4D imaging,” Nat. Commun., vol. 14, no. 1, p. 1035, 2023, https://doi.org/10.1038/s41467-023-36812-6.

[22] Z. Cao, N. Li, L. Zhu, J. Wu, Q. Dai, and H. Qiao, “Aberration-robust monocular passive depth sensing using a meta-imaging camera,” Light Sci. Appl., vol. 13, no. 1, p. 236, 2024, https://doi.org/10.1038/s41377-024-01609-9.

[23] N. Yu, P. Genevet, and M. A. Kats, “Light propagation with phase discontinuities: generalized laws of reflection and refraction,” Science, vol. 334, no. 6054, pp. 333–337, 2011, https://doi.org/10.1126/science.1210713.

[24] N. Yu and F. Capasso, “Flat optics with designer metasurfaces,” Nat. Mater., vol. 13, no. 2, pp. 139–150, 2014, https://doi.org/10.1038/nmat3839.

[25] Y. Yang, W. Wang, P. Moitra, I. I. Kravchenko, D. P. Briggs, and J. Valentine, “Dielectric meta-reflectarray for broadband linear polarization conversion and optical vortex generation,” Nano Lett., vol. 14, no. 3, pp. 1394–1399, 2014, https://doi.org/10.1021/nl4044482.

[26] Y. Yang, I. I. Kravchenko, D. P. Briggs, and J. Valentine, “All-dielectric metasurface analogue of electromagnetically induced transparency,” Nat. Commun., vol. 5, no. 1, p. 5753, 2014, https://doi.org/10.1038/ncomms6753.

[27] A. Arbabi, Y. Horie, M. Bagheri, and A. Faraon, “Dielectric metasurfaces for complete control of phase and polarization with subwavelength spatial resolution and high transmission,” Nat. Nanotechnol., vol. 10, no. 11, pp. 937–943, 2015, https://doi.org/10.1038/nnano.2015.186.

[28] J. Engelberg and U. Levy, “The advantages of metalenses over diffractive lenses,” Nat. Commun., vol. 11, no. 1, p. 1991, 2020, https://doi.org/10.1038/s41467-020-15972-9.

[29] M. Liu, W. Zhu, and P. Huo, “Multifunctional metasurfaces enabled by simultaneous and independent control of phase and amplitude for orthogonal polarization states,” Light Sci. Appl., vol. 10, no. 1, p. 107, 2021, https://doi.org/10.1038/s41377-021-00552-3.

[30] S.-W. Moon, C. Lee, and Y. Yang, “Tutorial on metalenses for advanced flat optics: design, fabrication, and critical considerations,” J. Appl. Phys., vol. 131, no. 9, 2022, https://doi.org/10.1063/5.0078804.

[31] Y. Yang, J. Seong, and M. Choi, “Integrated metasurfaces for re-envisioning a near-future disruptive optical platform,” Light Sci. Appl., vol. 12, no. 1, p. 152, 2023, https://doi.org/10.1038/s41377-023-01169-4.

[32] S. So, J. Mun, J. Park, and J. Rho, “Revisiting the design strategies for metasurfaces: fundamental physics, optimization, and beyond,” Adv. Mater., vol. 35, no. 43, p. 2206399, 2023, https://doi.org/10.1002/adma.202206399.

[33] Y. Yang, H. Kang, and C. Jung, “Revisiting optical material platforms for efficient linear and nonlinear dielectric metasurfaces in the ultraviolet, visible, and infrared,” ACS Photonics, vol. 10, no. 2, pp. 307–321, 2023, https://doi.org/10.1021/acsphotonics.2c01341.

[34] J. Seo, J. Jo, and J. Kim, “Deep-learning-driven end-to-end metalens imaging,” Adv. Photonics, vol. 6, no. 6, p. 066002, 2024, https://doi.org/10.1117/1.ap.6.6.066002.

[35] Q. Guo, Z. Shi, and Y. W. Huang, “Compact single-shot metalens depth sensors inspired by eyes of jumping spiders,” Proc. Natl Acad. Sci. USA, vol. 116, no. 46, pp. 22959–22965, 2019, https://doi.org/10.1073/pnas.1912154116.

[36] C. Jin, M. Afsharnia, and R. Berlich, “Dielectric metasurfaces for distance measurements and three-dimensional imaging,” Adv. Photonics, vol. 1, no. 3, p. 036001, 2019, https://doi.org/10.1117/1.ap.1.3.036001.

[37] R. J. Lin, V. C. Su, and S. Wang, “Achromatic metalens array for full-colour light-field imaging,” Nat. Nanotechnol., vol. 14, no. 3, pp. 227–231, 2019, https://doi.org/10.1038/s41565-018-0347-0.

[38] Y. Ni, S. Chen, Y. Wang, Q. Tan, S. Xiao, and Y. Yang, “Metasurface for structured light projection over 120° field of view,” Nano Lett., vol. 20, no. 9, pp. 6719–6724, 2020, https://doi.org/10.1021/acs.nanolett.0c02586.

[39] J. Park, B. G. Jeong, and S. I. Kim, “All-solid-state spatial light modulator with independent phase and amplitude control for three-dimensional LiDAR applications,” Nat. Nanotechnol., vol. 16, no. 1, pp. 69–76, 2021, https://doi.org/10.1038/s41565-020-00787-y.

[40] G. Kim, Y. Kim, and J. Yun, “Metasurface-driven full-space structured light for three-dimensional imaging,” Nat. Commun., vol. 13, no. 1, p. 5920, 2022, https://doi.org/10.1038/s41467-022-32117-2.

[41] E. Choi, G. Kim, J. Yun, Y. Jeon, J. Rho, and S. H. Baek, “360° structured light with learned metasurfaces,” Nat. Photonics, vol. 18, pp. 848–855, 2024, https://doi.org/10.1038/s41566-024-01450-x.

[42] Z. Zhang, Q. Sun, A. Qu, M. Yang, and Z. Li, “Metasurface-enabled 3D imaging via local bright spot gray scale matching using the structured light dot array,” Opt. Lett., vol. 49, no. 21, pp. 6325–6328, 2024, https://doi.org/10.1364/ol.538443.

[43] C. H. Chang, W. C. Hsu, and C. T. Chang, “Harnessing geometric phase metasurfaces to double the field of view in polarized structured light projection for depth sensing,” IEEE Photonics J., vol. 16, no. 4, pp. 1–6, 2024, https://doi.org/10.1109/jphot.2024.3429348.

[44] C. Li, X. Li, and C. He, “Metasurface-based structured light sensing without triangulation,” Adv. Opt. Mater., vol. 12, no. 7, p. 2302126, 2024, https://doi.org/10.1002/adom.202302126.

[45] S. Prasad, “Rotating point spread function via pupil-phase engineering,” Opt. Lett., vol. 38, no. 4, pp. 585–587, 2013, https://doi.org/10.1364/ol.38.000585.Search in Google Scholar PubMed

[46] R. Berlich and S. Stallinga, “High-order-helix point spread functions for monocular three-dimensional imaging with superior aberration robustness,” Opt. Express, vol. 26, no. 4, pp. 4873–4891, 2018, https://doi.org/10.1364/oe.26.004873.Search in Google Scholar

[47] C. Jin, J. Zhang, and C. Guo, “Metasurface integrated with double-helix point spread function and metalens for three-dimensional imaging,” Nanophotonics, vol. 8, no. 3, pp. 451–458, 2019, https://doi.org/10.1515/nanoph-2018-0216.

[48] R. W. Gerchberg, “A practical algorithm for the determination of phase from image and diffraction plane pictures,” Optik, vol. 35, pp. 237–246, 1972.

[49] M. Psarakis, A. Dounis, A. Almabrok, S. Stavrinidis, and G. Gkekas, “An FPGA-based accelerated optimization algorithm for real-time applications,” J. Signal Process. Syst., vol. 92, no. 10, pp. 1155–1176, 2020, https://doi.org/10.1007/s11265-020-01522-5.

[50] D. H. Sliney, “Laser safety,” Laser Surg. Med., vol. 16, no. 3, pp. 215–225, 1995, https://doi.org/10.1002/lsm.1900160303.

[51] Y. Zhang, X. Song, and J. Xie, “Large depth-of-field ultra-compact microscope by progressive optimization and deep learning,” Nat. Commun., vol. 14, no. 1, p. 4118, 2023, https://doi.org/10.1038/s41467-023-39860-0.

[52] V. Blahnik and O. Schindelbeck, “Smartphone imaging technology and its applications,” Adv. Opt. Technol., vol. 10, no. 3, pp. 145–232, 2021, https://doi.org/10.1515/aot-2021-0023.

[53] Z. Li, Q. Dai, and M. Q. Mehmood, “Full-space cloud of random points with a scrambling metasurface,” Light: Sci. Appl., vol. 7, no. 1, p. 63, 2018, https://doi.org/10.1038/s41377-018-0064-3.

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.

