Abstract

The mechanization of farming is one of the most pressing problems facing humanity and a burgeoning academic field. Over the last decade, Internet of Things (IoT) applications in agriculture have grown explosively. Agricultural robots are bringing about a new era of farming because they are becoming more intelligent, recognizing sources of variation on the farm, consuming fewer resources, and adapting efficiently to more flexible jobs. The purpose of this article is to construct an IoT-Fog computing-equipped robotic system for classifying weeds and soy plants during both the hazy season and the normal season. The dataset used in this article includes four classes: soil, soybean, grass, and weeds. A two-dimensional Convolutional Neural Network (2D-CNN)-based deep learning (DL) approach was implemented for image classification, with input images of 150 × 150 pixels and three channels. The proposed system is an IoT-connected robotic device that can apply classification through an Internet-connected server. Edge-based Fog computing also enhances the reliability of the device. Hence, the proposed robotic system can apply DL classification through both IoT and Fog computing architectures. The analysis of the proposed system was conducted in steps: training and testing of the CNN for classification, validation on normal images, validation on hazy images, application of the dehazing technique, and finally validation on dehazed images. The training and validation parameters indicate 97% accuracy in classifying weeds and crops in a hazy environment. The article concludes that applying the dehazing technique before identifying soy crops in adverse weather helps achieve a higher classification score.

1 Introduction

Agriculture is an essential part of the global economy: it is both a major source of revenue and an essential link in the global food supply chain, which makes it one of the most important industries in the world. The Internet of Things (IoT) is quickly becoming ingrained in our routines, and the number of devices that can be connected is continuously growing. Digital farming, which combines novel strategies with cutting-edge technology within a single framework, gives farmers and other organizations a reliable and fast technique for monitoring plants in real time [1]. The goal of smart agriculture is to apply supplemental technological innovations to agricultural production processes to decrease waste and increase output. Intelligent farms therefore use technical resources that assist in many phases of the production process, including crop surveillance, agronomic practices, conservation measures, pest management, and delivery tracking. Farming robotics is a promising answer for digital farming and for resolving worker shortages and diminishing profitability [2].

Agricultural field robots have evolved into an integral aspect of future vegetable and crop cultivation [3]. They contribute to an increase in the accuracy of operations, the health of the soil, and the yield. Typically, they come equipped with two or more sensors and cameras for navigational monitoring, simultaneous mapping, localization, and algorithmic route planning. The process of digital farming includes the collection of land and weather data using ground or aerial sensors, the transmission of these data to a central advisory unit, the analysis and retrieval of information, and the provision of recommendations and actions to farmers, field robots, or agro-industrial companies.

With the number of connected units possibly in the millions, IoT systems often generate enormous quantities of data. Transferring all of this information over the Internet uses up bandwidth and places a burden on the cloud storage system. In massive IoT applications, fog computing is a potentially game-changing technique that can remove networking and computational roadblocks. It is seen as an innovative approach to processing significant amounts of time-sensitive and essential data.

Weeds are plants that should not be allowed to grow on agricultural land because they are a nuisance and frequently compete for, and take up most of, the available sunshine, space, water, and soil nutrients. Many environmental and social factors must be considered. Among these factors, invasive species are the major biotic constraint on agricultural production. New methods are being developed to address the way weeds diminish the quality and quantity of yields, as part of an effort to increase the soybean harvest.

Poor visibility occurs frequently during hazy or foggy weather and can substantially affect the accuracy of agricultural vision systems. The conventional dehazing algorithm was modified to improve stability and reliability in hazy conditions and to correct the dim illumination and oversaturation that it produces in restored images on hazy days. Techniques such as a dehazing system may make it possible to see more clearly, although the benefit depends on how hazy the weather is [4].

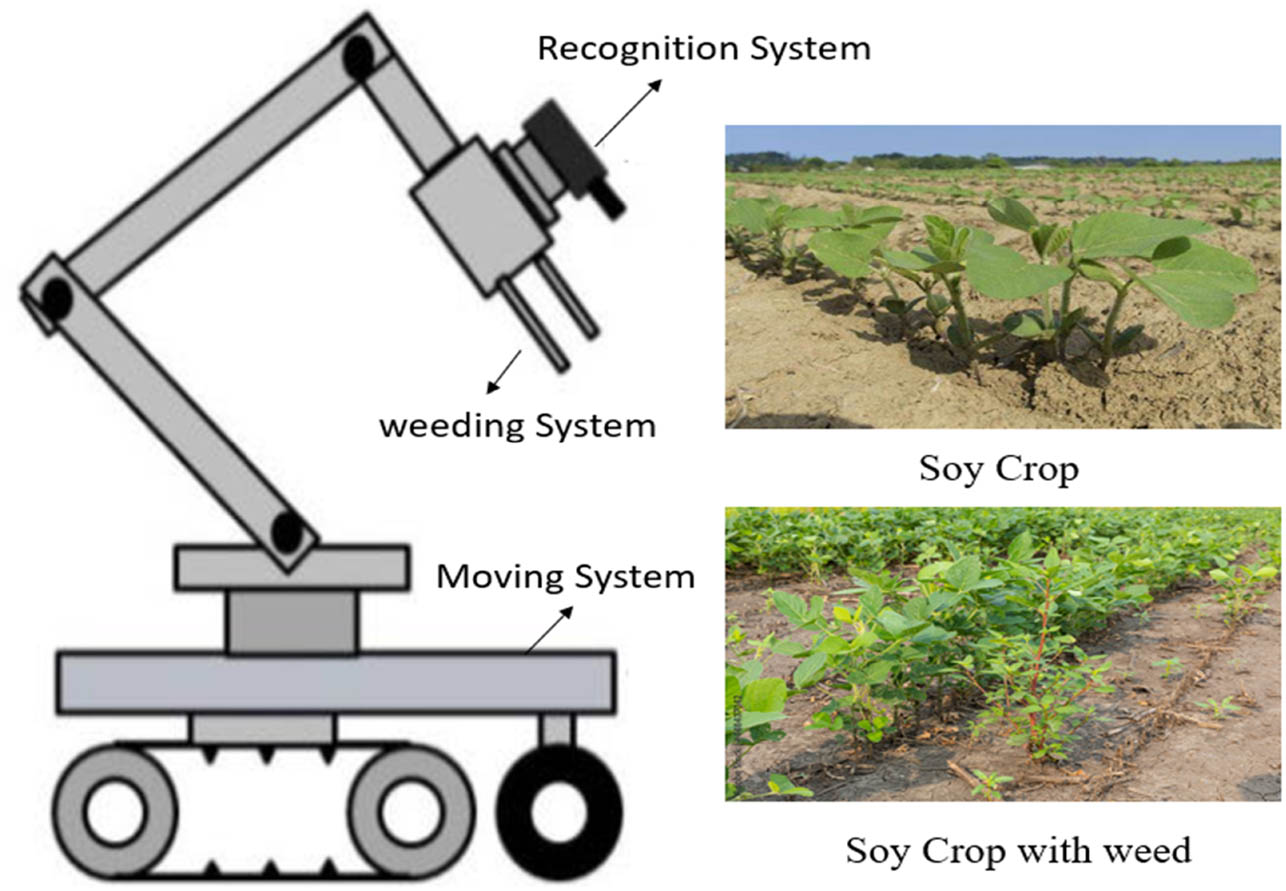

Soybean cultivation is growing day by day because soybean is a good source of vegan protein. The production of such crops depends on the precise removal of weeds. This study aims to implement IoT-Fog computing-enabled robotic systems and imaging in smart agriculture. As shown in Figure 1, the authors have implemented an IoT-Fog computing-enabled robotic system for the classification of weeds and soy plants during hazy and normal seasons. The proposed system is an IoT-connected robotic device that can apply classification through an Internet-connected server. Edge-based Fog computing also enhances the reliability of the device. Hence, the proposed robotic system can apply deep learning (DL) classification through both IoT and Fog computing architectures. The analysis of the proposed system was conducted in steps: training and testing of the CNN for classification, validation on normal images, validation on hazy images, application of the dehazing technique, and finally validation on dehazed images. The remainder of this article is organized as follows. Section 2 presents existing literature on weed sensing systems. Section 3 details the methodology used for the experiment. Section 4 discusses the results for IoT-Fog-enabled robotics-based robust classification of hazy and normal season agricultural images for weed detection. Section 5 presents the conclusions.

IoT-Fog computing-enabled robotic system for the classification of weeds and soy plants.

2 Literature survey

Earlier research on site-specific weed control centered on real-time sensing and spraying systems. Because of their limited computational power and consequently slow operation, these systems could not cover large areas. Earlier research on weed sensing extracted features from digital images to differentiate between crops and weed species. Color-based indices proved effective at distinguishing plant pixels from the soil background but struggled to distinguish crops from weeds. Texture features, which capture the spatial variation of pixel intensities, and shape features such as roundness and perimeter could differentiate between broadleaf and grassy plants but could not differentiate between the many species of weeds. Traditional machine learning (ML) methods, including random forest and the Support Vector Machine, have been used for weed detection. These techniques require human intervention in feature design, and their success depends heavily on the quality of image acquisition, pre-processing, and feature extraction.
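As an illustration of the color-based indices mentioned above, the following minimal sketch computes the Excess Green index (2g − r − b on chromatic coordinates), one commonly used vegetation index. The choice of this particular index, the function names, and the threshold value are assumptions made for illustration; they are not taken from the surveyed works.

```python
import numpy as np


def excess_green(rgb):
    """Return the Excess Green index (2g - r - b) per pixel of an RGB image."""
    rgb = np.asarray(rgb, dtype=np.float64)
    total = rgb.sum(axis=-1, keepdims=True)
    # Chromatic coordinates; black pixels (total == 0) are left at zero.
    chroma = np.divide(rgb, total, out=np.zeros_like(rgb), where=total > 0)
    r, g, b = chroma[..., 0], chroma[..., 1], chroma[..., 2]
    return 2.0 * g - r - b


def vegetation_mask(rgb, threshold=0.1):
    """Mark pixels whose Excess Green value exceeds an illustrative threshold."""
    return excess_green(rgb) > threshold


# Toy 1 x 2 image: one green (plant-like) pixel and one brown (soil-like) pixel.
toy = np.array([[[40, 160, 30], [120, 85, 60]]], dtype=np.uint8)
mask = vegetation_mask(toy)
```

Such an index separates vegetation from soil but, as noted above, does not by itself tell a crop pixel from a weed pixel, since both are green.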

Zhu and Zhu proposed a classification system based on the shape and texture of five types of weeds found on farmland [5]. Hung et al. proposed a learning-based strategy that uses feature learning to reduce the manual effort required for classifying invasive plant species. Small unmanned aerial vehicles (UAVs) suit this purpose because they collect data with good spatial resolution, which is essential for categorizing weed outbreaks that are localized or limited in scope [6]. In a study by Kiani and Seyyedabbasi, precision agriculture with IoT divided the field into blocks, and sensors collected data on the temperature, humidity, and moisture content in each block. As a result, the farmers were able to cultivate and maintain their fields effectively [1]. Dankhara et al. studied weed identification using selective herbicide, examined several approaches for precise and reliable weed detection in agriculture, and presented an IoT-based smart weed detection architecture [7]. Kulkarni et al. proposed IoT-based weed detection using a CNN and image processing. A trained CNN model was deployed on a Raspberry Pi, which received images from a camera. The Raspberry Pi segments each image into smaller pieces using the watershed segmentation algorithm. Each segment is passed to the trained CNN model, which categorizes it as crop or weed [8].

Typically, computer vision systems consist of two key components. The first recognizes crops and determines their traits, while the second classifies them. Using computer vision to detect weeds in fields typically requires either traditional image recognition or DL. With traditional image-processing techniques, image characteristics such as color, texture, and size are extracted during the weed detection phase. Wu et al. reviewed weed identification techniques from recent years, analyzed the benefits and drawbacks of existing methods, and introduced related plant leaf and weed datasets as well as weeding equipment [9]. Lu and Young examined public image datasets for precision agriculture, comprising 9 datasets on various uses, 10 datasets on fruit classification, and 15 datasets on weed control [10].

As computing power and data volume continue to grow, the improved image representation capabilities of Convolutional Neural Networks (CNNs) make it possible for DL techniques to extract discretized, multidimensional spatial semantic feature descriptions of weeds. This allows these algorithms to avoid the limitations of traditional feature extraction methods, and they have consequently attracted the attention of more researchers. Rakhmatulin et al. presented an in-depth analysis of intelligence-based plant detection techniques, with a particular emphasis on current advancements in DL. Their review sheds light on the overall capabilities, utility, and performance of several DL approaches. They found that the rapid adoption of such intelligence in commercial processes is hampered by a variety of barriers, and they offered suggestions for addressing and overcoming these challenges [11].

Kamilaris and Prenafeta-Boldú examined the differences in performance between DL and other well-established methods currently employed for regression and classification. According to their findings, DL outperforms other currently popular image-processing techniques in terms of accuracy [12]. Hasan et al. reviewed the DL-based methods of weed recognition and classification currently in use. They discuss four key processes in some detail: data collection, dataset creation, DL approaches used for detection, localization, and classification of weeds in farms, and evaluation metrics. They report that pre-trained models can be fine-tuned on any plant dataset to attain good classification accuracy [13]. Yu et al. developed a deep CNN to detect spotted spurge, ground ivy, and daisy in perennial ryegrass [14].

Table 1 summarizes prior work on the detection of agricultural weeds using ML, IoT technology, computer vision, and DL.

Literature survey of weed detection based on Traditional ML, IoT, Computer Vision, and DL technologies

| Ref. | Authors | Purpose | ML | IoT | CV | DL |
|---|---|---|---|---|---|---|
| [5] | Zhu and Zhu | The application SVM in weed classification | ✔ | ✘ | ✘ | ✘ |
| [6] | Hung et al. | Method for the classification of weeds that makes use of high-resolution aerial photos | ✔ | ✘ | ✘ | ✘ |
| [1] | Kiani and Seyyedabbasi | WSN and IoT in precision agriculture | ✘ | ✔ | ✘ | ✘ |
| [7] | Dankhara et al. | An investigation of reliable methods for weed detection based on the IoT | ✘ | ✔ | ✘ | ✘ |
| [8] | Kulkarni et al. | IoT-Based Weed Detection Made Possible Through the Use of Image Analysis and CNN | ✘ | ✔ | ✘ | ✘ |
| [9] | Wu et al. | A review of various weed detection techniques that rely on computer vision | ✘ | ✘ | ✔ | ✘ |
| [10] | Lu and Young | A survey of datasets available to the public for use in computer vision applications related to precision agriculture | ✘ | ✘ | ✔ | ✘ |
| [11] | Rakhmatulin et al. | Real-Time Weed Detection from Crops Using DNN Technology in Agricultural Environments | ✘ | ✘ | ✘ | ✔ |
| [12] | Kamilaris and Prenafeta-Boldú | The application of DL techniques in agricultural production | ✘ | ✘ | ✘ | ✔ |
| [13] | Hasan et al. | Techniques based on DL for the identification of unwanted weeds in the image | ✘ | ✘ | ✘ | ✔ |
| [14] | Yu et al. | The Use of DL for Weed Identification in Perennial Ryegrass CNN | ✘ | ✘ | ✘ | ✔ |

✔ indicates the presence of technology and ✘ indicates the absence of technology.

ML, machine learning; IoT, Internet of Things; DL, deep learning; CNN, convolutional neural network; DNN, deep neural network; WSN, wireless sensor network; SVM, support vector machine; CV, computer vision.

3 Proposed work

Because of the ever-increasing demand for agricultural goods and the ongoing need to improve the plantation system, the agricultural and academic communities have spent decades working on the removal of invasive plants. The level of human engagement and labor required for various agricultural tasks could be dramatically reduced if the agricultural industry adopted intelligent autonomous robots that make use of the IoT.

Figure 2 illustrates the proposed method. First, the existing dataset is used to train the ML model through transfer learning on cropped photographs. The challenge is to determine whether the model can correctly identify or classify the images. To test this, live hazy video is captured, and hazy frames are extracted from the video. The model is first evaluated on these frames. Because haze reduces the clarity of the frames, the haze must be removed from them. The images are dehazed using a technique known as the dark channel prior (DCP). Transmission and global atmospheric light are the two parameters of the haze model that the DCP technique estimates [16]. Once these parameters have been obtained, the dehazed images are produced, and the framework is validated on them.

Schematic representation of the suggested technique.

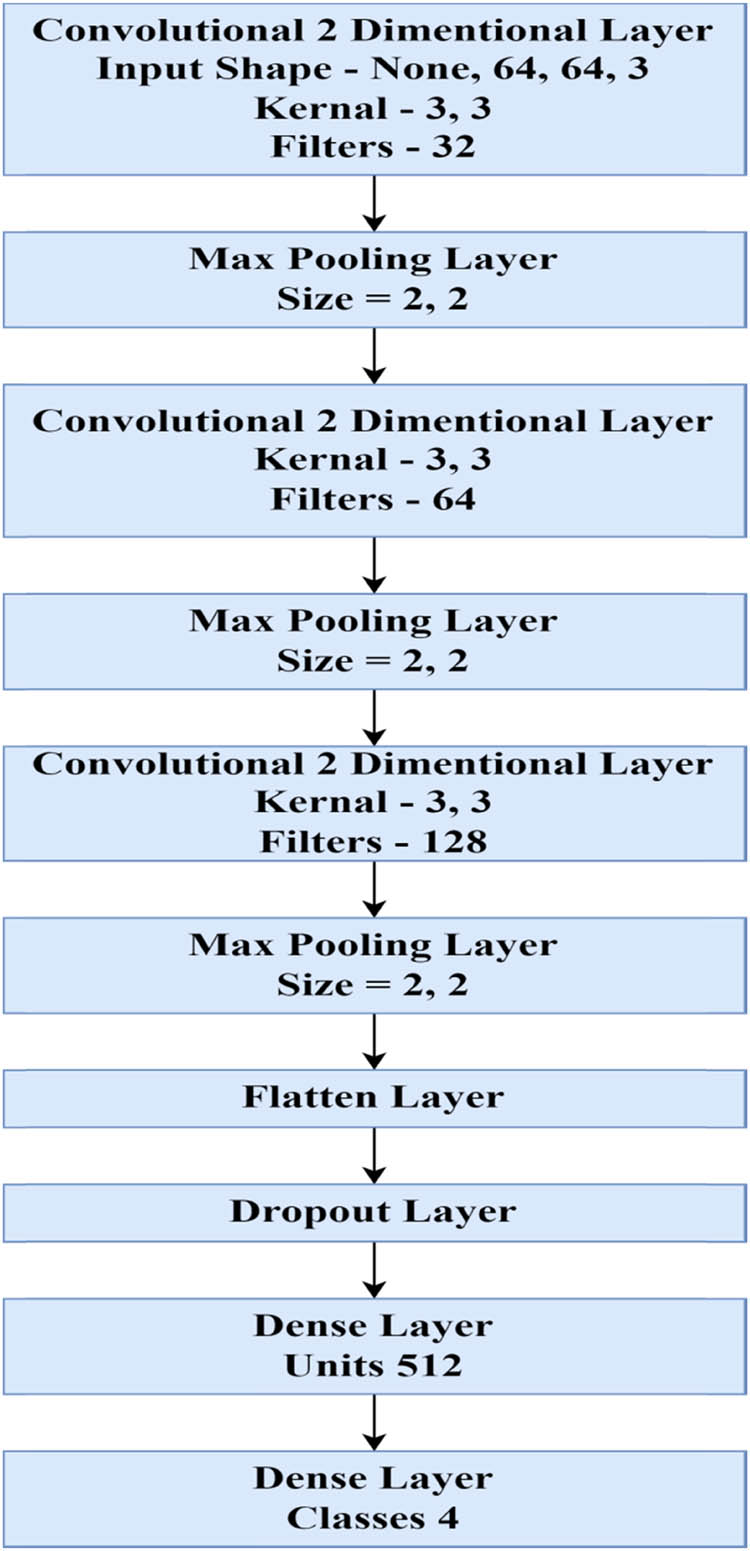

Figure 3 shows the architecture of each neural network used, along with the parameter values of the network configuration. In this article, a 2D CNN was applied and analyzed.

Architecture of each neural network used.
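To make the effect of the 150 × 150 × 3 input concrete, the sketch below traces feature-map sizes and convolution parameter counts through a small layer stack. The stack itself (three 3 × 3 convolutions with 32, 64, and 128 filters, each followed by 2 × 2 max pooling) is a hypothetical example chosen for illustration and is not the configuration shown in Figure 3.

```python
def layer_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d_params(in_channels, out_channels, kernel):
    """Trainable parameters of a 2D convolution with square kernels and biases."""
    return (kernel * kernel * in_channels + 1) * out_channels


# Hypothetical stack, NOT the architecture of Figure 3.
size, channels = 150, 3          # input: 150 x 150 pixels, three channels
shapes = []
total_params = 0
for filters in (32, 64, 128):
    size = layer_output_size(size, kernel=3)             # unpadded 3x3 convolution
    total_params += conv2d_params(channels, filters, kernel=3)
    size = layer_output_size(size, kernel=2, stride=2)   # 2x2 max pooling
    channels = filters
    shapes.append((size, size, channels))

flattened_features = size * size * channels
```

Under these assumptions the feature maps shrink from 150 × 150 to 17 × 17 before flattening, which is the kind of bookkeeping needed to read an architecture diagram such as Figure 3.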

3.1 Dehazing algorithm

This section describes an algorithm for obtaining a dehazed image $N$ from a hazy image $F$ using the parameters of Eq. (1) with the help of the DCP method [16].

1. Obtain image $F'$ by extracting 25% of the image rows of $F$. Find the dark channel image $F'_{\text{dark}}$ from $F'$ by using the DCP method. Finally, obtain $L$ (global atmospheric light) from $F'_{\text{dark}}$ by using the DCP method.
2. Find $F_{\text{dark}}$ from the normalized hazy image $F^{c}(z)/L^{c}$ and obtain the transmission map $s$ by using DCP.
3. Obtain the dehazed image $N^{c}_{0}$ from $F$, $L^{c}$, and $s$ by using DCP.
4. Obtain the post-processed image $N^{c\prime}$ from $N^{c}$ by using DCP.
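The first three steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the patch size, the weight `omega`, the lower bound `s_min`, the use of the top rows for the 25% row extraction, and all function names are assumptions, and the post-processing step is omitted. Images are expected as floating-point arrays with values in [0, 1].

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image, patch=15):
    """Minimum over color channels, then over a patch x patch window (odd patch)."""
    min_rgb = image.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))


def atmospheric_light(image, patch=15, row_fraction=0.25, top_fraction=0.001):
    """Estimate L from the brightest dark-channel pixels of the top image rows."""
    rows = max(1, int(round(image.shape[0] * row_fraction)))
    region = image[:rows]                      # assumption: the top 25% of rows
    dark = dark_channel(region, patch)
    count = max(1, int(dark.size * top_fraction))
    brightest = np.argsort(dark.ravel())[-count:]
    return region.reshape(-1, 3)[brightest].mean(axis=0)


def transmission(image, light, patch=15, omega=0.95):
    """Transmission map s from the dark channel of the normalized image F / L."""
    return 1.0 - omega * dark_channel(image / light, patch)


def dehaze(image, patch=15, omega=0.95, s_min=0.1):
    """Recover a dehazed image from a hazy one (post-processing not included)."""
    image = np.asarray(image, dtype=np.float64)
    light = np.maximum(atmospheric_light(image, patch), 1e-6)
    s = np.clip(transmission(image, light, patch, omega), s_min, 1.0)
    restored = (image - light) / s[..., None] + light
    return np.clip(restored, 0.0, 1.0)


# Synthetic example: a random "clear" scene degraded with uniform haze.
rng = np.random.default_rng(0)
clear = rng.random((32, 32, 3))
hazy = clear * 0.6 + 0.9 * (1.0 - 0.6)
restored = dehaze(hazy)
```

Restricting the atmospheric-light search to a fraction of the rows reduces the cost of that step; whether the top rows are the right choice depends on the camera geometry of the robot, which this sketch does not model.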

When the weather is hazy and misty, for example, the view of automated-driving camera systems becomes obscured. This results in ambiguous refraction and glare, which make it difficult for modern self-driving vehicles to function properly. Because of this, dehazing has become an increasingly favored strategy for both contrast enhancement and video-processing tasks. Advances in dehazing will then benefit a wide variety of emerging application fields, such as video surveillance and autonomous or assisted driving [15]. The atmospheric scattering model is frequently used to describe haze, which is the underlying cause of the poor resolution and color fading of hazy images. This scattering model is central to computer vision and plays a significant role in haze identification.

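In Eq. (1), F(z) denotes the observed hazy image, N(z) the haze-free image to be recovered, s(z) the transmission map, and L the global atmospheric light.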
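For reference, the haze imaging model used in the DCP literature [16] can be written with this section's symbols as shown below, together with the DCP transmission estimate and the recovery formula that follows from inverting the model. That Eq. (1) takes exactly this form is an assumption, since the equation itself is not reproduced in the text; the window $\Omega(z)$, the weight $\omega$, and the lower bound $s_0$ are the usual DCP quantities rather than values stated in this article.

```latex
% Haze imaging model (assumed form of Eq. (1)), per color channel c
F^{c}(z) = N^{c}(z)\, s(z) + L^{c}\,\bigl(1 - s(z)\bigr), \qquad c \in \{r, g, b\}

% DCP estimate of the transmission map over a local window \Omega(z)
s(z) = 1 - \omega \min_{y \in \Omega(z)} \, \min_{c} \frac{F^{c}(y)}{L^{c}}

% Recovery of the haze-free image, with s_0 bounding the transmission from below
N^{c}(z) = \frac{F^{c}(z) - L^{c}}{\max\bigl(s(z),\, s_0\bigr)} + L^{c}
```

The lower bound $s_0$ prevents division by a near-zero transmission in dense haze, where the recovered image would otherwise be dominated by noise.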

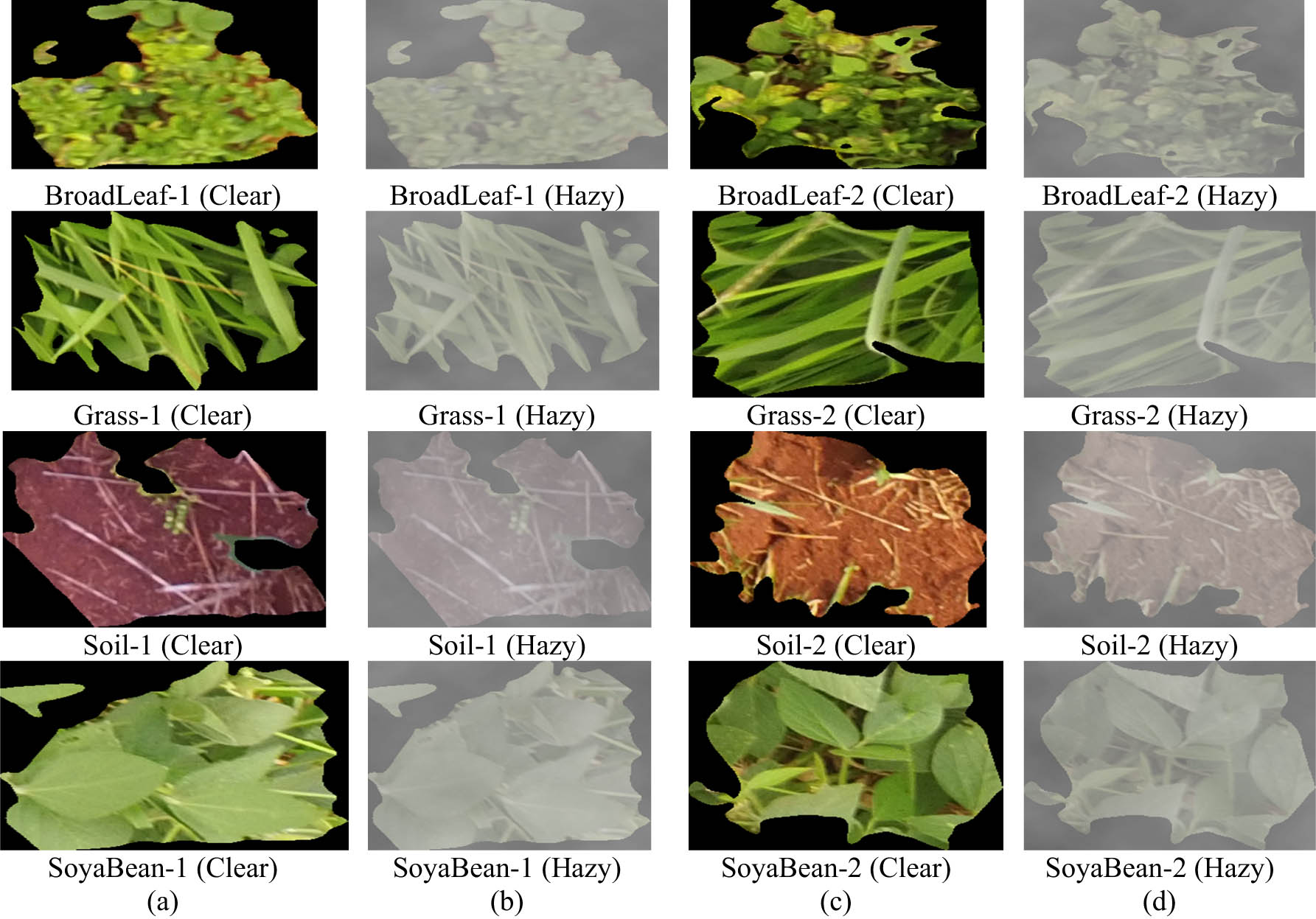

(a and c) Clear images of broadleaf, grass, soil, and soybean; (b and d) hazy images corresponding to images shown in (a and c).

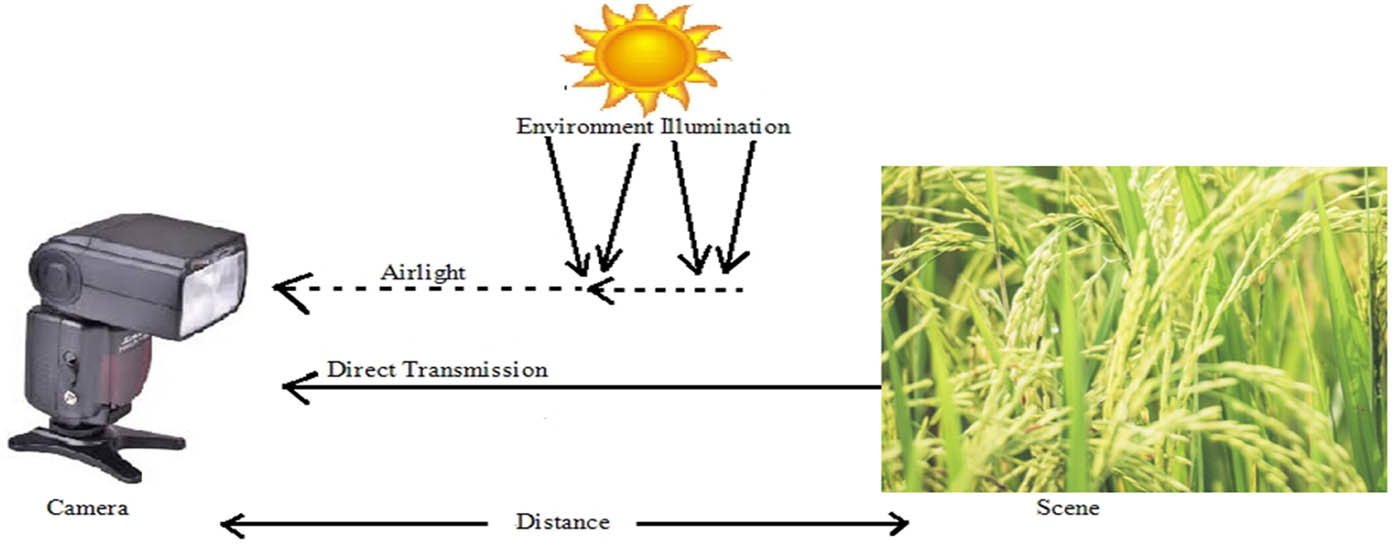

Owing to the formation of haze in the atmosphere, images taken in outdoor settings frequently exhibit poor visibility, diminished contrast, color shifts, and faded surfaces. Haze, which is exacerbated by airborne particulates such as dust, thick haze, and smoke, tends to add noise to images, making haze removal a particularly difficult image reconstruction and enhancement problem. In addition, many image processing algorithms are effective only on the luminance of a haze-free scene. However, a reliable and consistent vision system must account for the full spectrum of degradation processes in unconstrained environments. Figure 4 depicts the optical scattering occurring within the atmosphere.

Image dehazing aims to derive a clear image N(z) from a hazy image F(z). The problem is ill-posed because only F(z) is known. Several techniques [15,16,17,18] study the properties of clear images to construct efficient image priors for estimating the transmission map s(z). Because these priors rely on statistical assumptions and often do not hold in challenging scenarios, a few data-driven strategies [19,20,21,22] have been proposed. These algorithms estimate the transmission map s(z) using a traditional ML approach or fully convolutional neural networks. After estimating s(z), the above-mentioned methods assume that the atmospheric light is the same everywhere and then use this assumption to recover the clear image via Eq. (1). Although satisfactory results have been achieved, estimating s(z) and the atmospheric light separately can introduce errors that cause color distortions in the results. Numerous methods [23,24,25,26,27] build end-to-end learnable networks that compute sharp images directly from hazy images. Physical model-based dehazing techniques, such as refs [28,29,30], can nevertheless produce superior results: image dehazing is a severely ill-posed problem that usually needs priors or careful design to become well-posed, and a feed-forward network that directly estimates a clear image from a hazy one may struggle to capture the relationship between the two. To make haze removal easier, it is important to understand what characterizes a hazy image.

The device was designed to ensure that crops and weeds are distinguished on hazy days. It implements crop detection surveillance and image analysis in real time using machine intelligence, transfer learning, and DL [31,32]. Its operation depends on two distinct predetermined datasets, i.e., with and without weeds. Each output is then generated by comparing these datasets to the inputs. The ML algorithms are implemented in Python scripts using Keras, Weka, and OpenCV to optimize throughput and efficiency. The Deep Neural Network and Recurrent Neural Network applications of the previous device fell short of the requirements [33] because they lacked precision, effectiveness, and throughput [34]. The present scheme is intended to meet these requirements and would assist farmers in identifying crops and weeds in hazy conditions [35,36].

4 Experimentation and discussion

Initially, DL models were trained on an acquired dataset of crop images. The acquired images were split into training and test sets in five-fold ratios to train and test the DL models. Applying haze removal algorithms improved the metrics, raising accuracy and the other parameters to 97%; haze removal therefore helps improve the accuracy parameters. The UAV took a series of photos, of which only those showing weeds were chosen, giving a total of 400 photographs. These photos were segmented with the Pynovisão software using the SLIC algorithm, and the segments were manually labeled with their corresponding classes. These segments were used to build the image dataset. The dataset contains 15,336 segments: 3,249 of soil, 7,376 of soybean, 3,520 of grass, and 1,191 of broadleaf weeds [37]. The steps involved in weed detection in soybean crops are shown in Figure 5 and are as follows:

1. train and validate classification using a CNN with input image size 150 × 150;
2. test on basic testing images [37];
3. add haze to images using basic blur, Gaussian blur, and box blur;
4. test on hazy testing images;
5. remove haze from images;
6. test on haze-removed testing images.
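The class counts quoted for the dataset in this section imply a marked class imbalance, which matters when partitioning the data. The sketch below computes the class shares and the per-class sizes of five equal folds. Reading "five-fold ratios" as a stratified five-fold partition is an assumption, and the helper name `fold_sizes` is hypothetical.

```python
# Segment counts per class as reported for the dataset [37].
counts = {"soil": 3249, "soybean": 7376, "grass": 3520, "broadleaf": 1191}

total = sum(counts.values())
shares = {name: n / total for name, n in counts.items()}


def fold_sizes(n_samples, n_folds=5):
    """Split n_samples into n_folds parts whose sizes differ by at most one."""
    base, extra = divmod(n_samples, n_folds)
    return [base + 1 if i < extra else base for i in range(n_folds)]


# Stratified partition: every fold keeps roughly the same class proportions.
per_class_folds = {name: fold_sizes(n) for name, n in counts.items()}
first_fold_size = sum(sizes[0] for sizes in per_class_folds.values())
```

With these counts, soybean accounts for close to half of all segments while broadleaf weeds account for under a tenth, so an unstratified split could leave a fold with very few broadleaf examples.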

Optical scattering in the atmosphere.

4.1 Training and validation on plain dataset

The CNN is trained and validated for classification on input images of size 150 × 150. The model learns its parameter values from the training set. The validation set is used to tune hyperparameters and to decide which of several candidate models is best. Figure 6 shows training and validation on a plain dataset.

![Figure 6](/document/doi/10.1515/pjbr-2022-0105/asset/graphic/j_pjbr-2022-0105_fig_006.jpg)

Training and validation on plain dataset [37].

4.2 Testing on plain dataset

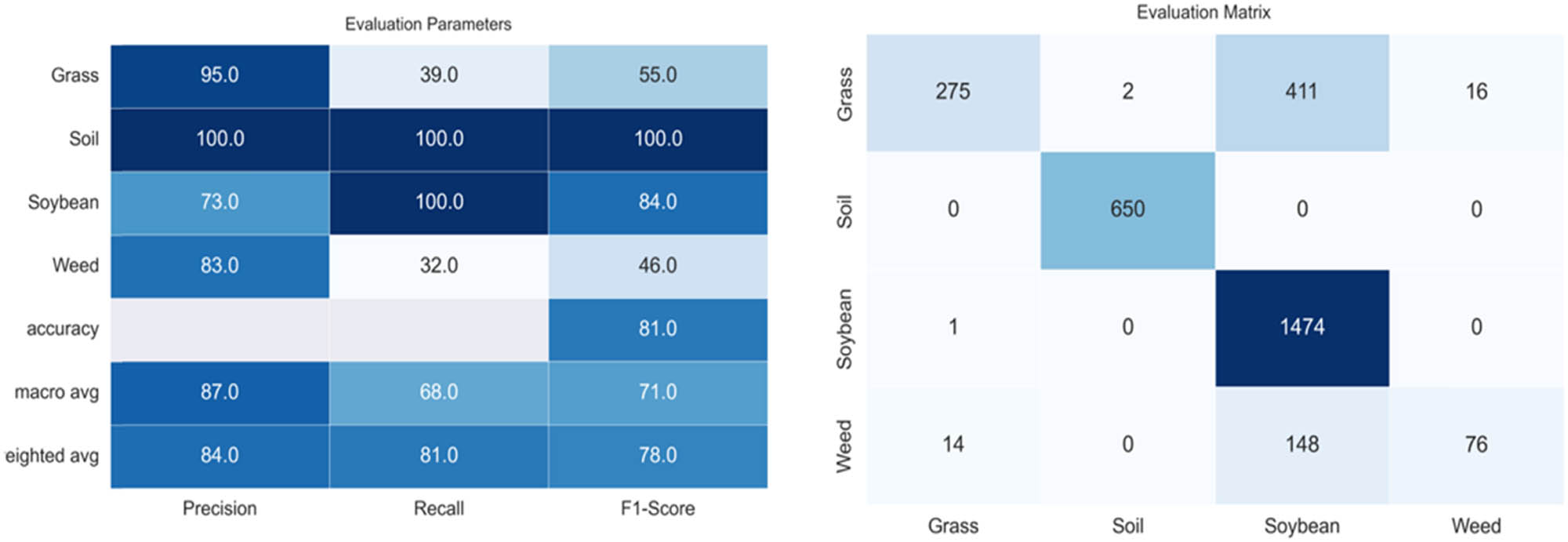

Following the completion of the tuning process, the best model was chosen based on the ideal combination of hyperparameters. Figure 7 presents testing on a plain dataset.

![Figure 7](/document/doi/10.1515/pjbr-2022-0105/asset/graphic/j_pjbr-2022-0105_fig_007.jpg)

Testing on plain dataset [37].
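Test results of this kind are usually summarized with a confusion matrix, from which accuracy and per-class precision and recall follow. The sketch below shows that computation for the four classes. The label vectors are made-up toy values used only to exercise the code; they are not results from this study, and the function names are hypothetical.

```python
import numpy as np

CLASSES = ["soil", "soybean", "grass", "broadleaf"]


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows index the true class, columns index the predicted class."""
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        matrix[t, p] += 1
    return matrix


def summarize(matrix):
    """Return overall accuracy and per-class precision and recall."""
    correct = np.diag(matrix)
    accuracy = correct.sum() / matrix.sum()
    recall = correct / np.maximum(matrix.sum(axis=1), 1)
    precision = correct / np.maximum(matrix.sum(axis=0), 1)
    return accuracy, precision, recall


# Toy labels (illustrative only): one soybean segment is mistaken for grass.
y_true = [0, 0, 1, 1, 1, 2, 2, 3]
y_pred = [0, 0, 1, 1, 2, 2, 2, 3]

matrix = confusion_matrix(y_true, y_pred, len(CLASSES))
accuracy, precision, recall = summarize(matrix)
```

Per-class recall is worth reporting alongside accuracy here because the broadleaf class is much smaller than the others, so a high overall accuracy can hide poor performance on it.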

4.3 Testing on hazy dataset

The basic testing images were degraded by adding blur (basic blur, Gaussian blur, and box blur), and the model was then tested on the resulting hazy images. Figure 8 presents testing on the hazy dataset.

Testing on hazy dataset.

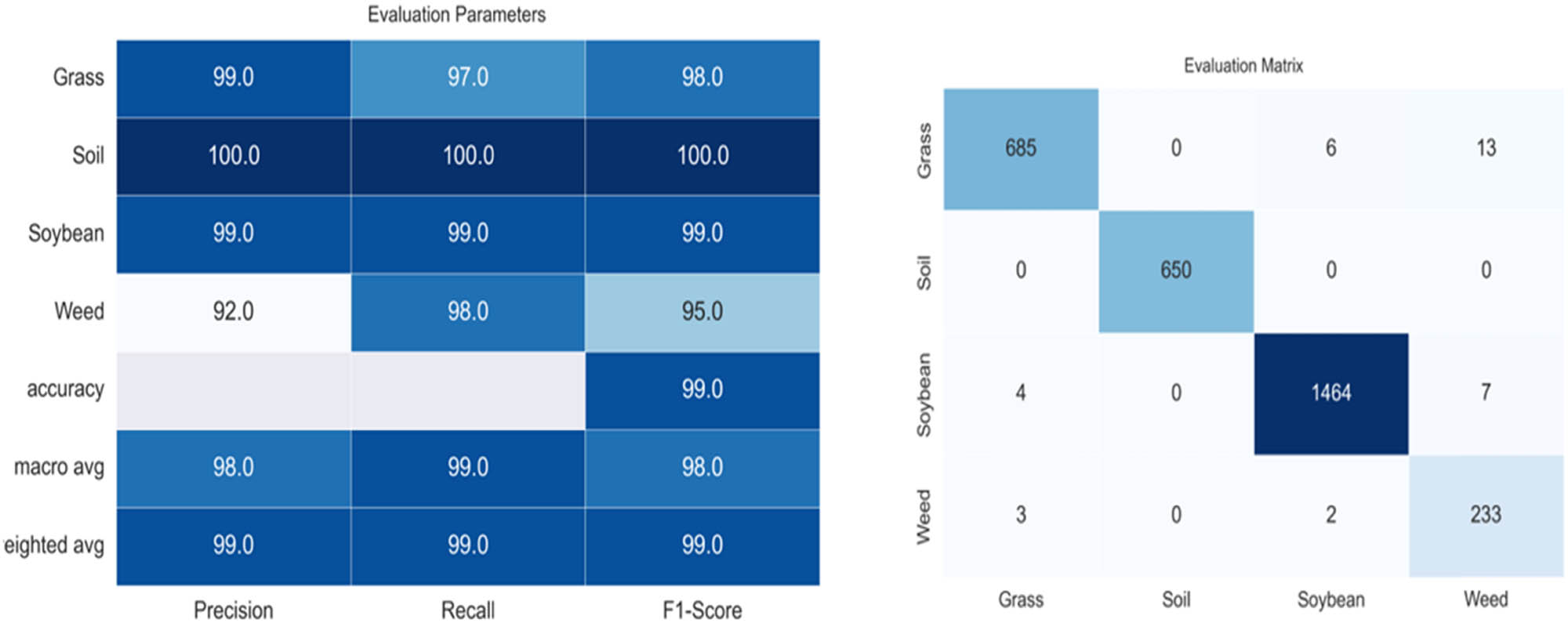
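The blur-based degradation used to produce the hazy test images can be sketched with separable box and Gaussian filters in NumPy. The kernel sizes, the edge-replication padding, and the function names are assumptions made for illustration; the exact blur settings used in the experiments are not stated in the text.

```python
import numpy as np


def _filter_along_axis(image, kernel, axis):
    """Convolve every 1-D slice along `axis` with `kernel`, replicating edge pixels."""
    pad = len(kernel) // 2
    widths = [(0, 0)] * image.ndim
    widths[axis] = (pad, pad)
    padded = np.pad(image, widths, mode="edge")
    return np.apply_along_axis(
        lambda line: np.convolve(line, kernel, mode="valid"), axis, padded
    )


def box_blur(image, size=5):
    """Box blur with an odd window size, applied separably to rows and columns."""
    image = np.asarray(image, dtype=np.float64)
    kernel = np.full(size, 1.0 / size)
    return _filter_along_axis(_filter_along_axis(image, kernel, 0), kernel, 1)


def gaussian_blur(image, sigma=1.5):
    """Gaussian blur with a kernel truncated at about three standard deviations."""
    image = np.asarray(image, dtype=np.float64)
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    return _filter_along_axis(_filter_along_axis(image, kernel, 0), kernel, 1)


# A single bright pixel shows how each filter spreads intensity to its neighbors.
impulse = np.zeros((7, 7, 3))
impulse[3, 3] = 1.0
boxed = box_blur(impulse, size=3)
smoothed = gaussian_blur(impulse, sigma=1.0)
```

Blur lowers local contrast in a way that resembles some effects of haze, but it does not add the atmospheric-light term of a scattering model, so images degraded this way are only an approximation of real hazy scenes.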

4.4 Testing on haze removed dataset

At this step, tests on the haze-removed dataset were performed. Figure 9 presents testing on haze-removed dataset.

Testing on dehazed dataset.

5 Conclusion

Because soybeans are an excellent source of protein for vegans, soybean cultivation has recently increased. The successful cultivation of such crops depends on the meticulous elimination of unwanted plants. Determining whether agricultural land is covered by crops or weeds can be challenging, and the difficulty increases significantly when classification must be carried out in less-than-ideal conditions such as fog, haze, and rain. The purpose of this article was to construct an IoT-Fog computing-enabled robotic system for classifying weeds and soybean plants during both the hazy season and the normal season. The dataset used for this study contained four classes: soil, soybeans, grass, and weeds. A DL strategy based on a 2D-CNN was implemented for image classification, with input images of 150 × 150 pixels and three channels. The proposed system is a robotic device connected to the IoT that can apply classification through an Internet-connected server. Because it is enabled with edge-based Fog computing, the device's reliability is also enhanced. As a result, the proposed robotic system can implement DL classification using IoT and Fog computing architecture. The evaluation of the proposed system was carried out in stages: training and testing of the CNN for classification, validation on normal images, validation on hazy images, application of the dehazing technique, and, finally, validation on dehazed images. It is concluded that applying the dehazing method before detecting weeds in low-visibility conditions is beneficial and contributes to a better classification score.

In the future, a wider range of dehazing strategies will be explored to further improve precision and other aspects.

Acknowledgements

The authors wish to thank the publisher, editor, and reviewers of the article.

- Funding information: The authors state no funding is involved.
- Author contributions: IK designed the experiments and VK carried them out. RP and RK developed the model code and performed the simulations. JV prepared the manuscript with contributions from all coauthors.
- Conflict of interest: The authors state no conflict of interest.
- Informed consent: Informed consent was obtained from all individuals included in this study.
- Ethical approval: The conducted research is not related to either human or animal use.
- Data availability statement: The datasets generated during and/or analyzed during the current study are available in https://data.mendeley.com/datasets/3fmjm7ncc6/2.

References

[1] F. Kiani and A. Seyyedabbasi, “Wireless sensor network and internet of things in precision agriculture,” Int. J. Adv. Comput. Sci. Appl., vol. 9, no. 6, pp. 99–103. 2018.10.14569/IJACSA.2018.090614Suche in Google Scholar

[2] E. Navarro, N. Costa, and A. Pereira, “A systematic review of IoT solutions for smart farming,” Sensors, vol. 20, no. 15, p. 4231, 2020 Jul 29.10.3390/s20154231Suche in Google Scholar PubMed PubMed Central

[3] T. Talaviya, D. Shah, N. Patel, H. Yagnik, and M. Shah, “Implementation of artificial intelligence in agriculture for optimisation of irrigation and application of pesticides and herbicides,” Artif. Intell. Agric., vol. 4, pp. 58–73, 2020 Jan 1.10.1016/j.aiia.2020.04.002Suche in Google Scholar

[4] R. R Shamshiri, C. Weltzien, I. A. Hameed, I. J Yule, T. E Grift, S. K. Balasundram, et al. “Research and development in agricultural robotics: A perspective of digital farming,” Int. J. Agric. & Biol. Eng., vol. 11, no. 4, pp. 1–14, 2018.10.25165/j.ijabe.20181104.4278Suche in Google Scholar

[5] W. Zhu and X. Zhu, “The application of support vector machine in veed classification,” In 2009 IEEE International Conference on Intelligent Computing and Intelligent Systems, Vol. 4, IEEE, 2009 Nov 20, 532–536.10.1109/ICICISYS.2009.5357638Suche in Google Scholar

[6] C. Hung, Z. Xu, and S. Sukkarieh, “Feature learning based approach for weed classification using high resolution aerial images from a digital camera mounted on a UAV,” Remote Sens, vol. 6, no. 12, pp. 12037–12054, 2014 Dec 3.10.3390/rs61212037Suche in Google Scholar

[7] F. Dankhara, K. Patel, and N. Doshi, “Analysis of robust weed detection techniques based on the Internet of Things (IoT),” Procedia Comput. Sci., vol. 160, pp. 696–701, 2019 Jan 1.10.1016/j.procs.2019.11.025Suche in Google Scholar

[8] S. Kulkarni, S. A. Angadi, and V. T. Belagavi, “IoT based weed detection using image processing and CNN,” Int. J. Eng. Appl. Sci. Technol., vol. 4, no. 3, pp. 606–609, 2019.10.33564/IJEAST.2019.v04i03.089Suche in Google Scholar

[9] Z. Wu, Y. Chen, B. Zhao, X. Kang, and Y. Ding, “Review of weed detection methods based on computer vision,” Sensors, vol. 21, no. 11, p. 3647, 2021 Jan.10.3390/s21113647Suche in Google Scholar PubMed PubMed Central

[10] Y. Lu and S. Young, “A survey of public datasets for computer vision tasks in precision agriculture,” Comput. Electron. Agric, vol. 178, p. 105760, 2020 Nov 1.10.1016/j.compag.2020.105760Suche in Google Scholar

[11] I. Rakhmatulin, A. Kamilaris, and C. Andreasen, “Deep neural networks to detect weeds from crops in agricultural environments in real-time: A review,” Remote Sens., vol. 13, no. 21, p. 4486, 2021 Nov 8.10.3390/rs13214486Suche in Google Scholar

[12] A. Kamilaris and F. X. Prenafeta-Boldú, “Deep learning in agriculture: A survey,” Comput. Electron. Agric., vol. 147, pp. 70–90, 2018 Apr 1.10.1016/j.compag.2018.02.016Suche in Google Scholar

[13] A. M. Hasan, F. Sohel, D. Diepeveen, H. Laga, and M. G. Jones, “A survey of deep learning techniques for weed detection from images,” Comput. Electron. Agric., vol. 184, p. 106067, 2021 May 1.10.1016/j.compag.2021.106067Suche in Google Scholar

[14] J. Yu, A. W. Schumann, Z. Cao, S. M. Sharpe, and N. S. Boyd, “Weed detection in perennial ryegrass with deep learning convolutional neural network,” Front. Plant. Sci., vol. 10, p. 1422, 2019 Oct 31.10.3389/fpls.2019.01422Suche in Google Scholar PubMed PubMed Central

[15] R. T. Tan, “Visibility in bad weather from a single image,” In 2008 IEEE conference on computer vision and pattern recognition, IEEE, 2008 Jun 23, pp. 1–8.10.1109/CVPR.2008.4587643Suche in Google Scholar

[16] K. He, J. Sun, and X. Tang, “Single image haze removal using dark channel prior,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 33, no. 12, pp. 2341–2353, 2010 Sep 9.10.1109/TPAMI.2010.168Suche in Google Scholar

[17] R. Fattal, “Dehazing using color-lines,” ACM Trans. Graph. (TOG), vol. 34, no. 1, pp. 1–4, 2014 Dec 29.10.1145/2651362Suche in Google Scholar

[18] D. Berman and S. Avidan, “Non-local image dehazing,” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 1674–1682.10.1109/CVPR.2016.185Suche in Google Scholar

[19] B. Cai, X. Xu, K. Jia, C. Qing, and D. Tao, “Dehazenet: An end-to-end system for single image haze removal,” IEEE Trans. Image Process, vol. 25, no. 11, pp. 5187–5198, 2016 Aug 10.10.1109/TIP.2016.2598681Suche in Google Scholar PubMed

[20] W. Ren, S. Liu, H. Zhang, J. Pan, X. Cao, and M. H. Yang, “Single image dehazing via multi-scale convolutional neural networks,” In European Conference on Computer Vision, Cham, Springer, 2016 Oct 8, pp. 154–169. doi: 10.1007/978-3-319-46475-6_10

[21] H. Zhang and V. M. Patel, “Densely connected pyramid dehazing network,” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 3194–3203. doi: 10.1109/CVPR.2018.00337

[22] W. Ren, J. Zhang, X. Xu, L. Ma, X. Cao, G. Meng, et al., “Deep video dehazing with semantic segmentation,” IEEE Trans. Image Process., vol. 28, no. 4, pp. 1895–1908, 2018 Oct 15. doi: 10.1109/TIP.2018.2876178

[23] R. Li, J. Pan, Z. Li, and J. Tang, “Single image dehazing via conditional generative adversarial network,” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 8202–8211. doi: 10.1109/CVPR.2018.00856

[24] W. Ren, L. Ma, J. Zhang, J. Pan, X. Cao, W. Liu, et al., “Gated fusion network for single image dehazing,” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 3253–3261. doi: 10.1109/CVPR.2018.00343

[25] X. Liu, Y. Ma, Z. Shi, and J. Chen, “Griddehazenet: Attention-based multi-scale network for image dehazing,” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 7314–7323. doi: 10.1109/ICCV.2019.00741

[26] H. Dong, J. Pan, L. Xiang, Z. Hu, X. Zhang, F. Wang, et al., “Multi-scale boosted dehazing network with dense feature fusion,” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 2157–2167. doi: 10.1109/CVPR42600.2020.00223

[27] H. Wu, Y. Qu, S. Lin, J. Zhou, R. Qiao, Z. Zhang, et al., “Contrastive learning for compact single image dehazing,” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 10551–10560. doi: 10.1109/CVPR46437.2021.01041

[28] I. Kansal and S. S. Kasana, “Improved color attenuation prior based image de-fogging technique,” Multimed. Tools Appl., vol. 79, no. 17, pp. 12069–12091, 2020 May. doi: 10.1007/s11042-019-08240-6

[29] I. Kansal and S. S. Kasana, “Minimum preserving subsampling-based fast image de-fogging,” J. Mod. Opt., vol. 65, no. 18, pp. 2103–2123, 2018 Oct 24. doi: 10.1080/09500340.2018.1499976

[30] I. Kansal and S. S. Kasana, “Weighted image de-fogging using luminance dark prior,” J. Mod. Opt., vol. 64, no. 19, pp. 2023–2034, 2017 Oct 28. doi: 10.1080/09500340.2017.1333641

[31] Z. Kang, B. Yang, Z. Li, and P. Wang, “OTLAMC: An online transfer learning algorithm for multi-class classification,” Knowl.-Based Syst., vol. 176, pp. 133–146, 2019 Jul 15. doi: 10.1016/j.knosys.2019.03.024

[32] A. Kaya, A. S. Keceli, C. Catal, H. Y. Yalic, H. Temucin, and B. Tekinerdogan, “Analysis of transfer learning for deep neural network based plant classification models,” Comput. Electron. Agric., vol. 158, pp. 20–29, 2019 Mar 1. doi: 10.1016/j.compag.2019.01.041

[33] I. Kansal, R. Popli, J. Verma, V. Bhardwaj, and R. Bhardwaj, “Digital image processing and IoT in smart health care - A review,” In 2022 International Conference on Emerging Smart Computing and Informatics (ESCI), IEEE, 2022 Mar 9, pp. 1–6. doi: 10.1109/ESCI53509.2022.9758227

[34] D. S. Ting, L. Carin, V. Dzau, and T. Y. Wong, “Digital technology and COVID-19,” Nat. Med., vol. 26, no. 4, pp. 459–461, 2020 Apr. doi: 10.1038/s41591-020-0824-5

[35] M. Snehi and A. Bhandari, “Security management in SDN using fog computing: A survey,” In Strategies for E-Service, E-Governance, and Cybersecurity, Apple Academic Press, New York, 2021 Dec 28, pp. 117–126. doi: 10.1201/9781003131175-9

[36] M. Snehi and A. Bhandari, “An SDN/NFV based intelligent fog architecture for DDoS defense in cyber physical systems,” In 2021 10th International Conference on System Modeling & Advancement in Research Trends (SMART), IEEE, 2021 Dec 10, pp. 229–234. doi: 10.1109/SMART52563.2021.9676241

[37] https://data.mendeley.com/datasets/3fmjm7ncc6/2.

© 2023 the author(s), published by De Gruyter

This work is licensed under the Creative Commons Attribution 4.0 International License.
