Abstract

The traditional ray casting algorithm renders three-dimensional volume data as a viewable two-dimensional image by sampling color data along rays. Its speed is limited by the computation incurred by the very large number of rays. The objective of this paper is to reduce the computation performed along the rays by reducing the number of samples processed throughout the volume data. The proposed algorithm incorporates a grouping strategy, based on fuzzy mutual information (FMI) over a group of voxels, into conventional ray casting to achieve this reduction. For a data group whose FMI lies in a desirable range, a single primary ray is cast into the group as a whole. Because data are grouped before rays are cast, the proposed algorithm reduces the number of interpolation calculations and thereby runs with lower complexity while preserving image quality.

1 Introduction

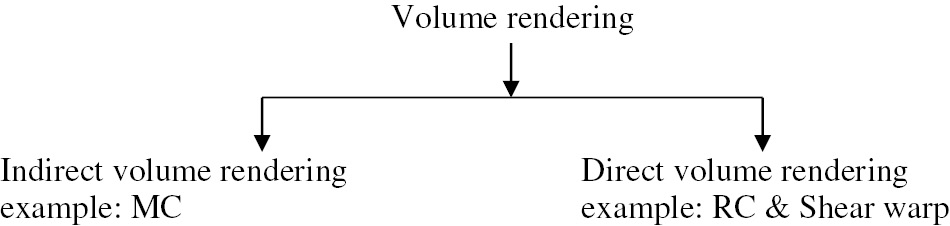

Imaging of medical data has become essential for efficient diagnosis and treatment of diseases. Prior analysis of infections or abnormalities gives a better picture of a patient's disease, and therefore physicians can be more accurate. Medical image processing has been a large and intensively studied area of research during the past decades. Researchers pay attention to segmentation, enhancement, and visualization of medical image data obtained by various modalities such as computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET). The CT/MRI/PET slices provide information only at the corresponding axis locations. To improve the visualization, the slices from these modalities are rendered together in three-dimensional (3D) form. Volume rendering algorithms are broadly classified into two categories, namely indirect volume rendering (IVR) and direct volume rendering (DVR) methods. This categorization reflects the difference in the way the methods process the input 2D data.

The IVR algorithms generate an intermediate surface from the actual input, which is then transformed into a 3D image. Marching cubes is the most common and widely used IVR algorithm. The DVR algorithms work on the input directly, without any intermediate surface, and render the volume. Examples of DVR techniques are ray casting and shear warp. The basic concept of rendering volume data by casting rays into it was proposed by Levoy [7]. The rendering pipeline of a volume follows the sequence shown in Figure 1.

Figure 1: Rendering Pipeline of Ray Casting.

The ray casting algorithm is a direct volume rendering technique that casts rays directly into the volume, along which samples are taken and the interpolated sampling points are composited into a single color value using Eqs. (1) and (2):

where Cout is the output color, Cin is the accumulated color, Cnow is the current voxel’s color, and α is the opacity factor. It is this single value that represents all the corresponding voxels of the volume data in the image plane, as shown in Figure 2.

Figure 2: Rays Traversing a Set of Voxels Along Their Paths.
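The accumulation of samples into one pixel value can be sketched in code. The block below is a minimal sketch of one common scheme, front-to-back "over" compositing; whether it matches Eqs. (1) and (2) term for term is an assumption. The function name `composite_front_to_back` and the scalar (grayscale) colors are illustrative choices, not taken from the paper.

```python
def composite_front_to_back(samples):
    """Accumulate (color, opacity) samples along one ray.

    `samples` is ordered from the eye into the volume. Colors are scalars
    here for brevity; an RGB version would apply the same update per channel.
    ASSUMPTION: standard front-to-back 'over' compositing.
    """
    c_acc = 0.0  # accumulated color (plays the role of C_in / C_out)
    a_acc = 0.0  # accumulated opacity
    for c_now, a_now in samples:
        # Contribution of the current sample, attenuated by what is
        # already in front of it.
        weight = (1.0 - a_acc) * a_now
        c_acc += weight * c_now
        a_acc += weight
    return c_acc, a_acc


# Two half-transparent samples: the nearer one contributes more.
color, opacity = composite_front_to_back([(1.0, 0.5), (0.5, 0.5)])
```

Each ray produces exactly one such value, which is why the cost per pixel grows with the number of samples that have to be prepared along the ray.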

The rendering procedure depicted in Figure 1 broadly comes under two main levels:

- Data preparation level – sampling and interpolation
- Appearance generation level – gradient estimation, classification, shading, composition

Each level incurs its own complexity. The color of a location in the output image is determined by re-sampling the samples taken at regular intervals. The unknown samples are computed using tri-linear interpolation, which performs 17 additions and 16 multiplications [8] for a single computation. Thus, the total complexity of the rendering algorithm is directly proportional to the total number of interpolations made. The motivation of this paper is to bring down the rendering complexity of the ray casting algorithm by grouping the rays originating from relevant neighboring voxels before casting them. This way of optimizing ray casting avoids interpolating similar unknown voxels without compromising the rendering quality. Whereas the other optimizations discussed in the following section pursue the same goal, this work approaches it from a different angle, namely the way in which rays are cast. The paper is organized as follows. Section 2 reviews works that improve traditional ray casting and works that use fuzzy mutual information (FMI). Section 3 presents the proposed improvements to ray casting. Section 4 discusses the results, and Section 5 concludes.
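To make the per-sample cost concrete, the following is a minimal sketch of tri-linear interpolation on a regular grid. The function name `trilinear`, the nested-list layout `volume[z][y][x]`, and the clamping at the grid border are assumptions made for illustration. The arithmetic count depends on how the expression is expanded, so this nested form is not meant to reproduce the operation count cited from [8].

```python
def lerp(a, b, t):
    """Linear interpolation between a and b with weight t in [0, 1]."""
    return a + (b - a) * t


def trilinear(volume, x, y, z):
    """Interpolate volume[z][y][x] at a continuous, non-negative position.

    ASSUMPTIONS: unit voxel spacing, coordinates inside the grid, and the
    upper neighbor clamped to the last index at the border.
    """
    x0, y0, z0 = int(x), int(y), int(z)
    x1 = min(x0 + 1, len(volume[0][0]) - 1)
    y1 = min(y0 + 1, len(volume[0]) - 1)
    z1 = min(z0 + 1, len(volume) - 1)
    fx, fy, fz = x - x0, y - y0, z - z0

    # Four interpolations along x, two along y, one along z.
    c00 = lerp(volume[z0][y0][x0], volume[z0][y0][x1], fx)
    c10 = lerp(volume[z0][y1][x0], volume[z0][y1][x1], fx)
    c01 = lerp(volume[z1][y0][x0], volume[z1][y0][x1], fx)
    c11 = lerp(volume[z1][y1][x0], volume[z1][y1][x1], fx)
    c0 = lerp(c00, c10, fy)
    c1 = lerp(c01, c11, fy)
    return lerp(c0, c1, fz)


# A 2x2x2 volume whose value is x + 2*y + 4*z (linear, so the result is exact).
demo = [[[x + 2 * y + 4 * z for x in range(2)] for y in range(2)] for z in range(2)]
center = trilinear(demo, 0.5, 0.5, 0.5)
```

Every unknown sample needs eight neighbor reads and seven linear interpolations in this form, which is why skipping samples reduces the total work proportionally.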

2 Related Works

The conventional ray casting algorithm has been improved in several ways, which include increasing the rendering speed, minimizing artifacts, and improving the rendering quality. The objective of this paper is a faster ray casting; hence, we limit our survey to works with the same concern. Empty space leaping is done in Ref. [10] by separating the target object from the background using an axis-aligned bounding box. Whenever a ray is cast into the volume, a check confirms whether it passes through the bounding box, and interpolation is carried out only for those samples lying within it. This scheme uses a fast look-up algorithm to identify non-empty cubes and thus avoids blind assignment of optical properties, reducing the computation time. In Ref. [1], the volume data are cut using the bounding box and compressed using an octree data structure. The object space obtained by cutting the volume data forms the root of the linear octree. Each of the eight child nodes is assigned one of the following states: (i) all voxels having the same color (full, F), (ii) some voxels having the same color (partial, P), and (iii) empty nodes (empty, E). This state assignment helps not only to skip empty voxels but also to avoid duplicate sampling, i.e. voxels in the F state are also skipped. This reduces the ray composition time by a factor of three. Kreeger et al. [4] improved the rendering speed of conventional ray casting by adaptively increasing the sampling distance and the number of rays. The method begins with a single ray, and the rays are split at each level of sampling and at a larger sampling distance. They used effective filters on their input to improve the quality of the rendered image.

The scheme in Ref. [8] performs sampling in three steps: determination of the intersection points and the corresponding plane, determination of the optical property of all intersection points by linear interpolation, and finally determination of the optical property of the points between adjacent intersection points using definite proportion and separated points. This reduced the total computation by one-third. The work in Ref. [13] separated the foreground voxels from the background and incorporated the space leaping technique to render the foreground. It also used a multi-factor in place of simple transfer functions to determine the opacity. This improvement had a tradeoff between speed and image quality. The work described in Ref. [9] fragmented the rays according to their vertical extent in the volume. This avoids unnecessary interpolation in homogeneous regions and modifies the fundamental color and opacity calculation equations by including an opacity summation term that adds the opacity of all the samples within a fragment. Another accelerated ray casting algorithm, proposed in Ref. [12], skips the voxels that do not contribute to the rendering. These voxels are referred to as empty voxels. Whenever an empty voxel is encountered, the algorithm skips ahead by an incrementing step value until a non-empty voxel is reached. As it does not process the empty voxels, it greatly reduces the computation overhead, with a tradeoff in image quality. The technique in Ref. [6] introduced precalculated virtual samples between real samples to speed up the rendering process with an even better-quality image. The value of the virtual samples is computed using the Catmull-Rom spline curve. Through these virtual samples, the sampling rate increases fourfold relative to classic ray casting.

An efficient way to determine the features belonging to a class is to employ FMI. The basic concept and usage of FMI were described by Kosko in Ref. [3]. Since then, it has been used widely for different purposes. In Ref. [5], FMI is used to judge the stability of the features extracted by wavelet packet decomposition of the input image. The work in Ref. [2] combined FMI with several classifiers to obtain a more efficient classification technique. In Ref. [11], the relevance of the inputs is studied using FMI to discriminate the highly informative variables from apparently less informative ones, thus using FMI as a possibility-based measure. FMI provides more stable and accurate estimates of the relation between features [14].

The following section employs FMI for a completely different purpose but in the same context of finding the closeness of a set of inputs.

3 Proposed Approach

The traditional ray casting algorithm treats all voxels as individuals during data preparation and thus tri-linearly interpolates every voxel. As the computational complexity of the data preparation level in the rendering pipeline depends on the processing of each sample, the overall complexity decreases when the cost of processing the individual samples is reduced. This is achieved by casting the rays from groups of voxels rather than from single voxels. The scheme is implemented by subdividing the individual data slices into contiguous, non-overlapping blocks, as shown in Figure 3, and preparing the data for virtual blocks rather than for virtual voxels.

Figure 3: A Single Slice Sub-divided into Blocks.
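The subdivision of a slice into contiguous, non-overlapping blocks can be sketched as follows. This is an illustrative sketch only: the function name `split_into_blocks`, the nested-list slice layout, and the handling of edge blocks (which simply come out smaller when the slice size is not a multiple of the block size) are assumptions.

```python
def split_into_blocks(slice_2d, m):
    """Partition a 2D slice into non-overlapping m x m blocks.

    Returns a dict that maps the (row, col) of each block's top-left voxel
    to the block contents. Edge blocks are smaller when the slice dimensions
    are not multiples of m (an assumption of this sketch).
    """
    rows, cols = len(slice_2d), len(slice_2d[0])
    blocks = {}
    for r0 in range(0, rows, m):
        for c0 in range(0, cols, m):
            blocks[(r0, c0)] = [row[c0:c0 + m] for row in slice_2d[r0:r0 + m]]
    return blocks


# A 4x4 slice with values 0..15 split into four 2x2 blocks.
demo_slice = [[4 * r + c for c in range(4)] for r in range(4)]
demo_blocks = split_into_blocks(demo_slice, 2)
```

With a 150×150 slice and 3×3 groups, as in the data sets used later, the blocks tile the slice exactly and no partial edge blocks arise.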

Prior to casting a single ray onto a group, the relevance among the group members is measured to confirm whether it is reasonable to limit the group to that single ray. This test is performed by determining the FMI, which is the sum of the FMIs of all available classes, calculated as in Eqs. (3) to (6):

where uc is the membership of each variable x to a cluster c, D is the actual match degree of the data to the cluster c, and FMIc is the dependence value among the members of cluster c. An optimum fuzzy threshold is fixed, and the calculated FMI value is compared with the threshold. A single primary ray is cast into a group of (m×m) voxels if the computed FMI is greater than the threshold, and one voxel from the group serves as the reference. Otherwise, when the FMI does not exceed the threshold, the voxels are treated as individuals and a ray is cast to each of them, thus splitting the primary ray into (m×m) rays. This concept is illustrated in Figure 4. The figure shows three corresponding voxel groups from consecutive slices. A 3×3 group from the first slice is assumed to have an FMI above the threshold, and hence a single ray is cast to it. The reference voxel of the set is shown as a solid cube. The corresponding set from the second slice has a lower FMI, and so casting only a single ray into it carries the risk of information loss. Therefore, rays are shot to all of its voxels independently. This process extends throughout the volume.

Figure 4: Rays Cast into Groups – An Illustration.

3.1 FMI Mask

FMI-based data grouping requires an additional data structure, an FMI mask, as shown in Figure 5. The FMI mask records whether the voxels are grouped; for grouped voxels, the mask also identifies the reference voxel. A look-up table is created to store the interpolated values of the virtual voxels. The interpolated value of the reference voxel is used for the members of its group.

Figure 5: FMI Mask in Real and Virtual Slices – An Illustration.

Whenever processing of a voxel group begins, its FMI mask is looked up to find its reference voxel, which is identified as the voxel with mask value 1. In general, the top-left-corner voxel is registered as the reference voxel, as in Figure 5. Hence, it is sufficient to interpolate only the reference voxel, and that value is assigned as the data value for its group members. This way of grouping avoids interpolating closely related and relevant samples, and so reduces the computational complexity. In short, as rays proceed through the data, all samples are traversed but not all are processed.
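The mask construction described above can be sketched as below. The relevance score used here is a hypothetical stand-in and is NOT the FMI of Eqs. (3) to (6); any function that returns a larger value for more closely related voxels could be plugged in. The names `stand_in_relevance` and `build_mask` are likewise illustrative.

```python
def stand_in_relevance(block):
    """HYPOTHETICAL placeholder for the FMI score (not the paper's formula).

    Returns 1.0 for a perfectly uniform block and smaller values as the
    intensity spread inside the block grows.
    """
    values = [v for row in block for v in row]
    return 1.0 / (1.0 + (max(values) - min(values)))


def build_mask(slice_2d, m, threshold, relevance=stand_in_relevance):
    """Return a 0/1 mask with the same shape as the slice.

    1 = the voxel must be interpolated (independent voxel or group reference).
    0 = the voxel reuses the value of its group's top-left reference voxel.
    """
    rows, cols = len(slice_2d), len(slice_2d[0])
    mask = [[1] * cols for _ in range(rows)]  # default: independent voxels
    for r0 in range(0, rows, m):
        for c0 in range(0, cols, m):
            block = [row[c0:c0 + m] for row in slice_2d[r0:r0 + m]]
            if relevance(block) > threshold:  # relevant voxels: group them
                for r in range(r0, min(r0 + m, rows)):
                    for c in range(c0, min(c0 + m, cols)):
                        mask[r][c] = 0
                mask[r0][c0] = 1  # top-left voxel is the reference
    return mask


demo_slice = [
    [5, 5, 0, 3],
    [5, 5, 1, 2],
    [7, 7, 9, 9],
    [7, 7, 9, 9],
]
demo_mask = build_mask(demo_slice, 2, threshold=0.5)
interpolations_needed = sum(sum(row) for row in demo_mask)
```

In this toy slice three of the four 2×2 blocks are uniform and collapse to their reference voxel, so only 7 of the 16 voxels remain marked for interpolation.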

3.2 Pseudocode

| Input: 2D CT/MRI slices, sampling distance SD, direction vector DV, fuzzy threshold FT, group size (GR×GC) |
|---|
| Output: 2D resultant image with 3D details |
| Steps: |
| for each slice |
| Initialize groups (GR×GC), group_1 to group_n, processed_flag {p_flag=0 for virtual slices; p_flag=1 for real slices} |
| for each group_i |
| Compute FMI |
| if FMI is greater than FT //relevant voxels |
| for [i,j] in (GR×GC): fuzzy_mask(group_i)[i,j]=0 |
| fuzzy_mask(group_i)[1,1]=1 //reference voxel |
| else //independent voxels |
| for [i,j] in (GR×GC): fuzzy_mask(group_i)[i,j]=1 |
| end if |
| end for |
| end for |
| Cast rays into the volume |
| for each sample_i along a ray |
| if fuzzy_mask(sample_i)=1 |
| Tri-linearly interpolate the sample |
| else |
| if p_flag=0 |
| Look up the corresponding reference voxel |
| else |
| Retrieve the value |
| end if |
| end if |
| Compute the accumulated intensity |
| end for |
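The sampling stage of the pseudocode can be sketched for one virtual slice as follows. This is a sketch under stated assumptions: it covers only the virtual-slice branch (p_flag=0), blocks are aligned to multiples of the group size with the reference voxel at the top-left corner, and `interpolate` is a caller-supplied function standing in for tri-linear interpolation. The name `resample_with_mask` is illustrative.

```python
def resample_with_mask(mask, m, interpolate):
    """Fill one virtual slice, interpolating only voxels whose mask is 1.

    mask        -- 0/1 grid: 1 = interpolate, 0 = reuse the group reference
    m           -- group size (groups are m x m and aligned to multiples of m)
    interpolate -- callable (row, col) -> value; stands in for tri-linear
                   interpolation (an assumption of this sketch)
    Returns (values, number_of_interpolations).
    """
    rows, cols = len(mask), len(mask[0])
    values = [[None] * cols for _ in range(rows)]
    count = 0

    # Pass 1: interpolate independent voxels and group reference voxels.
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] == 1:
                values[r][c] = interpolate(r, c)
                count += 1

    # Pass 2: grouped members look up the value of their reference voxel,
    # which sits at the top-left corner of their block.
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] == 0:
                values[r][c] = values[r - r % m][c - c % m]

    return values, count


demo_mask = [
    [1, 0, 1, 1],
    [0, 0, 1, 1],
    [1, 0, 1, 0],
    [0, 0, 0, 0],
]
demo_values, demo_count = resample_with_mask(demo_mask, 2, lambda r, c: 10 * r + c)
```

Every voxel of the slice still receives a value, so rays traverse all samples, but the interpolation function is called only for the voxels marked 1.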

4 Experimental Results

Classical ray casting and the proposed technique were executed on two data sets (a 150×150×52 CT head volume and a 150×150×100 tibia fibula CT) on a system with 4 GB of RAM and a 4-GHz processor, and the results are shown in Figures 6 and 7. The rendering times were recorded for varied sampling distances and for multiple views using classical ray casting and the proposed optimization, and are tabulated in Tables 1–4.

Figure 6: CT Head Dataset. Rendered using (A) ray casting and (B) proposed approach at sampling distance=0.5, viewpoint=90°.

Figure 7: CT Tibia Fibula Dataset. Rendered using (A) ray casting and (B) proposed approach at sampling distance=0.5, viewpoint=45°.

Table 1: Analysis of Ray Casting vs. Proposed Approach for CT Head Dataset.

| Method | Data orientation | Viewpoint | Sampling distance | Total no. of samples (×10⁵) | Interpolated samples (×10⁵) | DCT (s) |
|---|---|---|---|---|---|---|
| Ray casting | 90° | 90° | 0.5 | 23.175 | 11.475 | 6.972 |
| Ray casting | 90° | 90° | 0.25 | 46.125 | 34.425 | 20.674 |
| Ray casting | 90° | 90° | 0.2 | 57.6 | 45.9 | 27.562 |
| Ray casting | 90° | 90° | 0.1 | 114.975 | 103.275 | 48.3635 |
| Ray casting | 45° | 90° | 0.5 | 23.175 | 11.475 | 6.8335 |
| Ray casting | 90° | 45° | 0.5 | 16.19276 | 8.0155 | 4.8299 |
| Proposed approach | 90° | 90° | 0.5 | 23.175 | 6.36748 | 3.0505 |
| Proposed approach | 90° | 90° | 0.25 | 46.125 | 18.62507 | 9.9702 |
| Proposed approach | 90° | 90° | 0.2 | 57.6 | 22.79684 | 12.5471 |
| Proposed approach | 90° | 90° | 0.1 | 114.975 | 43.5932 | 25.6046 |
| Proposed approach | 45° | 90° | 0.5 | 23.175 | 7.37596 | 4.6525 |
| Proposed approach | 90° | 45° | 0.5 | 16.19276 | 4.21677 | 3.0995 |

Table 2: MVRT for CT Head Dataset Using Ray Casting and Proposed Approach.

| Method | GT (s) | ∑ DCT (s) | MVRT=GT+∑ DCT (s) |
|---|---|---|---|
| Ray casting | 0 | 115.2349 | 115.2349 |
| Proposed approach | 25.5676 | 58.9244 | 84.4914 |

Table 3: Analysis of Ray Casting vs. Proposed Approach for CT Tibia Fibula Dataset.

| Method | Data orientation | Viewpoint | Sampling distance | Total no. of samples (×10⁵) | Interpolated samples (×10⁵) | DCT (s) |
|---|---|---|---|---|---|---|
| Ray casting | 90° | 90° | 0.5 | 44.775 | 22.275 | 13.7004 |
| Ray casting | 90° | 90° | 0.25 | 89.325 | 66.825 | 40.5139 |
| Ray casting | 90° | 90° | 0.2 | 111.6 | 89.1 | 55.625 |
| Ray casting | 90° | 90° | 0.1 | 222.975 | 150.75 | 92.3033 |
| Ray casting | 45° | 90° | 0.5 | 44.775 | 22.275 | 13.6688 |
| Ray casting | 90° | 45° | 0.5 | 21.741 | 10.8075 | 6.6899 |
| Proposed approach | 90° | 90° | 0.5 | 44.775 | 10.5962 | 6.5113 |
| Proposed approach | 90° | 90° | 0.25 | 89.325 | 21.13915 | 15.9597 |
| Proposed approach | 90° | 90° | 0.2 | 111.6 | 26.4106 | 17.8641 |
| Proposed approach | 90° | 90° | 0.1 | 222.975 | 52.76801 | 28.32 |
| Proposed approach | 45° | 90° | 0.5 | 44.775 | 10.5962 | 6.5062 |
| Proposed approach | 90° | 45° | 0.5 | 21.741 | 5.1451 | 3.1014 |

Table 4: MVRT for CT Tibia Fibula Dataset Using Ray Casting and Proposed Approach.

| Method | GT (s) | ∑ DCT (s) | MVRT=GT+∑ DCT (s) |
|---|---|---|---|
| Ray casting | 0 | 222.5013 | 222.5013 |
| Proposed approach | 64.7543 | 78.2627 | 143.017 |

The measures used in the analysis are the total number of interpolated samples, the data computation time (DCT), and the multi-view rendering time (MVRT). MVRT is determined as in Eq. (7):

$$\mathrm{MVRT} = GT + \sum \mathrm{DCT} \qquad (7)$$

where GT is the time taken to perform the grouping of data throughout the volume. This time includes the time to check the data for relevance and implement the grouping. In general, the computational complexity of classical ray casting is given as in Eq. (8):

where V is the total number of virtual samples traversed for rendering and δ is the perspective weight (0<δ≤1) to signify the variation in the sample count when the viewpoint varies. It is the variable V that influences the complexity of the algorithm. The value of V for ray casting and the proposed approach is given in Eqs. (9) and (10), respectively:

where vi is the number of virtual voxels in each plane i and vi(I) is the number of independent virtual voxels, i.e. ungrouped virtual voxels in each plane i.
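As a worked check of the MVRT measure, the short sketch below recomputes MVRT = GT + ∑ DCT from the tibia fibula DCT and GT values tabulated above. The function and variable names are illustrative, and the relative saving is derived here from the tabulated times rather than quoted from the paper.

```python
def mvrt(grouping_time, dct_per_view):
    """Multi-view rendering time: one-off grouping time plus the data
    computation time summed over all rendered views."""
    return grouping_time + sum(dct_per_view)


# DCT values (s) for the six tibia fibula configurations in the tables.
tibia_ray_casting = [13.7004, 40.5139, 55.625, 92.3033, 13.6688, 6.6899]
tibia_proposed = [6.5113, 15.9597, 17.8641, 28.32, 6.5062, 3.1014]

baseline = mvrt(0.0, tibia_ray_casting)    # classical ray casting has no grouping step
proposed = mvrt(64.7543, tibia_proposed)   # GT is paid once, then reused by every view

# Fraction of the multi-view time saved by the proposed approach.
saving = 1.0 - proposed / baseline
print(f"baseline={baseline:.4f} s, proposed={proposed:.4f} s, saving={saving:.1%}")
```

Because GT is incurred once while DCT is incurred per view, the relative saving depends on how many views are rendered: with these six configurations the multi-view time drops by roughly 36%, whereas a single view alone would not amortize the grouping time.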

5 Conclusion

The ray casting algorithm combined with FMI-based grouping reduces the computation incurred by interpolation. It works under the assumption that medical data do not vary much across successive voxels and successive slices, and hence grouping is reasonable. As the presented work interpolates only one voxel on behalf of all the members of a group, the number of interpolation calculations is significantly reduced, and hence the computational complexity as well. Moreover, as the check for relevance among the group members is performed at every subsequent sample location, variations in the data, if any, are also detected. The sampling distance can be selected as per user requirement. A smaller sampling distance generates a more accurate and smoother result than a larger one.

The large drop in the interpolation count is not fully reflected in the rendering time because of the time incurred by the grouping strategy. Despite the reduction in the overall rendering time for multiple views, the result generated by this algorithm shows some block artifacts as a result of grouping; hence, the work is to be extended to reduce the block artifacts and to produce smoother results.

Bibliography

[1] F. Gong and H. Wang, An accelerative ray casting algorithm based on crossing-area technique, in: International Conference on Machine Vision and Human-Machine Interface, China, 2010. doi:10.1109/MVHI.2010.198

[2] J. Grande, M. d. R. Suarez and J. R. Villar, A feature selection method using a fuzzy mutual information measure, in: Innovations in Hybrid Information Systems, Springer, Berlin, 2007. doi:10.1007/978-3-540-74972-1_9

[3] B. Kosko, Fuzzy entropy and conditioning, Inf. Sci. 40 (1986), 165–174. doi:10.1016/0020-0255(86)90006-X

[4] K. Kreeger, I. Bitter, F. Dachille, B. Chen and A. Kaufman, Adaptive perspective ray casting, in: Symposium on Volume Visualization, 1998. doi:10.1145/288126.288154

[5] R. Kushaba, A. Al-Jumeliy and A. Al-Ani, Novel feature extraction method based on fuzzy entropy and wavelet packet transform for myoelectric control, in: International Symposium on Communications and Information Technologies (ISCIT 2007), 2007. doi:10.1109/ISCIT.2007.4392044

[6] B. Lee, J. Yun and J. Seo, Fast high quality ray casting with virtual samplings, IEEE Trans. Vis. Comput. Graph. 16 (2010), 1525–1532. doi:10.1109/TVCG.2010.155

[7] M. Levoy, Display of surfaces from volume data, IEEE Comput. Graph. Appl. 8 (1988), 29–37. doi:10.1109/38.511

[8] L. Lin, S. Chen, Y. Shao and Z. Gu, Plane-based sampling for ray casting algorithm in sequential medical images, Comput. Math. Methods Med. 2013 (2013). doi:10.1155/2013/874517

[9] T. Ling and Q. Zhi-Yu, An improved fast ray casting volume rendering algorithm of medical images, in: 4th International Conference on Biomedical Engineering and Informatics, IEEE, China, 2011. doi:10.1109/BMEI.2011.6098239

[10] D. Qing, J. Chen and Z. Wang, An improved ray-casting algorithm based on AABB, in: IEEE International Conference on Audio Language and Image Processing, China, 2010. doi:10.1109/ICALIP.2010.5684395

[11] L. Sánchez, R. Suárez, J. R. Villar and I. Couso, Some Results About Mutual Information Based Feature Selection and Fuzzy Discretization of Vague Data, IEEE, London, 2007. doi:10.1109/FUZZY.2007.4295665

[12] Y. Shicai, W. Qianjun and L. Rong, A High Efficient and Speed Algorithm of Ray Casting in Volume Rendering, IEEE, China, 2011. doi:10.1109/CECNET.2011.5768256

[13] Y. Tian, M.-Q. Zhou and Z.-K. Wu, A rendering algorithm based on ray-casting for medical images, in: Proceedings of International Conference on Machine Learning and Cybernetics, China, 2008. doi:10.1109/ICMLC.2008.4621002

[14] Y. S. Tsai, U.-C. Yang, I.-F. Chung and C.-D. Huang, A comparison of mutual and fuzzy-mutual information-based feature selection strategies, in: International Conference on Fuzzy Systems, IEEE, India, July 2013.

©2019 Walter de Gruyter GmbH, Berlin/Boston

This article is distributed under the terms of the Creative Commons Attribution Non-Commercial License, which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

