Abstract

Detailed kinetic models play a crucial role in comprehending and enhancing chemical processes. A cornerstone of these models is accurate thermodynamic and kinetic properties, ensuring fundamental insights into the processes they describe. The prediction of these thermochemical and kinetic properties presents an opportunity for machine learning, given the challenges associated with their experimental or quantum chemical determination. This study reviews recent advancements in predicting thermochemical and kinetic properties for gas-phase, liquid-phase, and catalytic processes within kinetic modeling. We assess the state-of-the-art of machine learning in property prediction, focusing on three core aspects: data, representation, and model. Moreover, emphasis is placed on machine learning techniques to efficiently utilize available data, thereby enhancing model performance. Finally, we pinpoint the lack of high-quality data as a key obstacle in applying machine learning to detailed kinetic models. Accordingly, the generation of large new datasets and further development of data-efficient machine learning techniques are identified as pivotal steps in advancing machine learning’s role in kinetic modeling.

1 Introduction

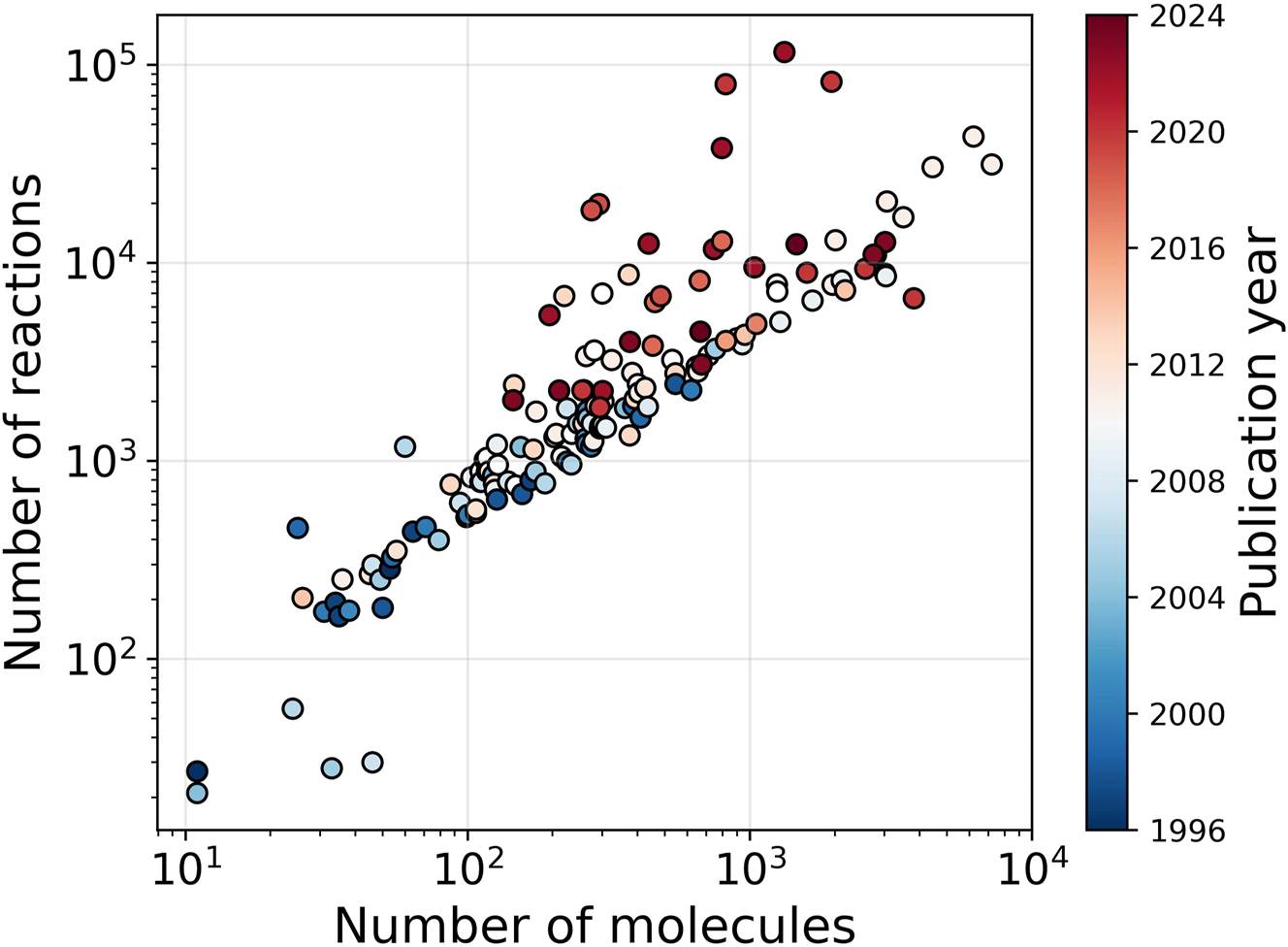

Detailed kinetic models are an extremely powerful tool to gain insight into chemical processes. While experiments yield valuable data on process parameter effects, they often do not allow to gain mechanistic insights in a straightforward way. Detailed chemical kinetic models, on the other hand, provide insight into how the overall reaction proceeds but are tedious to develop. These detailed kinetic models consist of molecules, and reactions linking these molecules. For some processes, such as pyrolysis or combustion processes, these models can contain thousands of molecules and tens of thousands of reactions. Figure 1 shows the size of kinetic models of gas-phase processes developed during the last and previous decades, illustrating that the model size has increased over time.

Evolution of the size of detailed kinetic gas-phase models from 1996 to 2024. Data until 2015 is based on the work of Van de Vijver et al. (2015).

Due to their size, large kinetic models are usually generated automatically. Over time, many groups have developed software for automatic kinetic model generation. Examples of such software tools include Genesys (Vandewiele et al. 2012), RMG (Gao et al. 2016), NETGEN (Broadbelt et al. 1994), MAMOX (Ranzi et al. 1997), and RING (Rangarajan et al. 2012). Automatic kinetic model generators typically operate based on user-defined reaction families. Initial molecules undergo reactions according to these families, producing new species. Subsequently, these newly formed species engage in further reactions via the specified families, resulting in a complex chemical reaction network. These automatically generated reaction networks, however, often need some manual manipulation, due to an incomplete reaction mechanism or an incorrect thermodynamic or kinetic parameter assignment (vide infra) (Faravelli et al. 2019). In practice, most detailed kinetic models are thus generated semi-automatically (Dogu et al. 2021; Miller et al. 2021; Zádor et al. 2011).

Describing the thermodynamics of the molecules and the kinetics of the reactions is essential for gaining insights into the processes these reaction networks model. While small kinetic models can have all their thermochemical and kinetic properties fitted to experimental data, this becomes impractical for larger models due to the vast number of parameters involved, risking overfitting. This can thus lead to different combinations of thermodynamic and kinetic parameters that can describe the experimental trends, due to a cancelation of errors (Katare et al. 2004; Park and Froment 1998). The obtained thermodynamic and kinetic values thus have a high uncertainty. Additionally, experimental data often only provide yields, output concentrations, and conversions, necessitating the selection of a reactor model alongside the kinetic model for regression purposes. For simple processes, a simple reactor model, which describes the concentration of the species as a function of time and the position in the reactor via simple mathematical equations, may be satisfactory. Examples of such simple reactor models include an ideal plug flow reactor model and a continuous stirred-tank reactor model. However, for more complex processes, constructing a suitable reactor model is more challenging (Xu and Froment 1989; Zapater et al. 2024). This increased complexity may introduce additional errors in parameter fitting, leading to wrong mechanistic insights.

Given these challenges, thermodynamics and kinetics are often computed using in silico methods, particularly for large kinetic models. While quantum chemical methods often offer accurate predictions, their computational demand is prohibitive for large mechanisms. Hence, less accurate but faster methods such as group additivity (Benson et al. 1969) and reaction rules are commonly employed. However, since quantum chemistry is time-consuming and faster methods sacrifice accuracy, there exists an opportunity for a more effective approach to calculate the necessary thermodynamic and kinetic properties. Machine learning emerges as a promising candidate to address this gap, given its demonstrated utility in various areas of chemical engineering such as computational fluid dynamics (CFD), rational fuel design (Fleitmann et al. 2023; Kuzhagaliyeva et al. 2022), and synthesis planning (Coley et al. 2018; Kochkov et al. 2021; Pirdashti et al. 2013). Machine learning has also been applied to predict outcomes of chemical processes. The input to the machine learning model is in this case process parameters such as inlet composition, temperature, and pressure. Similar to detailed kinetic models combined with a reactor model, the machine learning model predicts yields, conversions, and outlet concentrations. The usual purpose of these models is process optimization within a narrow range of process parameters. However, these machine learning models cannot be used to gain mechanistic insight into a process due to their “black box” nature. While the machine learning model may provide yield predictions, users lack insight into how these predictions are generated. Moreover, because these machine learning models are trained on experimental data, their performance beyond the training range may be uncertain. As mentioned above, machine learning can be used within a kinetic modeling approach, namely, to predict the thermodynamics and kinetics. The aforementioned method of automatic kinetic model generation, and where machine learning can be employed for property prediction is shown in Figure 2. This figure shows that first a reaction network is created based on the initial molecules and the reaction families. These families can include conventional reactions or more complex reaction types such well-skipping reactions, represented by the brown arrow. The latter reaction type, will, due to the complexity of its underlying physics, be addressed in future work. Once a reaction network is generated, the thermodynamic and kinetic parameters must be assigned. As presented in Figure 2, the thermodynamic property of interest is the Gibbs free reaction energy, determined by the enthalpy of formation, the intrinsic entropy, and the heat capacity of reactants and products. As these properties are temperature dependent, they are often represented by NASA polynomials, which allow to calculate the property value at a given temperature, as shown in equations (1)–(3). In these equations h i represents the enthalpy of a species i, s i its entropy, Cp,i its heat capacity, and R the gas constant.

When looking at liquid-phase processes, also solvation properties should be taken into account. Examples of such properties are the enthalpy of solvation ΔHsolv, or the Gibbs free energy of solvation ΔGsolv. A third type of process is heterogeneous catalytic processes. For modeling these processes, the adsorption enthalpy and entropy are of great importance. Besides the thermodynamic effects, kinetics effects are also important in detailed kinetic models. The kinetics are described by the rate coefficients of the reactions in the models, as shown in Figure 2. The rate coefficients are often represented by the modified Arrhenius equation, presented in equation (4), in order to include temperature dependence. The pre-exponential factor A, the activation energy E a and the temperature exponent coefficient n are the parameters in this equation required to describe the kinetics.

Process of automatic kinetic model generation and the role of machine learning in facilitating thermochemical and kinetic property prediction for this purpose.

Throughout this work when we refer to either thermodynamic or kinetic properties, these are the underlying properties of interest.

In this article, we review the state-of-the-art in machine learning for property prediction of molecules and reactions. The first part deals with the discussion of the methods currently incorporated in kinetic model generators for the calculation of thermochemical and kinetic properties. After that, machine learning approaches are discussed by their three main pillars: the data, the representation of the data, and the mathematical model. This is followed by an assessment of alternative training methods that improve the prediction performance. More specifically, we focus on methods that allow training on multiple datasets. Eventually, we elaborate on the accuracy that can currently be achieved with machine learning and the impact of these accuracies on detailed kinetic models. We end the review with the current limitations that are encountered, hampering the implementation of machine learning in detailed kinetic models.

2 Classical methods for thermodynamic and kinetic property calculation

As mentioned in the introduction, fitting the properties to experimental data is unfeasible for large kinetic models. Therefore, in silico techniques to calculate these properties are frequently used. The most fundamental way to calculate thermochemical properties is via quantum chemistry. In this approach, geometry optimization and energy calculations of molecules are performed via designated quantum chemistry packages like Gaussian (Frisch et al. 2016), TurboMole (Furche et al. 2014), or ORCA (Neese 2012). For the geometry optimization step, fast density functional theory (DFT) methods are usually accurate enough. Once the optimal geometry is obtained, the energy of the structure must be calculated. For these energy calculations, the earlier used DFT methods are usually not accurate enough. Therefore, more accurate quantum chemical methods such as coupled cluster methods or CBS-QB3 (Montgomery et al. 1999) are required. The use of these more advanced methods comes at the expense of a higher computational time. Furthermore, to achieve chemical accuracy i.e., deviations lower than 4.184 kJ/mol, corrections on the initial result might be required to compensate for inappropriate assumptions. A common example of such an assumption is the harmonic oscillation approximation. Here, the vibrational partition functions are calculated by assuming a harmonic potential at the vicinity of the minimum. This approach only requires a computationally-friendly calculation of the vibrational frequencies, but may lack accuracy. One popular way to go beyond this approximation is using the 1D-hindered rotor scheme (Pfaendtner et al. 2007). In this scheme, the potential energy surface for the rotation around a bond is calculated for each rotatable bond. This increases the accuracy of the property calculation but comes again at the expense of a larger computational time since a lot of additional DFT-calculations must be performed for the calculations of the potential energy surfaces.

Next to gas-phase properties, solvation properties can also be calculated quantum chemically (Cramer and Truhlar 1999; Klamt 2011; Tomasi et al. 2005). Many implicit solvation models are available in popular quantum chemical packages, which can model solvent effects without much increase in the computational time (Cramer and Truhlar 1999). Popular examples of implicit models are the polarizable continuum model (PCM) (Miertuš et al. 1981) and the solvation model based on density (SMD) (Marenich et al. 2009). These methods, however, often lack accuracy. Another option is to model the solvent explicitly. This often yields more accurate results, but, due to the larger system size, requires more computational time. A third option is using the conductor like screening model for real solvents (COSMO-RS) method (Eckert and Klamt 2002; Klamt 1995; Klamt and Eckert 2000). This semi-empirical method calculates solvent properties by matching the quantum chemically calculated COSMO surfaces of the solute and solvent. This approach shows satisfactory results, but its performance on certain molecule classes such as radicals remains unclear.

In addition, quantum chemical calculations are also valuable for property prediction of compounds in catalytic reactions. In heterogeneous catalysis, adsorption properties of reactants and products are required for the development of heterogeneous catalytic models. These properties can be determined ab initio but are challenging to predict as (I) the adsorption site is often ill-defined, (II) the obtained values generally have lower accuracy, (III) and the calculations are much more computationally intensive. Here, we will elaborate on the nature of these three challenges. Within heterogeneous catalysis, it is often up to debate what the exact nature of the adsorbed species is. In metal catalysts, the type of site such as bridge, terrace, or edge determines the stability of the adsorbed complex. Moreover, the catalyst structure in operando conditions can differ from what is experimentally determined at other conditions. Also in zeolite catalysis, the location of the acid site in the framework influences the adsorption properties. It is unfortunately not straightforward to determine the exact structure of the active site as the exact location of the Bronsted acid site is often unknown. Second, the complex nature of the adsorbed complex limits the accuracy of ab initio calculations. Adsorption properties can be calculated statically (i.e., via transition state theory) at a DFT level of theory. However, these approaches often fail to predict the adsorption entropy accurately, even though heuristics exist (De Moor et al. 2011a). For zeolite adsorption properties typical accuracies are in the order of ∼8 kJ/mol (Berger et al. 2023), while more accurate methods exist for metal sites (Sauer 2019). To overcome this shortcoming, molecular dynamics calculations, in which the geometry and energy of a species is tracked over time, can be performed which allow to achieve chemical accuracy. These increase the accuracy of the calculations, but also significantly increase the computational cost.

Kinetic properties can be obtained by following the same procedures described above for the reactants and transition state, as presented by transition state theory (Truhlar et al. 1996). Finding the correct transition state structure is significantly more challenging than finding the geometry of a stable species. This is because finding a transition state structure requires a good initial guess, which is hard to automate and therefore often requires human intervention. A bad initial guess could namely result in finding a too energetic saddle point or not converge to a saddle point at all. Overall, the quantum chemical procedure thus consists of many time-consuming steps. For reaction networks containing thousands of molecules and tens of thousands of reactions, these calculations are unfeasible, certainly if they require human interventions. Therefore, less accurate but faster and automated methods are often relied on to calculate thermochemical and kinetic properties.

The most popular computationally friendly approach to calculate molecular properties is group additivity, introduced by Benson et al. (1969). This method relies on the assumption that the thermodynamic properties of a molecule can be calculated by summing a certain contribution from every group in the molecule. The contribution that every group gives is usually obtained by regression towards ab initio or experimental values. The downside of this approach is that every group contribution is constructed based on the local neighborhood of that group, ignoring longer-range interactions. This problem has been partially mitigated by adding correction terms like non-nearest-neighbor interactions and ring strain corrections (Cohen 1996). Although these corrections improve the predictions, the accuracy might be insufficient for certain molecules, especially complex structures like polycyclic molecules. Also for the fast prediction of solvation properties, different methods have been proposed. Mintz and coworkers (Mintz et al. 2008; Mintz et al. 2009), for example, introduced a linear free energy relationship to predict the enthalpies of solvation. Group additive methods have also been applied to predict solvation properties (Khachatrian et al. 2017). The downside of these methods is that they only consider one solvent or one class of solvents. Consequently, the fitting procedure must be repeated for every new solvent or solvent class. Likewise, group additive approaches have also been developed for adsorbed species to calculate the adsorption energy (Gu et al. 2017; Salciccioli et al. 2010; Wittreich and Vlachos 2022). One major drawback of this approach is that new group additive values (GAVs) are required for every catalyst surface. A d-band model (Greeley et al. 2002; Hammer and Nørskov 1995) can be used to extrapolate toward other catalysts but has some limitations (Esterhuizen et al. 2020; Gajdoš et al. 2004; Vojvodic et al. 2014; Xin and Linic 2010; Xin et al. 2014). Furthermore, group additive models have also been developed for zeolite frameworks. Yu et al. (2023) developed a group additive method for the estimation of thermodynamic properties for a wide range of compounds relevant to methanol-to-olefins in a SAPO-34 catalyst. Besides group additivity, other linear relationships have been developed for the estimation of adsorption properties in heterogeneous catalysts. For example in zeolites, De Moor et al. (2011b) and Nguyen et al. (2011) found a linear relation between the adsorption energy and the number of carbon atoms for both linear paraffins and linear olefins, which have been shown to be also accurate for branched hydrocarbons (Denayer et al. 1998). In another approach, taken by RMG-cat, an automatic catalytic reaction network generator, adsorption properties are estimated based on the similarity of the queried compound and an existing library. This library comprises small hydrocarbons, nitrogenates, and oxygenates on metal sites (Goldsmith and West 2017).

There are many methods to predict kinetic properties in a fast manner. Evans and Polanyi introduced a famous linear free-energy relationship to predict the activation energy of a reaction based on the reaction energy (Evans and Polanyi 1936). This relationship, which has been used extensively in kinetic models (Froment 2013), has been extended to include more effects (Roberts and Steel 1994), and account for non-linear relationships (Blowers and Masel 2000). Similar to thermodynamic properties, kinetic properties can be calculated via group additive methods. A popular approach to employ group additivity in kinetics is to define a reference reaction with a corresponding value for a given reaction family. GAVs are calculated for all possible structural changes to the surrounding groups of the reactive center (with respect to the reference reaction) (Atkinson 1987; Saeys et al. 2004; Sabbe et al. 2008b). The target kinetic property then equals the sum of the value of the reference reaction and all group contributions of the made structural changes. Note that this is different from the group additivity scheme used for the calculation of thermodynamic properties. Here, for the calculation of kinetic properties, only groups in the surrounding of the reactive center (usually the atoms in the alpha-position) are considered, while for thermodynamic properties, all groups in the molecule are considered. This approach has been used to calculate activation energies, as well as pre-exponential factors of reactions (Paraskevas et al. 2015, 2016; Sabbe et al. 2008b, 2010; Van de Vijver et al. 2018). Another popular approach to predicting kinetics is rate rules. In this approach, a rule to calculate the rate of a certain type of reaction is constructed. A ‘type of reaction’ is here usually defined as all reactions with a certain substructure in and around the reactive center. The rate rule for such a reaction type can range from an Evans-Polanyi relationship to more complex rules (Johnson and Green 2024).

Overall, these fast methods to calculate thermodynamic and kinetic properties are significantly less accurate than the quantum chemical results on which they are based. Quantum calculations often do yield satisfactory results but are computationally too expensive, in particular for large kinetic models. Machine learning, on the other hand, is a promising technique to obtain fast predictions that are closer to quantum chemical accuracy than the traditional approximative approaches.

3 Machine learning for molecular property prediction

In this and the next chapter, machine learning methods to predict thermodynamic and kinetic properties will be discussed. Machine learning models transform the input (molecule or reaction) into the targeted output (thermodynamic or kinetic properties). During the training step, the model learns how to predict the output by regression toward training data. Once the model is trained, its performance can be assessed by evaluating the predictions of a test dataset. The quality and the amount of the data thus have a strong influence on the final performance of the model. Data is thus the first important pillar for the creation of machine learning models. This data (training or test) can usually not be fed to the machine learning model ‘as is’. First, it needs to be represented in a way that can be treated by a machine learning model. This introduces the second pillar: representation. Once the data (training or test) is converted to a suitable representation, it is fed into a machine learning model. The choice of the mathematical model is also an important step in generating machine learning models. Therefore, model choice is the third and last pillar on which machine learning models are built. In this and the following chapter, machine learning models for the prediction of molecular and reaction properties will be described via these three pillars.

3.1 Thermodynamic datasets

The first step in creating a machine learning application is collecting data. This is one of the most important elements determining the success of the machine learning model as low-quality or sparse data is detrimental to the final model performance. Different datasets exist that contain a high amount of molecules, such as GDB-17 (Ruddigkeit et al. 2012) or the PubChem database (Kim et al. 2019). These datasets, however, only contain molecules, and no thermodynamic properties linked to them. Therefore, these datasets are not suitable for the prediction of thermodynamic properties. For machine learning methods aimed at the prediction of thermodynamic properties, the data must link the input of the model with the targeted output. One of the most popular datasets containing gas-phase thermodynamic properties is QM9 (Ramakrishnan et al. 2014). This dataset was constructed by first taking a subset of the GDB-17 dataset. More specifically, only non-ionic molecules with a maximum of nine heavy atoms (all atoms excluding hydrogen) are considered. Furthermore, all molecules containing atoms other than carbon, hydrogen, oxygen, nitrogen, and fluorine are also excluded from the subset. Lastly, all charged molecules except zwitterionic species are removed from the dataset. This resulted in the QM9 dataset containing 133,885 molecules, for which thermodynamic properties have been calculated using the DFT method B3LYP/6-31G(2df,p). In this way, the dataset links the 3D geometry of molecules with the following important properties: the zero-point vibrational energy, the internal energy at 0 K, the internal energy at 298.15 K, the enthalpy at 298.15 K, the free energy at 298.15 K, and the heat capacity at 298.15 K. The accuracy of the calculations was tested by comparing the atomization enthalpies in the dataset with enthalpies calculated by the more accurate G4MP2, G4, and CBS-QB3 methods. For all of these methods, the mean absolute difference in the enthalpy of atomization was around 20 kJ/mol. Other properties such as the energy of the HOMO and LUMO are also present in this dataset but are less relevant for kinetic modeling purposes. Besides the 3D geometry of molecules, line-based identifiers such as SMILES and InChI are provided. More details about how molecules can be represented will be given in the next section. Although this dataset has been used widely, it has some serious shortcomings regarding kinetic modeling. First, the achieved accuracy of the calculations is very low. Furthermore, the dataset contains a significant amount of less occurring species, such as molecules containing three- or four-rings. Lastly, the dataset only contains closed-shell neutral species, which is not suitable for radical mechanisms. The latter problem has been mitigated by the work of St. John et al. (2020). They constructed a dataset containing 40,000 closed-shell and 200,000 radical species. The closed-shell molecules in this dataset were constructed by taking all neutral molecules from the PubChem database. Only molecules containing carbon, hydrogen, nitrogen, and oxygen, with a maximum of 10 heavy atoms were considered. In contrast to the QM9 dataset, zwitterionic species are not present in this dataset. Radicals were generated by breaking all single, non-ring bonds of the closed-shell molecules homolytically. Thermodynamic properties were calculated for both the closed- and open-shell molecules using the M06-2X/def2-TZVP DFT method. This M06-2X functional is considered to be more accurate than the B3LYP functional used for the QM9 dataset. With this method, important thermodynamic properties such as enthalpy and free energies were calculated. The molecules in this dataset are represented by their 3D structures, as well as their SMILES string, similar to the QM9 dataset. For completeness, we note ANI-1 as another large dataset containing 20 million data points (Smith et al. 2017). These data points correspond to different off-equilibrium conformations of 57,462 small molecules. The usefulness of these off-equilibrium conformations is rather limited for direct machine learning of thermodynamic properties. This dataset, however, can be used to train neural network potentials. These kinds of neural networks predict the structure of the potential energy surface, which can then be used to optimize molecules and predict their properties. This technique is however outside the scope of this review. More information about machine learning potentials can be found in the following reviews (Behler 2021; Kocer et al. 2022; Manzhos and Carrington 2021). A downside of the discussed datasets is that the properties therein are calculated via DFT methods. As already indicated, more advanced quantum chemical calculations might be required to obtain sufficiently accurate predictions of thermochemical properties. These more advanced techniques are computationally more demanding and might require human interventions, for example, to perform the 1D-hindered rotor scheme. These difficulties prevent the construction of large databases with more accurately predicted properties. However, smaller datasets, usually not for machine learning purposes, have been constructed. A major disadvantage of this data is that it is spread around the scientific literature. It is therefore unfortunately challenging to collect all thermodynamic data present in the literature. Nonetheless, Table 1 summarizes a selection of dataset sources and their specifications.

Selection of thermodynamic databases found in the literature.

| Molecule type | Number of data points | Molecule representation | Properties | Method | Source(s) |

|---|---|---|---|---|---|

| Oxygenates (including radicals) | 450 | SMILES | Δ

f

H° ΔS° C p |

CBS-QB3 + 1D-HR + SOC + BAC |

Paraskevas et al. (2013) |

| Hydrocarbons (including radicals) | 233 | SMILES | Δ f H° | CBS-QB3 + BAC |

Sabbe et al. (2005) |

| Hydrocarbons (including radicals) | 253 | SMILES + 3D geometry | ΔS° C p |

B3LYP/ 6-311G(d,p) + 1D-HR |

Sabbe et al. (2008a) |

| Carbenium ions | 165 | Name | Δ

f

H° ΔS° C p |

CBS-QB3 + 1D-HR + SOC + BAC |

Ureel et al. (2023a) |

| Alkanes, alkyl hydroperoxides (including radicals) | 192 | SMILES | Δ

f

H° ΔS° C p |

STAR-1D or STAR-1D_DZ | (Ghosh et al. 2023b, a) |

| Oxygenated polycyclic aromatic hydrocarbons (including radicals) | 92 | Name + 3D geometry | Δ

f

H° ΔS° C p |

G3 + 1D-HR |

Wang et al. (2023) |

| Molecules relevant to atmospheric chemistry | 323 | 3D geometry + Lewis structure | Δ

f

H° ΔS° C p |

G3 | Khan et al. (2009) |

| Silicon-hydrogen compounds | 135 | Lewis structure | Δ

f

H° ΔS° C p |

G3 | Wong et al. (2004) |

| H, C, O, N, and S containing species | 371 | InChI | Δ f H° | CBS-QB3 + 1D-HR + SOC + BAC |

Pappijn et al. (2021) |

| Small combustion molecules | 219 | Name + Lewis structure | Δ

f

H° ΔS° C p |

RQCISD(T)/cc-PV∞QZ + 1D-HR + SOC + BAC |

Goldsmith et al. (2012) |

| Cyclic hydrocarbons and oxygenates (including radicals) | 3,926 | SMILES + InChI | Δ

f

H° ΔS° C p |

CBS-QB3 + SOC + BAC |

Dobbelaere et al. (2021a) |

| Radicals containing C, O, and H | 2,210 | SMILES | Δ

f

H° ΔS° C p |

CBS-QB3 + AEC + BAC |

Pang et al. (2024) |

| H, C, O containing species | 1,340 | SMILES + InChI | Δ

f

H° ΔS° C p |

G3 + 1D HR |

Yalamanchi et al. (2022) |

| Halocarbons (including radicals) | 16,813 | SMILES | Δ

f

H° ΔS° C p |

G3 + 1D HR |

Farina et al. (2021) |

-

Only datasets containing enthalpy of formation, standard entropy, and heat capacities are considered. The following abbreviations have been used: 1D-HR, 1D-hindered rotor; SOC, spin-orbit corrections; BAC, bond additive correction; AEC, atom energy corrections.

One downside of these different data sources is shown in the ‘Method’ column of Table 1. Since there is not one gold standard method to perform quantum chemical calculations, these calculations are often performed at different levels of theory. The different (biased) errors of these methods introduce an additional challenge in the subsequent training of the machine learning model. Another shortcoming of this data is the gaps in the molecular space. Combining the datasets in Table 1 will namely miss important molecule classes, such as species containing both oxygen and a halogen atom or ionic species other than hydrocarbons. To identify these gaps and to gather data from various sources, different initiatives have been started to develop large databases from literature data. The RMG database, for example, combines data from 45 different libraries (Johnson et al. 2022). Another example containing enthalpies of formation is the Active Thermochemical Tables (ATcT) (Ruscic et al. 2004). A downside is that for these collected datasets, different calculation methods are used. Datasets containing experimentally measured values can overcome this problem. The NIST Computational Chemistry Comparison and Benchmark Database (CCCBDB) contains, next to computational values, experimental thermochemical properties of more than a thousand molecules. Similarly, the commercial DIPPR database contains around 2000 molecules with the corresponding experimentally measured enthalpy of formation and entropy (Bloxham et al. 2021; Thomson 1996). However, overall, a large dataset containing accurately predicted or measured thermochemical properties does not exist at this moment. This limited size of the accurate datasets is one of the reasons that makes traditional methods such as group additivity still the most popular choice to predict thermodynamic properties while making detailed kinetic models.

All previously presented datasets comprise thermochemical properties of gas-phase molecules. To include machine learning in liquid-phase kinetic models, databases containing the Gibbs free energy of solvation are essential. The Minnesota Solvation database contains 3037 solute-solvent pairs for which the free energy of solvation has been experimentally determined (Marenich et al. 2020). For both the solvent and solute the 3D coordinates, calculated at the M06-2X/MG3S level of theory, are included. Similarly, the CompSol database contains experimental free solvation energies at different temperatures and pressures for 14,102 solvent-solute pairs (Moine et al. 2017). Another literature dataset is FreeSolv, published by Mobley and Guthrie (2014). This dataset contains 643 small molecules for which the hydration free energy in water has been measured. Vermeire and Green (2021) combined the aforementioned datasets and an additional dataset developed by Grubbs et al. (2010) into one big database, comprising 10,145 solvent-solute pairs including 291 solvents and 1,368 different solutes with their experimental Gibbs free energy of solvation. Along with this experimental dataset, they have also developed a quantum chemically calculated dataset containing one million solvent-solute combinations. The Gibbs free energies of solvation in this dataset were calculated using the COSMO-RS methodology described in Section 2. Using this methodology, the calculation of the thermodynamic properties of N different species in M different solvents only requires N + M quantum chemical calculations.

This combinatorial advantage is not present for adsorption properties. Different databases exist for the properties and structure of catalytic materials such as metal catalysts, zeolites, and metal-organic-frameworks (MOF) (Jain et al. 2013; Kirklin et al. 2015). However, these databases do not include adsorption properties. The determination of the adsorption energies of N species on M different surfaces usually requires N × M quantum chemical calculations, making the construction of large databases time-consuming. Similar to gas-phase species, catalytic thermodynamic data is often spread around different publications (Andersen et al. 2019; Dickens et al. 2019; Esterhuizen et al. 2020; García-Muelas and López 2019; Schmidt and Thygesen 2018; Xu et al. 2021). However, also for surface species, databases that combine different data sources have been developed. One example is the pGrAdd software of the Vlachos group (Wittreich and Vlachos 2022). The package allows the calculation of thermodynamics based on group additivity. For these group additive schemes, data of surface species have been collected. This dataset contains standard enthalpies, entropies, and heat capacities, mainly of species on the Pt(111) surface. Another example is the Catalysis-Hub project (Winther et al. 2019). This project collected reaction energies, including adsorption energies, from more than 50 publications in one database. Similarly, a collection containing experimental datasets was constructed by Wellendorff et al. (2015). However, the limited size of this dataset makes it unusable for training large machine learning models. A bigger dataset was created in the Open Catalyst project, namely the OC20 dataset (Chanussot et al. 2021). This dataset was created by performing DFT relaxations on 1,281,040 catalyst-adsorbate combinations. In this publication three community challenges were also launched, each with their designated dataset. The first task is to predict the energy of and the forces on a (non-optimized) geometry. The second challenge is to predict the relaxed structure starting from the initial geometry. These two tasks are thus mainly relevant for the development of neural network potentials. This field of machine learning is, as mentioned before, out of the scope of this review. The third task, namely the prediction of the relaxed energy from the initial structure, is more relevant for this review. The dataset corresponding to this task links hundreds of thousands of initial geometry guesses to their relaxed energy and is therefore very relevant for the direct prediction of adsorption energies. Predicting the energy from an initial geometry guess could namely mean that the adsorption energy could be calculated in fractions of a second. In general, surface species data faces the same limitations as gas-phase data, but even stronger due to the increased computational complexity of determining adsorption properties. The data is often spread around literature and only encompasses a certain range of the molecular space. Furthermore, most databases are constructed by performing static quantum chemical calculations, instead of the more accurate molecular dynamics approach. The absolute accuracy of this data can therefore be questioned.

Overall, there clearly are limitations when looking at the data pillar for the prediction of thermodynamic properties. Large datasets already exist, but are usually calculated at a low level of theory. More accurate data is spread around the literature and contains important gaps i.e., for some molecule classes there is no accurate data available. Liquid-phase properties are less available than gas-phase data. Nonetheless, large datasets describing solute-solvent pairs and their free energy of solvation exist. This is not the case for adsorbed species on catalytic surfaces, for which there is little data describing their thermodynamic properties around literature and where quantum chemical calculations still lack accuracy to obtain chemically accurate properties at a reasonable computational cost. The collection of data is thus a major challenge in the creation of machine learning models predicting thermodynamic properties.

3.2 Molecular representation

Once the data is obtained, the molecules need to be computationally represented for the machine learning model. An ideal computational representation should answer to certain criteria which will be outlined here. A first desired property of a representation method is its uniqueness. This means that one molecule, using a representation method, can only be represented in one manner. If this is not the case, one molecule may be represented in two different ways, leading to two different property predictions by the machine learning model. This property may seem trivial, but in what follows, an example will be shown for which this is not the case. Secondly, the representation must be unambiguous. This means that a certain representation can only correspond to one molecule. If not, two molecules with the same representation will always get the same prediction from the machine learning model, which is clearly undesirable. A third important factor is that the representation must be easy to generate. The aim of the machine learning models discussed here is to obtain a fast prediction of the thermodynamic properties. If the representation step in this process takes a long time, the main advantage of machine learning is lost.

One of the most common ways to represent molecules is line-based string identifiers. These are identifiers in which a molecule is represented by a single string. The most used line-based identifier is the simplified molecular input line entry system (SMILES) string (SMILES – A simplified chemical language). This SMILES string describes a molecule unambiguously and is easy to generate. However, the SMILES string is not unique i.e. for one molecule many correct SMILES can be generated. This shortcoming can be remedied by using canonical SMILES, for which a mathematical algorithm re-orders the atoms and corresponding string, making it a unique representation. A challenge with this representation is that it is based on the bonds between the atoms. Deriving the correct bonds and bond orders may be challenging when only the 3D coordinates of the atoms are known. Another popular string-based method to identify molecules is the International Chemical Identifier (InChI) (Heller et al. 2015). This representation is unique, unambiguous, and easy to generate. A downside of InChI with respect to SMILES is that it is less human-readable. A third string-based representation of molecules that is worth mentioning is SELFIES (Krenn et al. 2019). The major advantage of this representation is that it is robust, meaning that when certain grammar rules are followed, every possible SELFIES string is related to a valid molecule. Therefore, this representation is promising to be used in generative machine learning models. However, due to its novelty, it has not been widely used in the general representation of molecular data, or as input for predictive machine learning models (Krenn et al. 2022). The aforementioned line-based identifiers only represent molecules in a 2D manner. They are in fact unambiguous using a 2D view but may be ambiguous when considering 3D conformations of molecules. Different conformers will namely be represented in the same way.

To properly represent a conformer of the molecule, 3D information must be incorporated into the representation. It is unfeasible to store all the 3D information i.e., the coordinates of the atoms, in a string. Therefore, a 3D molecule is usually represented via specific file formats such as xyz-files, mol-files, or sdf-files. While all these text-based representations (string or file) are readable for computers, they can usually not be used directly as input to a machine learning model. An exception to this is the recently emerging language models. These models can directly use the SMILES representation as input.

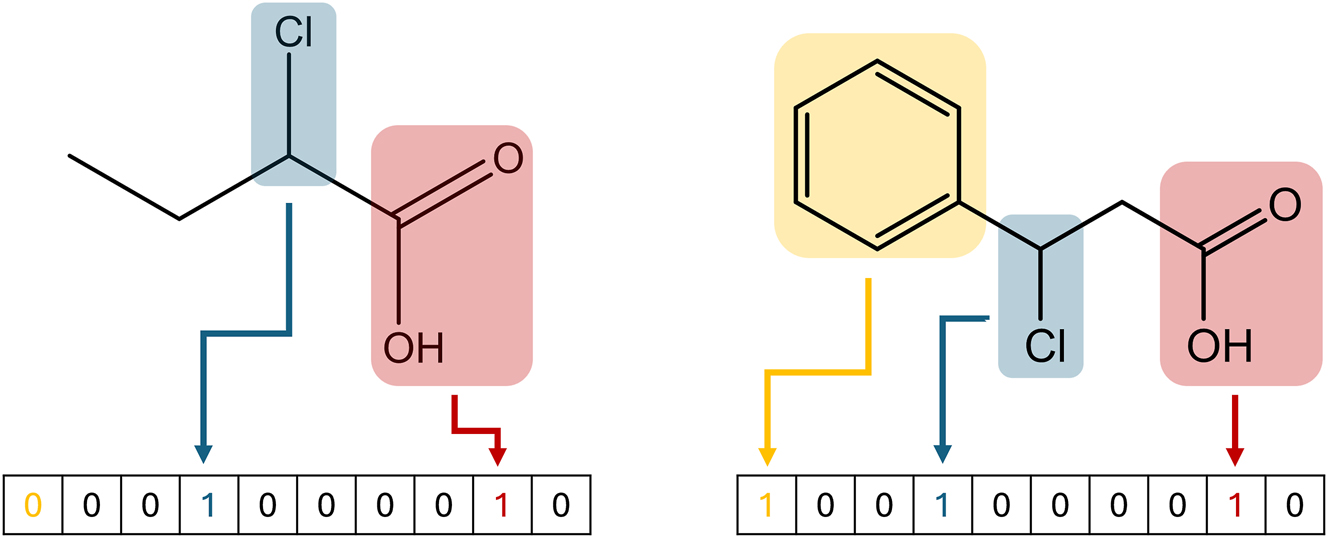

However, mostly, the aforementioned representations should first be converted to some sort of mathematical representation of the molecule. One of the most common representations for machine learning purposes is the numerical vector. Different features, such as molecular mass or number of atoms can be chosen as elements of this vector. It is important to consider the ambiguity when constructing vectors in this manner. Only selecting the molar mass and number of atoms would namely lead to many molecules having the same representation. Furthermore, the chosen feature must be easy to calculate. For example, using quantum chemical properties of the molecule would slow down the representation step significantly. Over time, many open-source and commercial packages to automatically generate such properties have been developed. Examples of such tools include Mordred (Moriwaki et al. 2018), ChemoPy (Cao et al. 2013b), Dragon (Mauri et al. 2006), and others (Yap 2011; Cao et al. 2013a). By using these tools, users can create vectors containing up to thousands of features in a reasonably short time period and without much manual intervention. In addition to these features, also structural features can be added. The structural features describe the presence or count of a substructure in the molecule. A popular way to include these substructures is the molecular access system (MACCS) key. This key encodes 166 substructures into a single representation vector, as shown in Figure 3.

Generation of the MACCS key for two different molecules. Every element in the vector corresponds to a different substructure. If the substructure is present in the molecule, the corresponding value is set to 1. Else, the value is set at 0.

This is an example of a well-established fingerprint that can be used for various purposes. These fingerprints are often included in cheminformatic packages such as RDKit (Landrum 2013), OpenBabel (O’Boyle et al. 2011), and CDK (Steinbeck et al. 2003). Other examples of such fingerprints are the RDKit fingerprint and the extended-connectivity fingerprint (ECFP) (Rogers and Hahn 2010). This ECFP fingerprint starts by assigning an initial representation to each atom. For a user-defined number of iterations, each atom representation is then updated based on the representations of the neighboring atoms. In this way, each atom has a final representation not only describing itself, but also its environment. These atom representations are then combined to obtain one molecular representation. The advantage of these built-in fingerprints is that they do not require expert knowledge. Furthermore, these fingerprint methods have the advantage of being unique and easy to generate. However, in some cases, for example, if two molecules contain the same groups or substructures, the representation might be ambiguous. Another downside is that these representations are not tailored to the targeted purpose. Furthermore, they do not include 3D information of the molecule.

One classical way of including 3D information is using Coulomb matrices (CM) (Montavon et al. 2012; Rupp et al. 2012). The diagonal elements of this matrix represent the atoms, and the off-diagonal elements contain the Coulomb repulsion between two nuclei. An advantage of using such a representation of the geometry is that it is invariant to external translations or rotations. Usually, a machine learning model requires a fixed-length vector as input. Therefore, this matrix must first be converted to a fixed-size matrix. This can be done by adding zeros (zero padding) until the desired matrix size is obtained. To transform this matrix into a vector, one can list all the elements of the matrix in vector form. More often, the eigenvalues of the matrix are calculated and put into a fixed-length vector (Montavon et al. 2012). Ordering the eigenvalues in descending order makes the representation invariant to atom numbering. Since the representation is now invariant to translation, rotation, and numbering, it is a unique representation of the molecule. Furthermore, the representation is easy to generate and unambiguous, even using a 3D view. Another method for adding geometrical information in the molecular representation is using histograms of distances, angles, and dihedrals (HDAD), first introduced by Faber et al. (2017) and further developed by Dobbelaere et al. (2021a). First, histograms are made of all distances, angles, and dihedral angles between atom types. Then, for each histogram, a number of Gaussians is fitted as shown for three histograms in Figure 4. After that, a vector containing the probability that a feature is found under each Gaussian is created for each geometric feature (distance, angle, dihedral). This vector has a length equal to the total number of Gaussians. These vectors are then added to obtain a representation vector of the molecule. A disadvantage of this approach is that the representation of a molecule is dependent on the dataset in which it is included. Again, this representation is invariant to translation, rotation, and atom numbering of the molecule, and is thus unique. Furthermore, it describes a molecule unambiguously in a 3D manner. Fitting the Gaussians over the histograms may be challenging, but once this is performed, the representation vector is also easy to determine. Besides the two geometrical representation methods presented here, many other approaches can be used (Faber et al. 2017; Hansen et al. 2015; Plehiers et al. 2021).

Histograms and fitted Gaussians of the C–C distance, the C–C–H angle, and the C–C–H–H dihedral angle.

The earlier mentioned ECFP is a fingerprint that is based on the graph representation of the molecule. In this molecular graph, every node corresponds to an atom of the molecule, and every edge corresponds to a bond of the molecule. Once this graph is created, a priori defined operations are performed on the graph to obtain a numerical representation of the molecule. However, since the use of graph neural networks (GNNs) has recently become more popular, the transformation to a numerical vector is no longer needed. GNNs can namely take graphs as input to predict molecular properties. Therefore, next to string and vector representations, a graph is the third way to represent a molecule for machine learning purposes. For a molecular graph to be suited as input of a GNN, feature vectors must be assigned to the nodes (atoms) and/or edges (bonds). Common atom features to include in the vector are atomic number, number of bonded hydrogen atoms, number of non-hydrogen bonds, and implicit valence (Pathak et al. 2020; Rogers and Hahn 2010; Yang et al. 2019). Also more chemically inspired features such as electronegativity can be added. The most common choice of bond feature is the bond type i.e., single double, triple, and aromatic. This can be extended to more specific features such as whether the bond is conjugated or whether the bond is in a ring (Pathak et al. 2020; Yang et al. 2019). It is also possible to include 3D information of a molecule in its graph representation (Gasteiger et al. 2020). Gilmer et al. (2017), for example, included an encoding of the bond length in the bond feature vector when the geometry of the molecule was available. These graphs give a unique representation of the molecule when the atomic number is chosen as an atom feature and the bond order as a bond feature. It is also unambiguous in a 2D view. If 3D information is added, it can also describe different conformers unambiguously. Furthermore, using cheminformatic packages like RDKit, the molecular graph and its features are also easy to determine. For predicting properties, these graphs are treated by GNNs. By doing this, a latent vector representation of the molecule is created. However, since this vector representation is created by a machine learning model, this will be discussed in Section 3.3. For completeness we mention that the representation graph is sometimes constructed in a different manner. In these cases the nodes still correspond to the atoms in the molecule, but the edges are assigned differently. Namely, an edge can be added between atoms (nodes) that are closer to each other than a user-defined cutoff distance (Batzner et al. 2022; Gasteiger et al. 2020; Schütt et al. 2018, 2021). Setting this cutoff distance very high can even lead to a fully connected graph. In graphs created with a cutoff distance bond orders cannot be used as edge features. Therefore, the user must rely on geometrical features such as the interatomic distance.

An overview of important properties of the discussed representation methods is shown in Figure 5. As mentioned before, unique means that one molecule corresponds to only one representation. Unambiguous means that one representation corresponds to one molecule (not considering conformers). The 3D information property shows if any conformational information is contained in the representation. In Figure 5, the box is half-shaded if it is the user’s choice whether to include it. A property is easy to generate if it can be created within fractions of a second. Note that if 3D information is used (like in CM, HDAD, and possibly graphs), the representation is only easy to generate if the geometry is already available. A representation is classified as human readable if it is simple to determine from the representation what the corresponding molecule is. For this category, half-shaded represents that it requires experience to deduce the initial molecule. Furthermore, a representation is tunable if the user can make choices in the representation, to tune it for the desired task. The last row shows if a representation requires bond knowledge to be generated. If this is required and only the 3D coordinates of the atom are given, the bonds in the molecules must be generated based on interatomic distances. These bonds can be assigned in different ways, influencing the uniqueness of the representation.

Overview of important properties of the discussed molecular representation methods. A cell is colored in gray if the representation follows the desired property. For the 3D information property the cell is half-filled if it is the user’s choice whether or not to include the 3D information. For the human-readable property, the cell is half-filled if it requires experience of the user to interpret the representation.

For the prediction of solvation properties in a single solvent, the molecular representation stated above can be used (Ferraz-Caetano et al. 2023; Goh et al. 2017; Hutchinson and Kobayashi 2019; Rong et al. 2020; Wu et al. 2018; Yang et al. 2019). However, for predicting solvation properties in a variety of solvents, a solvent representation must be created. Since solvents are molecules, they can be represented by a SMILES string. Again, this is usually not sufficient for machine learning purposes. To use it as input, the string must be translated into a numerical representation. One option is to embed both the solute and solvent in a feature vector (Chen et al. 2023; Liao et al. 2023a; Subramanian et al. 2020). Both the representation of the solute and solvent are then used as input for a machine learning model. In this case, care should be taken so that the representations are tailored to describe solvation effects. Solvation is namely dominated by intermolecular forces, while the gas-phase thermodynamic properties are determined by intramolecular interactions. An example of such a tailored representation is the COSMOtherm feature vector. These features are well suited to describe solvation effects but require quantum chemical calculations. However, when the number of different solvents is low in comparison to the total number of data points, the few time-consuming quantum chemical calculations are justifiable. Another option is to represent both the solute and solvent with a graph (Chung et al. 2022; Pathak et al. 2020; Vermeire and Green 2021). Here, again, the atom and/or bond features are preferable specific to describe the solvation process. In principle, it is also possible to have a graph input for the solute and a vector input for the solvent. Machine learning models that can treat these inputs will be discussed in the next section.

For the prediction of adsorption energies, selecting features to construct a suitable representation of the catalyst is the most popular approach. Often, the d-band center and other density of state features are used together with some limited feature selection tools (Andersen et al. 2019; Fung et al. 2021; Goldsmith et al. 2018; Nayak et al. 2020; Toyao et al. 2018; Xu et al. 2021). The downside is the requirement of DFT calculations of the catalytic materials to obtain the molecular representation. This is thus only justifiable if the number of different catalysts is low in comparison to the total number of data points. To lower the computational cost, it is, however, possible to estimate these d-band features at a reduced computational cost (Noh et al. 2018). Even more computationally demanding than using d-band features is using DFT-calculated energies of certain species-catalyst combinations to calculate the adsorption energies of another species-catalyst pair (Andersen and Reuter 2021; García-Muelas and López 2019; Tran and Ulissi 2018). The generation of this representation is computationally less intensive than the quantum chemical determination of the adsorption properties for all adsorbate-catalyst pairs but is still too time-consuming for kinetic modeling applications. In addition to these computationally expensive representations, it is also possible to use easy-to-calculate features such as electronegativities and atomic radii (Andersen and Reuter 2021; Esterhuizen et al. 2020). Ideally, one does not need to compute ab initio properties as an input to obtain model predictions. Therefore, Xie and Grossman (2018) used the atom coordinates of the metal crystal as an input for their model to facilitate material property prediction. It should be noted that this is only an inexpensive representation if the geometry of the catalyst is already known. Also for the calculation of adsorption energies, graph representations have been used (Pablo-García et al. 2023). In this approach, the adsorbate and catalyst were encoded as one graph, and the node feature vector was a one-hot encoding of the corresponding element. Overall, by employing either the known geometry or other easy-to-determine features as model input, a much more user-friendly and faster prediction is achieved which is essential for the automatic generation of kinetic models.

In conclusion, there are three main types of molecular representation for machine learning purposes: string representation, vector representation, and graph representation. Also when looking at solvation or adsorption properties, molecules can be represented via a vector or graph representation. The advantage of the graph representation is that it contains a lot of information. It contains information about the atoms of the molecule, and with which bonds they are connected. Such a high amount of information is usually not contained in a vector representation. This is because all information must be compiled into a fixed-length vector. The least amount of chemical information is contained in a SMILES string. This might be counterintuitive since this SMILES is often the starting point of graph representations. However, for a machine learning model, the SMILES input is just a string without any additional meaning. The graph representation is thus the most complete representation of the molecule. However, in the next chapter, a major disadvantage of this graph representation will be touched upon.

3.3 Machine learning models for molecules

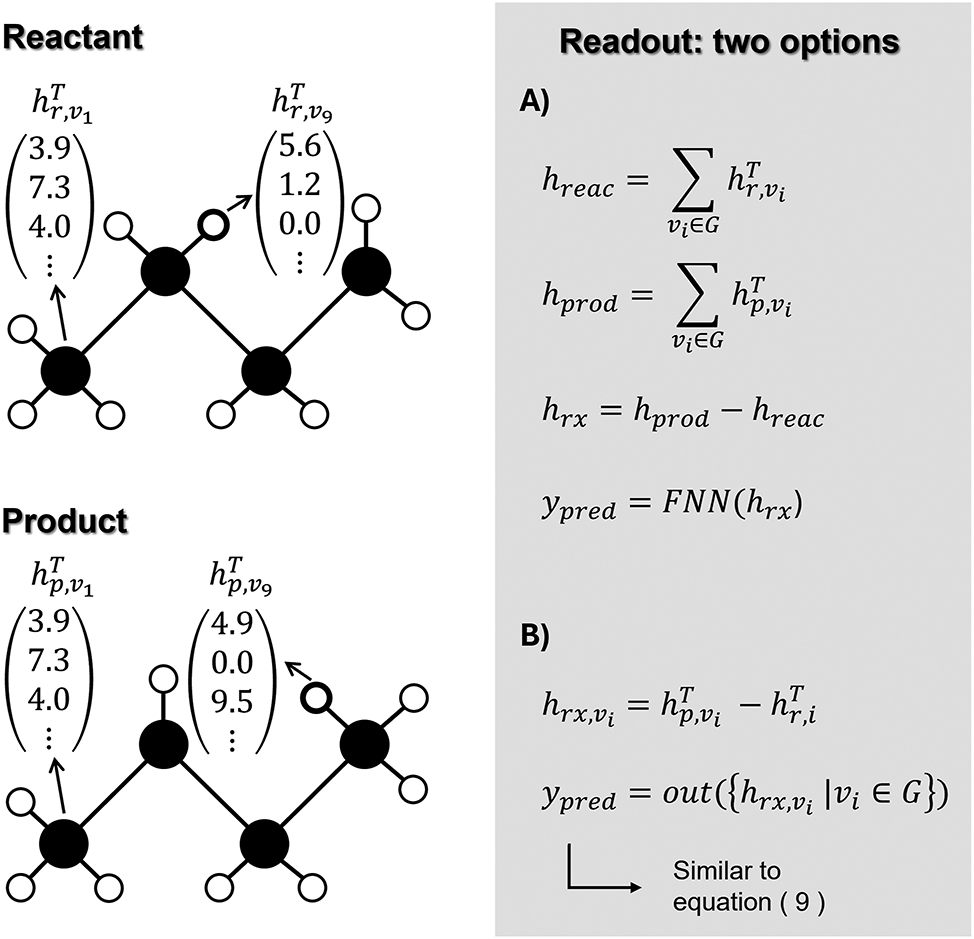

Following the view of Dobbelaere et al. (2021b), the third big pillar of machine learning, besides data and representation, is the machine learning model. The type of model that can be used depends on the type of representation that is chosen. If the molecule is represented by a numerical vector, a wide variety of machine learning models are suited for the task. Any model that transforms the input vector to the target output can be chosen. The simplest option is linear regression. However, due to their simplicity and linearity, these models are not within the scope of this machine learning review. Besides these linear models, more complex methods, such as support vector regression (SVR) (Dashtbozorgi et al. 2012; Yalamanchi et al. 2019, 2020), kernel ridge regression (KRR) (Faber et al. 2017; Noh et al. 2018; Rupp et al. 2012) or feedforward neural networks (FNN) (Dashtbozorgi et al. 2012; Dobbelaere et al. 2021a; Li et al. 2017; Yalamanchi et al. 2019) are often used for the prediction of thermochemical properties. When the molecule is represented by a mathematical graph, these classical methods are not suitable. In this case, GNNs are used. These are neural networks specifically dedicated to processing graph data. Many different GNNs have been developed to predict molecular properties (Wieder et al. 2020). Mostly, these models are based on iteratively updating the node representation based on its surroundings. However, other methods have been designed that update this representation based on the complete graph (Kearnes et al. 2016; Wu et al. 2018). Here, we will discuss message passing neural networks (MPNN), which is the most used architecture for predicting thermochemical properties (Ma et al. 2020; Wieder et al. 2020). As discussed in the representation section, the input to such models is a graph G. Each node in this molecular graph has an associated feature vector. This is a requirement if a node-centered MPNN, which is the most occurring type, is used. This feature vector will be denoted as x

v

, where v is the node of which this is the feature vector. Often, also the edges have an associated feature vector. The vector of the edge between node v and w will be denoted as e

vw

. In node-centered MPNNs, every node v has a hidden state

This initialization function can be as simple as init(x

v

) = x

v

. However, then, the size of the hidden state

After initialization, the iterative procedure of MPNNs starts. Every iteration consists of two stages: the message passing stage and the update stage. In the message passing stage, every node receives information from its neighboring nodes. The messages the node receives are then added to create the overall message

In this equation N(v) denotes the collection of all neighbors of node v and M

t

the message function at iteration t. The function M

t

, which can be different for every iteration, is chosen by the user. The function can be as simple as

Again, the complexity of this update function can range from simple arithmetic operations to a neural network. One special, but frequently used update function is the gated recurrent unit (GRU) (Feinberg et al. 2018; Liao et al. 2019; Withnall et al. 2020). This learned unit, originally designed for recurrent neural networks, has found its popularity in GNNs in recent years. After T iterations, every node has a learned representation describing its environment. This approach thus very much resembles the earlier mentioned ECFP, with the significant difference that here, the final atom representations are learned, whereas in the ECFP procedure, the atom representations are fixed. Finally, the hidden states of all nodes are converted to the targeted thermodynamic property, as is shown in equation (9).

In this equation, the output function out can be any function, learned or fixed, that transforms the node representations to the prediction(s). Mostly, it is a learned function in which the first step is adding all the hidden representations,

Representation of the construction of the molecular graph of 3-hexene, and a visualization of the iterative step in the MPNN.

More details about MPNNs and GNNs can be found in the work of Gilmer et al. (2017) and the review of Wieder et al. (2020). One major advantage of using a graph representation in combination with a GNN is that the model can optimize the latent vector representation of the molecule for the given task by tuning the model parameters. Depending on the task and even the training data, the molecule will thus be represented by a different vector. Another advantage is that, since this vector representation is learned, expert knowledge is not really required to construct a machine learning model. A drawback of using GNNs is that they contain a high number of parameters and are as a consequence very data-intensive.

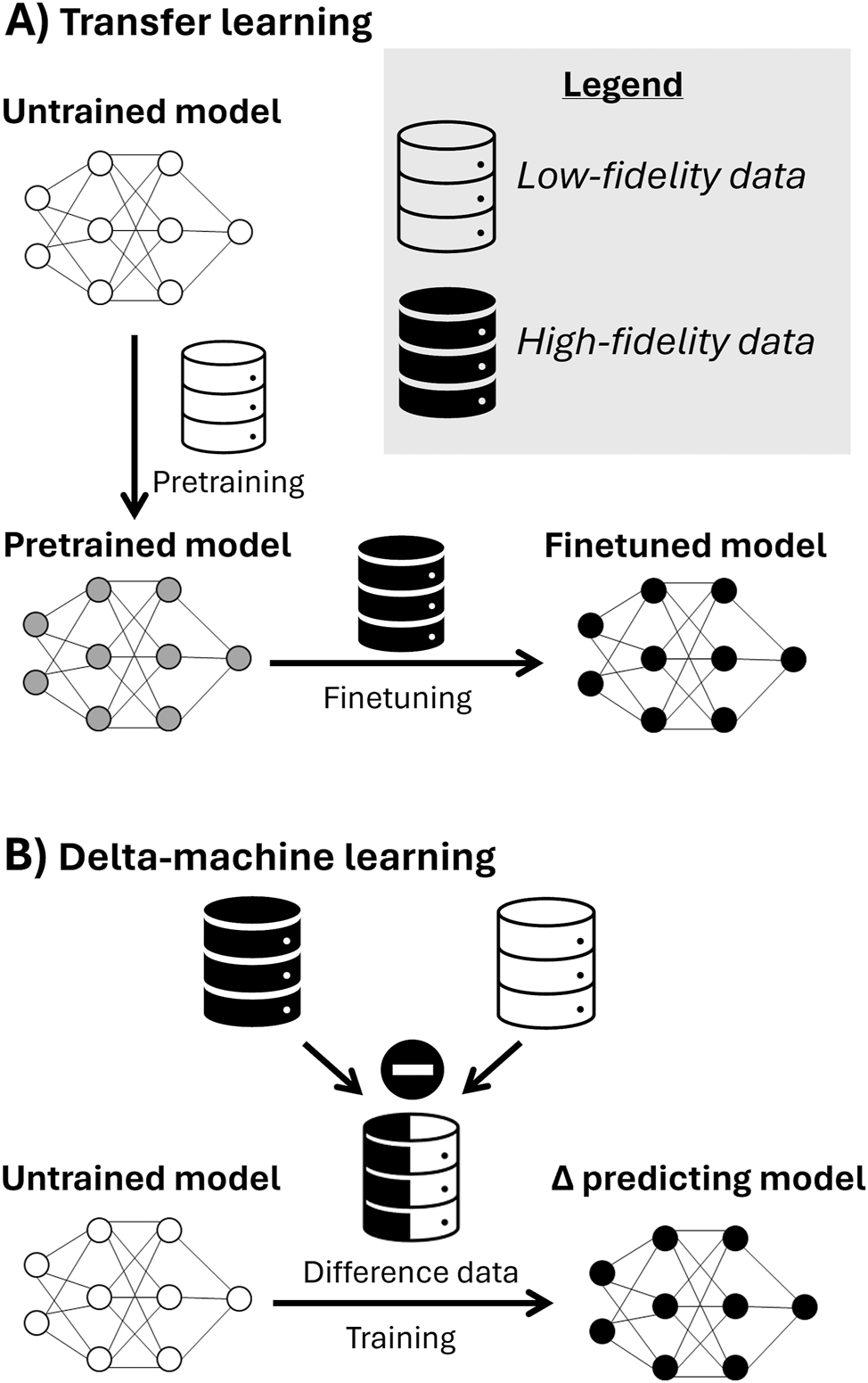

The last type of molecular representation that could be used as input for a machine learning model is the SMILES string itself. In these machine learning models, the SMILES strings are converted to a latent representation. Historically, recurrent neural networks (RNNs) were used to perform this task (Gómez-Bombarelli et al. 2018; Xu et al. 2017). Over recent years, great advances have been achieved in the field of natural language processing (NLP) (Devlin et al. 2018; Vaswani et al. 2017). The main novelty of these works is that language can be treated only using attention mechanisms, removing the need for RNNs. More details about this new technique can be found in the original publications (Devlin et al. 2018; Vaswani et al. 2017). The first part of such language models is usually a Bidirectional Encoder Representations from Transformers (BERT) (Chithrananda et al. 2020; Wang et al. 2019; Wu et al. 2022; Zhang et al. 2021). This encoder transforms a string, here the SMILES of the molecule, to a mathematical vector representation. After this BERT encoder, an FNN is usually used to predict the target property from the vector representation (Wang et al. 2019; Zhang et al. 2021). It is possible to train this combination of the BERT encoder and FNN via the conventional method. However, these two parts can also be trained separately. Alternatively, a transfer learning approach is frequently used to improve the prediction accuracy. This separate training and transfer learning approach will be discussed in a following section.

The models used for the prediction of solvation properties are similar to the ones used for the prediction of gas-phase properties. For the prediction of solvation energies in the same solvent for all data points, the solvent does not need to be represented, and the solute can be embedded similarly to gas-phase molecules. Therefore, the same model architectures can also be used. When the solvent differs along the dataset, the solvent must also be represented. When the solvent and solute are each represented by a feature vector, the vectors can be concatenated to obtain one vector describing the solute-solvent pair. This vector can then be used to calculate the target property using any of the aforementioned models that transform a vector into a numerical output (Chen et al. 2023; Liao et al. 2023a). If the solute and solvent are represented by a graph, GNNs can be used to treat them. After the iteration step, the latent representation of both are concatenated and then fed into an FNN (Chung et al. 2022; Vermeire and Green 2021). Also other techniques to predict solvation energies exist. For example, some works calculate interactions between atoms of the solute and solvent, mimicking the physical solvation process (Lim and Jung 2021; Pathak et al. 2020). Based on these interactions, the solvation energies are then calculated. As mentioned before, it is also possible to have a graph input for the solute and a vector input for the solvent. The solvent representation is then appended to the latent solute representation in the GNN.

The prediction of adsorption energies can be done in an analogous way to the prediction of solvation energies. In principle, the catalyst feature vector can be appended to the adsorbate descriptor. This larger vector can then again be used as input to a classical machine learning model (SVR, KRR, FNN) (Nayak et al. 2020; Toyao et al. 2018). However, most models for predicting adsorption energy are for a single adsorbate. Using the catalyst descriptor solely is thus sufficient in this case. Fung et al. (2021) created a machine learning model that could handle varying catalysts and adsorbates. After some steps latent feature vectors were obtained which were then concatenated. This vector was then fed into a feedforward neural network to obtain the adsorption energy prediction. Pablo-García et al. (2023) also predicted the adsorption energies of various adsorbate-catalyst combinations. The complete adsorbate-catalyst pair was embedded in a single graph. Therefore, a single GNN could be used to predict the adsorption energy. Besides this work, others have also used GNN to predict adsorption energies or related properties using a similar approach (Ghanekar et al. 2022; Li et al. 2023). While the number of machine learning models for direct thermochemical property prediction for heterogeneous catalysts remains limited, many efforts have been made in the area of neural network potentials for catalytic processes. Especially to predict the OC20 datasets, many machine learning potentials have been developed (Gasteiger et al. 2021; Liao et al. 2023b; Zitnick et al. 2022). Furthermore, important steps are being taken in model development for the prediction of crystal properties (Park and Wolverton 2020; Xie and Grossman 2018). Xie and Grossman (2018) developed a crystal graph convolutional neural network specifically for material property prediction. Park and Wolverton (2020) improved upon this model by incorporating information on Voronoi tessellated crystal structures. These types of models allow a complete and unique representation of the materials which is an important prerequisite for the prediction of adsorption energies in heterogeneous catalysts.

When selecting a model, an important consideration is its data requirements. Some models necessitate less data for training compared to others. For instance, training a large neural network (i.e., one with a high number of parameters) with a small dataset often leads to overfitting. Conversely, employing the same dataset to train a smaller neural network or simpler models like SVR or KRR tends to mitigate overfitting. This phenomenon can pose challenges when using a graph representation since it must always be paired with GNNs, which typically have numerous parameters. Thus, while a graph offers the most complete representation, it may not be optimal when data availability is limited. Using a string representation presents a similar issue. The BERT encoder, for example, comprises numerous parameters that require fitting, potentially resulting in overfitting. Nonetheless, as previously mentioned, alternative training methods can be employed to address this issue, which will be discussed in Section 5.

4 Machine learning for reaction property prediction

4.1 Kinetic datasets

Machine learning of reaction properties is less common in literature than the prediction of molecular properties. One of the main reasons for this gap is the availability of data. Reaction datasets are not as well established as molecular datasets. The first reason for this is the computational cost of constructing one. Constructing reaction databases usually requires searching, optimizing, and calculating the energy of transition states. This search and optimization procedure on transition states is computationally more demanding than it is on stable species. This higher computational cost leads to smaller datasets. Furthermore, the theoretical reaction space is larger than the molecular space (Stocker et al. 2020). Building a general machine learning model to predict properties for a wide range of inputs, would thus require more data when treating reactions, in comparison with having molecules as input. The lack of sufficient qualitative data is thus a major bottleneck for machine learning of kinetic properties. Nonetheless, efforts have been made to construct reliable datasets. One example is the datasets designed by the Green group (Grambow et al. 2020b; Spiekermann et al. 2022a). First, molecules involving hydrogen, carbon, nitrogen, and oxygen, with six or seven heavy atoms were selected from the GMB-7, which is a subset of the GDB-17 database. Starting from the optimized geometry of these molecules, transition states were sought via a single-ended method at the B97-D3/def2-mSVP level of theory. In single-ended methods, transition states are searched for starting from the reactant(s), while not having any knowledge about the products (Zimmerman 2015). After checking the validity of the transition state, the reaction energy and the activation energy were calculated at the B97-D3/def2-mSVP level of theory. This resulted in a dataset of approximately 16,000 reactions. To increase the accuracy, the geometry optimizations and energy calculations were reperformed at the ωB97-D3/def2-mSVP and the CCSD(T)-F12a/cc-pVDZ-F12 levels of theory for a dataset of approximately 12,000 reactions. Remarkable about this dataset are the types of reactions. Since the reactions are generated via an automatic single-ended method, a high variety of reaction types is obtained. This can both be an advantage and a disadvantage, depending on the aim of the machine learning model. A downside of these reaction datasets is that they only contain unimolecular reactions. Although the reactions can be reversed to obtain bimolecular reactions, the dataset still describes a limited range of the chemical reaction space. Either the reactants or products would consist of only one species. This limitation is not present in the work of Zhao et al. (2023b). They calculated the kinetics for almost 177,000 reactions. First neutral closed-shell molecules were selected from the PubChem database. The selected molecules consisted of C, H, O, and N, and contained no more than 10 heavy atoms. On these initial reactants, reactions are enumerated where two bonds are broken, and two bonds are formed. Both the reactant and the product are then used as input for a double-ended transition state search, at the GFN2-xTB level of theory. In such a double-ended search, a transition state is sought based on both the reactant(s) and product(s). Often, this search resulted in transition states relating to unexpected reactants and/or products. These unintended reactions were retained to increase the diversity of the dataset. Because of this, also bimolecular reactions and reactions breaking or forming less or more bonds are included in this dataset. For these reactions, the energetics were calculated at the B3LYP-D3/TZVP level of theory. In contrast to the previous dataset, not only reaction and activation energies were calculated, but also Gibbs free reaction and activation energies. A downside of the aforementioned reaction datasets is that the kinetic properties are calculated at a level of theory with a relatively low accuracy i.e., all but one are calculated using a DFT method. Furthermore, these datasets are constructed by automatically generating possible reactions. The first ones were constructed by searching for a transition state on the potential energy surface. The last database was constructed by breaking and forming bonds in the molecular graph. Both approaches may lead to reactions that are irrelevant or even unrealistic to occur in reality. The kinetics of a more relevant class of reactions was calculated by von Rudorff et al. (2020). They calculated the activation energy for thousands of E2 and SN2 reactions. These reactions are relevant in synthetic chemistry, but less prevalent in high-temperature reaction networks. Data considering high-temperature reactions is, similar to molecular data, usually spread around literature. Table 2 shows a selection of high-temperature reaction data calculated at a high level of theory that is available in the literature.

Selection of high-temperature kinetic databases calculated at a high level of theory found in the literature.

| Reaction type | Number of data points | Reaction representation | Properties | Method | Source(s) |

|---|---|---|---|---|---|

| Hydrogen transfer between oxygenates | 118 | Drawing + TS geometry | Arrhenius | CBS-QB3 + 1D HR |

Paraskevas et al. (2015) |

| Radical addition and β-scission of oxygenates | 66 | Drawing + geometries | Arrhenius | CBS-QB3 + 1D HR |

Paraskevas et al. (2016) |

| Intramolecular hydrogen abstraction | 448 | Drawing + TS geometry | Arrhenius | CBS-QB3 + 1D HR |

Van de Vijver et al. (2018) |

| Carbon-centered radical additions and β-scissions | 51 | Drawing + TS geometry | Arrhenius | CBS-QB3 + 1D HR |

Sabbe et al. (2008c) |

| Nitroethane flame reactions | 729 | Chemkin file format | Arrhenius | QCISD(T)/CBS | Zhang et al. (2013) |

| Tetrafluroropropene combustion reactions | 1,530 | Chemkin file format | Arrhenius | CBS-QB3 | Needham and Westmoreland (2017) |

-

Arrhenius properties include the pre-exponential factor A, activation energy E a , and may include the temperature coefficient n.

This table shows two types of data sources. The first four sources contain data to construct kinetic GAVs, whereas the last two sources contain data to construct detailed kinetic models. The advantage of the data generated for GAV purposes is that it only contains data of a strictly defined reaction class. This facilitates the generation of a machine learning model aimed at the prediction of the kinetics of that reaction class solely. The downside of these sources is their limited number of data points. On the contrary, when calculating kinetics for kinetic models, more data points are generated. However, these reactions span a wide range of reaction classes, which makes the creation of reaction class-specific machine learning models infeasible. Similar to molecular datasets, the RMG database has collected data from diverse sources in one database. Again, the downside of such a collected database is that the included properties may have a different calculation method and accuracy. The same issue is present in the NIST Chemical Kinetics Database (Mallard et al. 1992). However, the advantage of this database is that it, besides computed kinetic properties, also contains experimentally measured properties, which are generally more accurate.