Abstract

Medical imaging plays a crucial role in the accurate diagnosis and prognosis of various medical conditions, with each modality offering unique and complementary insights into the body’s structure and function. However, no single imaging technique can capture the full spectrum of necessary information. Data fusion has emerged as a powerful tool to integrate information from different perspectives, including multiple modalities, views, temporal sequences, and spatial scales. By combining data, fusion techniques provide a more comprehensive understanding, significantly enhancing the precision and reliability of clinical analyses. This paper presents an overview of data fusion approaches – covering multi-view, multi-modal, and multi-scale strategies – across imaging modalities such as MRI, CT, PET, SPECT, EEG, and MEG, with a particular emphasis on applications in neurological disorders. Furthermore, we highlight the latest advancements in data fusion methods and key studies published since 2016, illustrating the progress and growing impact of this interdisciplinary field.

1 Introduction

Medical imaging has revolutionized healthcare, providing accurate and non-invasive methods for diagnosing numerous medical conditions and forming a crucial foundation for patient care and treatment (Zhao et al. 2023). Each imaging modality offers unique insights into the body’s anatomy and physiological processes, making them indispensable in modern medicine. However, no single imaging technique is comprehensive enough to capture all aspects of a disease. To address this limitation, data fusion techniques have emerged, integrating multiple imaging modalities to enhance diagnostic accuracy and deepen our understanding of disease mechanisms, ultimately enabling more effective treatments (Arco et al. 2023; Martinez-Murcia et al. 2024). Data fusion integrates diverse modalities, each contributing valuable information about different disease aspects (Koutrintzes et al. 2023). This integration is crucial for achieving a holistic understanding of a patient’s condition, and it has become a key focus in recent research. Some imaging systems, such as dual scanners, facilitate this integration by acquiring multimodal images simultaneously, improving diagnostic precision and efficiency.

Imaging modalities vary in the type of information they provide and in spatial resolution. Each has inherent advantages and limitations, making it necessary to combine multiple modalities for more comprehensive and accurate assessments. For instance, structural magnetic resonance imaging (MRI) offers excellent soft tissue contrast, making it ideal for tumor detection and clinical research without the radiation exposure of computed tomography (CT). However, structural MRI’s sensitivity to functional information is limited compared to positron emission tomography (PET) and functional magnetic resonance imaging (fMRI) (Musafargani et al. 2018; Pei et al. 2024). Conversely, electroencephalography (EEG) (Liu et al. 2024c; Wu et al. 2024) provides high temporal but low spatial resolution, while magnetoencephalography (MEG) strikes a balance with excellent temporal and satisfactory spatial resolution. Therefore, integrating these modalities through cross-information fusion improves disease detection and diagnosis.

The complexity and volume of medical imaging data have significantly elevated the role of machine learning (ML) (Mirzaei and Adeli 2016a; Mirzaei and Adeli 2022). ML also includes reinforcement learning (Raj and Mirzaei 2023) and deep learning (DL), which has made considerable advancements in tackling disease-related challenges (Ruiz et al. 2023; Urdiales et al. 2023; Yousif and Ozturk 2023). DL, in particular, has revolutionized medical image analysis, with applications in segmentation (Díaz-Francés et al. 2024; Mirzaei and Adeli 2018; Zhou et al. 2019), registration, data fusion (Zhao et al. 2019), image retrieval (Zhu et al. 2023b), lesion detection (Kamnitsas et al. 2017), and the diagnosis of various neurological disorders such as epilepsy (Nogay and Adeli 2020a), autism spectrum disorder (Nogay and Adeli 2020b, 2023, 2024; Colonnese et al. 2024), major depressive disorder (Shahabi et al. 2024), and schizophrenia (Fahoum and Zyout 2024). These advancements underscore DL’s superiority over traditional ML techniques, as DL models often require less preprocessing and feature engineering, thanks to their ability to learn complex patterns directly from raw data (Razzak et al. 2018). Moreover, DL is instrumental in combining inter- and intra-modal imaging data, driving progress in medical research and diagnostics. The disadvantage of DL models, however, is their need for substantial computing resources and larger datasets.

This paper highlights the evolving field of multimodal imaging data fusion, focusing on its applications to neurological disorders. Recent advancements in fusion methodologies are systematically reviewed, emphasizing techniques developed since 2016. The review delves into the integration of imaging modalities such as MRI, CT, PET, single photon emission computed tomography (SPECT), and EEG, examining how their fusion can leverage complementary strengths to provide more comprehensive diagnostic insights. The review covers both ML-based techniques and traditional statistical and non-ML approaches, offering a holistic perspective on this rapidly advancing field.

2 Overview of medical imaging modalities

Medical imaging encompasses a diverse array of techniques used to generate detailed visualizations of the internal anatomy and physiological processes of the human body. These modalities can be categorized into structural and functional imaging, as described in the following sections.

2.1 Structural imaging

Structural imaging provides detailed anatomical information, making it invaluable for identifying physical abnormalities and assessing organ structures. Key modalities include:

Magnetic resonance imaging (MRI): MRI uses strong magnetic fields and radio waves to produce high-resolution images of soft tissues. It is superior to CT in terms of soft tissue contrast and is widely used for tumor detection and brain imaging. Importantly, MRI does not expose patients to ionizing radiation (Feng et al. 2020), making it a safer option for repeated imaging throughout the course of treatment. However, MRI has limitations in imaging bone structures and may require longer scan times, which can be challenging for patients. MRI provides various contrasts and techniques based on tissue characteristics, such as T1-weighted, T2-weighted, fluid-attenuated inversion recovery (FLAIR), and diffusion-weighted imaging (DWI).

X-ray: X-rays use ionizing radiation to produce two-dimensional images of dense structures in the body, such as bones, teeth, and certain soft tissues. They are widely used for diagnosing fractures, infections, and lung conditions, as well as for routine screenings like chest X-rays. While quick and cost-effective, X-rays provide limited soft tissue contrast compared to advanced modalities like CT or MRI, and are rarely used for direct brain imaging. However, they can be utilized in specific scenarios related to brain imaging such as skull fractures, neurosurgical planning, or emergency settings where CT and MRI are not available.

Computed tomography (CT): CT scans use X-rays to create detailed cross-sectional images of the body. They are particularly effective for visualizing bone structures and detecting acute conditions, such as fractures or internal bleeding. While CT provides valuable diagnostic information, its exposure to ionizing radiation limits repeated use. Advances in low-dose CT techniques aim to mitigate this concern, making the modality safer for follow-up imaging in certain cases.

2.2 Functional imaging

Functional imaging techniques provide insights into the body’s physiological processes, revealing how organs and tissues function. This is crucial for understanding diseases related to organ performance. Major modalities include:

Electroencephalography (EEG): EEG measures the electrical activity of the brain within a given period such as 20 or 30 min (Lian and Xu 2023). It is used to study brain functions in real-time by detecting changes in neural activities in different regions of the brain (Ji et al. 2024).

Functional MRI (fMRI): fMRI detects brain activity by measuring changes in blood flow and oxygenation levels, leveraging the blood-oxygen-level-dependent (BOLD) contrast. This technique maps functional areas of the brain, providing insights into neural connectivity and brain function. fMRI is extensively used in neuroscience research to study cognitive processes, sensory responses, and motor functions, and it plays a critical role in diagnosing and monitoring neurological disorders such as epilepsy, stroke, and neurodegenerative diseases such as Alzheimer’s disease (Cheng et al. 2018; Mirzaei and Adeli 2016b).

Positron emission tomography (PET): PET scans use radiotracers to monitor metabolic activity, making them useful for cancer detection, neurological disorders, and cardiac health. PET is often combined with structural imaging such as MRI or CT to improve diagnostic accuracy.

Single photon emission computed tomography (SPECT): SPECT imaging, similar to PET, utilizes gamma rays to assess organ function. Unlike PET, which detects gamma rays resulting from positron annihilation, SPECT directly measures gamma rays emitted by the radiotracer. SPECT is commonly used to diagnose conditions like heart disease and certain brain disorders, offering valuable information about blood flow and tissue function. Although SPECT is generally more cost-effective and widely available than PET, it has lower spatial resolution and sensitivity.

3 Imaging fusion types

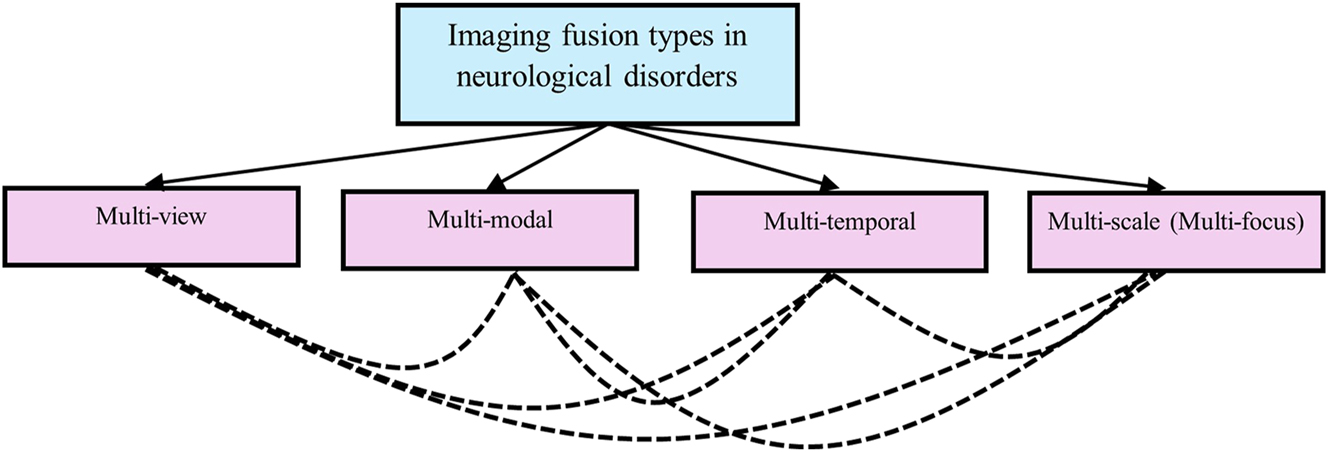

Image fusion can be classified into several categories, as outlined by Dogra et al. (2018). Figure 1 provides a visual overview of the current research trends in each fusion category. Detailed explanations of each fusion type are presented below.

Figure 1: Imaging fusion types used in neurological studies.

3.1 Multi-view fusion

This approach integrates images captured from the same modality but taken under different conditions or from various angles at the same time. It enhances the understanding of a scene by combining multiple perspectives of the same subject. This technique is frequently applied in MRI imaging, where data is acquired in various planes such as sagittal, coronal, and axial. Typically, multi-view MRI imaging involves two-dimensional slices from each plane, which are then combined to extract comprehensive features.
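The plane extraction described above can be sketched in a few lines. This is a minimal illustration only: the `volume[z][y][x]` axis order, the helper names, and the mean-intensity "feature" are assumptions made for the example, and a real pipeline would typically use an array library and a learned feature extractor instead.

```python
def orthogonal_views(volume, z, y, x):
    """Return the axial, coronal, and sagittal slices through voxel (z, y, x).

    `volume` is a nested list indexed as volume[z][y][x]; this axis order is
    an assumption of the sketch, not a convention stated in the text.
    """
    axial = [row[:] for row in volume[z]]                       # fixed z: (y, x) plane
    coronal = [plane[y][:] for plane in volume]                 # fixed y: (z, x) plane
    sagittal = [[row[x] for row in plane] for plane in volume]  # fixed x: (z, y) plane
    return axial, coronal, sagittal


def mean_intensity(slice_2d):
    """Toy per-view feature: mean intensity of a 2D slice."""
    values = [v for row in slice_2d for v in row]
    return sum(values) / len(values)


def multi_view_features(volume, z, y, x):
    """Concatenate one toy feature per view into a single feature vector."""
    return [mean_intensity(view) for view in orthogonal_views(volume, z, y, x)]


# Toy 2 x 3 x 4 volume whose voxel value encodes its own (z, y, x) index.
volume = [[[100 * z + 10 * y + x for x in range(4)] for y in range(3)]
          for z in range(2)]
axial, coronal, sagittal = orthogonal_views(volume, z=1, y=2, x=3)
features = multi_view_features(volume, z=1, y=2, x=3)
```

Each view yields a 2D slice with a different shape, which is why multi-view models usually process the planes in separate branches before combining their features.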

Multi-view imaging has become a key technique in the study and diagnosis of neurological disorders, with significant advancements in its application to diseases like Alzheimer’s and brain tumors. Hon and Khan (2017) focused on the axial plane of 3D MRI scans for Alzheimer’s classification, utilizing deep learning with transfer learning. They fine-tuned the fully connected layers of their network using a limited number of MRI images, providing a foundation for future work in multi-view imaging. Building on this, Neffati et al. (2019) explored the potential of coronal-view MRI data in predicting Alzheimer’s disease. Tian et al. (2019) introduced multi-view data fusion techniques based on EEG signals for detecting epileptic seizures. By integrating various views of EEG data (the frequency, time, and time-frequency domains), this work highlighted the versatility of multi-view fusion across different neurological disorders.
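For signals such as EEG, a "view" is a representation domain rather than a viewing plane. The sketch below builds a time-domain view and a frequency-domain view of one channel and concatenates them. It is a hedged illustration, not the method of Tian et al. (2019): the function names, the band limits, and the naive discrete Fourier transform are assumptions for the example, and the time-frequency view is omitted for brevity.

```python
import cmath
from math import pi, sin


def time_domain_view(signal):
    """Time-domain features: mean and (population) variance."""
    n = len(signal)
    mean = sum(signal) / n
    variance = sum((s - mean) ** 2 for s in signal) / n
    return [mean, variance]


def frequency_domain_view(signal, sampling_rate, bands):
    """Frequency-domain features: summed spectral power per band.

    Uses a naive O(n^2) DFT over the non-negative frequency bins, which is
    fine for a toy signal; an FFT routine would be used in practice.
    """
    n = len(signal)
    spectrum = [
        abs(sum(s * cmath.exp(-2j * pi * k * t / n) for t, s in enumerate(signal))) ** 2 / n
        for k in range(n // 2 + 1)
    ]
    return [
        sum(p for k, p in enumerate(spectrum) if low <= k * sampling_rate / n < high)
        for low, high in bands
    ]


# Toy channel: a 10 Hz sine sampled at 64 Hz for one second (assumed values).
sampling_rate = 64
signal = [sin(2 * pi * 10 * t / sampling_rate) for t in range(sampling_rate)]
bands = [(4, 8), (8, 13), (13, 30)]  # illustrative theta, alpha, beta limits in Hz

time_view = time_domain_view(signal)
freq_view = frequency_domain_view(signal, sampling_rate, bands)
fused_views = time_view + freq_view  # simple concatenation of the two views
```

In this toy case all the spectral power falls in the second band, which contains the 10 Hz component.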

Mirzaei (2021) combined sagittal, coronal, and axial views of structural MRI (sMRI) images, using an ensemble of convolutional neural networks (CNNs) for Alzheimer’s disease classification. Similarly, Chen et al. (2022) extracted sagittal, coronal, and axial views from MRI scans and fed them into a CNN to capture complementary spatial information; integrating features from these three orientations improved diagnostic accuracy compared to single-view approaches. Multi-view data have also been applied to fast brain tumor segmentation, focusing on necrotic and peritumoral edema images, further expanding the utility of multi-view techniques in neuroimaging. For early detection of Alzheimer’s disease, Zayene et al. (2024) employed multi-view PET imaging to classify Alzheimer’s disease into different stages. Li et al. (2024d) also used multi-view MRI for detecting Alzheimer’s disease, reinforcing the importance of multi-view techniques in early diagnosis. More recently, Wu et al. (2025) developed a multi-view MRI approach for segmenting brain and scalp blood vessels, utilizing cascade integration to combine results from each view. Rahim et al. (2025) also applied multi-view MRI to detect the early progression from mild cognitive impairment (MCI) to Alzheimer’s disease, further demonstrating the impact of multi-view imaging in tracking disease progression.

3.2 Multi-modal fusion

This type of fusion combines images obtained from different imaging modalities, such as MRI, CT, PET, or other modalities, to leverage the unique advantages of each and provide a more comprehensive representation of anatomical or functional information than can be achieved using a single modality. This fusion technique is prevalent in neuroimaging studies and holds great potential for disease diagnosis by combining complementary data sources.

Multi-modality fusion has been extensively applied in brain imaging and has received significant attention for its ability to integrate complementary information. Calhoun and Sui (2016) demonstrated the power of multi-modal fusion by integrating three modalities: functional MRI (fMRI), structural MRI (sMRI), and diffusion MRI (dMRI). fMRI measured the dynamic hemodynamic response related to neural activity, sMRI estimated tissue types for each brain voxel (including gray matter, white matter, and cerebrospinal fluid), and dMRI assessed white matter tract integrity and structural connectivity. Correlating features from one modality with voxels from the others allowed for a more holistic understanding of brain function and structure. Among various multi-modal techniques, the fusion of MRI and PET has been widely utilized in research studies.
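The idea of correlating a feature from one modality with voxels from another can be sketched as a per-voxel Pearson correlation computed across subjects. This is an illustrative simplification under stated assumptions, not the procedure of Calhoun and Sui (2016): the function names and toy values are hypothetical, and each subject is assumed to contribute one scalar fMRI feature and one co-registered sMRI voxel vector.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equal-length sequences.

    Assumes neither sequence is constant (non-zero variance).
    """
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def cross_modal_map(feature_per_subject, voxels_per_subject):
    """Correlate one modality's per-subject feature with every voxel of another."""
    n_voxels = len(voxels_per_subject[0])
    return [
        pearson(feature_per_subject, [subject[v] for subject in voxels_per_subject])
        for v in range(n_voxels)
    ]


# Four hypothetical subjects: one fMRI-derived feature and three sMRI voxels each.
fmri_feature = [1.0, 2.0, 3.0, 4.0]
smri_voxels = [
    [2.0, 8.0, 1.0],
    [4.0, 6.0, 3.0],
    [6.0, 4.0, 2.0],
    [8.0, 2.0, 4.0],
]
corr_map = cross_modal_map(fmri_feature, smri_voxels)
```

The resulting map holds one correlation value per voxel; in the toy data the first voxel tracks the feature perfectly, the second is perfectly anti-correlated, and the third is only partially related.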

Zhou et al. (2019) utilized PET/MRI fusion imaging with latent space learning for Alzheimer’s disease detection, demonstrating the enhanced diagnostic capabilities of integrating structural and metabolic information. Zhang et al. (2020) offered an extensive overview of multimodal data fusion in neuroimaging, underscoring the significant advancements and challenges in the field. Dimitri et al. (2022) introduced a generative multimodal approach that jointly analyzes structural, functional, and diffusion MRI data, linking multimodal information to colors for intuitive interpretation. Luo et al. (2024) discussed methods and applications of multimodal fusion in brain imaging, emphasizing the importance of combining structural and functional data to better understand neural mechanisms. Lee et al. (2024) provided an extensive review of integrated PET/MRI hybrid imaging in neurodegenerative diseases. Similarly, a review of multimodal medical imaging is provided by Basu et al. (2024). Takamiya et al. (2025) utilized PET/MRI to investigate late-life depression, focusing on differences in grey and white matter in the medial temporal lobe, further demonstrating the power of multi-modal imaging in understanding psychiatric conditions. Satyanarayana and Mohanaiah (2025) also used MRI/PET fusion for a more accurate diagnosis with a multi-modal approach.

Other modality pairs have also been fused, such as MRI and DTI (Hechkel and Helali 2025) and MRI and SPECT (Prasad and Ganasala 2025). Luo et al. (2025) proposed a technique that can be applied across multiple imaging modalities, including CT-MRI, PET-MRI, and SPECT-MRI. Recent studies also utilize different modalities in a guided approach to generate synthetic data for one modality or enhance the resolution of one modality by leveraging the information from another. For example, generating PET images from MRI can help overcome PET’s high costs and radiation exposure, making it more accessible. Li et al. (2024e) developed a method to generate synthetic PET scans from MRI for Alzheimer’s disease detection. Techniques used in multimodal fusion studies are discussed in Section 5. Table 1 shows different fusion techniques used in multi-modal imaging studies in brain disorders.

Table 1: Fusion techniques proposed for neural disorders since 2016.

| Authors | Modalities | Medical application | Technique |
|---|---|---|---|
| Du et al. (2016b) | MRI/CT, MRI/PET | General brain imaging | Parallel color and edge features on multi-scale local extrema scheme |
| Du et al. (2016c) | MRI/SPECT, MRI/PET, MRI/CT | General brain imaging | Union Laplacian pyramid |
| Xu et al. (2016a, b) | CT/MRI, MR-T1/MR-T2, MR/PET | General brain imaging | Discrete fractional wavelet |
| Xu et al. (2016a, b) | CT/MR, MR-T1/MR-T2, MR-T2/SPET | General brain imaging | Pulse-coupled neural network optimized by quantum-behaved particle swarm optimization |
| Chavan et al. (2016) | CT/MRI | General brain imaging | Software: rotated wavelet transform |
| Manchanda and Sharma (2016) | MRI/PET, CT/PET, MR-T1/MR-T2, MRI/CT, MRI/SPECT | General brain imaging | Fuzzy transform |
| Dai et al. (2017) | CT/MRI | Cerebral infarction | Wavelet transform |
| Li et al. (2017b) | MRI/MRSI | Brain tumors | Non-negative matrix factorization (NMF) |
| | PET/MRI | Oncology | Software: co-registration algorithm |
| Liu et al. (2017a, b) | CT/MRI, MR-Gad/MR-T2, MR-T1/MR-T2 | General brain imaging | Convolutional neural network |
| | CT/MRI | General brain imaging | Convolutional neural network (CNN) and a dual-channel spiking cortical model |
| Akhonda et al. (2018) | EEG/fMRI | Schizophrenia | Consecutive independence and correlation transform (C-ICT) |
| Aishwarya and Thangammal (2018) | CT/MRI, MR-T1/MR-T2, SPECT/MRI, PET/MRI | General brain imaging | Sparse representation and modified spatial frequency |
| | CT/MRI/PET | General brain imaging | Nonsubsampled shearlet transform and simplified pulse coupled neural networks (S-PCNNs) |
| | MR-T1/MR-T2 | Tumor segmentation | Discrete wavelet transform based principal component averaging (DWT-PCA) fusion |
| Liu et al. (2019) | PET/MRI | Normal, Alzheimer’s, and neoplastic brain images | Software: multi resolution and nonparametric density model |
| Benjamin et al. (2019) | SPECT/PET/MRI | Not specified | Software: adaptive cloud model (ACM) based local Laplacian pyramid (LLP) |
| | Task-based fMRI/resting state fMRI | IQ classification | Alternating diffusion map |
| Yang et al. (2019) | CT/MRI, MRI/PET/SPET | General brain imaging | Structural patch decomposition (SPD) and fuzzy logic |
| | MRI/MRSI | Tumor detection | Source extraction (SSSE) |
| Rajalingam et al. (2019) | CT/MRI | General brain imaging | Combination of dual tree complex wavelet transform and non-subsampled contourlet transform |
| Jia et al. (2019) | fMRI/MRI based diffusion tensor imaging (DTI) | Schizophrenia | Consecutive independence and correlation transform |
| Acar et al. (2019) | fMRI/sMRI/EEG | Schizophrenia | Structure-revealing coupled matrix and tensor factorizations (CMTF) |
| | CT/SPECT/MRI | Tumor classification (benign/malignant) | Software: optimal deep neural network optimized by discrete gravitational search optimization |
| Liu et al. (2022) | MR-T1/MR-T2 | Tumor segmentation | Convolutional neural network (CNN) with attention mechanism |
| Weisheng et al. (2022) | MRI/SPECT, MRI/PET, MRI/CT | General brain imaging | Encoder-decoder |
| | MRI/CT | General brain imaging | Discrete cosine transformation (DCT) image fusion algorithm |
| Dang et al. (2023) | EEG/fMRI | General brain imaging | Optimized forward variable selection decoder |
| Wen et al. (2024b) | MRI/CT/PET/SPECT | General brain imaging | Modified convolutional neural network (CNN) |
| Xie et al. (2023) | MRI/CT, PET/MRI, SPECT/MRI | General medical imaging | Combination of Swin transformer and convolutional neural network (CNN) |
| Li et al. (2024e) | MRI/CT, PET/MRI, SPECT/MRI | General medical imaging | Combination of flexible semantic-guided architecture and mask-optimized framework |
| | PET/SPECT/CT | General brain imaging | Modified convolutional neural network (CNN) |
| Chu et al. (2024) | EEG-fMRI | Somatosensory analysis | Combination of unsupervised K-means algorithm, Gaussian classifier, and K-nearest neighbor classifier |
| Meeradevi et al. (2024) | MRI/CT | General brain imaging | Combination of Shearlet transform and discrete wavelet transform |
| Zhang et al. (2024) | MRI/CT | Organ at risk | Elastic symmetric normalization |
| | DW-MRI/CT | Stroke detection | Wavelet transform |
| Wen et al. (2024a) | MRI/PET | General brain imaging | Adaptive linear fusion method based on convolutional neural network (CNN) |
| Zayene et al. (2024) | MRI multi-views | Early detection of AD | Multi-view separable residual CNN |
| Li et al. (2024d) | MRI multi-views | Alzheimer’s disease | CNN + Transformer |
| Thompson et al. (2025) | Multi-temporal | Dementia | Statistical analysis |
| Wu et al. (2025) | MRI multi-views | Brain and scalp blood vessel segmentation | ResUnet++ |
| Rahim et al. (2025) | MRI multi-views | Early detection of AD | CNN + MLP + LSTM |
| Hechkel and Helali (2025) | MRI/DTI | Alzheimer’s disease | YOLOv11 |
| Prasad and Ganasala (2025) | MRI/SPECT | General brain imaging | Adaptive fusion rule in multiscale transform domain |
| Takamiya et al. (2025) | MRI/PET | Late-life depression | Voxel-based statistical analysis |
| Namgung et al. (2025) | Multi-temporal | Migraine | Regularization-based feature selection + random forest (RF) |
| Zhang et al. (2025) | Multi-temporal | Alzheimer’s disease | Linear mixed-effects (LME) |
| Liu et al. (2025) | EEG-fNIRS | General brain imaging | Spatial-temporal alignment network |

3.3 Multi-temporal fusion

This category involves the integration of images from the same modality or view, captured at different times. It is particularly useful for monitoring changes over time, such as tracking disease progression or treatment response in longitudinal studies of diseases such as dementia and Parkinson’s disease, where symptoms evolve gradually (González et al. 2023).

Van der Burgh et al. (2020) applied multi-temporal fusion in a longitudinal study to monitor the progression of amyotrophic lateral sclerosis using brain MRI. They collected a comprehensive dataset from patients, including images, functional assessments, cognitive evaluations, and behavioral data, during follow-up visits spaced 4–6 months apart. By employing linear mixed-effects models, they fused the multi-temporal data to identify phenotypic and genotypic factors contributing to cerebral degeneration. Similarly, Clancy et al. (2021) utilized multi-temporal MRI to study cerebral small vessel diseases, a major cause of dementia and stroke. Their research involved collecting clinical, cognitive, and physiological assessments alongside MRI images over a one-year period, with patients undergoing a minimum of three evaluations at baseline, 6 months, and 12 months post-index stroke. The study identified small vessels at the highest risk of early disease progression, showcasing the potential of multi-temporal fusion in tracking and understanding disease dynamics over time. Kim et al. (2021) proposed a generative framework for longitudinal modeling of brain MR images to predict lesion progression over time. The framework employs temporal fusion by disentangling structural and longitudinal state features in the latent space. They proposed it for Alzheimer’s disease, using MR images captured at different time points, including baseline, 6 months, 1 year, 18 months, and 2 years, to model the progression and predict future or missing scans. Amaral et al. (2024) proposed a data-driven temporal stratification approach to identify distinct disease progression patterns in amyotrophic lateral sclerosis (ALS) patients. Longitudinal studies have also been conducted in various other conditions, including dementia (Thompson et al. 2025), glioma (McDonald et al. 2024), bipolar disorder (Landén et al. 2025), and migraine (Namgung et al. 2025). Zhang et al. (2025) conducted a longitudinal brain study using resting-state functional MRI to examine temporal dynamics in brain network complexity across stages of Alzheimer’s disease.
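As a rough illustration of how repeated measurements are combined over time, the sketch below fits a least-squares slope per subject and then averages the slopes. This two-stage summary is a deliberate simplification and is not a linear mixed-effects model such as those used in the studies above; the subject identifiers, visit times (in months), and measurement values are invented for the example.

```python
def ols_slope(times, values):
    """Least-squares slope of `values` against `times` for one subject."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    return sxy / sxx


# Hypothetical longitudinal data: (visit months, imaging-derived measurement).
# Subjects may have different numbers of visits, as is common in practice.
visits = {
    "subject_a": ([0, 6, 12], [10.0, 9.0, 8.0]),
    "subject_b": ([0, 6, 12, 18], [12.0, 11.5, 11.0, 10.5]),
}

# Stage 1: one rate of change per subject.
slopes = {sid: ols_slope(times, values) for sid, (times, values) in visits.items()}

# Stage 2: a simple group-level summary (unweighted mean of subject slopes).
group_slope = sum(slopes.values()) / len(slopes)
```

A mixed-effects model estimates the group trend and the subject-specific deviations jointly and weights subjects by how much data they contribute, which this unweighted average does not do.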

3.4 Multi-focus fusion

This method, also known as multi-scale fusion, merges images taken simultaneously from the same viewpoint but with different focal points, bringing various objects or areas into focus to achieve an image with a higher depth of field. This technique utilizes compression methods to achieve optimal focus across the entire image. Although this approach is commonly used in fields like photography, remote sensing and other applications, it is less frequently applied in medical imaging (e.g., MRI, CT, PET scans), where the emphasis is typically on obtaining high-resolution images of specific anatomical structures at a single focal point. Additionally, technological limitations of medical imaging scanners often prevent the capture of multiple focal planes within a single acquisition.

However, in medical imaging, ‘multi-scale’ often refers to machine learning or other techniques that operate at different spatial resolutions to capture information at various scales. For example, Zhang et al. (2017) and Tan et al. (2020) explored the use of multi-scale morphological gradient (MSMG) as a focus metric to identify boundaries and determine focused regions by analyzing contrast intensities within a pixel’s neighborhood. Zafar et al. (2020) reviewed various multi-focus fusion techniques applied across different domains including medical imaging. They classified these methods into two primary categories: spatial domain techniques, which include pixel-based, feature-based, and decision-based fusion, and transform domain techniques, such as wavelet-based, curvelet-based, and discrete cosine transform (DCT)-based methods. Wei et al. (2021) introduced multi-focus fusion techniques that integrate deep learning with sparse representation, using a densely structured three-path dilated network encoder to extract multi-scale features by expanding the receptive field, marking a significant advancement in multi-focus data fusion. A survey of the state-of-the-art in multi-focus image fusion is provided by Liu et al. (2020a). Li et al. (2024a, b, c, d, e) introduced a multimodal fusion framework that bridges the gap between multi-focus and multimodal image fusion. They developed a semi-sparsity-based smoothing filter to decompose images into structure and texture components. A multi-scale operator was then proposed to fuse the texture components, effectively capturing significant information by considering pixel focus attributes and relevant data from different modalities. Hu et al. (2024) proposed a multi-scale segmentation technique, which utilizes a weighted least squares filter to enhance the accuracy of 3D tumor reconstruction in brain glioma MRI images. Similarly, Kang et al. (2024) proposed a multiscale attentional feature fusion method for brain tumor detection. 
Chowdhury and Mirzaei (2025) developed a deep learning approach using atrous spatial pyramid pooling that effectively captures multi-scale contextual information through parallel atrous convolutions with varying dilation rates. Vinisha and Boda (2025) developed residual attention-multiscale dilated inception network for brain tumor detection and classification. Multi-scale techniques are also widely used in brain tumor segmentation (Dhamale et al. 2025; Li et al. 2025).
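The effect of varying the dilation rate can be illustrated with a one-dimensional toy example. The sketch below applies the same three-tap kernel at several dilation rates in parallel, which is the core idea behind atrous (dilated) convolution; it is not the architecture of any study cited above, and the function names, kernel, and signal are assumptions chosen for illustration.

```python
def dilated_conv1d(signal, kernel, rate):
    """Valid 1D cross-correlation with kernel taps spaced `rate` samples apart.

    No padding is applied, so larger rates produce shorter outputs.
    """
    span = (len(kernel) - 1) * rate  # distance covered by the dilated kernel
    return [
        sum(k * signal[i + j * rate] for j, k in enumerate(kernel))
        for i in range(len(signal) - span)
    ]


def multi_scale_responses(signal, kernel, rates=(1, 2, 4)):
    """Apply one kernel at several dilation rates, as parallel branches."""
    return {rate: dilated_conv1d(signal, kernel, rate) for rate in rates}


# A step edge and a simple difference kernel (both illustrative).
signal = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
edge_kernel = [-1, 0, 1]
responses = multi_scale_responses(signal, edge_kernel)
```

With rate 1 the kernel responds only close to the step, while larger rates respond over a wider neighborhood using the same three weights. Deep networks typically pad each branch so that all outputs share one size and can be concatenated.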

4 Image fusion techniques

The development and exploration of data fusion techniques in medical imaging have garnered significant attention from researchers, as these methods aim to integrate information from multiple images – whether unimodal or multimodal – while preserving the critical features of each image or modality. The fusion techniques are classified into distinct categories based on various research frameworks.

Du et al. (2016a) outlined multimodal medical imaging fusion techniques in three main steps: image decomposition/reconstruction, image fusion rules, and image quality assessment. Image decomposition/reconstruction techniques involve various methods such as intensity-hue-saturation, pyramid decomposition, discrete wavelet transform, non-subsampled contourlet transform, Shearlet transform, sparse representation, and salient feature extraction. Image fusion rules are established using methods like fuzzy logic, principal component analysis (PCA), and pulse-coupled neural networks. Image quality assessment techniques include metrics like peak signal-to-noise ratio, root mean square error, entropy (Amezquita-Sanchez et al. 2021), spatial frequency, the universal image quality index, structural similarity, and natural image quality evaluation.
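Three of the quality metrics listed above have compact standard definitions, sketched here for small grayscale images stored as nested lists. The function names, the 8-bit peak value of 255, and the toy images are assumptions for the example; the other listed metrics are not shown.

```python
from collections import Counter
from math import log2, log10, sqrt


def rmse(reference, fused):
    """Root mean square error between two equally sized images."""
    ref = [v for row in reference for v in row]
    fus = [v for row in fused for v in row]
    return sqrt(sum((r - f) ** 2 for r, f in zip(ref, fus)) / len(ref))


def psnr(reference, fused, peak=255):
    """Peak signal-to-noise ratio in decibels; infinite for identical images."""
    error = rmse(reference, fused)
    if error == 0:
        return float("inf")
    return 20 * log10(peak / error)


def entropy(image):
    """Shannon entropy (bits) of the image's intensity histogram."""
    values = [v for row in image for v in row]
    n = len(values)
    return -sum((c / n) * log2(c / n) for c in Counter(values).values())


# Toy 2 x 2 images (illustrative intensities on an assumed 0-255 scale).
reference = [[0, 50], [100, 150]]
fused = [[0, 60], [100, 140]]

error = rmse(reference, fused)
quality_db = psnr(reference, fused)
information = entropy(fused)
```

RMSE and PSNR need a reference image, which is often unavailable in fusion, so they are usually computed against each source image in turn. Entropy needs no reference and is reported on the fused image alone.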

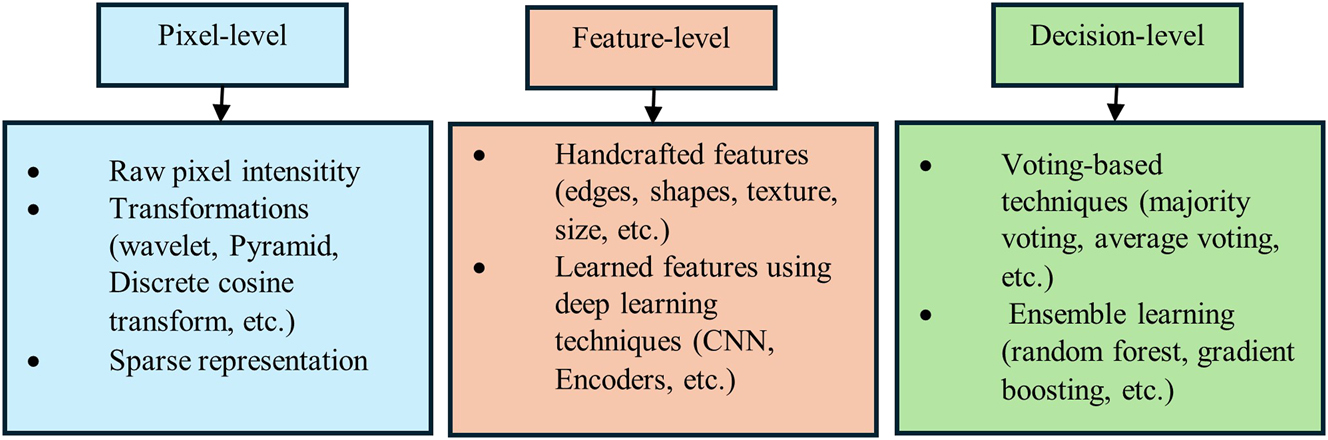

Expanding on these methods, Yadav and Yadav (2020) provided an updated review of multimodal medical imaging fusion techniques, introducing advancements and refinements in the classification of approaches. They identified two primary categories: spatial fusion and multiresolution fusion. Spatial fusion is a simpler technique, integrating images based on spatial information to produce fused outputs. In contrast, multiresolution fusion focuses on enhancing spectral details and improving the signal-to-noise ratio, employing more complex processes for higher fidelity and nuanced outputs. James and Dasarathy (2015) offered a foundational classification of fusion techniques into two main categories: feature-level and decision-level methods. Feature-level fusion integrates specific features from reference images to form a composite image, enhancing image quality and revealing previously hidden features. Decision-level fusion, by contrast, operates at a higher level, combining decisions derived from different sensors to achieve a comprehensive outcome. Dogra et al. (2018) expanded on the classification from James and Dasarathy (2015) by adding pixel-level fusion, which focuses on integrating pixel-level information to create a more detailed image. A recent study (Basu et al. 2024) provided a review of multimodal medical imaging with a focus on decomposition rules. Li et al. (2024c) categorized multi-source image fusion methods into four categories: transform domain methods, spatial domain methods, combined methods, and deep learning methods. Additionally, Li et al. (2024b) provided a review of multi-modal techniques with a focus on deep learning. While most fusion review studies cover all modalities, Guelib et al. (2025) focused specifically on the fusion of PET and MRI for Alzheimer’s disease.
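The difference between feature-level and decision-level fusion can be made concrete with a toy example. In this sketch, feature-level fusion is reduced to concatenating per-modality feature vectors, and decision-level fusion to a weighted average of per-modality class probabilities. Both are common simple choices rather than the specific methods of the works cited above, and all names, weights, and values are hypothetical.

```python
def feature_level_fusion(*feature_vectors):
    """Concatenate per-modality feature vectors into one joint vector."""
    fused = []
    for vector in feature_vectors:
        fused.extend(vector)
    return fused


def decision_level_fusion(probabilities, weights=None):
    """Weighted average of per-modality class-probability vectors."""
    if weights is None:
        weights = [1.0] * len(probabilities)
    total = sum(weights)
    n_classes = len(probabilities[0])
    return [
        sum(w * p[c] for w, p in zip(weights, probabilities)) / total
        for c in range(n_classes)
    ]


# Feature level: fuse before any classifier is applied.
mri_features = [0.2, 0.7]
pet_features = [1.5]
fused_features = feature_level_fusion(mri_features, pet_features)

# Decision level: each modality has its own classifier output
# (class 0 vs. class 1); the PET model is trusted three times as much.
mri_probs = [0.6, 0.4]
pet_probs = [0.2, 0.8]
fused_probs = decision_level_fusion([mri_probs, pet_probs], weights=[1.0, 3.0])
predicted_class = max(range(len(fused_probs)), key=fused_probs.__getitem__)
```

Feature-level fusion lets a single downstream model learn interactions between modalities, whereas decision-level fusion keeps the modality-specific models independent and only reconciles their outputs.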

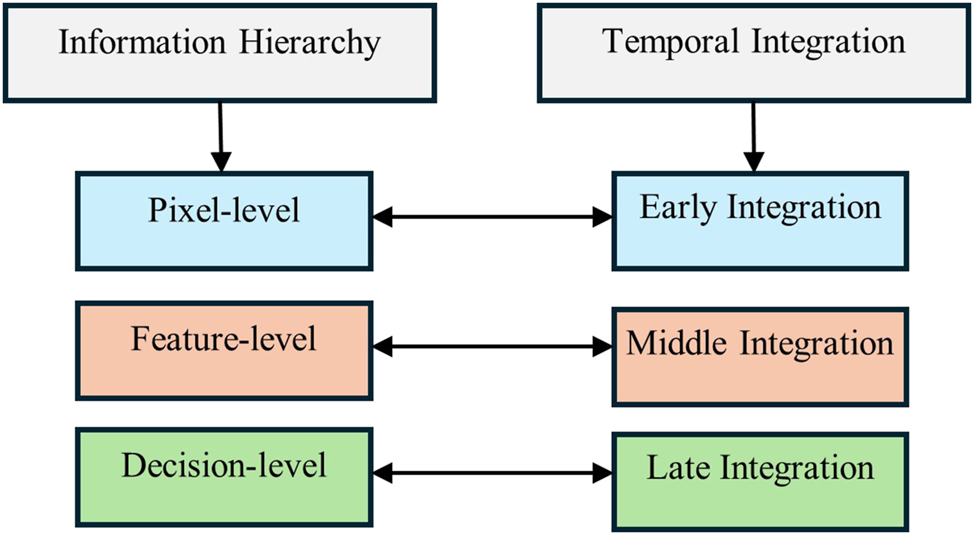

In this study, we review the fusion techniques classified by James and Dasarathy (2015) and extended by Dogra et al. (2018), specifically in the context of neurological disorders since 2016. The image fusion studies are reviewed within two main categories: 1) information hierarchy and 2) temporal integration. Information hierarchy classifies fusion techniques based on the level of abstraction or type of information being combined. Temporal integration, on the other hand, categorizes fusion techniques according to the stage in the data processing pipeline where the integration occurs. This classification emphasizes the timing of the fusion process in relation to data acquisition, feature extraction, and decision-making.

Information hierarchy is divided into three levels: pixel level, feature level, and decision level. Similarly, temporal integration is divided into three categories: early integration, intermediate integration, and late integration, as illustrated in Figure 2. The type of fusion within the information hierarchy is inherently linked to the stage of temporal integration. Figure 3 highlights the techniques most commonly studied within each information hierarchy group, which will be discussed in detail in the following sections.

Figure 2: Classification of imaging fusion techniques based on information hierarchy and temporal integration.

Figure 3: Overview of multimodal imaging fusion techniques.

4.1 Pixel-level fusion (early integration)

Pixel-level fusion combines raw pixel intensities or low-level features from multiple source images, integrating information from the original data, usually at an early stage of processing. This approach, also known as early integration, merges input data before any higher-level analysis. Pixel-level image transformation fusion is further divided into the following techniques (Li et al. 2017a, b):

Multi-scale decomposition: methods such as pyramid (Du et al. 2016b), wavelet (Xu et al. 2016b), curvelet, and shearlet (Hou et al. 2019; Satyanarayana and Mohanaiah 2025) transformations.

Sparse representation (SR) (Jie et al. 2022): techniques such as orthogonal matching pursuit, joint sparsity models, spectral and spatial detail dictionaries.

Hybrid methods (Liu et al. 2019; Meeradevi et al. 2024): combination of different transforms such as wavelet-contourlet combinations, multi-scale transforms integrated with SR, morphological component analysis with SR.

Other domain methods: spatial domain techniques, the intensity-hue-saturation transform, principal component analysis, and the Gram-Schmidt transform.

Pixel-level fusion techniques have been widely utilized in various studies. For instance, the rotated wavelet transform, introduced by Chavan et al. (2016), enhances edge features by creating a new frequency domain plane using maxima and entropy-based fusion rules. Similarly, Xu et al. (2016b) utilized the discrete fractional wavelet transform to decompose images into low- and high-frequency sub-bands, employing weighted regional variance rules for fusion and reconstruction. Manchanda and Sharma (2016) demonstrated the advantages of the fuzzy transform for fusion, which employs varied basis functions to enhance contrast and detail. Their approach integrates entropy and selects maxima-based rules in the fuzzy transform domain. On the other hand, Du et al. (2016c) utilized the Laplacian pyramid transform for multi-scale representation, focusing on contrast and outline feature extraction, which are combined and reconstructed into a fused image. Li et al. (2017a) and Li et al. (2024a) comprehensively reviewed pixel-level and deep learning-based fusion techniques, respectively, shedding light on the advancements in the domain. Dai et al. (2017) combined the complementary strengths of CT and MRI using wavelet-based multi-scale fusion, enhancing diagnostic accuracy through weighted averaging and maximum selection rules. Seal et al. (2017) incorporated a random forest learning algorithm with the à trous wavelet transform for PET and CT fusion, leveraging detail coefficients and pixel selection for effective reconstruction. Advancements in sparse representation were explored by Aishwarya and Thangammal (2018), integrating spatial frequency to discard non-informative patches and enhance structural information.
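To make the sub-band fusion rules mentioned above concrete, the following is a minimal sketch of wavelet-domain pixel-level fusion. It is not the method of any cited study: it assumes a single-level Haar transform written directly in NumPy, averages the low-frequency band, and keeps the larger-magnitude coefficient in each high-frequency band (a maximum selection rule). The function names `haar_dwt2`, `haar_idwt2`, and `fuse_haar` are hypothetical, and the inputs are assumed to be co-registered grayscale images with even height and width.

```python
import numpy as np

def haar_dwt2(img):
    """One-level 2D Haar transform; returns one approximation and three detail bands."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    ll = (a + b + c + d) / 2.0   # low-frequency approximation
    d1 = (a - b + c - d) / 2.0   # detail band 1
    d2 = (a + b - c - d) / 2.0   # detail band 2
    d3 = (a - b - c + d) / 2.0   # detail band 3
    return ll, d1, d2, d3

def haar_idwt2(ll, d1, d2, d3):
    """Exact inverse of haar_dwt2."""
    h, w = ll.shape
    out = np.empty((2 * h, 2 * w))
    out[0::2, 0::2] = (ll + d1 + d2 + d3) / 2.0
    out[0::2, 1::2] = (ll - d1 + d2 - d3) / 2.0
    out[1::2, 0::2] = (ll + d1 - d2 - d3) / 2.0
    out[1::2, 1::2] = (ll - d1 - d2 + d3) / 2.0
    return out

def fuse_haar(img_a, img_b):
    """Fuse two registered images: average low band, max-abs rule for detail bands."""
    bands_a = haar_dwt2(np.asarray(img_a, dtype=float))
    bands_b = haar_dwt2(np.asarray(img_b, dtype=float))
    ll = 0.5 * (bands_a[0] + bands_b[0])
    details = [np.where(np.abs(da) >= np.abs(db), da, db)
               for da, db in zip(bands_a[1:], bands_b[1:])]
    return haar_idwt2(ll, *details)
```

Published methods typically use several decomposition levels and more elaborate rules (for example, regional variance or entropy); the sketch only shows where such rules plug into the decompose, fuse, and reconstruct pipeline.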

Hybrid approaches further advanced the field, as seen in Rajalingam et al. (2019), who combined dual tree complex wavelet transform with non-subsampled contourlet transform (NSCT) for multi-scale decomposition and detail preservation. Liu et al. (2019) employed NSCT with nonparametric density models for PET-MRI fusion, achieving superior quality through variable-weight theory and inverse NSCT reconstruction.

Emerging trends integrate machine learning, as shown by Hou et al. (2019), who combined non-subsampled shearlet transforms with convolutional neural networks to adaptively weight low- and high-frequency features. Adaptive methods, such as the cloud model-based local Laplacian pyramid by Benjamin et al. (2019), further emphasize efficient coefficient generation and rule-based fusion. Wang et al. (2020) introduced a Laplacian pyramid combined with adaptive sparse representation, minimizing noise and artifacts while maintaining image detail. Wen et al. (2024b) proposed MsgFusion, integrating key anatomical and functional features using a dual-branch design and hierarchical fusion strategy, achieving superior reconstructions across MRI, CT, PET, and SPECT modalities. Meeradevi et al. (2024) proposed a hybrid transform fusion method based on the shearlet transform and the discrete wavelet transform using a deep neural network. Pan et al. (2024) reviewed pixel-level image fusion based on convolutional sparse representation. Wei et al. (2024) proposed an image fusion framework that focuses on measuring pixel-level semantic relationships and structure awareness. The framework includes a pixel-level structure-aware filter, which evaluates spatial semantic relationships using interval gradients to effectively identify salient structures at low frequencies. Additionally, a fusion rule was developed to combine these salient structures based on multiscale structure patch decomposition.

4.2 Feature-level fusion (middle integration)

Feature-level fusion involves combining extracted characteristics from different imaging modalities, such as textures, shapes, or other quantitative measurements. This approach operates at a middle integration stage, where each image is processed independently to extract intermediate features. These features, representing higher-level abstractions, are then integrated into a unified feature vector, which is subsequently fed into a model for further analysis. These features are divided into two groups as follows:

4.2.1 Handcrafted features

Handcrafted features refer to manually designed attributes such as edges, textures, shapes, and other image characteristics extracted using traditional image processing techniques. These features are often derived through predefined algorithms that emphasize specific aspects of the image. For example, Du et al. (2016b) proposed a method that integrates parallel color and edge features within a multi-scale local extrema scheme to fuse MRI-CT and MRI-PET images. Their approach involved decomposing images into detailed scales, extracting salient color and edge features using a Canny edge detector, and fusing the images through average and weighted average schemes. This method effectively preserved structural and luminance information, with the final fused images reconstructed from the detailed layers. Similarly, Chavan et al. (2016) developed an MRI-CT fusion technique that uses the rotated wavelet transform to extract edge-related features from source images, focusing on edge enhancement and feature clarity. Liu et al. (2018) introduced a multi-scale joint decomposition framework employing shearing filters for fusing MRI-CT. In their approach, source images were decomposed into layers, each capturing specific features at different scales. Edge and detail layers were fused using a linear weighted sum, while shearing filters extracted directional features from the detail layers. The final fused image was synthesized by combining the low-pass layer with the detail layers, maintaining comprehensive feature representation. Shamsipour et al. (2024) proposed a hybrid approach that combines handcrafted features with deep learning techniques to improve the efficiency and accuracy of image retrieval systems. Lavrova et al. (2024) provided a review of handcrafted and deep radiomics in neurological diseases.
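As an illustration of how handcrafted features from several modalities can be merged into a single feature vector, the sketch below computes a small descriptor per image (intensity statistics, gradient-magnitude edge statistics, and a normalized intensity histogram) and concatenates the descriptors. The descriptor itself is an assumption chosen for brevity and does not reproduce the features used in the studies above; `handcrafted_features` and `fuse_feature_vectors` are hypothetical names.

```python
import numpy as np

def handcrafted_features(img, bins=8):
    """Return intensity stats, edge stats, and a normalized intensity histogram."""
    img = np.asarray(img, dtype=float)
    gy, gx = np.gradient(img)            # finite-difference gradients (rows, columns)
    edge = np.hypot(gx, gy)              # gradient magnitude as a crude edge map
    hist, _ = np.histogram(img, bins=bins)
    hist = hist / hist.sum()             # normalize counts to a distribution
    stats = np.array([img.mean(), img.std(), edge.mean(), edge.std()])
    return np.concatenate([stats, hist])

def fuse_feature_vectors(images):
    """Feature-level fusion by concatenating one descriptor per modality."""
    return np.concatenate([handcrafted_features(im) for im in images])
```

For two modalities and the default eight histogram bins, the fused vector has 24 entries, which could then be passed to any downstream classifier.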

4.2.2 Learned features

Learned features refer to complex representations discovered by deep learning techniques. These features, such as latent representations obtained from convolutional neural networks (CNNs) (Oh et al. 2023; Ning and Xie 2024) or other architectures, encapsulate high-level abstractions, modality-specific information, or task-specific attributes. They are automatically learned during training, eliminating the need for manual feature engineering or extensive preprocessing.

Deep learning methods excel at modeling complex, non-linear relationships within imaging data, making them highly effective for tasks like classification (Fan et al. 2024), segmentation (García-Aguilar et al. 2023; Mirzaei and Adeli 2018), and feature vector fusion (Zhou et al. 2024). Among deep learning approaches, CNNs are particularly prominent in medical imaging studies (Manzanera et al. 2019; Samala et al. 2016). CNN architectures typically consist of convolutional layers for local feature extraction, pooling layers for down-sampling, and fully connected layers for aggregating information (Shen et al. 2017). Their ability to learn hierarchical features from raw data has received considerable attention in medical imaging research.

A multimodal fusion approach was developed by Liu et al. (2017a, b) for integration of CT, SPECT, and MRI images, combining pixel-level techniques with CNN-learned features. This method integrates pyramid decomposition at the pixel level with a CNN-generated weight map to guide the fusion process. By utilizing a multi-scale framework based on Laplacian pyramids, it achieves enhanced consistency with human visual perception. This hybrid approach merges traditional pixel-based methods with advanced feature-level representations, resulting in improved multimodal medical image fusion. Li et al. (2021) advanced multimodal fusion by applying a CNN-based technique to integrate CT and SPECT images. Building on this, Liu et al. (2022) introduced an end-to-end CNN framework for fusing T1- and T2-weighted MRI images, employing an attention-based multimodal feature fusion mechanism to optimize feature extraction and integration. Further innovation came from Wen et al. (2024a), who designed a CNN-based feature extraction framework for MR and SPECT fusion. Their method incorporated coarse, fine, and multi-scale feature extraction modules, alongside an adaptive linear mapping function to relate multi-dimensional features, achieving superior fusion performance.
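The idea of letting a weight map guide fusion inside a Laplacian pyramid can be sketched as follows. This is a simplified illustration rather than the cited implementation: the weight map is passed in as a plain array (in the approach described above it would come from a CNN), 2x2 average pooling and nearest-neighbour upsampling stand in for Gaussian filtering, and image sides are assumed to be divisible by 2 raised to the number of levels. All function names are hypothetical.

```python
import numpy as np

def down(img):
    """Halve resolution by 2x2 average pooling (stand-in for blur + subsample)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def up(img):
    """Double resolution by nearest-neighbour repetition."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)

def gaussian_pyramid(img, levels):
    pyr = [np.asarray(img, dtype=float)]
    for _ in range(levels):
        pyr.append(down(pyr[-1]))
    return pyr

def laplacian_pyramid(img, levels):
    g = gaussian_pyramid(img, levels)
    # Each detail layer is what is lost when going one level coarser.
    return [g[k] - up(g[k + 1]) for k in range(levels)] + [g[-1]]

def reconstruct(lap):
    img = lap[-1]
    for detail in reversed(lap[:-1]):
        img = up(img) + detail
    return img

def fuse_with_weight_map(img_a, img_b, weight, levels=2):
    """Blend the Laplacian pyramids of two images using a pyramid of the weight map.

    weight holds values in [0, 1]; 1 favours img_a, 0 favours img_b.
    """
    la = laplacian_pyramid(img_a, levels)
    lb = laplacian_pyramid(img_b, levels)
    gw = gaussian_pyramid(weight, levels)
    fused = [w * a + (1.0 - w) * b for w, a, b in zip(gw, la, lb)]
    return reconstruct(fused)
```

Blending per pyramid level, instead of once at full resolution, lets the weight map act smoothly on coarse structure and sharply on fine detail.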

Other deep learning techniques used for automatic learned feature extraction include encoders, which extract latent features by transforming input data into a lower-dimensional representation. Encoders are integral components of various deep learning models, including Autoencoders (Bank et al. 2023), where they compress data into a compact latent space for tasks like dimensionality reduction or reconstruction, and Transformers (Vaswani et al. 2017), where they generate contextual embeddings by capturing relationships within the input data using self-attention mechanisms. These models leverage the encoder’s ability to distill relevant information from raw data, enabling effective feature representation for diverse applications such as classification, segmentation, and multimodal data fusion. Weisheng et al. (2022) introduced a decoder-encoder fusion technique that integrated colorful (MRI-PET and MRI-SPECT) and grayscale (MRI-CT) images using a densely connected three-path dilated network encoder. Their method fused features from different modalities, with the decoder reconstructing the final image. Xie et al. (2023) proposed an unsupervised fusion model combining transformers and CNNs for medical imaging fusion. Their two-stage training strategy involved an autoencoder for feature extraction and reconstruction of fused images, demonstrating strong performance across modalities like CT-MRI and PET-MRI.
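The self-attention mechanism referred to above can be summarized by scaled dot-product attention, $\mathrm{softmax}(QK^{\top}/\sqrt{d_k})\,V$. The following single-head NumPy sketch is illustrative only: the projection matrices `w_q`, `w_k`, and `w_v` are random placeholders rather than trained weights, and the tokens could be, for example, patch embeddings drawn from one or several imaging modalities.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (n_tokens, d_model) token embeddings.
    Returns the contextual embeddings and the attention matrix.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # pairwise token similarities
    attn = softmax(scores, axis=-1)           # each row sums to 1
    return attn @ v, attn
```

Each output row is a weighted mixture of all value vectors, which is how an encoder can relate distant image regions or tokens from different modalities within one layer.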

U-Nets, another widely used encoder-based model, are built upon convolutional layers and have proven advantageous for medical image segmentation (Ronneberger et al. 2015). They employ an encoder–decoder architecture with skip connections to capture and restore image details efficiently. Various U-Net variants have been developed for diverse applications. Xu et al. (2019) introduced an LSTM-enhanced multimodal U-Net for brain tumor segmentation using MRI data. Zhao et al. (2022) designed a multimodality feature fusion network for brain tumor segmentation, leveraging hybrid attention blocks to enhance low-level features extracted independently from MRI modalities like T1-weighted imaging (T1), T1-weighted contrast-enhanced imaging (T1c), T2-weighted imaging (T2), and fluid-attenuated inversion recovery (FLAIR). The decoder fused these features to achieve accurate pixel-level segmentation. Comprehensive reviews, such as that by Siddique et al. (2021), summarize the applications of U-Net and its variants, highlighting their adaptability and success in medical image segmentation. Wu et al. (2023) proposed the modality-preserving U-Net, which used a modality-preservation encoder to maintain feature independence and a fusion decoder for multiscale analysis. This architecture yielded a rich feature representation suitable for segmentation tasks. Chowdhury and Mirzaei (2025) proposed a U-Net-based architecture incorporating atrous spatial pyramid pooling for MRI brain tumor segmentation. Liu et al. (2024a) developed two U-Net-based segmentation branches and combined their features through cross-branch fusion for infant brain tissue segmentation. Several deep learning-based imaging fusion review studies have been conducted, including those by Li et al. (2024a) and Santhakumar et al. (2024). Dhamale et al. (2025) proposed a dual multi-scale network that combines convolution and attention features in a multi-scale fashion.

4.3 Decision-level fusion (late integration)

Decision-level fusion involves making a comprehensive decision based on information derived from multiple modalities, views, or focuses, or from different models such as knowledge-based systems (Adeli 1990a, b), neural networks, fuzzy logic (Adeli and Hung 1995; Siddique and Adeli 2013), and support vector machines. This approach often employs an ensemble of classifiers, with the final decision determined using voting mechanisms such as majority or average voting. Decision-level fusion is typically used in late integration. In this approach, each modality or algorithm processes the data independently, and their individual results are combined at the final decision-making stage. This method allows for the fusion of different outputs, such as classifications or segmentations, creating a final result that leverages the strengths of each approach. Late integration is particularly useful when combining diverse imaging modalities, such as MRI, CT, and PET, or when merging the results of different machine learning models. By combining the decisions made at each stage, late integration enhances the robustness of the model and reduces the risk of overfitting.
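The two voting mechanisms named above can be sketched in a few lines. The example assumes that each classifier has already produced either hard labels or class probabilities for the same samples; `majority_vote` and `average_vote` are hypothetical helper names, and ties in the majority vote are broken in favour of the lowest class index, which is a choice made here for simplicity.

```python
import numpy as np

def majority_vote(labels):
    """Hard voting.

    labels: (n_classifiers, n_samples) integer class predictions.
    Returns the most frequent label per sample (lowest index wins ties).
    """
    labels = np.asarray(labels)
    n_classes = int(labels.max()) + 1
    n_samples = labels.shape[1]
    counts = np.zeros((n_classes, n_samples), dtype=int)
    for row in labels:                       # one row per classifier
        counts[row, np.arange(n_samples)] += 1
    return counts.argmax(axis=0)

def average_vote(probs):
    """Soft voting.

    probs: (n_classifiers, n_samples, n_classes) class probabilities.
    Returns the class with the highest mean probability per sample.
    """
    return np.mean(probs, axis=0).argmax(axis=1)
```

Hard voting only needs labels and is therefore applicable to heterogeneous models, whereas soft voting uses the confidence of each model and requires comparable, reasonably calibrated probabilities.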

Xu et al. (2016a) proposed a decision-level fusion method that uses a pulse-coupled neural network for medical image fusion, utilizing three fitness functions – image entropy, average gradient, and spatial frequency – to evaluate the quality of the quantum particle swarm optimization-based fusion process. Yang et al. (2019) introduced a multimodal medical image fusion technique that employed structural patch decomposition (SPD) and fuzzy logic. SPD extracted salient features, leading to the creation of two fusion decision maps: an incomplete fusion map and a supplemental fusion map, each generated by distinct fuzzy logic systems. These maps were then merged to form an initial fusion map, which was refined using a Gaussian filter to produce the final fusion outcome. Mirzaei (2021) developed an ensemble classification model that integrates multi-view MRI (T1-weighted) data. The model consists of four classifiers based on deep architectures, such as Xception and ResNet: one for each view (sagittal, coronal, and axial) and one for the combined three-view input. The final decision is made through majority voting among the four classifiers. Zhu et al. (2023a) introduced a decision-level fusion framework using ensemble learning for medical image classification. Their approach employs an ensemble of sparsely evolved DenseNet-121 networks. Fusion at the decision level is achieved through late integration techniques, such as the mean rule, maximum rule, or majority voting, to combine the outputs of individual networks. This method was applied to classify brain tumors (meningioma, glioma, and pituitary tumors) and lung infections. Liu et al. (2024d) showcased the effectiveness of late fusion by integrating structural MRI (sMRI) and resting-state magnetoencephalography (rsMEG) data to enhance classification accuracy for early Alzheimer’s disease. The fusion process is carried out at three stages: 1) early fusion, where data from rsMEG and MRI are combined prior to network processing; 2) interfusion, which merges features from these modalities using a specialized fusion module; and 3) late fusion, which integrates the final outputs from each modality. A multi-modal fusion-based approach using late fusion is proposed by Liu et al. (2024b), where different data modalities, such as neuroimaging and clinical assessments, are processed independently before their results are combined at the final decision-making stage.
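Image entropy, average gradient, and spatial frequency, mentioned above as fitness functions, are common no-reference measures of fused-image quality. The sketch below uses one widely used formulation of each; normalization conventions differ between papers, so the exact values should not be expected to match any particular study. The entropy function assumes 8-bit intensities in the range 0 to 255.

```python
import numpy as np

def image_entropy(img, bins=256):
    """Shannon entropy (bits) of the gray-level histogram; assumes values in [0, 255]."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    img = np.asarray(img, dtype=float)
    dx = img[:-1, 1:] - img[:-1, :-1]    # horizontal forward difference
    dy = img[1:, :-1] - img[:-1, :-1]    # vertical forward difference
    return float(np.sqrt((dx ** 2 + dy ** 2) / 2.0).mean())

def spatial_frequency(img):
    """sqrt(RF^2 + CF^2), with row and column frequencies from first differences."""
    img = np.asarray(img, dtype=float)
    rf2 = np.mean((img[:, 1:] - img[:, :-1]) ** 2)   # row frequency squared
    cf2 = np.mean((img[1:, :] - img[:-1, :]) ** 2)   # column frequency squared
    return float(np.sqrt(rf2 + cf2))
```

Higher values of all three measures are usually read as more information content and sharper detail, although they can also reward noise, so they are typically reported alongside reference-based metrics.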

5 Data fusion of EEG and other image modalities

EEG measures the brain’s electrical activity, providing invaluable insights into neural dynamics and aiding in diagnosis and monitoring of various brain disorders including epilepsy, dementia, and stroke. Among imaging modalities, the fusion of EEG with MRI (or fMRI) has been extensively studied due to their complementary strengths. This fusion enhances the spatial resolution of electromagnetic source imaging provided by MRI, while leveraging the high temporal resolution inherent in EEG data. Fusion techniques for EEG-MRI integration often employ pixel-level approaches in early-stage integration, where raw data from both modalities are combined to optimize the synergy between spatial and temporal resolution. Additionally, more advanced methods, such as middle-level fusion strategies, have also been studied (Wei et al. 2020).

Fusion of EEG and MRI has been widely explored in brain studies. Hunyadi et al. (2017) offered a fusion of EEG-fMRI data using tensor decomposition techniques to address challenges in blind source separation and uncover neural activity patterns, such as epileptic activity. By leveraging the multidimensional nature of tensors, the study highlighted their ability to preserve interdependencies among modes (e.g., channels, time, and patients) and provide solutions critical for identifying and characterizing neural processes. Additionally, tensor decomposition methods, including soft coupled tensor decomposition, have been explored for studying brain functionality by preserving multiway structures (Chatzichristos et al. 2018).
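As a rough illustration of what a tensor decomposition does with multiway data, the sketch below fits a canonical polyadic (CP) model to a three-way array by alternating least squares. It is a plain, uncoupled CP-ALS written for clarity and is not the coupled or soft-coupled algorithm of the cited studies; the mode labels (channels, time, patients) follow the example given above, and `cp_als` and `cp_reconstruct` are hypothetical names.

```python
import numpy as np

def cp_als(tensor, rank, n_iter=100, seed=0):
    """Fit tensor[i, j, k] ~ sum_r A[i, r] * B[j, r] * C[k, r] by alternating least squares."""
    rng = np.random.default_rng(seed)
    n_i, n_j, n_k = tensor.shape
    A = rng.standard_normal((n_i, rank))
    B = rng.standard_normal((n_j, rank))
    C = rng.standard_normal((n_k, rank))
    for _ in range(n_iter):
        # Update one factor at a time while the other two are held fixed.
        A = np.einsum('ijk,jr,kr->ir', tensor, B, C) @ np.linalg.pinv((B.T @ B) * (C.T @ C))
        B = np.einsum('ijk,ir,kr->jr', tensor, A, C) @ np.linalg.pinv((A.T @ A) * (C.T @ C))
        C = np.einsum('ijk,ir,jr->kr', tensor, A, B) @ np.linalg.pinv((A.T @ A) * (B.T @ B))
    return A, B, C

def cp_reconstruct(A, B, C):
    """Rebuild the tensor from its factor matrices."""
    return np.einsum('ir,jr,kr->ijk', A, B, C)
```

Each column triple (one column from each of `A`, `B`, and `C`) describes one component with a spatial signature over channels, a time course, and a loading per patient, which is the multiway structure that matrix-based methods lose when the data are flattened.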

Attia et al. (2017) developed a framework for EEG-fMRI data fusion using Dempster–Shafer theory, which employs basic belief assignment and a combination rule to fuse multimodal data. This approach has applications in neurological conditions, such as epilepsy, by providing clearer distinctions between activated and non-activated areas. Methods addressing limitations of traditional techniques, such as the Consecutive Independence and Correlation Transform, improve EEG-fMRI integration by extracting independent features and exploiting complementary information through canonical correlation analysis (Akhonda et al. 2018). Similarly, structure-revealing coupled matrix and tensor factorization techniques have been applied to integrate EEG, fMRI, and MRI data to study schizophrenia (Acar et al. 2019). For depression classification, multimodal fusion of EEG data under neutral, negative, and positive audio stimulation has been demonstrated using linear and nonlinear feature extraction, with genetic algorithms optimizing feature weighting to enhance model performance (Cai et al. 2020). Bayesian fusion methods, such as those utilizing dynamic causal modeling, integrate EEG and fMRI by incorporating EEG-based features to improve fMRI parameter estimation. This approach enhances model evidence, parameter efficiency, and the resolution of conditional dependencies between neuronal and hemodynamic parameters, with applications in cortical hierarchies and aging-related changes in neurovascular dynamics (Wei et al. 2020). Fusion techniques have also been extended to neurofeedback tasks, where early integration of EEG and fMRI data enhances motor imagery self-regulation, with applications in stroke rehabilitation and other neurological disorders (Lioi et al. 2020).
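The combination rule at the core of Dempster–Shafer fusion can be illustrated with a two-hypothesis frame. In the sketch below, each modality supplies a basic belief assignment over "active", "inactive", and the full frame (representing ignorance), and Dempster's rule multiplies the masses of intersecting sets and renormalizes by the non-conflicting mass. The mass values are invented for illustration and the function name `combine` is hypothetical; how the cited framework derives its belief assignments from EEG and fMRI is not reproduced here.

```python
from itertools import product

def combine(m1, m2):
    """Dempster's rule of combination for two basic belief assignments.

    m1, m2: dicts mapping frozenset hypotheses to masses that sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb          # mass assigned to contradictory evidence
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict and cannot be combined")
    return {s: w / (1.0 - conflict) for s, w in combined.items()}

# Illustrative (made-up) belief assignments for one brain region.
ACTIVE = frozenset({"active"})
INACTIVE = frozenset({"inactive"})
EITHER = ACTIVE | INACTIVE               # full frame: "do not know"

m_eeg = {ACTIVE: 0.6, INACTIVE: 0.1, EITHER: 0.3}
m_fmri = {ACTIVE: 0.7, INACTIVE: 0.2, EITHER: 0.1}
fused = combine(m_eeg, m_fmri)
```

When both sources lean toward the same hypothesis, the fused mass on that hypothesis exceeds either individual mass, which is one way the rule can sharpen the distinction between activated and non-activated areas.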

EEG and fMRI fusion has also been applied to investigate spatiotemporal dynamics in epilepsy using double coupled matrix tensor factorization (Chatzichristos et al. 2022). This technique preserves the multiway structure of both modalities while accounting for hemodynamic variability and temporal differences. Advances in machine learning, such as optimized forward variable selection decoders, further improve functional connectivity decoding using simultaneously recorded EEG and fMRI signals (Dang et al. 2023).

Clustering algorithms combined with EEG-MRI fusion have been employed to categorize somatosensory phenotypes, providing insights into functional brain network connectivity (Chu et al. 2024). Additionally, entropy-bound minimization for joint independent component analysis and independent vector analysis have demonstrated efficacy in fusing EEG with structural and functional MRI for schizophrenia classification (Akhonda et al. 2018). Furthermore, multimodal EEG-MEG fusion frameworks have been developed to address challenges in electrophysiological source imaging. Attention-based neural networks leverage complementary information from EEG and MEG modalities, enhancing brain source localization for neurological conditions such as epilepsy and face perception tasks. These innovations underscore the clinical relevance and versatility of EEG fusion with various imaging modalities. Liu et al. (2024a, b, c, d) proposed an end-to-end spatial-temporal alignment network (STA-Net) that achieves precise spatial and temporal alignment between EEG and functional near-infrared spectroscopy (fNIRS) data. The aligned features are then integrated at a later stage to decode cognitive states effectively. The FGSA layer computes spatial attention maps from fNIRS to identify sensitive brain regions and spatially aligns EEG with fNIRS by weighting EEG channels accordingly. Gao et al. (2025) introduced a novel method for integrating EEG and fNIRS signals by aligning features from both modalities, thereby improving the analysis of neural and hemodynamic signals. Phadikar et al. (2025) integrated fMRI and EEG data to link spatially dynamic brain networks with time-varying EEG spectral properties. They used a sliding window-based spatially constrained independent component analysis (scICA) to estimate time-resolved brain networks at the voxel level and assessed their coupling with EEG spectral power across multiple frequency bands. Liu et al. (2025) proposed a fusion of EEG and fNIRS to capture complementary temporal and spatial features of brain activity. By integrating these two modalities, their method aims to enhance the characterization of dynamic brain connectivity.

6 Future directions and challenges

The integration of multimodal imaging techniques in medical diagnostics continues to evolve, driven by the need for more comprehensive and accurate insights into complex diseases. Despite significant advancements, several challenges remain, and future research directions aim to address these issues.

One of the primary challenges in multimodal image fusion is developing algorithms that can seamlessly integrate diverse data types while preserving essential features from each modality. Current methods often face difficulties in balancing spatial and temporal resolutions or managing the trade-off between image quality and computational efficiency. Future research will focus on optimizing deep learning architectures, such as hybrid CNN-transformer models or multi-scale feature fusion networks, to enhance the fidelity and efficiency of fused images.

Multimodal imaging data often comes from different sources, with varying resolutions, noise levels, and acquisition parameters. Managing this heterogeneity is a major challenge that affects the quality of fused images. Future research should focus on developing more robust pre-processing techniques and adaptive models capable of handling these variations. Transfer learning and domain adaptation methods could also be explored to generalize fusion models across different datasets and imaging systems.

Deep learning models, particularly those used in image fusion, are often criticized for their black-box nature, which limits clinical acceptance. Enhancing model interpretability and explainability is essential to gain the trust of healthcare professionals. Future directions will involve integrating explainable AI (XAI) techniques (Macas et al. 2024; Noureldin et al. 2023) into fusion frameworks, such as using attention mechanisms to highlight the most informative regions or developing interpretable models that provide insights into decision-making processes.

Real-time image fusion is critical for clinical applications, especially in emergency and surgical settings. However, most existing fusion techniques are computationally intensive, limiting their clinical feasibility. Future work will explore lightweight models, advanced hardware acceleration, and real-time processing frameworks to ensure that fused images can be generated quickly and efficiently. The integration of cloud-based and edge computing solutions may also be considered to manage large datasets in real-time applications.

The integration of imaging with omics data, such as transcriptomics, genomics, epigenomics, and proteomics, holds great promise for improving diagnostic accuracy and understanding disease mechanisms. Multi-omics can provide insights into disease biology (Mirzaei 2021, 2022, 2023; Mirzaei and Petreaca 2022; Pappula et al. 2021) that imaging alone may not capture (Raj and Mirzaei 2022; Raj et al. 2024) and can complement imaging data to provide a more comprehensive view of tumor behavior and progression. However, a major challenge lies in the effective fusion of these diverse data types. The inherent differences in resolution, scale, and data representation between imaging and genomics pose significant hurdles for their integration.

In conclusion, while multimodal image fusion holds great promise for advancing medical diagnostics, several challenges must be addressed to fully realize its potential. Continued innovation in algorithm development, real-time processing, model interpretability, and data security will be essential for the successful adoption of these technologies in clinical practice. By overcoming these hurdles, the field can move closer to delivering more precise, efficient, and personalized healthcare solutions.

Research ethics: Not applicable.

Informed consent: Not applicable.

Author contributions: The three authors contributed to the review. The magnitude of each contribution follows the order of the authors.

Use of Large Language Models, AI and Machine Learning Tools: None.

Conflict of interest: None.

Research funding: None.

Data availability: Not applicable.

References

Acar, E., Schenker, C., Levin-Schwartz, Y., Calhoun, V.D., and Adalı, T. (2019). Unraveling diagnostic biomarkers of schizophrenia through structure-revealing fusion of multi-modal neuroimaging data. Front. Neurosci. 13: 416, https://doi.org/10.3389/fnins.2019.00416.

Adeli, H. (1990a). Knowledge engineering – Volume one – Fundamentals. McGraw-Hill Book Company, New York.

Adeli, H. (1990b). Knowledge engineering – Volume two – Applications. McGraw-Hill Book Company, New York.

Adeli, H. and Hung, S.L. (1995). Machine learning – Neural networks, genetic algorithms, and fuzzy systems. John Wiley and Sons, New York.

Aishwarya, N. and Thangammal, C.B. (2018). A novel multimodal medical image fusion using sparse representation and modified spatial frequency. Int. J. Imaging Syst. Technol. 28(3): 175–185, https://doi.org/10.1002/ima.22268.

Akhonda, M.A.B.S., Levin-Schwartz, Y., Bhinge, S., Calhoun, V.D., and Adali, T. (2018). Consecutive independence and correlation transform for multimodal fusion: application to EEG and fMRI data. In: IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, Calgary, AB, pp. 2311–2315, https://doi.org/10.1109/ICASSP.2018.8462031.

Amaral, D., Soares, D., Gromicho, M., de Carvalho, M., Madeira, S., Tomás, P., and Aidos, H. (2024). Temporal stratification of amyotrophic lateral sclerosis patients using disease progression patterns. Nat. Commun. 15: 5717, https://doi.org/10.1038/s41467-024-49954-y.

Amezquita-Sanchez, J.P., Mammone, N., Morabito, F.C., and Adeli, H. (2021). A new dispersion entropy and fuzzy logic system-based methodology for automated classification of dementia stages using electroencephalograms. Clin. Neurol. Neurosurg. 201: 106446, https://doi.org/10.1016/j.clineuro.2020.106446.

Arco, J.E., Ortiz, A., Gorriz, J.M., and Ramirez, J. (2023). Enhancing multimodal patterns in neuroimaging by siamese neural networks with self-attention mechanism. Int. J. Neural Syst. 33(4), https://doi.org/10.1142/S0129065723500193.

Attia, A., Moussaoui, A., and Chahir, Y. (2017). An EEG-fMRI fusion analysis based on symmetric techniques using Dempster Shafer theory. J. Med. Imaging Health Inf.: 1493–1501, https://doi.org/10.1166/jmihi.2017.2185.

Bank, D., Koenigstein, N., and Giryes, R. (2023). Autoencoders. In: Machine Learning for data science handbook. Springer International Publishing, Princeton, NJ, pp. 353–374, https://doi.org/10.1007/978-3-031-24628-9_16.

Basu, S., Singhal, S., and Singh, D. (2024). A systematic literature review on multimodal medical image fusion. Multimed. Tools Appl. 83: 15845–15913, https://doi.org/10.1007/s11042-023-15913-w.

Benjamin, J.R., Jayasree, T., and Vijayan, S.A. (2019). High-quality MRI and PET/SPECT image fusion based on local laplacian pyramid (LLP) and adaptive cloud mode (ACM) for medical diagnostic applications. Int. J. Recent Technol. Eng. 8: 9211–9217, https://doi.org/10.35940/ijrte.D9127.118419.

Cai, H., Qu, Z., Li, Z., Zhang, Y., Hu, X., and Hu, B. (2020). Feature-level fusion approaches based on multimodal EEG data for depression recognition. Inf. Fusion 59: 127–138, https://doi.org/10.1016/j.inffus.2020.01.008.

Calhoun, V.D. and Sui, J. (2016). Multimodal fusion of brain imaging data: a key to finding the missing links in complex mental illness. Biol. Psychiatr. Cogn. Neurosci. Neuroimaging 1: 230–244, https://doi.org/10.1016/j.bpsc.2015.12.005.

Chatzichristos, C., Davies, M., Escudero, J., Kofidis, E., and Theodoridis, S. (2018). Fusion of EEG and fMRI via soft coupled tensor decompositions. In: European signal processing conference (EUSIPCO). IEEE, New York, pp. 56–60, https://doi.org/10.23919/EUSIPCO.2018.8553077.

Chatzichristos, C., Kofidis, E., Van Paesschen, W., De Lathauwer, L., Theodoridis, S., and Van Huffel, S. (2022). Early soft and flexible fusion of electroencephalography and functional magnetic resonance imaging via double coupled matrix tensor factorization for multisubject group analysis. Hum. Brain Mapp. 43: 1231–1255, https://doi.org/10.1002/hbm.25717.

Chavan, S., Pawar, A., and Talbar, S. (2016). Multimodality medical image fusion using rotated wavelet transform. In: Proceedings of the international conference on communication and signal processing. Atlantis Press, Dordrecht.

Chen, L., Qiao, H., and Zhu, F. (2022). Alzheimer’s disease diagnosis with brain structural MRI using multiview-slice attention and 3D convolution neural network. Front. Aging Neurosci. 14: 871706, https://doi.org/10.3389/fnagi.2022.871706.

Cheng, L., Zhu, Y., Sun, J., Deng, L., He, N., Yang, Y., Ling, H., Ayaz, H., Fu, Y., and Tong, S. (2018). Principal states of dynamic functional connectivity reveal the link between resting-state and task-state brain: an fMRI study. Int. J. Neural Syst. 28: 7, https://doi.org/10.1142/S0129065718500028.

Chowdhury, S. and Mirzaei, G. (2025). Hybridization of attention UNet with repeated atrous spatial pyramid pooling for improved brain tumour segmentation. arXiv preprint arXiv:2501.13129, https://doi.org/10.1109/ISBI60581.2025.10980895.

Chu, S., He, C., Su, C., Lin, H., Pan, L., and Wang, S. (2024). EEG-fMRI-based multimodal fusion system for analyzing human somatosensory network. In: 2024 international conference on system science and engineering (ICSSE), Hsinchu, Taiwan, pp. 1–6, https://doi.org/10.1109/ICSSE61472.2024.10608928.

Clancy, U., Garcia, D., Stringer, M., Thrippleton, M., Valdés-Hernández, M., Wiseman, S., Hamilton, O., Chappell, F., Brown, R., Blair, G., et al. (2021). Rationale and design of a longitudinal study of cerebral small vessel diseases, clinical and imaging outcomes in patients presenting with mild ischaemic stroke: Mild Stroke Study 3. Europ. Stroke J. 6: 81–88, https://doi.org/10.1177/2396987320929617.

Colonnese, F., Di Luzio, F., Rosato, A., and Panella, M. (2024). Bimodal feature analysis with deep learning for autism spectrum disorder detection. Int. J. Neural Syst. 34: 2, https://doi.org/10.1142/S0129065724500059.

Dai, Y., Zhou, Z., and Xu, L. (2017). The application of multi-modality medical image fusion based method to cerebral infarction. In: EURASIP journal on image and video processing, Vol. 55. Springer, https://doi.org/10.1186/s13640-017-0204-3.

Dang, T., Ono, K., Sasaoka, T., Yamawaki, S., and Machizawa, M. (2023). Decoding time-course of saliency network of fMRI signals by EEG signals using optimized forward variable selection: a concurrent EEG-fMRI study. In: Asia pacific signal and information processing association annual summit and conference (APSIPA ASC), Taipei, Taiwan, pp. 540–545, https://doi.org/10.1109/APSIPAASC58517.2023.10317275.

Dhamale, A., Rajalakshmi, R., and Balasundaram, A. (2025). Dual multi scale networks for medical image segmentation using contrastive learning. Image and Vision Comput. 154, https://doi.org/10.1016/j.imavis.2024.105371.

Díaz-Francés, J.Á., Fernández-Rodríguez, J.D., Thurnhofer, K., and López-Rubio, E. (2024). Semi-supervised semantic image segmentation by deep diffusion models and generative adversarial networks. Int. J. Neural Syst. 34: 11, https://doi.org/10.1142/S0129065724500576.

Dimitri, G.M., Spasov, S., Duggento, A., Passamonti, L., Lió, P., and Toschi, N. (2022). Multimodal and multicontrast image fusion via deep generative models. Inf. Fusion 88: 146–160, https://doi.org/10.1016/j.inffus.2022.07.017.

Dogra, A., Goyal, B., and Agrawal, S. (2018). Medical image fusion: a brief introduction. Biomed. Pharmacol. J. 11: 1209–1214, https://doi.org/10.13005/bpj/1482.

Du, J., Li, W., Lu, K., and Xiao, B. (2016a). An overview of multi-modal medical image fusion. Neurocomputing 215: 3–20, https://doi.org/10.1016/j.neucom.2015.07.160.

Du, J., Li, W., Xiao, B., and Nawaz, Q. (2016b). Medical image fusion by combining parallel features on multi-scale local extrema scheme. Knowl. Based Syst. 113: 4–12, https://doi.org/10.1016/j.knosys.2016.09.008.

Du, J., Li, W., Xiao, B., and Nawaz, Q. (2016c). Union Laplacian pyramid with multiple features for medical image fusion. Neurocomputing 194: 326–339, https://doi.org/10.1016/j.neucom.2016.02.047.

Fahoum, A. and Zyout, A. (2024). Wavelet transform, reconstructed phase space, and deep learning neural networks for EEG-based schizophrenia detection. Int. J. Neural Syst. 34: 9, https://doi.org/10.1142/S0129065724500461.

Fan, L., He, F., Song, Y., Xu, H., and Li, B. (2024). Look inside 3D point cloud deep neural network by patch-wise saliency map. Integrated Comput. Aided Eng. 31: 197–212, https://doi.org/10.3233/ICA-230725.

Feng, W., Van Halm-Lutterodt, N., Tang, H., Mecum, A., Mesregah, M.K., Ma, Y., Li, H., Zhang, F., Wu, Z., Yao, E., et al. (2020). Automated MRI-based deep learning model for detection of Alzheimer’s disease process. Int. J. Neural Syst. 30: 6, https://doi.org/10.1142/S012906572050032X.

Gao, T., Chen, C., Liang, G., Ran, Y., Huang, Q., Liao, Z., He, B., Liu, T., Tang, X., Chen, H., et al. (2025). Feature fusion analysis approach based on synchronous EEG-fNIRS signals: application in etomidate use disorder individuals. Biomed. Opt. Express 16: 382–397, https://doi.org/10.1364/BOE.542078.

García-Aguilar, I., García-González, J., Luque-Baena, R.M., López-Rubio, E., and Domínguez-Merino, E. (2023). Optimized instance segmentation by super-resolution and maximal clique generation. Integrated Comput. Aided Eng. 30: 243–256, https://doi.org/10.3233/ICA-230700.

González, D., Bruña, R., Martínez-Castrillo, J.C., López, J.M., and de Arcas, G. (2023). First longitudinal study using binaural beats on Parkinson disease. Int. J. Neural Syst. 33: 6, https://doi.org/10.1142/S0129065723500272.

Guelib, B., Bounab, R., Hermessi, H., Zarour, K., and Khlifa, N. (2025). Survey on machine learning for MRI and PET fusion in Alzheimer’s disease. Multimed. Tools Appl., https://doi.org/10.1007/s11042-025-20631-6.

Hechkel, W. and Helali, A. (2025). Early detection and classification of Alzheimer’s disease through data fusion of MRI and DTI images using the YOLOv11 neural network. Front. Neurosci. 19: 1554015, https://doi.org/10.3389/fnins.2025.1554015.

Hou, R., Zhou, D., Nie, R., Liu, D., and Ruan, X. (2019). Brain CT and MRI medical image fusion using convolutional neural networks and a dual-channel spiking cortical model. Med. Biol. Eng. Comput. 57: 887–900, https://doi.org/10.1007/s11517-018-1935-8.

Hu, Y., Yang, H., Xu, T., He, S., Yuan, J., and Deng, H. (2024). Exploration of multi-scale image fusion systems in intelligent medical image analysis. In: IEEE 2nd international conference on sensors, electronics and computer engineering (ICSECE), pp. 1224–1229, https://doi.org/10.1109/ICSECE61636.2024.10729378.

Hunyadi, B., Dupont, P., Van Paesschen, W., and Van Huffel, S. (2017). Tensor decompositions and data fusion in epileptic electroencephalography and functional magnetic resonance imaging data. WIREs Data Mining Knowl. Discov. 7: e1197, https://doi.org/10.1002/widm.1197.

James, A.P. and Dasarathy, B.V. (2015). A review of feature and data fusion with medical images. In: Smith, J., and Lee, A. (Eds.), Medical image fusion technologies. Springer, Berlin, pp. 491–507, https://doi.org/10.1201/b18851-27.

Ji, D., He, L., Dong, X., Li, H., Zhong, X., Liu, G., and Zhou, W. (2024). Epileptic seizure prediction using spatiotemporal feature fusion on EEG. Int. J. Neural Syst. 34: 8, https://doi.org/10.1142/S0129065724500412.

Jia, C., Akhonda, M.A.B.S., Long, Q., Calhoun, V.D., Waldstein, S., and Adali, T. (2019). C-ICT for discovery of multiple associations in multimodal imaging data: application to fusion of fMRI and DTI data. In: 2019 53rd annual conference on information sciences and systems (CISS), Baltimore, MD, USA, pp. 1–5, https://doi.org/10.1109/CISS.2019.8692878.

Jie, Y., Zhou, F., Tan, H., Wang, G., Cheng, X., and Li, X. (2022). Tri-modal medical image fusion based on adaptive energy choosing scheme and sparse representation. Measurement 204, https://doi.org/10.1016/j.measurement.2022.112038.

Kamnitsas, K., Ledig, C., Newcombe, V.F.J., Simpson, J.P., Kane, A.D., Menon, D.K., Rueckert, D., and Glocker, B. (2017). Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 36, https://doi.org/10.1016/j.media.2016.10.004.

Kang, M., Ting, C.-M., Ting, F.F., and Phan, R.C.-W. (2024). BGF-YOLO: enhanced YOLOv8 with multiscale attentional feature fusion for brain tumor detection. In: Linguraru, M.G., et al. (Eds.), Medical image computing and computer assisted intervention – MICCAI 2024. Lecture Notes in Computer Science, Vol. 15008. Springer, Cham, https://doi.org/10.1007/978-3-031-72111-3_4.

Kim, S.T., Küçükaslan, U., and Navab, N. (2021). Longitudinal brain MR image modeling using personalized memory for Alzheimer’s disease. IEEE Access 9: 143212–143221, https://doi.org/10.1109/ACCESS.2021.3121609.

Koutrintzes, D., Spyrou, E., Mathe, E., and Mylonas, P. (2023). A multimodal fusion approach for human activity recognition. Int. J. Neural Syst. 33: 2350002, https://doi.org/10.1142/S0129065723500028.

Landén, M., Jonsson, L., Klahn, A.L., Kardell, M., Göteson, A., Abé, C., Aspholmer, A., Liberg, B., Pelanis, A., Sparding, T., et al. (2025). The St. Göran project: a multipronged strategy for longitudinal studies for bipolar disorders. Neuropsychobiology (Epub ahead of print), PMID: 39746340, https://doi.org/10.1159/000543335.

Lavrova, E., Woodruff, H.C., Khan, H., Salmon, E., Lambin, P., and Phillips, C. (2024). A review of handcrafted and deep radiomics in neurological diseases: transitioning from oncology to clinical neuroimaging. arXiv.

Lee, J., Renslo, J., Wong, K., Clifford, T.G., Beutler, B.D., Kim, P.E., and Gholamrezanezhad, A. (2024). Current trends and applications of PET/MRI hybrid imaging in neurodegenerative diseases and normal aging. Diagnostics 14: 585, https://doi.org/10.3390/diagnostics14060585.

Li, J., Liu, J., Zhou, S., Zhang, Q., and Kasabov, N. (2024a). GeSeNet: a general semantic-guided network with couple mask ensemble for medical image fusion. IEEE Trans. Neural Networks Learn. Syst. 35: 16248–16261, https://doi.org/10.1109/TNNLS.2023.3293274.

Li, X., Li, X., Ye, T., Cheng, X., Liu, W., and Tan, H. (2024b). Bridging the gap between multi-focus and multi-modal: a focused integration framework for multi-modal image fusion. In: IEEE/CVF winter conference on applications of computer vision (WACV), pp. 1617–1626, https://doi.org/10.1109/WACV57701.2024.00165.

Li, Y., Daho, M.E.H., Conze, P.-H., Zeghlache, R., Le Boité, H., Tadayoni, R., Cochener, B., Lamard, M., and Quellec, G. (2024c). A review of deep learning-based information fusion techniques for multimodal medical image classification. Comput. Biol. Med. 177, https://doi.org/10.1016/j.compbiomed.2024.108635.

Li, Y., Xie, D., Liu, J.-X., Zheng, C., and Cui, X. (2024d). DAResNet-ViT: a novel hybrid network for the early diagnosis of Alzheimer’s disease using multi-view sMRI and multimodal analysis. In: IEEE international conference on bioinformatics and biomedicine (BIBM), pp. 3461–3464, https://doi.org/10.1109/BIBM62325.2024.10822806.

Li, Y., Yakushev, I., Hedderich, D., and Wachinger, C. (2024e). PASTA: pathology-aware MRI to PET CroSs-modal TrAnslation with diffusion models. In: Proceedings of medical image computing and computer assisted intervention MICCAI, pp. 529–540, https://doi.org/10.1007/978-3-031-72104-5_51.

Li, S., Kang, X., Fang, L., Hu, J., and Yin, H. (2017a). Pixel-level image fusion: a survey of the state of the art. Inf. Fusion 33: 100–112, https://doi.org/10.1016/j.inffus.2016.05.004.

Li, Y., Liu, X., Wei, F., Sima, D.M., Cauter, S.V., Himmelreich, U., Pi, Y., Hu, G., Yao, Y., and Van Huffel, S. (2017b). An advanced MRI and MRSI data fusion scheme for enhancing unsupervised brain tumor differentiation. Comput. Biol. Med. 81: 121–129, https://doi.org/10.1016/j.compbiomed.2016.12.017.

Li, H., Ren, Z., Zhu, G., Liang, Y., Cui, H., Wang, C., and Wang, J. (2025). Enhancing medical image segmentation with MA-UNet: a multi-scale attention framework. Visual Comp. 41: 6103–6120, https://doi.org/10.1007/s00371-024-03774-9.

Li, Y., Zhao, J., Lv, Z., and Pan, Z. (2021). Multimodal medical supervised image fusion method by CNN, Vol. 15, https://doi.org/10.3389/fnins.2021.638976.

Lian, J. and Xu, F. (2023). Epileptic EEG classification via graph transformer network. Int. J. Neural Syst. 33: 2350042, 13 pages, https://doi.org/10.1142/S0129065723500429.

Lioi, G., Cury, C., Perronnet, L., Mano, M., Bannier, E., Lécuyer, A., and Barillot, C. (2020). Simultaneous EEG-fMRI during a neurofeedback task, a brain imaging dataset for multimodal data integration. Sci. Data 7: 173, https://doi.org/10.1038/s41597-020-0498-3.

Liu, Z., Chai, Y., Yin, H., Zhou, J., and Zhu, Z. (2017a). A novel multi-focus image fusion approach based on image decomposition. Inf. Fusion 1: 102–116, https://doi.org/10.1016/j.inffus.2016.09.007.

Liu, Y., Chen, X., Cheng, J., and Peng, H. (2017b). A medical image fusion method based on convolutional neural networks. In: 2017 20th international conference on information fusion (fusion), Xi’an, pp. 1–7, https://doi.org/10.23919/ICIF.2017.8009769.

Liu, X., Mei, W., and Du, H. (2018). Detail-enhanced multimodality medical image fusion based on gradient minimization smoothing filter and shearing filter. Med. Biol. Eng. Comput. 56: 1565–1578, https://doi.org/10.1007/s11517-018-1796-1.