Abstract

Batik, a traditional textile art form, holds profound cultural and historical value in Indonesia, where its motifs are rich in symbolic meaning. As efforts to digitally preserve batik grow, there is an increasing need for accurate and scalable methods to classify its intricate patterns. Manual classification remains labor-intensive and prone to subjectivity. This study investigates machine learning and computer vision techniques for automating batik motif classification. It uses the VGG16 architecture for deep feature extraction and combines it with classifiers including Support Vector Machines (SVM), Multi-Layer Perceptron (MLP), Convolutional Neural Networks (CNN), and XGBoost to improve classification performance. Experimental results show that the VGG16+XGBoost model achieves the highest performance, with an average accuracy of 89.83 % across five-fold cross-validation, outperforming standalone models including SVM (64.29 %), CNN (65.76 %), and XGBoost without deep features (64.87 %). The retrained VGG16 model attained an accuracy of 87.77 %, below the hybrid model, which supports the benefit of combining deep feature extraction with traditional classifiers. These findings highlight the effectiveness of deep feature extraction in capturing the fine-grained textures of batik motifs and demonstrate the potential of such hybrid approaches for the digital preservation and classification of traditional cultural artifacts.

1 Introduction

Batik, as a traditional textile art form, holds significant cultural and historical value in many societies, particularly in Indonesia (Poon 2020). The intricate motifs and patterns of batik are not only aesthetically pleasing but also carry deep symbolic meanings, often reflecting local traditions, beliefs, and identities (Gondoputranto and Dibia 2022). The increasing digitization of cultural heritage calls for the systematic preservation, classification, and analysis of these traditional motifs (Manap et al. 2024). However, manual classification is time-consuming, subjective, and often requires expert knowledge (Manap et al. 2024). This has driven the exploration of automated methods for batik motif classification using machine learning and computer vision.

In recent years, machine learning has demonstrated remarkable success in image classification tasks, and several studies have developed machine learning-based methods for batik classification. Minarno et al. (2018) combined the Multi Texton Histogram (MTH) method with k-NN and SVM classifiers, achieving accuracies of 82 % and 76 %, respectively. Rangkuti et al. (2021) introduced the MU2ECS-LBP algorithm, which is robust to scale and rotation variations, and attained an accuracy of up to 99.91 % using k-NN. Deep learning approaches such as Convolutional Neural Networks (CNNs) have also been increasingly adopted; for instance, Meranggi et al. (2022) used a ResNet-18 architecture and obtained an accuracy of 88.88 % after data quality enhancement.

Deeper CNN architectures such as VGG have been used effectively for high-level feature extraction. Alya et al. (2023) applied transfer learning with VGG-16 and Xception models to batik motif classification, achieving a training accuracy of 91.23 % with VGG-16. Such pre-trained models not only reduce training time but also perform well at capturing the intricate texture details characteristic of batik motifs.

Recent studies continue to extend CNN-based batik classification. For example, Anggoro et al. (2024) employed a three-layer CNN to classify nine Batik Solo motifs with an accuracy of 97.77 %. Elvitaria et al. (2025) proposed a CNN model named Okta-Net that achieved a validation accuracy of 93.17 % on five classes from a public batik dataset. Ardyani and Sari (2024) modified the VGG16 architecture for web-based Demak batik classification and attained an accuracy of 98.72 %.

Beyond single-model approaches, ensemble methods are also beginning to be applied in this domain: Elvitaria et al. (2024) combined GLCM texture features with ResNet in a hard-voting ensemble, yielding accuracies of up to 95 %. Other studies have focused on architecture selection and tuning. Andrian et al. (2024) compared four CNN architectures (AlexNet, EfficientNet, LeNet, and MobileNet) for Lampung batik classification and found that LeNet performed best, with an accuracy of 99.33 %. Murinto and Winiarti (2025) optimized CNN hyperparameters via Modified Particle Swarm Optimization (MPSO) and reported an accuracy of 94.032 % on North Java coastal batik motifs.

Building on these studies, this work uses the VGG architecture for feature extraction and evaluates the extracted features with several classifiers: Support Vector Machine (SVM), Multi-Layer Perceptron (MLP), CNNs (both as classifier layers and as end-to-end models), and XGBoost. This design combines the strength of CNNs in visual feature extraction with the efficiency of traditional classifiers. The study not only compares the performance of each method but also identifies the best-performing models for the batik motif types considered.

In practice, the automated batik classification system developed here has substantial potential for real-world applications. For artisans, it can support efficient documentation and organization of motif collections. In museums and cultural institutions, it can support digitization and the cataloging of heritage artifacts. On e-commerce platforms, an automatic classifier could improve the user experience by enabling more accurate and relevant product searches based on motif patterns. The proposed model can therefore aid cultural heritage preservation while also offering practical value to technology-driven creative industries.

2 Methodology

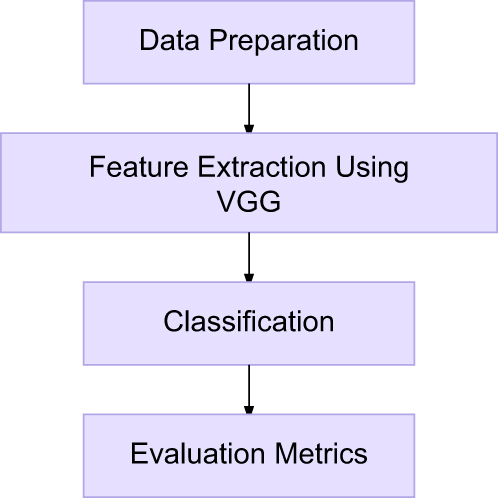

This study proposes a method for classifying traditional batik motifs that uses the VGG16 network as a feature extractor and compares several machine learning classifiers, as detailed in Section 2.3. Figure 1 illustrates the workflow, which consists of data preprocessing, feature extraction, and classification stages.

Figure 1. Flowchart of the research methodology.

2.1 Dataset Preparation

The dataset utilized in this study is the Batik Keraton Jawa dan Cirebon dataset obtained from Kaggle (https://www.kaggle.com/datasets/stefaron/dataset-batik-keraton). This dataset was chosen due to the cultural and historical significance of Batik Keraton motifs in Javanese and Cirebonese royal traditions (Kusrianto 2024). These motifs are visually distinctive and carry meaningful symbolism, making them suitable for preserving Indonesia’s intangible heritage through computational analysis.

The dataset comprises 1,799 images categorized into four traditional batik motifs: Kawung (506 images), Mega Mendung (472 images), Parang (426 images), and Truntum (395 images). Other batik patterns were excluded because of limited availability and inconsistent labeling, allowing the study to focus on well-documented motifs for more accurate classification.

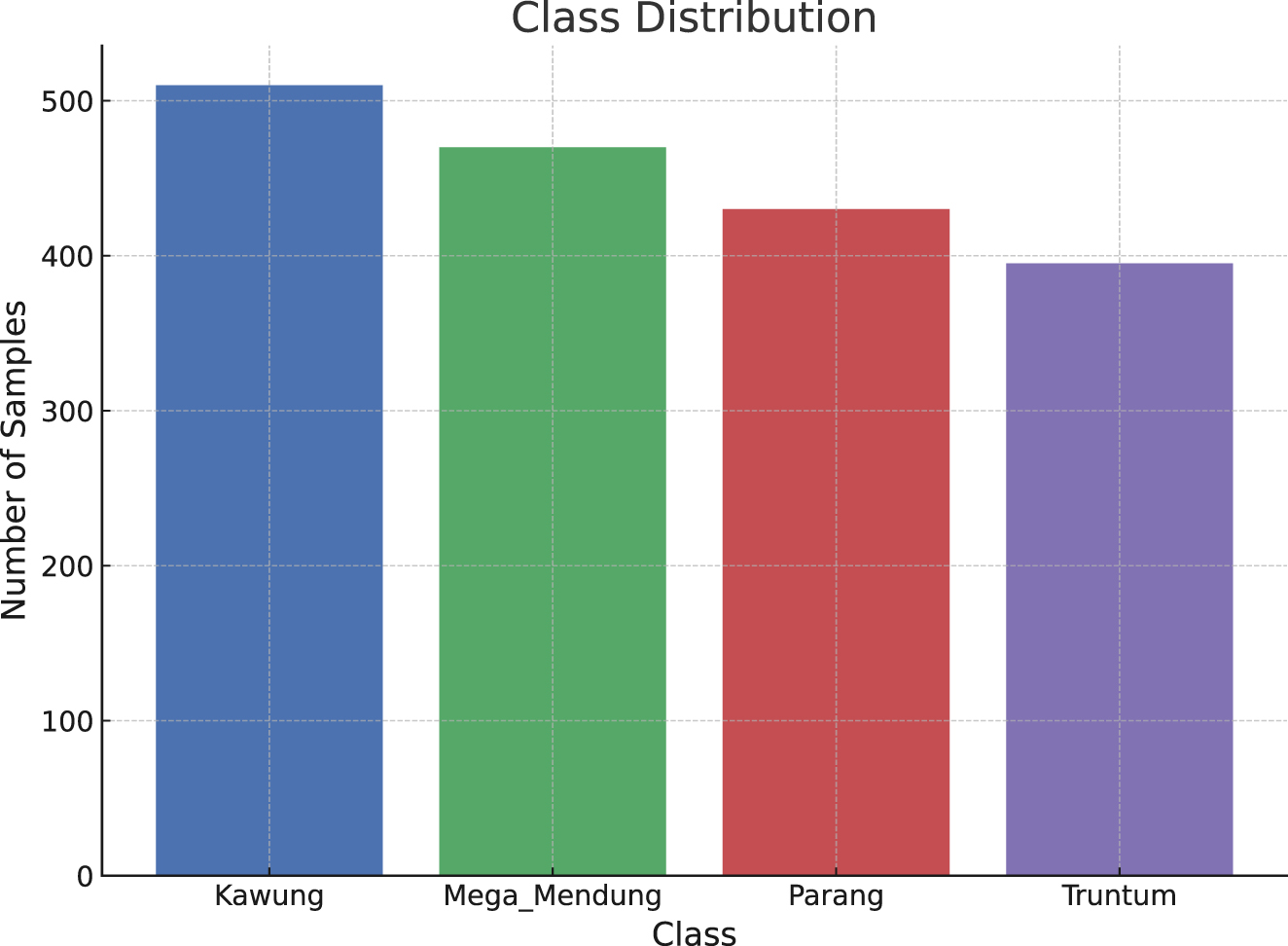

Examples of the batik motifs in the dataset are shown in Figure 2.

Figure 2. Examples of batik motifs from the Batik Keraton dataset.

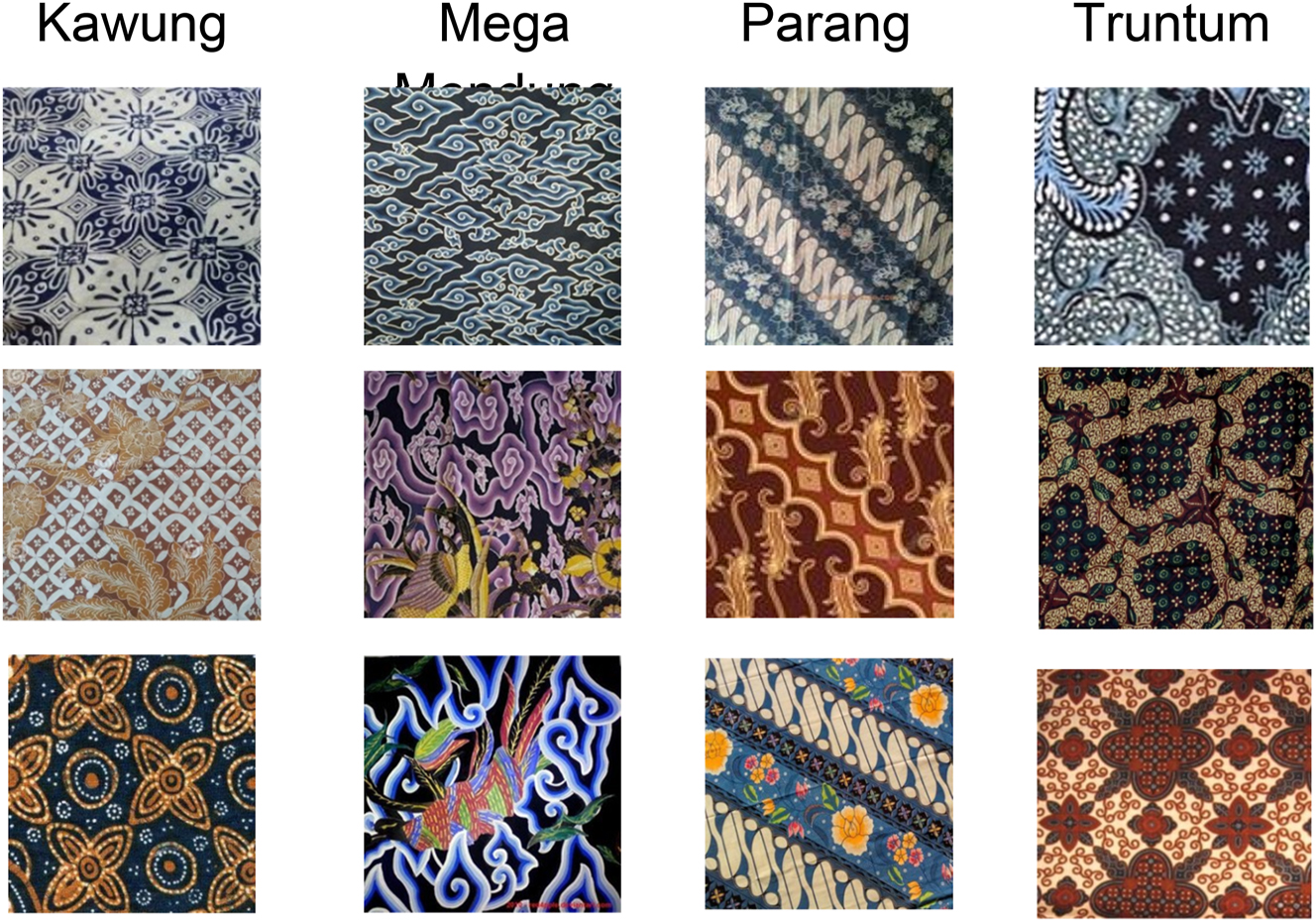

Each image is resized to 224 × 224 pixels to match the input size expected by the VGG16 network and is labeled with its motif category. For model training and evaluation, the dataset is split into training and testing sets using an 80:20 ratio, with stratification applied so that each class keeps the same proportion in both subsets. The distribution of images across the classes is shown in Figure 3.
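The stratified 80:20 split can be sketched in plain Python as follows. This is a minimal illustration, not the authors' code: the shuffling seed and the per-class rounding rule are assumptions, and in practice a library routine such as scikit-learn's `train_test_split(..., stratify=labels)` would typically be used (it may allocate rounding remainders differently). The class counts are those reported in Section 2.1.

```python
import random
from collections import defaultdict

# Class counts reported for the Batik Keraton dataset (Section 2.1).
CLASS_COUNTS = {"Kawung": 506, "Mega Mendung": 472, "Parang": 426, "Truntum": 395}


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return (train_idx, test_idx) so that each class keeps roughly the same
    proportion in both subsets. The seed and rounding rule are assumptions."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)  # shuffle within the class only
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx


# Build a label list that matches the reported class counts.
labels = [name for name, count in CLASS_COUNTS.items() for _ in range(count)]
train_idx, test_idx = stratified_split(labels)

for name, count in CLASS_COUNTS.items():
    n_test = sum(1 for i in test_idx if labels[i] == name)
    print(f"{name}: {count - n_test} train / {n_test} test")
```

With these counts, the sketch holds out 359 images (about 20 % of each class) for testing and keeps 1,440 for training.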

Figure 3. Distribution of images among the four batik motif classes.

2.2 Feature Extraction

VGG16, a convolutional neural network pre-trained on the ImageNet dataset (Tammina 2019), is used for feature extraction. The model, known for its hierarchical architecture (Seo and Shin 2019), captures features at different levels of abstraction, from simple edges to complex patterns. In this study, the fully connected layers of the VGG16 model are removed, and the output from the last convolutional layer is retained as feature maps. These feature maps are subsequently flattened to form one-dimensional feature vectors used as input for classification models (Li et al. 2018). The pre-trained VGG16 leverages prior knowledge from extensive training on large-scale image data (Djaroudib et al. 2024; Zhou et al. 2021), enabling it to generate robust and discriminative features. For comparison, a version of VGG16 trained from scratch on the batik dataset is also utilized to analyze the impact of pre-training.
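The length of the flattened feature vector follows from the VGG16 configuration. Each 3 × 3 convolution uses 'same' padding and preserves the spatial size, and each of the five 2 × 2 max-pooling layers halves it. The sketch below traces a 224 × 224 × 3 input through the convolutional base. The text does not state whether the features are taken before or after the final pooling layer, so both sizes are computed. For reference, Keras's `VGG16(include_top=False)` with the default `pooling=None` ends at the final pooling layer and returns a 7 × 7 × 512 map.

```python
# VGG16 convolutional base: integers are 3x3 conv layers (stride 1,
# 'same' padding) with that many filters; "M" is 2x2 max pooling, stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def feature_map_shapes(height=224, width=224, channels=3, cfg=VGG16_CFG):
    """Trace the (H, W, C) shape after every layer of the convolutional base."""
    shapes = []
    for layer in cfg:
        if layer == "M":
            height, width = height // 2, width // 2  # pooling halves H and W
        else:
            channels = layer  # 'same' padding keeps H and W unchanged
        shapes.append((height, width, channels))
    return shapes


shapes = feature_map_shapes()
last_conv = shapes[-2]  # output of the final 3x3 convolution
last_pool = shapes[-1]  # output of the final pooling layer
print("last conv:", last_conv, "->", last_conv[0] * last_conv[1] * last_conv[2])
print("last pool:", last_pool, "->", last_pool[0] * last_pool[1] * last_pool[2])
```

Flattening therefore produces a 25,088-dimensional vector from the pooled output, or a 100,352-dimensional vector if the final convolutional layer is used before pooling.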

2.3 Classification

To assess the effectiveness of different classification strategies for traditional batik motifs, this study compares a diverse set of models across three main categories. The first category consists of traditional machine learning methods applied directly to the processed feature data: Multi-Layer Perceptron (MLP), Support Vector Machine (SVM), and XGBoost. The MLP is configured with two hidden layers containing 128 and 64 neurons, respectively, and is trained for a maximum of 200 iterations with a fixed random seed of 42 for reproducibility (Marshl 2011). When applied to features extracted with VGG16, the MLP benefits from rich representations while keeping a relatively lightweight architecture. The SVM uses a linear kernel, which separates classes with hyperplanes and works well in high-dimensional feature spaces, and it is likewise applied to the VGG16-derived feature vectors. The SVM is chosen for its generalization ability and modest data requirements, providing a robust baseline for comparison with more complex neural approaches (Ghosh et al. 2019). XGBoost, a gradient boosting ensemble model, is employed with 100 boosting rounds, a maximum tree depth of 3, a learning rate of 0.1, and a random state of 42 (Zheng 2022). On VGG16-extracted features, XGBoost iteratively adds decision trees that reduce the classification error of the current ensemble, combining high accuracy with efficient training.
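For multiclass problems, gradient boosting as implemented in XGBoost (for example with the `multi:softprob` objective) keeps one additive score per class and adds one regression tree per class in every boosting round. Assuming this standard multiclass formulation, the model implied by the stated settings can be written as follows, where x is the feature vector of an image, F_k^(0) is a constant initial score, and each tree f_{k,m} is fitted to the gradient and Hessian of the softmax cross-entropy loss:

```latex
\begin{aligned}
F_k^{(m)}(\mathbf{x}) &= F_k^{(m-1)}(\mathbf{x}) + \eta\, f_{k,m}(\mathbf{x}),
  \qquad m = 1, \dots, M, \quad k = 1, \dots, K,\\
\hat{p}_k(\mathbf{x}) &= \frac{\exp\big(F_k^{(M)}(\mathbf{x})\big)}
  {\sum_{j=1}^{K} \exp\big(F_j^{(M)}(\mathbf{x})\big)},\\
\eta &= 0.1, \quad M = 100, \quad K = 4, \quad \operatorname{depth}(f_{k,m}) \le 3.
\end{aligned}
```

With K = 4 motif classes and M = 100 rounds, the fitted ensemble contains K · M = 400 trees of depth at most 3; the learning rate η = 0.1 shrinks each tree's contribution so that later trees refine, rather than overwrite, earlier ones.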

The second category involves hybrid models that combine the feature extraction power of VGG16 with traditional machine learning classifiers. These models include MLP with VGG16, SVM with VGG16, and XGBoost with VGG16, each benefiting from the rich, deep feature representations produced by VGG16. These models aim to investigate how the addition of deep feature extraction improves the performance of classical machine learning classifiers.

The third category includes fully deep learning-based approaches. A Convolutional Neural Network (CNN) is trained directly on the resized batik images, using categorical cross-entropy as the loss function and the Adam optimizer, for 50 epochs with a batch size of 32 and a validation split of 20 % (Lavanya and Parameswari 2020). As an end-to-end model, the CNN learns both spatial features and decision boundaries directly from the raw image data (Baruah 2017; Suyahman et al. 2024a, 2024b). Finally, a fully fine-tuned VGG16 model is employed as a comprehensive transfer learning solution: all layers, including the convolutional and dense layers, are retrained on the batik dataset (Sheth 2024). This model is optimized with Adam at a learning rate of 1e-5 for 10 epochs with a batch size of 32. Unlike the fixed-feature models, this end-to-end approach adapts both feature extraction and classification to the specific characteristics of batik motifs.
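The loss and optimizer named above can be illustrated with a small pure-Python sketch: softmax categorical cross-entropy for a single image over the four motif classes, and one standard Adam update for a scalar weight. The beta and epsilon values are assumptions matching common Keras defaults, and framework implementations may place epsilon slightly differently; the learning rate is the 1e-5 used for fine-tuning VGG16.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot, logits):
    """Loss -sum_k y_k * log(p_k) for one example with a one-hot label."""
    probs = softmax(logits)
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs) if y > 0)


def adam_step(param, grad, m, v, t, lr=1e-5, beta1=0.9, beta2=0.999, eps=1e-7):
    """One standard Adam update for a scalar parameter; returns (param, m, v).
    beta1, beta2 and eps are assumed defaults; lr is the fine-tuning rate."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# An untrained model with equal logits over four classes has loss ln(4).
print(categorical_cross_entropy([1, 0, 0, 0], [0.0, 0.0, 0.0, 0.0]))

# Adam's first step moves a weight by about lr, whatever the gradient's scale.
w, m, v = adam_step(0.5, grad=3.0, m=0.0, v=0.0, t=1)
print(0.5 - w)
```

The first print gives ln 4 ≈ 1.386, the loss of a model that spreads its probability evenly over the four classes. The second shows that Adam's first step changes a weight by roughly the learning rate regardless of the gradient's magnitude, so a small rate such as 1e-5 helps keep fine-tuning from disrupting the pre-trained ImageNet weights.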

By comparing the performance of MLP, SVM, XGBoost, CNN, fully fine-tuned VGG16, MLP with VGG16, SVM with VGG16, XGBoost with VGG16, and CNN with VGG16, this study aims to identify the most effective classification method in terms of both accuracy and computational efficiency for traditional batik motif recognition.

2.4 Evaluation Metrics

The performance of all models is assessed using standard evaluation metrics: accuracy, precision, recall, and F1-score (Chicco and Jurman 2020). Together, these metrics describe how well each model classifies the batik motifs and how it misclassifies them. Confusion matrices are additionally used to analyze classification performance across the four batik categories, revealing model strengths and limitations in distinguishing specific motifs (Valero-Carreras et al. 2023; Heydarian et al. 2022). To ensure the robustness and generalizability of the results, five-fold cross-validation is employed (Sejuti and Islam 2023). The dataset is divided into five approximately equal folds; in each iteration, the model is trained on four folds and tested on the remaining one, so that every image is used for testing exactly once. The performance metrics are calculated for each fold and then averaged over the five folds to provide a more reliable and generalizable estimate of each model's performance.
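The five-fold procedure can be sketched as follows. This is a minimal illustration under stated assumptions: the folds are contiguous and unshuffled for brevity, and `evaluate_fold` is a hypothetical callback standing in for training and scoring one model. The text does not say whether the folds were shuffled or stratified; a library routine such as scikit-learn's `StratifiedKFold` would typically handle both.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, test_idx) for k contiguous folds whose sizes differ
    by at most one. Shuffling and stratification are omitted for brevity."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def cross_validate(n_samples, evaluate_fold, k=5):
    """Average a per-fold score. evaluate_fold(train_idx, test_idx) -> float is
    a hypothetical callback that trains and scores one model on one fold."""
    scores = [evaluate_fold(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores), scores


# With the 1,799 images of the dataset, the five test folds have these sizes:
folds = list(k_fold_indices(1799))
print([len(te) for _, te in folds])  # [360, 360, 360, 360, 359]
```

Because every index appears in exactly one test fold, the averaged score uses each image for testing once and for training four times.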

The fold-averaged performance is then compared across all models, including the traditional machine learning models (MLP, SVM, and XGBoost), the hybrid models (MLP with VGG16, SVM with VGG16, and XGBoost with VGG16), and the deep learning models (CNN and fully fine-tuned VGG16). This comparison highlights the strengths and weaknesses of each approach in terms of accuracy, precision, recall, and F1-score, and shows how feature extraction, transfer learning, and end-to-end deep learning each contribute to performance in classifying traditional batik motifs.
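The per-fold precision, recall, and F1-score can be computed from a confusion matrix. The sketch below uses macro-averaging, an unweighted mean over classes, which is the averaging the Results section reports for the Full VGG model. The 4 × 4 matrix is a hypothetical example, not a result from this study. scikit-learn's `precision_recall_fscore_support(y_true, y_pred, average="macro")` computes the same quantities from label lists.

```python
def macro_metrics(confusion):
    """Accuracy plus macro-averaged precision, recall and F1 from a square
    confusion matrix, where confusion[i][j] counts true class i predicted as j."""
    k = len(confusion)
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = confusion[c][c]
        predicted = sum(confusion[r][c] for r in range(k))  # column sum
        actual = sum(confusion[c])                          # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    accuracy = sum(confusion[c][c] for c in range(k)) / sum(map(sum, confusion))
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,
    }


# Hypothetical counts for the order Kawung, Mega Mendung, Parang, Truntum.
example = [
    [9, 1, 0, 0],
    [0, 8, 2, 0],
    [0, 0, 10, 0],
    [0, 0, 0, 10],
]
print(macro_metrics(example))
```

Macro-averaging weights each motif class equally, so a model that does well only on the largest class is not rewarded as much as it would be under plain accuracy.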

3 Results and Discussion

The results of this study show that using the VGG16 architecture for feature extraction substantially improves classification performance on traditional batik motifs. The XGB + VGG16 model achieved an average accuracy of 89.83 %, while the Full VGG16 model yielded an accuracy of 87.77 %. These findings indicate that features extracted by VGG16, a deep network built from stacked 3 × 3 convolutional layers, represent the visual patterns present in batik imagery effectively and robustly.

The superior performance of VGG-based models underscores the importance of deep feature extraction for batik motif classification, given the intricate ornamentation and high inter-class similarity of the motifs. Models that do not use VGG features perform notably worse; for instance, the MLP model attained only 58.20 % accuracy, showing its limited ability to capture complex visual patterns without VGG-based feature extraction. This limitation is particularly evident for traditional batik, which is characterized by high intra-class variation, intricate patterns, and rich color compositions. Subtle differences in motif structure, overlapping geometric and floral elements, and variation in shading and line thickness pose significant challenges for conventional models such as the MLP. Figure 4 shows several representative motifs used in this study, illustrating the nuanced textures and visual similarities that make classification difficult.

Figure 4. The complexity of batik motifs.

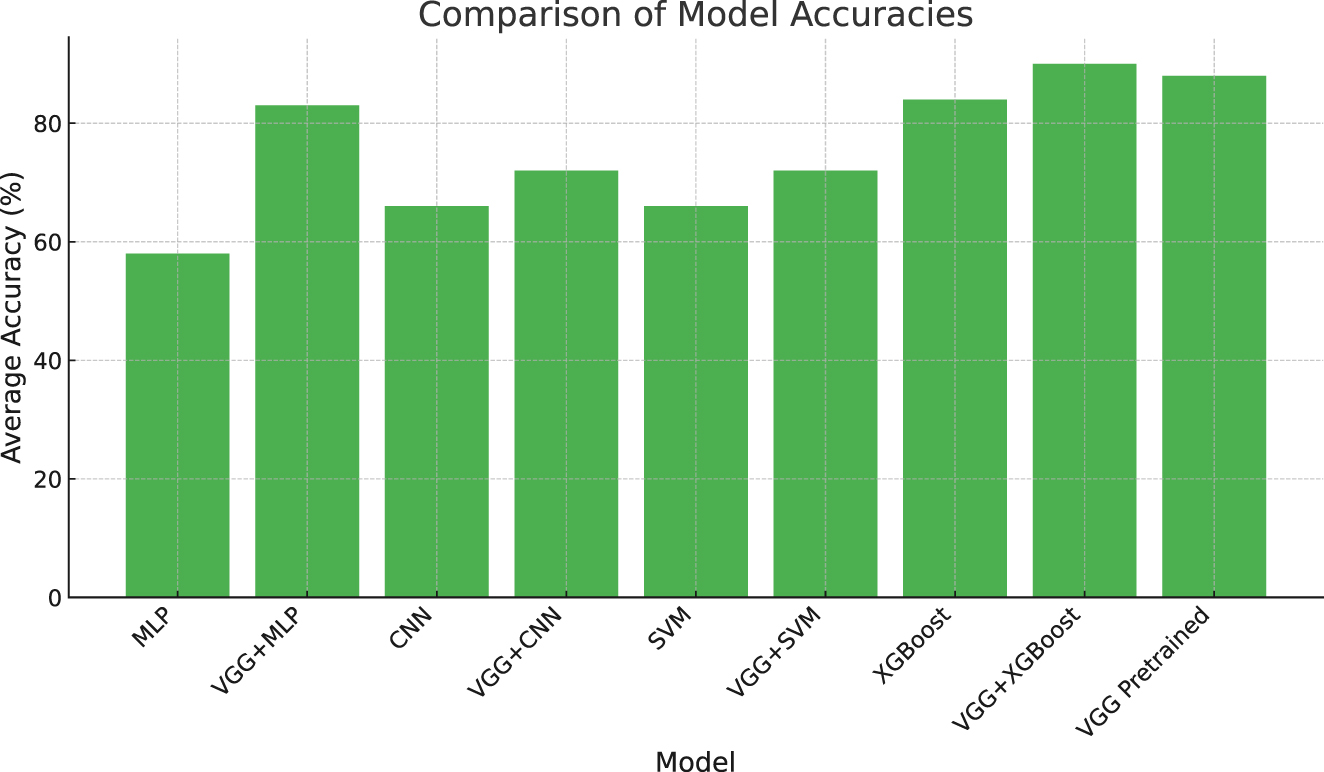

Combining VGG16 as a feature extractor with XGBoost (XGB) as the classifier yielded the best overall performance. This suggests that XGBoost, which handles non-linear decision boundaries and complex interactions between features well, can effectively exploit the deep features extracted by VGG16. This hybrid approach also performed more consistently across the cross-validation folds, supporting the view that such a combination is well suited to image classification tasks involving intricate visual structures, such as traditional batik motifs. Figure 5 compares the average accuracies achieved by the various models.

Figure 5. Average accuracy comparison of the models.

In precision, recall, and F1-score, models incorporating VGG features consistently outperformed those without VGG-based feature extraction. The XGB + VGG model in particular achieved high values across these metrics (0.92 each), indicating that VGG-extracted features capture the distinctive characteristics of traditional batik patterns. Similarly, the Full VGG model attained macro-average precision, recall, and F1-score values of approximately 0.88, reflecting a balanced ability to recognize all classes. Table 1 compares the models in terms of average accuracy, precision, recall, and F1-score.

Table 1. Performance comparison of different models.

| Model | Average accuracy | Average precision | Average recall | Average F1-score |
|---|---|---|---|---|
| MLP | 58.20 % | 0.55 | 0.58 | 0.54 |
| VGG + MLP | 82.77 % | 0.83 | 0.83 | 0.83 |
| CNN | 65.76 % | 0.72 | 0.64 | 0.63 |
| VGG + CNN | 72.60 % | 0.80 | 0.70 | 0.67 |
| SVM | 64.92 % | 0.64 | 0.65 | 0.64 |
| VGG + SVM | 84.21 % | 0.84 | 0.84 | 0.84 |
| XGBoost | 64.87 % | 0.65 | 0.65 | 0.65 |
| VGG + XGBoost | 89.83 % | 0.92 | 0.92 | 0.92 |
| VGG Pretrained | 87.77 % | 0.88 | 0.88 | 0.88 |
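The gain from VGG16 features can be read directly from Table 1. The short sketch below computes, for each classifier, the percentage-point accuracy gain and, as an additional derived quantity not reported in the table, the share of the baseline model's errors that the VGG-based variant removes. The pairing of rows follows the table's labels.

```python
# Average accuracies (%) copied from Table 1: (without VGG, with VGG features).
TABLE_1_ACCURACY = {
    "MLP": (58.20, 82.77),
    "CNN": (65.76, 72.60),
    "SVM": (64.92, 84.21),
    "XGBoost": (64.87, 89.83),
}


def vgg_gains(table):
    """Return {model: (percentage-point gain, % of baseline errors removed)}."""
    gains = {}
    for model, (base, with_vgg) in table.items():
        points = with_vgg - base
        error_reduction = points / (100.0 - base)  # fraction of baseline errors
        gains[model] = (round(points, 2), round(100 * error_reduction, 1))
    return gains


for model, (points, removed) in vgg_gains(TABLE_1_ACCURACY).items():
    print(f"{model}: +{points} pp accuracy, {removed}% of baseline errors removed")
```

For XGBoost, the 24.96-point gain removes about 71 % of the baseline errors, the largest relative reduction among the four classifiers, whereas the CNN gains only 6.84 points (about 20 % of its errors).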

Compared with previous studies, the models developed in this research show competitive and, in some respects, superior performance. Minarno et al. (2018) used the Batik Nitik 960 dataset, consisting of 300 samples across 50 classes; their best result was 82 % accuracy with MTH + kNN, followed by 76 % with MTH + SVM. Although these accuracies are relatively high, the large number of classes adds complexity, and the limited dataset size may affect the reliability and generalizability of the models. Table 2 summarizes the comparison with prior research.

Table 2. Comparison of research results with previous research.

| Dataset | Number of images | Classes | Model | Result |
|---|---|---|---|---|
| Batik Keraton (this research) | 1,799 | 4 | XGB + VGG16 | Average accuracy 89.83 % |
| Batik Keraton (this research) | 1,799 | 4 | VGG16 | Average accuracy 87.77 % |
| Batik Keraton (this research) | 1,799 | 4 | XGB | Average accuracy 64.87 % |
| Batik Keraton (this research) | 1,799 | 4 | CNN | Average accuracy 65.76 % |
| Batik Keraton (this research) | 1,799 | 4 | SVM | Average accuracy 64.29 % |
| Batik Nitik 960 dataset (Minarno et al. 2018) | 300 | 50 | MTH + kNN | Accuracy 82 % |
| Batik Nitik 960 dataset (Minarno et al. 2018) | 300 | 50 | MTH + SVM | Accuracy 76 % |
| Batik Ceplok, Kawung, Parang, Megamendung, and Sidomukti (Alya et al. 2023) | 3,534 | 5 | VGG16 | Accuracy 91.23 % |
| Batik Ceplok, Kawung, Parang, Megamendung, and Sidomukti (Alya et al. 2023) | 3,534 | 5 | CNN | Accuracy 89.64 % |

Meanwhile, Alya et al. (2023), using a dataset of 3,534 samples across five batik classes (Ceplok, Kawung, Parang, Megamendung, and Sidomukti), reported accuracies of 91.23 % with VGG16 and 89.64 % with a conventional CNN. These results are comparable to those of the present study, which also employed VGG16, on the Batik Keraton dataset. This suggests that, despite differences in the motifs studied, VGG16 delivers consistently strong performance for batik motif classification.

Note, however, that the dataset used in this study contains fewer classes (four) than those of Alya et al. (2023) (five) and Minarno et al. (2018) (50). Nevertheless, its 1,799 images provide a reasonably large foundation for model training. The Batik Keraton motifs have complex visual structures and show a high degree of visual similarity between some classes, such as Kawung and Truntum or Parang and Mega Mendung, especially when represented as pixel-level vectors. Deep feature extraction is therefore crucial: the VGG architecture can capture subtle differences in pattern and texture that conventional models such as SVM or MLP struggle to distinguish without deep features.

This study demonstrates that integrating VGG16 as a feature extractor with the XGBoost classifier yields the best classification performance for Batik Keraton motifs, with an average accuracy of 89.83 %. These results highlight the strong potential of computer vision technologies to support cultural preservation through the digitization of batik patterns. Such a classification system can be applied in various contexts, including education, digital documentation, and creative industries such as batik e-commerce.

Nonetheless, challenges remain, particularly the limited number of classes, the generalization of the model to motifs from other regions, and the computational demands of deep learning-based models. Compared with previous studies, the performance achieved in this work is competitive: it surpasses the MTH + SVM and MTH + kNN models applied to the Batik Nitik dataset, yet is slightly below the VGG16 model tested by Alya et al. (2023) on a five-class batik dataset. This suggests that factors such as the number of classes, motif types, and dataset size can strongly influence classification outcomes. Future research should increase motif diversity and dataset size, explore lightweight models such as MobileNet, and apply systematic hyperparameter optimization to further improve accuracy and generalizability.

4 Conclusions

This study highlights the effectiveness of combining deep learning-based feature extraction with machine learning classifiers for batik motif classification. Using the VGG architecture for feature extraction, particularly in combination with XGBoost, substantially improves classification accuracy. The findings underscore the importance of deep feature extraction in capturing the intricate details and textures of batik motifs, which are essential for accurate classification. The performance differences across models also suggest that traditional machine learning methods alone are insufficient for handling the complexity of batik image data. Integrating deep learning techniques thus provides a scalable and efficient approach to batik motif classification and contributes to the digital preservation of cultural heritage.

Despite these promising results, this study has several limitations. The dataset, although substantial, is limited to four classes of Batik Keraton motifs, which do not represent the full diversity of traditional batik. This restriction could introduce classification bias and reduce the model's generalizability to motifs from other regions. Future research should expand the dataset to a wider range of batik styles and increase the number of samples per class to improve robustness. Exploring more advanced or lightweight deep learning architectures, optimizing hyperparameters, and integrating attention mechanisms or ensemble approaches could further improve classification accuracy. Such developments would not only improve model performance but also support more comprehensive and inclusive heritage preservation efforts.

References

Alya, R. F., M. Wibowo, and P. Paradise. 2023. "Classification of Batik Motif Using Transfer Learning on Convolutional Neural Network (CNN)." Jurnal Teknik Informatika (Jutif) 4 (1): 161–70. https://doi.org/10.52436/1.jutif.2023.4.1.564.

Andrian, R., R. Taufik, D. Kurniawan, A. S. Nahri, and H. C. Herwanto. 2024. "Lampung Batik Classification Using AlexNet, EfficientNet, LeNet and MobileNet Architecture." International Journal of Advanced Computer Science and Applications 15 (11). https://doi.org/10.14569/ijacsa.2024.0151191.

Anggoro, D. A., A. A. T. Marzuki, and W. Supriyanti. 2024. "Classification of Solo Batik Patterns Using Deep Learning Convolutional Neural Networks Algorithm." TELKOMNIKA (Telecommunication Computing Electronics and Control) 22 (1): 232–40. https://doi.org/10.12928/telkomnika.v22i1.24598.

Ardyani, S. S. F., and C. A. Sari. 2024. "A Web-Based for Demak Batik Classification Using VGG16 Convolutional Neural Network." Advance Sustainable Science Engineering and Technology 6 (4): 0240406. https://doi.org/10.26877/asset.v6i4.771.

Baruah, L. 2017. Performance Comparison of Binarized Neural Network with Convolutional Neural Network. Michigan Technological University.

Chicco, D., and G. Jurman. 2020. "The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation." BMC Genomics 21 (1). https://doi.org/10.1186/s12864-019-6413-7.

Djaroudib, K., P. Lorenz, R. Belkacem Bouzida, and H. Merzougui. 2024. "Skin Cancer Diagnosis Using VGG16 and Transfer Learning: Analyzing the Effects of Data Quality over Quantity on Model Efficiency." Applied Sciences 14 (17): 7447. https://doi.org/10.3390/app14177447.

Elvitaria, L., E. F. Ahmad, N. A. Samsudin, S. K. A. Khalid, and Z. Indra. 2025. "An Improved Okta-Net Convolutional Neural Network Framework for Automatic Batik Image Classification." JOIV: International Journal on Informatics Visualization 9 (1): 115–9. https://doi.org/10.62527/joiv.9.1.2591.

Elvitaria, L., A. Shaubari, E. Fadzrin, N. A. Samsudin, A. Khalid, S. Kamal, et al. 2024. "A Proposed Batik Automatic Classification System Based on Ensemble Deep Learning and GLCM Feature Extraction Method." International Journal of Advanced Computer Science and Applications 15 (10). https://doi.org/10.14569/ijacsa.2024.0151058.

Ghosh, S., A. Dasgupta, and A. Swetapadma. 2019. "A Study on Support Vector Machine Based Linear and Non-linear Pattern Classification." In 2019 International Conference on Intelligent Sustainable Systems (ICISS), 24–8. IEEE. https://doi.org/10.1109/ISS1.2019.8908018.

Gondoputranto, O., and I. W. Dibia. 2022. "Use of Technology in Capturing Various Traditional Motifs and Ornaments: A Case Study of Batik Fractal, Indonesia and TUDITA-Turkish Digital Textile Archive." Humaniora 13 (1): 39–48. https://doi.org/10.21512/humaniora.v13i1.7408.

Heydarian, M., T. E. Doyle, and R. Samavi. 2022. "MLCM: Multi-Label Confusion Matrix." IEEE Access 10: 19083–95. https://doi.org/10.1109/access.2022.3151048.

Kusrianto, A. 2024. Batik: Filosofi, Motif dan Kegunaan. Penerbit Andi.

Lavanya, M., and R. Parameswari. 2020. "Implementation Using Multiple Linear Regressions with ADAM Optimizer Technique in Neural Network for Crop Prediction-MLRAONN." In International Conference on Contemporary Researches in Engineering, Science, Management & Arts, Vol. 12. https://doi.org/10.9756/bp2020.1002/12.

Li, J., Y. Si, T. Xu, and S. Jiang. 2018. "Deep Convolutional Neural Network Based ECG Classification System Using Information Fusion and One-Hot Encoding Techniques." Mathematical Problems in Engineering: 7354081, 1–10. https://doi.org/10.1155/2018/7354081.

Manap, Abd, N. L. Xiao Xuan, K. Kumar Singh, A. Sheikh Akbari, and A. Putra. 2024. "Classification of Malaysian and Indonesian Batik Designs Using Deep Learning Models." Journal of Telecommunication, Electronic and Computer Engineering (JTEC) 16 (4): 23–30. https://doi.org/10.54554/jtec.2024.16.04.004.

Marshl, S. 2011. "The Multi-Layer Perceptron." In Machine Learning, 63–110. Chapman and Hall/CRC. https://doi.org/10.1201/9781420067194-7.

Meranggi, D. G. T., N. Yudistira, and Y. A. Sari. 2022. "Batik Classification Using Convolutional Neural Network with Data Improvements." JOIV: International Journal on Informatics Visualization 6 (1): 6. https://doi.org/10.30630/joiv.6.1.716.

Minarno, A. E., A. S. Maulani, A. Kurniawardhani, F. Bimantoro, and N. Suciati. 2018. "Comparison of Methods for Batik Classification Using Multi Texton Histogram." TELKOMNIKA (Telecommunication Computing Electronics and Control) 16 (3): 1358–66. https://doi.org/10.12928/telkomnika.v16i0.7376.

Murinto, M., and S. Winiarti. 2025. "Modified Particle Swarm Optimization (MPSO) Optimized CNN's Hyperparameters for Classification." International Journal of Advances in Intelligent Informatics 11 (1): 133–42. https://doi.org/10.26555/ijain.v11i1.1303.

Poon, S. 2020. "Symbolic Resistance: Tradition in Batik Transitions Sustain Beauty, Cultural Heritage and Status in the Era of Modernity." World Journal of Social Science 7 (2): 1. https://doi.org/10.5430/wjss.v7n2p1.

Rangkuti, A. H., A. Harjoko, and A. Putra. 2021. "A Novel Reliable Approach for Image Batik Classification that Invariant with Scale and Rotation Using MU2ECS-LBP Algorithm." Procedia Computer Science 179: 863–70. https://doi.org/10.1016/j.procs.2021.01.075.

Sejuti, Z. A., and M. S. Islam. 2023. "A Hybrid CNN–KNN Approach for Identification of COVID-19 with 5-Fold Cross Validation." Sensors International 4: 100229. https://doi.org/10.1016/j.sintl.2023.100229.

Seo, Y., and K. Shin. 2019. "Hierarchical Convolutional Neural Networks for Fashion Image Classification." Expert Systems with Applications 116: 328–39. https://doi.org/10.1016/j.eswa.2018.09.022.

Sheth, K. 2024. "An Intelligent Approach to Detect Facial Retouching Using Fine Tuned VGG16." International Journal of Biometrics 1 (1). https://doi.org/10.1504/ijbm.2024.10062315.

Suyahman, Sunardi, and Murinto. 2024a. "Comparative Analysis of CNN Architectures in Siamese Networks with Test-Time Augmentation for Trademark Image Similarity Detection." Scientific Journal of Informatics 11 (4): 949–58. https://doi.org/10.15294/sji.v11i4.13811.

Suyahman, S., S. Sunardi, M. Murinto, and A. N. Khusna. 2024b. "Data Augmentation Using Test-Time Augmentation on Convolutional Neural Network-Based Brand Logo Trademark Detection." Indonesian Journal of Artificial Intelligence and Data Mining 7 (2): 266. https://doi.org/10.24014/ijaidm.v7i2.28804.

Tammina, S. 2019. "Transfer Learning Using VGG-16 with Deep Convolutional Neural Network for Classifying Images." International Journal of Scientific and Research Publications (IJSRP) 9 (10): p9420. https://doi.org/10.29322/ijsrp.9.10.2019.p9420.

Valero-Carreras, D., J. Alcaraz, and M. Landete. 2023. "Comparing Two SVM Models through Different Metrics Based on the Confusion Matrix." Computers & Operations Research 152: 106131. https://doi.org/10.1016/j.cor.2022.106131.

Zheng, Y. 2022. "A Default Prediction Method Using XGBoost and LightGBM." In 2022 International Conference on Image Processing, Computer Vision and Machine Learning (ICICML), 210–3. IEEE. https://doi.org/10.1109/ICICML57342.2022.10009823.

Zhou, Y., H. Chang, Y. Lu, X. Lu, and R. Zhou. 2021. "Improving the Performance of VGG through Different Granularity Feature Combinations." IEEE Access: 26208–20. https://doi.org/10.1109/access.2020.3031908.

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.

Articles in the same Issue

- Frontmatter

- Editorial

- Technology, Preservation, and Resilience in an Era of Change: Editor’s Note

- Articles

- Newly Discovered Archival Logic: Functions Emerging from the Records in Contexts Standard

- Traversing Rock Climbers’ Oral Histories to Podcast Platforms: Processes, Analytics, and Digital Library Impact

- VGG-Based Feature Extraction for Classifying Traditional Batik Motifs Using Machine Learning Models

- The Impact of Artificial Intelligence on the Production and Editing of Audiovisual Content

- Navigation of Cultural Heritage in the Digital Age: The Role of Social Media Platforms in the Preservation of Selected Folk Performances of Odisha

- Review

- Saving Ukrainian Cultural Heritage Online (SUCHO)

- News and Comments

- PDT&C Editorial Board Position Paper on the Emerging Crisis in the Cultural Heritage and Preservation Sectors
