Abstract

Study purpose

This research examines perspectives, attitudes, and behavioral intentions regarding AI among college students in Brazil and the United States. We chose these two countries because they lead AI adoption in Latin America and North America, respectively.

Methodology

This study used a mixed-methods approach, combining online surveys and in-person interviews with college students majoring in journalism and mass communications in Brazil and the United States.

Main findings

Our findings identified similarities and differences between the two groups in familiarity with AI, perceived AI efficiency, concerns about AI, AI self-efficacy, intention to learn about AI, and career optimism. For example, students with higher levels of familiarity with AI and perceived AI efficiency demonstrated higher levels of AI self-efficacy. These college students are optimistic about the potential benefits of AI while also expressing concern about its negative consequences.

Social implications

Given the rapidly growing deployment of AI in media and related fields around the world, it is important that journalism and mass communications programs update their curricula to help students better prepare for their future careers. In doing so, we should include the voices of instructors and students in journalism and mass communications programs across the globe so that our approaches to the topic reflect the realities and aspirations of countries with different levels of AI adoption and use.

Practical implications

Our research highlights the need for instructors to be better educated about AI so they can lead thoughtful, nuanced, and substantive discussions on AI in their classrooms. Academic associations, including the Association for Education in Journalism and Mass Communication, could serve as information and networking hubs for developing AI-related curricula and sharing lessons learned among instructors.

Originality/value

Given the implications of AI for media and communication environments, it is important to examine how future communication specialists understand and perceive AI, the focus of the current study. Our comparative analysis offers implications that can benefit those who study or practice international communication or communication technology, especially in the context of higher education.

1 Introduction

Since OpenAI launched ChatGPT in November 2022, universities have scrambled to understand and assess the implications of generative artificial intelligence (AI) for teaching, research, and other aspects of higher education (Lang 2024; Singer 2023). For example, there has been a surge of workshops and webinars on how to help students use ChatGPT and other generative AI tools (e.g., Copilot, Gemini, and Claude) ethically in their assignments (Huang 2023). Both educators and students have shown mixed reactions to AI in higher education (Lang 2024). While some have emphasized AI’s potential to enhance efficiency in teaching and research, others have been skeptical of its educational uses and worried about the harms it could pose. For example, professors have expressed concern about students using AI unethically to complete assignments, whereas students worry about being accused of violating university academic integrity standards if AI detection tools incorrectly flag their work as AI-generated (Talaue 2023).

Fear and unease surrounding AI notwithstanding, educators and students are increasingly adopting AI applications and want to understand how to use them to make their work more efficient and effective (Singer 2023). Research shows that as students continued to use AI technology, they developed more positive attitudes toward AI applications (Li 2023); specifically, ease of use positively influenced students’ motivation and goal attainment. Despite this growing attention to and use of AI in higher education, research on university students’ perceptions of and attitudes toward AI remains insufficient. How do university students’ social-psychological characteristics and perceptions of AI influence their intention to adopt and use AI? What challenges and concerns do they face when using or learning about AI? Little research has examined these questions, particularly among students majoring in journalism and mass communications.

This research examines perspectives, attitudes, and behavioral intentions regarding AI among college students majoring in journalism and mass communications in Brazil and the United States. We chose these two countries because they lead AI adoption in Latin America and North America, respectively (Eastwood 2024; IBM 2023; Mari 2023; McElheran et al. 2023). Selecting them also enables a comparison between a leading economy in the Global South (Brazil) and a dominant economy in the Global North (the United States). In Spring 2024, we conducted online surveys and in-person interviews with journalism and mass communications students in the two countries to examine their familiarity with AI, perceived efficiency of AI, concerns about AI, AI self-efficacy, intention to learn about AI, and career optimism.

Our comparative analysis of Brazilian and U.S. college students’ perceptions of and attitudes toward AI offers implications that can benefit those who study or practice international communication or communication technology, especially in the context of higher education. Moreover, because we focused on university students majoring in journalism and mass communications, the findings provide specific insights into current students and the future workforce in media and communication.

2 Literature review

2.1 Artificial intelligence (AI) in communication environments

As AI adoption has increased globally, its impact on society has become more widespread. According to IBM’s Global AI Adoption Index 2023 (IBM 2023), roughly 42 % of enterprise-scale companies (those with more than 1,000 employees) reported having actively deployed AI in their business. In addition, about 59 % of the companies that had already explored or deployed AI said they had accelerated their application of and investment in AI in recent years. Key factors driving these organizations’ AI adoption include “advances in AI tools that make them more accessible”, “the need to reduce costs and automate key processes”, and “the increasing amount [of] AI embedded into standard off the shelf business applications” (IBM 2023, p. 25).

AI adoption in public sectors such as education and public health has also increased (Eastwood 2024; OECD 2019). However, there has been limited research on the use and application of AI in the public sector or on public understandings of AI (Desouza 2018; de-Lima-Santos and Ceron 2022; Rockoff et al. 2010; Sousa et al. 2019; Sun and Medaglia 2019). Previous research in this area has mainly focused on AI’s capacity to transform workplaces by making key tasks easier to perform and desired goals easier to achieve (e.g., Mitchell et al. 2016). Although research on public understandings of AI has grown recently, it remains limited (Kennedy et al. 2023).

The emergence of ChatGPT in November 2022 and advances in other generative AI tools have increased interest in AI among both scholars and the general public. For example, Pew Research Center surveys of U.S. citizens in recent years showed rising awareness of AI applications in daily life, along with growing concerns about potential negative consequences of AI, including privacy harms and job loss (Kennedy et al. 2023; Rainie et al. 2022). In addition, a majority of survey respondents advocated for more rigorous standards for evaluating the safety of AI and other emerging technologies. Respondents also raised concerns that emerging technologies could exacerbate social inequities, with many believing these technologies could widen the wealth gap (Rainie et al. 2022). Indeed, research found that individuals with higher income and education levels were more aware of AI in daily life (Kennedy et al. 2023). Additionally, younger adults were more adept at identifying AI applications, and active Internet users were more aware of these applications (Kennedy et al. 2023).

AI has influenced the field of communication and media in many ways, though news organizations’ use of AI remains somewhat constrained (Broussard et al. 2019; Chan-Olmsted 2019; de-Lima-Santos and Ceron 2022). A study of AI adoption by news media organizations in the United States and Europe found that machine learning, computer vision, and planning, scheduling, and optimization were the AI subfields most frequently used in newsrooms (de-Lima-Santos and Ceron 2022). In comparison, speech recognition, natural language processing, expert systems, and robotics were not effectively utilized by news organizations (de-Lima-Santos and Ceron 2022).

AI applications are used in newsrooms to facilitate idea generation and the production of public affairs beat stories (Broussard 2015; Seo et al. 2024). Some news organizations have used AI to build paywalls that target individual readers and can predict subscription cancellations (de-Lima-Santos and Ceron 2022; Seo et al. 2024). The Wall Street Journal’s machine-learning-based paywall allows non-subscribers to sample some stories and lets news managers see which content readers prefer (de-Lima-Santos and Ceron 2022). Newsrooms have also increasingly used social bots or news bots to write and disseminate news stories (de-Lima-Santos and Ceron 2022).

Given the implications of AI for media and communication environments, it is important to examine how future communication specialists understand and perceive AI, the focus of the current study. Currently, students commonly use AI-based tools in educational settings for research and literature study, problem solving and decision support, clarifying concepts and subject matter, analyzing texts, translating texts, and preparing for exams (Von Garrel and Mayer 2023). While AI tools are most commonly used by students in engineering and mathematics (Von Garrel and Mayer 2023), they assist and motivate students across university departments by helping them offload monotonous tasks or improve their writing quality and understanding (Talaue 2023; Yilmaz and Yilmaz 2023). Previous research found that while students showed interest and confidence in using AI applications such as ChatGPT, constant technological developments and algorithmic adjustments made them feel uneasy (Baek and Kim 2023). To analyze media and communication majors’ perspectives, attitudes, and behavioral intentions related to AI, it is important to understand their AI self-efficacy and other related social-psychological variables.

2.2 Perspectives on AI, AI self-efficacy & intention to learn about AI

Empirical studies have found that demographic characteristics, overall experience with technology, and political orientation influence individuals’ attitudes toward AI (Wang et al. 2023; Zhang and Dafoe 2019). For example, based on a nationally representative survey of 2,000 U.S. adults, Zhang and Dafoe (2019) suggested that socioeconomic status affected individuals’ attitudes toward AI. Specifically, their study showed that male respondents and those who were younger, wealthier, more educated, or more experienced with technology were more likely to exhibit positive attitudes toward AI.

Self-efficacy is another important factor in this context, as previous research on technology adoption has shown that self-efficacy plays an important role in an individual’s decision to adopt and use a particular technology (Hong 2022; Seo et al. 2014, 2019, 2024). Self-efficacy refers to a person’s belief in their competence to handle the situations required to complete desired tasks (Bandura 1997). Across age groups, self-efficacy tends to be positively related to technology adoption and use, with individuals higher in self-efficacy being more likely to actively use technology applications and participate in social activities online (Seo et al. 2014, 2019, 2024).

For the purpose of this study, AI self-efficacy is defined as an individual’s confidence in their ability to learn and use AI (Hong 2022; Wang et al. 2023). Although empirical research on AI self-efficacy and the adoption of AI for professional activities is lacking, recent research shows that those with higher levels of income and education were more likely to demonstrate higher self-efficacy toward AI (Hong 2022). In addition, given that a person’s prior experience with a technology application affects their self-efficacy related to that application (Byars-Winston et al. 2017), students’ experience or familiarity with AI could affect their AI self-efficacy.

Intention to use AI is influenced by AI self-efficacy and other related factors (Hong 2022; Roy et al. 2022; Wang et al. 2023). Specifically, those with higher levels of AI self-efficacy are more likely to exhibit higher levels of intention to use or learn about AI (Hong 2022). In addition, individuals who perceive AI to be easy to use and useful tend to show stronger intentions to use AI. Supportive environments can also affect an individual’s intention to learn about AI. For example, based on their survey of university students in China, Wang et al. (2023) found that students who perceived more supportive environments showed stronger intentions to learn about AI. In addition, a study of university instructors and students in India showed that trust in technology was a key factor influencing positive attitudes toward AI adoption (Roy et al. 2022).

2.3 AI adoption in Brazil and the U.S.

In examining university students’ perspectives, attitudes, and behavioral intentions related to AI, we focus on Brazil and the United States. Brazil and the United States are the leading countries in Latin America and North America, respectively, in terms of AI adoption and utilization (Ammachchi 2022; Mari 2023; McElheran et al. 2023). Journalism education in Brazil and the United States is similar in that both emphasize a combination of practical skills (e.g., reporting, writing, and digital media production) and theoretical foundations (e.g., media ethics/law and communication theory) (Moreira and Lago 2017). At the same time, there are key differences between the two countries, as Brazil represents the aspirations and challenges of the Global South, whereas the United States epitomizes the economic and technological dominance of the Global North. In particular, the rise of AI, amplified by digital neo-colonialism, may create an imbalance of power between the Global South and the Global North, with the Global South largely becoming a “consumer” of AI technologies, heavily dependent on the Global North for their development and deployment (Khan et al. 2024; Seo et al. 2024). While Brazil’s technology sector has grown rapidly, it is still developing compared with those of the United States and other leading countries (World Bank 2024).

Specifically, Brazil’s AI adoption rate is the highest in Latin America at 46.7 %, followed by Mexico (26.7 %), Colombia (7.9 %), Argentina (5.6 %), and Peru (2.4 %) (Ammachchi 2022). In 2022, about 73 % of IT professionals in Brazil reported having invested in AI deployment in the previous two years. AI was used for various purposes, including analyzing consumer behavior, spotting upcoming trends, and identifying advanced business opportunities. In addition, a multinational study in 2023 found that consumers in Brazil were significantly more interested in AI and had more positive attitudes toward it than consumers in other countries (Mari 2023).

In the United States, AI adoption is uneven, with larger companies more likely to deploy AI in their business. As of 2023, about 50 % of U.S. companies with more than 5,000 employees and 60 % of those with more than 10,000 employees were using AI (Eastwood 2024). By comparison, 33 % of U.S. companies with more than 1,000 employees reported having actively deployed AI as part of their business operations, and 38 % said they were exploring but had not deployed AI (IBM 2023). This reflects an increase over the previous year, when 25 % of companies with more than 1,000 employees reported having actively deployed AI and 43 % reported exploring but not having deployed it (IBM 2022). AI is used most in the manufacturing, information, healthcare, professional services, and education sectors (Eastwood 2024). A survey of U.S. adults found that they exhibited uncertainty and ambivalence toward AI (Rainie et al. 2022). While about half were excited about AI performing menial tasks, there was overall uncertainty about AI’s societal impact, with respondents being “equally excited and concerned” about the proliferation of AI in everyday use (Rainie et al. 2022, p. 6).

2.4 Research questions and hypotheses

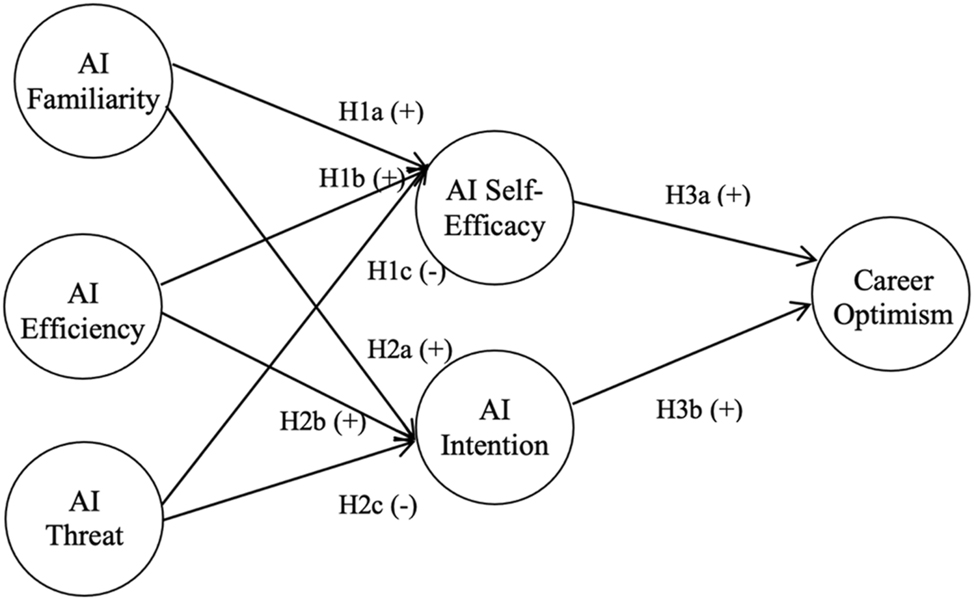

The Technological Acceptance Model (Davis 1989; Venkatesh and Davis 2000), the Value-based Adoption Model (Kim et al. 2017), and Social Cognitive Theory (Bandura 1986, 1997) provide helpful insights into relationships between perspectives on AI, AI self-efficacy, intention to learn about AI, and career optimism. According to the Technology Acceptance Model, perceived usefulness and perceived ease of use are key factors influencing people’s willingness to adopt a particular technology (Davis 1989; Venkatesh and Davis 2000). For example, previous research indicates that individuals who perceive a certain technology as efficient, familiar, or useful are more likely to embrace it (Li 2023; Pan 2020). Similarly, the Value-based Adoption Model suggests that individuals’ perceptions of costs and benefits associated with new technologies significantly influence their intentions and behaviors toward adopting and using those technologies (Kim et al. 2017; Sohn and Kwon 2020). For instance, college students who perceive greater ethical risks in using Generative AI are less likely to engage with the technology. Based on these theoretical frameworks and previous empirical studies, we posit that university students’ familiarity with AI (H1a) and perceived efficiency of AI (H1b) are positively associated with their AI self-efficacy, whereas perceived threat of AI (H1c) is negatively associated with their AI self-efficacy. In addition, we hypothesize that university students’ familiarity with AI (H2a) and perceived efficiency of AI (H2b) are positively associated with their intention to learn about AI, whereas perceived threat of AI (H2c) is negatively associated with their intention to learn about AI. The Social Cognitive Theory (Bandura 1986) identifies self-efficacy as the central and most influential socio-cognitive mechanism driving personal agency. Previous research has demonstrated that self-efficacy positively influences career optimism (Garcia et al. 2015). 
In addition, across different age groups, self-efficacy and learning intention tend to be positively related with technology adoption and use, with higher self-efficacy and learning-intention individuals being more likely to actively use technology applications and participate in social activities online (Seo et al. 2014, 2019). Thus, we propose that university students’ AI self-efficacy (H3a) and intention to learn about AI (H3b) are positively associated with their optimism toward future career. Based on data collected from Brazilian and U.S. university students majoring in journalism and mass communications, we also examine students’ reported challenges and concerns about using or learning about AI. The following research questions and hypotheses are examined. Figure 1 shows hypothesized relationships between the variables under study (H1, H2 and H3).

RQ1: How are Brazilian and U.S. university students majoring in communication and media similar or different in their attitudes toward AI and assessments of AI’s roles in their education, career paths, and society?

Hypothesized model of relationships between variables analyzed.

H1:

University students’ familiarity with AI (H1a) and perceived efficiency of AI (H1b) are positively associated with their AI self-efficacy, whereas perceived threat of AI (H1c) is negatively associated with their AI self-efficacy.

H2:

University students’ familiarity with AI (H2a) and perceived efficiency of AI (H2b) are positively associated with their intention to learn about AI, whereas perceived threat of AI (H2c) is negatively associated with their intention to learn about AI.

H3:

University students’ AI self-efficacy (H3a) and intention to learn about AI (H3b) are positively associated with their optimism about their future careers.

RQ2: What are major challenges and concerns university students have in using or learning about AI?

3 Methods

We conducted online surveys and in-person interviews with undergraduate students majoring in journalism and mass communications in Brazil and the United States to examine their perspectives and behavioral intentions related to AI. We used a mixed-methods approach, combining quantitative survey research with qualitative interviews, to obtain a more holistic perspective on the research questions and hypotheses. Studies in media and communication as well as other fields have used mixed-methods approaches to analyze social phenomena more comprehensively (Johnson et al. 2007; Seo et al. 2022), and the number of academic journal articles using such approaches has increased over the past decades (Creswell and Plano Clark 2018; Johnson et al. 2007). All research procedures described below were reviewed and approved by the Institutional Review Board (IRB) at the lead university, and all respondents were informed of the objectives of the research and had access to the informed consent form.

3.1 Sampling

To recruit participants for the survey and interviews, the research team contacted instructors of journalism and mass communications (JMC) undergraduate courses at one public research university in Brazil and one in the United States during Spring 2024. The universities were selected because of their similarities, including being research-intensive public institutions with established JMC undergraduate programs. Instructors announced the study in undergraduate classes, both in person and through their online learning management sites.

3.2 Online survey

The development of our survey questionnaire was informed by a review of the previous studies discussed in the Literature Review section. After drafting the initial survey questions in English, we pretested the questionnaire with 10 JMC undergraduate students from the U.S. university. We then translated the survey into Portuguese for students in Brazil, and the Portuguese version was pretested with 10 JMC students from the Brazilian university. Based on feedback from the pretests, we finalized the English and Portuguese versions of the questionnaire and built them in Qualtrics, an online survey platform. Each questionnaire included 20 multiple-choice questions and two open-ended questions. The specific items used to measure the concepts and topics in this study are described below.

3.3 Survey measurement items

3.3.1 AI self-efficacy

To measure participants’ confidence in learning about AI, a revised technology self-efficacy scale was used (Holden and Rada 2011; Hong 2022). Specifically, the following four items were used to measure AI self-efficacy in this study: (1) I could complete any desired task using the AI technology if I had the manuals for reference; (2) I could complete any desired task using the AI technology if I had seen someone else using it before trying it myself; (3) I could complete any desired task using the AI technology if someone else helped me get started; and (4) I could complete any desired task using the AI technology if I had a lot of time to complete the task. Each item was measured on a scale from 1 (strongly disagree) to 5 (strongly agree). Cronbach’s alpha indicated that the index formed from these items was reliable (α = 0.91).
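Cronbach’s alpha, reported here and for each index below, compares the sum of the individual item variances with the variance of respondents’ total scores: alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The following is a minimal, standard-library-only Python sketch of that formula. The function names and toy responses are hypothetical, the index is assumed to be each respondent’s mean item score (the text does not say whether a mean or a sum was used), and this is not the software the authors used, which the text does not specify.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k item columns, each holding one score per respondent.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores),
    using sample variances (n - 1 denominator) throughout.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if n < 2 or any(len(col) != n for col in items):
        raise ValueError("every item needs the same number (>= 2) of responses")
    # Total score per respondent, summed across all k items.
    totals = [sum(col[i] for col in items) for i in range(n)]
    item_var_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


def index_score(items: list[list[float]]) -> list[float]:
    """Hypothetical index: each respondent's mean across the item columns."""
    n = len(items[0])
    return [sum(col[i] for col in items) / len(items) for i in range(n)]


# Toy 1-5 Likert responses: four items (rows) by four hypothetical respondents
# (columns). Illustrative values only, not data from this study.
responses = [
    [4, 2, 5, 3],  # item 1
    [4, 1, 5, 3],  # item 2
    [5, 2, 4, 3],  # item 3
    [4, 2, 5, 2],  # item 4
]
print(round(cronbach_alpha(responses), 2))  # 0.96
print(index_score(responses))  # [4.25, 1.75, 4.75, 2.75]
```

With real survey data, each inner list would hold one item’s responses for all respondents (for example, the four self-efficacy items above), and incomplete responses would need to be handled before computing alpha; how the study treated missing data is not stated.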

3.3.2 Intention to use AI

The survey questionnaire included five items related to participants’ stated intentions to use and learn about AI (Kennedy et al. 2023). The five items were: (1) I prefer to use the most advanced AI technology available; (2) I will continue to acquire AI-related information; (3) I will keep myself updated with the latest AI applications; (4) I intend to use AI to assist with my learning; and (5) I will continue to learn AI. Each item was measured on a scale of 1 (strongly disagree) to 5 (strongly agree). The index variable was reliable according to Cronbach’s alpha test (α = 0.90).

3.3.3 AI familiarity

The survey questionnaire included a set of questions related to the participant’s familiarity and experience with AI (Hong 2022; Kennedy et al. 2023; Rainie et al. 2022). The four questions used to measure the participant’s AI experience were: (1) I can use AI-assisted voice recognition software to search for information; (2) I know how to use AI applications to create images; (3) I am able to use online AI translation tools; and (4) Overall, I am familiar with AI technologies. Each item was measured on a scale of 1 (strongly disagree) to 5 (strongly agree). The index variable was reliable according to Cronbach’s alpha test (α = 0.80).

3.3.4 AI efficiency & AI threats

Each participant was asked to respond to a series of questions related to their attitudes toward AI. These questions were derived from previous studies on AI (Hong 2022; Kennedy et al. 2023; Rainie et al. 2022). Each item was measured on a scale of 1 (strongly disagree) to 5 (strongly agree). The first set of items related to perceived efficiency of AI were: (1) AI increases efficiency and accuracy; (2) AI offers convenience and saves time; (3) AI improves decision-making processes; (4) AI helps solve complex problems; (5) AI leads to cost savings; and (6) AI creates new job opportunities. The AI efficiency index variable was reliable according to Cronbach’s alpha test (α = 0.81). The next set of items asked about participants’ perspectives on threats posed by AI: (1) Increased adoption of AI in society leads to job displacement; (2) Increased adoption of AI in society creates problems in privacy; (3) AI is used for malicious purposes; (4) AI causes errors and mistakes; (5) AI perpetuates biases and discrimination; and (6) AI creates unintended consequences. The AI threat index variable was reliable according to Cronbach’s alpha test (α = 0.84).

3.3.5 Career optimism

The survey included questions on participants’ perspectives on the future of the JMC field and their job prospects (Garcia et al. 2015). The items were: (1) I am optimistic about the future of media industries; (2) I am optimistic about the future of journalism; (3) I am optimistic that I will be able to get a job within a year after graduation; and (4) I am optimistic that I will be able to get a job in my specialization within a year after graduation. Each item was measured on a scale from 1 (strongly disagree) to 5 (strongly agree). The index variable was reliable according to Cronbach’s alpha (α = 0.87).

3.3.6 Demographics and open-ended questions

Demographic questions asked about the participant’s gender, race/ethnicity, age, year in school, and specific concentration within JMC. The survey questionnaire included two open-ended questions: (1) What excites you the most about AI? (2) What concerns you the most about AI?

3.4 Survey data analysis

This research employed path analysis, conducted as a series of multiple regression analyses, to examine the theoretical model depicted in Figure 1 and the hypothesized relationships among the variables. A hierarchical approach was applied in the regression analyses. Age, gender, and race/ethnicity were entered first as control variables. AI familiarity, AI efficiency, and AI threat were then entered, followed by AI self-efficacy and AI intention, and then career optimism. Tolerance and Variance Inflation Factor (VIF) statistics were calculated, and no significant multicollinearity issues were detected.
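The blockwise (hierarchical) entry and the collinearity check described above can be illustrated with a short, dependency-free Python sketch. It fits ordinary least squares by solving the normal equations, reports the cumulative R² and the change in R² (ΔR²) as each block of predictors is added, and computes each predictor’s VIF as 1/(1 - R²ⱼ), where R²ⱼ comes from regressing predictor j on the remaining predictors; tolerance is 1 - R²ⱼ, that is, 1/VIF. All function names and the toy data are hypothetical, complete-case numeric data are assumed (categorical controls such as gender or race/ethnicity would first need dummy coding), and this is not the authors’ analysis code. In practice a statistics package, such as statsmodels with its `variance_inflation_factor` helper, would normally be used.

```python
from statistics import mean


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        if abs(p) < 1e-12:
            raise ValueError("singular system: predictors are perfectly collinear")
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n] for row in m]


def r_squared(y: list[float], xs: list[list[float]]) -> float:
    """R^2 of an OLS regression of y on the predictor columns xs plus an intercept."""
    cols = [[1.0] * len(y)] + xs
    xtx = [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(a * b for a, b in zip(ci, y)) for ci in cols]
    beta = solve(xtx, xty)
    fitted = [sum(bk * col[i] for bk, col in zip(beta, cols)) for i in range(len(y))]
    y_bar = mean(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def hierarchical_r2(y, blocks):
    """Enter predictor blocks in order; return (cumulative R^2, delta R^2) per step."""
    entered, steps, previous = [], [], 0.0
    for block in blocks:
        entered = entered + block
        r2 = r_squared(y, entered)
        steps.append((r2, r2 - previous))
        previous = r2
    return steps


def vif(xs):
    """VIF_j = 1 / (1 - R^2_j), regressing predictor j on all other predictors."""
    out = []
    for j, xj in enumerate(xs):
        r2 = r_squared(xj, xs[:j] + xs[j + 1:])
        out.append(float("inf") if r2 >= 1 else 1 / (1 - r2))
    return out


# Hypothetical toy data: five respondents, one predictor per block.
x1 = [1, 2, 3, 4, 5]  # e.g., a first-block predictor
x2 = [2, 1, 4, 3, 5]  # e.g., a second-block predictor
y = [1, 3, 2, 5, 4]   # outcome
print(hierarchical_r2(y, [[x1], [x2]]))
print(vif([x1, x2]))
```

Each ΔR² is the additional variance in the outcome explained by the newly entered block beyond the earlier blocks. VIF values near 1 indicate little collinearity; commonly cited rules of thumb flag values above roughly 5 or 10, although the text does not state which threshold the authors applied.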

3.5 Interview research

We used a semi-structured interview method to gain deeper insight into undergraduate students’ perspectives on and attitudes toward AI. Each interview was conducted by a communication researcher who had earned their university’s IRB human subjects research certificate. Our interview protocol included questions on general attitudes toward AI, experience using AI in the classroom or other settings, perceived benefits and harms of AI, confidence in learning about AI, and job prospects. The interviewer recorded each interview with the participant’s consent, and all interviews were transcribed for analysis. We continued conducting interviews until theoretical saturation of themes was reached.

In analyzing the interview transcripts, we utilized Dedoose 9.2.006, an analytics platform for qualitative or mixed-methods research. We identified codes using a constant comparison technique based on grounded theory (Glaser and Strauss 1967; Hesse-Biber and Leavy 2010; Rubin and Rubin 2011; Strauss and Corbin 1994). Specifically, using this approach, we identified patterns in the transcripts related to themes of perceived benefits of AI, privacy and data security concerns, and intention to use and learn about AI, as well as new and emergent themes.

4 Results

Findings are based on online surveys and in-person interviews with undergraduate students majoring in journalism and mass communications in the United States and Brazil. A total of 511 students (296 from the United States and 215 from Brazil) participated in the survey in Spring 2024. Demographic characteristics of the U.S. and Brazilian survey participants are shown in Table 1. As Table 1 shows, the two samples are generally comparable in their age, gender, and race/ethnicity distributions. The U.S. sample included a slightly higher proportion of participants aged 21 or younger (84.7 %) than the Brazilian sample (76.2 %). Additionally, the proportion of female students was higher in the U.S. sample (70.3 %) than in the Brazilian sample (61.8 %). Regarding race/ethnicity, the largest category in both samples was white, accounting for 72.3 % of the U.S. sample and 63.7 % of the Brazilian sample. In addition, 35 students (20 from the United States and 15 from Brazil) participated in the interviews in Spring 2024. Table 2 summarizes demographic characteristics of the U.S. and Brazilian interview participants. Table 3 shows the measurement items used for each main variable in the study.

Table 1: Demographic characteristics of survey participants.

| USA: variable/value | Count | Percent | Brazil: variable/value | Count | Percent |
|---|---|---|---|---|---|
| Age | | | | | |
| 18–19 | 157 | 53.0 % | 18–19 | 105 | 48.8 % |
| 20–21 | 94 | 31.7 % | 20–21 | 59 | 27.4 % |
| 22–23 | 20 | 6.8 % | 22–23 | 26 | 12.1 % |
| 24–25 | 20 | 6.8 % | 24–25 | 16 | 7.4 % |
| 26 or older | 5 | 1.7 % | 26 or older | 9 | 4.2 % |
| Total | 296 | 100 % | Total | 215 | 100 % |
| Gender | | | | | |
| Female | 208 | 70.3 % | Female | 133 | 61.8 % |
| Male | 84 | 28.4 % | Male | 75 | 34.9 % |
| Non-binary | 3 | 1.0 % | Non-binary | 4 | 1.9 % |
| Prefer not to answer | 1 | 0.3 % | Prefer not to answer | 3 | 1.4 % |
| Other | 0 | 0 % | Other | 0 | 0 % |
| Total | 296 | 100 % | Total | 215 | 100 % |
| Race/ethnicity | | | | | |
| White/Caucasian | 214 | 72.3 % | White | 137 | 63.7 % |
| Hispanic/Latino | 34 | 11.5 % | Black | 38 | 17.7 % |
| Asian | 26 | 8.8 % | Brown | 32 | 14.9 % |
| Black | 17 | 5.7 % | Asian | 10 | 4.7 % |
| Native American | 6 | 2.0 % | Indigenous | 7 | 3.2 % |
| Other | 2 | 0.7 % | Other | 0 | 0 % |
| Total* | NA | NA | Total* | NA | NA |

Note: Demographic characteristics of the survey participants are aligned with those of the JMC student population at each university under study. *Participants could select more than one option for the race/ethnicity question, so counts and percentages in this category can sum to more than the sample total and 100 %; totals are therefore reported as NA.

Table 2. Demographic characteristics of interview participants.

| Variable/value (USA) | Count | Percent | Variable/value (Brazil) | Count | Percent |
|---|---|---|---|---|---|
| Age | | | | | |
| 18–19 | 7 | 35.0 % | 18–19 | 5 | 33.3 % |
| 20–21 | 5 | 25.0 % | 20–21 | 5 | 33.3 % |
| 22–23 | 5 | 25.0 % | 22–23 | 1 | 6.7 % |
| 24–25 | 3 | 15.0 % | 24–25 | 4 | 26.7 % |
| Total | 20 | 100 % | Total | 15 | 100 % |
| Gender | | | | | |
| Female | 12 | 60.0 % | Female | 9 | 60.0 % |
| Male | 7 | 35.0 % | Male | 6 | 40.0 % |
| Non-binary | 1 | 5.0 % | Non-binary | 0 | 0 % |
| Prefer not to answer | 0 | 0 % | Prefer not to answer | 0 | 0 % |
| Other | 0 | 0 % | Other | 0 | 0 % |
| Total | 20 | 100 % | Total | 15 | 100 % |
| Race/ethnicity | | | | | |
| White/Caucasian | 12 | 60.0 % | White | 9 | 60.0 % |
| Hispanic/Latino | 3 | 15.0 % | Black | 2 | 13.3 % |
| Asian | 2 | 10.0 % | Brown | 2 | 13.3 % |
| Black | 2 | 10.0 % | Asian | 1 | 6.7 % |
| Native American | 1 | 5.0 % | Indigenous | 2 | 13.3 % |
| Other | 0 | 0 % | Other | 0 | 0 % |
| Total* | NA | NA | Total* | NA | NA |

Note: *Participants could select more than one option for the race/ethnicity question, so counts and percentages in this category can sum to more than the sample total and 100 %; totals are therefore reported as NA.

Table 3. Measurement items for main variables.

| Variable/source | Measurement items | Cronbach’s alpha (α) |
|---|---|---|
| AI self-efficacy (Holden and Rada 2011; Hong 2022) | I could complete any desired task using the AI technology if I had the manuals for reference. | 0.91 |
| | I could complete any desired task using the AI technology if I had seen someone else using it before trying it myself. | |
| | I could complete any desired task using the AI technology if someone else helped me get started. | |
| | I could complete any desired task using the AI technology if I had a lot of time to complete the task. | |
| Intention to use AI (Kennedy et al. 2023) | I prefer to use the most advanced AI technology available. | 0.90 |
| | I will continue to acquire AI-related information. | |
| | I will keep myself updated with the latest AI applications. | |
| | I intend to use AI to assist with my learning. | |
| | I will continue to learn AI. | |
| AI familiarity (Hong 2022; Kennedy et al. 2023; Rainie et al. 2022) | I can use AI-assisted voice recognition software to search for information. | 0.80 |
| | I know how to use AI applications to create images. | |
| | I am able to use online AI translation tools. | |
| | Overall, I am familiar with AI technologies. | |
| AI efficiency (Hong 2022; Kennedy et al. 2023; Rainie et al. 2022) | AI increases efficiency and accuracy. | 0.81 |
| | AI offers convenience and saves time. | |
| | AI improves decision-making processes. | |
| | AI helps solve complex problems. | |
| | AI leads to cost savings. | |
| | AI creates new job opportunities. | |
| AI threats (Hong 2022; Kennedy et al. 2023; Rainie et al. 2022) | Increased adoption of AI in society leads to job displacement. | 0.84 |
| | Increased adoption of AI in society creates problems in privacy. | |
| | AI is used for malicious purposes. | |
| | AI causes errors and mistakes. | |
| | AI perpetuates biases and discrimination. | |
| | AI creates unintended consequences. | |
| Career optimism (Garcia et al. 2015) | I am optimistic about the future of media industries. | 0.87 |
| | I am optimistic about the future of journalism. | |
| | I am optimistic that I will be able to get a job within a year after graduation. | |
| | I am optimistic that I will be able to get a job in my specialization within a year after graduation. | |
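Table 3 reports Cronbach’s alpha for each multi-item scale, an index of internal consistency computed for k items as α = k/(k − 1) · (1 − Σ item variances / variance of the summed scale). The sketch below illustrates that formula in Python. It is not the authors’ analysis code: it assumes complete responses (no missing values) stored as one list per item, and the function name and toy data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items, each a list of scores from the same respondents.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Sample variances (n - 1 denominator) are used for both parts, so the
    choice of denominator cancels out of the ratio.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item must have one score per respondent")
    # Each respondent's total score across the k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical responses from four respondents to a two-item scale.
alpha = cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]])
print(f"alpha = {alpha:.2f}")  # alpha = 0.75
```

Here the two item variances sum to 10/3 and the total-score variance is 16/3, so α = 2 × (1 − 0.625) = 0.75. Items that move together more closely push α toward 1.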

To gauge study participants’ overall understanding of AI, the interviews asked participants to describe, in their own words, what AI means to them and to provide examples of AI applications. Overall, U.S. and Brazilian students were similar in that they held predominantly instrumental views of AI, shaped largely by their recent experiences with tools like ChatGPT for research and text production. For many, these applications are the first things that come to mind when they hear the term “artificial intelligence.” Additionally, they highlighted the use of bots for tasks such as audio transcription and image creation or editing as central to their understanding of AI. Specifically, in defining AI, students mentioned: “machines that can perform tasks that typically require human intelligence,” “machines that think or act like humans,” “applications that help do things quickly and efficiently,” and “database.” When asked to give examples of AI, many students mentioned ChatGPT or social media features such as Snapchat filters, while some cited Notion, translation programs, and design applications such as Adobe Firefly.

4.1 RQ1: Attitudes toward AI

Our first research question asked how Brazilian and U.S. university students majoring in communication and media are similar or different in their attitudes toward AI and their assessments of AI’s roles in their education, career paths, and society. To test differences between the two groups in the survey data, we used analysis of variance (ANOVA) with country as the grouping factor. We also drew on the interview data to provide additional context for these topics.

In perceived familiarity with AI, there was no statistically significant difference between Brazilian and U.S. students (F = 1.85, p = 0.17), though the mean of U.S. student responses (M = 3.04, SD = 0.89) was slightly higher than that of Brazilian student responses (M = 2.93, SD = 0.92). In contrast, the two groups differed significantly in their perceived efficiency of AI (F = 3.94, p < 0.05) and perceived threats posed by AI (F = 3.92, p < 0.05). U.S. students (M = 3.04, SD = 0.72) rated AI efficiency higher than Brazilian students did (M = 2.89, SD = 0.95). Brazilian students reported higher levels of perceived threats posed by AI (M = 3.70, SD = 0.80) than U.S. students did (M = 3.55, SD = 0.77).

In terms of AI self-efficacy, Brazilian (M = 3.51, SD = 0.92) and U.S. students (M = 3.51, SD = 0.94) reported similar levels of confidence in using and learning to use AI (F = 0.07, p = 0.78). By contrast, Brazilian students (M = 3.15, SD = 1.05) reported a stronger intention to learn about AI in the future than their U.S. counterparts (M = 2.79, SD = 0.89), and this difference was statistically significant (F = 16.01, p < 0.001).

Our interview results offer additional insight into Brazilian and U.S. students’ attitudes toward AI. Two main themes emerged from the interview data: (i) mixed feelings toward AI and (ii) curiosity and interest. In terms of AI’s impact on society, the majority of Brazilian and U.S. students interviewed for this research expressed mixed feelings. While they were excited about AI’s potential positive contributions, such as curing diseases, they worried about negative consequences of AI applications, such as the generation and amplification of misinformation and breaches of privacy. A Brazilian student said that while “AI is useful for some everyday tasks such as product suggestions and recommendations,” greater attention should be paid to ensuring ethical uses of AI. A U.S. interviewee said, “I think it [AI] is really scary, like personally…I would just describe it as like a database. It knows everything.” Participants also mentioned that AI has affected their learning. For example, a U.S. student said, “Honestly, most personally it has impacted my learning…I have to do all of the like AI checks on my work. Like I run it through like the AI detector because I don’t want to get flagged for AI. And a surprising amount of the time AI flagged it and I did not use any AI for my assignments.”

Both Brazilian and U.S. students expressed curiosity about and interest in AI. They were also eager to deepen their understanding of AI, particularly as its applications have expanded into media and creative domains such as news writing, image production, and social media content creation. A U.S. student said: “AI is obviously going to make its way into journalism, as it can write reports faster than any person could. Understanding it better, I think, would be really useful.” A Brazilian student emphasized the inevitability of embracing AI, stating, “We can’t escape AI anymore. AI is becoming increasingly integrated into society, being used more and more, and in the future, it will serve more important functions.” A majority of students mentioned that their JMC classes covered AI through class discussions or assignments and that they would like to see more advanced discussions in class about the ethics of AI and specific applications that media professionals can use.

4.2 H1: Effects of AI familiarity, efficiency, and threat on AI self-efficacy

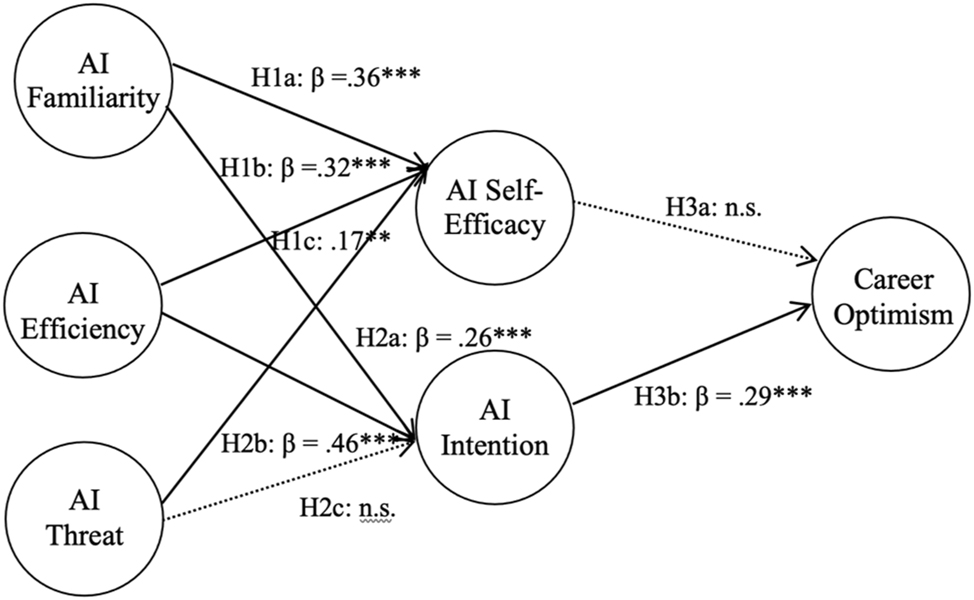

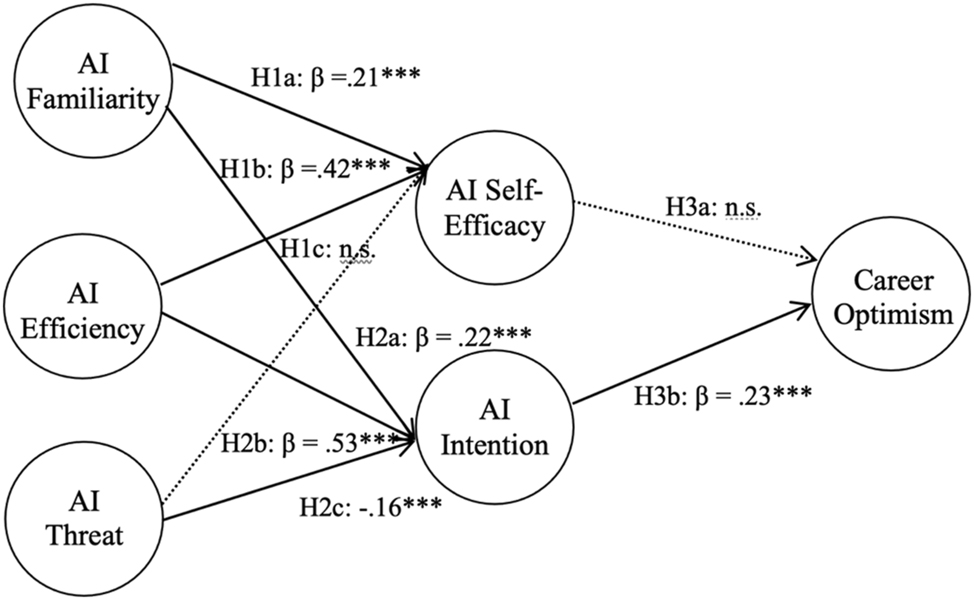

As described in the Methods section, we conducted a series of regression analyses or path analyses to test H1, H2, and H3. Figures 2 and 3 show results of hypothesis testing with Brazilian survey data and U.S. data, respectively.

Path analysis of Brazilian students’ AI efficacy, attitudes, perceived threats and career optimism.

Path analysis of U.S. students’ AI efficacy, attitudes, perceived threats and career optimism.

Our first hypothesis posited that students’ familiarity with AI (H1a) and perceived efficiency of AI (H1b) would be positively associated with their AI self-efficacy, whereas perceived threat of AI (H1c) would be negatively associated with it. The U.S. survey data showed statistically significant effects of students’ attitudes toward AI on their AI self-efficacy (ΔR² = 0.30, F = 40.08, p < 0.001). Specifically, AI familiarity (β = 0.21, t = 3.84, p < 0.001) and perceived efficiency of AI (β = 0.42, t = 7.76, p < 0.001) were positively associated with AI self-efficacy, supporting H1a and H1b. However, H1c was not supported, as there was no statistically significant negative relationship between perceived threats of AI and AI self-efficacy (β = 0.08, t = 1.53, p = 0.13).

The Brazilian data showed a slightly different pattern. Students’ attitudes toward AI again had statistically significant effects on their AI self-efficacy (ΔR² = 0.31, F = 29.08, p < 0.001). AI self-efficacy was positively associated with AI familiarity (β = 0.36, t = 5.57, p < 0.001) and perceived efficiency of AI (β = 0.32, t = 4.86, p < 0.001). However, it was also positively, rather than negatively, associated with perceived threats of AI (β = 0.17, t = 2.73, p < 0.01). Therefore, H1a and H1b were supported, but H1c was not.
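The hypothesis tests report ΔR² together with an F statistic. In hierarchical regression, this F is conventionally the F-change test for the block of predictors just added: F = (ΔR²/m) / ((1 − R²_full)/(n − k − 1)), with m added predictors and k predictors in the full model. The section does not state which variables, if any, were entered in an earlier block, so the Python sketch below is generic; the function name and all inputs in the usage line are hypothetical, not values from this study.

```python
def f_change(r2_reduced: float, r2_full: float, n: int,
             k_full: int, m_added: int) -> float:
    """F-change statistic for adding m_added predictors to a regression model.

    r2_reduced: R^2 of the model before the block is added (0.0 if none).
    r2_full:    R^2 of the model after the block is added.
    n:          number of observations.
    k_full:     number of predictors in the full model (intercept excluded).
    Degrees of freedom are (m_added, n - k_full - 1).
    """
    df_resid = n - k_full - 1
    if df_resid <= 0:
        raise ValueError("need more observations than predictors")
    delta_r2 = r2_full - r2_reduced
    return (delta_r2 / m_added) / ((1 - r2_full) / df_resid)


# Hypothetical: three attitude predictors added to a model with one control
# variable, raising R^2 from 0.10 to 0.40 in a sample of 104.
print(f"F(3, 99) = {f_change(0.10, 0.40, 104, 4, 3):.2f}")  # F(3, 99) = 16.50
```

When no earlier block exists, r2_reduced is 0 and the F-change test reduces to the overall F test for the model.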

4.3 H2: Effects of AI familiarity, efficiency, and threat on AI learning intention

Our second hypothesis proposed that university students’ familiarity with AI (H2a) and perceived efficiency of AI (H2b) would be positively associated with their intention to learn about AI, whereas perceived threats of AI (H2c) would be negatively associated with their intention to learn about AI.

The U.S. data showed statistically significant effects of students’ attitudes toward AI on their intention to learn about AI (ΔR² = 0.41, F = 66.56, p < 0.001). Specifically, AI familiarity (β = 0.22, t = 4.26, p < 0.001) and perceived efficiency of AI (β = 0.53, t = 10.70, p < 0.001) were positively associated with intention to learn about AI. In addition, there was a statistically significant negative relationship between participants’ perceived threats of AI and their intention to learn about AI (β = −0.16, t = −3.37, p < 0.001). Therefore, H2a, H2b, and H2c were supported.

The Brazilian survey data also showed statistically significant effects of students’ attitudes toward AI on their intention to learn about AI (ΔR² = 0.41, F = 45.19, p < 0.001). Intention to learn about AI had statistically significant positive relationships with AI familiarity (β = 0.26, t = 4.38, p < 0.001) and perceived efficiency of AI (β = 0.46, t = 7.43, p < 0.001). However, there was no statistically significant negative relationship between participants’ perceived threats of AI and their intention to learn about AI (β = −0.11, t = −1.84, p = 0.07). Therefore, H2a and H2b were supported, but H2c was not.

4.4 H3: Effects of AI self-efficacy and AI learning intention on career optimism

Our third hypothesis posited that university students’ AI self-efficacy (H3a) and intention to learn about AI (H3b) would be positively associated with optimism about their future careers. In both the Brazilian (ΔR² = 0.08, F = 8.77, p < 0.001) and U.S. survey data (ΔR² = 0.06, F = 9.94, p < 0.001), students’ AI self-efficacy and intention to learn about AI had a statistically significant effect on their career optimism. In both cases, intention to learn about AI was positively associated with career optimism (Brazil: β = 0.29, t = 3.82, p < 0.001; U.S.: β = 0.23, t = 3.62, p < 0.001). However, there was no statistically significant relationship between AI self-efficacy and career optimism (Brazil: β = −0.01, t = −0.09, p = 0.92; U.S.: β = 0.05, t = 0.77, p = 0.44). Therefore, H3a was not supported, but H3b was.
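The H3 models center on two predictors, AI self-efficacy and intention to learn about AI. When a regression contains exactly two predictors (ignoring any control variables), the standardized coefficients follow in closed form from the three pairwise correlations, which shows how a predictor can have a positive zero-order correlation with the outcome yet a near-zero β once a correlated predictor is held constant. The correlations below are hypothetical; this section does not report the bivariate correlations, so the Python sketch is an illustration of the technique, not a reconstruction of the study’s results.

```python
def standardized_betas(r_y1: float, r_y2: float,
                       r_12: float) -> tuple[float, float, float]:
    """Standardized OLS coefficients and R^2 for y regressed on x1 and x2.

    Uses only Pearson correlations:
      beta1 = (r_y1 - r_y2 * r_12) / (1 - r_12**2)
      beta2 = (r_y2 - r_y1 * r_12) / (1 - r_12**2)
      R^2   = beta1 * r_y1 + beta2 * r_y2
    """
    denom = 1 - r_12 ** 2
    if denom == 0:
        raise ValueError("x1 and x2 are perfectly correlated")
    beta1 = (r_y1 - r_y2 * r_12) / denom
    beta2 = (r_y2 - r_y1 * r_12) / denom
    return beta1, beta2, beta1 * r_y1 + beta2 * r_y2


# Hypothetical correlations: x1 correlates 0.20 with y, x2 correlates 0.30
# with y, and x1 and x2 correlate 0.60 with each other.
b1, b2, r2 = standardized_betas(0.20, 0.30, 0.60)
print(f"{b1:.3f} {b2:.3f} {r2:.3f}")  # 0.031 0.281 0.091
```

In this hypothetical case x1 correlates 0.20 with the outcome on its own, but most of that association is shared with x2, so its standardized coefficient shrinks to about 0.03.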

4.5 RQ2: Challenges and concerns about AI

Our second research question asked about the major challenges and concerns university students have in using or learning about AI. To answer this question, we analyzed interview transcripts and survey respondents’ answers to two open-ended questions in the online survey (i.e., What excites you the most about AI? What concerns you the most about AI?). Results suggest five main themes in students’ challenges and concerns about AI: (i) unethical and malicious use, (ii) privacy and data security, (iii) job displacement, (iv) over-reliance on AI, and (v) complexity and errors.

Many participants from both Brazil and the United States expressed concern about unethical or malicious use of AI in academic, media, or other settings. In particular, the creation of fake images or false information was most frequently mentioned in this context. For example, one U.S. participant said, “It is mostly used unethically and maliciously to create porn or other false images or statements that some people cannot tell are fake.” In discussing this issue, another U.S. student cited as an example the AI-generated fake explicit images of Taylor Swift that spread widely across social media in early 2024 (Hsu 2024). Similarly, Brazilian participants noted that media organizations will need to be increasingly vigilant, especially because AI applications allow misinformation and disinformation to spread more widely and rapidly. One Brazilian student said AI tools will make “fake news go to another level.” Another Brazilian student noted that media organizations “have to do more in-depth work so as not to spread fake news, because sometimes something seems very real.” He added, “Whether they want to or not, they’re going to have to work harder to be careful about polishing information so that they’re not putting out false information.”

Both U.S. and Brazilian students mentioned privacy and data security as a primary concern regarding AI. Students cited “invasions of privacy” or personal information being “stolen” in this regard. One Brazilian student said he was worried about “the issue of privacy, data security, how this can be used in a way that infringes copyright and personal image.” This was echoed by another Brazilian student who mentioned “lack of virtual security” and “my data exposed” as primary concerns related to AI. Similarly, U.S. students noted that the absence of regulations on AI further intensifies privacy and data security concerns regarding AI. A U.S. student said, “AI can be used for many malicious reasons and hacking people. It is concerning if we don’t put checks when using AI.”

Another concern frequently raised by study participants was job displacement resulting from AI. One U.S. participant said, “It potentially has the effect of taking over jobs or altering the job market in such a way that certain positions are no longer necessary.” The participant added that this can be a particular issue for those whose “roles are in a specialized field that requires training and education and cannot easily adapt to another role.” Another U.S. participant said, “It could take away job opportunities and it will be more intelligent than a human.” Similarly, Brazilian students said they were worried about professionals being replaced by AI tools, making comments such as “loss of jobs after the adoption of artificial intelligence” and “extinction” of some jobs. For example, a Brazilian student said, “What worries me the most is the potential displacement of workers by AI-powered tools, particularly in fields like graphic design within the media industry, leading to job losses.”

Many U.S. and Brazilian interviewees voiced concern about growing reliance on AI and its potential ramifications for creative and critical thinking skills among university students and more broadly. For instance, a U.S. student said, “I think that students will easily plagiarize or have AI write their work for them. I am also concerned that too much reliance on AI will result in lowering our critical thinking skills as human beings.” A Brazilian student stated, “What worries me is that I’ll become too dependent on it [AI] and won’t be able to produce content on my own.” Several students noted that it is important for media students and professionals to engage in critical thinking and independent exploration when incorporating AI into their creative workflows.

Finally, many study participants expressed concern that AI will become too complex and powerful for them to understand its implications and that its harms and errors will have significant, unanticipated negative consequences. For instance, a Brazilian participant said she was most worried about “the possibility of errors and inaccuracies in the results presented by” AI technologies. One U.S. participant said, “I’m concerned about AI because it continues to grow every day and I’m scared we won’t be able to stop it from growing beyond our control.” Another U.S. participant noted, “I am most concerned that AI will get so advanced, and we soon will not be able to tell what is real and what is not.” These concerns align with broader public concerns about AI in Brazil and the United States (Eastwood 2024; Kennedy et al. 2023; Mari 2023).

5 Discussion

Using a mixed-methods approach that combined online surveys and in-person interviews, our study examined perspectives, attitudes, and behavioral intentions related to AI among undergraduate students majoring in journalism and mass communications in Brazil and the United States. In this section, we discuss the scholarly and practical implications of our findings and suggest directions for future research.

5.1 Scholarly and practical implications

Our research showed that students with higher levels of familiarity with AI and higher perceived efficiency of AI reported higher levels of AI self-efficacy. This pattern was observed in both the Brazilian and the U.S. data. In this study, AI self-efficacy was defined as an individual’s confidence in their ability to learn and use AI and was measured with four items (Bandura 1997; Hong 2022; Wang et al. 2023). This finding is broadly consistent with previous research on self-efficacy regarding technology in general (Seo et al. 2014, 2019). As can be inferred from the Technology Acceptance Model (Davis et al. 1989; Venkatesh and Davis 2000), people who find a technology easy to use, familiar, or efficient are more likely to feel confident using it; consistent with this model, students in our study who perceived AI tools as familiar and efficient reported greater confidence in using them. This result is also compatible with previous research showing that students developed more positive attitudes toward AI as they continued to use it (Li 2023).

While Brazilian and U.S. students were similar in their reported familiarity with AI and AI self-efficacy, the two groups differed significantly in perceived efficiency of AI and perceived threats posed by AI. U.S. students rated AI efficiency higher than Brazilian students did, whereas Brazilian students perceived greater threats from AI than their U.S. counterparts did, although the differences in means were modest (0.15 points on each scale). These findings suggest that Brazilian students may be more cautious about the effects of AI on society. Despite these differences, both samples expressed mixed feelings about AI, acknowledging both the benefits and the harms of ever-increasing AI applications. These students’ mixed, and sometimes ambivalent, attitudes toward AI are aligned with attitudes among the general public in both countries (Kennedy et al. 2023; Rainie et al. 2022).

Our research found that Brazilian students reported stronger intentions to learn about AI in the future than U.S. students did. On the surface, this may seem inconsistent with the finding that Brazilian students rated AI threats higher and AI efficiency lower than their U.S. counterparts did. However, stronger intentions to learn about AI among Brazilian students may reflect the rapid adoption of AI in Brazil (IBM 2023; Mari 2023). Students in Brazil may feel that it is imperative to learn about AI in order to adapt to the job market in a country that is increasingly deploying AI across multiple sectors. In the interviews, many Brazilian students cited “ease of completing day-to-day tasks” and “possibility of solving problems” as benefits of AI.

Another important finding from our study is that intention to learn about AI was positively associated with optimism about the field of journalism and mass communications and about students’ job prospects. This held for students from both Brazil and the United States. Although study participants mentioned job displacement as one of their main concerns regarding AI, students from both countries were, overall, positive about the future of journalism and mass communications and their employment opportunities in the field. Students mentioned AI applications writing news stories or creating marketing materials as examples of AI potentially replacing human workers in media and communication fields. However, they also emphasized the importance of creative ideas and critical thinking skills that may not be easily replaced by AI at this point. Indeed, previous research has shown that the adoption of AI in newsrooms and other media fields remains limited, though it has increased significantly in recent years (de-Lima-Santos and Ceron 2022).

Our research highlights the need for instructors to be better educated about AI so they can lead thoughtful, nuanced, and substantive discussions of AI in their classrooms. Students in our interviews expressed a desire to engage in more advanced and in-depth discussions of AI in their classes. Several students mentioned that their professors do not know “how to address AI adequately.” For example, a U.S. student said, “I think they [professors] should get into what it is, because I think a lot of people just don’t actually understand what artificial intelligence is and like how it functions.” She added, “As journalists, we should be taught how to flag something as [done by] AI and what journalistic integrity means around AI. I think there’s a lot of material that can be covered, but definitely needs to be in some scope.” As university programs in journalism and mass communications continue to explore innovative ways to integrate AI into classroom settings, it becomes increasingly important for schools to provide tailored support for educators. This support can take the form of workshops, seminars, or other professional development opportunities designed to help instructors deepen their understanding of AI technologies and develop the skills needed to teach AI applications effectively. Such initiatives should specifically address how AI intersects with media and communication fields, so that educators are equipped to guide students through this rapidly evolving digital media landscape.

Finally, concerted efforts are needed to help both instructors and students better understand how to use AI tools in academic settings. Many students in our study expressed stress and frustration about possibly being accused of violating their university’s academic integrity policy even when they had followed university guidelines on AI use for assignments or had not used AI at all. These concerns are consistent with previous research showing that well-intentioned students who attempt to use AI ethically to further their education may nonetheless be found to have violated their universities’ academic integrity standards (Talaue 2023). Both instructors and students need to better understand the limitations of AI tools in detecting plagiarism, and universities need to focus more on when and how AI applications can be used to facilitate critical thinking. Research has shown that when critical thinking is used alongside AI assistance, students’ work and motivation can improve significantly (Li 2023; Yilmaz and Yilmaz 2023). Using AI to assist with front-loaded tasks in educational projects can allow students to devote more time to critical thinking and creative problem solving (Yilmaz and Yilmaz 2023). For example, an experimental study found that students who used ChatGPT to perform systematic tasks at the onset of a project demonstrated more self-efficacy in the later portion of the same project. This increase in self-efficacy, in turn, increased students’ motivation to use chatbots successfully and ethically in their education (Fryer et al. 2019; Katchapakirin et al. 2022; Lee et al. 2022).

5.2 Limitations and future research

There are several areas that future research might explore. First, a future study with nationally representative samples would provide more generalizable findings. Second, it would be helpful to examine perspectives and attitudes toward AI among university students in other regions and countries to explore these issues in a more global context. In particular, including countries where AI adoption is relatively low would help identify commonalities and distinctions among countries with different levels of AI adoption and use. Third, comparing AI-related experiences and attitudes among students majoring in journalism and mass communications with those of students in other fields, such as engineering, might provide useful insights. Fourth, while the AI familiarity variable in our survey and the interview responses regarding AI definitions and examples provide valuable insights into participants’ experiences with AI tools, our survey did not directly assess each participant’s baseline knowledge of AI. Future studies could address this by incorporating measures of participants’ baseline knowledge and awareness of AI. Finally, it would be useful to examine instructors’ perceptions, attitudes, and behavioral intentions toward AI and how their attitudes and intentions might influence the ways they cover AI in their classes.

5.3 Conclusions

OpenAI’s ChatGPT rocked higher education in November 2022. In the immediate wake of ChatGPT’s introduction, concerns about students unethically using AI for their assignments led to the restructuring of course assignments and other related measures (Huang 2023). Over time, universities have started focusing more on helping students understand ethical uses of AI and on incorporating AI applications into learning activities in ways that enhance critical thinking and problem-solving skills (Yilmaz and Yilmaz 2023; Li 2023). Our research shows that students’ perceptions of AI are often tied to their perspectives on ChatGPT and to concerns about being accused of academic misconduct. They are optimistic about AI’s potential benefits, from reducing time spent on menial tasks to helping address social problems, while at the same time expressing concern about its negative consequences. Most of all, they want to engage more deeply, through their journalism and mass communications courses, in conversations about AI and in the use of AI applications. Given the rapidly growing deployment of AI in media and other related fields around the world (Eastwood 2024; IBM 2023; Mari 2023; McElheran et al. 2023), it is crucial for journalism and mass communications programs to adapt their curricula to equip students with the skills and knowledge necessary to succeed in their future careers. As AI continues to transform industries, students need to be prepared not only to understand and use these technologies but also to critically analyze their implications for journalism, media, communication, and society. Academic associations, including the Association for Education in Journalism and Mass Communication, can play a pivotal role by serving as information and networking hubs for developing curricula on this topic and sharing lessons learned among instructors.

In doing so, we should include the voices of instructors and students in journalism and mass communications programs across the globe so that our approaches to the topic reflect the realities and aspirations of countries with different levels of AI adoption and use.

References

Ammachchi, Narayan. 2022. Brazil leads Latin America in AI adoption. Nearshore Americas. https://nearshoreamericas.com/brazil-latin-america-ai-adoption/ (accessed 2 November 2022).

Baek, Tae Hyun & Minseong Kim. 2023. Is ChatGPT scary good? How user motivations affect creepiness and trust in generative artificial intelligence. Telematics and Informatics 83. 102030. https://doi.org/10.1016/j.tele.2023.102030.

Bandura, Albert. 1986. Social foundations of thought and action: A social cognitive theory. Englewood Cliffs, NJ: Prentice-Hall.

Bandura, Albert. 1997. Self-efficacy: The exercise of control. New York: Freeman.Search in Google Scholar

Broussard, Meredith. 2015. Artificial intelligence for investigative reporting: Using an expert system to enhance journalists’ ability to discover original public affairs stories. Digital Journalism 3(6). 814–831. https://doi.org/10.1080/21670811.2014.985497.Search in Google Scholar

Broussard, Meredith, Nicholas Diakopoulos, Andrea L. Guzman, Rediet Abebe, Michel Dupagne & Ching-Hua Chuan. 2019. Artificial intelligence and journalism. Journalism and Mass Communication Quarterly 96(3). 673–695. https://doi.org/10.1177/1077699019859901.Search in Google Scholar

Byars-Winston, Angela, Jacob Diestelmann, Julia N. Savoy & William T. Hoyt. 2017. Unique effects and moderators of effects of sources on self-efficacy: A model-based meta-analysis. Journal of Counseling Psychology 64(6). 645. https://doi.org/10.1037/cou0000219.

Chan-Olmsted, Sylvia M. 2019. A review of artificial intelligence adoptions in the media industry. International Journal on Media Management 21(3-4). 193–215. https://doi.org/10.1080/14241277.2019.1695619.

Creswell, John W. & Vicki L. Plano Clark. 2018. Designing and conducting mixed methods research, 3rd edn. London, U.K.: Sage.

Davis, Fred D. 1989. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly 13(3). 319–340. https://doi.org/10.2307/249008.

Davis, Fred D., Richard P. Bagozzi & Paul R. Warshaw. 1989. User acceptance of computer technology: A comparison of two theoretical models. Management Science 35(8). 982–1003. https://doi.org/10.1287/mnsc.35.8.982.

de-Lima-Santos, Mathias-Felipe & Wilson Ceron. 2022. Artificial intelligence in news media: Current perceptions and future outlook. Journalism and Media 3(1). 13–26. https://doi.org/10.3390/journalmedia3010002.

Desouza, Kevin C. 2018. Delivering artificial intelligence in government: Challenges and opportunities. IBM Center for The Business of Government. https://www.businessofgovernment.org/sites/default/files/Delivering%20%20Artificial%20Intelligence%20in%20Government_0.pdf (accessed 2 November 2022).

Eastwood, Brian. 2024. The who, what, and where of AI adoption in America. MIT Sloan School of Management. https://mitsloan.mit.edu/ideas-made-to-matter/who-what-and-where-ai-adoption-america#:~:text=Just%206%25%20of%20U.S.%20companies,%2C%20San%20Antonio%2C%20and%20Nashville (accessed 7 February 2024).

Fryer, Luke K., Kaori Nakao & Andrew Thompson. 2019. Chatbot learning partners: Connecting learning experiences, interest and competence. Computers in Human Behavior 93. 279–289. https://doi.org/10.1016/j.chb.2018.12.023.

Garcia, Patrick Raymund James M., Simon Lloyd D. Restubog, Prashant Bordia, Sarbari Bordia & Rachel Edita O. Roxas. 2015. Career optimism: The roles of contextual support and career decision-making self-efficacy. Journal of Vocational Behavior 88. 10–18. https://doi.org/10.1016/j.jvb.2015.02.004.

Glaser, Barney & Anselm Strauss. 1967. The discovery of grounded theory: Strategies for qualitative research. Mill Valley, CA: Sociology Press.

Von Garrel, Jörg & Jana Mayer. 2023. Artificial intelligence in studies: Use of ChatGPT and AI-based tools among students in Germany. Humanities & Social Sciences Communications 10(1). 799. https://doi.org/10.1057/s41599-023-02304-7.

Hesse-Biber, Sharlene Nagy & Patricia Leavy. 2010. The practice of qualitative research. Thousand Oaks, CA: Sage Publications.

Holden, Heather & Roy Rada. 2011. Understanding the influence of perceived usability and technology self-efficacy on teachers’ technology acceptance. Journal of Research on Technology in Education 43(4). 343–367. https://doi.org/10.1080/15391523.2011.10782576.

Hong, Joo-Wha. 2022. I was born to love AI: The influence of social status on AI self-efficacy and intentions to use AI. International Journal of Communication 16. 20.

Hsu, Tiffany. 2024. Fake and explicit images of Taylor Swift started on 4chan, study says. The New York Times. https://www.nytimes.com/2024/02/05/business/media/taylor-swift-ai-fake-images.html (accessed 5 February 2024).

Huang, Kalley. 2023. Alarmed by A.I. chatbots, universities start revamping how they teach. The New York Times. https://www.nytimes.com/2023/01/16/technology/chatgpt-artificial-intelligence-universities.html (accessed 6 January 2023).

IBM. 2022. IBM global AI adoption index: Enterprise report.

IBM. 2023. IBM global AI adoption index: Enterprise report.

Johnson, R. Burke, Anthony J. Onwuegbuzie & Lisa A. Turner. 2007. Toward a definition of mixed methods research. Journal of Mixed Methods Research 1(2). 112–133. https://doi.org/10.1177/1558689806298224.

Katchapakirin, Kantinee, Chutiporn Anutariya & Thepchai Supnithi. 2022. ScratchThAI: A conversation-based learning support framework for computational thinking development. Education and Information Technologies 27(6). 8533–8560. https://doi.org/10.1007/s10639-021-10870-z.

Kennedy, Brian, Alec Tyson & Emily Saks. 2023. Public awareness of artificial intelligence in everyday activities. Washington, DC: Pew Research Center.

Khan, Muhammad Salar, Hamza Umer & Farhana Faruqe. 2024. Artificial intelligence for low income countries. Humanities & Social Sciences Communications 11. 1422. https://doi.org/10.1057/s41599-024-03947-w.

Kim, Yonghee, Youngju Park & Jeongil Choi. 2017. A study on the adoption of IoT smart home service: Using value-based adoption model. Total Quality Management & Business Excellence 28(9–10). 1149–1165. https://doi.org/10.1080/14783363.2017.1310708.

Lang, James M. 2024. The case for slow-walking our use of generative AI. The Chronicle of Higher Education. https://www.chronicle.com/article/the-case-for-slow-walking-our-use-of-generative-ai (accessed 29 February 2024).

Lee, Yen-Fen, Gwo-Jen Hwang & Pei-Ying Chen. 2022. Impacts of an AI-based chat bot on college students’ after-class review, academic performance, self-efficacy, learning attitude, and motivation. Educational Technology Research & Development 70(5). 1843–1865. https://doi.org/10.1007/s11423-022-10142-8.

Li, Kang. 2023. Determinants of college students’ actual use of AI-based systems: An extension of the technology acceptance model. Sustainability 15(6). 5221. https://doi.org/10.3390/su15065221.

Mari, Angelica. 2023. Brazil among most optimistic countries about AI, study says. Forbes. https://www.forbes.com/sites/angelicamarideoliveira/2023/11/03/brazil-among-most-optimistic-countries-about-ai-study-says/?sh=301b1e222daa (accessed 3 November 2023).

McElheran, Kristina, J. Frank Li, Erik Brynjolfsson, Zachary Kroff, Emin Dinlersoz, Lucia Foster & Nikolas Zolas. 2023. AI adoption in America: Who, what, and where. Journal of Economics & Management Strategy 33(2). 375–415. https://doi.org/10.1111/jems.12576.

Mitchell, Caroline, Paul Meredith, Matthew Richardson, Peter Greengross & Gary B. Smith. 2016. Reducing the number and impact of outbreaks of nosocomial viral gastroenteritis: Time-series analysis of a multidimensional quality improvement initiative. BMJ Quality and Safety 25(6). 466–474. https://doi.org/10.1136/bmjqs-2015-004134.

Moreira, Sonia Virgínia & Cláudia Lago. 2017. Journalism education in Brazil: Developments and neglected issues. Journalism & Mass Communication Educator 72(3). 263–273. https://doi.org/10.1177/1077695817719609.

OECD. 2019. Hello, world: Artificial intelligence and its use in the public sector. OECD working paper. https://www.oecd-ilibrary.org/docserver/726fd39d-en.pdf?expires=1711217177&id=id&accname=guest&checksum=905C48646330B0005A1530344712FE33 (accessed 1 December 2019).

Pan, Xiaoquan. 2020. Technology acceptance, technological self-efficacy, and attitude toward technology-based self-directed learning: Learning motivation as a mediator. Frontiers in Psychology 11. 564294. https://doi.org/10.3389/fpsyg.2020.564294.

Rainie, Lee, Cary Funk, Monica Anderson & Alec Tyson. 2022. AI and human enhancement: Americans’ openness is tempered by a range of concerns. Washington, DC: Pew Research Center.

Rockoff, Jonah E., Brian A. Jacob, Thomas J. Kane & Douglas O. Staiger. 2010. Can you recognize an effective teacher when you recruit one? Education Finance and Policy 6(1). 43–74. https://doi.org/10.1162/edfp_a_00022.

Roy, Rita, Mohammad Dawood Babakerkhell, Subhodeep Mukherjee, Debajyoti Pal & Suree Funilkul. 2022. Evaluating the intention for the adoption of artificial intelligence-based robots in the university to educate the students. IEEE Access 10(1). 125666–125678. https://doi.org/10.1109/access.2022.3225555.

Rubin, Herbert J. & Irene S. Rubin. 2011. Qualitative interviewing: The art of hearing data. Thousand Oaks, CA: Sage Publications.

Seo, Hyunjin, J. Brian Houston, Leigh Anne Taylor Knight, Emily J. Kennedy & Alexandra B. Inglish. 2014. Teens’ social media use and collective action. New Media & Society 16(6). 883–902. https://doi.org/10.1177/1461444813495162.

Seo, Hyunjin, Joseph Erba, Darcey Altschwager & Mugur Geana. 2019. Evidence-based digital literacy class for low-income African-American older adults. Journal of Applied Communication Research 47(2). 130–152. https://doi.org/10.1080/00909882.2019.1587176.

Seo, Hyunjin, Yuchen Liu, Muhammad Ittefaq, Fatemeh Shayesteh, Ursula Kamanga & Annalise Baines. 2022. International migrants and COVID-19 vaccinations: Social media, motivated information management & vaccination willingness. Digital Health 8. 1–11. https://doi.org/10.1177/20552076221125972.

Seo, Hyunjin, Azhar Iqbal & Shanawer Rafique. 2024. Attitudes toward AI, AI self-efficacy, and AI adoption: A survey of media students in Afghanistan and Pakistan. Studies in Media, Journalism, and Communications 2(2). 22–30. https://doi.org/10.32996/smjc.2024.2.2.4.

Singer, Natasha. 2023. Despite cheating fears, schools repeal ChatGPT bans. The New York Times. https://www.nytimes.com/2023/08/24/business/schools-chatgpt-chatbot-bans.html?searchResultPosition=6 (accessed 24 August 2023).

Sohn, Kwonsang & Ohbyung Kwon. 2020. Technology acceptance theories and factors influencing artificial intelligence-based intelligent products. Telematics and Informatics 47. 101324. https://doi.org/10.1016/j.tele.2019.101324.

de Sousa, Weslei Gomes, Elis Regina Pereira de Melo, Paulo Henrique De Souza Bermejo, Rafael Araújo Sousa Farias & Adalmir Oliveira Gomes. 2019. How and where is artificial intelligence in the public sector going? A literature review and research agenda. Government Information Quarterly 36(4). 1–14. https://doi.org/10.1016/j.giq.2019.07.004.

Strauss, Anselm & Juliet Corbin. 1994. Grounded theory methodology: An overview. In Norman K. Denzin & Yvonna S. Lincoln (eds.), Handbook of qualitative research, 273–285. Thousand Oaks, CA: SAGE.

Sun, Tara Qian & Rony Medaglia. 2019. Mapping the challenges of artificial intelligence in the public sector: Evidence from public health. Government Information Quarterly 36(2). 368–383. https://doi.org/10.1016/j.giq.2018.09.008.

Talaue, Franklin G. 2023. Dissonance in generative AI use among student writers: How should curriculum managers respond? E3S Web of Conferences 426. 1058. https://doi.org/10.1051/e3sconf/202342601058.

Venkatesh, Viswanath & Fred D. Davis. 2000. A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science 46(2). 186–204. https://doi.org/10.1287/mnsc.46.2.186.11926.

Wang, Faming, Ronnel B. King, Ching Sing Chai & Ying Zhou. 2023. University students’ intentions to learn artificial intelligence: The roles of supportive environments and expectancy–value beliefs. International Journal of Educational Technology in Higher Education 20(1). 51. https://doi.org/10.1186/s41239-023-00417-2.

World Bank. 2024. The World Bank in Brazil. https://www.worldbank.org/en/country/brazil/overview (accessed 1 December 2024).

Yilmaz, Ramazan & Fatma Gizem Karaoglan Yilmaz. 2023. The effect of generative artificial intelligence (AI)-based tool use on students’ computational thinking skills, programming self-efficacy and motivation. Computers and Education: Artificial Intelligence 4. 100147. https://doi.org/10.1016/j.caeai.2023.100147.

Zhang, Baobao & Allan Dafoe. 2019. Artificial intelligence: American attitudes and trends. Oxford, UK: Center for the Governance of AI, Future of Humanity Institute, University of Oxford. https://doi.org/10.2139/ssrn.3312874.

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.
