Abstract

Purpose

This study examines and compares online incivility on China’s Weibo and the U.S.’s X (Twitter) amid the Russia-Ukraine conflict, aiming to unravel how different cultural and geopolitical contexts influence online incivility and identify factors that may influence the occurrence of online incivility in different national contexts.

Design/methodology

This study collected and analyzed over 80,000 social media posts concerning the Russia-Ukraine conflict. By employing machine learning methods and moderation tests, this study compares online incivility in different country contexts.

Findings

Twitter and Weibo show different levels of online incivility across eight months of discussion of the Russia-Ukraine war. Conflict frames and negative sentiment both positively predict online incivility on Twitter and Weibo, and both factors show greater predictive power on Twitter than on Weibo.

Practical implication

This study highlights the necessity for platforms like X (Twitter) and Weibo to refine their moderation systems to address the predictors of online incivility, particularly negative sentiment and conflict framing.

Social implication

This study provides evidence that cultural differences significantly impact online communication patterns and norms. It also finds that non-anonymous users might exhibit more uncivil behavior in politically charged discussions, seeking social approval.

Originality/value

This research is one of the few studies to compare online incivility and its influencing factors between Chinese and U.S. social media platforms. It shows how cultural differences influence the prevalence and predictors of online incivility and distinguishes the roles of negative sentiment and conflict framing in fostering incivility, with novel findings that challenge conventional beliefs about the impact of user anonymity on online discourse.

1 Introduction

After the Russian government recognized the Donetsk and Luhansk People’s Republics in February 2022, Russia–Ukraine tensions surged, reminiscent of the 2014 Crimea incident. The military actions initiated by these two countries mark one of the most intense wars between two sovereign states since the 2008 Russo–Georgian War. Social media platforms have also come to the forefront since the war broke out. These platforms have burgeoned not only as arenas for public political discussion but also as hubs for irrational online incivility, characterized by offensive comments or replies. Such online incivility can adversely reshape democratic functions by affecting people’s perceptions of the political climate and discourse, skewing political trust and participation, and amplifying online opinion polarization (Lee et al. 2019; Otto et al. 2020).

Although scholars argue that incivility is shaped by cultural background, contextual norms, and political and media systems, studies on cross-cultural differences offer mixed findings about whether such cross-country differences in political discussion exist (Britzman and Kantack 2019; Marien et al. 2020; Mutz 2015). Whereas prior studies have predominantly concentrated on a single nation or on nations with similar cultural and political frameworks, this study examines and compares online incivility on Chinese (i.e., Weibo) and U.S. (i.e., X/Twitter) social media platforms amid the Russia–Ukraine conflict, aiming to unravel how different cultural and geopolitical contexts influence online incivility and to identify factors that may influence its occurrence in different national contexts.

This research contributes to the field of political communication by expanding theories of incivility, which have traditionally been developed within the Western context but may not fully capture the dynamics of a non-Western setting. This study seeks to deepen the theoretical understanding of how political cultures and media environments may influence the manifestation of incivility in public discourse. Furthermore, by incorporating framing theory into the theoretical framework, this study also provides insights into how media framing, especially conflict frames, may shape online public discourse in both China and the United States, extending its application to understanding the formation and expression of online incivility across diverse cultural and geopolitical contexts.

2 Literature review

2.1 Definition and categorization of online incivility

Originating from early modernity and the European Renaissance (Flores et al. 2021), incivility in political discussion has been classified into two categories: (1) personal-level incivility and (2) public-level incivility (Muddiman 2017). Personal-level incivility, which includes behaviors such as swearing, name-calling, vulgarity, or aspersion (Coe et al. 2014; Cortina et al. 2001; Luparell 2011), highlights a tendency to avoid face-threatening acts (Muddiman 2017) and considers civility to be the proper manner and tone in which interactions are carried out in everyday life (Boyd 2006). Public-level incivility, conversely, refers to behaviors that violate political and deliberative norms, such as preventing a respectful and inclusive discussion, refusing to recognize others’ views, and putting forward personal benefit in political discussion (Muddiman 2017; Stryker et al. 2016). This differentiation is informed by Papacharissi’s (2004) distinction between incivility and impoliteness. She argues that incivility requires threats to democracy or others’ rights, as opposed to the merely improper communicative behaviors labeled as impoliteness. This definition is widely applied in political communication studies, defining incivility as a mode of offensive discussion that impedes the democratic ideal of deliberation or threatens democratic pluralism (Anderson et al. 2014; Rossini 2020).

This research employs the definition of incivility from Stryker et al. (2016), who categorized it into three types: (a) utterance incivility, such as name-calling and swearing; (b) discursive incivility, which inhibits a respectful and inclusive discussion, for example by interrupting someone with a differing viewpoint; and (c) deception incivility, which focuses on outright lying, exaggeration of facts, and the provision of misleading information. This definition combines both the linguistic and the political attributes of online incivility. Previous studies on platforms like X (Twitter) and Weibo have demonstrated the presence of incivility in political discussions on these platforms (Song and Wu 2018; Trifiro et al. 2021). Therefore, this study proposes the following research questions.

RQ1:

Does the degree of online incivility differ between social media posts in China (i.e., Weibo) and those in the United States (i.e., X/Twitter) when discussing the Russia–Ukraine War?

RQ2:

How do users express online incivility differently between social media posts in China (i.e., Weibo) and those in the United States (i.e., X/Twitter) when discussing the Russia–Ukraine War?

2.2 Media attributes of online incivility

2.2.1 Journalistic culture attributes

Previous research indicates that personal-level incivility is heavily influenced by exposure to incivility in the media (Muddiman 2013). Wu (2015) raises the issue that different cultures have unique and potentially conflicting social norms when investigating incivility in public settings. Such cross-cultural differences in incivility could be explained by media systems and news reporting culture (Otto et al. 2020). Otto et al. argue that different media systems and levels of mediated conflict for adverse reporting are two of the critical variables for explaining incivility in a cross-country context.

Journalistic cultures and media systems are crucial in shaping how the public defines the boundary of incivility, which leads to differences in how journalists perceive and include hyperbole, negativity, and conflict in their daily work (Otto et al. 2020). Comparing the representative state-controlled media system and the North Atlantic liberal media system, Xue and Wang (2012) proposed that Chinese and American news media systems are different in report framing, risk control, the goal of reporting, and contextual culture (Table 5).

Most Chinese media act as the mouthpiece of the Party and the government and have long been tasked with guiding public opinion, directing political work, and conveying the voice of the Party and government. In reporting a significant event, Chinese media tend to adopt leader frames to illustrate government leaders’ reactions to the event and deliver positive and objective information to the public to decrease the public perception of risks (Xue and Wang 2012). This reporting tendency can lead journalists to use fewer uncivil expressions in their news reporting.

In contrast, the news media in the United States represent the North Atlantic liberal media system (Hallin and Mancini 2004), which is relatively independent of the government and is led by the news market. To gain public attention and expand their market share and media discourse, U.S. news media give more weight to news and market value in their choice of topics and perspectives. However, competition in the commercial news media system could also facilitate the use of negativity and conflict (Bartholomé et al. 2015). As public opinion on significant events rises, the news media in the U.S. tend to adopt conflict frames to depict the views from either side of the events, emphasized through treacherous questions and negative criticism, to raise and sustain public awareness (Otto et al. 2020; Xue and Wang 2012). Compared with the Chinese state-controlled media system, the news reporting strategy in the United States centers more on conflict and criticism.

2.2.2 Framing attributes

The differences in media systems and journalistic culture translate into different news frames in event reporting. As “interpretive packages” in news production and consumption that determine which viewpoint will be brought into an audience’s “field of perception”, news frames have been found to significantly influence how readers perceive and judge the content of news reports (Tewksbury and Scheufele 2009; Ojala and Pantti 2017). By suggesting specific problem definitions, causal diagnoses, remedies, and moral judgments of responsibility (Ojala and Pantti 2017), journalists can insert their interpretation of an incident into the news reporting frame and thus help the audience form a particular understanding of an event or issue (Liu et al. 2019).

Defined as the media frame that contains “conflict between individuals, groups or institutions as a means of capturing audience interest” (Semetko and Valkenburg 2000), the conflict news frame is widely used in political and war news reporting for its high news value in arousing public attention and in meeting professional standards of balanced journalistic reporting (Maslog et al. 2006; Schuck et al. 2013). The framing of conflict consists of two processes: discourse use, which is shown by the emotional valence of words in the naming or labeling of the nature of the situation, and issue development, which is performed through the continual interpretation of the conflict (Putnam and Shoemaker 2007).

Conflict frames are associated with adverse effects on the public. Esser et al. (2016) investigated the use of conflict frames in different countries’ media systems. They revealed that countries with fewer political parties or with polarized political systems are more likely to cover the news with conflict frames using negative narratives, which can lead journalists to use uncivil expressions in news reporting. Similarly, Bartholomé et al. (2018) found that news articles with conflict frames contain more strategy, personal attacks, and incivility.

Although frames have traditionally been a characteristic of news reporting, studies also find that the frames used by news agencies appear in social media discussion (Mendelsohn et al. 2021), indicating that frames used by media reporters can influence the frames used on social media. Similarly, incivility can also transfer from news organizations’ reporting to social media discussion. Su et al. (2018) found that comments under right-wing news media Facebook posts are more likely to be rude or extremely uncivil, matching the tendency of conservative commenters to adopt more insulting and uncivil language than liberal commenters (Sobieraj and Berry 2011).

Therefore, the research proposed the following hypothesis.

H1:

Social media posts containing conflict frames will have a higher level of online incivility than posts that do not contain conflict frames.

2.3 User-central metrics attributes of online incivility

Anonymity is considered one of the affordances most relevant to explaining the formation of online incivility: the anonymity of online platforms allows users to express their opinions uninhibitedly without considering the consequences (Rossini 2020). Conceptualized by Suler (2004), the online disinhibition effect describes the discrepancy between how individuals speak in cyberspace and in the offline world and explains how this discrepancy shapes the formation of incivility. Suler introduced six factors that could explain two types of online disinhibition effects, positive/benign and negative/toxic. The positive/benign type refers to the “unusual acts of kindness and generosity that users express online” (Suler 2004), while the negative/toxic type refers to incivilities such as rude words or harsh criticisms that the user would not use in offline communication. As one of the six major factors, dissociative anonymity describes people separating their online behaviors from their real-life roles and identities and hiding some or all of their identity. Such anonymity decreases the sense of vulnerability about self-disclosure and frees users from responsibility for any toxic expression. Other literature also suggests that anonymity could foster online incivility. Experimental research found that the perceived credibility of commenters and the persuasive quality of public discussion could be negatively influenced by incomplete identity cues and uncivil commentary (Wang 2020). However, a study of incivility in the X (Twitter) posts of three Democratic primary candidates during the 2020 election demonstrates that anonymity is not related to the incivility level of the posts (Trifiro et al. 2021).

Apart from anonymity, another user-level factor that can lead to the formation of online incivility is sentiment. Operationalizing online incivility as swearing language, Song and Wu (2018) studied incivility in the Weibo discussion of the Hong Kong–Mainland China conflict, discovering that negative emotions with high arousal levels are among the most influential factors in predicting online incivility. Stephens and Zile (2017) also studied the connection between negative emotion, high arousal levels, and incivility. After experimenting with games that differed in emotional arousal level and negativity, they found that swearing fluency significantly increased after playing the game with high emotional arousal and high negativity.

Therefore, in addition to media frames as the primary independent variable, this research also proposes negative sentiment as a variable that would affect online incivility:

H2:

Negative sentiment in social media posts is positively correlated with online incivility.

Meanwhile, the study proposes that the user-centric factor of anonymity can moderate the positive relationships between negative sentiment, conflict frames, and online incivility. Thus, this research proposes hypotheses H3 and H4 to explore the effect of anonymity on these relationships.

H3:

Anonymity moderates the positive relationship between negative sentiment and online incivility such that this relationship is stronger if the social media user is anonymous.

H4:

Anonymity moderates the positive relationship between conflict frames and online incivility such that this relationship is stronger if the social media user is anonymous.

Figure 1 summarizes the conceptual framework of this study.

Theoretical framework.

3 Method and measurement

3.1 Platform selection

This research intentionally adopts X and Weibo as its research targets, as these two popular microblogging platforms, originating from leading global powers, offer valuable perspectives on how the international community views and interprets the conflict.

X (formerly Twitter) is a U.S.-based microblogging platform with 229 million monthly active users globally, including 95.4 million users in the United States as of 2022. The platform plays a significant role in facilitating political discourse, serving as a public sphere where citizens engage in horizontal discussions with one another and vertical interactions with policymakers (Jaidka et al. 2019). Given that political content, such as election campaigns, is frequently disseminated and discussed across multiple major social media platforms, X/Twitter provides a representative case for studying political discourse in the U.S. social media landscape.

Sina Weibo (Weibo) is a Chinese microblogging social media platform that had 586 million monthly active users as of Q4 2022. It features a platform structure similar to that of X/Twitter, allowing users to publicly post and share content including short texts, images, and videos. Weibo is also one of the first three social media platforms officially used by both the Chinese central and regional governments as official political information outlets, making it one of the most popular and influential platforms in China. The integration of official Chinese government and media accounts on Weibo, combined with its original user-driven discussion basis, has created a multi-layer public sphere where diverse voices coexist (Rauchfleisch and Schäfer 2015), although the platform still follows national censorship policy. This multi-layer public sphere, combined with an open-content design in which content is visible to all users, positions Weibo as a microcosm of the broader Chinese public sphere. Consequently, Weibo serves as a representative case for studying political discourse on Chinese social media.

The comparability of X (Twitter) and Sina Weibo comes from their similar platform structures, user behaviors, and functions in amplifying public discourse and social movements. Twitter and Weibo are both privately owned microblogging platforms that allow users to share posts with short texts, images, videos, or links. The character limits on the two platforms (280 characters for X, originally 140 Chinese characters for Weibo) both encourage users to use concise language to emphasize their opinions. In terms of user behaviors, both Twitter and Weibo organize content around hashtags and keywords/key phrases rather than around posts by friends, as more private social networking sites do. This approach to organizing content and highlighting trends allows for the rapid aggregation of public discussion around specific topics. Furthermore, Twitter and Weibo have proven to be powerful tools for amplifying public opinion and have played a central role in organizing and promoting multiple social movements (Huang and Sun 2016; Huszár et al. 2022).

Based on the formulated research questions and hypotheses, this study uses data obtained from both original and shared posts on X (Twitter) and Sina Weibo pertaining to the Russia–Ukraine conflict. The X (Twitter) data were collected using the X (Twitter) Academic API, while the Weibo data were gathered using a Python-based web scraper. The data collection process involved capturing posts from both platforms that featured one or more keywords or hashtags related to the Russia–Ukraine conflict. Furthermore, the selected posts were limited to those published between February 27, 2022, and September 27, 2022.

To retrieve data from the Twitter Academic API, the research used a query-based Python script to search the X (Twitter) historical database for all English tweets containing one or more keywords from the keyword list. In total, 44,179 tweets were collected within the time range. The keyword list is built on the top 10 most popular Russia–Ukraine war hashtags and keywords identified in a previous research dataset (Chen and Ferrara 2023). The specific keywords include Ukraine, Russia, Putin, Standwithukraine, Kyiv, etc. (Table 1). All tweets were collected exclusively from users whose geolocation was set to the U.S.

Search hashtags and keywords for X (Twitter) and Weibo.

| X (Twitter) | Weibo |
|---|---|
| #ukraine | 乌克兰 (Ukraine) |
| #russia | 俄罗斯 (Russia) |
| #putin | 俄乌 (Russia–Ukraine) |
| #standwithukraine | 基辅 (Kyiv) |
| #ukrainerussiawar | 普京 (Putin) |
| #nato | 泽连斯基 (Zelensky) |
| #russian | 俄方 (Russian side) |
| #ukrainian | 北约 (NATO) |
| #kyiv | 乌方 (Ukrainian side) |
| #ukrainewar | 俄军 (Russian Army) |

The research used a Python-based web scraper to search for and download 41,806 Weibo posts. Because API permission was unavailable, the scraper’s search function was built on the Weibo website’s integrated search function rather than on access to the Weibo historical dataset. The search keywords were compiled from the top 10 most popular Russia–Ukraine war keywords in a prior research dataset (Fung and Ji 2022). The specific keywords include 乌克兰 (Ukraine), 俄罗斯 (Russia), 俄乌 (Russia–Ukraine), 基辅 (Kyiv), 普京 (Putin), etc. (Table 1). No geolocation limit was applied in the Weibo data collection.

All data collected from X (Twitter) and Weibo contain the content of the posts, the timestamps of initial posting, user engagement data (counts of likes, comments, and retweets), and the account name and follower count of the post’s author.
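The collection criteria described above (keyword match plus a fixed date window) can be sketched as a simple filter. This is a minimal illustration, not the authors' script: the `Post` record, its field names, the `in_scope` helper, and the use of case-insensitive substring matching are all assumptions, and only a few of the Table 1 terms are listed.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Post:
    """Hypothetical record mirroring the fields listed in the text."""
    text: str
    posted_on: date
    likes: int
    comments: int
    reposts: int
    account_name: str
    followers: int


# A subset of the search terms named in the text; the full lists are in Table 1.
X_KEYWORDS = ["ukraine", "russia", "putin", "standwithukraine", "kyiv"]
WEIBO_KEYWORDS = ["乌克兰", "俄罗斯", "俄乌", "基辅", "普京"]

# Collection window stated in the text.
START, END = date(2022, 2, 27), date(2022, 9, 27)


def in_scope(post, keywords):
    """True if the post falls inside the window and mentions any keyword.

    Substring matching is an assumption; it also matches hashtags such as
    "#Ukraine" because the text is lowercased first.
    """
    text = post.text.lower()
    in_window = START <= post.posted_on <= END
    return in_window and any(k in text for k in keywords)
```

In this sketch a post dated outside the window is excluded even when it mentions a keyword, which mirrors the date restriction described in the text.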

3.2 Measurements

3.2.1 Online incivility

Following Stryker et al.’s (2016) conceptualization of utterance incivility and discursive incivility, this research operationalizes incivility by combining two dimensions: linguistic-level utterance incivility and political- and behavioral-level discursive incivility.

Linguistic-level utterance incivility refers to all kinds of swearing or foul language and can be identified using a set of impolite keywords (Lee et al. 2019). This research distinguishes the level of utterance incivility of each post using the utterance incivility score. The utterance incivility score of each post was calculated as the ratio of the number of impolite keywords to the total number of words in the post. The calculation is shown below.

$$\text{utterance incivility score} = \frac{\text{number of impolite keywords in the post}}{\text{total number of words in the post}}$$

The utterance incivility score yields an index value ranging from 0 to 1, where 0 indicates that no impolite words were detected in the post and 1 indicates that the post is composed entirely of impolite terms. The impolite words were drawn from online sources and cover three categories: name-calling, vulgarity, and demonization of political entities. The English impolite word list contains 4,163 unique words or terms, while its Chinese counterpart comprises 10,347 unique words and terms. Examples of utterance incivility scores for X (Twitter) and Weibo are shown in Table 2.
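The ratio described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the regular-expression tokenizer, the function names, and the two-word toy lexicon are placeholders rather than the study's tokenizer or word lists, and multi-word lexicon terms are not handled.

```python
import re


def tokenize_english(text):
    """Simplistic tokenizer assumed for illustration (letters and apostrophes)."""
    return re.findall(r"[A-Za-z']+", text)


def utterance_incivility_score(tokens, lexicon):
    """Share of tokens that appear in the impolite-word lexicon (0.0 to 1.0)."""
    if not tokens:
        return 0.0
    hits = sum(1 for tok in tokens if tok.lower() in lexicon)
    return hits / len(tokens)


# Placeholder entries, not taken from the study's lexicon.
toy_lexicon = {"darn", "jerk"}
tokens = tokenize_english("What a darn mess this is")
score = utterance_incivility_score(tokens, toy_lexicon)  # 1 hit out of 6 tokens
```

Because the denominator is the post length, longer posts with the same number of lexicon hits receive lower scores, which matters when comparing platforms whose typical post lengths differ.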

Examples of utterance incivility posts on Weibo and X (Twitter).

| Levels of utterance incivility | X (Twitter) | Weibo |
|---|---|---|
| High (utterance incivility score ≥ 0.1) | Shit ass #Putin Hey #fsb #Russia go fuck yourselves! #putin sucks | 拜登还怪俄乌冲突, 这不是你们美国这些王八羔子一手怂恿乌克兰人惹恼俄罗斯人才发起的战争嘛? 能怪谁? 怪你们美国没有一个好总统和好内阁, 这些事情只能由美国人民自己埋单, 而你们这些无耻政客, 终将遭到报应。种下的恶因, 必结恶果偿还于你们这些无耻政客 |
| Low (0.1 > utterance incivility score > 0) | Do you see now, how much the world has turned it’s Back On #Ukraine ??? You will remember this, never forget; Now is the time to kill Russians. What monster does this? | 乌军士兵发视频抱怨战况严峻”他们会说:他们用什么射击你? 除了船上的, 其他一切都在开火, 艹。我快要完蛋了。xx, 第三天了, 该死。这是晚上7点。他们早上5点开始。休息时间有2分钟。他们甚至不让你抬头环顾四周。”在顿巴斯的另一名不幸的乌克兰武装部队士兵展示了与俄罗斯军队作战的意义。这与他们8年来一直告诉全世界的情况有些不同。 |
| None (utterance incivility score = 0) | It’s not #Putin’s war, folks. The whole society in #Russia is full of hate and self supremacist. It’s rotten down to the roots. Nothing to fix it other than to cut all ties and build walls and self defense to keep them out. | #俄罗斯##乌克兰##战争# 4月15日俄乌战争双方态势图。与前一天比较没有大的变化, 不过乌军对科尔松的攻击取得一些进展, 更加逼近科尔松市区。 |

To identify discursive incivility, that is, posts containing behaviors that prevent discussion, this study applies toxicity detection through the Perspective API. Developed by Jigsaw and Google, the Perspective API uses a Convolutional Neural Network (CNN) model trained to identify toxic comments, defined by the API development team as a “rude, disrespectful, or unreasonable comment that is likely to make someone leave a discussion”. Based on this classification, the API returns a toxicity score ranging from 0 to 1 for each piece of text. A higher score indicates a higher probability that a reader will perceive the text as toxic. According to Jiang and Vosoughi (2020), highly toxic tweets usually receive a toxicity score of over 0.8, while tweets that effectively mask slurs and attacks receive scores between 0.3 and 0.5 through the Perspective API. Examples of discursive incivility scores for X (Twitter) and Weibo are shown in Table 3.

Examples of discursive incivility on Weibo and X (Twitter).

| Levels of discursive incivility | X (Twitter) | Weibo |
|---|---|---|
| High (toxicity score ≥ 0.8) | Sick Russian bastards! this shit so sad man. #Ukraine | 圣母婊们看清楚, 乌俄战争里别的国家给乌克兰送钱送武器你美爹屁都不放一个, 天天掐着我们中国明里暗里套话企图找到我们支持俄罗斯的言论, 我们中国是一个独立国家!!!独立国家!!!凭什么被人这样掐, 收了美爹钱的抓紧时间用钱给自己换身白皮, 不然警告你俄罗斯倒下以后下一个说不定就是我们, 而你美爹可不在乎你黄皮底下装的是不是美利坚自由之心, 上赶着给人舔地的垃圾玩意儿, 真白瞎我中国给你的国籍! |
| Low (0.8 > toxicity score ≥ 0.5) | Now …. I am down to support #Ukraine, but to ignore this level of money for America’s poor, especially US Veterans is absurd! | 俄乌战争让人联想到七九年中国同越南那场战争。中国为了教训吃里扒外忘心负义小覇越南。以雷霆之势直打到河内边上。解气, 。俄罗斯也可以照此办理。非得把乌克兰打趴下不可。换一个明白事理的人管理乌克兰。 |
| None (toxicity score < 0.5) | President Biden has nominated US diplomat Bridget Brink as Ambassador to Ukraine | 城市本是温馨家园, 如今只剩断壁残垣。老奶奶在满地瓦砾间缓慢移动, 俄军士兵搀扶帮忙, 另外一名俄军士兵也放下枪, 前来共同帮助老奶奶 … … 战争无情人有情, 有血有肉的士兵才是无敌的存在。#俄罗斯乌克兰战况##俄乌局势最新进展# |

Given that both scores possess a range of 0–1, the study assigned a weight of 0.5 to each, resulting in an incivility score comprised of 50 % toxicity score and 50 % utterance incivility score. The aggregate incivility score maintains a range from 0 to 1, with higher scores indicative of elevated levels of online incivility.
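The equal weighting can be written out as a short function. The sketch below is illustrative only: the function names and the input validation are assumptions, and `toxicity_level` simply encodes the bands shown in Table 3.

```python
def incivility_score(toxicity, utterance, w_toxicity=0.5):
    """Weighted mean of two scores that each lie in [0, 1].

    With the default weight of 0.5 this is the 50/50 combination
    described in the text; the result also lies in [0, 1].
    """
    for name, value in (("toxicity", toxicity), ("utterance", utterance)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return w_toxicity * toxicity + (1.0 - w_toxicity) * utterance


def toxicity_level(toxicity):
    """Bands used in Table 3 for the discursive (toxicity) score."""
    if toxicity >= 0.8:
        return "High"
    if toxicity >= 0.5:
        return "Low"
    return "None"
```

For example, a post with a toxicity score of 0.8 and an utterance incivility score of 0.1 would receive an aggregate score of 0.5 × 0.8 + 0.5 × 0.1 = 0.45. Since utterance scores are typically far smaller than toxicity scores, the aggregate is driven mostly by the toxicity component.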

3.2.2 Anonymity

According to the definition set forth by Peddinti et al. (2017), anonymity in social media is characterized by user accounts that do not display an actual human first or last name. As a result, the presence of a human name within an account functions as a significant indicator of whether the account is anonymous. This study used spaCy’s default trained pipeline for named entity recognition to detect human names in the usernames of both X (Twitter) and Weibo users. The spaCy named entity recognizer pipeline[1] can identify named entities in English and Chinese text and is built on a CNN model, which achieved the highest recognition accuracy among pre-built libraries on the named entity recognition task (Shelar et al. 2020). The named entity recognition algorithm produces two values when detecting a named entity in the text: the entity label (e.g., companies, locations, people’s names) and the detected entity text. If any portion of the username is classified as a person’s name, the study assigns a label of 1 (Not Anonymous); conversely, usernames containing no person’s name are labeled as 0 (Anonymous). In the X (Twitter) dataset, 19,774 (44.76 %) of 44,179 posts are from anonymous users and 24,405 (55.24 %) posts are from non-anonymous users. By comparison, in the Weibo dataset, 32,289 (77.25 %) of 41,798 posts are from anonymous users and 9,509 (22.75 %) posts are from non-anonymous users. It should be noted that, even though some individuals may include celebrities’ names in their usernames, this research categorizes all user accounts featuring any person’s name in their username as non-anonymous accounts.
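The labeling rule can be sketched as follows. This is a hedged illustration rather than the study's code: `anonymity_label` and `spacy_entities` are hypothetical helper names, the model name `en_core_web_sm` is an assumption (the text only says "default trained pipeline"), and the `PERSON` label is the one used by spaCy's standard English and Chinese pipelines.

```python
def anonymity_label(username, find_entities):
    """Return 1 (Not Anonymous) if any PERSON entity is detected, else 0 (Anonymous).

    `find_entities` is any callable mapping a string to an iterable of
    (entity_text, entity_label) pairs, so the rule can be tested without
    loading a model.
    """
    entities = find_entities(username)
    return int(any(label == "PERSON" for _, label in entities))


def spacy_entities(model_name="en_core_web_sm"):
    """Build a `find_entities` callable from a spaCy pipeline.

    spaCy is imported lazily; both the package and the named model
    (an assumed choice) must be installed for this to work.
    """
    import spacy

    nlp = spacy.load(model_name)
    return lambda text: [(ent.text, ent.label_) for ent in nlp(text).ents]
```

A typical use would be `anonymity_label(name, spacy_entities())` for English usernames, with a Chinese pipeline name passed for Weibo. As the text notes, this rule also marks accounts that borrow a celebrity's name as non-anonymous.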

3.2.3 Conflict frames

To classify whether a post contains a conflict frame, this research applied BERT-based models fine-tuned on a sample of 500 manually coded data points (1 % ± 0.5 %) from both the X (Twitter) and Weibo datasets. The manual codebook of conflict framing consists of four sub-variables: (1) two or more sides of a problem or issue, (2) any conflict or disagreement, (3) a personal attack between two or more actors, or (4) an actor’s reproaching or blaming another (Schuck et al. 2013). Each post in the 500-post dataset was manually labeled with a binary value of 0 (no conflict frame) or 1 (contains a conflict frame) based on the presence of one or more of the four conflict frame categories.

The standard data preprocessing steps encompassed tokenization, lowercasing, and the removal of stop words, links, and special characters. For the Weibo dataset, word segmentation and text summarization were employed as additional preprocessing steps. All Weibo posts were segmented into word tokens using Jieba’s accurate mode and subsequently joined into sentences with spaces. Weibo posts exceeding the 512-token maximum of the BERT model were condensed into 1–5 sentences with the TextRank4zh algorithm, keeping the token count below 512. Following preprocessing, the dataset was partitioned into training and validation sets at a 60:40 ratio.
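The generic preprocessing and the 60:40 partition can be sketched with the standard library alone. This is an illustration under assumptions: the regular expressions, the `preprocess` and `split_60_40` names, the fixed shuffle seed, and the choice to strip the `#` symbol are not taken from the study, and the Weibo-specific Jieba segmentation and TextRank4zh summarization steps are omitted.

```python
import random
import re

URL_RE = re.compile(r"https?://\S+")
NON_WORD_RE = re.compile(r"[^\w\s]")  # punctuation and symbols (Unicode-aware)


def preprocess(text, stop_words=frozenset()):
    """Lowercase, remove links and special characters, tokenize, drop stop words."""
    text = URL_RE.sub(" ", text.lower())
    text = NON_WORD_RE.sub(" ", text)
    return [tok for tok in text.split() if tok not in stop_words]


def split_60_40(items, seed=0):
    """Shuffle a copy of `items` and split it 60:40 into (train, validation)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 6 // 10  # integer arithmetic avoids float rounding
    return shuffled[:cut], shuffled[cut:]
```

Applied to 500 coded posts, `split_60_40` would yield 300 training and 200 validation examples.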

For conflict frame detection in the X (Twitter) dataset, this research employs the BERT base model with an additional single linear layer for binary classification on top of the pretrained BERT model. The model was trained using the AdamW optimizer with a learning rate of 3e-6 and a batch size of 16, with training scheduled for 10 epochs. Training was terminated early at the fourth epoch, when the validation loss started to increase, to prevent overfitting. Accuracy and F1 score were chosen as evaluation metrics to assess the performance of the BERT model and compare it with a traditional SVM method. The fine-tuned BERT model achieved an accuracy of 70.0 % and an F1 score of 0.709 on the validation dataset. By comparison, the traditional SVM classifier achieved an accuracy of 63.3 % and an F1 score of 0.658 with the same training and validation dataset. The fine-tuned BERT model was then applied to detect conflict frames in the full X (Twitter) dataset.

For the Weibo dataset, the “BERT-wwm-ext Chinese” model[2] (Cui et al. 2021) pretrained by the Joint Laboratory of HIT and iFLYTEK Research (HFL) was used to detect conflict frames. This model employs the same architecture as the BERT-base model, comprising 12 layers, 768 hidden units, and 12 attention heads, and was pretrained on 5.4 billion words of Chinese text from Chinese Wikipedia, other online encyclopedias, and internet news articles. In this research, the pretrained model was also fine-tuned by adding a single linear layer for binary classification and was trained using the AdamW optimizer with a learning rate of 1e-6 and a batch size of 16, with training scheduled for 10 epochs. Training was stopped early at the fifth epoch, when the validation loss started to increase, to prevent overfitting. The fine-tuned BERT model achieved an accuracy of 69.5 % and an F1 score of 0.788 on the validation dataset, while the SVM classifier achieved an accuracy of 61.5 % and an F1 score of 0.724 using the same training and validation dataset. The fine-tuned model was subsequently applied to detect conflict frames in the full Weibo dataset.
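The early-stopping rule and the two evaluation metrics can be made concrete with a small sketch. It is illustrative only: the function names are hypothetical, the loss values in the usage note are invented, and the reading that training stops at the first epoch whose validation loss exceeds the previous epoch's is an assumption about how "started to increase" was applied.

```python
def early_stopping_epoch(val_losses):
    """1-based epoch at which training stops.

    Stops at the first epoch whose validation loss is higher than the
    previous epoch's; returns the last epoch if the loss never increases.
    """
    for i in range(1, len(val_losses)):
        if val_losses[i] > val_losses[i - 1]:
            return i + 1
    return len(val_losses)


def accuracy_and_f1(y_true, y_pred):
    """Accuracy and F1 for the positive class (1 = contains a conflict frame)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1
```

With invented validation losses of 0.70, 0.66, 0.64, and 0.65, `early_stopping_epoch` stops at epoch 4. Because F1 ignores true negatives, it can exceed accuracy when the positive class is common, which is consistent with the Weibo results reported above.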

3.2.4 Negative sentiment

Following the classification method in Song and Wu (2018), the sentiment of online comments can be divided into three valence-based categories: positive, neutral, and negative. This research used the pre-trained X (Twitter) sentiment classifier developed by Cardiff NLP (Barbieri et al. 2020) to classify the sentiment of the English texts. The classifier is based on the RoBERTa model pretrained on a dataset of 58 million tweets and fine-tuned on the TweetEval sentiment analysis dataset. The Cardiff NLP classifier returns three probability scores (positive, neutral, and negative), each ranging from 0 to 1, indicating the probability that the text belongs to each of the three sentiments. The Erlangshen sentiment classifier (Wang et al. 2022), which is based on the RoBERTa model, was used for sentiment detection of the Chinese texts. The model was trained with 110 million social media data and fine-tuned with 227,347 sentiment samples. The output of the model is two variables, each ranging from 0 to 1, indicating the probability that the text belongs to positive or negative sentiment. Both sentiment classifiers produce the probability of a text being classified as negative, which was used as the negative sentiment score in this research.
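Both classifiers end in a softmax over sentiment classes, so the negative sentiment score is simply the probability assigned to the negative class. The sketch below shows that extraction step on raw logits; the label orders in `ENGLISH_LABELS` and `CHINESE_LABELS` are assumptions that should be checked against each model's configuration, and the function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def negative_sentiment_score(logits, labels):
    """Probability mass assigned to the 'negative' label."""
    probs = softmax(logits)
    return probs[labels.index("negative")]


# Assumed label orders for the three-class and two-class classifiers.
ENGLISH_LABELS = ["negative", "neutral", "positive"]
CHINESE_LABELS = ["negative", "positive"]
```

One consequence of the differing class sets is that the two scores are not on strictly equivalent scales: in a two-class model, probability mass that a three-class model would assign to "neutral" must be split between positive and negative.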

3.3 Data analysis

Upon completion of data collection, measurements for each variable were computed via computer-assisted content analysis. To address RQ1, descriptive statistics were used to summarize and compare incivility on X (Twitter) and Weibo and to examine whether the degree of online incivility differs between social media users in China and in the United States when discussing the Russia–Ukraine war. Hypotheses 1 through 4 were tested using Hayes’ PROCESS macro model 1 to investigate the moderation relationships between variables.
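For orientation, a simple moderation model of the kind estimated by PROCESS model 1 is conventionally written as a regression with an interaction term. The symbols below are notation introduced here for illustration rather than the authors' own: $Y$ is online incivility, $X$ is the predictor (conflict frame or negative sentiment), and $W$ is the moderator (anonymity).

```latex
\begin{aligned}
Y &= b_0 + b_1 X + b_2 W + b_3 (X \times W) + \varepsilon \\
\theta_{X \to Y}(W) &= b_1 + b_3 W
\end{aligned}
```

Under this formulation, $b_3$ is the interaction coefficient: a non-zero $b_3$ means the conditional effect $\theta_{X \to Y}$ of the predictor on incivility changes with the moderator.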

4 Results

4.1 Descriptive statistics

After preprocessing, 44,179 tweets and 41,806 Weibo posts were used in the analysis. In response to RQ1, the descriptive analysis of the incivility score reveals a difference in the level of online incivility between the two platforms in the discussion of the Russia–Ukraine war, with only mild fluctuations over the eight months. The average incivility score for X (Twitter) over the eight-month period was 0.115 (SD = 0.101), with the highest monthly score of 0.120 in July and the lowest of 0.111 in September. Weibo’s incivility score averaged 0.089 (SD = 0.052), with the highest monthly score of 0.095 in February and the lowest of 0.085 in March (Figure 2). A two-sample independent t-test comparing the mean incivility scores of X and Weibo (t = 44.843, p < 0.001) indicates that the difference between the two platforms is statistically significant.
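A two-sample t statistic of this kind can be approximated from the summary statistics alone. The sketch below assumes Welch's unequal-variance form, which may not be the variant the authors used, and it takes the rounded means and standard deviations reported above as inputs, so its result need not match the reported t value exactly.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's two-sample t statistic computed from summary statistics."""
    standard_error = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    return (mean1 - mean2) / standard_error


# Rounded summary statistics as reported in the text above.
t_incivility = welch_t(0.115, 0.101, 44_179, 0.089, 0.052, 41_806)
print(round(t_incivility, 1))
```

With samples this large, even a small mean difference yields a very large t statistic, so the size of the difference (here 0.026 on a 0 to 1 scale) is worth reading alongside the p-value.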

Figure 2: Average incivility score of X (Twitter) and Weibo posts from Feb. to Sep. 2022.

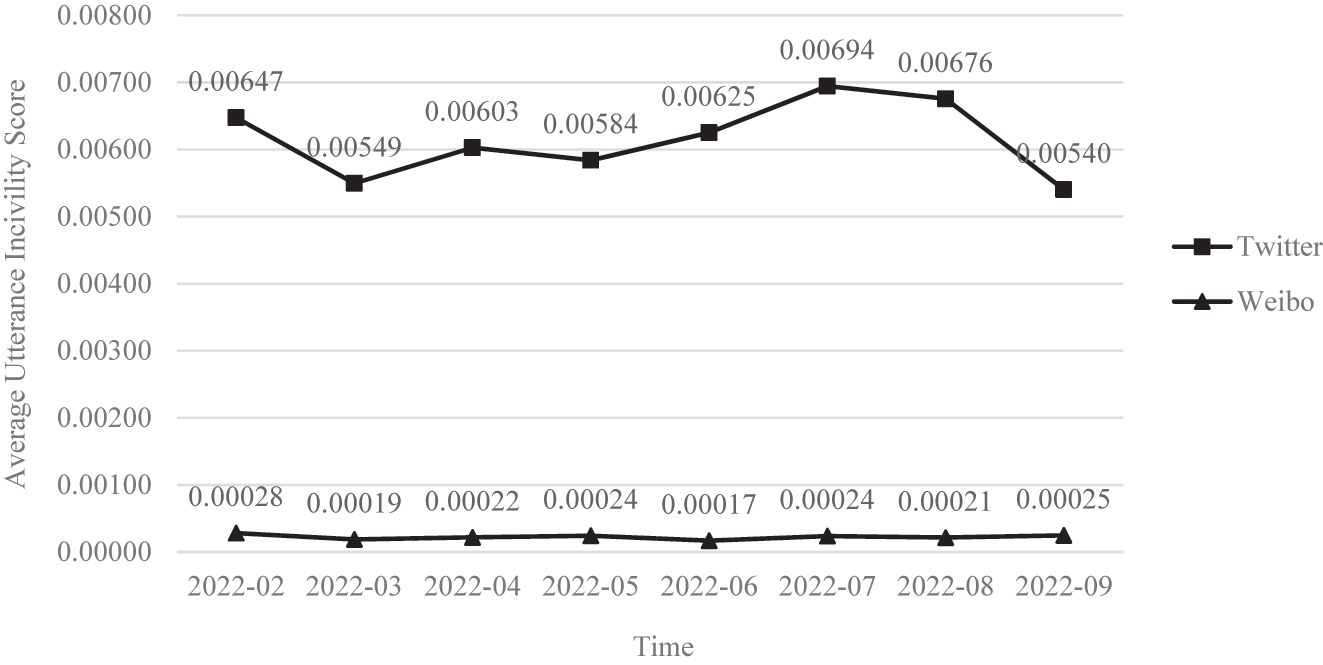

For utterance incivility, 4,880 X (Twitter) posts (11.1 % of 44,180) included words from the designated incivility lexicon. The utterance incivility score on X (Twitter) (M = 0.006, SD = 0.023) peaked in July at 0.00694 and dipped to 0.00540 in September. On Weibo, 1,313 posts (3.1 % of 41,798) featured incivility lexicon terms. Weibo’s average utterance incivility score was 0.0002 (SD = 0.002) over the same period, with a maximum of 0.00028 in February and a minimum of 0.00017 in June (Figure 3). However, it is also notable that Weibo posts (mean length = 223.38, SD = 235.08) are on average significantly longer than X (Twitter) posts (mean length = 25.13, SD = 13.33), which potentially contributes to the large discrepancy between the utterance scores of the two datasets. A two-sample independent t-test comparing the mean utterance incivility scores of X and Weibo (t = 48.582, p < 0.001) indicates that the difference between the two platforms is statistically significant.

Figure 3: Average utterance incivility score of X (Twitter) and Weibo posts from Feb. to Sep. 2022.

For discursive incivility, 4,183 tweets (9.5 %) have a score above 0.5, indicating toxic content. The average score across the eight months was 0.224 (SD = 0.190), highest in July (0.234) and lowest in September (0.217). Compared with X (Twitter), Weibo has fewer posts with a toxicity score over 0.5, at 348 (0.3 %), and an overall toxicity average of 0.177 (SD = 0.103), peaking in February (0.189) and bottoming out in March (0.169) (Figure 4). A two-sample independent t-test comparing the mean discursive incivility scores of X and Weibo (t = 41.794, p < 0.001) indicates that the difference between the two platforms is statistically significant.

Figure 4: Average toxicity score of X (Twitter) and Weibo posts from Feb. to Sep. 2022.

The research then counted the top 20 keywords of the posts with an online incivility score of over 0.3 for both X (Twitter) (N = 2,657) and Weibo (N = 97) datasets. The keyword list only contains nouns and verbs to focus on the most impactful terms that contribute to the sentiment and context of the discussions.
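The keyword-counting step can be sketched as follows. The example assumes posts have already been tokenized and part-of-speech tagged by some upstream tool; the tag names, the data layout, and the toy posts are hypothetical and are not drawn from the study's data.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# (incivility score, [(token, part-of-speech tag), ...])
TaggedPost = Tuple[float, List[Tuple[str, str]]]


def top_keywords(posts: Iterable[TaggedPost], threshold: float = 0.3, k: int = 20):
    """Count nouns and verbs in posts whose incivility score exceeds the threshold."""
    counts = Counter()
    for score, tagged_tokens in posts:
        if score <= threshold:
            continue  # keep only the uncivil subset
        for token, pos in tagged_tokens:
            if pos in {"NOUN", "VERB"}:
                counts[token.lower()] += 1
    return counts.most_common(k)


# Hypothetical, already-tagged toy posts with simplified tags.
toy_posts = [
    (0.45, [("Putin", "NOUN"), ("must", "AUX"), ("stop", "VERB"), ("war", "NOUN")]),
    (0.35, [("Stop", "VERB"), ("Putin", "NOUN")]),
    (0.10, [("Peace", "NOUN"), ("talks", "NOUN")]),
]
keywords = top_keywords(toy_posts)
```

Lower-casing tokens merges variants such as "Stop" and "stop"; for Chinese text a word-segmentation step would be needed before counting.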

The word frequency analysis (Table 4) reveals similar thematic focuses and emotional intensities between the two platforms. On X (Twitter), keywords such as “Putin” (1,071 mentions), “Russia” (609 mentions), “Ukraine” (540 mentions), and “war” (273 mentions) are prevalent, highlighting the central figures and geopolitical entities involved in the conflict. On Weibo, terms like “乌克兰” (Ukraine, 260 mentions), “俄罗斯/毛子” (Russia, 263 mentions), and “俄乌战争/战争” (Russia–Ukraine War/war, 162 mentions) dominate the conversations, indicating a similar focus on the geopolitical conflict.

Table 4: Top 20 keywords in the uncivil posts (incivility score > 0.3).

| X (formerly Twitter) | Frequency | Weibo | Frequency |
|---|---|---|---|
| Putin | 1,071 | 乌克兰 (Ukraine) | 260 |
| Russia | 609 | 俄罗斯/毛子 (Russia) | 263 |
| Ukraine | 540 | 俄乌战争/战争 (R–U War/war) | 162 |
| Russian | 473 | 美国 (the United States) | 153 |
| F*ck | 410 | 中国 (China) | 110 |
| People | 337 | 国家 (Nation) | 55 |
| Go | 302 | 北约 (NATO) | 45 |
| Have | 284 | 世界 (World) | 42 |
| War | 273 | 局势 (State of affairs) | 40 |
| Sh*t | 237 | 西方 (the West) | 36 |
| Kill | 227 | 狗/走狗 (Dog/lackey) | 36 |
| Standwithukraine | 226 | 无人机 (Drone) | 27 |
| Trump | 201 | 欧洲 (Europe) | 27 |
| A*s | 191 | 领土 (Territories) | 24 |
| Say | 167 | 总统 (President) | 23 |
| World | 166 | 顿巴斯 (Donbas) | 23 |
| Stop | 152 | 普京 (Putin) | 21 |
| Need | 151 | 最新进展 (Latest update) | 20 |
| Ukrainerussiawar | 151 | 历史 (History) | 19 |
| Country | 148 | 和平 (Peace) | 19 |

For the uncivil words, the frequent use of profanity on X such as “f*ck” (410 mentions) and “sh*t” (237 mentions) suggests a high level of emotional expression and anger among users. The frequent occurrence of terms on Weibo like “狗/走狗” (dog/lackey, 36 mentions) also indicates the presence of derogatory language and strong disdain on the Chinese platform.

4.2 Relationship of negative sentiment, conflict frame, and online incivility

Hypotheses H1–H4 postulated that the negative sentiment and conflict frame present in social media posts would influence the level of online incivility, and that these effects would be moderated by anonymity. We used PROCESS macro model 1 to test the moderation model and evaluate the effects of the two independent variables, negative sentiment and conflict frame, on online incivility.
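To make the estimation step concrete, the sketch below fits a regression with an interaction term by ordinary least squares on synthetic data. It is an illustration of the general technique and not a reimplementation of the PROCESS macro: the coefficients, the data, and the helper names are all made up, and no standard errors or confidence intervals are computed.

```python
from typing import List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - tail) / m[row][row]
    return x


def fit_moderation(x: Sequence[float], w: Sequence[float], y: Sequence[float]) -> List[float]:
    """OLS fit of y = b0 + b1*x + b2*w + b3*x*w via the normal equations."""
    design = [[1.0, xi, wi, xi * wi] for xi, wi in zip(x, w)]
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(4)] for i in range(4)]
    xty = [sum(r[i] * yi for r, yi in zip(design, y)) for i in range(4)]
    return solve_linear_system(xtx, xty)


# Synthetic, noise-free data generated from known coefficients (illustrative only).
true_b = [0.10, 0.05, 0.02, 0.01]
xs = [v / 4 for v in range(5)] * 2   # predictor values 0.0 to 1.0, repeated
ws = [0.0] * 5 + [1.0] * 5           # binary moderator
ys = [true_b[0] + true_b[1] * xi + true_b[2] * wi + true_b[3] * xi * wi
      for xi, wi in zip(xs, ws)]

b0, b1, b2, b3 = fit_moderation(xs, ws, ys)
slope_when_w0 = b1        # effect of x when the moderator is 0
slope_when_w1 = b1 + b3   # effect of x when the moderator is 1
```

The two slopes show how the interaction coefficient is read: the predictor's effect differs between the moderator's two levels by exactly `b3`.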

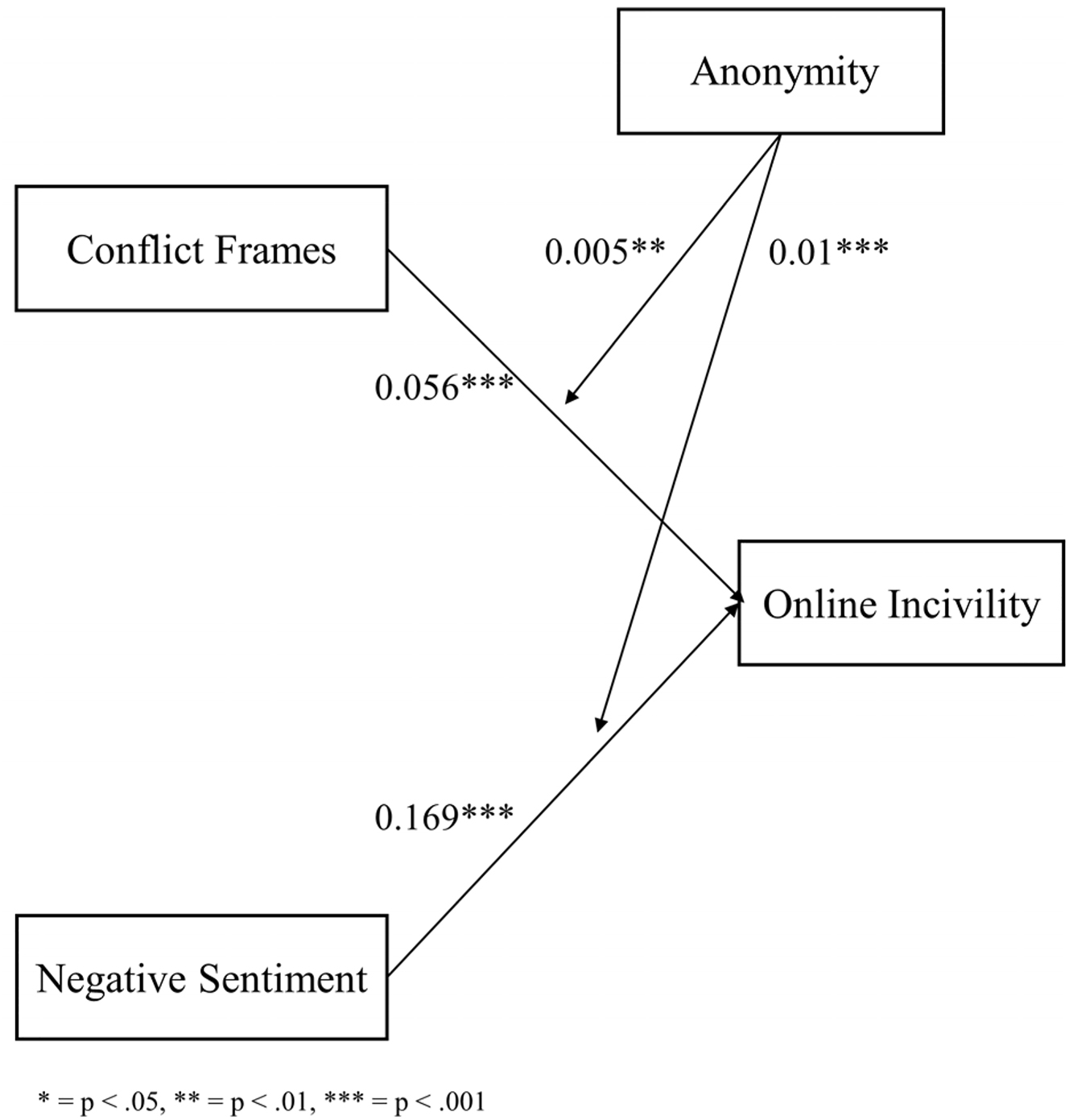

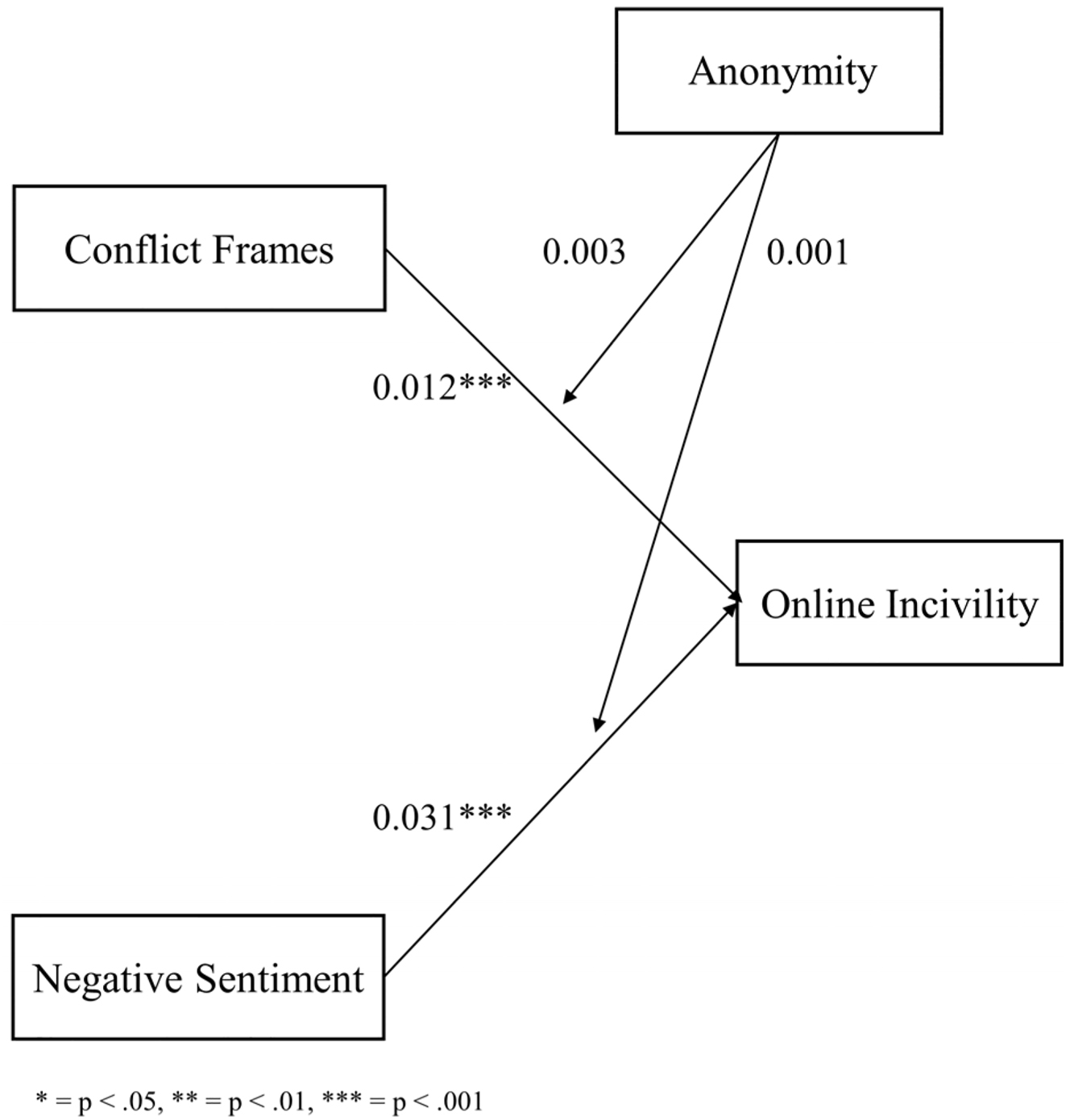

H1 proposed that the conflict frames in a post act as a positive predictor for the online incivility. This hypothesis was corroborated in both X (Twitter) (b = 0.056, SE = 0.001, t = 60.557, p < 0.001, 95 % CI [0.054, 0.058]) and Weibo (b = 0.012, SE = 0.001, t = 19.184, p < 0.001, 95 % CI [0.011, 0.013]) datasets. The empirical findings affirm a significant positive direct association between the presence of conflict frames and the extent of incivility.

H2 posited that the negative sentiment inherent in social media posts serves as a positive predictor of online incivility. This hypothesis was supported in both the X (Twitter) (b = 0.169, SE = 0.001, t = 153.876, p < 0.001, 95 % CI [0.166, 0.170]) and Weibo (b = 0.031, SE = 0.001, t = 36.290, p < 0.001, 95 % CI [0.029, 0.033]) datasets. The results reveal a statistically significant positive direct association between negative sentiment and the extent of incivility.

H3 proposed that anonymity moderates the positive effect of conflict frame on online incivility such that the effect is positive and stronger when the username is anonymous. This hypothesis was rejected in both the X (Twitter) and Weibo datasets.

For the X (Twitter) data, H3 was rejected. The interaction between conflict frame and anonymity significantly influenced online incivility (b = 0.005, p = 0.005), but in the opposite direction to that hypothesized: the effect of conflict frame on incivility was more pronounced in non-anonymous contexts. For the Weibo data, H3 was also rejected. The interaction between conflict frame and anonymity in the regression model was not statistically significant (b = 0.003, p = 0.112).

H4 proposed that anonymity moderates the positive effect of negative sentiment on online incivility such that the effect is positive and stronger when the username is anonymous. This hypothesis was also rejected in both the X (Twitter) and Weibo datasets.

For the X (Twitter) data, H4 was rejected. The interaction between negative sentiment and anonymity significantly influenced online incivility (b = 0.010, p < 0.001), but the effect of negative sentiment on incivility was more pronounced in non-anonymous contexts. For the Weibo data, H4 was also rejected. The interaction between negative sentiment and anonymity in the regression model was not statistically significant (b = 0.001, p = 0.746), which implies that the slope of the relationship between negative sentiment and online incivility does not differ significantly with the level of anonymity (Figures 5 and 6).

Figure 5: Hypothesis testing results of the Twitter dataset.

Figure 6: Hypothesis testing results of the Weibo dataset.

5 Discussion and limitation

This study examines online incivility related to the Russia–Ukraine war on American and Chinese social media. Although the semantic themes of uncivil posts are similar across the two countries, the levels of incivility in X (Twitter) and Weibo discussions diverge over the same period. A possible explanation for the higher online incivility score on X (Twitter) is that X (Twitter) has a looser content censorship mechanism and a more democratic environment for online expression (Kim et al. 2021), especially in discussions of sharply contested topics such as war. Furthermore, this result reflects cultural differences in online communication norms and expectations, which aligns with Strachan and Wolf’s (2012) finding that incivility depends on social norms of appropriate behavior that shift between cultures.

This study also provides further insight into the formation of online incivility across different cultural and national contexts. Two predictors of online incivility, negative sentiment and conflict frame, are examined in this research. The results show that both serve as positive predictors of online incivility on X (Twitter) and Weibo: the level of online incivility increases when a post expresses more negative sentiment or adopts a conflict frame. This finding aligns with previous research suggesting that negative sentiment with high arousal (Song and Wu 2018; Stephens and Zile 2017) leads to uncivil behavior both online and offline. The result also supports the hypothesis that the adoption of a conflict frame leads to a higher level of online incivility.

However, the predictive effects of the two predictors are not identical. Negative sentiment demonstrates a stronger predictive effect than conflict framing, suggesting that negative sentiment is more influential in forecasting online incivility. This could be explained by the fact that negative sentiment, including emotions such as anger, disgust, or frustration, is a direct emotional response that can be immediately and intuitively perceived by the reader. These strong emotions may elicit similar responses in the recipient, creating a foundation for the growth of incivility. On the other hand, although conflict framing can contribute to incivility, it is more dependent on the context and interpretation of the reader. It requires the reader to complete a cognitive process of identifying a special package of conflict-related keywords in the post. This means that its influence on incivility might be more indirect and could be moderated by other factors, such as the readers’ education level or their stance on the issue (Borah 2013). It’s also possible that negative sentiment is simply more prevalent in online discourse than conflict framing, thereby having more opportunities to incite incivility. Additionally, the coding table used in this research, which is commonly used in the coding of news articles instead of social media posts, may not capture the conflict framing on social media posts accurately.

It is also worth noting that the predictive effects of negative sentiment and conflict frames differ across countries and social media platforms. Both negative sentiment and conflict frame showed higher predictive power on X (Twitter) than on Weibo. This result could be explained by the differences between the media systems of the U.S. and China. Previous studies argue that U.S. media are more likely to adopt conflict frames, delivering facts through treacherous questions and negative criticism to gain public awareness. Such negativity in media frames would lead reporters to include uncivil content in their reporting. In contrast, Chinese government-oriented media prefer to deliver positive information to convey the government’s message, which would lead reporters to include less uncivil content. In coverage of the R–U war, these reporting modes carry over into social media posts.

As for the proposed moderator, anonymity, the study shows that it is an important factor when examining the relationships among negative sentiment, conflict framing, and online incivility. However, contrary to existing studies that suggest anonymous users are more likely to generate uncivil content (Suler 2004; Wang 2020), the results show that the associations between negative sentiment and online incivility, as well as between conflict framing and online incivility, are stronger when the posts are published by non-anonymous users. This effect is validated in both the X (Twitter) and Weibo datasets, indicating that this effect is similar across different national and cultural contexts. This could be explained by the motivation to seek social approval and by the gratification derived from generating and receiving social group identification, especially when discussing events with a clear political leaning. Under such circumstances, incivility towards the opposing side might not be perceived as a violation of social norms but rather as an effective way of identifying people or groups with similar political stances. During this process, having a non-anonymous identity might help gain the trust of others.

Despite the valuable insights provided by this research, several limitations should be acknowledged. This study primarily focused on X (Twitter) and Weibo, which are just two of the many social media platforms in the United States and China. The findings may not be fully applicable to other platforms such as Facebook, Zhihu, or TikTok (Douyin), which have different user demographics, community norms, and censorship mechanisms, and thus, could have different formation mechanisms and effects regarding online incivility.

Similarly, this research examines X and Weibo as two comprehensive social media ecologies; however, different types of accounts (e.g., news media, government agencies, politicians, influencers, individuals) on these platforms may exhibit significantly different posting behaviors. Future studies would benefit from including a broader range of platforms in the analysis, while clearly distinguishing and conducting comparative analyses of the content produced by various account types.

Furthermore, while the data for this study were collected by searching the most popular keywords related to the conflict, it is important to note that the keywords used were not consistent across the two platforms. This inconsistency introduces the possibility of generating biased results, as different keywords may capture varying aspects of the discourse or exclude certain content, leading to potential discrepancies in the data collected from each platform.

Lastly, this study relied heavily on machine coding to analyze the large amount of social media data. While machine coding is efficient for the processing of large datasets, it may lack the precision of human coding, particularly in capturing the nuances of language and context in incivility. Although the machine coding techniques employed in this study include well-established models widely used in previous research, and the model we fine-tuned specifically for this study was validated by human reviewers, there remains an inherent limitation in the inability of machine coding to fully account for the complexity and subtleties of human communication. The use of different machine learning models may also introduce variability in the results, depending on the specific algorithms and training data used.

See Table 5.

Table 5: Differences between Chinese and U.S. media systems, adapted from Xue and Wang (2012), Otto et al. (2020), and Hallin and Mancini (2004).

| | Chinese news media system | U.S. news media system |
|---|---|---|
| Media system | State-controlled media | North Atlantic liberal media |
| Major funding sources | Government | Commercial activity |
| Reporting frame in large events | Responsibility & leader frames | Conflict frames |
| Risk control strategy | Delivers positive information to control the public perception of risks | Delivers facts through treacherous questions and negative criticism to keep public awareness |
| Reporting goal | Guiding public opinion, directing political work, and conveying the voice of the party and government | Gaining public attention and expanding market share and media discourse |

References

Anderson, Ashley A., Dominique Brossard, Dietram A. Scheufele, Michael A. Xenos & Peter Ladwig. 2014. The “nasty effect:” online incivility and risk perceptions of emerging technologies. Journal of Computer-Mediated Communication 19(3). 373–387. https://doi.org/10.1111/jcc4.12009.

Barbieri, Francesco, Jose Camacho-Collados, Leonardo Neves & Luis Espinosa-Anke. 2020. TweetEval: Unified benchmark and comparative evaluation for tweet classification. arXiv e-prints. arXiv:2010.12421. https://doi.org/10.48550/arXiv.2010.12421.

Bartholomé, Guus, Sophie Lecheler & Claes de Vreese. 2015. Manufacturing conflict? How journalists intervene in the conflict frame building process. The International Journal of Press/Politics 20(4). 438–457. https://doi.org/10.1177/1940161215595514.

Bartholomé, Guus, Sophie Lecheler & Claes de Vreese. 2018. Towards a typology of conflict frames. Journalism Studies 19(12). 1689–1711. https://doi.org/10.1080/1461670X.2017.1299033.

Borah, Porismita. 2013. Interactions of news frames and incivility in the political blogosphere: Examining perceptual outcomes. Political Communication 30(3). 456–473. https://doi.org/10.1080/10584609.2012.737426.

Boyd, Richard. 2006. The value of civility? Urban Studies 43(5–6). 863–878. https://doi.org/10.1080/00420980600676105.

Britzman, Kylee J. & Benjamin R. Kantack. 2019. Politics as usual? Perceptions of political incivility in the United States and United Kingdom. Journal of Political Science 48. 25.

Chen, Emily & Emilio Ferrara. 2023. Tweets in time of conflict: A public dataset tracking the Twitter discourse on the war between Ukraine and Russia. Proceedings of the International AAAI Conference on Web and Social Media 17(1). 1006–1013. https://doi.org/10.1609/icwsm.v17i1.22208.

Coe, Kevin, Kate Kenski & Stephen A. Rains. 2014. Online and uncivil? Patterns and determinants of incivility in newspaper website comments. Journal of Communication 64(4). 658–679. https://doi.org/10.1111/jcom.12104.

Cortina, Lilia M., Vicki J. Magley, Jill Hunter Williams & Regina Day Langhout. 2001. Incivility in the workplace: Incidence and impact. Journal of Occupational Health Psychology 6(1). 64–80. https://doi.org/10.1037/1076-8998.6.1.64.

Cui, Yiming, Wanxiang Che, Ting Liu, Bing Qin & Ziqing Yang. 2021. Pre-training with whole word masking for Chinese BERT. IEEE/ACM Transactions on Audio, Speech, and Language Processing 29. 3504–3514. https://doi.org/10.1109/TASLP.2021.3124365.

Esser, Frank, Sven Engesser, Jörg Matthes & Rosa Berganza. 2016. Negativity. Comparing political journalism. Oxfordshire: Routledge.

Flores, Madison, Megan Nair, Meredith Rasmussen & Emily Sydnor. 2021. Civility through the comparative lens: Challenges and achievements. Political incivility in the parliamentary, electoral and media arena. Oxfordshire: Routledge. https://doi.org/10.4324/9781003029205-1.

Fung, Yi R. & Heng Ji. 2022. A Weibo dataset for the 2022 Russo-Ukrainian crisis. arXiv e-prints. arXiv:2203.05967. https://doi.org/10.48550/arXiv.2203.05967.

Hallin, Daniel C. & Paolo Mancini. 2004. Comparing media systems: Three models of media and politics. Cambridge: Cambridge University Press. https://doi.org/10.1017/CBO9780511790867.

Huang, Ronggui & Xiaoyi Sun. 2016. Dynamic preference revelation and expression of personal frames: How Weibo is used in an anti-nuclear protest in China. Chinese Journal of Communication 9(4). 385–402. https://doi.org/10.1080/17544750.2016.1206030.

Huszár, Ferenc, Sofia Ira Ktena, Conor O’Brien, Luca Belli, Andrew Schlaikjer & Moritz Hardt. 2022. Algorithmic amplification of politics on Twitter. Proceedings of the National Academy of Sciences 119(1). e2025334119. https://doi.org/10.1073/pnas.2025334119.

Jaidka, Kokil, Alvin Zhou & Yphtach Lelkes. 2019. Brevity is the soul of Twitter: The constraint affordance and political discussion. Journal of Communication 69(4). 345–372. https://doi.org/10.1093/joc/jqz023.

Jiang, Jiachen & Soroush Vosoughi. 2020. Not judging a user by their cover: Understanding harm in multi-modal processing within social media research. In Proceedings of the 2nd International Workshop on Fairness, Accountability, Transparency and Ethics in Multimedia, 6–12. Seattle WA USA: ACM. https://doi.org/10.1145/3422841.3423534.

Kim, Sora, Kang Hoon Sung, Yingru Ji, Chen Xing & Jiayu Gina Qu. 2021. Online firestorms in social media: Comparative research between China Weibo and USA Twitter. Public Relations Review 47(1). 102010. https://doi.org/10.1016/j.pubrev.2021.102010.

Lee, Francis L. F., Hai Liang & Gary K. Y. Tang. 2019. Online incivility, cyberbalkanization, and the dynamics of opinion polarization during and after a mass protest event. International Journal of Communication 13. 20.

Liu, Siyi, Lei Guo, Kate Mays, Margrit Betke & Derry Tanti Wijaya. 2019. Detecting frames in news headlines and its application to analyzing news framing trends surrounding U.S. gun violence. In Proceedings of the 23rd Conference on Computational Natural Language Learning (CoNLL), 504–514. Hong Kong, China: Association for Computational Linguistics. https://doi.org/10.18653/v1/K19-1047.

Luparell, Susan. 2011. Incivility in nursing: The connection between academia and clinical settings. Critical Care Nurse 31(2). 92–95. https://doi.org/10.4037/ccn2011171.

Marien, Sofie, Ine Goovaerts & Stephen Elstub. 2020. Deliberative qualities in televised election debates: The influence of the electoral system and populism. West European Politics 43(6). 1262–1284. https://doi.org/10.1080/01402382.2019.1651139.

Maslog, Crispin C., Seow Ting Lee & Hun Shik Kim. 2006. Framing analysis of a conflict: How newspapers in five Asian countries covered the Iraq war. Asian Journal of Communication 16(1). 19–39. https://doi.org/10.1080/01292980500118516.

Mendelsohn, Julia, Ceren Budak & David Jurgens. 2021. Modeling framing in immigration discourse on social media. arXiv e-prints. arXiv:2104.06443. https://doi.org/10.48550/arXiv.2104.06443.

Muddiman, Ashley Rae. 2013. The instability of incivility: How news frames and citizen perceptions shape conflict in American politics. Austin, TX: University of Texas at Austin dissertation.

Muddiman, Ashley. 2017. Personal and public levels of political incivility. International Journal of Communication 11(0). 21.

Mutz, Diana. 2015. In-your-face politics: The consequences of uncivil media. Princeton: Princeton University Press. https://doi.org/10.23943/princeton/9780691165110.001.0001.

Ojala, Markus & Mervi Pantti. 2017. Naturalising the new cold war: The geopolitics of framing the Ukrainian conflict in four European newspapers. Global Media and Communication 13(1). 41–56. https://doi.org/10.1177/1742766517694472.

Otto, Lukas P., Sophie Lecheler & Andreas R. T. Schuck. 2020. Is context the key? The (non-)differential effects of mediated incivility in three European countries. Political Communication 37(1). 88–107. https://doi.org/10.1080/10584609.2019.1663324.

Papacharissi, Zizi. 2004. Democracy online: Civility, politeness, and the democratic potential of online political discussion groups. New Media & Society 6(2). 259–283. https://doi.org/10.1177/1461444804041444.

Peddinti, Sai Teja, Keith W. Ross & Justin Cappos. 2017. User anonymity on Twitter. IEEE Security & Privacy 15(3). 84–87. https://doi.org/10.1109/MSP.2017.74.

Putnam, Linda L. & Martha Shoemaker. 2007. Changes in conflict framing in the news coverage of an environmental conflict symposium. Journal of Dispute Resolution 2007(1). 167–176.

Rauchfleisch, Adrian & Mike S. Schäfer. 2015. Multiple public spheres of Weibo: A typology of forms and potentials of online public spheres in China. Information, Communication & Society 18(2). 139–155. https://doi.org/10.1080/1369118X.2014.940364.

Rossini, Patrícia. 2020. Beyond toxicity in the online public sphere: Understanding incivility in online political talk. A Research Agenda for Digital Politics. 160–170. https://doi.org/10.4337/9781789903096.00026.

Schuck, Andreas R. T., Rens Vliegenthart, Hajo G. Boomgaarden, Matthijs Elenbaas, Rachid Azrout, Joost van Spanje & Claes H. de Vreese. 2013. Explaining campaign news coverage: How medium, time, and context explain variation in the media framing of the 2009 European parliamentary elections. Journal of Political Marketing 12(1). 8–28. https://doi.org/10.1080/15377857.2013.752192.

Semetko, Holli A. & Patti M. Valkenburg. 2000. Framing European politics: A content analysis of press and television news. Journal of Communication 50(2). 93–109. https://doi.org/10.1111/j.1460-2466.2000.tb02843.x.

Shelar, Hemlata, Gagandeep Kaur, Neha Heda & Poorva Agrawal. 2020. Named entity recognition approaches and their comparison for custom NER model. Science & Technology Libraries 39(3). 324–337. https://doi.org/10.1080/0194262x.2020.1759479.

Sobieraj, Sarah & Jeffrey M. Berry. 2011. From incivility to outrage: Political discourse in blogs, talk radio, and cable news. Political Communication 28(1). 19–41. https://doi.org/10.1080/10584609.2010.542360.

Song, Yunya & Yi Wu. 2018. Tracking the viral spread of incivility on social networking sites: The case of cursing in online discussions of Hong Kong–Mainland China conflict. Communication and the Public 3(1). 46–61. https://doi.org/10.1177/2057047318756408.

Stephens, Richard & Amy Zile. 2017. Does emotional arousal influence swearing fluency? Journal of Psycholinguistic Research 46(4). 983–995. https://doi.org/10.1007/s10936-016-9473-8.

Strachan, J. Cherie & Michael R. Wolf. 2012. Political civility: Introduction to political civility. PS: Political Science & Politics 45(03). 401–404. https://doi.org/10.1017/S1049096512000455.

Stryker, Robin, Bethany Anne Conway & J. Taylor Danielson. 2016. What is political incivility? Communication Monographs 83(4). 535–556. https://doi.org/10.1080/03637751.2016.1201207.

Su, Leona Yi-Fan, Michael A. Xenos, Kathleen M. Rose, Christopher Wirz, Dietram A. Scheufele & Dominique Brossard. 2018. Uncivil and personal? Comparing patterns of incivility in comments on the Facebook pages of news outlets. New Media & Society 20(10). 3678–3699. https://doi.org/10.1177/1461444818757205.

Suler, John. 2004. The online disinhibition effect. CyberPsychology and Behavior 7(3). 321–326. https://doi.org/10.1089/1094931041291295.

Tewksbury, David & Dietram A. Scheufele. 2009. News framing theory and research. Media effects. 33–49. https://doi.org/10.4324/9780203877111-8.

Trifiro, Briana M., Sejin Paik, Zhixin Fang & Li Zhang. 2021. Politics and politeness: Analysis of incivility on Twitter during the 2020 democratic presidential primary. Social Media + Society 7(3). https://doi.org/10.1177/20563051211036939.

Wang, Sai. 2020. The influence of anonymity and incivility on perceptions of user comments on news websites. Mass Communication and Society 23(6). 912–936. https://doi.org/10.1080/15205436.2020.1784950.

Wang, Junjie, Yuxiang Zhang, Lin Zhang, Ping Yang, Xinyu Gao, Ziwei Wu, Xiaoqun Dong, Junqing He, Jianheng Zhuo, Yang Qi, Yongfeng Huang, Xiayu Li, Yanghan Wu, Junyu Lu, Xinyu Zhu, Weifeng Chen, Ting Han, Kunhao Pan, Rui Wang, Hao Wang, Xiaojun Wu, Zhongsheng Zeng, Chongpei Chen, Jiaxing Zhang & Ruyi Gan. 2022. Fengshenbang 1.0: Being the foundation of Chinese cognitive intelligence. arXiv e-prints. arXiv:2209.02970. https://doi.org/10.48550/arXiv.2209.02970.

Wu, Yanfang. 2015. Incivility on Diaoyu Island sovereignty in Tianya Club. The Journal of International Communication 21(1). 109–131. https://doi.org/10.1080/13216597.2014.980296.

Xue, Ke & Shuyao Wang. 2012. 议程注意周期模式下中美主流媒体对突发公共卫生事件的报道框架 [Media coverage of health risk information in China and the USA: Linking issue attention cycles and framing toward an integrated theory]. Chinese Journal of Journalism & Communication (2015–6). 32–33.

© 2024 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.

