EU AI Act: what could AI literacy mean for medical laboratories? – Opinion Paper on behalf of the section digital competence and AI of the German Society for Clinical Chemistry and Laboratory Medicine (DGKL)

Jakob Adler, Felix Philipp Herrmann

Abstract

The EU AI Act came into force on August 1, 2024, and since February 2, 2025, employees must be sufficiently trained in the use of AI systems (Article 4 of the EU AI Act). But what does “sufficient” AI literacy mean in the context of the EU AI Act? This article highlights the statements of the EU AI Act on AI literacy and aims to support the development of training curricula. Medical laboratories offer numerous areas of application for AI, including improving the organization of laboratory processes and knowledge management. Even though there are no mandatory training requirements yet, basic data literacy is considered a prerequisite for AI literacy. Three major topics are identified for AI literacy training: general knowledge of the content of the EU AI Act, fundamentals of artificial intelligence, and context-specific training. This combination of fundamental data expertise and fundamental AI expertise will enable Article 4 of the EU AI Act to be fulfilled “to the best of ability” and lay the foundation for the transformation of medical laboratories.

Introduction

The EU AI Act officially came into force on August 1, 2024. However, not all parts of the legislation apply immediately; rather, different parts become applicable at different times. Article 4 on “AI literacy” has been in effect since February 2, 2025.

It states:

Providers and deployers of AI systems shall take measures to ensure, to their best extent, a sufficient level of AI literacy of their staff and other persons dealing with the operation and use of AI systems on their behalf, taking into account their technical knowledge, experience, education and training and the context the AI systems are to be used in, and considering the persons or groups of persons on whom the AI systems are to be used. [1]

This opinion article explores what this means for medical laboratories and how “sufficient” AI literacy can be achieved. We also believe that “sufficient” AI literacy can only be achieved on the basis of fundamental data literacy.

Article 4 of the EU AI Act on “AI literacy”

The EU AI Act applies to all AI systems used in the European Union, regardless of the country of origin of the AI system (see Art. 2 (1a) EU AI Act). The only exceptions are purely private, “non-professional” use (see Art. 2 (10) EU AI Act) of AI systems and the use of such systems in the context of research and development (see Art. 2 (6) EU AI Act). There are also other exceptions, such as those relating to the national security of member states (see Art. 2 (3) EU AI Act). This means that the EU AI Act also applies to AI systems used in medical laboratories. To use AI systems, users must demonstrate “AI literacy.” Article 4 of the EU AI Act sets out various requirements for this:

Measures must be implemented to ensure the AI literacy of the company’s own employees and, where applicable, contracted people.

Employees must have a sufficient level of AI literacy.

The training courses should be context-dependent, depending on technical knowledge, experience, education, and training, as well as the AI system used. The context here also includes the people for whom the AI systems are to be used (e.g., patients).

This raises three key questions that a laboratory should answer when developing a training program:

Which AI systems does the laboratory already use and what is the intended purpose of those systems (defined by the provider)?

Who will use and operate the system? Which knowledge is already available and which knowledge is required to use the tool effectively?

For whom (e.g., patients) will the system be used? What potential biases may occur?

The first question raises the difficulty of defining an AI system. Whether a system is an AI system within the meaning of the EU AI Act must be assessed case by case against the definition of an AI system in Article 3 (1). Unfortunately, this definition is very broadly formulated:

‘AI system’ means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. [2]

The EU Commission has therefore published a 16-page “Guideline” on the interpretation of the definition [3] but explicitly points out that this is not binding and that the exact legal interpretation is the responsibility of the European Court of Justice. The authors of this article consider the following quote from the Commission’s guideline on the definition of AI to be particularly crucial in relation to laboratory medicine:

5.2. Systems outside the scope of the definition of an AI system: […]

(40) Recital 12 also explains that the AI system definition should distinguish AI systems from “simpler traditional software systems or programming approaches and should not cover systems that are based on the rules defined solely by natural persons to automatically execute operations.” [3]

This means that rule-based systems defined by laboratory personnel or clinical decision support systems that operate based on static rules are unlikely to fall within the scope of the EU AI Act.

It is also important to consider the overlap between the EU AI Act, the Medical Device Regulation (MDR), and the In Vitro Diagnostic Medical Devices Regulation (IVDR). AIB 2025-1/MDCG 2025-6 was recently published on this topic, according to which all products that correspond to classes A, B, C, and D of the IVDR and for which a notified body must be involved are considered high-risk AI systems [4]. Interestingly, AI systems classified as in-house devices under Art. 5 (5) IVDR are not considered high-risk AI systems (see MDCG 2025-6, p. 6, Table 1). In addition, there is another important overlap between the EU AI Act and the GDPR, as health data is considered particularly sensitive personal data and therefore a high level of protection must be ensured when using AI tools to improve healthcare.

Table 1: Possible training content for achieving basic data literacy.

As there is currently no precise guidance on how the aforementioned legal provisions are to be implemented, the authors of this article point out that the interpretations of the legal text presented here can only be regarded as suggestions. The binding interpretation of the text rests exclusively with the European Court of Justice.

Data literacy as prerequisite for AI literacy

As already stated in the introduction, the authors believe that AI literacy cannot be achieved without fundamental data literacy. Without a basic understanding of how data can be structured, stored, processed, and transformed, or what different visualizations can and cannot achieve, it is not possible to understand how AI systems work and what their outputs mean, and thus to become proficient in using AI systems. The following paragraphs outline possible training content for achieving basic data literacy. A summary can be found in Table 1.

Data literacy begins with understanding what data is and how data sets can be structured. It is important to explain the concept of variables and derived values, the basics of various types of data storage, and the concept of tidy data [7]. Furthermore, when it comes to medical data, it is crucial to know which population the data comes from and how this affects possible conclusions and correlations. Since many different data types and formats can be collected in the laboratory, it is helpful to build up a basic knowledge of the data collected in the respective laboratory and its structure. For example, classic measurements from high-throughput analyzers differ from quality control data, chromatography data, or image data from automated cell recognition in hematology or digital urine diagnostics. Depending on the size of a laboratory, it can also be helpful to develop a basic understanding of big data and the so-called FAIR data principles (findability, accessibility, interoperability, reusability [5]). Once a basic understanding of what data is and how it can be structured is established, the possibilities of data preparation and data transformation should be considered. Dealing with missing values, e.g., by omission, interpolation, or imputation, is also of crucial importance here. When dealing with high-dimensional data sets, the differences between information reduction and analysis simplification, as well as the difference between graphical dimensions and data dimensions, should be addressed.
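The three ways of handling missing values named above can be made concrete in a training session with a few lines of code. The following is a minimal sketch, not a recommended procedure: the measurement series is invented, the function names are hypothetical, and mean imputation stands in for the many imputation methods that exist.

```python
from statistics import mean

# Hypothetical serial measurements of one analyte; None marks a missing value.
values = [4.1, None, 4.5, 4.7, None, 5.1]


def omit(series):
    """Omission: drop missing entries (the series gets shorter)."""
    return [v for v in series if v is not None]


def impute_mean(series):
    """Mean imputation: replace each missing entry with the mean of the observed ones."""
    fill = mean(omit(series))
    return [fill if v is None else v for v in series]


def interpolate_linear(series):
    """Linear interpolation between the nearest observed neighbours.

    Gaps at the start or end have no neighbour on one side and stay None.
    """
    result = list(series)
    known = [i for i, v in enumerate(series) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (series[right] - series[left]) / (right - left)
        for i in range(left + 1, right):
            result[i] = series[left] + step * (i - left)
    return result


print(omit(values))
print(impute_mean(values))
print(interpolate_linear(values))
```

Comparing the three outputs side by side shows that each choice changes the data set in a different way: omission changes its length, mean imputation ignores the trend, and interpolation assumes a smooth course between neighbouring measurements.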

Sufficient space should also be given to the possibilities of data visualization. Here, it is important to provide an overview of the possible plot types and to teach good data visualization practices. Common pitfalls when dealing with data and its visualization should also be discussed. From a data privacy perspective, basic knowledge of information reduction techniques, the addition of artificial noise, and data deletion periods is also helpful. Context-dependent training should cover techniques relevant to medical laboratories, e.g., specific visualization variants that can be used and interpreted in daily routines (such as the Bland-Altman plot). Even though basic statistical knowledge of measures of location and dispersion, statistical tests, and significance levels is extremely helpful, acquiring basic data literacy is not about training a new generation of statisticians. Instead, training should focus on providing a basic level of confidence in handling data and interpreting data and its visualizations. Combining basic data literacy with knowledge of how to interpret the outputs of applications used daily can build a strong foundation for acquiring AI literacy. Due to the great heterogeneity of staff composition in medical laboratories and the increasing blurring of the boundaries between technical training and academic education or further training through new qualifications such as M.Sc. Biomedical Sciences (academic continuing education for technical staff), it is not possible to make a general recommendation as to which content of a data literacy course is useful and relevant for which employees. Here, laboratories must take their own employee structure into account when planning data literacy education.
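To interpret a Bland-Altman plot, it helps to see which numbers it is built from. The sketch below computes the plotted quantities for two paired measurement series. It uses the common convention of bias ± 1.96 standard deviations for the 95 % limits of agreement, which assumes approximately normally distributed differences. The data and the function name are invented for illustration.

```python
from statistics import mean, stdev


def bland_altman(method_a, method_b):
    """Return the quantities shown in a Bland-Altman plot for paired results."""
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need two paired series with at least two samples")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    means = [(a + b) / 2 for a, b in zip(method_a, method_b)]
    bias = mean(diffs)  # mean difference between the two methods
    sd = stdev(diffs)   # sample standard deviation of the differences
    return {
        "means": means,        # x-axis: mean of both methods per sample
        "differences": diffs,  # y-axis: difference per sample
        "bias": bias,
        "lower_loa": bias - 1.96 * sd,
        "upper_loa": bias + 1.96 * sd,
    }


# Hypothetical paired results of the same samples on two analyzers.
analyzer_a = [5.0, 6.2, 7.1, 8.4, 9.0]
analyzer_b = [4.8, 6.0, 7.4, 8.1, 9.2]

result = bland_altman(analyzer_a, analyzer_b)
print(result["bias"], result["lower_loa"], result["upper_loa"])
```

Whether the resulting limits of agreement are acceptable is a clinical judgment that the calculation itself cannot answer, which is one reason why confidence in reading such plots matters more than the arithmetic.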

Proposed AI training framework

When we talk about AI literacy, three major topics can be identified:

General knowledge on the EU AI Act

Fundamentals of artificial intelligence

Context-dependent training

Possible training content for the three main topics is shown in Table 2.

Table 2: Possible training content for achieving basic AI literacy.

Module 1: general knowledge on the EU AI Act

To understand why certain content about AI needs to be taught, it is necessary to understand the purpose of this training and the most important aspects of the relevant legislation. Additionally, laboratory staff should be informed why the EU AI Act also applies to medical laboratories, as explained in the introduction of this article.

The EU AI Act has a major, fundamental objective, as explained in Article 1:

The purpose of this Regulation is to improve the functioning of the internal market and promote the uptake of human-centric and trustworthy artificial intelligence (AI), while ensuring a high level of protection of health, safety, fundamental rights enshrined in the Charter, including democracy, the rule of law and environmental protection, against the harmful effects of AI systems in the Union and supporting innovation.

The aim of the EU AI Act is therefore to safeguard the health, safety, and fundamental rights of the people in the European Union. Even though many people currently use AI systems without much criticism, the EU AI Act rightly focuses on the necessary protection of these fundamental values.

The EU AI Act takes a risk-based approach, dividing AI systems into different risk classes. AI systems that are banned in the EU are distinguished from systems with high, moderate, low, or no risk. The ban on certain AI systems has also been in effect since February 2, 2025. Considering the overlap between the MDR, IVDR, and the EU AI Act, it can be assumed that most AI systems used in medicine will be classified as high-risk AI systems (see introduction: all systems that require the involvement of a notified body). Another important aspect of the EU AI Act is the distinction between providers and deployers and the associated obligations that must be fulfilled to comply with the EU AI Act. In addition to AI systems that are specialized for specific tasks, the EU AI Act addresses so-called “general purpose artificial intelligence” (GPAI), which includes chat-based systems such as ChatGPT or Gemini. For GPAI, other requirements must be met. Finally, training should be provided on the basics of data protection when dealing with AI systems.

Module 2: fundamentals of artificial intelligence

To gain a basic understanding of artificial intelligence, it is essential to clarify some of the most important terms. Training should therefore first distinguish between rule-based systems, such as rulesets in a Laboratory Information System (LIS) or in a clinical decision support system (if they are expert rules (hard-coded) without dynamic adaptation of the system), and genuine AI systems. Rule-based systems have long been used in laboratory medicine and are unlikely to fall under the provisions of the AI Act (see introduction). In order to understand the terminology used in the field of AI, basic knowledge of the different types of machine learning, such as supervised learning, unsupervised learning, and reinforcement learning, should be taught. Deep learning techniques, as a subfield of machine learning, are particularly important for further understanding AI systems such as large language models (LLMs), so the basic structure of neural networks, their fundamental function, and examples of their application, such as image recognition, should be discussed.
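The technical difference between a static expert rule and a system that derives its behaviour from data can be shown with a deliberately small example. In the sketch below, the first function is a hard-coded rule as it might be written by laboratory staff; the second learns its cut-off from labelled examples. The threshold, the data, and the function names are all invented. The sketch illustrates only the technical contrast: whether a concrete product is an AI system within the meaning of Article 3 (1) remains a case-by-case legal assessment.

```python
# Hypothetical illustration only: threshold, data, and names are invented.


def expert_rule(result_value, threshold=10.0):
    """Static rule defined by a person: flag results above a fixed cut-off."""
    return result_value > threshold


def fit_threshold(values, labels):
    """Learn a cut-off from labelled examples (a one-feature decision stump).

    Tries the midpoints between neighbouring distinct values and keeps the one
    that misclassifies the fewest training examples. Needs at least two
    distinct values.
    """
    points = sorted(set(values))
    candidates = [(lo + hi) / 2 for lo, hi in zip(points, points[1:])]

    def errors(cut):
        return sum((v > cut) != y for v, y in zip(values, labels))

    return min(candidates, key=errors)


# The rule behaves the same regardless of any data it has seen.
print(expert_rule(12.0), expert_rule(8.0))

# The learned cut-off depends entirely on the training examples.
training_values = [2.0, 3.5, 4.0, 6.5, 7.0, 9.0]
training_labels = [False, False, False, True, True, True]
learned_cut = fit_threshold(training_values, training_labels)
print(learned_cut)
```

Changing the training examples changes the learned cut-off without anyone editing the code, which is the property that makes questions about training data, bias, and validation relevant for learned systems in a way they are not for static rules.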

Today’s debate about AI revolves primarily around generative AI models. It is important to provide employees with basic knowledge of foundation models, especially large language models. It is helpful to structure this knowledge around the development process of an LLM (pre-training, supervised fine-tuning, reinforcement learning, reasoning), as this also provides a basic understanding of the possibilities and limitations as well as the fundamental functioning of LLMs. Based on this knowledge, phenomena such as hallucinations, sycophancy, and alignment faking, as well as security issues such as jailbreaks or prompt injections, should then be addressed. Problems such as biases in the training data, which lead to biases in the model results, also need to be discussed, as this can lead to relevant problems in the care of different patient groups (e.g., gender or ethnicity bias). Finally, the fundamentals of the function and possibilities, but also the limitations and security concerns, of agentic AI systems should be addressed.

Module 3: context-dependent training

Once the fundamentals of the EU AI Act and a general understanding of the various possibilities of AI models and systems are known, AI literacy should be tailored to the tools used in the laboratory in a context-dependent manner. It is important to take into account the existing knowledge of employees and to address the specific algorithms used in the AI tools. It is crucial to emphasize that AI tools may only be used for the purpose intended by the manufacturer. Based on this, the basic functioning as well as the strengths and weaknesses of the AI system can be discussed. This requires information from the manufacturers, which is also required of them in exactly this form by the EU AI Act. Based on this information and fundamental knowledge of how AI models work, the outputs of the tools used in practice should be critically examined. In this context, reference should be made to the high relevance of the “human-in-the-loop” principle, which is explicitly required by the EU AI Act for high-risk AI systems. The basics of evaluating AI systems should also be discussed (especially if AI systems are to be developed as in-house systems). Finally, training should also include content on the possibilities and obligations of quality and risk management for the AI systems used.
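One basic element of evaluating an AI system, and of detecting the kind of bias between patient groups discussed in Module 2, is to report performance separately per group rather than as a single overall figure. The following is a minimal sketch under simplifying assumptions: a binary classifier, a known ground truth, and invented group labels and records. The function name is hypothetical.

```python
from collections import defaultdict


def sensitivity_specificity_by_group(records):
    """Compute sensitivity and specificity per group.

    records: iterable of (group, truth, prediction) with boolean truth/prediction.
    A metric is None if the group has no positive (or no negative) cases.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, truth, prediction in records:
        if truth:
            key = "tp" if prediction else "fn"
        else:
            key = "fp" if prediction else "tn"
        counts[group][key] += 1

    summary = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        summary[group] = {
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return summary


# Invented example: (group, true condition, model output).
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", False, False), ("group_a", False, True),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", False, False), ("group_b", False, False),
]

summary = sensitivity_specificity_by_group(records)
for group, metrics in summary.items():
    print(group, metrics)
```

In this invented data set, both groups have the same overall accuracy, but the model misses cases in one group and over-flags in the other, a difference that only becomes visible in the stratified view.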

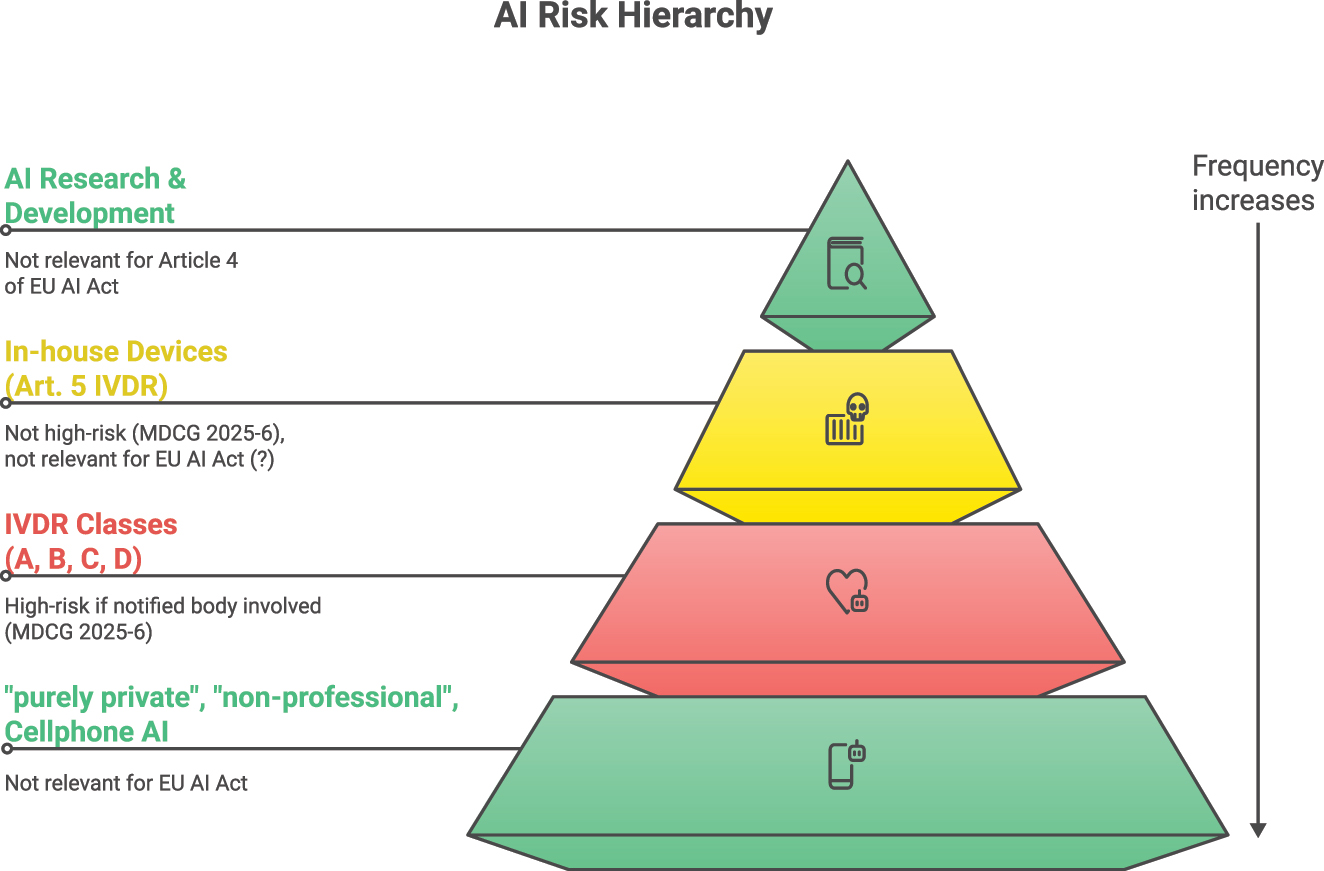

In Figure 1, we present a framework intended to help classify AI systems and their requirements in terms of regulation, knowledge, and control by employees. While most AI systems are currently used mainly privately (base of the pyramid in Figure 1, “cellphone-based AI”), such as the apps from OpenAI and Google or Meta AI in WhatsApp, laboratory staff will increasingly be confronted with AI-based IVDs in the future. Some laboratories will also develop their own AI tools, and universities and specialized institutes will conduct research in the field of AI for laboratory medicine. Depending on the application, different requirements must be placed on AI literacy. For AI- or machine learning-based studies in laboratory medicine, the EFLM working group recently published a checklist that can serve as a basis for designing such studies [9]. The IFCC also issued corresponding recommendations in 2023 [10]. Since there are no official guidelines on AI training yet, think tanks such as working groups of national and international professional societies should seize this opportunity and contribute possible training content and foundations for technical, professional, and ethical frameworks.

Figure 1: AI systems and their relevance for Article 4 of the EU AI Act. The pyramid shape is intended to symbolize the frequency of AI applications.

Legal implications (a German perspective)

This raises the question of the legal consequences if deployers as defined in Article 3 (4) of the EU AI Act fail to comply with Article 4. Chapter XII of the EU AI Act contains no explicit provisions on fines for a breach of the AI literacy obligation under Article 4. Currently, a fine under the EU AI Act is only imposed if non-compliance with Article 4 is accompanied by a violation of the requirements specifically regulated for high-risk systems, such as those set out in Article 16 (a) in conjunction with Article 9 (risk management system) or Article 26 (2) in conjunction with Article 14 (human oversight) [11]. According to Article 99 (1) of the EU AI Act, Member States are required to adopt national rules on sanctions and other enforcement measures for infringements of the EU AI Act, and consequently also of Article 4. However, the current German draft bill implementing the EU AI Act does not yet meet this requirement with regard to Article 4 [12].

Apart from administrative sanctions, the question arises as to how violations of Article 4 of the EU AI Act by deployers of AI systems should be assessed in terms of civil and criminal law if patients are harmed as a result. Neither the EU AI Act itself nor the MDR [13] and IVDR [14], which may apply to certain medical AI systems, provide an independent basis of civil liability or corresponding criminal offences for deployers. In February 2025, the EU Commission withdrew its proposal for an AI civil liability directive [15], which had provided regulations for strict liability for deployers of high-risk systems [16]. Consequently, the breach of duties of care must be addressed using the existing liability instruments of national civil law, namely contractual liability (Section 280(1) of the German Civil Code (BGB)) and tortious liability (Section 823 of the BGB), as well as the principles of criminal liability for negligent bodily injury and homicide offences (especially Sections 222 and 229 of the German Criminal Code (StGB)).

In this context, the question of how to deal with breaches of duty by the specific user of the AI system – often the treating physician – continues to gain relevance. These could result from a lack of competence in using the systems, or from a lack of verification or misinterpretation of the results when actively using them. At the same time, breaches of duty could result from failing to use the systems despite their availability. The legal assessment of such behavior necessitates answering some fundamental questions regarding breaches of medical duty, which frequently requires an interdisciplinary approach. Particular focus is given to determining the applicable standard of care – the so-called medical standard [17] – which, as a legal concept, is largely based on medical practice [18]. It is essential that fundamental civil and criminal law principles for assessing medical malpractice, such as the principle of horizontal division of labor, medical freedom of treatment or patient autonomy in the form of information and consent, are brought into line with the increasing use of this technological innovation [19].

Why expert knowledge still matters

Basic AI literacy can be achieved through knowledge of the contents of the EU AI Act, basic knowledge of the possibilities and limitations of AI models and AI tools, and an assessment of the strengths and weaknesses of the specific tools used in our laboratories. Nevertheless, when using seemingly powerful AI tools such as large language models, expert knowledge for verifying the outputs of these models remains a top priority for patient safety and for providing the best possible care for our patients. It would be wrong to conclude from current developments that we, as laboratory staff, will only be communicating results in the future. Our critical questioning, coupled with our expertise (which will certainly not be any less pronounced in the future), together with the helpful processing of routine tasks by AI tools, will ensure the best possible care for our patients.

Outlook

AI tools will change the future and the job profile in laboratory medicine. However, we will only achieve responsible change if we actively help shape it. There is currently an excellent opportunity to do so, as no generally applicable rules for the use of AI in laboratory medicine have been defined yet. We should develop a technical (security, infrastructure, etc.), professional (solving problems such as hallucinations, etc.), ethical (What serves our patients? What is fair and just?), and financial (Who benefits?) framework for the application of AI tools in laboratory medicine [20]. These tools will be new tools in our toolbox of possibilities. We should mitigate the risks and exploit the possibilities. AI agents will help us with everyday tasks, and here we advocate their use as helpful assistants. We should not relinquish our medical responsibility to these tools but rather use them as an aid to reflect on our diagnostic thinking [21].

Research ethics: Not applicable.

Informed consent: Not applicable.

Author contributions: All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

Use of Large Language Models, AI and Machine Learning Tools: Use of DeepL.com for translation questions.

Conflict of interest: The authors state no conflict of interest.

Research funding: None declared.

Data availability: Not applicable.

References

1. EU AI Act, Article 4, EU AI Act explorer. https://artificialintelligenceact.eu/article/4/ [Accessed 12 Aug 2025].

2. EU AI Act, Article 3 (1), EU AI Act explorer. https://artificialintelligenceact.eu/article/3/ [Accessed 17 Aug 2025].

3. Commission guidelines on the definition of an artificial intelligence system established by Regulation (EU) 2024/1689 (AI Act). https://digital-strategy.ec.europa.eu/en/library/commission-publishes-guidelines-ai-system-definition-facilitate-first-ai-acts-rules-application [Accessed 17 Aug 2025].

4. AIB 2025-1, MDCG 2025-6 interplay between the Medical Devices Regulation (MDR) & In vitro Diagnostics Medical Devices Regulation (IVDR) and the Artificial Intelligence Act (AIA). https://health.ec.europa.eu/document/download/b78a17d7-e3cd-4943-851d-e02a2f22bbb4_en?filename=mdcg_2025-6_en.pdf [Accessed 17 Aug 2025].

5. Blatter, TU, Witte, H, Nakas, CT, Leichtle, AB. Big data in laboratory medicine – FAIR quality for AI? Diagnostics 2022;12:1923. https://doi.org/10.3390/diagnostics12081923.

6. Medical laboratories – requirements for quality and competence (ISO 15189:2022); German version EN ISO 15189:2022 + A11:2023. https://doi.org/10.31030/3515195.

7. Wickham, H, Cetinkaya-Rundel, M, Grolemund, G. R for data science, 2nd ed. Sebastopol: O'Reilly; 2023. https://r4ds.hadley.nz/ [Accessed 27 Aug 2025].

8. Hofmann, V, Kalluri, PR, Jurafsky, D, King, S. AI generates covertly racist decisions about people based on their dialect. Nature 2024;633:147–54. https://doi.org/10.1038/s41586-024-07856-5.

9. Carobene, A, Cadamuro, J, Frans, G, Goldshmidt, H, Debeljak, Z, De Bruyne, S, et al. EFLM checklist for the assessment of AI/ML studies in laboratory medicine: enhancing general medical AI frameworks for laboratory-specific applications. Clin Chem Lab Med 2025. https://doi.org/10.1515/cclm-2025-0841 [Epub ahead of print].

10. Master, SR, Badrick, TC, Bietenbeck, A, Haymond, S. Machine learning in laboratory medicine: recommendations of the IFCC working group. Clin Chem 2023;69:690–8. https://doi.org/10.1093/clinchem/hvad055.

11. Schefzig, J, Kilian, R. BeckOK KI-Recht, KI-VO Art. 4 Rn 55-57, 2nd ed. Munich: C.H. Beck; 2025.

12. Draft bill by the Federal Ministry for Economic Affairs and Climate Protection and the Federal Ministry of Justice: draft law implementing Regulation (EU) 2024/1689 of the European Parliament and of the Council of June 13, 2024, laying down harmonized rules on artificial intelligence and amending Regulations (EC) No. 300/2008, (EU) No. 167/2013, (EU) No. 168/2013, (EU) 2018/858, (EU) 2018/1139, and (EU) 2019/2144, as well as Directives 2014/90/EU, (EU) 2016/797, and (EU) 2020/1828 (Regulation on Artificial Intelligence) – law on the implementation of the AI Regulation, processing status: 4th December 2024.

13. Regulation (EU) 2017/745 of the European Parliament and of the Council of April 5, 2017, on medical devices, amending Directive 2001/83/EC, Regulation (EC) No. 178/2002 and Regulation (EC) No. 1223/2009 and repealing Council Directives 90/385/EEC and 93/42/EEC.

14. Regulation (EU) 2017/746 of the European Parliament and of the Council of April 5, 2017, on in vitro diagnostic medical devices and repealing Directive 98/79/EC and Commission Decision 2010/227/EU.

15. AI Liability Directive. A new plan for Europe’s sustainable prosperity and competitiveness. https://www.europarl.europa.eu/legislative-train/theme-a-europe-fit-for-the-digital-age/file-ai-liability-directive?sid=9301 [Accessed 29 Jul 2025].

16. Zech, H, Hünefeld, IC. Einsatz von KI in der Medizin: Haftung und Versicherung. MedR 2023;41:1–8. https://doi.org/10.1007/s00350-022-6374-8.

17. Jansen, C. Der Medizinische Standard, 1st ed. Berlin: Springer; 2019. https://doi.org/10.1007/978-3-662-59997-6.

18. Laufs, A, Katzenmeier, C, Lipp, V. Arztrecht, 8th ed. Munich: C.H. Beck; 2021. Chapter X, Rn. 14 and following.

19. Ruschemeier, H, Steinrötter, B. Der Einsatz von KI & Robotik in der Medizin, 1st ed. Baden-Baden: Nomos Verlagsgesellschaft mbH & Co. KG; 2024:97 p. and following. https://doi.org/10.5771/9783748939726.

20. Goldberg, CB, Adams, L, Blumenthal, D, Flatley Brennan, P, Brown, N, Butte, AJ, et al. To do no harm – and the most good – with AI in healthcare. NEJM AI 2024;1. https://doi.org/10.1056/AIp2400036.

21. Schmidt, HG, Mamede, S. Improving diagnostic decision support through deliberate reflection: a proposal. Diagnosis 2023;10:38–42. https://doi.org/10.1515/dx-2022-0062.

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.