Read for me: developing a mobile-based application for both visually impaired and illiterate users to tackle reading challenges


Zainab Hameed Alfayez, Batool Hameed Alfayez

Abstract

In recent years, there have been several attempts to help visually impaired and illiterate people overcome their reading limitations by developing different applications. However, most of these applications rely on physical-button interaction and avoid touchscreen devices. This research mainly aims to find a solution that helps both visually impaired and illiterate people read texts in their surroundings through a touchscreen-based application. The study also investigates whether a single application could serve both types of users and whether they would use it with the same efficiency. Therefore, a requirements elicitation study was conducted to identify the users’ requirements and preferences and thus to build an interactive interface for both visually impaired and illiterate users. The study resulted in several design considerations, such as using voice instructions, focusing on verbal feedback, and eliminating buttons. The reader mobile application was then designed and built based on these design preferences. Finally, an evaluation study was conducted to measure the usability of the developed application. The results revealed that both visually impaired and illiterate users could benefit from the same mobile application, as they were satisfied with it and found it efficient and effective. However, the measures from the evaluation sessions also showed that illiterate users used the developed app more efficiently and effectively. Moreover, they were more satisfied, especially with the application’s ease of use.

1 Introduction

Worldwide, there are still over 750 million illiterate adults [1] and more than 300 million people who are blind or have moderate-to-severe visual impairment [2]. These people face daily challenges in basic tasks, such as recognizing objects, navigating places, and reading texts found in books, street signs, restaurant menus, and grocery products. However, advances in technology, especially the revolution in mobile devices and their applications, play a major role in tackling some of these problems and can enhance the way these groups communicate with society and the world. For example, with mobile-based applications (apps), visually impaired and illiterate people can make phone calls, receive and send messages, play games [3], and learn [4]. This suggests that people who are visually impaired or illiterate can use modern technology more efficiently through a variety of applications, and that they could benefit from smartphones’ advanced functions rather than only making phone calls and sending text messages.

Summary of previous works.

| Ref. | Target audience | Hardware | Technologies | Advantages | Limitations |
|---|---|---|---|---|---|
| [5] | Illiterate + visually impaired | Raspberry Pi, camera | OCR, computer vision, Google Speech application program interface | 97.4 % reading accuracy | Less accurate in low-light conditions |
| [6] | Visually impaired | Raspberry Pi, ultrasonic sensor, camera, earphones | OCR + TTS | Produces vocal speech from text | Not mentioned |
| [7] | Visually impaired | Smart glasses with camera, ultrasonic sensor, smartphone | Computer vision + deep learning + TTS | Robust text recognition even in dark scenes | Some text recognition errors |
| [8] | Illiterate + visually impaired | Computer | OCR | Reads and fills in bank forms | Not mentioned |
| [9] | Illiterate + visually impaired | Mobile phone | OCR + artificial intelligence + computer vision | Access to literature that is not available in Braille | Not mentioned |
| [10] | Visually impaired | Mobile phone | OCR | Accepts both voice and touch commands | Accuracy depends on luminosity, weather, and camera distance |
| [11] | Visually impaired | Raspberry Pi 3, camera, push switch, earphones | OCR + TTS | Reduces blind users’ dependence on others | Not mentioned |

As a result, several researchers have adapted different technologies to develop information and communication technology (ICT)-based applications that help visually impaired and illiterate people overcome their reading limitations. For example, [5] presented a system based on a Raspberry Pi and a camera to help Bangla-speaking people read documents, whereas [11] proposed a system built from a Raspberry Pi, a camera, a push switch, and earphones to help blind people read text in their surroundings. Finally, [8] developed a software application, based on optical character recognition (OCR) technology and a speech interface, that helps illiterate and visually impaired people fill in banking forms without assistance.

Notably, most of these studies avoid using smartphones when building systems for blind users in particular, because of the difficulties that blind people face when interacting with touchscreens [12]. However, a mobile-based application is more accessible than other types of systems: statistics report smartphone usage at about 43 %, with a significant share in developing countries [13], which are also home to the majority of the world’s blind and illiterate people [1,2].

Therefore, the aim of this paper is to develop a mobile-based application that is accessible to both visually impaired and illiterate people and helps them read texts found on different surfaces, such as fiber, paper, and plastic. The app uses the smartphone camera with OCR technology to capture texts, Firebase for real-time translation into the local language when the captured text is in English, and text-to-speech (TTS) to read the text aloud. The app was designed according to users’ design preferences, which were collected through a requirements elicitation study. The reader app was then developed and given to blind and illiterate users for evaluation. The evaluation sessions showed that the users could interact efficiently with, and were satisfied using, smartphone applications that are designed according to their requirements and developed to help them take advantage of the available technology.

2 Previous works

This section provides a concise overview of previous studies that aimed to help visually impaired or illiterate people take advantage of technology in their everyday activities. Table 1 summarizes these previous works.

First, as mentioned previously, [5] proposed a system to help Bangla speakers who cannot read or who have a significant loss of sight to read text documents. The system was built on a Raspberry Pi with a camera module, using an OCR engine, computer vision techniques, and the Google Speech Application Program Interface. The accuracy for Bangla text images was 97.4 %. However, at night the text extraction accuracy does not reach this level, because images captured in low-light conditions are of lower quality. Another Raspberry Pi-based system was developed by [6]. This system recognizes text in images and then generates speech that the users can hear. In addition to reading, the developers of this system used ultrasonic sensors to help visually impaired users keep a safe distance from objects. The authors of [7] also used ultrasonic sensors, together with smart glasses with a camera, to recognize text and obstacles on the road. A two-branch exposure-fusion network was also used to enhance low-light images, helping the system recognize text in dark conditions.

Furthermore, the authors of [8] developed a software application that enables illiterate and visually impaired people to fill in bank forms efficiently and without outside assistance. The system was based on a speech interface for user input and OCR technology for retrieving data from paper documents.

A more recent system was produced by [9] to make newspapers available daily to people who are illiterate or visually impaired. It is a mobile-based system that converts newspaper images into audio using character recognition, artificial intelligence, and computer vision. Another OCR-based reader system was developed by [10]. This mobile-based application targeted visually impaired people and explored the possibility of interaction between them and smartphones. During the testing phase, the author identified several issues inherent in blind people’s interaction with smartphones, such as the level of luminosity, the weather, and positioning the camera at the optimal distance. Similar interaction issues were reported by [12] for blind smartphone users, with the additional issue of using a touchscreen rather than physical keys, which makes interaction particularly challenging for these users. A solution to this problem was suggested by [11], who used a push switch as a button to capture images in their system, which was developed to reduce the dependence of visually impaired people on others for recognizing texts in their surroundings. As mentioned above, the authors of this research built a system consisting of a Raspberry Pi, a camera, a push switch, and earphones. Consequently, they did not take advantage of the services offered by mobile devices, which are owned by a significant number of people who might use them only for basic functions, such as making or receiving calls [13]. As a result, visually impaired people might buy a new device to help them read text, when they could actually use their own phone for this task. Thus, in this study, we aimed to develop a mobile-based system that encourages illiterate and visually impaired people to use their smartphones for more advanced functions and helps them read texts without assistance.
Moreover, this research mainly attempts to discover whether one application could be used by both illiterate and blind people, and then to find out whether they would use it with the same efficiency. This was achieved through two studies. The first was carried out to identify the design requirements of visually impaired and illiterate users for the intended application. The users’ requirements and design considerations identified by this study were then applied to the newly developed application. Next, an evaluation study was conducted with both groups of users (blind or illiterate) to measure their performance while using the app and to gather their opinions about it.

Furthermore, none of the previously mentioned studies described the users’ requirements or the system design features needed for the best interaction experience, although these systems were mainly devised for blind or illiterate people for reading purposes. Moreover, the earlier studies did not measure users’ performance or collect their feedback on these systems. Therefore, this study attempts to fill this gap and provide a deeper understanding of the needs and design requirements of both visually impaired and illiterate users in a reader mobile application.

3 Methodological framework

This study followed the five steps of Design Science Research (DSR) in information systems proposed by [14]. These steps are presented in Figure 1 and explained as follows:

The research methodology steps.

3.1 Awareness of problem

The starting point of the research was to identify the problem and devise a solution. Without assistance from others, visually impaired and illiterate people in Iraq cannot read the simple texts they need in daily life, such as product expiry dates, currency notes, and street signs. Moreover, these two groups can benefit only from the basic functions of their smartphones, for example, making or receiving calls. Therefore, an ICT solution is needed to help visually impaired and illiterate people read texts without such assistance. In addition, such a solution may encourage them to use modern technology more and to take advantage of the services it provides.

3.2 Suggestion

This stage involved producing the artifact’s initial proposal by specifying its requirements, which were determined from the data gathered in a requirements elicitation study. The authors conducted semi-structured interviews with 30 participants who were either visually impaired or illiterate. The results of this qualitative study showed that a mobile app is needed to help visually impaired and illiterate people read texts and thus solve the problem identified in the first stage. The participants, however, asked for a usable and interactive application. Several design considerations that resulted from the requirements elicitation process were applied in the subsequent design and development of the application.

3.3 Development

The third step of the DSR was to design and develop the proposed application. Based on the interviewees’ feedback and the design requirements derived from the “suggestion” stage, the prototype of the reader application was designed and built and then prepared for the evaluation stage.

3.4 Evaluation

The stage following development was evaluation, in which 35 visually impaired and illiterate participants used the app and provided feedback on it. The participants’ performance was analyzed to measure the usability of the application in terms of three metrics: efficiency, effectiveness, and user satisfaction.

3.5 Conclusions

This was the final stage of the DSR in which the study findings were reported and discussed.

3.6 Qualitative study

The aim of the first study was to establish whether there is a need for a reading mobile app for people who are illiterate or visually impaired, and to determine the users’ requirements for such an application. The following subsections describe this study, starting with the participants’ relevant demographic information, followed by the study procedure and, finally, the study findings.

3.6.1 Participant demographics

To recruit participants for this study, we used the snowball sampling method, which is explained in [15] and was used by [13]. A total of 30 participants (18 males and 12 females) were recruited from different areas of Albasrah, Iraq. Most of them were illiterate (21 participants), while 9 were blind or severely visually impaired. They had different occupations (for example, plumbers, carpenters, mechanics, drivers, and housewives), and some were unemployed. The participants’ ages ranged from 25 to 75 years, with an average of 40 years. The majority (25 participants) had some experience with smartphones, mainly for making calls, sending/receiving voice messages, capturing photos, listening to music, and watching YouTube videos. Of the other participants, one used a phone only to receive calls. Table 2 describes the participants’ demographic information for the requirements elicitation study.

Participant demographics for the requirements elicitation study.

| Participant characteristic | Number of illiterate users | Number of blind users |
|---|---|---|
| Male | 12 | 6 |
| Female | 9 | 3 |
| Employed | 12 | – |
| Unemployed/housewives | 9 | 9 |
| Aged under 50 years | 15 | 4 |
| Aged over 50 years | 6 | 5 |
| Experience with using smartphones | 20 | 5 |
| No experience with using smartphones | 1 | 4 |
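As a quick arithmetic aid, the counts in Table 2 can be cross-checked against the totals stated in the text (21 illiterate and 9 blind participants, 18 males and 12 females, 25 with smartphone experience). The Python sketch below simply transcribes the table, reading “–” as zero, and confirms that every pair of complementary rows adds up to the two group sizes. It is an illustrative check, not part of the study’s procedure.

```python
# Counts transcribed from Table 2 as (illiterate, blind); "–" is read as 0.
TABLE_2 = {
    "Male": (12, 6),
    "Female": (9, 3),
    "Employed": (12, 0),
    "Unemployed/housewives": (9, 9),
    "Aged under 50 years": (15, 4),
    "Aged over 50 years": (6, 5),
    "Experience with using smartphones": (20, 5),
    "No experience with using smartphones": (1, 4),
}

# Pairs of rows that should together cover every participant exactly once.
COMPLEMENTARY_ROWS = [
    ("Male", "Female"),
    ("Employed", "Unemployed/housewives"),
    ("Aged under 50 years", "Aged over 50 years"),
    ("Experience with using smartphones", "No experience with using smartphones"),
]


def group_totals(table, row_a, row_b):
    """Add two complementary rows column by column -> (illiterate, blind)."""
    (a_ill, a_blind), (b_ill, b_blind) = table[row_a], table[row_b]
    return a_ill + b_ill, a_blind + b_blind


def table_is_consistent(table, expected=(21, 9)):
    """True if every complementary pair adds up to 21 illiterate and 9 blind."""
    return all(group_totals(table, a, b) == expected for a, b in COMPLEMENTARY_ROWS)


print(table_is_consistent(TABLE_2))                       # True
print(sum(TABLE_2["Experience with using smartphones"]))  # 25
```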

3.6.2 Study process

The procedure of the first study involved two parts, described below.

3.6.2.1 Apparatus

The interviews were conducted in different areas of Albasrah city in southeastern Iraq, in settings chosen to provide the best environmental context, such as quietness. Participants were interviewed individually over a period of two weeks, with two people interviewed per day. Each interview session was recorded using an audio recorder, and the recording was saved for the analysis phase.

3.6.2.2 Semi-structured interview

Since the current study aimed to obtain qualitative data, the 30 participants were interviewed using the semi-structured interview method. This method is widely used to collect qualitative textual data [16]. It is an open, flexible method in which the interviewer can add further questions and ask participants for clarification, giving the researcher a deeper understanding of the participants’ needs and requirements.

After the interview questions had been prepared and the setting for each session organized, each interview started with welcoming the participant and then reading aloud their ethical rights. Once the participant had accepted the study consent form, the interviewer asked him/her several profile questions, such as name, age, job, and experience with technology, especially mobile devices. In the next part of the interview, the interviewer asked the participant a number of questions related to the research subject, for example, whether illiterate or blind users need a mobile app that helps them read texts, which is the main design requirement of this app.

The next step of the study was to transcribe each interview recording into text using a phonetic approach, in which every word was transcribed regardless of its importance [17]. The objective was to discover the key concepts raised by the interviewees and to identify viewpoints that were repeated or shared by multiple participants. This step produced pages of transcripts representing the data obtained in each interview.

The transcribed texts were analyzed and coded using interpretative phenomenological analysis (IPA). This method is based on reading and rereading the text in depth [18]. Therefore, the researchers read the transcripts repeatedly to become more familiar with the interviewees’ comments and to gain further insights. Reading the transcripts also involved highlighting and extracting the key points from the interviewees’ responses. The extracted texts were then separated into smaller notes, and this process continued until the study’s master themes and subthemes were determined.
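The last step of this coding process, grouping coded notes into subthemes and subthemes into master themes, can be pictured as a two-level data structure. The Python sketch below is only an illustration of that bookkeeping, not the authors’ analysis tool: the codebook labels are adapted from Table 3, while the participant IDs and the assignment of quotes to them are hypothetical. Counting distinct participants per subtheme (rather than notes) is one way to see which viewpoints were “held by multiple participants”.

```python
from collections import defaultdict

# Codebook adapted from Table 3: subtheme -> master theme.
CODEBOOK = {
    "Independence": "The need for a reader mobile app",
    "Availability": "The need for a reader mobile app",
    "More usage of technology (mobile devices)": "The need for a reader mobile app",
    "Little or no experience with smart devices": "Challenges",
    "High quality voice guide": "Application design issues",
    "Local language": "Application design issues",
    "No typing or text entry": "Application design issues",
    "Buttons": "Application design issues",
    "Feedback": "Application design issues",
}


def build_themes(coded_notes):
    """Group (participant_id, note, subtheme) records under their master theme.

    Returns {master_theme: {subtheme: set of participant IDs}}, so a participant
    who repeats the same point is counted once per subtheme.
    """
    themes = defaultdict(lambda: defaultdict(set))
    for participant_id, _note, subtheme in coded_notes:
        master = CODEBOOK[subtheme]  # KeyError flags a note coded outside the codebook
        themes[master][subtheme].add(participant_id)
    return themes


# Illustrative coded notes; the participant IDs are hypothetical.
notes = [
    ("P01", "It is an amazing thing to read text myself.", "Independence"),
    ("P02", "I could only understand Arabic language", "Local language"),
    ("P02", "Playing clear audios over the app is essential.", "High quality voice guide"),
    ("P21", "The app must read texts in Arabic, so I can understand", "Local language"),
    ("P21", "How would I know if the phone has captured the text or not?", "Feedback"),
]

themes = build_themes(notes)
for master, subthemes in themes.items():
    for subtheme, people in subthemes.items():
        print(f"{master} / {subtheme}: {len(people)} participant(s)")
```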

3.6.3 Results

By combining repeated, comparable, or related topics, the textual material was raised to a higher level of abstraction, resulting in a set of master themes and their associated subthemes. The analyzed data were divided into three main themes. The first theme focused on participants’ need for a mobile application that helps them read texts. The second theme shed light on the challenges that blind and illiterate people face when using mobile devices. The last theme covered the participants’ preferences regarding the application’s design. Table 3 lists the three master themes and their associated subthemes identified from the semi-structured interview data.

Themes and subthemes of the requirements elicitation study.

| Main theme | Subtheme | Quotes from illiterate participants | Quotes from blind participants |
|---|---|---|---|
| The need for a reader mobile app | Independence | “It is an amazing thing to read text myself.” | “The app will help me to read even if there is no one there.” |
| | Availability | “I will be able to read texts anytime and anywhere, just open the app.” | “I carry my phone wherever I go, so the app will be available whenever I need it.” |
| | More usage of technology (mobile devices) | “I could use my phone for more purposes, not just making calls.” | “My phone will be used for various purposes.” |
| Challenges | Little or no experience with smart devices | “I only use my phone for calls; what do you mean by ‘app’?” | “I have never had a smartphone; how could I use the app!” “I may need some training before using the app.” |
| Application design issues | High quality voice guide | “Playing clear audios over the app is essential.” | “The app should have a good quality sound to guide me how to use the app.” “The app should inform me where to press.” |
| | Local language | “I could only understand Arabic language” | “The app must read texts in Arabic, so I can understand” |
| | No typing or text entry | “I do not want to copy texts then the app read it aloud; it is a boring process.” “The app should do the reading without my interfering.” “It would be a useless app if I have to copy the words to read it.” | – |
| | Buttons | “I prefer buttons with well-known symbols” | “The buttons must be big, at the same points on the screen.” “The fewer buttons the app has, the better.” “How would I know where the buttons to press are?” |
| | Feedback | “For best capturing, the app should inform me whether to move the phone closer to the text or further away” | “How would I know if the phone has captured the text or not?” |

3.6.3.1 The need for a reader mobile app

The majority of the participants (around 90 %) expressed a desire to have a reader app on their smartphone. They emphasized that this kind of application would help them feel independent and would let them read texts without outside help. Moreover, the participants mentioned that the app would be available at any time and in any place, as they always carry their phone. Additionally, several interviewees stated that their phones would become more functional; in other words, they would be able to use their smartphones for more purposes than only making and receiving calls. The study therefore indicates that a reader mobile app with the required features to serve both illiterate and blind people would benefit users in various ways.

3.6.3.2 Challenges with using smartphones

As mentioned previously, most of the participants said they would be excited to have a mobile app to help them read texts. However, just over half (about 54 %) expressed concerns about using digital tools, explaining that they had little or no experience with smart devices such as smartphones. Accordingly, all the blind participants and several of the illiterate participants (43 % in total) highlighted that they might need training to become familiar with the app before using it.

3.6.3.3 Application design issues

In addition to the need for a reader mobile app and the challenges of using such tools, the interviewees pointed out design considerations for developing mobile apps that serve blind and illiterate people in Iraq. According to this study, five factors need to be taken into account when designing an interactive and usable interface for this community: a high-quality voice guide, the local language (in this case Arabic), no typing or text entry, buttons, and feedback.

Most of the participants (about 93 %) said they would prefer the app to include audio instructions that guide them on how to use it and what the next step is. They also confirmed that these audio instructions should be provided only in Arabic, as they do not understand English or any other language. Regarding typing or entering text into the app (a question for illiterate participants only), the participants firmly rejected the idea of copying the text so that the app could then translate it and read it aloud. They considered copying letters a boring and time-consuming process that would make the app useless, so that nobody would use or recommend it.

In addition, the interviewees were asked about their design preferences for the app’s buttons. Illiterate participants commented that the buttons should not contain any text but should instead have symbols or conventional icons that reflect their functions, giving them additional hints on how to use the app. In contrast, blind participants indicated that navigating through the app screens on a flat touchscreen is the most critical issue they would face. They were concerned about how they could find which buttons to press and how they could switch from one screen to another. Therefore, more than two thirds of the blind participants (about 77 %) preferred the app to have no buttons or only a few. Meanwhile, a few of them suggested big, finger-friendly buttons with enough space between them, placed in the same spots on all screens, so that blind users could locate and memorize the buttons and press the required one. Additionally, all the blind participants mentioned that after training sessions, they would be more familiar with the app and could better memorize its buttons.

The final design issue raised by the participants was feedback. Both illiterate and visually impaired interviewees required auditory feedback in the app, especially after capturing the text with the phone camera. They wanted to know whether the app had captured part or all of the text, and whether the app would tell them via an audio message once the capturing process was complete.

To sum up, the requirements elicitation phase showed that both blind and illiterate people had a strong interest in using their smartphones for more advanced functions than basic phone calls or listening to music. They also thought that the reader app would help them feel more independent, as they would be able to read without anyone’s assistance. However, they expressed some reservations about using digital tools. Thus, they recommended several design characteristics to make the application usable and interactive for both illiterate and visually impaired people: a voice guide, the local language, no typing or text entry, suitable buttons, and feedback. They also suggested training before using the app so that they could become familiar with its screens and the best way to use it.

4 Design and development

4.1 Application design

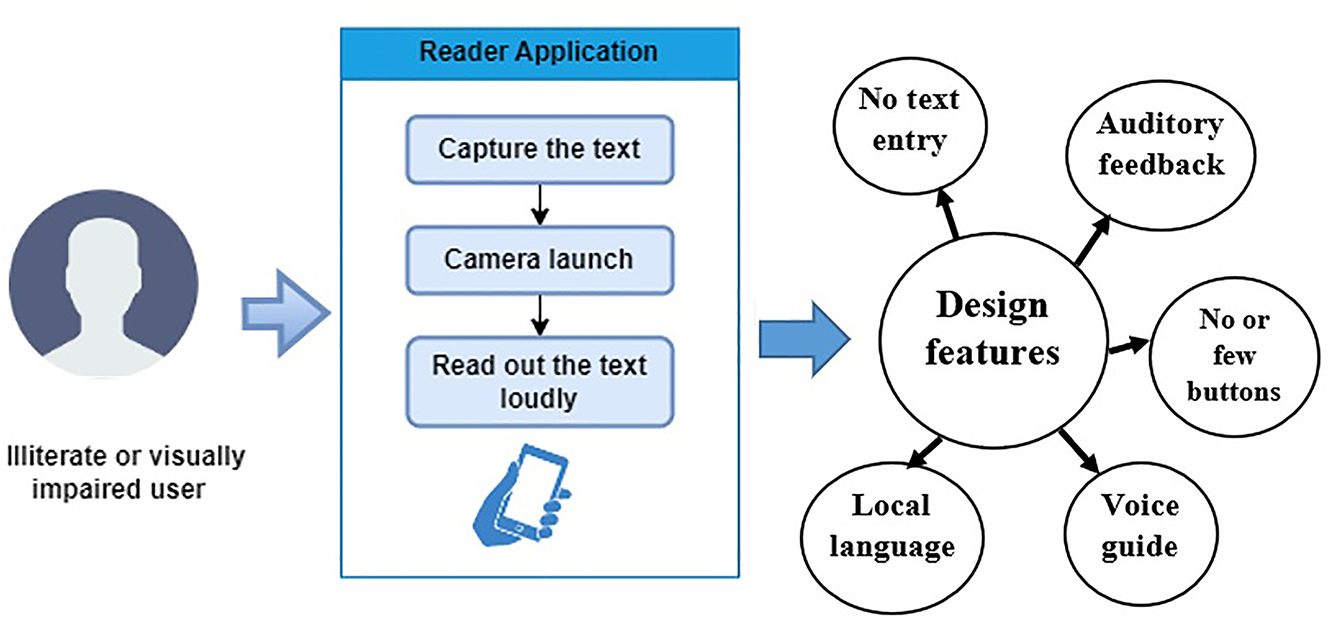

Based on the design features suggested by the visually impaired and illiterate interviewees in the requirements elicitation study, the authors designed and developed a mobile application that serves as a text reader for illiterate or visually impaired people in Iraq. According to the participants’ preferences, the reader application’s screens should be designed with simple, minimal content. The visually impaired participants in particular preferred the app to include few or no buttons, replaced with sounds. Similarly, illiterate participants said they would prefer a simple interface based on sounds rather than text, as this would let them use the app without others’ assistance. As a result, we developed a reader application with a simple interface containing only one big button placed at the bottom of the device screen. Moreover, the application plays background audio in the users’ local language (Arabic) so that users receive instructions and guidance throughout their interaction with the application. Background audio is also used to provide feedback after every step the user takes. These features allow users to navigate the application effectively and reduce the number of errors that might occur while using the app. Figure 2 shows the design framework of the application.

The design framework for the reader application.

4.2 Application development

Several technologies and tools were used to realize the conceptual design framework and develop the proposed application. Android Studio was the main IDE used to build the Android application. The app’s backend was written in Java, while the frontend layouts were defined in XML. Firebase, a real-time database, was also used in this app for translation: if the captured text is in English, it is translated into Arabic and then read aloud to users in their native language. Another technology used in this application is OCR, which extracts text from images and converts it into an editable text format. In addition, TTS technology was used to convert the extracted text into spoken audio. This technology supports over 100 languages, including Arabic, and different voice styles, such as male and female.
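The processing chain described above (OCR text, then translation only when the text is English, then TTS) can be sketched independently of Android. The Python sketch below is not the authors’ Java code: the translation and speech services are passed in as plain functions (`translate_en_to_ar` and `speak_arabic` are hypothetical stand-ins for the Firebase translation and TTS calls), and `is_mostly_english` is an assumed heuristic, since the paper does not say how English text is detected.

```python
from typing import Callable


def is_mostly_english(text: str) -> bool:
    """Assumed heuristic: Latin letters outnumber Arabic letters (U+0600-U+06FF)."""
    latin = sum(1 for ch in text if ch.isascii() and ch.isalpha())
    arabic = sum(1 for ch in text if "\u0600" <= ch <= "\u06ff" and ch.isalpha())
    return latin > arabic


def prepare_and_speak(ocr_text: str,
                      translate_en_to_ar: Callable[[str], str],
                      speak_arabic: Callable[[str], None]) -> str:
    """OCR output -> optional English-to-Arabic translation -> text-to-speech.

    The two callables stand in for the app's translation and TTS services;
    injecting them lets the pipeline logic be tested on its own.
    Returns the text that was handed to the speech callback.
    """
    text = ocr_text.strip()
    if not text:
        raise ValueError("OCR returned no text; the user should be asked to retry")
    if is_mostly_english(text):
        text = translate_en_to_ar(text)
    speak_arabic(text)
    return text


# Usage with stand-in services: a one-entry dictionary and a list as the "speaker".
spoken = []
fake_translations = {"Expiry date": "تاريخ الانتهاء"}
prepare_and_speak("Expiry date", lambda t: fake_translations.get(t, t), spoken.append)
print(spoken)
```

Keeping the services as parameters reflects the design in the text: the decision “translate only if English” lives in one place, whatever translation and speech back ends are actually used.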

Once the user launches the application, a voice instruction is played to guide them on what to do next. The audio message is “Welcome to the ‘read for me’ app … To launch the phone camera, please press the button at the bottom of the screen”. When the user presses the button, the app launches the phone camera and simultaneously plays another voice message: “Please place your device facing the text within the distance of a hand span”. The app then captures the text and, if the extracted text is in English, translates it into Arabic. If the app does not capture the text, it plays an audio alert saying that the process was not successful and that the user should try again. In the final step, after the text has been captured and extracted, the user hears it read aloud by the app. Figure 3 illustrates the flow of activities of the application.
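The flow of activities above behaves like a small state machine in which every transition plays an audio clip. The Python sketch below models it under stated assumptions: the two prompts are the English renderings quoted in the text, while the retry and success messages are hypothetical wordings (the paper only describes them), and the text passed on a successful capture is assumed to be already translated to Arabic. It is an illustration of the flow, not the app’s actual Android code.

```python
from enum import Enum, auto


class State(Enum):
    WELCOME = auto()  # app launched; waiting for the big bottom button
    CAMERA = auto()   # camera open; user aims the phone at the text
    READING = auto()  # text captured and being read aloud


# English renderings of the two prompts quoted in the paper.
WELCOME_PROMPT = ("Welcome to the ‘read for me’ app … To launch the phone camera, "
                  "please press the button at the bottom of the screen")
CAMERA_PROMPT = ("Please place your device facing the text within the distance "
                 "of a hand span")
# Hypothetical wordings: the paper describes these messages but does not quote them.
RETRY_PROMPT = "The text was not captured. Please try again."
SUCCESS_PROMPT = "The text was captured successfully."


def step(state, event, text=None):
    """Return (next_state, audio_clips) for one event in the reading flow."""
    if state is State.WELCOME and event == "button_pressed":
        return State.CAMERA, [CAMERA_PROMPT]
    if state is State.CAMERA and event == "capture_failed":
        return State.CAMERA, [RETRY_PROMPT]           # stay on camera and retry
    if state is State.CAMERA and event == "capture_ok":
        return State.READING, [SUCCESS_PROMPT, text]  # confirm, then read aloud
    return state, []                                  # ignore unexpected events


def run(events):
    """Replay (event, payload) pairs from launch; return final state and audio played."""
    state, played = State.WELCOME, [WELCOME_PROMPT]
    for event, payload in events:
        state, clips = step(state, event, payload)
        played.extend(clips)
    return state, played


final, audio = run([("button_pressed", None),
                    ("capture_failed", None),
                    ("capture_ok", "تاريخ الانتهاء")])
print(final)
```

Because each state has exactly one audio clip per transition, a user who cannot see the screen always hears what happened and what to do next, which matches the participants’ request for feedback after every step.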

The flow chart for the application’s activities.

4.3 Implementation

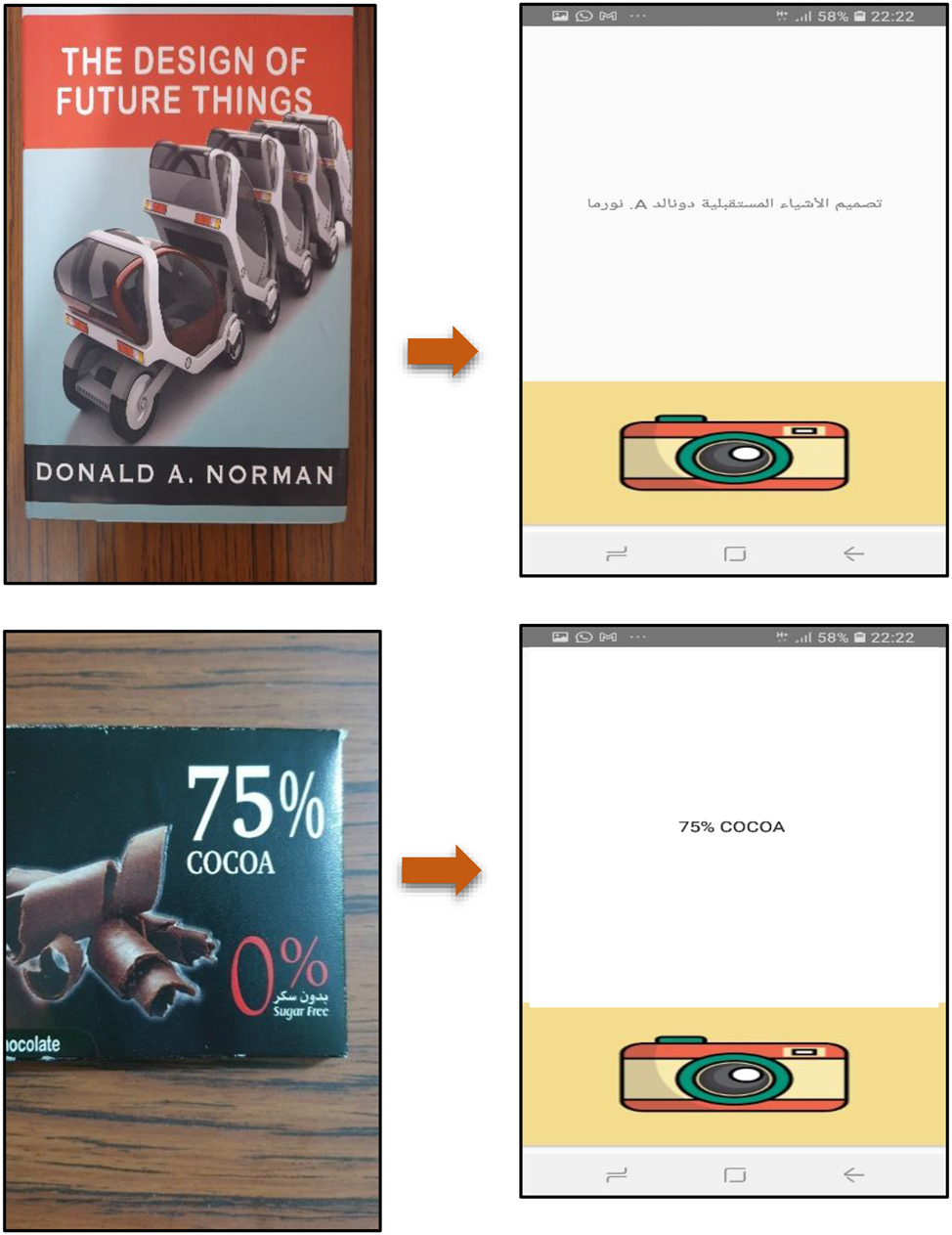

The reader application was developed with one screen whose only element is a big button at the bottom. The button takes up the entire bottom part of the screen, so wherever the user (especially a blind user) presses in that area, the press lands on the button. In addition, the entire application uses voice to guide the user and to give feedback after each step. Voice is also used as an alert to warn the user if the capturing process was not successful. All of these voice messages, which accompany every step of the application, are in the local language (Arabic). The audio feature was added to help both illiterate and visually impaired users understand their current situation and the actions they need to take. When the user launches the app, he/she hears a 7-second audio message telling him/her to go to the bottom of the screen and press the button, which launches the device camera. When the camera screen opens, another voice guide informs the user that he/she should point the phone at the required text. This message also states the required distance (a hand span) between the text and the device for the best capture. If the text is captured and extracted, feedback is played to confirm that the process succeeded; otherwise, a sound warns the user to try again. In addition, the app provides real-time translation of texts captured in English into Arabic. Finally, the user hears the extracted text in their native language. Figure 4 shows the implementation of the reader application on different surfaces.
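The “press anywhere at the bottom” design amounts to a very forgiving touch target: only the vertical position matters. The Python sketch below expresses that hit test. The `button_fraction` parameter (the share of the screen height the button occupies) is hypothetical, because the paper does not give the button’s size; `touch_y` is measured from the top of the screen, as Android touch coordinates are.

```python
def hits_bottom_button(touch_y: float, screen_height: float,
                       button_fraction: float = 0.25) -> bool:
    """Return True if a touch lands on a full-width button at the bottom.

    touch_y is measured in pixels from the top of the screen. The x coordinate
    is not needed because the button spans the whole width. button_fraction is
    a hypothetical parameter: the share of the screen height the button covers.
    """
    if not 0 < button_fraction <= 1:
        raise ValueError("button_fraction must be in (0, 1]")
    if not 0 <= touch_y <= screen_height:
        return False  # outside the screen
    return touch_y >= screen_height * (1 - button_fraction)


# On a 2000-px-tall screen with the default fraction, the button starts at y = 1500.
print(hits_bottom_button(1900, 2000))  # True
print(hits_bottom_button(800, 2000))   # False
```

With a full-width target, a blind user only has to reach the lower part of the screen rather than locate a specific point, which is in line with the participants’ wish for few, large buttons in a fixed position.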

Screens from the reader application after implementation.

5 Evaluation of the application

A usability evaluation study was conducted after the application had been built and tested. The aim of this step was to determine the effectiveness of the developed app and to explore whether it met the requirements of both user groups identified in the requirements elicitation study. Moreover, during the evaluation sessions, the authors could observe the performance of both user groups (illiterate and visually impaired) and measure the differences between them. Lab-based sessions were carried out with a number of illiterate and visually impaired people to capture their impressions and gather their feedback while they were using the app.

5.1 Participant sample

A total of 35 individuals were invited to use the application and give their feedback. Most of them (26 people) had participated in the first study (the requirements elicitation study), whereas 9 were new participants. As in the initial study, the evaluation study included both visually impaired participants (7) and illiterate participants (28). Their ages ranged from 23 to 77 years, with a mean of 39. In terms of smartphone experience, all illiterate participants had used smartphones for basic functions, such as making calls, sending and receiving voice messages, and listening to music. In contrast, only 3 of the visually impaired participants had used smartphones for these purposes. None of the 35 participants had used a smartphone as a reading tool.

5.2 Evaluation procedure

The evaluation session began with the interviewer greeting the participants and then explaining their ethical rights and the goal of the experiment. Participants were also told that they could withdraw from the experiment at any time and were encouraged to express their honest opinions about the application. Next, the interviewer asked participants several questions about their profile, such as their age and experience with smart devices. After this information had been collected, each participant was asked to accomplish the following task:

“Read a piece of printed text, which contains words and numbers and the special symbol ‘%’, using the app.”

Meanwhile, the interviewer tracked the user’s behavior and took notes on several aspects, such as the time the user needed to complete the reading process, whether the user encountered any difficulties when using the app, and whether the user could complete the task without assistance. At the end of each session, participants were asked several questions about their satisfaction, covering, for example, usability, learnability, and willingness to use the app in the future. Responses were recorded on a Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). Figure 5 shows a picture from an evaluation session.

5.3 Evaluation results

The data obtained from the evaluation sessions were analyzed to assess the usability of the developed application based on three distinct aspects: efficiency, effectiveness, and satisfaction [19]. Table 4 describes the findings from the evaluation sessions.

Summary of the evaluation study findings.

| Evaluation metric | Measuring indicator | Participants | Mean | SD |
|---|---|---|---|---|
| Efficiency | Task completion time (s) | Visually impaired | 46.28 | ±2.87 |
| | | Illiterate | 33.21 | ±2.14 |
| | Learning time: number of times assistance was requested | Visually impaired | 0.42 | ±0.53 |
| | | Illiterate | 0.071 | ±0.26 |
| | Learning time: number of times the audio instructions were replayed | Visually impaired | 0.42 | ±0.53 |
| | | Illiterate | 0.107 | ±0.31 |
| Effectiveness | Completion rate | Visually impaired | 0.857 | ±0.37 |
| | | Illiterate | 1 | ±0 |
| | Error rate | Visually impaired | 0.57 | ±0.53 |
| | | Illiterate | 0.142 | ±0.35 |
| Satisfaction | Overall satisfaction with the application | Visually impaired | 4.2 | ±0.95 |
| | | Illiterate | 4.5 | ±0.69 |
| | Simplicity and ease of use | Visually impaired | 3.5 | ±0.53 |
| | | Illiterate | 4.7 | ±0.46 |
| | Willingness to use the app | Visually impaired | 4.8 | ±0.37 |
| | | Illiterate | 4.9 | ±0.26 |
| | Recommendation to others | Visually impaired | 4.5 | ±0.53 |
| | | Illiterate | 4.8 | ±0.31 |

5.3.1 Efficiency

Efficiency refers to how quickly users can complete tasks once they have become familiar with the interface’s design. In other words, the system is considered efficient when no time is wasted [20]. Two metrics were utilized in this research to assess the efficiency of the reader application [19]. The first one was task completion time, which was measured by calculating the average time spent to complete the required task. The second metric was learning time, which includes two parts: the number of times the participant requested assistance, and the frequency with which the participant listened to the audio instructions.

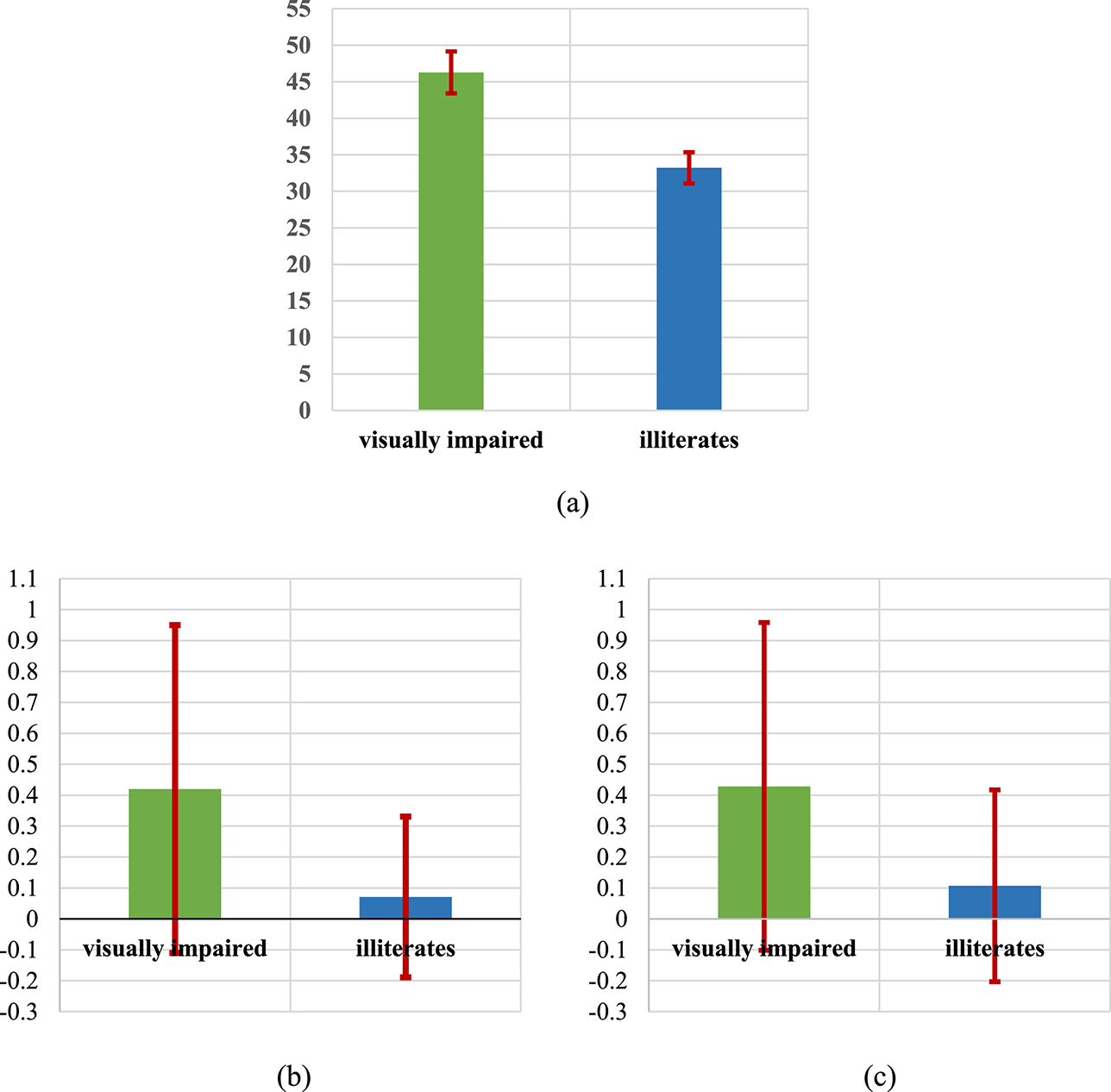

5.3.1.1 Task completion time: the average time spent to complete the task

The system interface is considered efficient when it minimizes the time required to perform a task [21]. In this research, each participant was given the same task: reading a printed text using the developed app. Participants took an average of 35.82 s to complete the task, with a standard deviation (SD) of ±5.76 s. However, there was a marked difference between the two groups: visually impaired participants took considerably longer to complete the task than illiterate participants. Blind participants took an average of 46.28 s (SD ±2.87 s), whereas illiterate participants took an average of 33.21 s (SD ±2.14 s). Figure 6 shows the average time, with SD values, taken to complete the task by visually impaired and illiterate participants. Completion times ranged from 30 s to 37 s for illiterate participants and from 43 s to 51 s for visually impaired participants. The number of participants who took longer than their group’s average to finish the task was 8 of 28 (28.6 %) for illiterate users and 3 of 7 (42.9 %) for visually impaired users. These results indicate that most participants, whether illiterate or visually impaired, used the app efficiently.
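The overall mean and SD above can be recovered from the two group summaries alone. The sketch below combines per-group (n, mean, SD) values into an overall mean and SD by adding the within-group and between-group sums of squares. It assumes the reported group SDs are sample SDs (n − 1 denominator); the function name `combine_groups` is illustrative.

```python
import math
from typing import List, Tuple


def combine_groups(groups: List[Tuple[int, float, float]]) -> Tuple[float, float]:
    """Combine per-group (n, mean, sample SD) into an overall mean and sample SD."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n
    # Within-group sum of squares, recovered from each sample SD (n - 1 denominator)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    # Between-group sum of squares: spread of the group means around the grand mean
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    overall_sd = math.sqrt((ss_within + ss_between) / (total_n - 1))
    return grand_mean, overall_sd


# Group summaries for task completion time (seconds) as reported in this section
mean, sd = combine_groups([(7, 46.28, 2.87), (28, 33.21, 2.14)])
print(f"overall mean {mean:.2f} s, SD {sd:.2f} s")
```

With the reported group values this reproduces the overall 35.82 s and ±5.76 s, which is consistent with the group SDs being sample SDs. It also shows that most of the overall spread (a between-group sum of squares of about 957 against about 173 within groups) comes from the gap between the two group means rather than from variation inside either group.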

Evaluating the app in a lab study.

The results of metrics used to measure the application’s efficiency. (a) Task completion time. (b) Number of times the participant requested assistance. (c) Number of times the participant listened to the audio instructions.

5.3.1.2 Learning time: the number of times the participant requested assistance

According to [22], efficiency can be measured by the number of times users ask for help while interacting with the system: if users can perform the tasks without assistance, the interface is considered efficient. In this study, 3 of the 7 visually impaired participants required assistance to complete the task, whereas only 2 of the 28 illiterate participants needed help with how to perform it. These results suggest that most participants found the application’s user interface simple and easy to use, although visually impaired participants needed help more often. Additionally, the voice instructions that accompany every step made it easier for participants to understand how to interact with the application and complete the task.

5.3.1.3 Learning time: the number of times the participant listened to the audio instructions

The developed application provides an Arabic voice guide at every step to tell the user what to do next. In an ideal user interface, the participant would need to listen to the vocal instructions only once, as having to listen to them repeatedly would indicate a poorly designed interface [22]. In this study, 3 of the 7 visually impaired participants (about 43 %) went back to replay the voice guide while performing the reading task. Illiterate participants, in contrast, were more confident: only 3 of the 28 (about 11 %) listened to the audio instructions again. Thus, most illiterate participants and slightly more than half of the visually impaired participants (4 of 7) performed the reading task after listening to the voice instructions only once. These findings suggest that the voice guide was clear and simple enough for participants to follow easily, and that the audio instructions contributed substantially to the application’s efficiency by guiding users through the task.

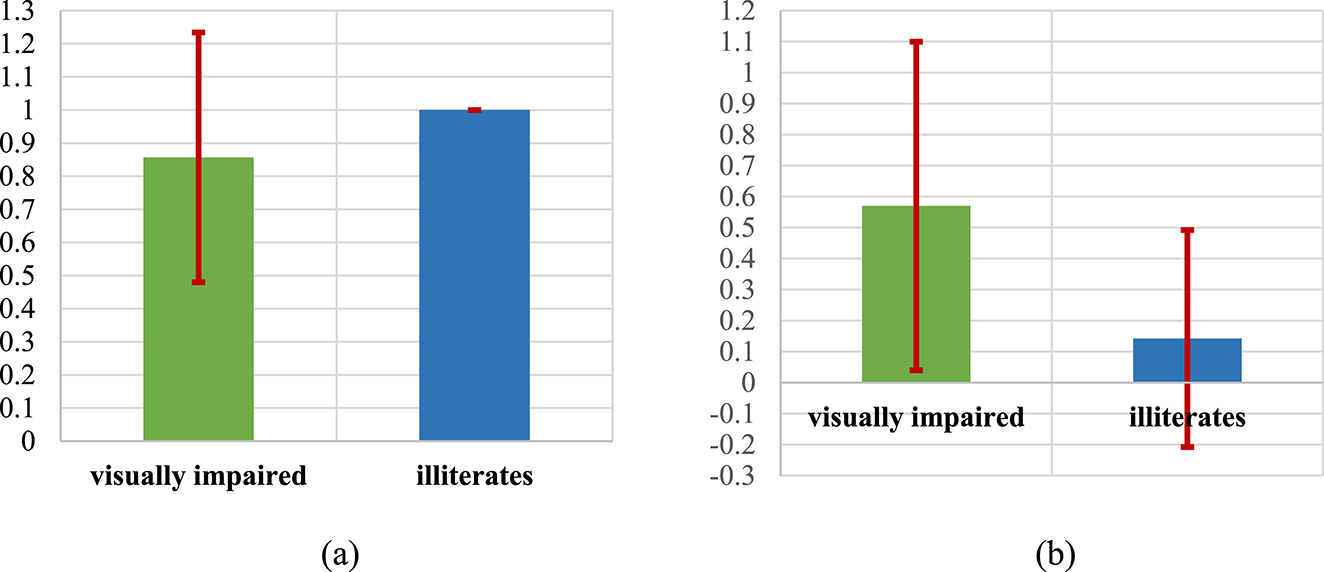

5.3.2 Effectiveness

Effectiveness was described by [19] as the degree of accuracy and completeness with which users accomplish specific goals. In this study, two fundamental indicators were used to measure the effectiveness of the reader application: the completion rate and the error rate. Figure 7 illustrates the effectiveness measurements of the application.

5.3.2.1 Completion rate

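Also known as the success rate, the completion rate is the proportion of participants who managed to complete the given task. It was calculated using the following equation:

$$\text{Completion rate} = \frac{\text{Number of participants who completed the task}}{\text{Total number of participants}} \times 100\,\%$$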

The results showed a high success rate in both groups: 6 of the 7 visually impaired participants (about 85.7 %) and all of the illiterate participants (100 %) were able to complete the reading task. This indicates that the application is highly effective for both groups of participants.

5.3.2.2 Error rate

Another primary method of measuring the effectiveness of a system is to count the mistakes or errors participants make while trying to accomplish a specific task [23]. The error rate for this study was calculated as follows:

$$\text{Error rate} = \frac{\text{Number of participants who made at least one error}}{\text{Total number of participants}} \times 100\,\%$$

In this experiment, 4 of the 7 visually impaired participants (57.14 %) made mistakes during the reading session. The errors mostly occurred while the participant was attempting to capture the text: they misjudged the distance that should be kept between the text and the device. They therefore repeated the capture after hearing the app’s warning feedback, “Capturing text was not successful”. Illiterate participants also made errors while using the app: 4 of the 28 (14.3 %) did not follow the voice instruction about keeping a hand span between the text and the device, so the device did not capture the text at the first attempt. However, the warning voice message helped them recover from this error and complete the task properly. These results show that, although participants made errors while interacting with the app, the feedback accompanying every step helped them recover from these errors and accomplish their task.
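The four binary rows of Table 4 (assistance requested, instructions replayed, task completed, error made) can be recomputed from the participant counts given in Sections 5.3.1.2 to 5.3.2.2. This sketch assumes that each indicator was coded 0 or 1 per participant, that the error rate counts participants who made at least one error (consistent with 4 of 7 = 0.57), and that Table 4 reports sample SDs; these coding choices are inferred, not stated in the text. For a 0/1 variable with k ones out of n, the sum of squared deviations is n·p·(1 − p), which gives the SD directly.

```python
import math
from typing import Tuple


def binary_indicator_stats(k: int, n: int) -> Tuple[float, float]:
    """Mean and sample SD (n - 1 denominator) of a 0/1 indicator with k ones out of n."""
    if n < 2 or not 0 <= k <= n:
        raise ValueError("need n >= 2 and 0 <= k <= n")
    p = k / n
    # Sum of squared deviations of a 0/1 variable is n * p * (1 - p)
    sd = math.sqrt(n * p * (1 - p) / (n - 1))
    return p, sd


# (indicator, group, k, n), with counts taken from the text of Sections 5.3.1.2 to 5.3.2.2
COUNTS = [
    ("assistance requested", "visually impaired", 3, 7),
    ("assistance requested", "illiterate", 2, 28),
    ("instructions replayed", "visually impaired", 3, 7),
    ("instructions replayed", "illiterate", 3, 28),
    ("task completed", "visually impaired", 6, 7),
    ("task completed", "illiterate", 28, 28),
    ("made an error", "visually impaired", 4, 7),
    ("made an error", "illiterate", 4, 28),
]

for indicator, group, k, n in COUNTS:
    p, sd = binary_indicator_stats(k, n)
    print(f"{indicator:22s} {group:18s} mean {p:.3f}  SD {sd:.4f}")
```

The computed values agree with Table 4 up to the last reported digit, which the table appears to truncate rather than round (for example, 3/7 = 0.4286 is reported as 0.42, and the completion-rate SD of 0.378 as 0.37).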

5.3.3 User satisfaction

Satisfaction is defined as users’ comfort with, and positive attitudes toward, the system [19]. User satisfaction can be measured through interviews, questionnaires, or attitude rating scales. In this study, we measured users’ satisfaction with the developed application using a Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). At the end of each session, participants were asked four questions to express their opinions about the app. The first question was, “Overall, are you satisfied with the application?”, with responses ranging from 1 (not satisfied at all) to 5 (very satisfied). The second question was, “Was the app simple and easy to use?”, with responses ranging from 1 (not at all easy) to 5 (very easy). The third question was, “Would you use the reader app in the future?”, with responses ranging from 1 (not at all willing) to 5 (very willing). The final question was, “Would you recommend this app to others?”, with responses ranging from 1 (would not recommend at all) to 5 (would strongly recommend). According to the study hypothesis, users were considered satisfied if their average rating was 2.5 or higher.

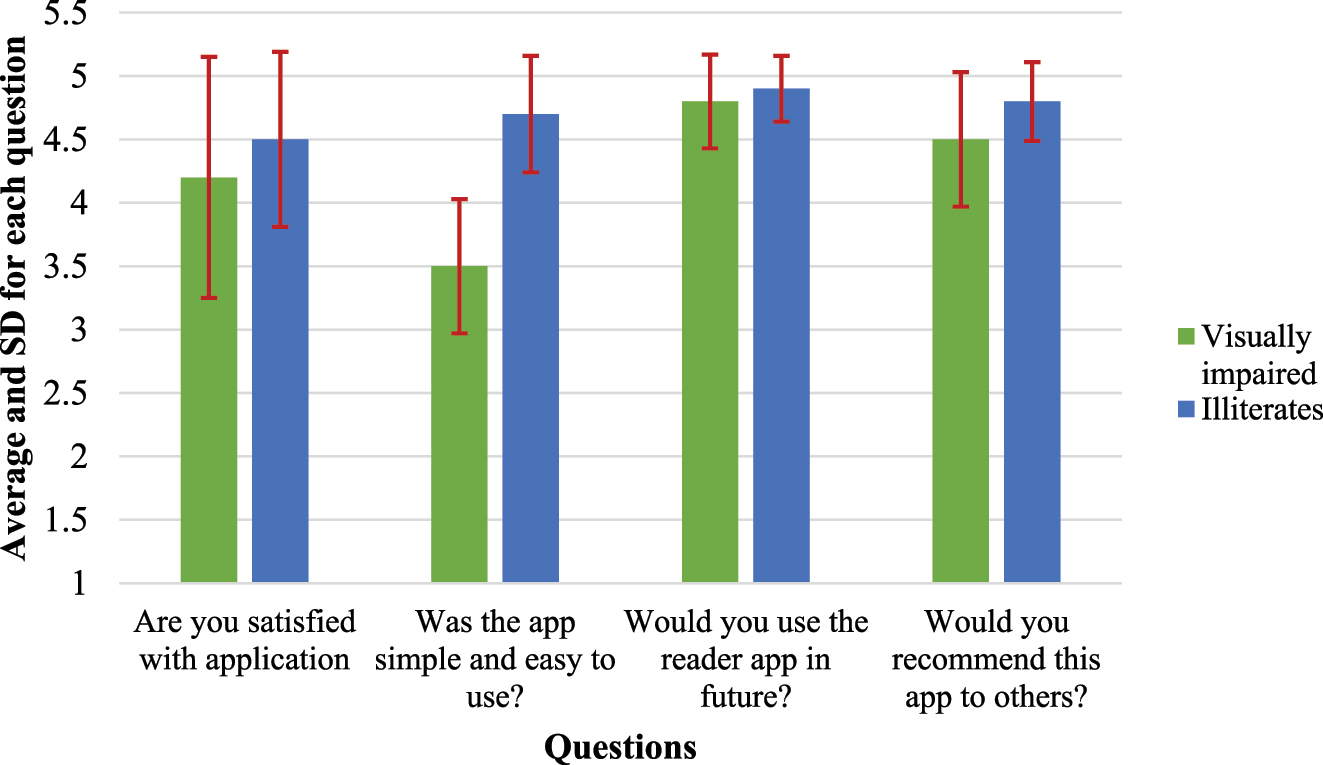

Overall, illiterate participants rated the application higher than visually impaired participants on all four satisfaction questions. The largest difference between the two groups was on question 2, “Was the app simple and easy to use?” Figure 8 illustrates the participants’ satisfaction ratings for the application.

The results of two metrics used to measure the application’s effectiveness. (a) Completion rate. (b) Error rate.

Users’ satisfaction rating on the application.

Regarding question 1, both groups of participants were highly satisfied with the reader application, with average ratings of 4.2 and 4.5 for visually impaired and illiterate users respectively. They also showed strong interest in the application and were keen to use it to help them read texts (average 4.8 for visually impaired and 4.9 for illiterate users). Moreover, illiterate participants indicated that they would advise others to use the reader app (average 4.8), and visually impaired participants largely agreed (average 4.5). Finally, the ratings for question 2 showed a gap between the two groups. While illiterate users found the application very easy to use and rated this aspect at 4.7, some visually impaired users faced difficulties when using the application, which brought their average ease-of-use rating down to 3.5. This is still above the 2.5 satisfaction threshold, suggesting that the application was acceptably easy to use for both visually impaired and illiterate users, although less so for visually impaired users.
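The comparison above can be checked directly against the group means in Table 4. The sketch uses only those reported means and the study’s 2.5 threshold; individual responses are not available, so it cannot test whether the gaps are statistically significant. The names `SATISFACTION` and `compare_groups` are illustrative.

```python
from typing import Dict, Tuple

# Group means from Table 4: (visually impaired, illiterate), on a 1-5 Likert scale
SATISFACTION: Dict[str, Tuple[float, float]] = {
    "Q1 overall satisfaction": (4.2, 4.5),
    "Q2 simple and easy to use": (3.5, 4.7),
    "Q3 willing to use in future": (4.8, 4.9),
    "Q4 would recommend to others": (4.5, 4.8),
}
THRESHOLD = 2.5  # satisfaction threshold stated in the study hypothesis


def compare_groups(ratings: Dict[str, Tuple[float, float]], threshold: float = THRESHOLD):
    """Return per-question gaps (illiterate minus visually impaired) and summary checks."""
    gaps = {q: round(illit - vi, 2) for q, (vi, illit) in ratings.items()}
    largest_gap = max(gaps, key=gaps.get)
    illiterate_higher_on_all = all(gap > 0 for gap in gaps.values())
    all_groups_satisfied = all(min(pair) >= threshold for pair in ratings.values())
    return gaps, largest_gap, illiterate_higher_on_all, all_groups_satisfied


gaps, largest, higher_on_all, satisfied = compare_groups(SATISFACTION)
print(gaps)
print("largest gap:", largest)
print("illiterate higher on all:", higher_on_all, "| all above threshold:", satisfied)
```

The largest gap (1.2 points) falls on question 2, and the illiterate group is higher on every question, matching the text. The lowest group mean, 3.5, also clears 3, the midpoint of a 1–5 scale.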

6 Discussion

In this research, a mobile-based application was designed and implemented to help two groups of people in Iraq (visually impaired and illiterate people) read texts. The app helps both groups explore what is written on different surfaces, such as paper, fabric, plastic, and wood. Moreover, the technologies used to build the app, such as OCR and real-time translation, enable it to capture different forms of text, including names, numbers, and special symbols, and to support the local native language.

First, a requirements elicitation study was conducted with both visually impaired and illiterate participants to determine which features they would like to add to or remove from the application. Similar to the results of earlier studies, including [4,21,24], our study found that the most important preference of both visually impaired and illiterate users was to build the app around audio content and to eliminate text. The later evaluation study showed that building the application around audio content helped both groups in several ways; for example, audio instructions guided users on how to use the app without needing to ask for help. This increased their sense of independence and their willingness to use more digital tools. Moreover, audio feedback after every step helped users recover from mistakes made while using the app, which increased the application’s effectiveness and helped users complete the tasks successfully.

Second, the data from the requirements elicitation sessions showed that illiterate users did not mind graphical content, especially simple and well-known symbols. Visually impaired users, in contrast, firmly rejected including any visual content in the app. Furthermore, during the requirements elicitation study, visually impaired users were concerned about interacting with a touchscreen and wondered how they could find the buttons on such screens. This issue was also raised by [12], who note that interacting with touchscreens is less efficient and less easy for blind users than using physical keys. As a result, visually impaired users would either need assistance or need a better method of interacting with touchscreens [12]. Some earlier studies, such as [5,11], addressed this problem by building physical-key systems for reading text, thereby avoiding touchscreens. However, this approach requires building new systems rather than taking advantage of existing smartphones. Therefore, in this research, we used smartphones, which are widely available and owned by many people, including blind and illiterate people [13], to develop the proposed reader system. To tackle the problem of touchscreen interaction, we reduced the number of buttons and developed an application with only one large button located at the bottom of the screen. The evaluation results suggest that this approach can considerably improve touchscreen interaction for visually impaired users.

Additionally, because most of the visually impaired and illiterate users had little or no experience with smart devices, they expected to face difficulties using and interacting with the app. However, the majority of participants in both groups performed competently when using the app. This suggests that, by following the design features identified earlier (voice guidance, auditory feedback, no text or visual content, few or no buttons, and the local native language), the developed text-reading application was efficient and effective and achieved a high level of satisfaction among both visually impaired and illiterate users.

Finally, it is worth noting that illiterate users outperformed visually impaired users on all three evaluation metrics: efficiency, effectiveness, and user satisfaction. This may indicate that some blind users, such as the participant who could not complete the reading task, need training before using the app. Training was suggested by the study participants and has been proposed by [12] as a way to make blind users aware of the available gestures and more familiar with the app interface.

7 Research limitation

The main limitation of this research is the small number of participants recruited for the evaluation study. In total, 35 individuals (7 visually impaired and 28 illiterate users) were chosen to provide feedback on the application. This might be considered a modest number, especially for the visually impaired group. However, it has been noted that 12 participants in qualitative studies and 25–30 participants in quantitative studies are sufficient to approach data saturation [25,26]. The authors therefore believe that 35 participants were sufficient to address the research objectives and produce insightful findings.

8 Conclusions

This research presents a mobile-based application that helps visually impaired and illiterate users read texts using technologies such as OCR and real-time translation. Two studies were carried out. The first was conducted with both groups of users to gather their requirements for a reader application and its design. It resulted in several design recommendations that supported both visually impaired and illiterate users, for example, voice guidance throughout the application, auditory feedback after each step, and few or no buttons.

The second study was carried out after the reader application had been developed, to assess its usability and gather users’ feedback. The evaluation findings showed that the reader application was effective and efficient for both groups of participants (visually impaired and illiterate users), since nearly all of them completed the given task quickly and with few or no errors. Another finding of the evaluation study was that visually impaired users found the application more difficult to use than illiterate users did, as their performance was weaker on both efficiency and effectiveness. However, these results could be improved by training users on how to use the app.

In addition, the application achieved a high level of user satisfaction, as users expressed a strong desire to continue using it and would recommend it to others. These findings suggest that usable applications can encourage both visually impaired and illiterate users to make greater use of modern technology and help them overcome their concerns about smart devices. In turn, this could help reduce the digital divide and increase connectivity between communities.

About the authors

Zainab Hameed Alfayez was awarded an MSc degree with distinction in Computer Science (Advanced Computer Science) from Swansea University, UK, in 2015, and a B.Sc. in Computer Science from the University of Basrah. She currently works as an instructor at the College of Computer Science & Information Technology, University of Basrah, Iraq. Her research interests focus on Human-Computer Interaction, Mobile Human-Computer Interaction, and Software Engineering.

Batool Hameed Alfayez was awarded an MSc degree in Libraries and Information (Knowledge Management) from the University of Basrah, Iraq, in 2014, and a B.Sc. in Libraries and Information from the University of Basrah. She currently works as an instructor at the College of Art, University of Basrah, Iraq. Her research interests focus on knowledge management, automated systems, and database management.

Nahla Hamad Abdul-Samad was awarded a B.Sc. in Computer Information Systems from the University of Basrah, Iraq. She currently works as an assistant instructor at the College of Computer Science & Information Technology, University of Basrah, Iraq. Her research interests focus on general Computer Science.

References

1. Friscira, E., Knoche, H., Huang, J. Getting in touch with text: designing a mobile phone application for illiterate users to harness SMS. In 2nd ACM Symposium on Computing for Development; ACM: Atlanta, Georgia, 2012; p. 5. https://doi.org/10.1145/2160601.2160608.

2. Orbis. Global Blindness Was Slowing Prior to Pandemic Study Reveals [Online]. https://www.orbis.org/en/news/2021/new-global-blindness-data (accessed Sep 01, 2023).

3. Islam, M. N., Inan, T. T., Promi, N. T., Diya, S. Z., Islam, A. K. M. N. Design, implementation, and evaluation of a mobile game for blind people: toward making mobile fun accessible to everyone. In Information and Communication Technologies for Humanitarian Services; The Institution of Engineering and Technology: Herts, United Kingdom, 2020. https://doi.org/10.1049/PBTE089E_ch13.

4. Alfayez, Z. H. Design and implement a mobile-based system to teach traffic signs to illiterate people. i-com 2022, 21, 353–364. https://doi.org/10.1515/icom-2022-0029.

5. Rajbongshi, A., Islam, I., Rahman, M., Majumder, A., Islam, E., Biswas, A. A. Bangla optical character recognition and text-to-speech conversion using Raspberry Pi. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 274–278. https://doi.org/10.14569/IJACSA.2020.0110636.

6. Punith, A., Manish, G., Sai Sumanth, M., Vinay, A., Karthik, R., Jyothi, K. Design and implementation of a smart reader for blind and visually impaired people. In 3rd International Conference on “Advancements in Aeromechanical Materials for Manufacturing”: icaamm; AIP Conference: Hyderabad, India, Vol. 2317, 2021. https://doi.org/10.1063/5.0036140.

7. Mukhiddinov, M., Cho, J. Smart glass system using deep learning for the blind and visually impaired. Electronics 2021, 10, 2756. https://doi.org/10.3390/electronics10222756.

8. Lakshmi, S. S. A., et al. Telly-filly a form filling assistant for Illiterate and visually impaired. In 2021 International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation (ICAECA); IEEE: Coimbatore, India, 2021; pp. 1–6. https://doi.org/10.1109/ICAECA52838.2021.9675740.

9. Sundar, C., Abinaya, O., Ajithkumar, V. An optical character recognition framework based newspaper reader application for blind. In First International Conference on Combinatorial and Optimization; EAI: Chennai, India, 2021.

10. Laviniu, E., Ioan, G., Virgil, T., Péter, S., Alexandru, G. OCR application on smartphone for visually impaired people. J. Electr. Electron. Eng. 2014, 7, 153–156.

11. Prabha, R., Razmah, M., Saritha, G., Asha, R., Senthil, G. A., Gayathiri, R. Vivoice – reading assistant for the blind using OCR and TTS. In 2022 International Conference on Computer Communication and Informatics (ICCCI); IEEE: Coimbatore, India, 2022; pp. 1–7. https://doi.org/10.1109/ICCCI54379.2022.9740877.

12. Rodrigues, A., Nicolau, H., Montague, K., Guerreiro, T., Guerreiro, J. Open challenges of blind people using smartphones. Int. J. Hum. Comput. Interact. 2020, 36, 1605–1622. https://doi.org/10.1080/10447318.2020.1768672.

13. Ahmed, M. A., Islam, M. N., Jannat, F., Sultana, Z. Towards developing a mobile application for illiterate people to reduce digital divide. In International Conference on Computer Communication and Informatics (ICCCI): Coimbatore, India, 2019; pp. 1–5. https://doi.org/10.1109/ICCCI.2019.8822036.

14. Kuechler, W., Vaishnavi, V. Promoting relevance in IS research: an informing system for design science research. Informing Sci. Int. J. an Emerg. Transdiscipl. 2011, 14, 125–128. https://doi.org/10.28945/1498.

15. Biernacki, P., Waldorf, D. Snowball sampling: problems and techniques of chain referral sampling. Sociol. Methods Res. 1981, 10, 141–163. https://doi.org/10.1177/004912418101000205.

16. Alfayez, Z. H. Designing educational videos for university websites based on students’ preferences. Online Learning 2021, 25, 280–298. https://doi.org/10.24059/olj.v25i2.2232.

17. Biggerstaff, D., Thompson, A. R. Interpretative phenomenological analysis (IPA): a qualitative methodology of choice in healthcare research. Qual. Res. Psychol. 2008, 5, 173–183. https://doi.org/10.1080/14780880802314304.

18. Smith, J. A., Jarman, M., Osborn, M. Interpretative phenomenological analysis. In Qualitative Health Psychology: Theories and Methods; SAGE: Los Angeles, 2009; pp. 53–80.

19. Frøkjær, E., Hertzum, M., Hornbæk, K. Measuring usability: are effectiveness, efficiency, and satisfaction really correlated? In SIGCHI Conference on Human Factors in Computing Systems; Association for Computing Machinery: The Hague, The Netherlands, 2000; pp. 345–352.

20. Bevan, N., Carter, J., Harker, S. ISO 9241-11 revised: what have we learnt about usability since 1998? In Human-Computer Interaction: Design and Evaluation; Kurosu, M., Ed.; Springer: Cham, 2015; pp. 143–151. https://doi.org/10.1007/978-3-319-20901-2_13.

21. Khan, I. A., Hussain, S. S., Shah, S. Z. A., Iqbal, T., Shafi, M. Job search website for illiterate users of Pakistan. Telemat. Inform. 2017, 34, 481–489. https://doi.org/10.1016/j.tele.2016.08.015.

22. Islam, M. N., Ahmed, M. A., Islam, A. K. M. N. Chakuri-bazaar: a mobile application for illiterate and semi-literate people for searching employment. Int. J. Mob. Hum. Comput. Interact. 2020, 12, 22–39. https://doi.org/10.4018/IJMHCI.2020040102.

23. Nielsen, J., Levy, J. Measuring usability: preference vs. performance. Commun. ACM 1994, 37, 66–75. https://doi.org/10.1145/175276.175282.

24. Doiphode, R., Ganore, M., Garud, A., Ghuge, T., Kaur, P. Be my eyes: android voice application for visually impaired people. 2017. https://doi.org/10.13140/RG.2.2.12307.48164.

25. Dworkin, S. L. Sample size policy for qualitative studies using in-depth interviews. Arch. Sex. Behav. 2012, 41, 1319–1320. https://doi.org/10.1007/s10508-012-0016-6.

26. Fugard, A. J. B., Potts, H. W. W. Supporting thinking on sample sizes for thematic analyses: a quantitative tool. Int. J. Soc. Res. Methodol. 2015, 18, 669–684. https://doi.org/10.1080/13645579.2015.1005453.

© 2023 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.

