Abstract

Despite the various advantages of online surveys, such as their cost-effectiveness and broad reach, the infiltration of bots can result in data distortion, eroding trust and hindering effective decision-making. Identifying bot responses within survey data is paramount, and epidemiologic and public health researchers can utilise various tactics, such as email authentication and scrutiny of response times, to detect fraudulent responses. This paper describes the authors’ experience of bot spamming in an online survey, which skewed our findings. We describe the actions taken to detect and invalidate bot responses within survey data and discuss potential forms of bot prevention. To detect fraudulent responses, the authors investigated the time taken to complete the survey, recruitment rates, invalid email addresses, and invalid free-format responses. Supplementary strategies, such as data validation methods and monitoring tools, can complement reCAPTCHA systems to alleviate the adverse effects of bot activity on survey data accuracy. However, employing other methods that require challenges or additional questions may reduce the recruitment rate and deter potential participants. Given the advancing sophistication of bots, ongoing innovation in authentication techniques is imperative to protect the dependability and accuracy of survey data in the future.

Background

Online surveys are important in public health research and practice as they can effectively and efficiently collect information regarding health behaviours, attitudes, and outcomes from various populations, facilitating the implementation of interventions, evidence-gathering, and policy-making decisions [1]. Online surveys offer multiple benefits for researchers and participants, including cost-effectiveness, wider global reach, participant convenience, faster responses, reduced methodological bias, automation and customisation (e.g., branching logic), and anonymity [1], [2]. Hard copy surveys have several limitations, including the cost of printing, increased labour for staff and participants, and low response rates. Given the advantages of online surveys, they are now common practice across epidemiologic and public health research [1]. The Technology Acceptance Model (TAM) is a widely utilised theoretical framework that explains and predicts user acceptance of new technologies based on perceived usefulness and ease of use. These beliefs impact attitudes toward technology use [3]. Extended models (e.g., TAM2, Unified Theory of Acceptance and Use of Technology (UTAUT)) incorporate social influence, experience, and task relevance [4].

However, the spamming of online surveys using bots has become an increasingly prevalent problem, posing significant challenges for researchers relying on authentic survey data for evidence-gathering and decision-making [5]. These bots, which are automated programs designed to perform tasks on the internet, including imitating human behaviour [2], threaten the integrity of electronic survey research. When used to manipulate online survey responses, they skew results, compromise data integrity, and undermine the validity of research findings [5]. This distortion can mislead researchers who rely on survey results to understand end-user preferences or public opinion and inform policies or strategies [5], [6]. Dealing with bot-generated spam consumes valuable resources, including time and money, to identify and filter out fraudulent responses [5]. Recent research has indicated that bot activity has accelerated since the COVID-19 pandemic, with recent examples in online survey studies [7], [8], [9].

Bot spamming can considerably affect a study’s internal validity (i.e., Type I and II errors) and reliability, often impacting variance [5], [8]. However, there is limited evidence on the size of the bias that fraudulent survey responses introduce to studies. Researchers and survey administrators must implement security measures to mitigate the impact of bot activity [10]. If the data are tainted by bot spamming, decisions made based on such data may be flawed, leading to ineffective outcomes or strategies that do not accurately address the needs of the target audience [1], [5], [10]. This paper will address several ways epidemiologic and public health researchers can prevent and detect bots in online surveys. These strategies include Completely Automated Public Turing test to tell Computers and Humans Apart (CAPTCHA), email validation, use of open-ended questions, ‘free-format’ fields, and machine learning.

Possible motivations for bot spamming in online surveys

Few researchers have reported on the impact of bot spamming [5], [6], [10], despite the significant and catastrophic effect it has on survey methods and research integrity. Moreover, there is limited evidence establishing why bots are created and deployed to spam online surveys. It is likely that bots are used to collect incentives or to purposefully sabotage the data collected [5]. One recent report on bot activity speculated that bots may use artificial intelligence to target keywords or symbols, such as ‘$’, to seek incentives [8]. One hypothesis is that surveys on controversial topics may attract bot sabotage. It is likely that survey links are identified by spammers or bots when shared publicly on social media sites [5]. Bots can be programmed to automatically fill out survey forms, often utilising scripts or specialised software [2], [5]. In certain instances, bots may be deployed to manipulate survey outcomes to favour a particular agenda or viewpoint [2], [5]. This may involve providing biased or deceitful responses to influence the survey’s outcome [5], [6].

Our experience – evidence of bot invasion

We conducted a project on the use of microarray patches (MAPs) for vaccinations across Australia, Canada, the United Kingdom, and New Zealand. This study was approved by the Western Sydney Local Health District ethics committee (ref: 2023/ETH00705). The protocol for this study was previously published [11]. MAPs are a novel intradermal vaccine delivery technology currently undergoing clinical trials [12]. Compared to injection, MAPs have potential for enhanced thermostability, minimal pain, no needle-stick injuries, convenience, and possible self or lay administration [13], [14]. We aimed to recruit people online from the following groups: the general public, MAP recipients, clinicians, and parents. Participants were required to complete two surveys at least two weeks apart by providing a valid email address (required to receive the invitation for the second survey). We collected demographic data at baseline, including country of residence and postal code. Participants were recruited using e-newsletters, group email invitations, and social media (i.e., Facebook and X [formerly Twitter]).

Recognising bots in survey data

We first suspected fraudulent responses due to a considerable increase in the recruitment rate within 1 h. This alerted us to an abnormality, and we then took additional steps to determine whether these responses were likely from bots. We detected fraudulent survey responses using various sources, including email verification, invalid postal codes for the country selected, time taken to complete surveys, and rare group selections (Table 1).

Table 1: Example demographic data from suspected bots.

| Participant ID | Email address | Group selection | Age, years | Country of residence | First Nations | Postal code |
|---|---|---|---|---|---|---|
| 761 | XxxxXxxxxxxX@zoho.com | Someone who has received or experienced a vaccine patch | 42 | Australia | Yes | 36092 |
| 762 | XxxxxxxXxxxx##@gmail.com | Someone who has received or experienced a vaccine patch | 30 | Australia | Yes | 33173 |
| 763 | XxxxxXxxxxxXx@zoho.com | Someone who has received or experienced a vaccine patch | 50 | Australia | Yes | 18252 |
| 764 | Xxxxxxxxxxx####@gmx.com | Someone who has received or experienced a vaccine patch | 42 | Australia | Yes | 67401 |
| 765 | Xxxxxxxxx####@gmx.com | Someone who has received or experienced a vaccine patch | 43 | Australia | Yes | 31602 |

‘X’ represents a letter; ‘#’ represents a number.

Criteria for detecting bots in this study: Respondents had to meet at least one of the following: (1) an unusual/unexpected increase in recruitment rates or (2) a completion time that was under 1 min or over 10 min, and two of the following: (1) an invalid email address as evidenced by undeliverable email receipt/no response to follow up survey, (2) an invalid postcode, or (3) chose a rare group option (i.e., someone who has received a vaccine patch). A summary of responses that met the criteria is displayed in Table 2.

Table 2: Summary of criteria for detecting suspected bots.

| Criterion | Human (n=375) | Bot (n=364) |
|---|---|---|
| Criteria 1 | | |
| Time taken to complete survey (<1 min or >10 min) | 119 (31.7 %) | 360 (98.9 %) |
| Unexplained increase in recruitment rate | 283 (75.5 %) | 364 (100 %) |
| Criteria 2 | | |
| Invalid email address | 0 (0 %) | 188 (51.6 %) |
| Invalid postcode | 24 (6.4 %) | 362 (99.5 %) |
| Rare group selection | 305 (81.3 %) | 364 (100 %) |

Recruitment rates: The usual daily recruitment rate was 10 or fewer. Any day on which recruitment exceeded 10 triggered further investigation. For example, in approximately 1 h we had 350 ‘participants’ recruited.
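The recruitment-rate check described above could be automated along the following lines. This is a minimal sketch rather than the procedure used in the study: the function name `flag_recruitment_spikes` and the use of submission timestamps are assumptions for illustration, and the timestamps are synthetic.

```python
from collections import Counter
from datetime import datetime, timedelta

# From the text: more than 10 recruits in one day triggers further investigation.
DAILY_THRESHOLD = 10


def flag_recruitment_spikes(timestamps, threshold=DAILY_THRESHOLD):
    """Return {date: count} for every day whose response count exceeds the threshold."""
    per_day = Counter(ts.date() for ts in timestamps)
    return {day: n for day, n in sorted(per_day.items()) if n > threshold}


# Synthetic timestamps for illustration only (not study data).
quiet_day = [datetime(2024, 1, 1, 9) + timedelta(hours=i) for i in range(3)]
spike_day = [datetime(2024, 1, 2, 14) + timedelta(minutes=i) for i in range(12)]
print(flag_recruitment_spikes(quiet_day + spike_day))
```

A flagged day is only a prompt for closer inspection; genuine recruitment can also spike, for example after a newsletter is sent.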

Time taken to complete survey: Any survey that was completed in 1 min or less or over 10 min was flagged for further investigation.

Invalid email addresses: Follow-up surveys were automatically sent in REDCap to participants two weeks after their first response. Invitations sent to invalid email addresses bounced back; these addresses could therefore be excluded from further involvement in the study and were investigated as potential bots at this point.

Invalid postal codes for the country selected: Given the diversity of countries and the format of postcodes, this was a free-format field. The validity of postcodes was checked relative to the country of residence selected. For example, almost all Australian postcodes consist of four numbers (one territory has three numbers). All suspected bot responses contained postcodes with five numbers.
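A postcode check of this kind could be sketched as below. The Australian rule follows the description above (three or four digits); the patterns for New Zealand and Canada are simplified assumptions added for illustration and are not full postal standards, and the names `POSTCODE_PATTERNS` and `postcode_looks_valid` are hypothetical.

```python
import re

# Australia follows the text (three or four digits).
# The other patterns are simplified assumptions, not complete postal rules.
POSTCODE_PATTERNS = {
    "Australia": re.compile(r"\d{3,4}"),
    "New Zealand": re.compile(r"\d{4}"),
    "Canada": re.compile(r"[A-Za-z]\d[A-Za-z] ?\d[A-Za-z]\d"),
}


def postcode_looks_valid(country, postcode):
    """Return True/False if a rule exists for the country, or None if no rule is defined."""
    pattern = POSTCODE_PATTERNS.get(country)
    if pattern is None:
        return None  # no rule available: leave for manual review
    return pattern.fullmatch(postcode.strip()) is not None


# The five-digit postcode from Table 1 fails the Australian rule.
print(postcode_looks_valid("Australia", "36092"))  # False
print(postcode_looks_valid("Australia", "2145"))   # True
```

A format check only confirms that a postcode is plausible for the country; it does not confirm that the postcode exists.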

Rare group selection: Two fields were considered for this criterion: exposure to vaccine patches and identifying as First Nations. Responses that selected ‘someone who has received a vaccine patch’ were considered suspected bots, as this is currently rare while the technology is in early clinical trials. Likewise, responses that selected ‘yes’ to identifying as First Nations (e.g., Aboriginal and Torres Strait Islander, Inuit, Métis, or Māori) were considered suspected bots; in Australia, for example, Aboriginal and Torres Strait Islander people represent 3.8 % of the population [15].
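Taken together, the decision rule stated in the criteria (at least one Criteria 1 flag and at least two Criteria 2 flags) could be expressed as follows. This is a sketch under stated assumptions: the `Response` fields and the function `is_suspected_bot` are hypothetical names, and each flag is assumed to have been derived beforehand from the checks described above.

```python
from dataclasses import dataclass


@dataclass
class Response:
    # Hypothetical field names; each flag is assumed to be computed beforehand.
    in_recruitment_spike: bool   # recruited during an unusual increase
    completion_minutes: float    # time taken to complete the survey
    invalid_email: bool          # bounced or no response to follow-up
    invalid_postcode: bool       # postcode invalid for the selected country
    rare_group: bool             # rare group option selected


def is_suspected_bot(r):
    """Apply the rule: at least one Criteria 1 flag AND at least two Criteria 2 flags."""
    unusual_time = r.completion_minutes < 1 or r.completion_minutes > 10
    criteria_1 = r.in_recruitment_spike or unusual_time
    criteria_2_count = sum([r.invalid_email, r.invalid_postcode, r.rare_group])
    return criteria_1 and criteria_2_count >= 2


# Illustrative records only (not study data).
suspect = Response(True, 0.5, False, True, True)
genuine = Response(True, 5.0, False, False, True)
print(is_suspected_bot(suspect), is_suspected_bot(genuine))  # True False
```

Requiring several flags at once means that no single characteristic, such as a rare group selection, is enough on its own to exclude a response.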

Impact of bots on study results

The inclusion of data generated by suspected bots has the potential to bias the results of one’s research. The size and direction of the bias are likely to vary for each research project. In our study, we saw some significant differences between the data generated by suspected bots and that of genuine participants, including (but not limited to) ‘participant’ demographics and main outcomes. After applying our criteria, a total of 364 suspected bot responses and 375 human responses were identified. All suspected bots selected ‘yes’ to identifying as First Nations (e.g., Aboriginal and Torres Strait Islander, Inuit, Métis, or Māori). In Australia, Aboriginal and Torres Strait Islander people represent 3.8 % of the population [15]. This would have created a selection bias in the groups that bots spammed (i.e., MAP recipients and Aboriginal and Torres Strait Islander people).

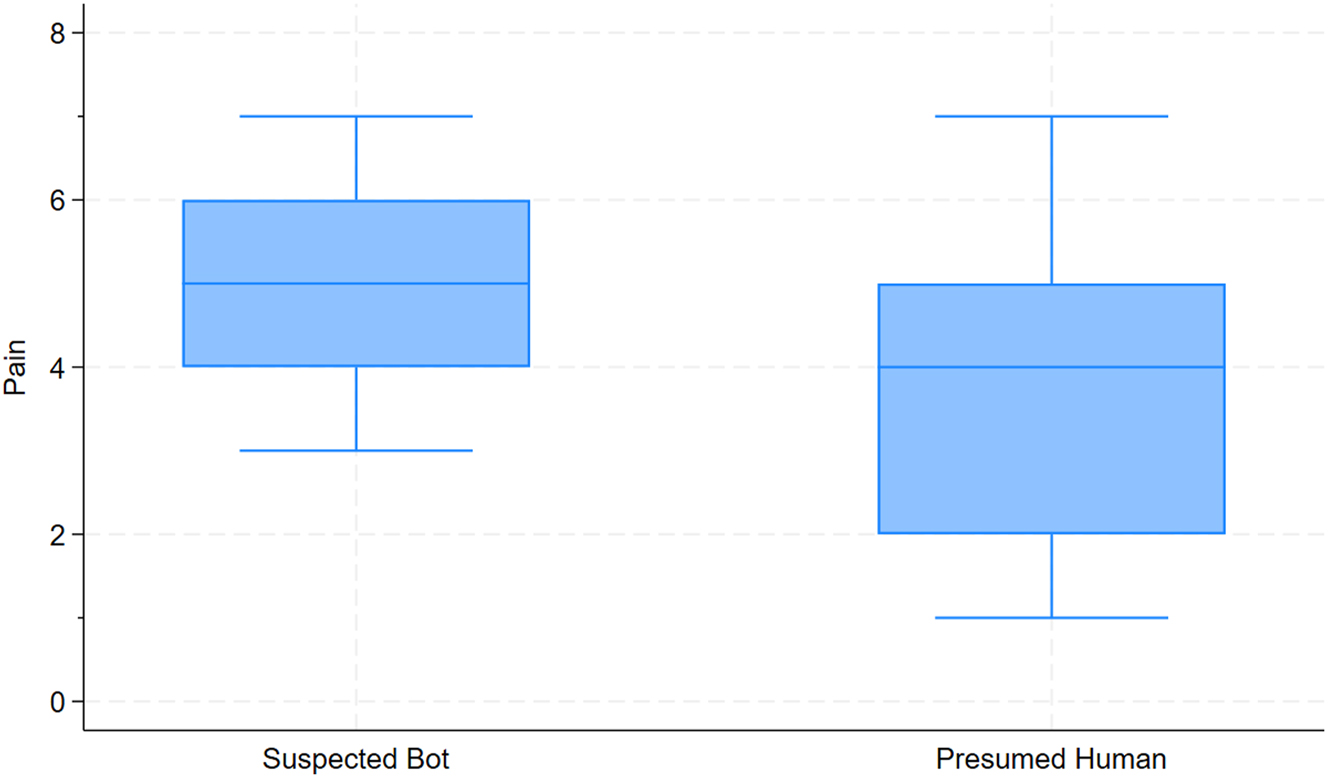

We performed a preliminary analysis using an independent samples t-test comparing human and suspected bot responses to the question “I believe the vaccine patch will be more painful than an injection” on a 7-point Likert scale (with higher scores suggesting a more painful perception). The differences between bot and human responses are illustrated in a box plot in Figure 1. Humans’ average pain perception was 3.78 (95 % CI: 3.61–3.95) and suspected bots’ average pain perception was 4.88 (95 % CI: 4.73–5.02). The estimated difference in pain perception between bots and humans was 1.10, with a 95 % confidence interval of 0.88–1.32 (t(726) = 9.71, p<0.001). If we consider all participants (including bots) (mean = 4.33, 95 % CI: 4.21 to 4.45) compared to human respondents (mean = 3.77, 95 % CI: 3.60 to 3.94), there was a mean difference of 0.55 (95 % CI: 0.35 to 0.76; t(1,089) = 5.29, p<0.001). Along with reaching statistical significance, this may also be a clinically important difference.

Figure 1: Box plot of pain perceptions between suspected bots and presumed humans on a 7-point Likert scale.
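For readers who want to run a similar comparison on their own data, the independent samples t-test can be sketched with the standard library alone. This assumes the equal-variance (pooled) form, which is consistent with the integer degrees of freedom reported above but is not confirmed by the text; the function name `independent_t` and the Likert scores below are illustrative, not study data.

```python
from math import sqrt
from statistics import mean, variance


def independent_t(group_a, group_b):
    """Pooled-variance t-test: returns (mean difference b - a, t statistic, degrees of freedom)."""
    na, nb = len(group_a), len(group_b)
    df = na + nb - 2
    # Pooled sample variance, weighting each group by its degrees of freedom.
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / na + 1 / nb))
    diff = mean(group_b) - mean(group_a)
    return diff, diff / standard_error, df


# Synthetic 7-point Likert scores for illustration only.
humans = [3, 4, 4, 3, 5, 4, 3, 4]
bots = [5, 5, 4, 6, 5, 4, 5, 6]
diff, t_stat, df = independent_t(humans, bots)
print(f"difference = {diff:.2f}, t({df}) = {t_stat:.2f}")
```

The p-value is then read from a t distribution with `df` degrees of freedom, which a statistics package would normally provide.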

Bot prevention strategies

Bot prevention using reCAPTCHA

CAPTCHA is perhaps one of the most feasible options to prevent bots in online surveys and can be embedded in survey platforms (e.g., REDCap and Qualtrics) [16]. Implementing CAPTCHA or other human verification mechanisms can help differentiate between human respondents and bots [17]. These systems require users to perform tasks that are easy for humans but challenging for automated bots to complete [10]. reCAPTCHA, a Google technology, confirms a user’s human identity and shields websites from automated spam and abuse. Initially conceived by von Ahn and colleagues [17], it was acquired by Google in 2009. These challenges commonly involve deciphering distorted text, selecting specific images from a set, or completing tasks requiring human cognitive abilities [18].

As time has progressed, reCAPTCHA has advanced to enhance user-friendliness and security [16], [17]. Notably, reCAPTCHA introduced machine learning algorithms to assess user behaviour and assign a risk score, eliminating the need for traditional CAPTCHA challenges [17]. reCAPTCHA is deeply integrated into various online platforms, including websites, online forms, and mobile applications [17]. It provides a robust defence against spam, abuse, and unauthorised access attempts, thus preserving the integrity and security of online surveys [16], [17]. Despite its effectiveness, reCAPTCHA has encountered criticism and controversy, particularly regarding its accessibility for users with disabilities and concerns raised by public groups over user privacy [19], [20]. It may also produce false negatives (failing to detect bots) and false positives (incorrectly identifying humans as bots) [21]. Google has addressed these concerns by improving accessibility features and minimising data collection practices, using a score based on suspicion to avoid challenging the user. reCAPTCHA v3, or ‘invisible’ reCAPTCHA, is designed to remove the need to interrupt users by running analyses in the background to detect patterns of bot activity on the web [22]. This could help prevent the exclusion of people with accessibility issues or disabilities that make reCAPTCHA a barrier to participation, thus reducing selection bias and increasing generalisability and external validity. However, there is a delicate balance between user privacy, anonymity, and accessibility. Thus, while reCAPTCHA v3 enhances usability and inclusivity (e.g., for people with visual impairment), it does so by collecting more granular user data, which may compromise privacy; in contrast, traditional CAPTCHA methods, though more intrusive, limit data collection and better preserve user anonymity.
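For a self-hosted survey form, the score-based approach of reCAPTCHA v3 could be applied on the server roughly as sketched below. Platforms such as REDCap and Qualtrics handle this step internally, so the sketch is relevant only to custom forms. The function names and the 0.5 cut-off are assumptions for illustration; an appropriate threshold depends on the survey and should be chosen by the research team.

```python
import json
import urllib.parse
import urllib.request

# Google's server-side verification endpoint for reCAPTCHA tokens.
VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def verify_token(secret_key, token):
    """POST the client token to the verification endpoint and return the parsed JSON reply."""
    data = urllib.parse.urlencode({"secret": secret_key, "response": token}).encode()
    with urllib.request.urlopen(VERIFY_URL, data=data, timeout=10) as resp:
        return json.load(resp)


def is_likely_human(result, min_score=0.5):
    """Accept only successful verifications whose score meets the chosen threshold.

    The 0.5 default is an assumed cut-off, not a recommendation from the text.
    """
    return bool(result.get("success")) and result.get("score", 0.0) >= min_score


# Example replies shaped like the v3 JSON response (values are made up).
print(is_likely_human({"success": True, "score": 0.9}))  # True
print(is_likely_human({"success": True, "score": 0.1}))  # False
```

Because a low score can also be assigned to a genuine participant, it may be safer to route low-scoring responses to manual review than to reject them outright.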

Alternative bot prevention strategies

Data validation techniques such as IP address tracking (determining a user’s location), browser fingerprinting (a method that captures a user’s data through web browsing activity), and response consistency checks can help identify suspicious patterns indicative of bot activity [1], [23]. Employing monitoring and filtering tools capable of detecting and blocking bot-generated responses in real time can help mitigate the impact of spam on survey data quality [5]. Limiting anonymity in surveys by requiring users to sign in with verified accounts or providing incentives tied to legitimate user profiles can deter bots seeking to exploit the anonymity of online surveys [23], but may also reduce the recruitment rate and deter potential participants, especially those from culturally and linguistically diverse populations or with low digital and data literacy.

Lessons learned and recommendations

Implications for epidemiologic research

Bots have the potential to introduce selection, confounding and measurement bias into research, as well as affecting the generalisability of findings. As demonstrated in our data, had we failed to exclude the bot-generated responses, we would have had an inflated proportion of Indigenous participants, all of whom were allocated to one treatment arm, thus introducing selection bias and confounding. Additionally, the main results were biased, showing greater harms for the treatment when bot responses were included in the analysis. Including bots would also have inflated the sample size, resulting in underestimated variance and overly narrow confidence intervals.

Use of reCAPTCHA or human verification

It is highly recommended that researchers use reCAPTCHA to reduce the additional labour and the potential risk of inaccurate survey data, especially for surveys that only require a single response. Many reputable survey administration platforms, such as REDCap or Qualtrics, have reCAPTCHA functions that can be activated easily.

Identifying bots in collected data

Even if attempts have been made to prevent bots from completing a survey, the following strategies may help in identifying and excluding potential bot data from a dataset. Treating First Nations status as an indicator of suspected bots may unintentionally exclude genuine responses and contribute to the underrepresentation of Indigenous participants. It was therefore important that this indicator was considered together with other bot-identifying criteria to best avoid excluding genuine responses.

Collect email addresses for all participants

Even if a cross-sectional survey is being conducted and no further follow-up is required, sending a ‘thank you for participating’ email may assist in identifying potential bots. However, some sophisticated bots may use disposable email addresses [5].

Open-ended questions

Including open-ended questions, such as “Can you explain your preference for MAP or injection?”, may allow researchers to identify genuine responses. These questions should be concise to avoid burdening and discouraging participants. Analysing the responses may require additional resources, but natural language processing tools could assist [24].
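Before turning to full natural language processing, a simple first-pass screen of open-ended answers could look like the sketch below. The two heuristics (very short answers and answers repeated verbatim across respondents) are assumptions chosen for illustration and are not the method used in this study; the function name and thresholds are hypothetical.

```python
from collections import Counter


def normalise(text):
    """Lower-case the text and collapse whitespace so trivial variations compare equal."""
    return " ".join(text.lower().split())


def flag_free_text(answers, min_words=3, max_repeats=2):
    """answers maps participant ID to free text; returns {participant ID: [reasons]}.

    Thresholds are illustrative assumptions and should be tuned to the question asked.
    """
    counts = Counter(normalise(text) for text in answers.values())
    flags = {}
    for pid, text in answers.items():
        norm = normalise(text)
        reasons = []
        if len(norm.split()) < min_words:
            reasons.append("too short")
        if counts[norm] > max_repeats:
            reasons.append("repeated verbatim")
        if reasons:
            flags[pid] = reasons
    return flags


# Made-up answers for illustration only.
answers = {
    1: "I dislike needles so a patch sounds easier",
    2: "good",
    3: "Very good product",
    4: "very good  product",
    5: "VERY GOOD PRODUCT",
}
print(flag_free_text(answers))
```

Flags of this kind are best treated as prompts for manual review, since genuine participants also give brief answers.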

Allowing ‘free-format’ fields

Allowing ‘free-format’ fields may assist researchers in identifying potential bots that have entered invalid options. This could include having participants write in their postal code instead of restricting this field to a specific format (e.g., Australian post codes consist of four digits). This may increase time for analysis but could be managed using natural language processing.

Other strategies previously discussed may be more taxing for participants and could reduce participant engagement. Advances in artificial intelligence (AI) and machine learning could make bots more sophisticated and possibly able to bypass reCAPTCHA authentication methods [23]. Researchers can leverage machine learning algorithms to analyse user behavioural patterns (e.g., response times, answer consistency, and click patterns) to identify anomalies [25]. Metadata analysis, including IP address tracking, device fingerprinting, and geolocation checks, can further help distinguish human participants from bots [26]. Natural language processing techniques can be employed to evaluate open-ended survey responses for coherence, context relevance, and linguistic complexity [27]. Future authentication may need to adapt and use AI to fight AI, as AI-based detection systems have demonstrated effectiveness in dynamically adapting to evolving bot behaviour [28].

Acknowledgments

The authors acknowledge Dr Cameron Fong of the Research Data Strategy Team, part of the Research Portfolio of the University of Sydney, for technical support.

- Research ethics: This study was approved by Western Sydney Local Health District Ethics Committee (ref: 2023/ETH00705) and adhered to the Declaration of Helsinki (as revised in 2013).

- Informed consent: Informed consent was obtained from all individuals included in this study.

- Author contributions: All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

- Use of Large Language Models, AI and Machine Learning Tools: None declared.

- Conflict of interest: All other authors state no conflict of interest.

- Research funding: None declared.

- Data availability: The datasets analysed during the current study are available from the corresponding author on reasonable request.

References

1. Dillman, DA, Smyth, JD, Christian, LM. Internet, phone, mail, and mixed-mode surveys: the tailored design method, 4th ed. Hoboken, New Jersey: Wiley; 2014. https://doi.org/10.1002/9781394260645.

2. Van Der Walt, E, Eloff, J. Using machine learning to detect fake identities: bots vs humans. IEEE Access 2018;6:6540–9. https://doi.org/10.1109/ACCESS.2018.2796018.

3. Davis, FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q 1989;13:319. https://doi.org/10.2307/249008.

4. Holden, RJ, Karsh, B-T. The technology acceptance model: its past and its future in health care. J Biomed Inf 2010;43:159–72. https://doi.org/10.1016/j.jbi.2009.07.002.

5. Storozuk, A, Ashley, M, Delage, V, Maloney, EA. Got bots? Practical recommendations to protect online survey data from bot attacks. Quantitative Methods Psychol 2020;16:472–81. https://doi.org/10.20982/tqmp.16.5.p472.

6. Pozzar, R, Hammer, MJ, Underhill-Blazey, M, Wright, AA, Tulsky, JA, Hong, F, et al. Threats of bots and other bad actors to data quality following research participant recruitment through social media: cross-sectional questionnaire. J Med Internet Res 2020;22:e23021. https://doi.org/10.2196/23021.

7. Griffin, M, Martino, RJ, LoSchiavo, C, Comer-Carruthers, C, Krause, KD, Stults, CB, et al. Ensuring survey research data integrity in the era of internet bots. Qual Quantity 2022;56:2841–52. https://doi.org/10.1007/s11135-021-01252-1.

8. Kumarasamy, V, Goodfellow, N, Ferron, EM, Wright, AL. Evaluating the problem of fraudulent participants in health care research: multimethod pilot study. JMIR Form Res 2024;8:e51530. https://doi.org/10.2196/51530.

9. Wang, J, Calderon, G, Hager, ER, Edwards, LV, Berry, AA, Liu, Y, et al. Identifying and preventing fraudulent responses in online public health surveys: lessons learned during the COVID-19 pandemic. PLOS Glob Public Health 2023;3:e0001452. https://doi.org/10.1371/journal.pgph.0001452.

10. Loebenberg, G, Oldham, M, Brown, J, Dinu, L, Michie, S, Field, M, et al. Bot or not? Detecting and managing participant deception when conducting digital research remotely: case study of a randomized controlled trial. J Med Internet Res 2023;25:e46523. https://doi.org/10.2196/46523.

11. Berger, MN, Davies, C, Mathieu, E, Shaban, RZ, Bag, S, Skinner, SR. Developing and validating a scale to measure the perceptions of safety, usability and acceptability of microarray patches for vaccination: a study protocol. Ther Adv Vaccines Immunother 2024;12. https://doi.org/10.1177/25151355241263560.

12. Hacker, E, Baker, B, Lake, T, Ross, C, Cox, M, Davies, C, et al. Vaccine microarray patch self-administration: an innovative approach to improve pandemic and routine vaccination rates. Vaccine 2023;41:5925–30. https://doi.org/10.1016/j.vaccine.2023.08.027.

13. Berger, MN, Mowbray, ES, Farag, MWA, Mathieu, E, Davies, C, Thomas, C, et al. Immunogenicity, safety, usability and acceptability of microarray patches for vaccination: a systematic review and meta-analysis. BMJ Glob Health 2023;8:e012247. https://doi.org/10.1136/bmjgh-2023-012247.

14. Davies, C, Taba, M, Deng, L, Karatas, C, Bag, S, Ross, C, et al. Usability, acceptability, and feasibility of a high-density microarray patch (HD-MAP) applicator as a delivery method for vaccination in clinical settings. Hum Vaccines Immunother 2022;18:2018863. https://doi.org/10.1080/21645515.2021.2018863.

15. Australian Bureau of Statistics. Estimates of Aboriginal and Torres Strait Islander Australians; 2021. https://www.abs.gov.au/statistics/people/aboriginal-and-torres-strait-islander-peoples/estimates-aboriginal-and-torres-strait-islander-australians/30-june-2021.

16. Harris, PA, Taylor, R, Minor, BL, Elliott, V, Fernandez, M, O’Neal, L, et al. The REDCap consortium: building an international community of software platform partners. J Biomed Inf 2019;95:103208. https://doi.org/10.1016/j.jbi.2019.103208.

17. Von Ahn, L, Maurer, B, McMillen, C, Abraham, D, Blum, M. reCAPTCHA: human-based character recognition via web security measures. Science 2008;321:1465–8. https://doi.org/10.1126/science.1160379.

18. Von Ahn, L, Blum, M, Hopper, NJ, Langford, J. CAPTCHA: using hard AI problems for security. In: Biham, E, editor. Advances in Cryptology – EUROCRYPT 2003. Lecture Notes in Computer Science, vol 2656. Berlin, Heidelberg: Springer; 2003:294–311 pp. https://doi.org/10.1007/3-540-39200-9_18.

19. Gafni, R, Nagar, I. CAPTCHA: impact on user experience of users with learning disabilities. Interdiscip J E-Skills Lifelong Learn 2016;12:207–23. https://doi.org/10.28945/3612.

20. Electronic Frontier Foundation. Behind the one-way mirror: a deep dive into the technology of corporate surveillance; 2019. https://www.eff.org/wp/behind-the-one-way-mirror.

21. Nanglae, N, Bhattarakosol, P. ProCAPTCHA: a profile-based CAPTCHA for personal password authentication. PLoS One 2024;19:e0311197. https://doi.org/10.1371/journal.pone.0311197.

22. Google. Introducing reCAPTCHA v3: the new way to stop bots; 2018. https://www.google.com/recaptcha/intro/v3.html.

23. Godinho, A, Schell, C, Cunningham, JA. Out damn bot, out: recruiting real people into substance use studies on the internet. Subst Abuse 2020;41:3–5. https://doi.org/10.1080/08897077.2019.1691131.

24. Dutta, S, O’Rourke, EM. Open-ended questions: the role of natural language processing and text analytics. In: Macey, WH, Fink, AA, editors. Employee Surveys and Sensing, 1st ed. New York: Oxford University Press; 2020:202–18 pp. https://doi.org/10.1093/oso/9780190939717.003.0013.

25. Hayawi, K, Saha, S, Masud, MM, Mathew, SS, Kaosar, M. Social media bot detection with deep learning methods: a systematic review. Neural Comput Appl 2023;35:8903–18. https://doi.org/10.1007/s00521-023-08352-z.

26. Heidari, M, Jones, JHJr, Uzuner, O. Online user profiling to detect social bots on twitter. arXiv 2022;1–9. https://doi.org/10.48550/ARXIV.2203.05966.

27. Heidari, M, Jones, JHJr. BERT model for social media bot detection. George Mason University; 2022. https://mars.gmu.edu/server/api/core/bitstreams/410ef9fb-7608-4332-9e04-c75dfa20d77c/content.

28. Zhou, M, Zhang, D, Wang, Y, Geng, Y-ao, Dong, Y, Tang, J. LGB: language model and graph neural network-driven social bot detection. arXiv 2024;1–14. https://doi.org/10.48550/ARXIV.2406.08762.

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.
