Abstract

The simultaneous advances in artificial neural networks and photonic integration technologies have spurred extensive research in optical computing and optical neural networks (ONNs). The potential to simultaneously exploit multiple physical dimensions of time, wavelength and space gives ONNs the ability to achieve computing operations with high parallelism and large data throughput. Different photonic multiplexing techniques based on these multiple degrees of freedom have enabled ONNs with large-scale interconnectivity and linear computing functions. Here, we review the recent advances of ONNs based on different approaches to photonic multiplexing, and present our outlook on key technologies needed to further advance these photonic multiplexing/hybrid-multiplexing techniques of ONNs.

1 Introduction

Artificial neural networks (ANNs) are mathematical models that emulate the biological brain, with their computing speed and capabilities determined by the underlying computing hardware. Mainstream electronics based on the von Neumann architecture has been widely employed, leading to significant breakthroughs in machine learning with unprecedented performance in computer vision, adaptive control, decision optimization, object identification, and more [1], [2], [3], [4], [5], [6].

However, with the ever-growing demand for processing capacity, it is clear that electronic computing alone will not be able to meet future practical requirements [7], [8], [9], [10]. Its main limitation arises from the separation of the processing unit and memory: reading and writing data consumes significant energy and computing power, which limits efficiency when processing ultralarge matrices. Although advanced hardware architectures, such as graphics and tensor processing units, have enabled dramatic improvements in performance, several inherent bottlenecks of electrical digital processors still exist. For example, owing to the electronic bandwidth bottleneck, the clock frequency of traditional electrical digital processors is limited to a few GHz [11]. Further, electronic processors with higher computational power generally need a larger circuit scale and higher integration density, which inevitably leads to high energy consumption and heat dissipation [12]. These limitations will lead to the failure of Moore’s law, thus making the realization of significantly more advanced neural networks challenging or even impossible. New techniques that have the potential to overcome these limitations and achieve unprecedented computing performance are therefore needed [13, 14].

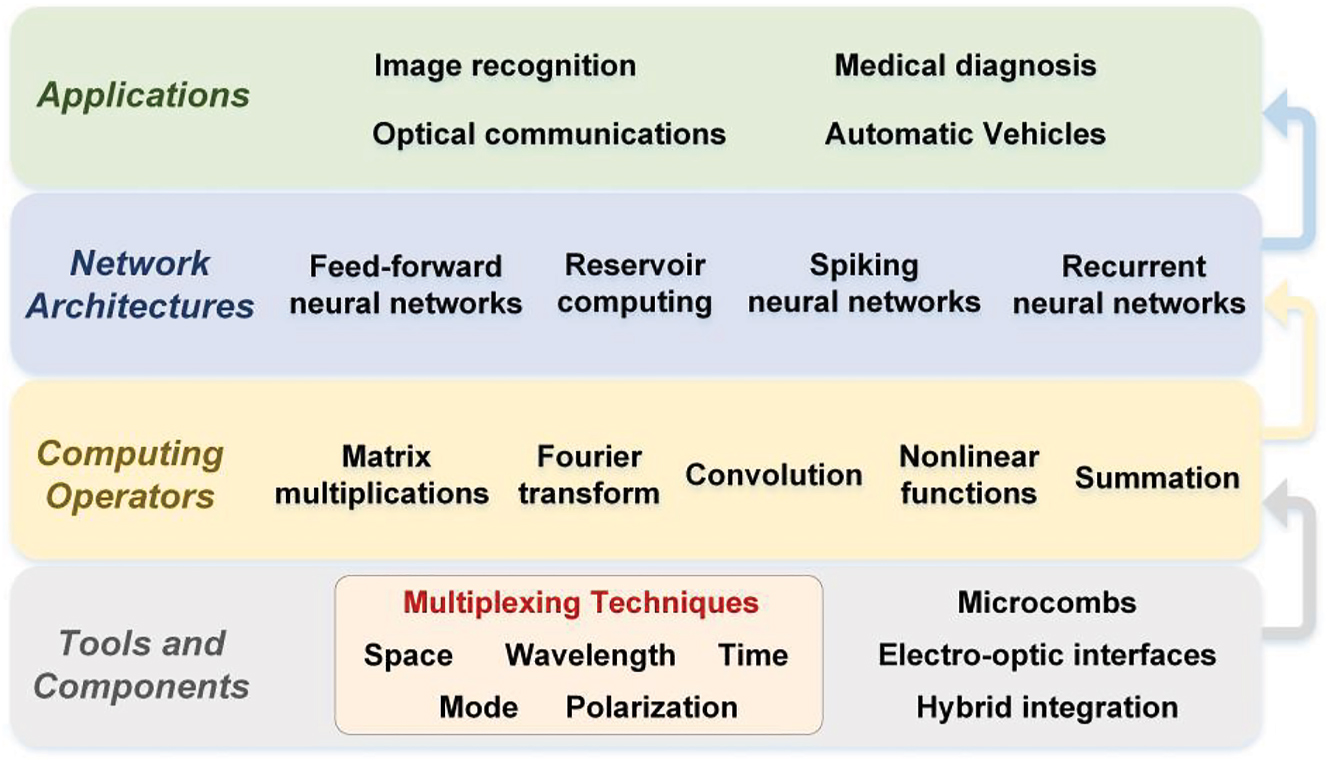

The unique advantages of light, such as its ultrawide bandwidth of up to tens of THz, its low propagation loss and its inherently analog nature, make optical neuromorphic computing hardware a promising way to address the challenges faced by its electronic counterparts [14], [15], [16], [17], [18], [19], [20], [21], [22], [23], [24], [25]. Ultimately, hybrid opto-electronic computing hardware that leverages the broad bandwidths of optics without sacrificing the flexibility of digital electronics may provide the ideal solution. Light contains multiple degrees of freedom including wavelength, amplitude, phase, mode, and polarization states, thus supporting the simultaneous processing of data in multiple dimensions via multiplexing techniques [26], [27], [28], [29], [30], [31], [32], [33], [34], [35]. Photonic techniques also have significant potential for implementing large-scale fan-in/out and weighted interconnects between neurons based on multiplexing techniques (as shown in Figure 1). These interconnects underlie the optical computing operators and support the construction of optical neural networks (ONNs), thus simplifying the hardware architecture and addressing the demands for increased computing power in specific applications [36], [37], [38], [39], [40], [41], [42], [43].

Figure 1: The different facets of optical neural networks.

ONNs use light as the information carrier, and the desired computing functions are performed as the light propagates through specially designed dielectric structures or free space, so the processing and storage functions are no longer separated. This passive process effectively improves the energy efficiency [44], [45], [46] and reduces the latency of ONNs, especially for approaches based on integrated platforms [47], [48], [49]. More importantly, ONNs have critical advantages for certain demanding applications, such as autonomous vehicles, robotics, computer vision and other emerging fields, that require extremely rapid processing of optical and image signals. ONNs can omit the conversion of optical and image signals into digital signals before processing [18], thus saving considerable time and energy.

Recently, the advances in ONNs have been reviewed from a number of different perspectives [14], [15], [16], [17], [18], [19], [20], [21], [22], [23], [24], [25], covering optical field interference for visual computing applications [18], integrated neuromorphic systems and the underlying hardware for implementing weighted interconnects and neurons [19], training methods [21], energy consumption [22], and the prospects and applications of ONNs [23]. Here, we review the most recent advances of ONNs from the perspective of the fundamental photonic multiplexing techniques that offer physical parallelism for the implementation of ONNs, in contrast to previous reviews that have focused on key components and techniques [24]. These photonic multiplexing techniques include space-division multiplexing (SDM), wavelength-division multiplexing (WDM), time-division multiplexing (TDM), mode-division multiplexing (MDM), and polarization-division multiplexing (PDM). Further, we discuss the key technologies needed for the further enhancement of the computing parallelism of ONNs, which typically aim to achieve more efficient use of photonic multiplexing techniques. The paper is structured as follows. In Section 2, we survey in detail how different photonic multiplexing techniques are leveraged for the parallel signal input of vector matrix X and the optical weighted interconnection of the weight matrix W in ONNs. The typical structures of optical computing operations based on different multiplexing methods are outlined, which include matrix multiplication, Fourier transform, and convolution. In Section 3, spiking neurons and spiking neural networks based on optical multiplexing techniques are reviewed. In Section 4, we discuss how to further exploit photonic multiplexing/hybrid-multiplexing techniques in ONNs. 
The key technologies that further enhance the computing power through the use of photonic multiplexing/hybrid-multiplexing techniques are highlighted, including integrated optical frequency combs, integrated high-speed electronic-optical interfaces, and hybrid integration technologies.

2 Multiplexing techniques for optical neural networks

Neural networks typically consist of multiple layers, each of which is formed by multiple parallel neurons densely interconnected by weighted synapses. As such, ONNs can be classified on the basis of a single neuron. As shown in Figure 2, a single neuron has multiple input nodes, and the signals from different input nodes X are weighted via synapses W and summed as Y = XW. This multiply-and-accumulate (MAC) operation accounts for the majority of computations in neural networks [50, 51]. The neural network’s capacity to address complicated tasks is dictated by the scale of the network (i.e., the number of neurons, synapses and layers), and thus the key to achieving maximum acceleration (using analog hardware) lies in achieving sufficiently high parallelism and throughput when mapping X (input nodes/data) and W (weighted synapses) onto the practical parameters of the physical system. Analog photonics offers multiple physical degrees of freedom for multiplexing, and is thus capable of implementing large-scale fan-in/-out and synapses, with high throughput enabled by the broad optical bandwidths.
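As a minimal numerical sketch of the single-neuron model, the weighted sum Y = XW can be written as an explicit multiply-and-accumulate loop. The function names `neuron_mac` and `layer_mac` and all example values are illustrative assumptions and are not taken from any of the reviewed systems.

```python
def neuron_mac(x, w):
    """Weighted sum of inputs x through synaptic weights w (one neuron)."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    acc = 0.0
    for x_i, w_i in zip(x, w):
        acc += x_i * w_i  # one multiply-and-accumulate (MAC) per synapse
    return acc


def layer_mac(x, W):
    """Apply a layer: W[i][j] connects input node i to neuron j, so Y = XW."""
    n_out = len(W[0])
    return [neuron_mac(x, [row[j] for row in W]) for j in range(n_out)]


x = [1.0, 2.0, 3.0]            # input nodes X (hypothetical values)
W = [[0.5, -1.0],
     [0.25, 0.0],
     [1.0, 2.0]]               # weighted synapses W: 3 inputs x 2 neurons
y = layer_mac(x, W)            # one output value per neuron
```

Each inner-loop iteration is one MAC, so a layer with N inputs and M neurons needs N × M of them. This is the count that the multiplexing techniques discussed in this section aim to execute in parallel.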

Figure 2: The model of a single neuron.

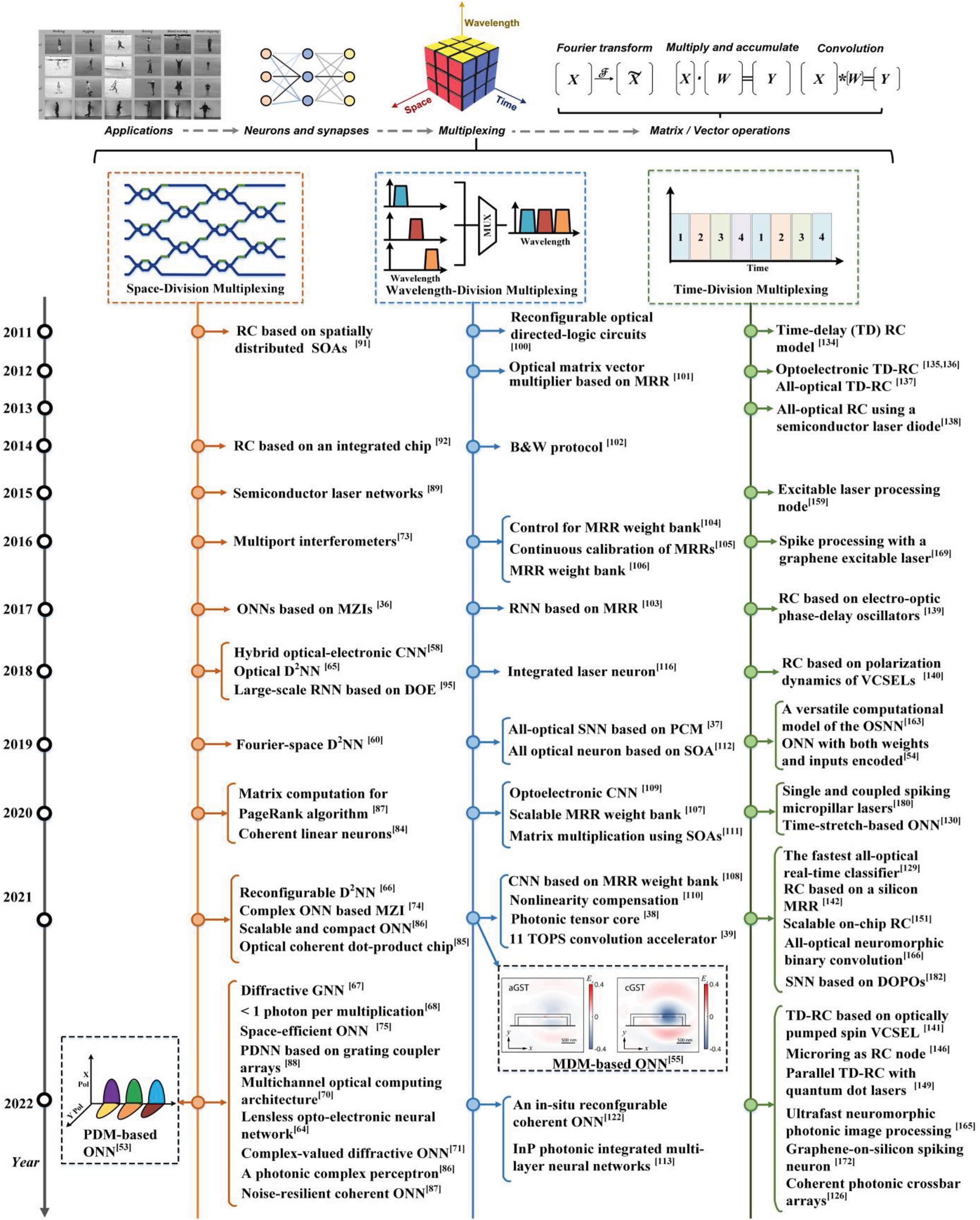

Here, we classify ONNs depending on the multiplexing technique employed in terms of the input nodes X and the optical weighted interconnections W in the single neuron. We note that a single neuron can simultaneously use multiple multiplexing approaches (ideally all simultaneously to achieve the highest computing performance), as has been demonstrated for networks based on SDM [36], WDM [37], TDM [39], MDM [52], and PDM [53] for X; and SDM [36], WDM [39], TDM [54], MDM [55], and PDM [53] for W. Figure 3 summarizes the development history and typical structures of previously reported ONNs based on multiplexing techniques, which are introduced in detail in this section.

Figure 3: Approaches to optical neural networks using different multiplexing techniques.

2.1 ONNs based on space-division multiplexing

Space-division multiplexing is a fundamental approach to boosting computing parallelism and enhancing the overall computing speed, and has been widely used in traditional digital computing systems. ONNs based on SDM feature architectures where the input nodes X and/or weighted synapses W are mapped onto the spatial dimension [56], [57], [58], [59], [60], [61], [62], [63], [64], [65], [66], [67], [68], [69], [70], [71], [72], [73], [74], [75], [76], [77], [78], [79], [80], [81], [82], [83], [84], [85], [86], [87], [88], [89], [90], [91], [92], [93], [94], [95], [96], [97], [98], [99]. The weighting process is achieved by manipulating the optical fields carrying data X, and the sum operation is achieved via constructive/destructive interference.

Fourier optics [56], [57], [58], [59], [60], which uses a free-space lens to perform a Fourier transform, is a classic example of computing based on SDM and was first proposed by Bieren in 1971 [56]. Thereafter, Goodman established the model of parallel and high-speed optical discrete Fourier transforms (Figure 4A) in 1978 [57], which has been widely used to perform matrix multiplication operations [61], [62], [63]. Subsequently, the convolution operation, a more sophisticated computing operator that carries the heaviest computational burden of convolutional neural networks, was realized optically based on the 4F system (Figure 4B) by Wetzstein et al. [58]. In that system, the encoded input signals X go through a Fourier lens to perform a Fourier transform, and are then convolved with the convolution kernel W encoded onto an optimized phase mask. Later, ONNs were demonstrated with Fourier optics [59, 60], such as using Fourier lenses for the optical linear operations [59] and laser-cooled atoms with electromagnetically induced transparency for nonlinear functions (Figure 4C). Recently, Shi et al. demonstrated a novel lens-less opto-electronic neural network architecture to perform machine vision of the environment under natural light conditions [64]. This addresses the challenge of processing incoherent light signals by liberating lens-based optical architectures from their dependence on coherent light sources. This scheme utilized a passive optical mask designed by a task-oriented neural network to replace the lens and perform an optical convolution, thus simplifying the neural network and reducing the required complexity.
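The 4F approach rests on the convolution theorem: a convolution in the signal domain becomes a point-wise multiplication in the Fourier domain. The following one-dimensional, discrete sketch illustrates that identity only. The naive `dft` routine stands in for a Fourier lens, the list `mask` for the Fourier-plane mask, and all names and values are hypothetical; it does not model the optics of any cited system.

```python
import cmath


def dft(signal, inverse=False):
    """Naive discrete Fourier transform (O(N^2)), standing in for a Fourier lens."""
    n = len(signal)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = 0j
        for m, value in enumerate(signal):
            total += value * cmath.exp(sign * 2j * cmath.pi * k * m / n)
        out.append(total / n if inverse else total)
    return out


def convolve_via_fourier(x, w):
    """Circular convolution: transform, multiply point-wise, transform back."""
    spectrum = dft(x)
    mask = dft(w)                       # plays the role of the Fourier-plane mask
    product = [a * b for a, b in zip(spectrum, mask)]
    return [v.real for v in dft(product, inverse=True)]


def convolve_direct(x, w):
    """Reference circular convolution computed directly in the signal domain."""
    n = len(x)
    return [sum(x[m] * w[(k - m) % n] for m in range(n)) for k in range(n)]


x = [1.0, 2.0, 0.0, -1.0]   # encoded input (hypothetical)
w = [0.5, 0.25, 0.0, 0.0]   # kernel, zero-padded to the input length
y_fourier = convolve_via_fourier(x, w)
y_direct = convolve_direct(x, w)
```

The two results agree to floating-point precision. In an optical setup the transforms are performed by propagation through the lenses rather than by computation, which is the source of the parallelism.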

![Figure 4](/document/doi/10.1515/nanoph-2022-0485/asset/graphic/j_nanoph-2022-0485_fig_004.jpg)

Figure 4: Advances in SDM-based ONNs. (A) The first optical MVM system model [57]. (B) Performing optical convolution based on the 4F system [58]. (C) A fully functioning all-optical neural network based on Fourier optics [59]. (D) An optical diffractive deep neural network [65]. (E) A Fourier-space D2NN [60]. (F) A reconfigurable diffractive neural network [66]. (G) A diffractive graph neural network [67]. (H) Performing optical dot products with extremely low optical energies [68]. (I) A programmable D2NN based on a digital-coding metasurface array [69]. (J) An all-optical neural network architecture based on MZI meshes [36]. (K) A complex neural network based on MZI meshes and the coherent detection [74]. (L) An integrated diffractive neural network [75]. (M) An integrated photonic deep neural network based on spatially distributed array of input grating couplers [88]. (N) A spatially distributed 16-node on-chip RC [92]. (O) A large scale RNN consisting of 2025 nonlinear network nodes [95].

Since classical free-space optics setups are relatively bulky, novel approaches such as gradient index technology, metasurfaces, and diffraction structures have been exploited using SDM to achieve optical computing operators and ONNs in a more compact form. In 2018, Lin et al. proposed an optical diffractive deep neural network (D2NN) (Figure 4D) [65], in which the information was encoded onto both the amplitude and phase of optical waves. As each pixel of the diffractive lens serves as a neuron in free space, a fully connected network involving a large number of input nodes and neurons was realized. Following this, Dai et al. proposed a Fourier-space D2NN (Figure 4E) based on diffractive modulation layers [60]. After deep learning design of the modulation layers, the combination of diffractive optics and Fourier optics can achieve all-optical segmentation of the salient objects in the target scene, with higher classification accuracy and a much more compact structure than a real-space D2NN. Dai et al. [66] then further optimized the diffractive neural network and proposed a reconfigurable optoelectronic neural network (Figure 4F). The key fundamental building block was the reconfigurable diffractive processing unit consisting of large-scale diffractive neurons and weighted optical interconnections. The input nodes were achieved with SDM-based spatial light modulators, with the weights tuned by changing the diffractive modulation of the wavefront. Benefiting from the high parallelism of SDM-based lenses, the proposed large-scale diffractive neural network can support millions of neurons. Soon after, Yan applied the integrated diffractive photonic computing units to the diffractive graph neural network (Figure 4G), which can perform optical message passing over graph-structured data [67] and can recognize skeleton-based human actions. 
This work has inspired researchers to combine deep learning with the design of application-specific integrated photonic circuits. In another example, diffractive optics were leveraged to achieve optical dot products (Figure 4H) with extremely low optical energy consumption, thus experimentally proving the advantages of photonic techniques in low-power-consumption computing [68]. Further to this, a programmable D2NN based on a digital-coding metasurface array [69] was proposed (Figure 4I). This D2NN consisted of multiple programmable physical layers, capable of dealing with image recognition, feature detection and multi-channel encoding and decoding in wireless communications by processing electromagnetic waves in free space. Recently, a novel multichannel optical computing architecture named Monet was established based on a new projection-interference-prediction framework [70]. This approach is capable of accomplishing advanced machine vision tasks such as 3D detection and moving object detection. In this multichannel ONN, multiple inputs were projected onto a shared spatial domain and then encoded into optical fields, where optical interference and diffraction were exploited to establish inter- and intra-channel connections, respectively. In addition, a novel diffractive all-optical neural network for physics-aware complex-valued adversarial machine learning was recently reported [71], based on cascaded multiple layers of (cost-effective) transmissive twisted nematic liquid crystal spatial light modulators that enabled the coupled amplitude and phase modulation of the transmitted light. This work provides the ability to manufacture and rapidly deploy large-scale photonic neuromorphic processors, with applications to adversarial and defense algorithms in the new field of complex-valued neural networks.

The interference of light represents another form of SDM based on the superposition of waves, and this can also be used to achieve optical computing [72], [73], [74], [75], [76], [77], [78], [79], [80], [81], [82], [83], [84], [85], [86], [87]. The fundamental principle is to divide coherent input light into different paths in free planar space, after which optical matrix multiplication can be achieved by appropriately designing the propagation paths of the multiply-coherent light. Typical structures to achieve interference-based computing mainly consist of Mach–Zehnder interferometers (MZIs), which are formed by beam splitters/couplers and phase shifters. In 1994, Reck et al. introduced a theoretical model for MZI-based meshes [72]. Later, Clements et al. proposed a novel universal matrix transformer in 2016 [73], with the footprint and loss further optimized. In 2017, Shen et al. proposed an all-optical neural network architecture (Figure 4J) based on a silicon photonic integrated circuit, in which 56 programmable MZIs were used for optical matrix multiplications [36]. In 2021, Zhang et al. proposed a complex neural network (Figure 4K) [74] based on coherent detection, in which information was encoded on both the magnitude and phase of light. In contrast to real-valued ONNs, this work can offer an additional degree of parallelism and achieve better performance in terms of computational speed and energy efficiency. An integrated-chip diffractive neural network (Figure 4L) was proposed in [75], where diffractive cells were introduced to implement discrete Fourier transforms. This chip is capable of performing Fourier transform and convolution operations, bringing prominent advantages in space-efficient and low-power-consuming implementations of large-scale photonics computational circuits for neural networks.
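To make the MZI building block concrete, the sketch below composes the transfer matrix of one idealized, lossless MZI from two 50:50 couplers and two phase shifters, and checks that it is unitary. The coupler convention, the placement of the phase shifters, and the names `mzi` and `is_unitary` are assumptions for illustration. Published mesh designs differ in these details.

```python
import cmath
import math


def matmul(a, b):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def mzi(theta, phi):
    """Transfer matrix of one MZI: phase(phi) -> coupler -> phase(theta) -> coupler."""
    s = 1 / math.sqrt(2)
    coupler = [[s, 1j * s], [1j * s, s]]            # ideal 50:50 coupler
    inner = [[cmath.exp(1j * theta), 0], [0, 1]]    # internal phase shifter
    outer = [[cmath.exp(1j * phi), 0], [0, 1]]      # external phase shifter
    return matmul(coupler, matmul(inner, matmul(coupler, outer)))


def is_unitary(t, tol=1e-12):
    """Check that the conjugate transpose of T times T is the identity."""
    t_dagger = [[t[j][i].conjugate() for j in range(2)] for i in range(2)]
    p = matmul(t_dagger, t)
    return all(abs(p[i][j] - (1 if i == j else 0)) < tol
               for i in range(2) for j in range(2))


t = mzi(theta=math.pi / 3, phi=0.7)
# Power reaching each output when light enters the top port only.
top_power = abs(t[0][0]) ** 2       # sin^2(theta / 2) in this convention
bottom_power = abs(t[1][0]) ** 2    # cos^2(theta / 2) in this convention
```

In this convention the internal phase `theta` sets the power splitting ratio and the external phase `phi` sets a relative phase. A mesh of such 2 × 2 blocks is what the Reck and Clements arrangements use to compose larger matrix transformations.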

Another approach to ONNs based on optical interference is to utilize spatially distributed Mach–Zehnder modulators (MZM) to realize the physical implementation of the vector matrix X and the weight matrix W [80, 81]. Specifically, a silicon-based optical coherent dot-product chip was achieved based on a light source and spatially deployed modulator arrays in [85], where the vector matrix X and weight matrix W were mapped onto the optical fields via two push-pull configured modulators in each branch. This optically coherent dot-product chip performed computing operations in the complete real-valued domain by introducing reference light, and is capable of accomplishing sophisticated deep learning regression tasks. Very recently, a noise-resilient deep learning coherent photonic neural network was demonstrated [87], with multiple spatially deployed integrated dual-IQ electro–optic MZMs and thermo-optic MZMs that performed the vector data input and synaptic weights. Compared to coherent layouts using cascaded MZIs, the dual-IQ-modulator-based coherent photonic neural network significantly improves the noise tolerance, enabling it to realize noise-resilient deep learning at a record-high 10 GMAC/sec/axon computation rate. These dual-IQ modulator schemes can be extended to N × N neural layers via a coherent X bar architecture [80, 81], and in doing so support tiled matrix multiplication at high speeds [82]. Coherent photonic neurons and blocks can also be realized with single-wavelength coherent optical linear neurons [84], as well as silicon-integrated coherent neurons [83], or a complex-valued photonic perceptron [86].

The reported ONNs based on SDM also include those that adopt an array of grating couplers [88], spatially distributed phase-change material (PCM) meshes [38], vertical-cavity surface-emitting laser (VCSEL) arrays [89], and so on. In [88], an integrated photonic deep neural network (Figure 4M) with optoelectronic nonlinear activation functions was demonstrated, capable of directly processing optical waves impinging on an array of grating couplers and performing image classification. A 5 × 6 array of input grating couplers distributed in free space served as input nodes to capture the image of the target object, and the weight vectors were controlled by tuning the input voltages of the PIN attenuator array. After the weighted sum of the neuron inputs was obtained, the optoelectronic nonlinear response of a PN junction micro-ring modulator was used as a rectified linear unit (ReLU), which yielded the neuron’s output. This work is a significant step towards the implementation of fully integrated end-to-end ONNs, and experimentally proves the advantages of ONNs for directly processing optical and image signals. In [38], the spatially distributed PCM meshes served as weighted interconnections to implement the weighting.

Finally, SDM has also been exploited for reservoir computing (RC) [89], [90], [91], [92], [93], [94], [95], [96], [97], [98], [99] with the nodes implemented with tailored optical connection topologies [90], [91], [92], [93], [94]. In 2011, Vandoorne et al. demonstrated an integrated optical RC based on spatially distributed semiconductor optical amplifiers (SOAs) [91], whose steady state characteristics implement hyperbolic tangent nonlinear functions. Later, the authors further demonstrated that RC can be achieved on an integrated silicon photonic chip (Figure 4N) [92], which consists of passive elements such as optical waveguides, optical splitters and combiners. In 2015, Brunner and Fischer presented a spatially extended optical RC based on diffractive optical coupling. The diffractive-optical element (DOE), incorporated with an imaging lens, created coupling with the emitters of a laser array [89]. Limited only by the imaging aberration, potentially much larger network scales are possible with this diffractive coupling scheme. Later, the authors further proposed a large-scale RNN (Figure 4O) consisting of 2025 nonlinear network nodes via the DOE [95], which can individually or simultaneously realize spatial- and wavelength-division multiplexing of the output.

2.2 ONNs based on wavelength-division multiplexing

WDM is the prime embodiment of light’s remarkable advantages over electronics. The wide optical bands support massive numbers of wavelength channels for the implementation of parallel input nodes and weighted synapses, and potentially much higher clock rates of up to tens of GHz. Specifically, the optical computing operations based on WDM can be realized by combining multi-wavelength sources with weight banks or wavelength-sensitive elements [100], [101], [102], [103], [104], [105], [106], [107], [108], [109], [110], [111], [112], [113], [114], [115], [116], [117], [118], [119], [120], [121], [122], such as micro-ring resonators (MRRs) [100], [101], [102], [103], [104], [105], [106], [107], [108], [109], [110], SOAs [111], [112], [113], and PCMs [37, 38, 114].

In 2011, Xu et al. proposed a WDM circuit to perform incoherent summation by collecting light outputs of different wavelengths into a waveguide via a tunable MRR [100]. Soon after, Yang et al. designed an optical matrix vector multiplier (Figure 5A) that was composed of an array of cascaded lasers and modulators, wavelength multiplexers/demultiplexers, an MRR matrix and photodetectors, capable of performing $8 \times 10^{7}$ MACs per second [101]. The input data vector was mapped onto the optical power of different wavelengths, and the element $a_{ij}$ of the weight matrix was converted to the transmittance of the MRR at row i, column j. Such structures were further investigated in [122] (Figure 5B), in which the weight vector was applied by the modulator, capable of supporting four different operations on the same photonic hardware: multi-layer, convolutional, fully-connected, and power-saving layers.
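The mapping described for the MRR-based multiplier can be illustrated numerically: each input is an optical power on its own wavelength, each matrix element is a transmittance, and each photodetector sums the transmitted powers incoherently. This is a minimal sketch that assumes ideal, crosstalk-free rings and unit detector responsivity. The function name `wdm_matrix_vector` and the values are hypothetical.

```python
def wdm_matrix_vector(powers, transmittance):
    """Incoherent WDM multiply: photodetector i sums a_ij * x_j over wavelengths j."""
    for row in transmittance:
        if len(row) != len(powers):
            raise ValueError("each row needs one transmittance per wavelength")
        if any(not 0.0 <= a <= 1.0 for a in row):
            raise ValueError("passive transmittance must lie in [0, 1]")
    outputs = []
    for row in transmittance:
        photocurrent = 0.0
        for x_j, a_ij in zip(powers, row):
            photocurrent += a_ij * x_j   # power passed by the MRR at (i, j)
        outputs.append(photocurrent)
    return outputs


x = [1.0, 0.5, 0.25]                 # optical power on three wavelengths (a.u.)
A = [[1.0, 0.0, 0.0],
     [0.5, 0.5, 0.0],
     [0.0, 1.0, 1.0]]                # hypothetical MRR transmittances a_ij
y = wdm_matrix_vector(x, A)          # one photocurrent per matrix row
```

Because optical power and passive transmittance are non-negative, this simple model represents only non-negative inputs and weights.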

![Figure 5](/document/doi/10.1515/nanoph-2022-0485/asset/graphic/j_nanoph-2022-0485_fig_005.jpg)

Figure 5: Advances in WDM-based ONNs. (A) An optical matrix vector multiplier based on WDM and MRMs [101]. (B) A programmable ONN based on WDM and coherent light [122]. (C) A continuous time RNN based on WDM and MRR weight bank [103]. (D) A WDM-based ONN for compensating the fiber nonlinearity [110]. (E) A photonic feed-forward neural network based on WDM and SOAs [111]. (F) An ONN based on WDM and PCM [38]. (G) An optical convolution accelerator based on a time-wavelength interleaving multiplexing technique [39].

In parallel, Tait et al. proposed the broadcast-and-weight protocol in 2014 [102] and further demonstrated it with an MRR weight bank in 2017 (Figure 5C) [103]. This protocol broadcasts input data onto all wavelength channels via electro–optic modulation, simultaneously weighting the replicas by controlling the power of the wavelength channels. Recently, Huang et al. implemented a WDM-based ONN with a broadcast-and-weight architecture (Figure 5D) on a silicon photonic platform [110], in which the input data of different neurons were loaded onto multiple optical wavelengths and then multiplexed onto the same optical waveguide; the interconnections between the neurons were realized by using a power splitter; and the weights were applied by controlling the partial transmission of the signal via an array of MRR banks. The WDM-assisted ONN can be used to compensate for fiber nonlinearity, leading to an improved Q factor in optical communication systems.

In 2020, Indium Phosphide (InP) platforms were employed to realize a photonic feed-forward neural network (Figure 5E) [111], where SOAs were employed to simultaneously compensate for losses and achieve synaptic weights. As another alternative to achieve weighted interconnects, PCM cells can make the synaptic waveguides highly transmissive or mostly absorbing by switching phase states. In 2019, Feldmann et al. first demonstrated a spiking ONN based on PCM cells. In 2021, the authors further proposed an integrated photonic tensor core (Figure 5F) to accelerate convolution operations. The employed on-chip PCM matrix [38] can implement highly parallel matrix multiplication operations, potentially at trillions of MAC operations per second.

With the recent advances in chip-scale frequency combs, wideband and low-noise integrated optical sources are readily available, greatly expanding the potential of WDM-based ONNs. Recently, an optical convolution accelerator (Figure 5G) achieving a vector computing speed of 11.3 TOPS was demonstrated based on a time-wavelength interleaving technique [39], capable of extracting the features of large-scale data with scalable convolutional kernels. Input data were mapped onto the amplitudes of temporal waveforms via digital-to-analog converters (i.e., TDM), and the convolutional kernels’ weight matrices were mapped onto the power of microcomb lines via an optical spectral shaper (i.e., WDM). After electro–optic modulation, the input data were broadcast onto multiple wavelength channels featuring progressive time delays due to second-order dispersion of the subsequent fiber spool. By setting the progressive time delay step equal to the symbol duration of the input waveform, convolution operations between the input data and convolutional kernels can be obtained after photodetection. The convolution accelerator can be further leveraged for convolutional neural networks, which feature greatly reduced parametric complexity in contrast to fully connected ONNs.
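The time-wavelength interleaving principle can be sketched in a few lines: every wavelength channel carries a weighted replica of the input waveform, channel k arrives k symbol durations late, and the photodetector sums whatever lands in each time slot. The discrete model below is an idealized illustration that assumes exact one-symbol delay steps and non-negative weights. The function name and the values are hypothetical.

```python
def interleaved_convolution(symbols, kernel_weights):
    """Sum of weighted, progressively delayed replicas, as seen by the photodetector."""
    n_sym, n_ch = len(symbols), len(kernel_weights)
    detected = [0.0] * (n_sym + n_ch - 1)
    for k, weight in enumerate(kernel_weights):      # one wavelength channel each
        for n, symbol in enumerate(symbols):
            # Channel k arrives k symbol durations late (dispersion-induced delay).
            detected[n + k] += weight * symbol
    return detected


x = [1.0, 2.0, 3.0, 4.0]      # input waveform samples (hypothetical)
w = [0.5, 0.25, 1.0]          # kernel mapped onto comb-line powers (hypothetical)
y = interleaved_convolution(x, w)
# Only time slots where all channels overlap hold complete kernel products.
valid = y[len(w) - 1:len(x)]
```

Each detected slot `y[n]` equals the sum over k of `w[k] * x[n - k]`, which is the discrete convolution of the input with the kernel. The slots at the start and end of the waveform contain only partial sums and are discarded in `valid`.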

2.3 ONNs based on time-division multiplexing

A key advantage of ONNs is the ultrawide bandwidth offered by optics. This yields massive numbers of wavelength channels to greatly enhance the parallelism, as introduced above for WDM-based ONNs. It also enables high data throughputs of up to tens of gigabaud (corresponding to the clock rate of electronic hardware), which requires high-speed electro–optic interfaces (i.e., modulators and photodetectors) and a tailored network protocol/architecture for the input nodes employing TDM techniques [54, 123], [124], [125], [126]. Here, we review the fundamental building blocks, architectures, and recent advances of TDM-based ONNs.

In [39], a high throughput exceeding 11 TOPS was demonstrated by interleaving the time and wavelength divisions. In that work, TDM was employed to sequentially map the input nodes/data into the time domain. Assisted by high-speed electro–optic modulators and photodetectors (>25 GHz analog bandwidth), the data rate reached ∼62.9 gigabaud (Figure 6A). In [54], a large-scale ONN based on TDM was constructed (Figure 6B), capable of operating at high speed and very low energy per MAC. Compared to other TDM-based ONNs, this large-scale ONN encoded both the vector matrix X and the weight matrix W onto optical signals by using standard free-space optical components, allowing the weights to be reprogrammed and trained at high speed. This work shows that TDM techniques can be used not only to implement large-scale fan-in/-out architectures for input signals, but also to realize synaptic weights, for fast, reconfigurable, and trainable ONNs. Subsequently, this TDM-based optical neuron architecture was extended to an integrated photonic platform in an N × N configuration by utilizing a photonic crossbar array and homodyne detection [126], thus achieving large-scale matrix–matrix multiplication.
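One way to see how a time-multiplexed receiver can accumulate a dot product when both inputs and weights are carried optically is an idealized balanced homodyne model. The sketch below assumes real-valued field amplitudes, a perfect 50:50 beam splitter, and a detector that integrates over all time slots. This detection model is an illustrative assumption rather than a description of the cited experiments, and the function name is hypothetical.

```python
def homodyne_dot_product(x_pulses, w_pulses):
    """Accumulate the balanced-detector difference signal over all time slots."""
    if len(x_pulses) != len(w_pulses):
        raise ValueError("input and weight pulse trains must be the same length")
    charge = 0.0
    for x_t, w_t in zip(x_pulses, w_pulses):
        plus = (x_t + w_t) ** 2 / 2     # intensity at one beam-splitter output
        minus = (x_t - w_t) ** 2 / 2    # intensity at the other output
        charge += plus - minus          # difference equals 2 * x_t * w_t
    return charge / 2                   # rescale so the result is sum(x_t * w_t)


x = [1.0, -2.0, 0.5, 3.0]     # input amplitudes, one per time slot (hypothetical)
w = [0.5, 1.0, -4.0, 0.25]    # weight amplitudes in the same slots (hypothetical)
y = homodyne_dot_product(x, w)
```

Because the sign of a field amplitude survives the subtraction, this model handles signed inputs and weights, and the multiplication and the accumulation both happen at the detector.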

![Figure 6](/document/doi/10.1515/nanoph-2022-0485/asset/graphic/j_nanoph-2022-0485_fig_006.jpg)

Figure 6: Advances in TDM-based ONNs. (A) and (B) ONNs exploiting TDM to implement large-scale fan-in/-out [39, 54]. (C) Time-delay reservoir computing (TD-RC) based on SOA [137]. (D) TD-RC based on electro–optic phase-delay oscillator [139]. (E) TD-RC based on an optically pumped spin VCSEL [141]. (F) TD-RC based on a silicon microring [142]. (G) Scalable RC on coherent linear photonic processor [151].

The accumulation operations of TDM-based ONNs are generally achieved based on interference between signals and replicas having different delays [127], [128], [129], [130], [131], [132], [133], [134], [135], [136], [137], [138], [139]. This can be implemented using either integrated or fiber delay lines or via dispersive media. For instance, the time-stretch method based on dispersive fiber can be leveraged to implement feedforward neural networks [130], where the multiplications of input data vectors and weight matrices can be accomplished using light by stretching ultrashort pulses in the time domain. In addition, fiber delay lines are widely used in RC architectures.

The concept of RC, defined by Verstraeten et al. [131], was derived from the echo state network (ESN) proposed by Jaeger in 2001 [132] and the liquid state machine (LSM) reported by Maass in 2002 [133]. RC is a simple and efficient machine learning algorithm suitable for processing sequential signals. It consists of an input layer, a reservoir and an output layer. The input signal is first preprocessed, and then nonlinearly mapped into a high-dimensional state space by the reservoir. Afterwards, the output layer generates the processed results according to the node states of the reservoir and the connection weights of the output layer. Specifically, the connection weights of the input layer and the reservoir are randomly generated and remain unchanged during the training process, while only the connection weights of the output layer are trained. In 2011, Appeltant et al. proposed an RC network that exploited a single nonlinear node with a time-delay feedback loop to yield a large number of virtual nodes, which simplifies the hardware implementation of the reservoir [134]. In 2012, Larger et al. [135] and Paquot et al. [136] first experimentally demonstrated RC using an electro–optical feedback loop with nested electrical gain, and Duport et al. implemented an all-optical RC (Figure 6C) based on a time-delay feedback loop with the nonlinear function provided by an SOA [137]. In 2013, Brunner et al. realized an all-optical RC using a semiconductor laser diode as the nonlinear node in the time-delay feedback loop [138]. In 2017, Larger et al. employed an electro–optic phase-delay oscillator and other traditional photonic devices to construct a photonic RC (Figure 6D) that was capable of classifying a million words per second [139]. In 2021, a novel photonic recurrent neural network with the ability to achieve time-series classification directly in the optical domain was demonstrated [129], achieving the highest computing speed reported for all-optical real-time classifiers, at 10 Gb/s.
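To make the roles of the fixed reservoir and the trained output layer concrete, the following is a simplified discrete-time sketch of a time-delay reservoir with virtual nodes. It is an illustration under stated assumptions rather than a model of any cited experiment: the `tanh` nonlinearity, the binary random mask, the `feedback` and `coupling` parameters, the ridge-regression readout and the sine-wave prediction task are all choices made here for the example.

```python
import numpy as np

def run_delay_reservoir(u, n_virtual=50, feedback=0.5, coupling=0.2,
                        input_scale=1.0, seed=0):
    """Drive one nonlinear node with a delay loop; return the virtual-node states."""
    rng = np.random.default_rng(seed)
    mask = rng.choice([-1.0, 1.0], size=n_virtual)  # fixed random input mask
    states = np.zeros((len(u), n_virtual))
    prev = np.zeros(n_virtual)  # node states one delay period earlier
    for n, u_n in enumerate(u):
        neighbour = prev[-1]  # the node just before node 0 along the delay line
        for i in range(n_virtual):
            drive = (feedback * prev[i] + coupling * neighbour
                     + input_scale * mask[i] * u_n)
            states[n, i] = np.tanh(drive)  # assumed nonlinearity
            neighbour = states[n, i]
        prev = states[n]
    return states

def train_readout(states, target, ridge=1e-4):
    """Train only the output weights, by ridge regression with a bias term."""
    X = np.hstack([states, np.ones((len(states), 1))])
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ target)

# Illustrative task: predict the next sample of a sine wave.
t = np.arange(600)
u = np.sin(0.2 * t)
states = run_delay_reservoir(u)
washout = 50  # discard the initial transient
X_train, y_train = states[washout:-1], u[washout + 1:]
w_out = train_readout(X_train, y_train)
pred = np.hstack([X_train, np.ones((len(X_train), 1))]) @ w_out
nmse = np.mean((pred - y_train) ** 2) / np.var(y_train)
print(f"training NMSE: {nmse:.2e}")
```

Note that the mask and the loop parameters are never updated; only `w_out` is fitted, which mirrors the training rule stated above.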

Recent advances in optical RC hardware based on time-delay feedback loops include those using the dual-polarization dynamics of a VCSEL [140], an optically pumped spin VCSEL (Figure 6E) [141], a silicon MRR (Figure 6F) [142], and others [143], [144], [145], [146], [147], [148], [149], [150]. In 2021, Nakajima et al. reported an on-chip RC architecture (Figure 6G) based on hybrid photonic architectures/devices [151]. The input data was spatially divided into multiple branches/temporal nodes, which were then progressively delayed via an array of delay lines and weighted by optical cross-connecting units, thus implementing the input mask of RC for subsequent time-series forecasting and image classification.

TDM techniques play a significant role in a range of optical neural network structures, where they map the input signal and/or synaptic weights onto serial temporal waveforms in the optical domain, thus greatly enhancing the data input/output bandwidth. Assisted by advanced electronic digital processing techniques, TDM-based ONNs can implement large-scale fan-in/-out with extremely high data throughput. Furthermore, they enable the weights to be reprogrammed and trained “on the fly”, resulting in a greatly accelerated training process for the neural network. In reservoir computing, the TDM technique maps the input signals onto time-varying sequences, which simplifies the hardware implementation of the reservoir by creating a large number of virtual nodes within the time-delay loop. In spiking neural networks, a single neuron operates on time-discrete spiking signals instead of continuous signals. This spiking nature enables the system to operate at ultralow power while processing temporally varying information.

2.4 ONNs based on mode- and polarization-division multiplexing

In addition to the approaches to ONNs introduced above, polarization- and mode-division multiplexing can also be employed to enhance the transmission capacity of optical communications [152], [153], [154] and the computing parallelism of ONNs. We note that MDM and PDM are generally compatible with other multiplexing techniques, and thus can potentially lead to dramatic increases in the ONNs’ computing power. Here, we review recent advances in ONNs using these two multiplexing techniques.

In order to implement on-chip ONNs based on MDM, mode multiplexers/demultiplexers with low modal crosstalk and losses are critical. As an ideal material with optical programmability, the PCM can be employed to build programmable waveguide mode converters (in addition to programming the synaptic weights). Specifically, the TE0 and TE1 modes of photonic waveguides can be converted into each other via the large refractive index changes of the PCM Ge2Sb2Te5 (GST) during its phase transition. In 2021, Wu et al. proposed a multimode photonic convolutional neural network (Figure 7A) based on an array of programmable mode converters made from PCM [55]. In detail, the patches of pixels of the image were encoded onto the power of multiple wavelength channels with variable optical attenuators (i.e., WDM). The weights were programmed as the mode contrast coefficient of each PCM mode converter, by controlling the tunable material phases of the GST, and the transmitted results of the different modes were obtained with photodetectors.

PDM can straightforwardly double the capacity/parallelism for information transmission/processing and has been widely used in optical communications, imaging, and sensing. Recently, Li et al. proposed a diffractive ONN based on PDM (Figure 7B), which is potentially capable of performing multiple, arbitrarily selected linear transformations [53]. In this work, the computing parallelism was improved with PDM between the input and output fields-of-view of the diffractive network, where the polarization states of the light do not affect the phase and amplitude transmission coefficients of each trainable diffractive feature. 2- and 4-channel optical diffractive computing based on PDM were designed for arbitrarily selected linear transformations.

Similar to WDM techniques, both MDM and PDM result from light’s remarkable properties compared to electronics. Both can be used to implement the input data X and the optical weighted interconnections W. Although MDM and PDM require specific components or structures to realize the required filters, mode converters and polarization devices, thus introducing additional complexity and possibly a larger footprint, they can nonetheless serve as complementary techniques to other photonic multiplexing methods, and can thus further enhance the parallelism and computing power of the system.

3 Multiplexing techniques for spiking neurons

Representing the third generation of ANNs [155], spiking neural networks (SNNs) operate on time-discrete spiking signals instead of continuous signals. Inspired by biological neurons in the human brain, artificial spiking neurons have binary states (active and inactive), and are active and output spikes only at firing events. The spiking characteristics of SNNs bring about enhanced noise robustness and the capability to process temporally varying information, making SNNs well suited to event-based applications. Here, we survey existing structures of optical spiking neurons, one of the most fundamental components of optical SNNs, and discuss the roles of photonic multiplexing techniques in achieving them.

TDM techniques are employed in most optical spiking neural networks, where the spikes/pulses are sequentially distributed in the time domain. The model of spiking neurons was first proposed by Maass in [155]. Afterwards, a spiking neuron was experimentally demonstrated based on a nonlinear fiber and an SOA (Figure 8A) by Rosenbluth et al. in 2009 [156]. The SOA and the Ge-doped nonlinear fiber can achieve leaky temporal integration of a signal with thresholding functions. Later, in 2011, Coomans et al. designed an optical spiking neuron based on a semiconductor ring laser and demonstrated the mechanism of utilizing a single triggering spike to excite consecutive spikes [157].
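The combination of leaky temporal integration and thresholding mentioned above can be sketched with a generic discrete-time leaky integrate-and-fire model. This is a textbook abstraction, not a model of the SOA, fiber or laser dynamics in the cited works; the `leak` and `threshold` values and the reset-to-zero rule are assumptions made for illustration.

```python
import numpy as np

def lif_neuron(inputs, leak=0.9, threshold=1.0):
    """Leaky integrate-and-fire: integrate with decay, spike and reset at threshold."""
    v = 0.0  # internal state (e.g. a carrier density or membrane potential analog)
    spikes = np.zeros(len(inputs), dtype=int)
    for n, x in enumerate(inputs):
        v = leak * v + x        # leaky temporal integration of the input
        if v >= threshold:      # thresholding
            spikes[n] = 1
            v = 0.0             # reset after firing (assumed rule)
    return spikes

weak = lif_neuron(np.full(20, 0.05))   # state saturates below threshold
strong = lif_neuron(np.full(20, 0.4))  # state crosses threshold periodically

print(weak.sum())               # 0
print(np.flatnonzero(strong))   # [ 2  5  8 11 14 17]
```

A weak constant input never fires because the leak balances the drive below threshold, while a stronger input produces a regular spike train.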

![Figure 8](/document/doi/10.1515/nanoph-2022-0485/asset/graphic/j_nanoph-2022-0485_fig_008.jpg)

Figure 8: Advances in optical SNNs. (A) A spiking neuron based on SOA [156]. (B) Graphene laser-based all-optical fiber neurons [169]. (C) A spiking neuron network based on WDM [102]. (D) A spiking neuron based on VCSEL and TDM [165]. (E) A spiking neuron network based on WDM and PCM [37]. (F) Schematic diagram of a DOPO neural network based on time-domain multiplexing in a 1 km fiber-ring cavity [182].

Since then, various lasers have been exploited as excitable devices for spiking neurons, such as distributed feedback (DFB) lasers [158], [159], [160], [161], VCSELs [162], [163], [164], [165], [166], [167], and excitable fiber lasers [168], [169], [170], [171]. For instance, Nahmias et al. proposed a spiking neuron based on photodetectors and DFB lasers (with saturable absorbers inside), in which the input spiking signals were weighted by a tunable filter and summed by the photodetector, whose output then drove the excitable laser to emit spikes [158]. Later, they also embedded saturable absorbers in VCSELs to form spiking neurons, utilizing the gain variation of VCSELs with input pulses at different wavelengths [118]. After that, Xiang et al. proposed a VCSEL-based SNN [163], and further presented a photonic approach for binary convolution [166]. They also emulated the sound azimuth detection function of the human brain based on VCSELs, in which the time interval between two spikes indicates the sound azimuth [167].

Other nonlinear optical cavities can also implement spiking dynamics [172], [173], [174], [175], [176], [177], [178], [179], [180], [181], [182], [183]. In 2016, Shastri et al. experimentally verified that graphene laser-based all-optical fiber neurons (Figure 8B) can implement spiking dynamics including consecutive spike generation, suppression of sub-threshold responses, refractory periods, and bursting behaviors with strong inputs [169]. Jha et al. proposed a spiking neuron using a graphene-on-silicon MRR, which enables spikes to be delivered at high speed and improves the overall power efficiency [172]. In addition, owing to the highly contrasting optical and electrical properties of their amorphous and crystalline states, PCMs can also implement spiking neurons. In 2018, Chakraborty et al. demonstrated an optical spiking neuron based on the phase change dynamics of GST embedded on top of an MRR [114]. Soon after, in 2019, Feldmann et al. constructed an optical spiking neuron composed of PCM and MRRs [37], where the PCM unit on the ring resonator served as the excitable device. In [165], a VCSEL-based spiking neuron with integrate-and-fire capability was demonstrated (Figure 8D), achieving power summation of multiple fast input pulses. In [182], a spiking neuron network based on a degenerate optical parametric oscillator was constructed (Figure 8F). It consists of a fiber-ring cavity and an opto-electronic feedback system, and could accommodate more than 5000 time-domain multiplexed pulses in the 5-μs round-trip time.
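As a quick consistency check on the figures quoted for the fiber-ring cavity, the short sketch below relates the cavity length, the round-trip time and the time slot available per multiplexed pulse. The group index of 1.468 is an assumed typical value for silica fiber and is not taken from the cited work; the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def round_trip_time(length_m, group_index=1.468):
    """Round-trip time of a fiber ring; the group index is an assumed typical value."""
    return length_m * group_index / C

def slot_duration(round_trip_s, n_pulses):
    """Time slot available to each time-multiplexed pulse."""
    return round_trip_s / n_pulses

rt = round_trip_time(1000.0)       # 1 km ring, roughly 4.9 microseconds
slot = slot_duration(5e-6, 5000)   # 5 us shared by 5000 pulses, i.e. 1 ns each

print(f"round trip: {rt * 1e6:.2f} us, slot: {slot * 1e9:.2f} ns")
```

Under these assumptions, a 1 km ring gives a round trip close to the quoted 5 μs, and 5000 pulses per round trip correspond to a pulse spacing of about 1 ns.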

The WDM technique has also played a significant role in achieving photonic SNNs. Optical pulses encoded onto different wavelengths are inherently transmitted without crosstalk, and the weighted optical signals from different nodes can be detected/summed via photodetectors. In 2014, Tait et al. proposed an optical SNN (Figure 8C) based on the WDM technique [102], supporting large-scale parallel interconnections among high-performance optical spiking neurons (as introduced in Section 2.2). The following year, Nahmias et al. presented a method to cascade DFB spiking neurons into a large-scale network [159], where the capacity of each waveguide was boosted by WDM. Afterwards, Feldmann et al. demonstrated a WDM-based optical SNN (Figure 8E), with input spikes at different wavelengths multiplexed and integrated via a post-synaptic spiking neuron [37].
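The weighting-and-summation step described above can be sketched as follows. This is an idealized illustration, not the scheme of any specific cited work: each wavelength channel is assumed to carry one input as optical power, a passive filter applies a transmission between 0 and 1, and a photodetector sums the powers of all channels. The second function assumes, for illustration only, that signed weights are obtained by subtracting the photocurrents of two complementary filter outputs; all names and values are hypothetical.

```python
import numpy as np

def wdm_weighted_sum(channel_powers, transmissions):
    """Photodetector output: sum over wavelengths of filter transmission times power."""
    p = np.asarray(channel_powers, dtype=float)
    t = np.asarray(transmissions, dtype=float)
    if np.any((t < 0) | (t > 1)):
        raise ValueError("passive filter transmission must lie in [0, 1]")
    return float(np.sum(t * p))

def balanced_weighted_sum(channel_powers, weights):
    """Signed weights in [-1, 1] from two complementary outputs (assumed scheme)."""
    w = np.asarray(weights, dtype=float)
    through = (1.0 + w) / 2.0  # fraction of power sent to the first detector
    drop = (1.0 - w) / 2.0     # remaining fraction sent to the second detector
    return (wdm_weighted_sum(channel_powers, through)
            - wdm_weighted_sum(channel_powers, drop))

powers = [1.0, 0.5, 0.25]   # one input per wavelength channel (arbitrary units)
weights = [1.0, -0.5, 0.0]  # illustrative signed weights
print(balanced_weighted_sum(powers, weights))  # 0.75
```

Because the channels sit at different wavelengths, their powers add at the detector without interference, which is what makes the summation a simple weighted sum.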

Compared to continuous-valued ONNs, spiking ONNs are closer to the intuitive model of biological brains. While current research mostly focuses on building high-performance spiking neurons, the network structure, data fan-in/-out, and hardware integration remain unsolved problems.

4 Outlook

Because optical systems are analog, the key to neuromorphic photonic computing hardware is to achieve sufficiently large parallelism to map the input nodes and synapses onto physical parameters, and to boost the overall computing speed in cooperation with high-speed electro–optic interfaces. Although significant advances have been made in neuromorphic optics, the unique advantages of optics, such as ultralarge bandwidths and multiple dimensions for multiplexing, have yet to be fully realized. Extensive progress remains to be made in combining existing techniques to develop devices tailored for ONNs, especially in terms of key components and integration platforms, optical computing operators/algorithms, and electro–optic hybrid logics/architectures. Such progress is needed to boost the computing performance of optics, making it comparable with, and ultimately enabling it to partially replace, its electronic counterparts.

The key components and integration platforms for ONNs are mainly those that are critical to implementing multiplexing techniques. SDM is implemented with a massive number of optical devices (both passive and active) densely integrated onto a single chip. This requires waveguides and crossings with low propagation loss, such as the ∼1 dB/m achieved by SiN platforms using the Damascene reflow process [184]. Also important are heterogeneous integration techniques that enable compact footprints for computing cores involving active components (i.e., gain media or light sources), photodetectors, modulators, and passive computing cores (such as MZI arrays) [185], [186], [187]. Finally, multilayer photonic circuits that use the vertical dimension of integration, rather than purely planar circuits, can also be exploited [188, 189].

WDM is implemented mainly through the use of optical frequency combs that provide a large number of evenly spaced wavelength channels, and spectral shapers to manage the wavelength channels. Microcombs generated via parametric oscillation in high-Q micro-resonators are promising optical frequency comb sources, as they offer a large number of wavelengths in integrated platforms. Significant advances have been made in microcombs, leading to wideband, compact, highly energy-efficient (even battery-driven), turnkey, and mass-producible comb sources for WDM-based ONNs [190], [191], [192], [193], [194], [195], [196], [197], [198], [199], [200].

TDM is implemented based on high-speed electro–optic interfaces including modulators and photodetectors, which communicate with external electronics (such as analog-to-digital converters, digital-to-analog converters, and memories). A diverse range of integrated platforms, including lithium niobate (LiNbO3) [201], hybrid silicon and LiNbO3 [202], thin-film LiNbO3-on-insulator (LNOI) [203], InP [204], and hybrid silicon polymer [205], have already demonstrated these high-speed interface devices.

PDM and MDM are additional dimensions of optics for multiplexing, and they can greatly scale the parallelism of ONNs as they can operate together with other multiplexing techniques. The key to constructing PDM- or MDM-based ONNs lies in achieving on-chip polarization/mode-sensitive devices that offer suitable fan-in/-out, such as the dual-polarization LNOI modulator that can utilize two polarization states [206], and PCM cells capable of switching the supported optical modes [55]. In addition, while multiplexing techniques address the mapping of neurons’ input nodes and synapses, the nonlinear functions, albeit demonstrated in [59], remain challenging for integrated platforms. They can potentially be achieved via either highly nonlinear optical materials/structures, or electro–optic devices [88] employed in the interfaces of ONNs.

In Figure 9 we compare the different multiplexing techniques, indicating their unique advantages.

SDM is the most widely used technique in both optics and electronics. Reconfigurable diffractive neural networks based on SDM [66] have achieved 2.20 million fan-in nodes of the input signal X, with a computing speed of 240.1 tera-operations per second, which is the highest parallelism so far in optics. SDM can also be implemented with passive optical components, resulting in low complexity, although it is limited by the integration density achievable with nanofabrication. SDM-based ONNs using Fourier lenses, gratings, cameras/sensors or other optical devices have critical advantages for directly processing optical data/sequences in demanding applications such as autonomous vehicles, robotics, and computer vision, thus saving considerable time and energy [18]. SDM is a key technique for realizing optical neuromorphic hardware, and will likely remain the most popular approach.

TDM is implemented with high-speed electro–optical interfaces and external digital-to-analog converters, and so with a sufficiently large memory, the fan-in is theoretically unlimited. TDM is a serial method, and so although it cannot boost the computing parallelism, it is attractive for handling the input and output of data and weights, since it offers a high throughput of up to tens of Giga Baud. By utilizing a 62.9 Giga Baud digital-to-analog converter to map the input vector X onto serial temporal waveforms in the optical domain, an optical convolutional accelerator based on TDM achieved a computing speed of 11 tera-operations per second [39]. Benefiting from mature electronic technologies, TDM can also achieve a high integration density.

Another major advantage of TDM techniques is that they lend themselves much more readily to scaling the network in depth to multiple hidden layers. Most ONNs achieve only a single neural layer, and implement their nonlinear functions in the electronic domain. Using TDM techniques, multilayer ONNs can be constructed to accomplish more challenging functions by iteratively invoking a single optical computing unit, and so TDM-based ONNs have better scalability. For reservoir computing and spiking neural networks, TDM methods help simplify the hardware and significantly reduce the power consumption.
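The idea of building depth by reusing one computing unit over time can be sketched in a few lines. This is a schematic illustration under stated assumptions: `optical_matvec` is a hypothetical stand-in for a single optical linear unit, modeled here as an ideal matrix-vector product, and the ReLU applied between passes is an assumed choice for the electronic nonlinearity.

```python
import numpy as np

def optical_matvec(weights, x):
    """Stand-in for the single optical computing unit: an ideal linear product."""
    return weights @ x

def run_multilayer(x, layer_weights):
    """Invoke the same unit once per layer, reloading the weights each time."""
    activation = np.asarray(x, dtype=float)
    for weights in layer_weights:
        pre = optical_matvec(weights, activation)  # one pass through the unit
        activation = np.maximum(pre, 0.0)          # nonlinearity in electronics (assumed ReLU)
    return activation

# Illustrative two-layer network with hand-picked weights.
layers = [np.array([[1.0, -1.0], [0.5, 0.5]]),
          np.array([[2.0, -1.0]])]
out = run_multilayer([2.0, 1.0], layers)
print(out)  # [0.5]
```

The network depth is set by the number of sequential passes rather than by the amount of hardware, at the cost of latency that grows with the number of layers.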

WDM-, MDM- and PDM-based methods further exploit the advantages of optics, and are complementary to SDM approaches. WDM is one of the most direct and effective approaches to enhance the parallelism of photonic neuromorphic hardware. With the advances in hybrid integration of soliton microcombs, integrated photonic hardware accelerators that support more than 200 individual wavelengths have been reported [38], capable of operating at speeds of 10¹² MAC operations per second. Hybrid integration enables WDM-based integrated photonic hardware to achieve a high technical complexity. Similar to WDM, both MDM and PDM involve additional components to further enhance the system parallelism and thus computing power.

Figure 9: Schematic of a photonic neuron using hybrid multiplexing techniques. EOM, electro–optical modulation; PU, processing unit; PD, photodetector; ADC, analog-to-digital converter.

Finally, we note that future neuromorphic photonic processors will likely make use of all of the multiplexing techniques supported by optics, simultaneously. Figure 9 shows a schematic of a photonic neuron using hybrid multiplexing techniques. It uses TDM to achieve the data and weight input/output, and ideally uses all multiplexing methods to boost the parallelism. The processing unit (PU) can be implemented in the form of interferometers or dispersive media in order to achieve the greatest range of computing functions. The neurons can then be densely integrated into arrays to form a full neural network for specific machine learning tasks.

While analog optics features potentially much higher computing power and energy efficiency, it is inherently limited in terms of flexibility and versatility in contrast to digital electronics based on von Neumann structures with distributed processors and memories. As such, hybrid opto–electronic neuromorphic hardware is a promising solution that leverages the advantages of both optics and electronics, where optics undertakes the majority of specific computing operations while electronics manages hardware parameters and data storage. Under such architectures, the optical computing cores serve as callable modules embedded in external electronic hardware, with the data rate and analog bandwidths matching each other. It is optimistically expected that, with more categories of optical computing operations, algorithms and architectures being demonstrated, ONNs can serve as a universal building block for diverse machine learning tasks [200, 207], [208], [209], [210], [211], [212]. With the dramatically accelerated computing speed brought about by the hybrid opto–electronic computing architecture, much more complicated neural networks can be enabled, potentially leading to revolutionary advances in applications such as automated vehicles, real-time data processing, and medical diagnosis.

5 Conclusions

Photonic multiplexing techniques have a remarkable capacity for implementing optoelectronic hardware that is isomorphic to neural networks, and that can offer competitive performance in the connectivity and linear operations of neural networks. In this review, we have presented typical architectures and recent advances of ONNs that utilize different photonic multiplexing/hybrid-multiplexing techniques involving SDM, WDM, TDM, MDM, and PDM to achieve interconnection and computing operations. The challenges and future possibilities of ONNs have also been discussed.

Author contributions: All the authors have accepted responsibility for the entire content of this submitted manuscript and approved submission.

Research funding: This work is supported in part by the National Natural Science Foundation of China (NSFC) under Grant 62135009.

Conflict of interest statement: The authors declare no conflicts of interest regarding this article.

References

[1] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” Nature, vol. 521, no. 7553, pp. 436–444, 2015. https://doi.org/10.1038/nature14539.

[2] V. Mnih, K. Kavukcuoglu, D. Silver, et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, pp. 529–533, 2015. https://doi.org/10.1038/nature14236.

[3] D. Silver, A. Huang, C. J. Maddison, et al., “Mastering the game of Go with deep neural networks and tree search,” Nature, vol. 529, no. 7587, pp. 484–489, 2016. https://doi.org/10.1038/nature16961.

[4] P. M. R. DeVries, F. Viegas, M. Wattenberg, and B. J. Meade, “Deep learning of aftershock patterns following large earthquakes,” Nature, vol. 560, no. 7720, pp. 632–634, 2018. https://doi.org/10.1038/s41586-018-0438-y.

[5] S. Webb, “Deep learning for biology,” Nature, vol. 554, no. 7693, pp. 555–557, 2018. https://doi.org/10.1038/d41586-018-02174-z.

[6] M. Reichstein, G. Camps-Valls, B. Stevens, et al., “Deep learning and process understanding for data-driven earth system science,” Nature, vol. 566, no. 7743, pp. 195–204, 2019. https://doi.org/10.1038/s41586-019-0912-1.

[7] X. Xu, Y. Ding, S. Hu, et al., “Scaling for edge inference of deep neural networks,” Nat. Electron., vol. 1, no. 4, pp. 216–222, 2018. https://doi.org/10.1038/s41928-018-0059-3.

[8] C. Toumey, “Less is Moore,” Nat. Nanotechnol., vol. 11, no. 1, pp. 2–3, 2016. https://doi.org/10.1038/nnano.2015.318.

[9] R. G. Dreslinski, M. Wieckowski, D. Blaauw, D. Sylvester, and T. Mudge, “Near-threshold computing: reclaiming Moore’s law through energy efficient integrated circuits,” Proc. IEEE, vol. 98, no. 2, pp. 253–266, 2010. https://doi.org/10.1109/jproc.2009.2034764.

[10] S. Ambrogio, P. Narayanan, H. Tsai, et al., “Equivalent-accuracy accelerated neural-network training using analogue memory,” Nature, vol. 558, no. 7708, pp. 60–67, 2018. https://doi.org/10.1038/s41586-018-0180-5.

[11] D. A. B. Miller, “Attojoule optoelectronics for low-energy information processing and communications,” J. Lightwave Technol., vol. 35, no. 3, pp. 346–396, 2017. https://doi.org/10.1109/jlt.2017.2647779.

[12] K. Kitayama, M. Notomi, M. Naruse, and K. Inoue, “Novel frontier of photonics for data processing—photonic accelerator,” APL Photonics, vol. 4, no. 9, p. 090901, 2019. https://doi.org/10.1063/1.5108912.

[13] M. M. Waldrop, “The chips are down for Moore’s law,” Nature, vol. 530, no. 7589, pp. 144–147, 2016. https://doi.org/10.1038/530144a.

[14] T. F. de Lima, B. J. Shastri, A. N. Tait, M. A. Nahmias, and P. R. Prucnal, “Progress in neuromorphic photonics,” Nanophotonics, vol. 6, no. 3, pp. 577–599, 2017. https://doi.org/10.1515/nanoph-2016-0139.

[15] Q. Zhang, H. Yu, M. Barbiero, B. Wang, and M. Gu, “Artificial neural networks enabled by nanophotonics,” Light Sci. Appl., vol. 8, no. 1, p. 42, 2019. https://doi.org/10.1038/s41377-019-0151-0.

[16] T. F. de Lima, A. N. Tait, A. Mehrabian, et al., “Primer on silicon neuromorphic photonic processors: architecture and compiler,” Nanophotonics, vol. 9, no. 13, pp. 4055–4073, 2020. https://doi.org/10.1515/nanoph-2020-0172.

[17] E. Goi, Q. Zhang, X. Chen, H. Luan, and M. Gu, “Perspective on photonic memristive neuromorphic computing,” PhotoniX, vol. 1, no. 3, pp. 115–133, 2020. https://doi.org/10.1186/s43074-020-0001-6.

[18] G. Wetzstein, A. Ozcan, S. Gigan, et al., “Inference in artificial intelligence with deep optics and photonics,” Nature, vol. 588, no. 7836, pp. 39–47, 2020. https://doi.org/10.1038/s41586-020-2973-6.

[19] B. J. Shastri, A. N. Tait, T. F. de Lima, et al., “Photonics for artificial intelligence and neuromorphic computing,” Nat. Photonics, vol. 15, no. 2, pp. 102–114, 2021. https://doi.org/10.1038/s41566-020-00754-y.

[20] K. Berggren, Q. Xia, K. Likharev, et al., “Roadmap on emerging hardware and technology for machine learning,” Nanotechnology, vol. 32, no. 1, p. 012002, 2021. https://doi.org/10.1088/1361-6528/aba70f.

[21] J. Liu, Q. Wu, X. Sui, et al., “Research progress in optical neural networks: theory, applications and developments,” PhotoniX, vol. 2, no. 1, pp. 1–39, 2021. https://doi.org/10.1186/s43074-021-00026-0.

[22] C. Li, X. Zhang, J. Li, T. Fang, and X. Dong, “The challenges of modern computing and new opportunities for optics,” PhotoniX, vol. 2, no. 1, pp. 1–31, 2021. https://doi.org/10.1186/s43074-021-00042-0.

[23] C. Huang, V. J. Sorger, M. Miscuglio, et al., “Prospects and applications of photonic neural networks,” Adv. Phys. X, vol. 7, no. 1, pp. 1–63, 2022. https://doi.org/10.1080/23746149.2021.1981155.

[24] H. Zhou, J. Dong, J. Cheng, et al., “Photonic matrix multiplication lights up photonic accelerator and beyond,” Light Sci. Appl., vol. 11, no. 1, p. 30, 2022. https://doi.org/10.1038/s41377-022-00717-8.

[25] D. Midtvedt, V. Mylnikov, A. Stilgoe, et al., “Deep learning in light-matter interactions,” Nanophotonics, vol. 11, no. 14, pp. 3189–3214, 2022. https://doi.org/10.1515/nanoph-2022-0197.

[26] G. Pandey, A. Choudhary, and A. Dixit, “Wavelength division multiplexed radio over fiber links for 5G fronthaul networks,” IEEE J. Sel. Area. Commun., vol. 39, no. 9, pp. 2789–2803, 2021. https://doi.org/10.1109/jsac.2021.3064654.

[27] A. Macho, M. Morant, and R. Llorente, “Next-generation optical fronthaul systems using multicore fiber media,” J. Lightwave Technol., vol. 34, no. 20, pp. 4819–4827, 2016. https://doi.org/10.1109/jlt.2016.2573038.

[28] L.-W. Luo, N. Ophir, C. P. Chen, et al., “WDM-compatible mode-division multiplexing on a silicon chip,” Nat. Commun., vol. 5, no. 1, p. 3069, 2014. https://doi.org/10.1038/ncomms4069.

[29] A. Gnauck, R. W. Tkach, A. R. Chraplyvy, and T. Li, “High-capacity optical transmission systems,” J. Lightwave Technol., vol. 26, no. 9, pp. 1032–1045, 2008. https://doi.org/10.1109/jlt.2008.922140.

[30] D. J. Richardson, J. M. Fini, and L. E. Nelson, “Space-division multiplexing in optical fibres,” Nat. Photonics, vol. 7, no. 5, pp. 354–362, 2013. https://doi.org/10.1038/nphoton.2013.94.

[31] P. J. Winzer, “High-spectral-efficiency optical modulation formats,” J. Lightwave Technol., vol. 30, no. 24, pp. 3824–3835, 2012. https://doi.org/10.1109/jlt.2012.2212180.

[32] N. Cvijetic, “OFDM for next-generation optical access networks,” J. Lightwave Technol., vol. 30, no. 4, pp. 384–398, 2012. https://doi.org/10.1109/jlt.2011.2166375.

[33] B. J. Puttnam, G. Rademacher, and R. S. Luis, “Space-division multiplexing for optical fiber communications,” Optica, vol. 8, no. 9, pp. 1186–1203, 2021. https://doi.org/10.1364/optica.427631.

[34] F. Ren, J. Li, T. Hu, et al., “Cascaded mode-division-multiplexing and time-division-multiplexing passive optical network based on low mode-crosstalk FMF and mode MUX/DEMUX,” IEEE Photonics J., vol. 7, no. 5, pp. 1–9, 2015. https://doi.org/10.1109/jphot.2015.2470098.

[35] X. Fang, H. Ren, and M. Gu, “Orbital angular momentum holography for high-security encryption,” Nat. Photonics, vol. 14, pp. 102–108, 2020. https://doi.org/10.1038/s41566-019-0560-x.

[36] Y. Shen, N. C. Harris, S. Skirlo, et al., “Deep learning with coherent nanophotonic circuits,” Nat. Photonics, vol. 11, no. 7, pp. 441–446, 2017. https://doi.org/10.1038/nphoton.2017.93.

[37] J. Feldmann, N. Youngblood, C. D. Wright, H. Bhaskaran, and W. H. P. Pernice, “All-optical spiking neurosynaptic networks with self-learning capabilities,” Nature, vol. 569, no. 7755, pp. 208–214, 2019. https://doi.org/10.1038/s41586-019-1157-8.

[38] J. Feldmann, N. Youngblood, M. Karpov, et al., “Parallel convolutional processing using an integrated photonic tensor core,” Nature, vol. 589, no. 7840, pp. 52–58, 2021. https://doi.org/10.1038/s41586-020-03070-1.

[39] X. Xu, M. Tan, B. Corcoran, et al., “11 TOPS photonic convolutional accelerator for optical neural networks,” Nature, vol. 589, no. 7840, pp. 44–51, 2021. https://doi.org/10.1038/s41586-020-03063-0.

[40] H. Luan, D. Lin, K. Li, W. Meng, M. Gu, and X. Fang, “768-ary Laguerre-Gaussian-mode shift keying free-space optical communication based on convolutional neural networks,” Opt. Express, vol. 29, no. 13, pp. 19807–19818, 2021. https://doi.org/10.1364/oe.420176.

[41] W. Xin, Q. Zhang, and M. Gu, “Inverse design of optical needles with central zero-intensity points by artificial neural networks,” Opt. Express, vol. 28, no. 26, pp. 38718–38732, 2020. https://doi.org/10.1364/oe.410073.

[42] E. Goi, X. Chen, Q. Zhang, et al., “Nanoprinted high-neuron-density optical linear perceptrons performing near-infrared inference on a CMOS chip,” Light Sci. Appl., vol. 10, no. 40, pp. 1–11, 2021. https://doi.org/10.1038/s41377-021-00483-z.

[43] B. P. Cumming and M. Gu, “Direct determination of aberration functions in microscopy by an artificial neural network,” Opt. Express, vol. 28, no. 10, pp. 14511–14521, 2020. https://doi.org/10.1364/oe.390856.

[44] D. Perez, I. Gasulla, P. Das Mahapatra, and J. Capmany, “Principles, fundamentals, and applications of programmable integrated photonics,” Adv. Opt. Photon., vol. 12, no. 3, pp. 709–786, 2020. https://doi.org/10.1364/aop.387155.

[45] W. Bogaerts, D. Perez, J. Capmany, et al., “Programmable photonic circuits,” Nature, vol. 586, no. 7828, pp. 207–216, 2020. https://doi.org/10.1038/s41586-020-2764-0.

[46] D. Perez, I. Gasulla, and J. Capmany, “Programmable multifunctional integrated nanophotonics,” Nanophotonics, vol. 7, no. 8, pp. 1351–1371, 2018. https://doi.org/10.1515/nanoph-2018-0051.

[47] W. Bogaerts and L. Chrostowski, “Silicon photonics circuit design: methods, tools and challenges,” Laser Photon. Rev., vol. 12, no. 4, 2018, Art. no. 1700237. https://doi.org/10.1002/lpor.201700237.

[48] K. Nozaki, S. Matsuo, T. Fujii, et al., “Femtofarad optoelectronic integration demonstrating energy-saving signal conversion and nonlinear functions,” Nat. Photonics, vol. 13, no. 7, pp. 454–459, 2019. https://doi.org/10.1038/s41566-019-0397-3.

[49] M. A. Nahmias, T. F. de Lima, A. N. Tait, H.-T. Peng, B. J. Shastri, and P. R. Prucnal, “Photonic multiply-accumulate operations for neural networks,” IEEE J. Sel. Top. Quantum Electron., vol. 26, no. 1, pp. 1–18, 2020. https://doi.org/10.1109/jstqe.2019.2941485.

[50] T. De Lima, H. Peng, A. Tait, et al., “Machine learning with neuromorphic photonics,” J. Lightwave Technol., vol. 37, no. 5, pp. 1515–1534, 2019. https://doi.org/10.1109/jlt.2019.2903474.

[51] T. De Lima, A. Tait, H. Saeidi, et al., “Noise analysis of photonic modulator neurons,” IEEE J. Sel. Top. Quantum Electron., vol. 26, no. 1, pp. 1–9, 2019. https://doi.org/10.1109/jstqe.2019.2931252.

[52] H. Larocque and D. Englund, “Universal linear optics by programmable multimode interference,” Opt. Express, vol. 29, no. 23, p. 38257, 2021. https://doi.org/10.1364/oe.439341.

[53] J. Li, Y. Hung, O. Kulce, et al., “Polarization multiplexed diffractive computing: all-optical implementation of a group of linear transformations through a polarization-encoded diffractive network,” Light Sci. Appl., vol. 11, no. 153, pp. 1–20, 2022. https://doi.org/10.1038/s41377-022-00849-x.

[54] R. Hamerly, L. Bernstein, A. Sludds, M. Soljačić, and D. Englund, “Large-scale optical neural networks based on photoelectric multiplication,” Phys. Rev. X, vol. 9, no. 2, p. 021032, 2019. https://doi.org/10.1103/physrevx.9.021032.

[55] C. Wu, H. Yu, S. Lee, et al., “Programmable phase-change metasurfaces on waveguides for multimode photonic convolutional neural network,” Nat. Commun., vol. 12, no. 96, pp. 1–8, 2021. https://doi.org/10.1038/s41467-020-20365-z.

[56] K. von Bieren, “Lens design for optical Fourier transform systems,” Appl. Opt., vol. 10, no. 12, pp. 2739–2742, 1971. https://doi.org/10.1364/ao.10.002739.

[57] J. W. Goodman, A. R. Dias, and L. M. Woody, “Fully parallel, high-speed incoherent optical method for performing discrete Fourier transforms,” Opt. Lett., vol. 2, no. 1, pp. 1–3, 1978. https://doi.org/10.1364/ol.2.000001.

[58] J. Chang, V. Sitzmann, X. Dun, W. Heidrich, and G. Wetzstein, “Hybrid optical-electronic convolutional neural networks with optimized diffractive optics for image classification,” Sci. Rep., vol. 8, no. 1, p. 12324, 2018. https://doi.org/10.1038/s41598-018-30619-y.

[59] Y. Zuo, B. Li, Y. Zhao, and Y. Jiang, “All-optical neural network with nonlinear activation functions,” Optica, vol. 6, no. 9, pp. 1132–1137, 2019. https://doi.org/10.1364/optica.6.001132.

[60] T. Yan, J. Wu, T. Zhou, et al., “Fourier-space diffractive deep neural network,” Phys. Rev. Lett., vol. 123, no. 2, p. 023901, 2019. https://doi.org/10.1103/physrevlett.123.023901.

[61] E. P. Mosca, R. D. Griffin, F. P. Pursel, and J. N. Lee, “Acoustooptical matrix-vector product processor: implementation issues,” Appl. Opt., vol. 28, no. 18, pp. 3843–3851, 1989. https://doi.org/10.1364/ao.28.003843.

[62] C.-C. Sun, M.-W. Chang, and K. Y. Hsu, “Matrix-matrix multiplication by using anisotropic self-diffraction in BaTiO3,” Appl. Opt., vol. 33, no. 20, pp. 4501–4507, 1994. https://doi.org/10.1364/ao.33.004501.

[63] H. J. Caulfield and S. Dolev, “Why future supercomputing requires optics,” Nat. Photonics, vol. 4, no. 5, pp. 261–263, 2010. https://doi.org/10.1038/nphoton.2010.94.

[64] W. Shi, Z. Huang, H. Huang, et al., “LOEN: lensless opto-electronic neural network empowered machine vision,” Light Sci. Appl., vol. 11, no. 1, p. 121, 2022. https://doi.org/10.1038/s41377-022-00809-5.

[65] X. Lin, Y. Rivenson, N. T. Yardimci, et al., “All-optical machine learning using diffractive deep neural networks,” Science, vol. 361, no. 6406, pp. 1004–1008, 2018. https://doi.org/10.1126/science.aat8084.

[66] T. Zhou, X. Lin, J. Wu, et al., “Large-scale neuromorphic optoelectronic computing with a reconfigurable diffractive processing unit,” Nat. Photonics, vol. 15, no. 5, pp. 367–373, 2021. https://doi.org/10.1038/s41566-021-00796-w.

[67] T. Yan, R. Yang, Z. Zheng, et al., “All-optical graph representation learning using integrated diffractive photonic computing units,” Sci. Adv., vol. 8, no. 24, p. 7630, 2022. https://doi.org/10.1126/sciadv.abn7630.

[68] T. Wang, S. Ma, L. G. Wright, et al., “An optical neural network using less than 1 photon per multiplication,” Nat. Commun., vol. 13, no. 1, p. 123, 2022. https://doi.org/10.1038/s41467-021-27774-8.

[69] C. Liu, Q. Ma, Z. Luo, et al., “A programmable diffractive deep neural network based on a digital-coding metasurface array,” Nat. Electron., vol. 5, pp. 113–122, 2022. https://doi.org/10.1038/s41928-022-00719-9.

[70] Z. Xu, X. Yuan, T. Zhou, and L. Fang, “A multichannel optical computing architecture for advanced machine vision,” Light Sci. Appl., vol. 11, no. 1, p. 255, 2022. https://doi.org/10.1038/s41377-022-00945-y.

[71] R. Chen, Y. Li, M. Lou, et al., “Physics-aware machine learning and adversarial attack in complex-valued reconfigurable diffractive all-optical neural network,” Laser Photon. Rev., 2022, Early Access, https://doi.org/10.1002/lpor.202200348.

[72] M. Reck, A. Zeilinger, H. J. Bernstein, and P. Bertani, “Experimental realization of any discrete unitary operator,” Phys. Rev. Lett., vol. 73, no. 1, pp. 58–61, 1994. https://doi.org/10.1103/physrevlett.73.58.

[73] W. R. Clements, P. C. Humphreys, B. J. Metcalf, et al., “Optimal design for universal multiport interferometers,” Optica, vol. 3, no. 12, pp. 1460–1465, 2016. https://doi.org/10.1364/optica.3.001460.

[74] H. Zhang, M. Gu, X. D. Jiang, et al., “An optical neural chip for implementing complex-valued neural network,” Nat. Commun., vol. 12, no. 1, pp. 1–11, 2021. https://doi.org/10.1038/s41467-020-20719-7.

[75] H. Zhu, J. Zou, H. Zhang, et al., “Space-efficient optical computing with an integrated chip diffractive neural network,” Nat. Commun., vol. 13, no. 1, p. 1044, 2022. https://doi.org/10.1038/s41467-022-28702-0.

[76] R. Burgwal, W. R. Clements, D. H. Smith, et al., “Using an imperfect photonic network to implement random unitaries,” Opt. Express, vol. 25, no. 23, pp. 28236–28245, 2017. https://doi.org/10.1364/oe.25.028236.

[77] M. Y. S. Fang, S. Manipatruni, C. Wierzynski, et al., “Design of optical neural networks with component imprecisions,” Opt. Express, vol. 27, no. 10, pp. 14009–14029, 2019. https://doi.org/10.1364/oe.27.014009.

[78] Y. Tian, Y. Zhao, S. Liu, et al., “Scalable and compact photonic neural chip with low learning-capability-loss,” Nanophotonics, vol. 11, no. 2, pp. 329–344, 2021. https://doi.org/10.1515/nanoph-2021-0521.

[79] H. Zhou, Y. Zhao, G. Xu, et al., “Chip-scale optical matrix computation for PageRank algorithm,” IEEE J. Sel. Top. Quantum Electron., vol. 26, no. 2, pp. 1–10, 2020. https://doi.org/10.1109/jstqe.2019.2943347.

[80] M. Moralis-Pegios, G. Mourgias-Alexandris, A. Tsakyridis, et al., “Neuromorphic silicon photonics and hardware-aware deep learning for high-speed inference,” J. Lightwave Technol., vol. 40, no. 10, pp. 3243–3254, 2022. https://doi.org/10.1109/jlt.2022.3171831.

[81] G. Dabos, D. V. Bellas, R. Stabile, et al., “Neuromorphic photonic technologies and architectures: scaling opportunities and performance frontiers,” Opt. Mater. Express, vol. 12, no. 6, pp. 2343–2367, 2022. https://doi.org/10.1364/ome.452138.

[82] G. Giamougiannis, A. Tsakyridis, M. Moralis-Pegios, et al., “High-speed analog photonic computing with tiled matrix multiplication and dynamic precision capabilities for DNNs,” in 48th European Conference on Optical Communication (ECOC), Basel, Switzerland, 2022.

[83] G. Giamougiannis, A. Tsakyridis, G. Mourgias-Alexandris, et al., “Silicon-integrated coherent neurons with 32GMAC/sec/axon compute line-rates using EAM-based input and weighting cells,” in Eur. Conf. on Optical Comm. (ECOC) 2021, Bordeaux, France, 2021. https://doi.org/10.1109/ECOC52684.2021.9605987.

[84] G. Mourgias-Alexandris, A. Totović, A. Tsakyridis, et al., “Neuromorphic photonics with coherent linear neurons using dual-IQ modulation cells,” J. Lightwave Technol., vol. 38, no. 4, pp. 811–819, 2020. https://doi.org/10.1109/jlt.2019.2949133.

[85] S. Xu, J. Wang, H. Shu, et al., “Optical coherent dot-product chip for sophisticated deep learning regression,” Light Sci. Appl., vol. 10, no. 12, p. 221, 2021. https://doi.org/10.1038/s41377-021-00666-8.

[86] M. Mancinelli, D. Bazzanella, P. Bettotti, and L. Pavesi, “A photonic complex perceptron for ultrafast data processing,” Sci. Rep., vol. 12, no. 1, p. 4216, 2022. https://doi.org/10.1038/s41598-022-08087-2.

[87] G. Mourgias-Alexandris, M. Moralis-Pegios, A. Tsakyridis, et al., “Noise-resilient and high-speed deep learning with coherent silicon photonics,” Nat. Commun., vol. 13, no. 1, p. 5572, 2022. https://doi.org/10.1038/s41467-022-33259-z.

[88] F. Ashtiani, A. J. Geers, and F. Aflatouni, “An on-chip photonic deep neural network for image classification,” Nature, vol. 606, no. 7914, pp. 501–506, 2022. https://doi.org/10.1038/s41586-022-04714-0.

[89] D. Brunner and I. Fischer, “Reconfigurable semiconductor laser networks based on diffractive coupling,” Opt. Lett., vol. 40, no. 16, pp. 3854–3857, 2015. https://doi.org/10.1364/ol.40.003854.

[90] K. Vandoorne, W. Dierckx, B. Schrauwen, et al., “Toward optical signal processing using photonic reservoir computing,” Opt. Express, vol. 16, no. 15, pp. 11182–11192, 2008. https://doi.org/10.1364/oe.16.011182.

[91] K. Vandoorne, J. Dambre, D. Verstraeten, et al., “Parallel reservoir computing using optical amplifiers,” IEEE Trans. Neural Network., vol. 22, no. 9, pp. 1469–1481, 2011. https://doi.org/10.1109/tnn.2011.2161771.

[92] K. Vandoorne, P. Mechet, T. V. Vaerenbergh, et al., “Experimental demonstration of reservoir computing on a silicon photonics chip,” Nat. Commun., vol. 5, no. 1, p. 3541, 2014. https://doi.org/10.1038/ncomms4541.

[93] A. Katumba, M. Freiberger, P. Bienstman, and J. Dambre, “A multiple-input strategy to efficient integrated photonic reservoir computing,” Cognit. Comput., vol. 9, no. 3, pp. 307–314, 2017. https://doi.org/10.1007/s12559-017-9465-5.

[94] A. Katumba, J. Heyvaert, B. Schneider, et al., “Low-loss photonic reservoir computing with multimode photonic integrated circuits,” Sci. Rep., vol. 8, no. 1, p. 2653, 2018. https://doi.org/10.1038/s41598-018-21011-x.

[95] J. Bueno, S. Maktoobi, L. Froehly, et al., “Reinforcement learning in a large-scale photonic recurrent neural network,” Optica, vol. 5, no. 6, pp. 756–760, 2018. https://doi.org/10.1364/optica.5.000756.

[96] J. Dong, M. Rafayelyan, F. Krzakala, and S. Gigan, “Optical reservoir computing using multiple light scattering for chaotic systems prediction,” IEEE J. Sel. Top. Quantum Electron., vol. 26, no. 1, pp. 1–12, 2020. https://doi.org/10.1109/jstqe.2019.2936281.

[97] T. Heuser, J. Große, S. Holzinger, M. M. Sommer, and S. Reitzenstein, “Development of highly homogenous quantum dot micropillar arrays for optical reservoir computing,” IEEE J. Sel. Top. Quantum Electron., vol. 26, no. 1, pp. 1–9, 2020. https://doi.org/10.1109/jstqe.2019.2925968.