Abstract

Nanophotonic devices manipulate light at sub-wavelength scales, enabling tasks such as light concentration, routing, and filtering. Designing these devices to achieve precise light–matter interactions using structural parameters and materials is a challenging task. Traditionally, solving this problem has relied on computationally expensive, iterative methods. In recent years, deep learning techniques have emerged as promising tools for tackling the inverse design of nanophotonic devices. While several review articles have provided an overview of the progress in this rapidly evolving field, there is a need for a comprehensive tutorial that specifically targets newcomers without prior experience in deep learning. Our goal is to address this gap and provide practical guidance for applying deep learning to individual scientific problems. We introduce the fundamental concepts of deep learning and critically discuss the potential benefits it offers for various inverse design problems in nanophotonics. We present a suggested workflow and detailed, practical design guidelines to help newcomers navigate the challenges they may encounter. By following our guide, newcomers can avoid frustrating roadblocks commonly experienced when venturing into deep learning for the first time. In a second part, we explore different iterative and direct deep learning-based techniques for inverse design, and evaluate their respective advantages and limitations. To enhance understanding and facilitate implementation, we supplement the manuscript with detailed Python notebook examples, illustrating each step of the discussed processes. While our tutorial primarily focuses on researchers in (nano-)photonics, it is also relevant for those working with deep learning in other research domains. We aim at providing a solid starting point to empower researchers to leverage the potential of deep learning in their scientific pursuits.

1 Introductions

The broad field of (nano-)photonics deals with the interaction of light with matter and with applications that arise from structuring materials at sub-wavelength scales in order to guide or concentrate light in a pre-defined manner [1–5]. Astonishing effects can be obtained in this way, such as unidirectional scattering, negative refraction, enhanced nonlinear optical effects, amplified quantum emitter yields, magnetic optical effects at visible frequencies [6–12]. Tailoring of such effects via the rational design of nanodevices is typically termed “inverse design”. Unfortunately, like most inverse problems, nanophotonics inverse design is in general an ill-posed problem and cannot be solved directly [13]. Usually, iterative approaches like global optimization algorithms or high-dimensional gradient based adjoint methods are used, which however are computationally expensive and slow, especially if applied to repetitive design tasks [14, 15].

In the recent past it has been shown that deep learning models can be efficiently trained on predicting (nano-)optical phenomena [16–20]. This rapidly growing research interest stems from remarkable achievements that deep learning accomplished in computer science since around 2010, especially in the fields of computer vision [21–24] and natural language processing [25–27]. The main underlying assumption is that neural networks are universal function approximators [28]. It has been shown that deep learning is capable to solve various inverse design problems in nano-photonics. A non-exhaustive list of examples includes single nano-scatterers [29, 30], gratings [31, 32], Bragg mirrors [33–35], photonic crystals [36], waveguides [37], or sophisticated light routers [38–40]. For an extensive overview of the current state of research we refer the interested reader to recent review articles on the topic [41–48], or to more methodological reviews and comparative benchmarks [15, 49], [50], [51].

This work aims at providing a comprehensive tutorial for deep learning techniques in nano-photonics inverse design. Rather than assembling a complete review of the literature, we try to develop a pedagogical guide through the typical workflow, and focus in particular on the discussion of practical guidelines and good habits, that will hopefully help with the creation of robust models, avoiding frustration through typical pitfalls. We start with a concise introduction to the basic ideas behind deep learning and discuss their practical implications. We then study the question about which types of problem may benefit from solving them with deep learning, and which problems are probably better solved with other approaches. Subsequently we provide considerations on the typical deep learning workflow, including detailed advice for best practices. This ranges from the choice of the model architecture, data generation, parameterization, and normalization, to the setup and running of the training procedure and tuning of the associated hyperparameters.

In the second part, we introduce a selection of methods from the two most popular categories of inverse design using deep learning models. The first group of methods is based on iterative optimization, using deep learning models as ultra-fast and differentiable surrogates for slow numerical simulations. The second approach aims at developing end-to-end network training for solving the inverse problem. The latter, so-called “one-shot” solvers, can be implemented in different ways, we specifically discuss the tandem network, as well as conditional variational autoencoders and conditional generative adversarial networks (cVAE, cGAN). Finally we provide a short overview of further techniques. The paper is accompanied by a set of extensively commented Python notebook tutorials [52], that demonstrate the practical details of the presented techniques on two specific examples from (nano-)photonics: The design of reflective multi-layer systems and tailoring of the scattering response of individual nano-scatterers.

2 Introduction to deep learning and the typical workflow

Before diving into the technical details of different approaches to use deep learning (DL) for inverse design, it is crucial to become familiar with some basic concepts and good practices. In the following section we therefore provide a very concise introduction to artificial neural networks, we discuss how to assess whether it could make sense to apply deep learning to a problem and in which cases one should rather stick to conventional methods.

2.1 Short introduction to artificial neural networks

2.1.1 Artificial neurons and neural networks

The basic building block of an artificial neural network (ANN) is the artificial neuron. An artificial neuron is simply a mathematical function taking several input values. A weight parameter is associated with each of the inputs. As depicted in Figure 1a, first the sum of the weighted input values is calculated, and then the value of an additional bias parameter is added. Subsequently a so-called activation function f is applied to the resulting number. The result of the activation function is the neuron’s output value, also simply called its activation. A neural network is nothing else than several of these artificial neurons connected to each other in some way, for instance by feeding the output of one neuron into other neurons’ input layers (c.f. Figure 1b). Please note that some network architectures also implement other types of connections, for example of neurons within a layer or to preceding layers, etc.

![Figure 1:

Basic concepts of artificial neural networks and their training. (a) A single neuron where x is the input vector, w and b are, respectively, the weights and biases and f is the activation function. The inset shows the output of the sigmoid activation function. (b) A set of neurons arranged in several, consecutively connected layers, forming a neural network. (c) Learning rate size effect on the loss function optimization. (d) A typical training loss or validation error plot. When the loss decrease starts to stagnates, reducing the learning rate leads to further convergence. Adapted from Ref. [22].](/document/doi/10.1515/nanoph-2023-0527/asset/graphic/j_nanoph-2023-0527_fig_001.jpg)

Basic concepts of artificial neural networks and their training. (a) A single neuron where x is the input vector, w and b are, respectively, the weights and biases and f is the activation function. The inset shows the output of the sigmoid activation function. (b) A set of neurons arranged in several, consecutively connected layers, forming a neural network. (c) Learning rate size effect on the loss function optimization. (d) A typical training loss or validation error plot. When the loss decrease starts to stagnates, reducing the learning rate leads to further convergence. Adapted from Ref. [22].

2.1.2 Deep networks with nonlinear activations

A key hypothesis underlying deep learning (“deep” means that a network has many layers) is that the ANN is learning a hierarchy of features from the input data, where each layer is extracting a deeper level of characteristics. In an image for instance, the first layer could be recognizing lines and edges, the second layer may “understand” how they form specific shapes like eyes or ears and a subsequent layer may then analyze the relative positions, orientations and sizes in the ensemble of these features, to identify complex objects like animals or human faces. In consequence, using many layers is essential to ensure that a network has a high abstraction capacity.

Now, it is technically possible to use linear activation functions throughout a network. However, it is trivial to show, that any neural network with multiple layers of linear activation can be identically represented by a single linear layer, as the chain of two linear functions is still linear. Hence, using nonlinear activation functions is crucial in any deep ANN in order to perform hierarchical feature extraction. Various activation functions can be used. The activation which is closest to a biological neuron’s response is probably the sigmoid neuron, which implements a logistic activation function, depicted in the inset of Figure 1a. The sigmoid is however suffering from the fact that for inputs far away from the bias, the gradient is very small. For such inputs learning is very slow. Therefore, other activations such as rectified linear units (ReLU) expressed as max(0, x) often preferred in the hidden layers of an ANN, because of its constant gradient and cheap computation cost. In the “body” of the network, ReLU is usually a very good first choice and leads to robust networks.

The activation of the very last layer of a network, its output layer, needs to be chosen depending on the task to solve, as well as on the numerical range of the output data values. A softmax activation layer is a form of a generalized logistic function, where the sum of the neurons’ activations of the entire layer is normalized. Hence it is adequate for outputs that correspond to a probability distribution like in classification tasks. In regression tasks – inverse design typically falls in this category – a linear output activation function is the simplest first choice, since it can take arbitrary values. Be aware that in case of a linear output activation the ANN needs to learn the data range along with the actual problem to solve. Therefore one should consider normalizing also the output part of the dataset (see also below). In this case Sigmoid (data range [0, 1]) or tanh activation (data range [−1, 1]) can help the network to focus on the essential learning task (c.f. “inductive bias”).

2.1.3 Training

Deep learning is a statistical method; the goal is to adapt the network parameters (weights and biases of the artificial neurons) such that the ANN learns to solve a task that is implicitly defined by a large dataset. The trick to learn from such data is to define a loss function that quantifies the network’s prediction error. More precisely, the loss expresses how much the neural network’s predictions of a set of samples are different from the expected network outputs. The notion of “set” is important, because the loss is defined in a stochastic approximation for batches of samples [53]. The expected outputs obviously need to be known and thus are also part of the training data.

Using an optimization algorithm, the loss function is minimized by modifying the weight and bias parameters of the network. During this optimization, the model repeatedly computes a batch of training samples in forward propagation to calculate the loss. Subsequently the gradients of the loss function with respect to all network weights are calculated in a backpropagation step, using automatic differentiation (autodiff) [54]. The network parameters are finally adapted towards the negative gradient direction, in order to minimize the loss function. Autodiff is the core of deep learning and hence all DL libraries are essentially autodiff libraries with tools for neural network optimization.

In practice, the model parameters are randomly initialized, hence DL is typically not deterministic. Restarting a training process will not give the exact same network. During training, the size of the parameter modification steps is a crucial parameter. It is typically called “learning rate” and takes generally values smaller than 1 (10−3 or 10−4 are common start values). For a given network parameter w i , the update is expressed as w i = w i − LR × ∂loss/∂w i . If the learning rate (LR) is very small, the algorithm requires many iterations to reach the loss function minimum, and if it is too large, it can miss the optimal solution which often is a steep minimum. Thus it is important to reduce the learning rate during training, as depicted in Figure 1c and d.

2.1.4 Which optimizer

The most popular optimization algorithm in deep learning is stochastic gradient descent (SGD) [53, 55], which performs the weight updates as described above. A popular alternative, that often offers a faster convergence are the SGD variants “adam” [56] or “adamW” [57]. It is also more robust with respect to its hyperparameter configuration, and therefore an excellent first choice.

2.1.5 Practical implementation of neural networks

The de facto standard programming language in the deep learning community is Python, but frameworks exist for virtually all programming languages. The most popular libraries to build and train neural networks are “PyTorch” [58], “TensorFlow” with its high-level API “Keras” [59, 60], “Flax”/“JAX” [61], or “MXNet” [62], among others.

2.2 When is deep learning useful?

With the rapidly growing research interest around deep learning in the last years, a newcomer can easily get the impression that DL is the perfect solution to basically any problem. This is a dangerous fallacy. Assuming limitation to reasonable computational invests, deep learning will in many situations actually lead to inferior results compared to conventional methods, and due to data generation and network training, it will often come with a higher total computational cost. We therefore want to start with a survey of inverse design scenarios, and discuss situations in which deep learning may, or rather may not, be an adequate method.

The question of whether deep learning may or may rather not be an interesting option stands and falls with the quantity of available data. If huge amounts of data are available, with an appropriate model layout, deep learning likely works well on essentially any problem [26, 63, 64]. Unfortunately, data is often expensive. In photonics for instance, many simulation methods are relatively slow. In our considerations below, we will therefore assume the case of datasets with high computational cost and limited size, in the order of thousands to tens of thousands of samples.

2.2.1 When to use deep learning

2.2.1.1 Intuition

Deep learning is a data-driven approach: during training, the neural network is trying to figure out correlations in the dataset that allow to link the input to the output values, eventually converging to an empirical model describing the implicit rules behind the dataset (in our case the implicit physics). In that sense, neural network training can be somehow compared to human learning: By mere observations, humans figure out causal correlations in nature. In nano-photonics for instance, a person who is studying plasmonic nanostructures will sooner or later develop an intuition for the expected red-shift of a particle’s localized surface plasmon resonance with increasing particle size.

Therefore, a good question to ask is: With the given dataset, does it seem easily possible to develop an intuition of the physical response? If this is the case, training a deep learning model on a large enough dataset promises to be successful. On the other hand, if a problem or its description is highly entangled and we can only hardly imagine that an intuitive understanding can be learned without further guidance, then an artificial neural network is likely going to have a hard time understanding the correlations in the dataset.

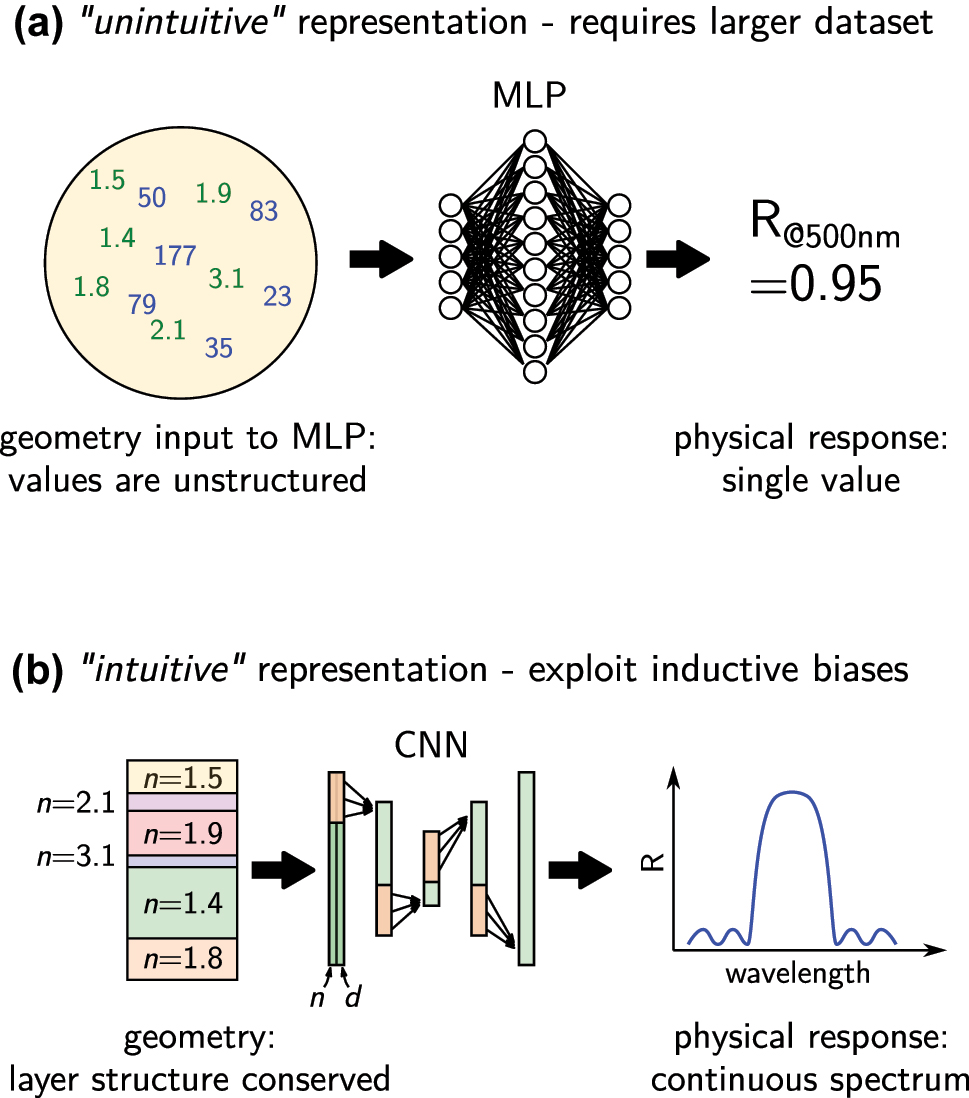

An example is shown in Figure 2. A Bragg mirror design problem is parameterized by its geometry of N dielectric layers with arbitrary material and thickness, and the target physical observable is the reflectivity at a fixed wavelength. The learning problem is a mapping of 2N values to a single reflectivity value. Providing a human with a large set of such data samples would most likely result in confusion rather than in an intuitive understanding of the correlation between geometry and physical property. The problem becomes easier to grasp intuitively if the layer-order is given to the network so it does not need to learn that the order is important. In deep learning this can be done using a convolutional layer, which keeps the input structure and searches correlations only between “neighbor” values. We exploit the convolution’s inductive bias (see also below). We know that periods of the same pair of layers form the ideal Bragg mirror. With that prior knowledge we could further simplify the intuitive accessibility and parameterize the geometry with only two thicknesses and two materials values, this layer-pair being repeated for N/2 times. Finally, instead of predicting only a single reflectivity at one wavelength, we could train the network on predicting reflection spectra in a large spectral window. Now, the geometry can be understood easier and the impact of changes in the layer pair geometry is easier to interpret. A wavelength shift of the stop-band for instance can be easily quantified if a spectrum is given instead of just one reflectivity value. In the same way as the latter representation is easier to interpret for a human, a deep learning model will be capable to develop an empirical model much easier. Other examples where such additional tasks, also called auxiliary tasks, are useful for improving the performance on the machine learning principle task, have been investigated in reference [65], and more recently in the case of the game “Go” [66]. In conclusion, richer information about the problem is often useful for making training faster and less data intensive.

Comparison of two different parameterizations of the same problem. (a) Using a dense neural network, the order of the thicknesses (blue values) and refractive indices (green values) is lost, because every input value is fed into every neuron in the first layer. The network needs to learn during training that the order matters. Furthermore, the correlation between the many input values and the single output reflectivity are difficult to understand. (b) Using a CNN, the layer order can be conserved and using two input channels, even the association of thickness and refractive index of a single layer can be directly passed to the network, hence during training these correlations do not need to be learned. Furthermore, predicting a whole reflectivity spectrum facilitates to identify correlations between changes of the geometry and for example resonance peak shifts. Returning this again via a convolutional layer conserves the order of the spectrum. On small to medium size datasets, exploiting these inductive biases of a CNN can significantly improve performance.

2.2.1.2 Repetitive and speed-critical design tasks

In case it appears reasonable to assume that an intuitive comprehension of the problem can be built, one should also think about the motivation of using deep learning. On often highlighted advantage of DL models is their high evaluation speed (leaving aside the training phase). Using deep learning with the goal to speed up inverse design therefore seems reasonable. The training phase however requires significant computational work for network training and often also for data generation. Hence deep learning makes most sense in cases of highly repetitive design tasks like metasurface meta-atom creation, or in speed-critical scenarios like real-time applications, such as spatial light modulator control.

2.2.1.3 Differentiable surrogate models

Another tremendous strength of deep learning models is the fact that they are differentiable. While gradients in numerical simulations may be obtained with adjoint methods [14], deep learning models can learn differentiable models even from empirical data, for example from experimental measurements.

2.2.1.4 Latent descriptions of high-dimensional data

A key capability of deep learning is the possibility to learn latent representations of complex, high-dimensional data. The latent space is not only a crucial concept in deep generative models [67, 68], it can also be used to compress bulky data [69, 70], or to gain insight into hidden correlations [71–73].

2.2.1.5 Empirical models from experimental data

On data that is very complex and/or high-dimensional, it can be difficult to fit a conventional physical model. A deep learning neural network may be a promising alternative to obtain a differentiable description for the physics, based on experimental data. A specific use-case application may be when a theoretical model fails to reproduce experimental observations. A so-called “multi-modal” [74] model could learn in parallel from experimental data and the simulated data. Both inputs are separately projected in a shared latent space. After successful training, the latent description creates a learned link between experiment and simulated data.

2.2.2 When to NOT use deep learning

Writing a good data generation routine to create a useful training set is at least as challenging, but often even more challenging than writing a good fitness function for a conventional optimization technique. Additionally, it has been demonstrated on several occasions, that simple conventional methods often outperform heavy GPU-based black-box optimization methods [75–78]. Before rushing into data generation, we therefore urge the reader to consider whether conventional techniques may not be a sufficient alternative to deep learning on their specific problem.

2.2.2.1 “Unintuitive” problems or parameterizations

Deep learning may perform badly if the problem or its parameterization is not intuitive, hence where the correlations between input and output are highly abstract. This is the case in the above example of a sequence of N random dielectric layers, where the goal is to map the 2N values of layer thicknesses and materials to a single output value (the reflectivity). While the problem is very easy to solve with the right physical model at hand (e.g. with transfer matrix or s-matrix method), a human presented with examples of such 2N + 1-value samples will have a very hard time to understand the correlation. This can typically be solved by proper pre-processing and network architecture design, but requires supplementary effort.

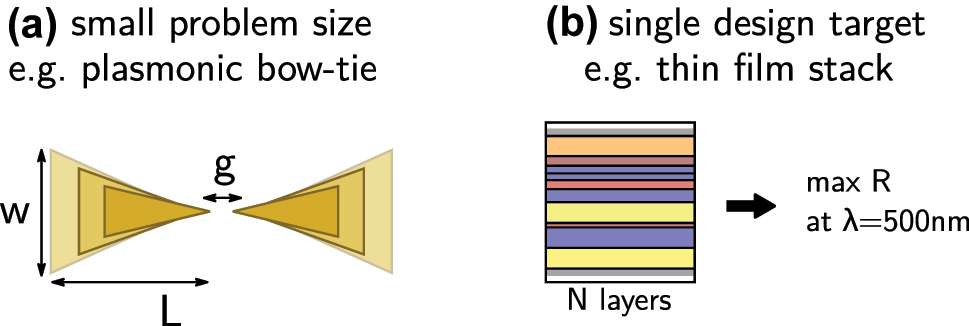

2.2.2.2 Single design tasks and simple problems

We argue that the main argument against deep learning is that it is inappropriately expensive in many situations. If the geometric model used in the design problem is described by only a few free parameters (like 3 or 4), a systematic analysis is probably very promising and maybe even cheaper than generation of a large dataset. In many cases this may furthermore be done around an intuitive, first guess. Similarly, if the geometry is more or less known and only small variations are to be optimized, conventional optimization or again a systematic exploration of all parameters are more appropriate. Such situations are depicted in Figure 3. Even if a systematic analysis cannot be performed with sufficiently dense parameter steps, it may be worth considering conventional interpolation approaches such as Chebyshev expansion or also Bayesian optimization [79–82]. Finally, if the problem consists in solving a single design target, even for complex scenarios a conventional global optimization run is probably the more adequate approach [15].

Examples of problem configurations, for which deep learning is probably not adequate. (a) Problems with few parameters like the here depicted plasmonic bow-tie antenna design (3 free parameters) can easier be solved by conventional approaches, intuition or may even be systematically explored. (b) In problems with a single design target, the computational overhead of deep learning is not paying back. Conventional global optimization is the method of choice.

In conclusion, finding problems that really benefit from deep learning based inverse design is not as obvious as it is often suggested in literature. As an example to support this claim we recall that to date all production-scale metasurface design is being done with conventional lookup tables, while deep learning is still only used on toy-problems for testing.

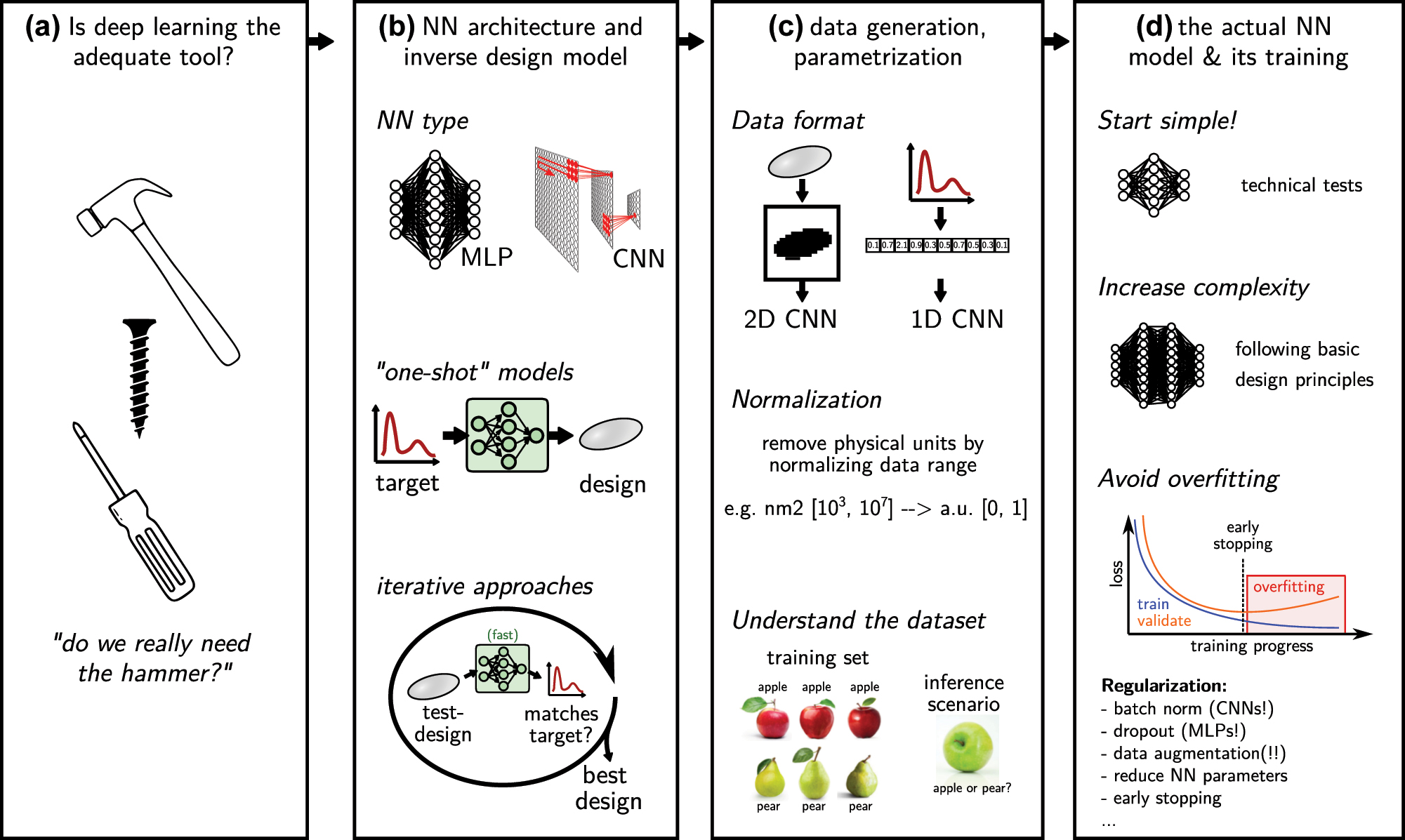

2.3 General workflow and good habits

Let’s suppose we have decided to go for deep learning as method of choice. Before diving into the details of methods for inverse design, we want to provide a loose guide of the typical workflow with suggestions for good habits that should be useful when applying deep learning to any problem, not limited to inverse design. In the following we aim at providing guidelines that implement findings of modern deep learning and that will be useful to get good results with little trial and error. The following section is structured in the way that we believe the workflow should be organized. A schematic overview is depicted in Figure 4.

Workflow for the application of deep learning in inverse design. (a) The workflow should start with an unbiased assessment, whether other methods might not be more adequate than deep learning (“do we need the hammer, or is it rather a screwdriver?”). (b) Think about how the problem can be parameterized. Which network type will be necessary? Is the goal ultimate acceleration (use one-shot inverse design), or a less time critical, best possible optimization (use iterative inverse design). (c) Prepare and understand the dataset. (d) Implement the actual mode. Start simple, increase complexity, avoid overfitting (regularization!). Always keep in mind literature design guidelines.

2.3.1 Overview of the major model architectures

Neurons can be connected in different ways to form an ANN. The most adequate neural network type will also depend on the dataset to be processed. Multilayer perceptrons (MLP), convolutional neural networks (CNN) and recurrent neural networks (RNNs) are the most widely used general architectures. Recently so-called “attention”-layers have gained significant popularity as well. In the following we will discuss the advantages and drawbacks of the different network architectures and give suggestions concerning their applications.

2.3.1.1 Multilayer perceptron (MLP)

The MLP is also called fully connected or dense network, and consists of layers of unstructured neurons, where each neuron of a layer is connected to every neuron of the preceding and of the following layer [83]. Because they scale badly with dimension, MPLs should not be used on raw data, but on features of the data. Exceptions could be natively relational data, or if the dataset consists already of high-level features. In geometric inverse design, raw data might be images of the structure, whereas size and shape parameters would be the features. In consequence, MLPs are typically part of a larger neural network, where features are extracted in a first stage. Those features are then passed into the MLP, for example in various convolutional neural network architectures, or similarly also in the attention heads of transformers (see below) [84, 85]. With large layers the number of free parameters in fully connected networks can quickly diverge, which makes this architecture particularly prone to overfitting.

We argue that MLPs can be a valid option for inverse design, for example when a model is parameterized with only a low number of parameters and/or when the dataset comprises relatively high-level features of low dimensionality (e.g. a low number of size and/or position parameters). However, for more general problems and parameterizations (e.g. parameterization at the pixel/voxel level), we recommend CNNs (see below).

2.3.1.2 Convolutional neural networks (CNN)

CNNs are used since the 1980ies [86–89], and were inspired by how receptive fields in the visual cortex process signals in a hierarchical manner [90]. They work similarly to popular computer vision algorithms of the 1990s that processed images via feature detection kernels [91], with the difference that the CNN kernels are composed of artificial neurons and automatically learn from data, instead of being manually defined. Feature detection itself is performed by convolutions of the kernels with the structured input data (e.g. images) [55]. In the early 2010s, GPUs rendered calculation of discrete convolutions computationally cheap. This allowed to scale-up CNNs, which lead to a breakthrough in computer vision performance. Ever since, CNNs are amongst the most popular artificial neural network architectures that scale well from small up to gigantic data set and problem sizes [21, 92]. In particular the idea of residual blocks with identity connections allowed to scale the depth of CNNs by orders of magnitude (thousand layers and more), while maintaining efficient training performance [22, 93, 94].

The popularity of CNN is a result of many favorable properties. For instance they offer inductive biases (see also below), that are useful for many applications like locality (assuming that neighbor input values are closely related e.g. in an image or a spectrum), or translation invariance of the feature detection (a feature will be detected regardless of its position in the input data). CNNs are easy to configure and very robust in training. They are applicable and perform very well over a large range of input dimensions, e.g. from tiny input arrays to megapixel images, and they scale well to large dataset sizes [67]. They are computationally friendly since the convolutions can be very efficiently calculated on modern GPU accelerators. Please note, that an often forgotten advantage of CNNs is also their ability to work on variable input sizes [95]. And finally, due to their tremendous success in the past 10 years, an abundance of recipes and optimization guides are available in the literature, which lowers the entry barrier for newcomers to an absolute minimum.

2.3.1.3 Recurrent neural networks (RNN)

Recurrent neural networks implement memory mechanisms in networks which process data sequentially. Thanks to this memory, long-term correlations in data sequences can be processed [96, 97]. RNNs have been the state of the art in natural language processing for some time, and due to their success in this area, researchers have applied RNN concepts to various other fields as well [98]. However, RNNs suffer from a main drawback, their training is largely sequential and cannot easily be parallelized, hence training scales badly with increasing amounts of data [85]. With the recent exploding availability of data and highly parallelized accelerator clusters, RNNs were losing foot in terms of performance and have today been mostly replaced by other architectures like CNNs or attention based models like transformers [99, 100].

We argue that RNNs should not be the first choice for typical inverse design tasks, because of their drawbacks such as non-parallel training and more complex hyperparameter tuning compared to CNNs.

2.3.1.4 Graph neural networks (GNN)

Graph neural networks (GNNs) are an emerging class of network architectures that process data represented as graphs. Graphs are natural representations for various data structures, for example social networks or molecules in chemistry [101, 102]. Several variants of GNNs exist, such as convolutional GNNs [103], Graph-attention networks [104], or recurrent GNNs [105]. Please note that also CNNs are strictly speaking a specific type of GNN, processing image-“graphs” where connections exist only between neighbor pixels. One of their advantages is that they can operate on data of variable input size and are very flexible regarding the data format. In physics GNNs have been proposed for example to learn dynamic mesh representations [106]. In nano-photonics, GNNs have started to be used only recently. GNNs have also been used for the decription of optically coupled systems like metasurfaces, including non-local effects [107]. It has been also demonstrated recently that GNNs can learn domain-size agnostic computation schemes. A GNN learned the finite difference time domain (FDTD) time-step update scheme to calculate light propagation through complex environments [108].

In our opinion, GNNs can be a very interesting approach, i.e. for difficult parametrizations. But we argue that they should not be the first choice for a newcomer, because of the scarce literature in nano-photonics and challenges in its proper configuration.

2.3.1.5 Transformer

The transformer is a recent, very successful, attention-based model class [85]. The underlying attention mechanism [109] mimics cognitive focusing on important stimuli while omitting insignificant information. The attention module gives the network the capacity to learn a hierarchy of correlations within an input sequence. In natural language processing (NLP), transformers largely outperform formerly used recurrent neural network architectures, which they entirely replaced in all NLP applications [26, 99]. In 2020 the concept was adopted to computer vision with so-called vision transformers (ViT), followed by important research efforts with some remarkable results [110–113]. However, tuning the hyperparameters of a transformer is significantly more difficult than conceiving a good CNN design, therefore also approaches that combine CNNs with ViTs were proposed [114, 115]. Furthermore, while the transformer’s main advantage is its excellent scaling behavior to huge datasets and model sizes, the need for gigantic datasets is probably also their biggest shortcoming. When the size of the datasets decrease or for smaller model sizes, their advantages diminish [116]. In fact, “small” datasets in the world of transformers still range in the order of hundreds of thousands samples [117].

We argue that transformers are in most cases not the most adequate architecture for inverse design, since their advantages unleash only for datasets of gigantic sizes, in the order of millions or even billions of samples [20, 116].

2.3.2 Which network type to use

Comparing the arguments in favor and against the different network architecture that are presented in the preceding section, we come to the conclusion that, whenever applicable, CNNs are generally the best first choice for approaching an inverse design (or other) problem with deep learning. They are simple to design, robust in training and have excellent scaling behavior. If the data is not in an adequate format, often it is even advantageous to reformat the data in order to render it compatible with a CNN. There is no “one” recipe to do so, but if a meaningful structure exists in the data values, it should be conserved. In case of a sequence of thin film layers for instance, it makes sense to concatenate layer thicknesses and layer materials in two lists of values that can be fed to a one-dimensional CNN (c.f. Figure 2b).

Besides the first technical tests, in general one should not use simple sequences of convolutions, typically called “visual geometry groups” (VGG). It is long known that such VGG-type CNNs have severe limitations and do not scale well with model size [22]. Instead, convolutional layers should be organized in residual blocks [93] or, even better and only slightly more complex, in residual “ResNeXt” blocks with inverted bottleneck layers and grouped convolutions [116, 118]. We refer the reader to the supplementary Python notebook tutorials for a detailed technical description and example implementation [52].

2.3.3 Choosing the inverse design method

2.3.3.1 Iterative DL based approaches

Following conventional inverse design by global optimization, a deep learning surrogate forward model can be used to accelerate iterative optimization for inverse design [51, 119].

Drawbacks of iterative methods are: They are slower than one-shot approaches (due to multiple evaluations through the iterations) and coupled with deep learning, they may bear the risk of convergence to network singularities, since they actively search for extrema in the parameter space [120]. Also convergence to parameters in the extrapolation zone needs to be avoided, since often the physical model collapses in the extrapolation regime, adding an additional technical challenge since the interactive solver needs proper regularization [18, 121]. Such dangerous extrapolations can also be mitigated by increasing ground truth data: a real physical simulator is sometimes used (typically at designs proposed by the surrogate model), and the learned model is updated to take into account these new data. This is a classical procedure in machine-learning enhanced global optimization [122–126].

2.3.3.2 Direct inverse design (“one-shot”)

An alternative class of inverse design methods is one-shot methods. The goal is to create a neural network, which takes the design target as input and immediately returns a geometry candidate that implements the desired functionality. The main advantage of such methods is the ultimate speed. A single network call gives the response to the inverse problem. However, this also means that no optimization is performed in this case, and the solution is likely not the optimum. To obtain close-to-optimum solutions from one-shot approaches, considerable effort needs to be invested in dataset generation, network design, optimum training and proper testing [127]. Alternatively, a one-shot inverse model can be very useful to provide high-quality initial guesses for a subsequent (gradient based) optimization.

2.3.4 The dataset – questions to ask about the data

The data are the most important resource in a deep learning model. It is therefore essential to meticulously carry out data generation or, if data already exists, to understand the dataset. In both cases it is often helpful to perform appropriate preprocessing. As a rough guide, we provide a few questions that one should ask about the data:

How much data is necessary?

Most deep learning architectures require at the very least a few thousand samples, likely more. There are cases where less data can suffice, for instance in transfer learning/fine-tuning of an existing model. In some cases it’s possible to learn on slices of large but few samples, for instance in segmentation tasks [128, 129].

Is random data generation possible?

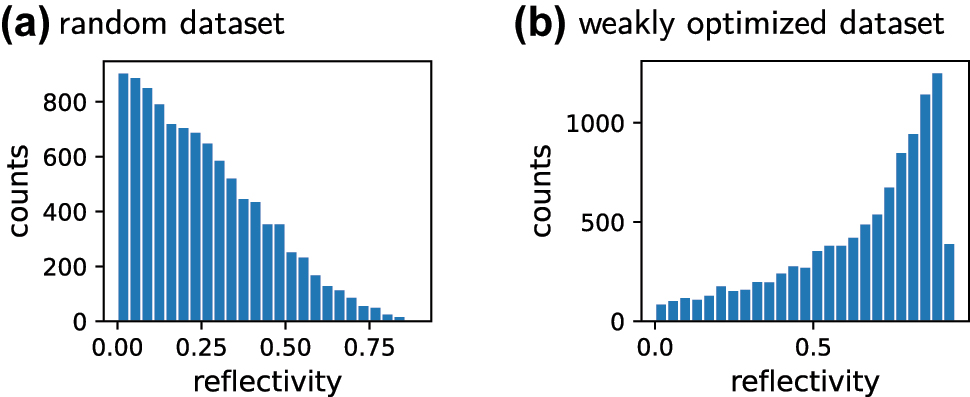

The design targets may be sparse in the parameter space, in which case training using a random dataset may not work. This is often the case in free-form geometries, where the number of design parameters is high. Using a weak optimization method for data generation can help in such cases, but special care must be taken to avoid bias of the dataset towards a specific type of pre-optimized solution, so enough randomness is important [40]. Also systematic data sampling approaches (e.g. Sobol sampling) may be useful [130–132]. The potential difference between random and optimized data is illustrated in Figure 5.

Are there obvious biases in the data?

Biased data is one of the most important challenges in deep learning. In short: “what you put in is what you get out”. For instance if a dataset is built with resonant nanostructures, a network trained on this data may not correctly identify non-resonant cases.

Is the data meaningful?

Samples carrying the essential information may be sparse in the dataset or the important features may be hidden in a large number of irrelevant attributes. A careful pre-processing of the dataset may help in such cases.

Can I shuffle the data?

Are subsets of the data partly redundant (e.g. after sequential data generation)? If yes, a splitting method is required that guarantees that training, validation, and test subsets are independent.

Are the subsets (training data, validation data) representative for the problem?

In order for the training loss and benchmark metrics to be meaningful, each subset needs to fully cover the entire problem to be solved. If we apply the network on significantly different samples than it was trained on, we probe the extrapolation regime, where performance is generally weak. A non-representative validation or test dataset will therefore provide a bad benchmark [18]. An illustration depicting non-representative subsets is shown in Figure 4c.

How should the data be normalized?

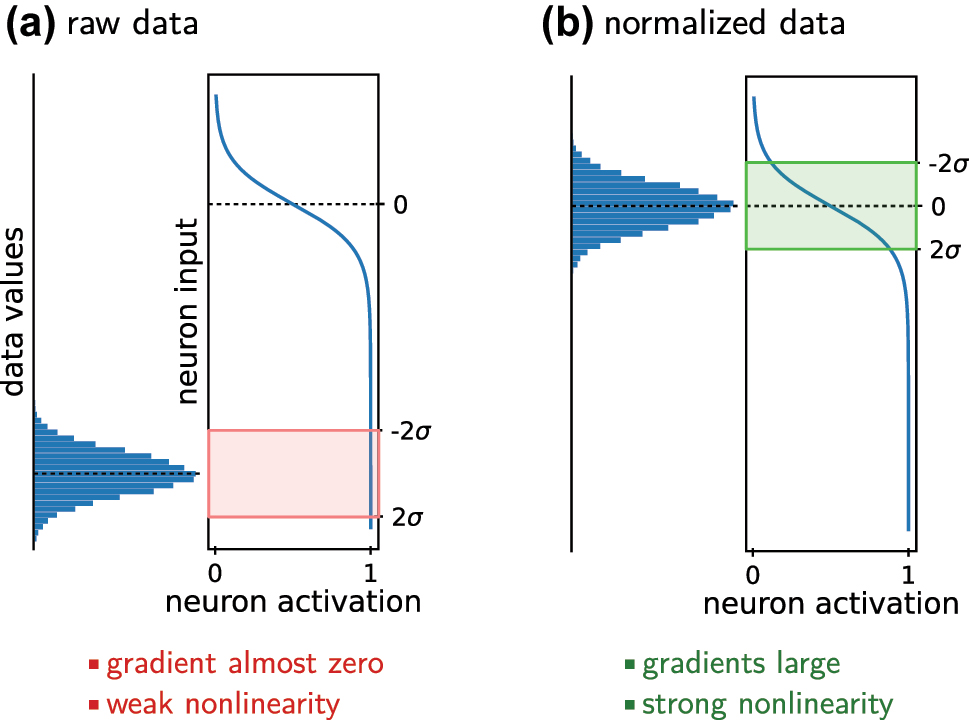

Input values should in general be standardized: Subtract the mean and divide by the standard deviation. Figure 6 depicts why this is essential. The choice of the normalization for the network output is closely linked to the choice of the loss function, but also depends on the data representation and on the significance of outliers, among other factors. If the data has multiple output values or channels, are those of similar numerical magnitude? If not, should they be normalized with the same scaling process or each channel individually? Appropriate normalization is highly important for an accurate model.

Can we exploit inductive biases?

The term inductive bias denotes any assumption about how to solve a task that is implicitly included in the model. In a classification task for instance, the output layer is chosen as a probability distribution. In a regression task, the output layer is a continuous activation function. Such inductive biases improve the network training. In their absence, a network needs to learn them from the data in addition to the actual task. For example a classifier would need to learn that the output is a binary yes/no decision, and parts of the network would be used for this aspect. Often it is possible to include some properties of the problem implicitly in the network architecture. CNNs typically imply translation invariance. Likewise, symmetries may be included, or the valid data range, by choice of an adequate output activation. In physics, a possibility to exploit inductive bias is causality, which may be enforced through Kramers–Kronig relations or, more simply, using a Lorentzian output layer [18, 135].

Histograms of two illustrative datasets of the reflectivity (at λ = 500 nm) of 10,000 dielectric thin-film sequences. (a) Randomly generated thin film sequences. Their reflectivity is in general low. A network for the design of high reflectivity solutions, that is trained on this dataset, will very likely fail. (b) Weakly optimized data generation, starting from random samples. The dataset offers a large portion of medium to high reflectivity samples. Care should be taken that still enough “randomness’ is present to avoid biases towards high R solutions. A network trained on the optimized set will perform better at the task of reflectivity maximization.

Normalizing the data is important. (a) Large numerical values do not exploit the non-linearity of typical neuron activation functions. In case of specific activations like Sigmoid or tanh, furthermore the gradients of the neuron output are very small. Both situations are unfavorable for network training. (b) The numerical values of normalized data cover the full range of an artificial neuron’s non-linearity. Also the neuron gradients are large for all typically used activation functions. This significantly helps the learning process. On non-normalized data, during training first the neuron biases need to be optimized which consumes unnecessary computation. The same argument explains why batch normalization is so effective.

2.3.5 Practical network implementation and training

Once the data is prepared, the full set needs to be split into training, validation and test subsets. With these subsets we are ready for the actual training of a network. The next step is to code and train the model. This section is a little technical, we ask the reader to look at the Python notebook tutorials that demonstrate explicit implementations of networks following the below guidelines [52].

Since a sophisticated model may train very slowly, it is important to do a first technical test with a very simple network model. Even though you shouldn’t use it as a final layout, the initial test network can be a sequence of a few stacked convolutional layers (“Visual Geometry Group”, VGG). With this we test the implementation of the input and output layer dimensions, if the data scales are correct, and if the network output format is adequate. Finally we get a first idea about the typical learning loss. As for the specific configuration of the convolutions, small 3 × 3 kernels, strides 1, and “ReLU” activations can be used as a relatively foolproof rule of thumb [118]. Kernels with larger receptive field are occasionally used in literature [116], but increasing the convolutional kernel size increases quadratically (in 2D) the computational cost as well as the number of network parameters. It can be easily shown that stacking N 3 × 3 convolutions covers a receptive field equivalent to a single layer with kernel size (2N + 1) × (2N + 1), but with significantly less trainable parameters, reduced computational effort and potentially the additional benefit of hierarchical feature extraction capabilities [136].

Once this technical test is passed, it is time to increase the model complexity. Simple convolutions are converted into residual blocks [93] or, even better, “ResNeXt” blocks [116, 118], and more and more layers are added to the network, while paying attention to overfitting. Once overfitting occurs, the network complexity needs to be reduced again, or if the accuracy is not yet sufficient, other regularization strategies can be considered, such as data augmentation (for example, shifting or rotating the input data, if possible). Following dense layers, dropout can be applied, which randomly deactivates some of the neurons during training, leading to a model less prone to overfitting [137].

2.3.5.1 A note on overfitting

Recent research showed that overfitting occurs mainly in medium size networks, at the so-called interpolation threshold, where the number of model parameters is comparable to the number of degrees of freedom of the dataset. It appears that overfitting can not only be avoided by reducing the model size (or increasing the amount of data), but it can in fact be circumvented also by increasing the model size far into the overdetermined region [138–141]. These findings are in direct link with the modern concept of so-called foundation models like the large language models GPT3/GPT4 [26, 142]. Such foundation models are extremely large network models that are pre-trained on a gigantic corpus of generic data, and in a second step are fine-tuned on specific downstream tasks, which is usually successful even on very small datasets [20, 143].

In a typical scenario of deep learning for nano-photonics inverse design however, the model and dataset sizes are likely in a regime where the conventional considerations regarding overfitting hold and corresponding parameter tuning will be beneficial.

2.3.5.2 Dropout and batch normalization

We feel it is important to dedicate a paragraph on dropout and batch normalization. Both methods are applied on training time. Dropout deactivates random subsets of neurons with the goal to avoid overfitting because the model cannot rely on exactly memorized information [137]. Batch normalization normalizes the samples that it receives according to the statistics of the current batch of samples, with the goal to ideally exploit gradients and non-linearities of the activation functions (see also Figure 6) [144].

Even though dropout is often found in internet tutorials, using it in convolutions should be avoided. Its effect in CNNs is entirely different than in dense layers, for which it was originally proposed. If it does help regularizing a CNN, this will be rather accidental, than an effect that can in general be expected [145].

Instead of dropout, batch normalization (BN) should be used in deep CNNs (in particular in deep res-nets), after the convolution and before the nonlinearity [144]. As a rule of thumb, BN and dropout should not be acting on the same layer of neurons [146]. BN is indispensable in very deep architectures as it counteracts internal covariate shift. Furthermore, BN typically stabilizes training in the sense that it allows to use larger learning rates.

However, in smaller networks (in the order of a few tens of convolutions) and in particular with normalized data, BN is often not very useful, since the data normalization is more or less conserved through late layers in smaller networks. On the other hand, BN slows down data processing and training due to its computational overhead [147]. Even worse though, in physics regression tasks, using batch normalization (and also dropout) can be an unexpected pitfall, often difficult to pinpoint. This is because BN and dropout are both dynamic regularization techniques that act differently on every training batch. This can in fact be problematic when the statistics of individual batches fluctuate significantly, which is the case if the training data has a large variance. The network then re-normalizes each data batch very differently, introducing a new statistical error. In many applications, such as in segmentation tasks or in classification problems, the network output goes through a final normalization layer (typically softmax), then this problem is usually negligible [148, 149]. For regression tasks on the other hand, this can be a source of a considerable error. Large batch-sizes in the late training can counteract the problem to a certain extent. Still, in many regression problems, in particular with small to medium size network models, the best solution is often to avoid using batch normalization (or dropout) [150]. When large models are required, BN should be used with an adequate batch-size increase schedule.

To conclude, we want to remind again, that normalization of the input data using the whole data-set statistics is a small, yet crucial step in the pre-processing workflow (see Figure 6). This by itself speeds up training due to the same mechanisms as used in BN, while avoiding some of the problems mentioned here [151].

2.3.6 Training loop good habits

A lot of frustration can be avoided if some good habits are respected concerning the training loop itself.

Learning rate: The learning rate is an important training hyperparameter. If it is too small, training gets stuck in a local minimum. If it is too large, the network parameters may “overshoot”, resulting in a diverging training. The choice of the initial learning rate (LR) is strongly dependent on the problem and on the network architecture. Typically it needs to be tested which LR works well. Once a good starting value is found, the LR should not be left constant through the entire training loop. Training rate schedules of varying complexity exist, but a good starting procedure is usually to gradually reduce the LR (see also Figure 1c and d). A simple learning rate decay schedule such as dividing the LR by a factor 10 every time the validation loss stagnates for a few epochs, is often already very efficient.

Batch size: Evaluating the training loss on small batches of random samples is one of the key mechanisms in deep learning. It is the pivotal procedure that impedes the optimizer to get stuck in local minima. As a result the batch size (BS) is also a crucial hyperparameter that has a tremendous impact on the training convergence and model performance. While it depends also on each specific problem, in general the BS should be small in early training. 16 or 32 are good initial values. Too large starting batch sizes usually converge to local minima and thus lead to less accurate models [152, 153]. However, once the loss stagnates, it is good practice to increase the batch size since it renders the gradients smoother, and helps further decreasing the loss function, similar to the reduction of the learning rate [154]. Please note that, as in any global optimization scheme, it is impossible to evaluate whether the actual global minimum was reached, because in general training will always end in a local minimum.

A few additional, rather technical tips are given in a dedicated Section 4.

2.4 Alternative data-based approaches

Developing, optimizing and training a deep learning model can require a considerable time investment and can be very resource consuming. Having a look at other data based approaches can be worth a try. Methods such as k-means or principle component analysis [155], clustering algorithms like DBscan [156] or t-SNE [157], and machine learning methods like support vector machines [158] or random forest [159] are typically straightforward in their application and often computationally more efficient than large deep learning models. Since this is not the scope of this work, we refer the reader to the above cited literature. The python package scikit-learn is also a very accessible opensource collection of machine learning algorithms and tools with an extensive and well-written online documentation [160].

3 Deep learning based inverse design

After having discussed the general approach and workflow for deep learning, we will now explain different possible approaches to solve inverse design problems via deep artificial neural networks.

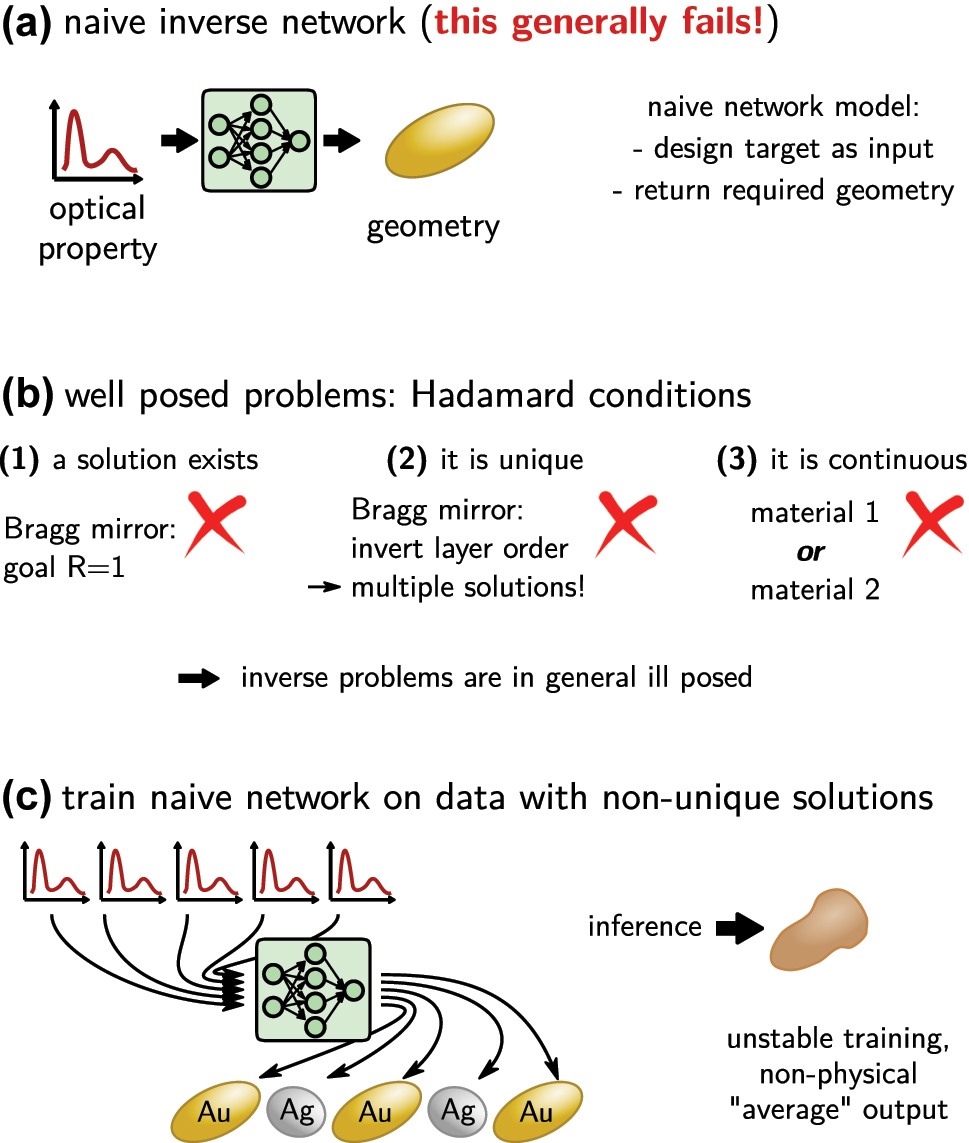

The naive approach to solve inverse design with deep learning would be to use a feed-forward neural network that takes the optical property as input and returns the geometry parameters as output. This would be trained on a large dataset. Unfortunately such approach, as depicted in Figure 7a, does not work.

The crux of the ill posed problem. (a) A naive, not working implementation of a simple feed-forward inverse network would take as input the design target (e.g. an optical property) and returns the design that is required to obtain it. (b) Only well posed physical problems can be solved this way. Such problem obeys the three Hadamard conditions. However, neither of these conditions is in general fulfilled in photonics inverse design, as illustrated by a selected example under each condition. (c) In case of multiple solutions, the training process would iterate of these several times, every time adapting the network parameters to return a different design. Training is unstable and eventually the network will learn some non-physical mix of the multiple solutions. If a non-continuous parameterization is used (here: two distinct materials), the naive network may also return non-allowed mixtures of those.

The main challenge in nanophotonics inverse design is that the problem is in general ill posed, in consequence it is impossible to solve the problem directly. J. Hadamard described a so-called “well posed problem” as one for which a solution does exist, this solution is unique and continuously dependent on the parameterization (c.f. Figure 7b) [13]. The typical inverse design problem however has in general non-unique solutions (multiple geometries yield the same or very similar property). Often design targets exist that cannot be optimally implemented, hence no exact solution exists (e.g. a mirror with unitary reflectivity). And finally, in many cases the physical property of a device is not continuously dependent on the geometry, but the parameter space is at least partially discrete (e.g. if a choice from a finite number of materials has to be made). Training of a naive network on a problem with multiple possible solutions will oscillate between the different possible outputs and finally learn some non-physical average between those different solutions, as illustrated in Figure 7c [45].

Fortunately, methods exist to solve ill posed inverse problems with deep learning. We discuss in the following two popular groups of approaches. The first contains iterative methods that use optimization algorithms to discover the best possible solution(s). The second group consists of direct (“one-shot”) inverse design methods.

3.1 Iterative approaches

Conventional inverse design generally uses iterative methods to search for an optimum solution to a given design problem. One way to speed up inverse design with deep learning is hence to accelerate the iterative process with a deep learning surrogate model. A surrogate model is a forward deep learning network that is trained on predicting the physical property of a structure, hence to solve the “direct” problem [17, 50, 119, 161, 162]. This is usually straightforward, and consists in a feed-forward neural network that takes the design parameters as input (e.g. geometry, materials, …) and returns the physical property of interest via its output layer (e.g. reflection or absorption spectrum, …). Provided a large enough dataset is available, designing and training of such forward model is generally not difficult. We advise to follow the design guidelines from Section 2 and to consult the Python tutorial notebooks.

An accurate forward model can be used in various ways for rapid iterative inverse design.

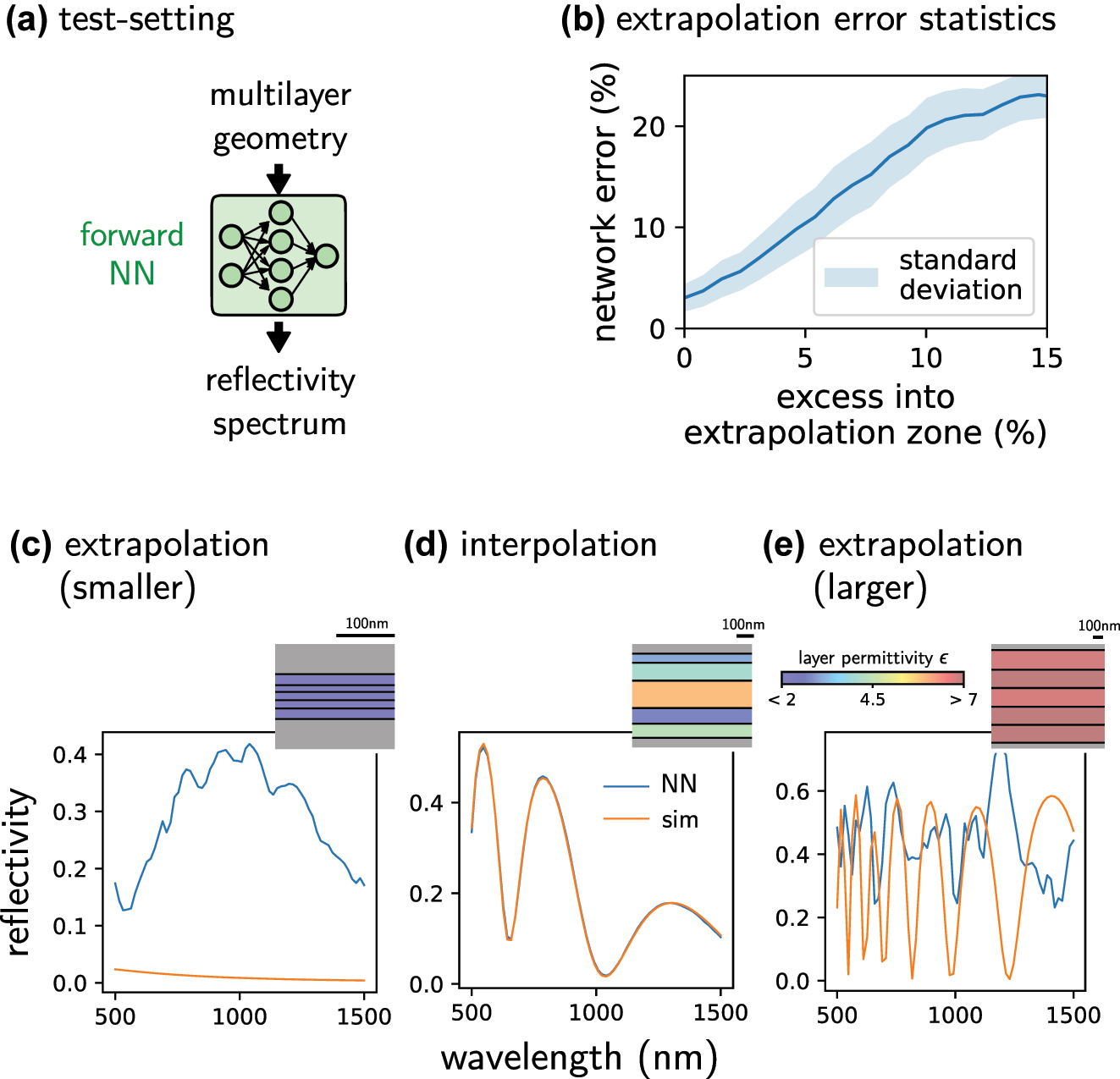

3.1.1 Avoiding the extrapolation zone

The most imminent danger when using iterative optimization with deep learning surrogates is that the optimizer pushes the design parameters outside of the model’s validity range. Most neural networks are strong at interpolation but bad at extrapolation tasks. This is illustrated in Figure 8, where a neural network has been trained on predicting the reflectivity of a dielectric thin film layer stack. This network totally fails in doing so outside of the range of the training data. For input values that are 10 % larger than the largest training data, the average error is already in the order of 20 % (Figure 8b). This illustrates that it is important to constrain the allowed designs to the parameter range that has been used for training, hence to the interpolation regime of the forward model. Please note that even if the model is efficiently constrained, a non-negligible risk of converging to network singularities remains in any case, since optimization algorithms seek extrema of the target function [83, 120]. We insist therefore that careful verification of results obtained from deep learning is crucial in any case. A classical approach is also to retrain the surrogate model with the new data, after output data has been verified by a classical physical model.

Illustration of failed extrapolation. (a) A neural network was trained on predicting the reflectivity spectrum of a thin film layer stack made of ideal dielectrics with constant permittivity ɛ. (b) Average forward network error as function of the excess of the input parameters outside of the training data range. (c) Failed prediction example outside of the training data range (smaller permittivities and thinner layers). (d) Example inside the range of the training-set design parameters (interpolation). The network predictions are accurate. (e) Same as (b) but for larger permittivities and thicker layers than used in training. Orange lines: PyMoosh simulated reflectivity spectra. Blue lines: neural network predicted spectra. Insets represent the used layer stack. Bar heights correspond to the layer thickness according to the scale bar, the color code indicates the layer dielectric’s permittivity.

In order to constrain the designs, in specific cases dependent on the design parameterization, it is possible to formulate a penalty loss. For instance when the design is described by size values for which the limits are known [121]. The penalty loss is added to the optimization fitness function, which then increases, if the optimizer leaves the allowed parameter range. A drawback of this method can be the increasing complexity of the fitness function, and the requirement of problem-specific weight tuning to balance the contributions to the total fitness function.

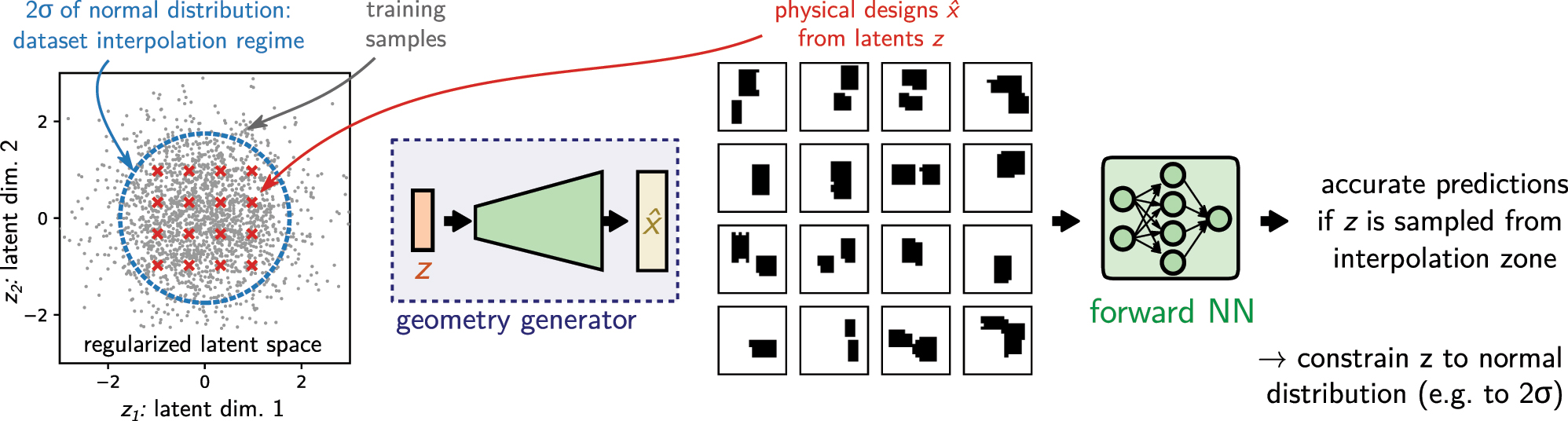

In many situations this tuning can turn out to be complicated or the parameterization of the design is difficult to constrain, for example in free-form optimization, where the design may be given as a 2D image. In such cases, a separate deep learning model can provide an elegant solution. By training in a first step a generative model on the designs alone (without any physics knowledge), a design parameterization can be learned from the dataset [71, 73, 163]. Adequate models are for instance variational autoencoders (VAEs) or generative adversarial networks (GANs). These two approaches are depicted in Figure 9a, respectively 9b. For technical details and an example implementation, we refer the reader to the accompanying tutorial notebooks [52].

Avoid the extrapolation regime of the forward model with learned design parameterization. (a) Sketch of a variational autoencoder (VAE) trained to reconstruct the design. In a VAE, the encoder is trained to return the mean value (µ z ) and standard deviation (σ z ) of the latent variable z. A randomized, normal distributed latent vector is passed to the decoder for the reconstruction task (via random number generator “RNG”). By further constraining σ z with a KL loss (c.f. text), one obtains a compact and smooth latent space that is normally distributed. (b) Sketch of a generative adversarial network (GAN). As in the VAE, by using a normal distributed random number generator during training for the latent space input, the generator develops a smooth and compact latent space, essentially representing the interpolation regime of the dataset.

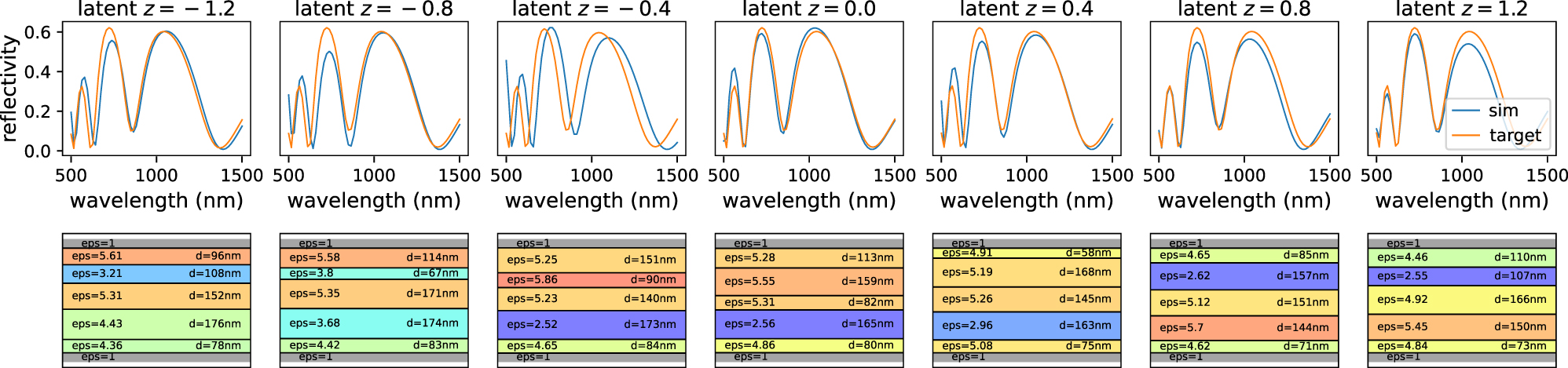

The latent space of such a VAE or GAN represents a learned parameterization of the designs. Thanks to the properties of the regularized latent space of VAEs or GANs, the parameterization is compact and continuous, even if the original design description was not (e.g. if a list of discrete materials is used). Please note that some techniques like variational regularization are not differentiable. Since backpropagation necessarily requires deterministic gradients, reparametrization tricks need to be applied to the backward path in such cases [164]. Provided the VAE or GAN training did converge, this means that every point in the latent space yields a physically meaningful solution. Most importantly, the latent space of a well-trained VAE or GAN is regularized to a normal distribution with unitary standard deviation.

This means that in an iterative optimization loop, we can now constrain the latent variable that describes the designs to the numerical range of a normal distribution (e.g. to a 2σ confidence interval). This is then equivalent to constraining the entire problem to the interpolation regime of the dataset. Practically we replace the original design parameters by the latent input variable of the trained geometry generator, as depicted in Figure 10. In the subsequent optimization, the latent input can be conveniently constrained to a normal distribution e.g. by simply penalizing large values as in inspirational generation [165], or by using a Kullback–Leibler divergence loss (KL loss), as used for variational autoencoders [69, 166].

Re-parameterized the forward model using a learned latent representation as design input: The trained geometry generator (e.g. from a VAE or a GAN) is simply plugged before the input of the forward network. It converts a latent vector z to a physical design

3.1.1.1 Technical hint: GAN normalization

To conclude, we like to put emphasis on an important technical detail in the generative adversarial network layout, especially in deep GAN architectures. In fact, the activation function of the generator output and the normalization of the associated data is important for a robust model design. As discussed above (c.f. sections on data normalization and on batch normalization), the statistical assumption behind deep learning is that the data follows a normal distribution with mean value of zero and unity variance (c.f. also Figure 6). Therefore, the design parameters (x in Figure 9b) should follow this assumption, since the output of the GAN generator is being fed back into the discriminator network. The most simple mean to accomplish this is to normalize the data (e.g. the design images) between [−1, 1], and use a tanh activation function at the generator output.

3.1.2 Heuristics: forward network with global optimization

A robust way to overcome local extrema and assure convergence to the global optimum is using gradient-free heuristics such as evolutionary optimization or genetic algorithms [15, 167–171].

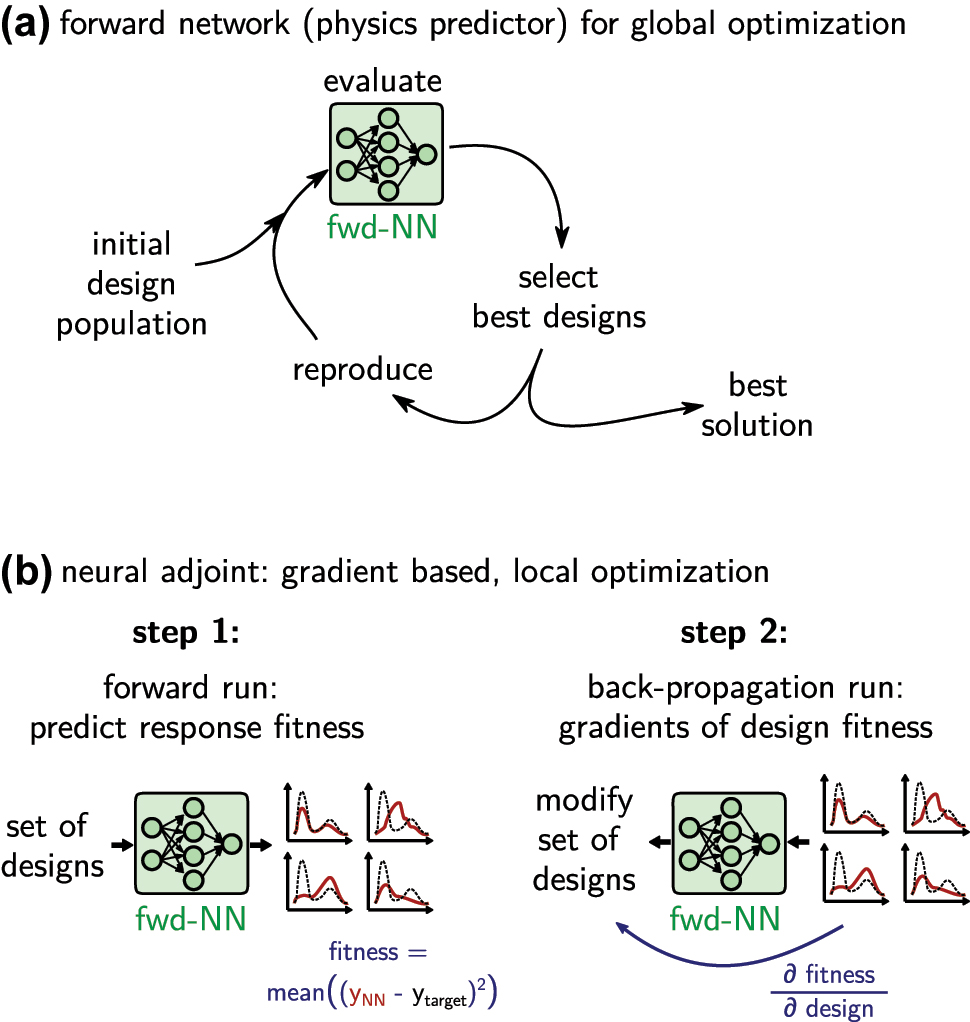

Accelerating global optimization based inverse design with deep learning is in principle straightforward. Instead of using numerical simulations, the evaluation step in the loop of the optimizer (e.g. evolutionary optimization, particle swarm, genetic algorithm …) is done with a deep learning surrogate model. This is depicted in Figure 11a. As discussed in the previous section, it is recommended to constrain the designs to the interpolation regime, for example using a generative model that precedes the physics predictor, as illustrated in Figure 10. The actual optimization can be done with any algorithm, since the operation of the deep learning model is restricted to the calculation of the fitness function.

Inverse design via iterative optimization using a forward model as fast physics solver surrogate. (a) Use forward model to accelerate a global optimization loop. (b) Neural adjoint method: neural networks are differentiable and allow gradient based optimization. Reduce risk of local minima by operating on a large set of random initial designs. The optimization tries to minimize the error between the predicted optical property (solid red line) and the design target (dashed black line).

One of the major advantages of deep learning surrogates is their differentiability. We therefore encourage to not use gradient-free global optimization alone, but combine it with gradient-based optimization for faster convergence. This will be discussed in the following.

3.1.3 Gradient descent – neural adjoint method

While global optimization is robust and generally converges well towards the overall optimum, such methods are also inherently slow since they do not take advantage of gradients. This is unfortunate because gradients are available “for free” in deep learning surrogate models. On the other hand, gradient based approaches tend to get stuck in local minima. This can be accounted for to a certain extent, but it usually depends strongly on the individual design problem if a gradient based method will work.

The idea of gradient based optimization is the same as in the Newton–Raphson method. A fitness function is defined such that it is a measure of the error of a solution compared to the design target. Then, the derivatives of the fitness function with respect to the design parameters of a test solution are calculated and used to modify the test-design towards the negative gradient. By minimizing the fitness function in this way, the solution iteratively gets closer and closer to the ideal design target until a minimum is reached.

Typical numerical simulation methods are not differentiable, and hence gradient based methods cannot be applied directly. While gradients can be calculated using adjoint methods [14], these still require multiple calls of the, generally, slow simulation, and hence are usually computationally expensive. Both problems can be solved to some extent by forward neural network models. A key advantage is, besides the evaluation speed, that gradients can be calculated “for free”, because the network is an analytical mathematical function. For the same reason, the gradients of the surrogate model are also continuous, since this is a key requirement for the network training algorithms. As stated in the beginning, the training procedure of a neural network is in fact a gradient based optimization by itself, therefore the main functionality of all deep learning toolkits is automatic differentiation. A forward neural network model can thus always be used for gradient based inverse design, which consists of two steps that are illustrated in Figure 11b. In a first step, a set of test-designs (typically random initial values) is evaluated with the forward model. Their predicted physical behavior is compared to the design target, for which a fitness function evaluates the error between target and prediction. Now the deep learning toolkit is used to calculate the gradients of this fitness with respect to the input design parameters via backpropagation and the chain rule. Finally, the designs are modified by a small step towards the negative gradients. Repeating this procedure minimizes the fitness [29, 36, 51, 121, 172, 173].

Note that, if the gradients of the underlying physics data source are known, they can be added in the training step. This often leads to better convergence of the model, since the training can use deeper correlations to build its model [174].

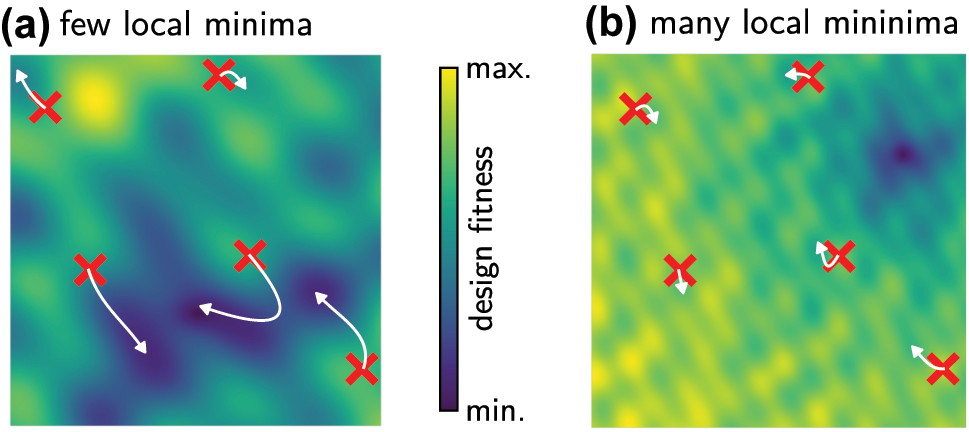

As mentioned before, the main difficulty in this approach is to avoid getting stuck in local minima of the fitness function. To a certain extent this can be accounted for by optimizing a large number of random designs in parallel (see Figure 12a). While such strategy would be prohibitively expensive using numerical simulations, with a machine learning surrogate model it is typically possible to optimize several hundreds or even thousands of designs in parallel. However, depending on the problem, the number of local extrema may be too large for successful convergence (see Figure 12b). This can be tested by running the optimization several times. If multiple runs do not converge to a similar solution, the parameter landscape of the problem is probably too “bumpy” for gradient based inverse design.

Schematic fitness landscapes of (a) a friendly problem with relatively few local extrema. (b) A complicate problem with many local fitness minima. Using gradient based methods with a large number of initial test-sets, problem (a) will likely converge to the global optimum. In problem (b) on the contrary, the chance is high that none of the initial designs is close enough to the global optimum, and optimization will converge to a local solution. The paths taken by a gradient-based method path are indicated by white arrows.

As explained above, it is crucial also in gradient based optimization to remain in the forward model’s interpolation regime since extrapolation bears a high risk of converging towards non-physical minima of the deep learning model [121]. Also, if the dimensionality of a problem is high, the risk of strongly varying gradients further increases and optimization may always converge to unsatisfying local minima. As discussed above, in such cases it is helpful to train a separate generator network that maps the design parameters onto a regularized latent space (e.g. VAEs or GANs, c.f. also Figure 9). Instead of optimizing the physical design parameters, the optimizer then acts on this design latent space. Because the latent space is regularized, it is possible to constrain the designs to the neural network’s interpolation regime e.g. by using a KL loss term in the fitness function.

Finally we want to recall once again, that unlike conventional numerical simulation methods, deep learning surrogates can possess singularities, also called failure modes or adversarial examples [120]. Gradient based optimization, especially, comes with the risk of converging to such singularities of the surrogate network. In fact, the neural adjoint method is very similar to the “fast gradient signed method” that is specifically used to find network failure modes [83]. To avoid convergence to a network singularity, it has been proposed to alternate the evaluation in the optimizer loop between the surrogate network model and exact numerical simulations. Such occasional verification of the optimized solutions effectively eliminates non-physical designs [119].

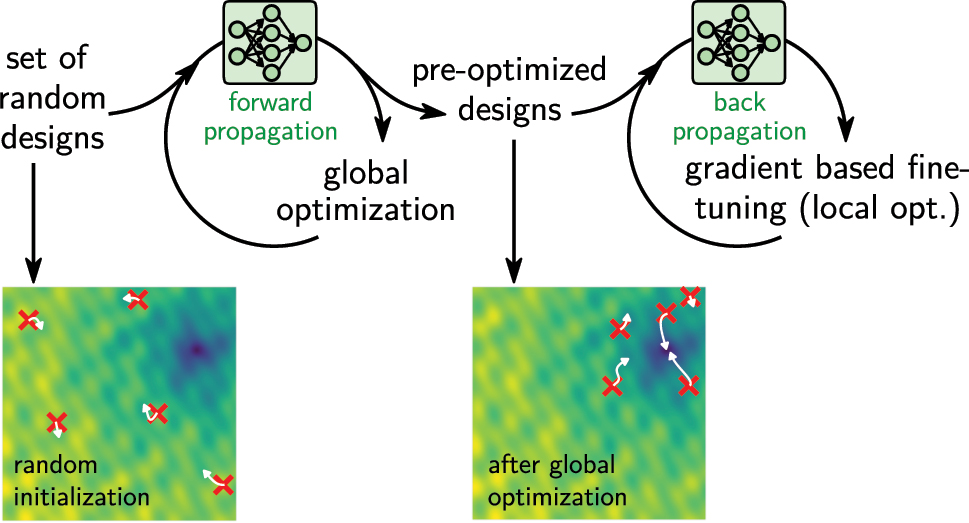

3.1.4 Hybrid approach: global optimization followed by neural adjoint

As mentioned above, inverse design tasks often possess of a large number of local extrema. As illustrated in Figure 12b, in such case gradient based algorithms may get stuck in those local extrema, even if a large number of designs is optimized in parallel. A possible solution can be a combination of iterative global optimization and local gradient based neural adjoint. In such a scenario, a global optimizer runs for a few iterations with a rather large population of solutions. During the first generations global solvers usually converge the most rapidly towards the global optimum. However, they can be expensive in the final convergence towards the exact extremum. Using the population obtained from a few iterations of a global optimization run can be very helpful as the initial set of designs for the neural adjoint method. Those designs are then relatively close to the global optimum and the chance that at least a few manage to avoid local minima is considerably increased. The approach is depicted schematically in Figure 13c.

Global pre-optimization. If too many local minima exist, a promising solution is to start by pre-optimizing a set of random designs with global optimization. The positions of the random initial samples in an illustrative fitness landscape are depicted in the bottom left. After a few iterations of a global optimizer, the solutions are closer to the global optimum, as illustrated in the bottom right. Using this set as initial population for gradient based neural adjoint is likely to converge.

3.2 Direct inverse design networks

In the above discussed iterative approaches, the optimality of the solution is the highest priority. Deep learning methods for direct inverse design on the other hand, put the design speed over all other criteria. The goal is to solve the inverse problem with a single network call. These approaches typically yield the ultimate acceleration, but results are generally not optimum, since no optimization algorithm pushes the solution to the extremum. These “one-shot” techniques rather perform a kind of similarity matching and typically yield solutions that resemble the design target.

For the sake of accessibility we focus first on two popular variants, the tandem network and conditional variational autoencoders, for which also detailed jupyter notebook tutorials are provided as supplemental documents [52]. Subsequently we give a brief overview of other direct inverse design techniques.

3.2.1 Tandem network

One of the most simple configurations for a one-shot inverse design network is the so-called “tandem network” [33]. The tandem network is a variation on autoencoder acting on the physics domain. It takes as input the desired physical property and returns a reconstruction of these physics (for instance a target reflectivity spectrum and its reconstruction). The difference to a conventional autoencoder is that the decoder is trained in a first step on predicting the physical properties using the design parameters as input. This means, the decoder is simply a “forward” physics predictor, solving the direct problem (“fwd” in Figure 14a). Subsequently, a second training step is performed, in which the forward model weights are fixed, and the encoder, which is actually trained on generating the designs, is added to the model (generator “G” in Figure 14a). In this second step, the full model is trained, but now only the physical responses from the training set are used. The physical property (e.g. reflectivity spectrum, etc …) is fed into the encoder, which predicts a design. However, instead of comparing this design to the known one from the dataset, the generated design is fed into the forward model, that predicts the physical property of the suggestion. This predicted response is finally compared with the input response, the error between both being minimized as training loss. This means, that even if multiple possible design solutions exist, the training remains unambiguous since only the physical response of the design is evaluated, regardless of how it is achieved. The full model is then essentially an autoencoder of which the latent space is being forced to correspond to the design parameters by using the fixed, pre-trained forward network as decoder.

Direct inverse design models. (a) Tandem model. The training is divided in two steps. At first a forward predictor is trained on the direct problem. Subsequently, the forward network is fixed and used to train the generator, (b) the conditional variational autoencoder (cVAE) is trained end-to-end in a single run. A latent space z is used to provide additional degrees of freedom to handle ambiguities in the design problem. (c) Inverse problems typically can be solved by multiple solutions. A tandem model will learn only one of possibly multiple solutions, the other remain inaccessible. The cVAE on the other hand typically learns the set of possible solutions which can be retrieved via the latent vector z.

A practical advantage of the tandem is that the inverse problem is split in two sub-problems that are individually easier to fit, compared to end-to-end training of the full inverse problem. In a first step the forward problem is learned, which is usually a relatively straightforward task. This physics knowledge is then used in the second step to guide training of the generator network.

3.2.2 Conditional variational autoencoder (cVAE)