Abstract

Objectives

To develop and test feasibility of patient survey-based quality measures to assess timeliness of cancer diagnosis.

Methods

We developed a 39-item survey through review of literature and measures, input from experts, and cognitive testing. We field-tested it in an urban health system among patients with new cancer diagnoses (prior 3–9 months); surveys were administered by web and mail September–October 2023. We calculated top-box scores and conducted confirmatory factor analysis for evaluative items and assessed construct validity through correlations to the diagnostic interval, number of health care visits, and overall assessments of care quality.

Results

The overall response rate was 23.9 %. The 276 respondents primarily spoke English (89 %), were non-Hispanic white (66 %), age 65+ (68 %), and college graduates (67 %). More than a quarter believed their cancer could have been diagnosed much sooner. Better communication with health care professionals, timely receipt of usual and specialty care, and ease of receipt and follow-up of tests, X-rays, and scans were positively associated with patients reporting that health care professionals could not have diagnosed the cancer much sooner. Patients reporting that it was always easy to receive needed tests, X-rays, and scans and that they always received follow-up on results had more than three times the odds of reporting a diagnostic interval of 30 days or less (p<0.001).

Conclusions

Patients can report on barriers to timely diagnosis associated with the length of their cancer diagnostic interval. Surveys are a promising source of information regarding opportunities to improve timeliness of cancer diagnosis.

Introduction

Timely diagnosis is a critical component of high-quality cancer care [1], [2], [3]. Timely cancer diagnosis may result in improved survival, diagnosis at earlier stages, and improved quality of life [4]. Measuring timeliness and delays in cancer diagnosis is complex [5], and proposed definitions vary [6], [7]. Timeliness can be operationalized as a continuous length of time, a binary judgment of timeliness, or the occurrence of events or experiences associated with delays in receiving timely medical care. However, all these measurement approaches present implementation challenges in the fragmented US health care system, as patients may receive care from multiple clinicians and facilities on their journey to cancer diagnosis. Some factors contributing to delays are not unique to cancer care, including difficulty accessing care (navigation difficulties, wait times for appointments, or insurance barriers), poor communication with clinicians, and not receiving appropriate imaging or other tests and referrals to specialists.

Eliciting patient perspectives is the only way to understand certain aspects of care quality, such as the effectiveness of clinician communication. Surveying patients is also an important way of understanding breakdowns in complex diagnosis processes, which are particularly difficult to study in the US context, as clinicians and facilities rarely share electronic health records (EHRs) and patients often have multiple payers, complicating analyses of EHR and claims. Patient reports and experiences have been a critical tool for improving diagnosis [8], [9], [10], [11], and patient experience surveys are an important method for understanding and measuring health care quality [12]. Patient experience may be especially important for understanding cancer diagnosis, which can be a prolonged, complex, and multi-step process [5]. Surprisingly, little is known about patient experiences of cancer diagnosis in the United States (US), as most of this research has been conducted in other countries [13], [14], [15], especially the United Kingdom (UK) [16], [17], [18], [19]. Large scale patient experience surveys in the US, such as the US Consumer Assessment of Healthcare Providers and Systems (CAHPS®) Cancer Care surveys, focus on assessing treatment and not diagnosis [20]. In this study, we aimed to develop and test the feasibility of administering survey-based patient experience measures of timeliness of cancer diagnosis. We report results on patient experiences as well as psychometric properties of the measures.

Methods

We complied with all relevant national human subjects regulations, institutional policies, and tenets of the Helsinki Declaration. This research was approved by the RAND Human Subjects Protection Committee and Cedars-Sinai Institutional Review Board.

Survey development

In keeping with best practices for patient experience survey development [21], we conducted an environmental scan; sought input from technical experts, stakeholders, and potential users; drafted and iterated on the survey; and conducted cognitive interviews prior to field-testing.

Environmental scan

We reviewed the literature on timeliness and delays in cancer diagnosis and identified the closest existing survey questions and measures. We also interviewed 21 subject matter experts in diagnosis, quality measurement, cancer, and patient surveys. Questions included perspectives on timeliness of cancer diagnosis as a quality gap, causes and factors associated with missed or delayed cancer diagnoses, and usefulness and challenges of surveying patients on cancer diagnosis experiences.

Expert input

We convened a 14-member Technical Expert/Clinical User/Patient Panel (TECUPP), which included seven experts in clinical medicine, quality measurement, and diagnosis alongside seven patients or care partners with lived cancer experience. The TECUPP met in early 2023 to discuss potential survey and measure domains using an established patient-centered framework [22] and iterate on the draft survey. TECUPP members were asked to discuss which factors were (a) important for understanding timeliness of cancer diagnosis, (b) observable to patients and/or care partners, and (c) actionable for health care organizations.

Draft survey

Based on the environmental scan and feedback from the TECUPP, we developed a draft survey. We used or adapted existing items when available [13], [23], [24], [25] and created de novo items when none existed.

Cognitive interviews

To test the degree to which potential survey respondents consistently and accurately comprehended, interpreted, and answered draft questions, we conducted cognitive interviews with 15 patients (identified using a different sampling procedure from the survey) with lived experience of cancer diagnosis within two years and a variety of cancer types and educational levels. We revised the draft survey to reflect feedback from interviewees.

Final survey

The final version of the “Survey About Care Leading Up to Cancer Diagnosis” (Supplement) includes 29 questions related to timeliness of cancer diagnosis, including self-reported cancer type, stage, and route to diagnosis (symptoms, screening, or incidental findings); key dates (first symptoms or test results, diagnosis, referral to specialist); source and involvement of usual care; referrals to specialists; barriers to seeing usual and specialty providers; communication and respect from usual and specialty providers; access and follow-up to test results; access to interpretation; and global experience ratings. We considered 12 items on patients’ perception of getting timely care, communication and respect from providers, access and follow-up to test results, and global experience ratings as “evaluative” items potentially suitable for measure development. In addition, the survey includes eight questions about the demographics of the patient and proxy respondent (if applicable) as well as two open-ended questions for patients to provide additional information about what went well or poorly in their experiences of cancer diagnosis.

Survey sample

We identified survey-eligible patients through the EHR at Cedars-Sinai, a large integrated health system serving a diverse population in urban Los Angeles. Eligible patients had a cancer ICD-10 encounter diagnosis code in the last 3–9 months (December 2022 to June 2023) and no encounters with a cancer ICD-10 code within the five years prior. All cancer ICD-10 codes (C-codes) were included except common skin cancers (C44) and cancer-like syndromes (C96). After survey administration, patients were deemed ineligible and excluded from further analysis if they reported that they had not been diagnosed with cancer in the last 12 months (n=19) or skipped all evaluative items assessing perceived access and quality of care (n=3).
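The code-based part of this eligibility rule can be expressed as a filter over encounter records. The sketch below is a minimal illustration, not the study's extraction code: the `Encounter` record, its field names, and the window dates used in any call are hypothetical. It also assumes that the five-year lookback is counted back from each patient's first qualifying encounter in the window and that the lookback uses the same code definition (C-codes other than C44 and C96); the text does not state either detail.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical encounter record; field names are illustrative only.
@dataclass(frozen=True)
class Encounter:
    patient_id: str
    encounter_date: date
    icd10_code: str  # e.g., "C50.911"

# Excluded categories: common skin cancers (C44) and cancer-like syndromes (C96).
EXCLUDED_PREFIXES = ("C44", "C96")


def is_cancer_code(code: str) -> bool:
    """True for ICD-10 C-codes other than the excluded categories."""
    code = code.strip().upper()
    return code.startswith("C") and not code.startswith(EXCLUDED_PREFIXES)


def years_before(d: date, years: int) -> date:
    """Same calendar day `years` earlier (Feb 29 falls back to Feb 28)."""
    try:
        return d.replace(year=d.year - years)
    except ValueError:
        return d.replace(year=d.year - years, day=28)


def eligible_patients(encounters, window_start: date, window_end: date,
                      lookback_years: int = 5) -> set:
    """IDs with a qualifying code in the window and none in the lookback period."""
    dates_by_patient = {}
    for enc in encounters:
        if is_cancer_code(enc.icd10_code):
            dates_by_patient.setdefault(enc.patient_id, []).append(enc.encounter_date)

    eligible = set()
    for patient_id, dates in dates_by_patient.items():
        in_window = [d for d in dates if window_start <= d <= window_end]
        if not in_window:
            continue
        index_date = min(in_window)  # ASSUMPTION: lookback anchored at first in-window code
        lookback_start = years_before(index_date, lookback_years)
        if not any(lookback_start <= d < index_date for d in dates):
            eligible.add(patient_id)
    return eligible
```

The post-survey exclusions (self-reported no cancer diagnosis, all evaluative items skipped) depend on survey responses and are not part of this EHR filter.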

Data collection

Survey data were collected via a web survey with mail survey follow-up. Patients with an email address on file (n=1,102) were sent an email with a survey link and a reminder email two days later. Patients who did not complete the web survey or who did not have an email address on file were sent a paper packet containing the survey and a business reply envelope one week after the initial survey email. Patients who had not completed the web or mail survey after three weeks were mailed a second paper packet. The data collection period was closed three weeks after the second packet was mailed. All survey and recruitment materials were in English.
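The contact protocol can be written as a per-patient schedule. This is a hypothetical sketch: the start date passed in is arbitrary, and it assumes the "three weeks" before the second paper packet are counted from the first paper packet, which the text does not state explicitly. In practice, each follow-up step would be sent only to patients who had not yet responded.

```python
from datetime import date, timedelta
from typing import List, Tuple


def contact_schedule(start: date, has_email: bool) -> List[Tuple[date, str]]:
    """Planned contact dates for one sampled patient, starting from the first email date."""
    steps = []
    if has_email:
        steps.append((start, "email with survey link"))
        steps.append((start + timedelta(days=2), "reminder email"))
    first_packet = start + timedelta(weeks=1)
    steps.append((first_packet, "first paper packet with business reply envelope"))
    # ASSUMPTION: the three-week gap is measured from the first paper packet.
    second_packet = first_packet + timedelta(weeks=3)
    steps.append((second_packet, "second paper packet"))
    steps.append((second_packet + timedelta(weeks=3), "data collection closes"))
    return steps
```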

Analyses

Descriptive analyses

We calculated the diagnostic interval starting with the patient-reported date of first visit/test leading to their cancer diagnosis and ending with the date a health care professional communicated the diagnosis. If month and/or year were not provided, we considered the diagnostic interval to be missing. If the first visit/test and diagnosis date were reported to be the same month and year, but no day was provided, we estimated the diagnostic interval to be 15 days.
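The interval rules above can be sketched as a small function, together with the interval categories reported in the results. This is an illustration under stated assumptions, not the authors' code: dates are passed as hypothetical `(year, month, day)` tuples, the 15-day rule is applied when either day is missing and both dates share a month and year, and a missing day with different months is treated as missing because the text does not specify that case.

```python
from datetime import date
from typing import Optional, Tuple

DatePart = Tuple[Optional[int], Optional[int], Optional[int]]  # (year, month, day)


def diagnostic_interval(first: DatePart, diagnosis: DatePart) -> Optional[int]:
    """Days from first visit/test to the communicated diagnosis, or None if missing."""
    (y1, m1, d1), (y2, m2, d2) = first, diagnosis
    if None in (y1, m1, y2, m2):
        return None  # month and/or year not provided: interval is missing
    if d1 is None or d2 is None:
        if (y1, m1) == (y2, m2):
            return 15  # same month and year but no day provided
        return None  # ASSUMPTION: case not specified in the text
    return (date(y2, m2, d2) - date(y1, m1, d1)).days


def interval_category(days: Optional[int]) -> str:
    """Bins matching the diagnostic-interval categories used in the results."""
    if days is None:
        return "Missing"
    if days <= 30:
        return "≤30 days"
    if days <= 60:
        return "31–60 days"
    if days <= 90:
        return "61–90 days"
    if days <= 180:
        return "91–180 days"
    return "181+ days"
```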

To promote ease of interpretation and set high standards for care quality, we calculated top-box scores for each evaluative survey item [26]. This means we classified the response(s) indicating the best experiences as 100 and all other responses as 0 (e.g., “always” = 100 and all other responses = 0; a rating of 9 or 10 out of 10 = 100 and all other responses = 0). We report results stratified by initial presentation and cancer stage at diagnosis.
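Top-box scoring is a simple recode followed by an average. The sketch below shows it for the two item formats mentioned above; the constant names are hypothetical, and it assumes skipped items are left out of the denominator rather than scored 0, which is consistent with the varying denominators in the tables but is not stated in the text.

```python
from statistics import mean
from typing import Iterable, Optional

# Best-experience responses for two item formats (names are illustrative).
ALWAYS = frozenset({"Always"})        # Never/Sometimes/Usually/Always items
RATING_9_OR_10 = frozenset({9, 10})   # 0-10 rating items


def top_box(response, best: frozenset) -> Optional[int]:
    """Score one response: 100 if it is a best-experience response, else 0."""
    if response is None:
        return None  # ASSUMPTION: a skipped item stays missing rather than scoring 0
    return 100 if response in best else 0


def item_top_box_score(responses: Iterable, best: frozenset) -> float:
    """Item-level top-box score: mean of 0/100 scores among respondents who answered."""
    scores = [s for s in (top_box(r, best) for r in responses) if s is not None]
    return mean(scores)
```

For example, responses of "Always", "Usually", "Always", "Never", and one skip give an item score of 50.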

Measure properties

Among the 12 evaluative items on the survey, two were treated as single-item measures of patients’ overall assessments of care (Overall Rating of Care and No Perceived Delay in Cancer Diagnosis). We conducted confirmatory factor analysis (CFA) to evaluate the factorial structure of the remaining 10 evaluative items assessing various aspects of care. We hypothesized two single-item measures (Getting Timely Usual Care and Getting Timely Specialty Care, measured by two items about how often patients were able to see their usual or specialty health care professional(s) as soon as needed) and three factors: Communication with Usual Care Professionals (3 items), Communication with Specialists (3 items), and Getting Tests and Results (2 items). We used means- and variance-adjusted weighted least squares estimation to account for the dichotomous nature of top-box scores. We used a criterion of factor loadings ≥0.40 [27] for inclusion within proposed factors and assessed overall model fit using the Comparative Fit Index (CFI), the root mean square error of approximation (RMSEA), and the weighted root mean square residual (WRMR); models with good fit typically have CFI>0.95, RMSEA<0.05, and WRMR<1.0 [28], [29]. With the factorial structure confirmed, we calculated composite measure scores for each factor as the average of the top-box-scored items included in that factor and assessed the degree to which the composite measures capture distinct content domains by calculating their correlations; correlations exceeding 0.80 suggest insufficiently distinct aspects of care. We also calculated composite reliability (the reliability of observed scores calculated as composites [e.g., the mean] of individual items) using coefficient omega, which uses parameters from the factor-analytic model and is recommended over Cronbach’s alpha [30], [31]. We calculated categorical omega to account for the ordinal nature of item top-box scores [32], [33]. Larger values indicate more precise measurement of the underlying construct; composite reliability of 0.70 or higher is considered adequate [34].
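The composite scoring and the distinctness check described above can be sketched in a few lines. This sketch assumes a respondent's composite is averaged over the items they answered (the text does not say how partially missing composites were handled) and uses pairwise deletion for correlations; the 0.80 threshold comes from the text. It does not reproduce the CFA or the categorical omega estimates, which require a dedicated factor-analysis package.

```python
from math import sqrt
from statistics import mean
from typing import Optional, Sequence


def composite_score(item_scores: Sequence[Optional[int]]) -> Optional[float]:
    """Respondent-level composite: mean of the answered top-box (0/100) items."""
    answered = [s for s in item_scores if s is not None]
    return mean(answered) if answered else None


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def insufficiently_distinct(x, y, threshold: float = 0.80) -> bool:
    """Flag two measures whose correlation exceeds the threshold.

    Uses only respondents with non-missing scores on both measures (pairwise deletion).
    """
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    xs = [a for a, _ in pairs]
    ys = [b for _, b in pairs]
    return pearson_r(xs, ys) > threshold
```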

To assess construct validity, we examined the degree to which the composite measures were related to overall assessments of care by running linear or logistic regression models in which each measure predicted the following outcomes calculated from survey responses: Overall Rating of Care, Perceived Timeliness of Cancer Diagnosis (i.e., no perceived delay), Number of Visits to Health Care Professional During Diagnostic Interval, and Diagnostic Interval less than or equal to 30 days. A diagnostic interval of one month is commonly used in studies of cancer diagnostic intervals and aligns with international benchmarks, such as the National Health Service’s Faster Diagnosis Standard [35].
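Each measure-outcome model is a regression with a single predictor. For the binary outcomes, the sketch below fits a one-predictor logistic regression by Newton-Raphson and reports the odds ratio as exp(b1). It is an illustration, not the authors' analysis code, and the predictor scaling is an assumption: if a 0–100 top-box score is rescaled to 0–1, exp(b1) contrasts a score of 100 with a score of 0, whereas on the raw 0–100 scale it would be per one-point change.

```python
from math import exp
from typing import Sequence, Tuple


def fit_logistic(x: Sequence[float], y: Sequence[int],
                 max_iter: int = 50, tol: float = 1e-10) -> Tuple[float, float]:
    """Maximum-likelihood (b0, b1) for P(y=1) = 1 / (1 + exp(-(b0 + b1*x)))."""
    b0 = b1 = 0.0
    for _ in range(max_iter):
        # Score vector (g) and information matrix (h) at the current estimates.
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p
            g1 += (yi - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        # Newton-Raphson step: solve the 2x2 system h @ step = g.
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) < tol and abs(step1) < tol:
            break
    return b0, b1
```

With a binary predictor, the fitted odds ratio equals the cross-product ratio of the corresponding 2×2 table, which makes a convenient check: odds of 0.5 when x=0 and 2 when x=1 give exp(b1) = 4.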

Results

Survey results

We received 276 surveys from eligible respondents, for an overall response rate of 23.5 %; 21.4 % responded by web and 78.6 % by mail. Approximately two-thirds were age 65 or older; 6.5 % were non-Hispanic Black or African American, 12.3 % were Hispanic, and 65.6 % were non-Hispanic white (Table 1). Two-thirds of respondents had a college degree or more. Eleven percent of respondents were proxies responding on behalf of the patient. Respondents had sex and ethnicity distributions similar to those of the overall sample of individuals to whom we sent the survey (Supplementary Appendix Table A.1), but were less likely to be Black or African American and were generally older than the overall sample.

Characteristics of survey respondents (n=276).

| Characteristic | n (%) |
|---|---|
| Sociodemographic characteristics | |
| Sex | |
| Female | 151 (54.7 %) |
| Male | 125 (45.3 %) |
| Age | |
| 18–39 years | 12 (4.4 %) |
| 40–64 years | 76 (27.7 %) |
| 65–79 years | 137 (50.0 %) |
| ≥80 years | 49 (17.9 %) |
| Race/ethnicity | |
| Non-Hispanic Black or African American | 18 (6.5 %) |
| Hispanic (of any race) | 34 (12.3 %) |
| Non-Hispanic white | 181 (65.6 %) |
| Other | 43 (15.6 %) |
| Education | |
| Less than high school | 7 (2.5 %) |
| High school graduate or GED | 14 (5.1 %) |
| Some college or 2-year degree | 71 (25.8 %) |
| College degree or more | 183 (66.5 %) |
| Primary languageᵃ | |
| English | 239 (88.8 %) |
| Spanish | 8 (3.0 %) |
| Other | 22 (8.2 %) |
| Clinical characteristics | |
| Cancer type | |
| Anal | 6 (2.2 %) |
| Bladder | 8 (2.9 %) |
| Brain | 8 (2.9 %) |
| Breast | 72 (26.2 %) |
| Cervical | 3 (1.1 %) |
| Colon/rectal | 12 (4.4 %) |
| Endometrial/uterine | 9 (3.3 %) |
| Head and neck | 7 (2.5 %) |
| Kidney | 5 (1.8 %) |
| Leukemia/lymphoma/myeloma | 28 (10.2 %) |
| Liver | 6 (2.2 %) |
| Lung | 22 (8.0 %) |
| Melanoma | 5 (1.8 %) |
| Ovarian | 6 (2.2 %) |
| Pancreatic | 18 (6.5 %) |
| Prostate | 36 (13.1 %) |
| Stomach/esophageal | 7 (2.5 %) |
| Thyroid | 6 (2.2 %) |
| Other | 11 (4.0 %) |
| Stage at diagnosis | |
| Stage 0 (in situ) | 15 (5.7 %) |
| Stage 1 | 63 (23.9 %) |
| Stage 2 | 39 (14.8 %) |
| Stage 3 | 28 (10.6 %) |
| Stage 4/metastatic | 41 (15.5 %) |
| Don’t know/not sure | 78 (29.5 %) |
| Initial presentation | |
| Screening test abnormal | 60 (21.9 %) |
| Incidental finding | 84 (30.7 %) |
| Symptoms | 122 (44.5 %) |
| Diagnostic interval | |
| ≤30 days | 129 (46.7 %) |
| 31–60 days | 50 (18.1 %) |
| 61–90 days | 22 (8.0 %) |
| 91–180 days | 22 (8.0 %) |
| 181+ days | 23 (8.3 %) |
| Missing | 30 (10.9 %) |
| Number of visits to health care professional during diagnostic interval | |
| 0–1 | 61 (22.7 %) |
| 2 | 66 (24.5 %) |
| 3–4 | 78 (29.0 %) |
| 5+ | 56 (20.8 %) |
| Don’t know/not sure | 8 (3.0 %) |

All characteristics were self-reported in survey responses. Categories not adding up to 276 indicate missing data; missing is shown when >5 %. ᵃSurvey materials were available only in English. Although 30 respondents reported primary languages other than English, we received English-language survey responses from all of these respondents. Only 13 respondents reported ever wanting interpreter services during their cancer diagnosis, indicating that most of these individuals were comfortable communicating in English even if it was not their primary language. Five respondents reported having someone help translate the survey.

Respondents were diverse regarding cancer type, stage at diagnosis, and initial presentation. The most common cancer types were breast; prostate; leukemia, lymphoma, or myeloma; lung; and pancreatic (Table 1). Compared to national incidence rates, breast cancer was overrepresented among respondents, while prostate, lung, and colorectal cancers were each slightly underrepresented [36]. While 29.5 % of respondents reported not knowing or being unsure of their cancer stage, 29.6 % reported being diagnosed at Stage 0 or 1, 25.4 % at Stage 2 or 3, and 15.5 % at Stage 4. Forty-five percent reported that their diagnostic interval began with a visit to a health care professional to discuss symptoms; 30.7 % reported that their cancer initially presented as an incidental finding, and 21.9 % reported that their diagnostic interval began with an abnormal result on a cancer screening test.

Patient-reported diagnostic intervals (days between initial presentation and diagnosis) varied: 46.7 % of patients reported intervals of 30 days or fewer and 8.3 % reported intervals greater than 180 days, while 10.9 % did not provide enough information to assign an interval category. Only 3.0 % of patients were unsure of the number of visits to a health care professional during the diagnostic interval; 22.7 % reported no visits or one visit, and 20.8 % reported five or more visits. Patients diagnosed at later stages and those with symptomatic initial presentations tended to report shorter diagnostic intervals (Table 2).

Length of diagnostic interval and number of visits to health care professionals during diagnostic intervals; overall and for subgroups of interest.

| | All (n=276) | Screening test (n=60) | Incidental finding (n=84) | Symptomatic (n=122) | p-Value (presentation) | Stage 0–1 (n=78) | Stage 2–3 (n=67) | Stage 4 (n=41) | p-Value (stage) |
|---|---|---|---|---|---|---|---|---|---|
| Diagnostic interval (continuous) | | | | | | | | | |
| Mean, daysᵃ | 69 | 79 | 93 | 48 | 0.059 | 92 | 69 | 44 | 0.188 |
| Diagnostic interval (binary) | | | | | | | | | |
| ≤30 days | 52 % (129/246) | 42 % (24/57) | 45 % (34/75) | 63 % (66/105) | 0.014 | 45 % (33/74) | 52 % (32/62) | 76 % (28/37) | 0.008 |
| ≤90 days | 82 % (201/246) | 79 % (45/57) | 77 % (58/75) | 87 % (91/105) | 0.224 | 73 % (54/74) | 81 % (50/62) | 89 % (33/37) | 0.131 |
| Number of visits during diagnostic interval (categorical) | | | | | | | | | |
| 0–1 visits | 23 % (61/261) | 21 % (12/56) | 20 % (16/80) | 27 % (31/116) | 0.937 | 15 % (11/72) | 30 % (20/67) | 28 % (11/39) | 0.092 |
| 2 visits | 25 % (66/261) | 27 % (15/56) | 28 % (22/80) | 23 % (27/116) | | 28 % (20/72) | 21 % (14/67) | 15 % (6/39) | |
| 3–4 visits | 30 % (78/261) | 30 % (17/56) | 29 % (23/80) | 30 % (35/116) | | 39 % (28/72) | 25 % (17/67) | 23 % (9/39) | |
| 5 or more visits | 21 % (56/261) | 21 % (12/56) | 24 % (19/80) | 20 % (23/116) | | 18 % (13/72) | 24 % (16/67) | 33 % (13/39) | |

Initial presentation groups total n=266; cancer stage groups total n=186. We tested the statistical significance of group comparisons by initial presentation and by cancer stage at diagnosis using ANOVA for the diagnostic interval (continuous) and chi-square tests for the other variables.
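As a worked check of the chi-square comparisons, the sketch below computes Pearson's chi-square statistic (without continuity correction) for the ≤30-day counts in Table 2, with groups as rows and ≤30 vs >30 days as columns. For exactly 2 degrees of freedom, the chi-square upper-tail probability has the closed form p = exp(−χ²/2), so no statistics library is needed. It assumes the reported p-values came from this uncorrected test; it is not the authors' code.

```python
from math import exp
from typing import List, Tuple


def pearson_chi_square(table: List[List[int]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, df


def p_value_2df(chi2: float) -> float:
    """Upper-tail probability of a chi-square variable with exactly 2 df."""
    return exp(-chi2 / 2)


# Rows: screening, incidental, symptomatic; columns: <=30 days, >30 days.
by_presentation = [[24, 57 - 24], [34, 75 - 34], [66, 105 - 66]]
# Rows: stage 0-1, stage 2-3, stage 4.
by_stage = [[33, 74 - 33], [32, 62 - 32], [28, 37 - 28]]

for label, table in [("presentation", by_presentation), ("stage", by_stage)]:
    chi2, df = pearson_chi_square(table)
    print(f"{label}: chi2={chi2:.2f}, df={df}, p={p_value_2df(chi2):.3f}")
```

For initial presentation this gives χ² ≈ 8.5 on 2 df and p ≈ 0.014; for cancer stage, p ≈ 0.008. Both agree with the ≤30-day p-values in Table 2.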

Quality of care measure properties

We derived seven quality of care measures from the survey: two single-item overall assessments of care and five measures assessing aspects of care quality during the cancer diagnostic interval (two single-item measures, Getting Timely Usual Care and Getting Timely Specialty Care, and three multi-item composite measures, Communication with Usual Care Professionals, Communication with Specialists, and Getting Tests and Results, which were confirmed in CFA). The CFA model (two single-item measures and three factors representing the three multi-item composite measures) provided an excellent fit to the data, χ²(27)=26.18, p=0.51; CFI=1.00; RMSEA=0.00; WRMR=0.49. Table 3 displays the factor loadings and corrected item-total correlations for the eight evaluative items proposed for the three factors, along with internal consistency reliability (categorical omega) for each composite measure. Factor loadings range from 0.71 to 1.00 and corrected item-total correlations range from 0.39 to 0.76, suggesting these items are strong indicators of the corresponding factor. Top-box-scored items included in each factor were averaged to generate scores for each composite measure. The five measures assessing aspects of care quality are moderately correlated, with a range of r=0.25 to r=0.50 (Table 4), indicating that they measure related but distinct aspects of care. The least correlated measures are Getting Timely Usual Care and Communication with Specialists (r=0.25) and Getting Timely Usual Care and Getting Tests and Results (r=0.31).

Psychometric properties of proposed composite measures assessing timeliness of cancer diagnosis.

| Composite measures and component items | Item-total correlation | Factor loading | Top-box score |
|---|---|---|---|
| Communication with Usual Care Professionals (categorical omega = 0.86, 95 % CI [0.80, 0.91]). During the time leading up to your cancer diagnosis, how often did your usual health care professional(s) … | | | 76 % (149.5/197) |
| … | 0.71 | 0.92 | 72 % (138/193) |
| … | 0.75 | 1.00 | 73 % (141/192) |
| … | 0.58 | 0.89 | 85 % (164/192) |
| Communication with Specialists (categorical omega = 0.88, 95 % CI [0.79, 0.93]). During the time leading up to your cancer diagnosis, how often did the specialist(s) you saw … | | | 88 % (167.83/191) |
| … | 0.70 | 0.96 | 85 % (162/190) |
| … | 0.76 | 0.97 | 86 % (164/190) |
| … | 0.63 | 0.91 | 92 % (176/191) |
| Getting Tests and Results (categorical omega = 0.74, 95 % CI [0.67, 0.80]). During the time leading up to your cancer diagnosis … | | | 67 % (182/272) |
| … | 0.39 | 0.84 | 63 % (168/267) |
| … | 0.39 | 0.71 | 72 % (191/267) |

CI, confidence interval.

Correlations among composite measures and single-item measures.

| Measure | Getting Timely Usual Care | Communication with Usual Care Professionals | Getting Timely Specialty Care | Communication with Specialists | Getting Tests and Results |
|---|---|---|---|---|---|
| Getting Timely Usual Care: during the time leading up to your cancer diagnosis, how often were you able to see your usual health care professional(s) as soon as you needed? | – | | | | |
| Communication with Usual Care Professionalsᵃ | 0.42 | – | | | |
| Getting Timely Specialty Care: during the time leading up to your cancer diagnosis, how often were you able to see the specialist(s) as soon as you needed? | 0.41 | 0.50 | – | | |
| Communication with Specialistsᵃ | 0.25 | 0.43 | 0.45 | – | |
| Getting Tests and Resultsᵃ | 0.31 | 0.41 | 0.45 | 0.47 | – |

Correlations presented are Pearson correlations. All correlations are significant at p<0.001. ᵃItem text shown in Table 3.

Overall reported care experiences

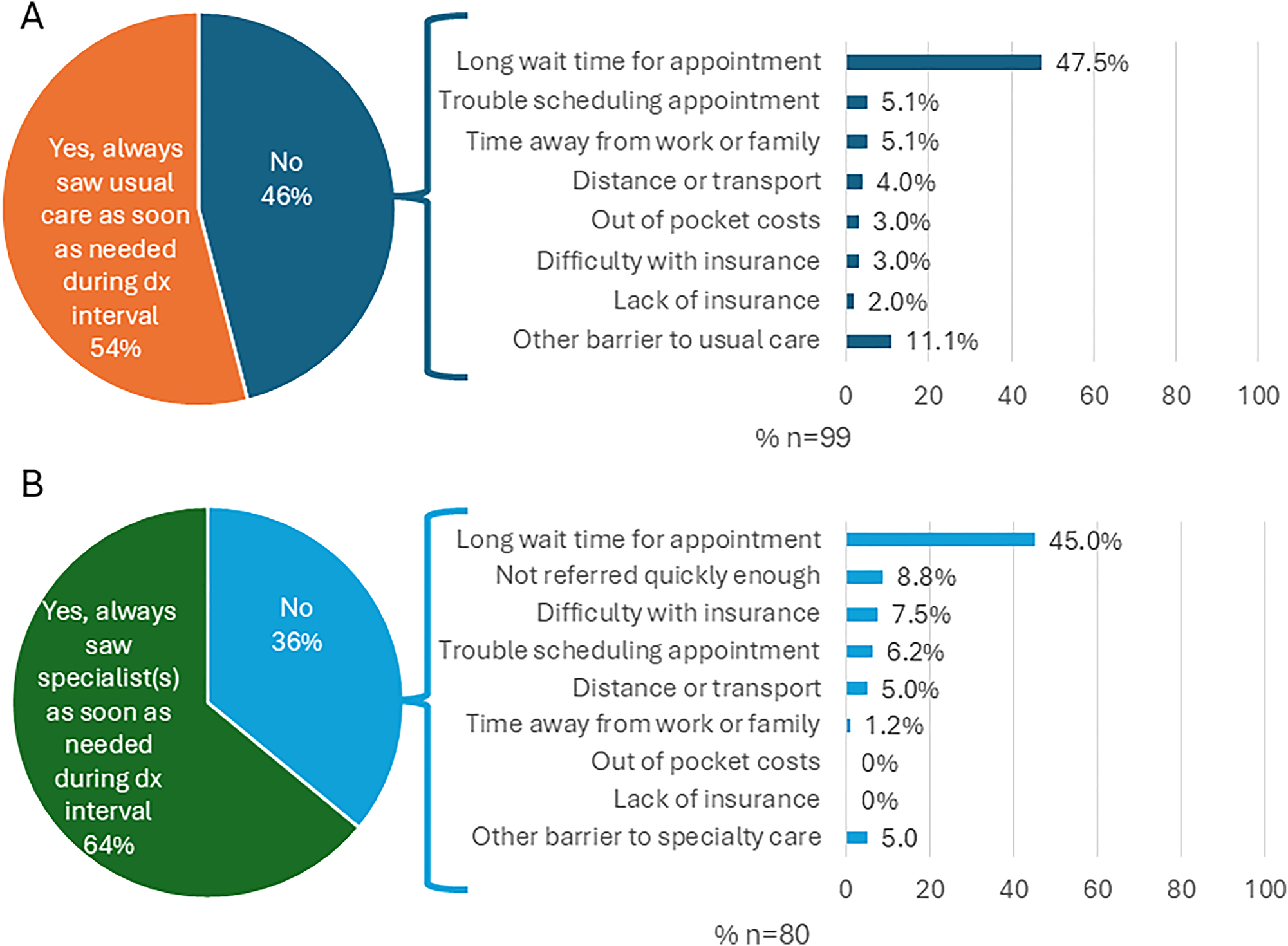

Distributions of responses for each measure are shown in Supplementary Appendix Table A.2. Approximately four out of five (79 %) respondents reported having a usual source of care before the diagnostic interval, and 90 % of these respondents talked to their usual care provider during the diagnostic interval. Nearly half (46 %) reported that they did not “always” see their usual care provider as soon as needed during the diagnostic interval (Figure 1A); the most commonly reported barrier to usual care was a long wait for an appointment (48 %).

Descriptive results of access and barriers for usual care (A) and specialty care (B).

Of patients who saw their usual care providers during the diagnostic interval, 70 % reported that their usual care provider referred them to a specialist. Whether or not they were referred, 69 % of respondents reported seeing a specialist during the diagnostic interval. Of these, 36 % reported that they did not “always” see the specialist as soon as needed during the diagnostic interval (Figure 1B). The most commonly reported barrier to specialty care was also a long wait for an appointment (45 %), although not being referred quickly enough and difficulty with insurance coverage were also noted by 8.8 % and 7.5 % of respondents, respectively.

Respondents reported favorable experiences overall (Table 3 and Supplementary Appendix Table A.3). Patients reported better communication with specialists than with usual care providers; top-box scores for Communication with Usual Care Professionals and Communication with Specialists composite measures were 76 and 88, respectively. The overall top-box score for the Getting Tests and Results composite measure was somewhat lower (67). Nearly three-quarters (74 %) of respondents rated their overall care during the diagnostic phase a 9 or 10 out of 10, while 10 % rated it 6 or less. Nearly two-thirds of respondents (64 %) believed their cancer could not have been diagnosed much sooner.

Patients diagnosed at later stages and those with symptomatic initial presentations tended to report worse care experiences overall, though not in all cases. Exceptions included patients with symptomatic initial presentations, who reported higher rates of follow-up on results of tests, X-rays, and scans (Figure 2A), and patients diagnosed at Stage 4, who reported better communication with specialists and better follow-up on results of tests, X-rays, and scans than patients diagnosed at earlier stages (Figure 2B).

Top-box scores for measures of timeliness of care leading up to cancer diagnosis, by (A) initial presentation and (B) cancer stage at diagnosis. Top-box scores classify the response(s) indicating the best experiences as 100 and all other responses as 0 (e.g., “always” = 100 and all other responses = 0; a rating of 9 or 10 out of 10 = 100 and all other responses = 0).

Association between measures and perceived timeliness of diagnosis, diagnostic interval, and overall ratings of care

All measures had strong, statistically significant associations with perceived timeliness of cancer diagnosis and overall ratings of care (Table 5); Communication with Usual Care Professionals, Communication with Specialists, and Getting Tests and Results were the strongest predictors of these outcomes. Getting Tests and Results and Getting Timely Specialty Care were the strongest predictors of a diagnostic interval of 30 days or less. In models that included all measures simultaneously (rather than individually), the strongest predictors of outcomes remained the same, although coefficients and odds ratios were somewhat smaller (Supplementary Appendix Table A.4). Measures were mostly not associated with the number of visits to a health care professional during the diagnostic interval.

Association between quality measure top-box scores and perceived timeliness of cancer diagnosis, diagnostic interval less than or equal to 30 days, overall ratings of care, and number of visits during the diagnostic interval.

| Models assessing measures one by one | Perceived timeliness of cancer diagnosis, odds ratio [95 % CI] | Diagnostic interval ≤30 days, odds ratio [95 % CI] | Overall rating (higher is better), unstandardized regression coefficient [95 % CI] | Number of visits during diagnostic interval, unstandardized regression coefficient [95 % CI] |
|---|---|---|---|---|
| Getting Timely Usual Care | 3.37ᶜ [1.70, 6.69] | 1.41 [0.77, 2.57] | 1.33ᶜ [0.78, 1.89] | 0.13 [−0.19, 0.45] |
| Communication with Usual Care Professionals | 14.07ᶜ [4.95, 39.98] | 1.10 [0.50, 2.43] | 3.20ᶜ [2.56, 3.83] | −0.37 [−0.80, 0.06] |
| Getting Timely Specialty Care | 3.63ᶜ [1.74, 7.57] | 2.10ᵃ [1.09, 4.03] | 1.58ᶜ [1.12, 2.03] | −0.14 [−0.47, 0.19] |
| Communication with Specialists | 10.93ᶜ [2.90, 41.18] | 1.71 [0.55, 5.35] | 3.17ᶜ [2.44, 3.91] | −0.51 [−1.05, 0.04] |
| Getting Tests and Results | 6.63ᶜ [3.12, 14.09] | 3.28ᶜ [1.68, 6.41] | 2.40ᶜ [1.88, 2.92] | −0.11 [−0.47, 0.25] |

ᵃp<0.05; ᵇp<0.01; ᶜp<0.001.

Discussion

In our study of patients recently diagnosed with cancer in a large integrated health system, patients reported barriers to timely diagnosis that were both associated with the cancer diagnostic interval and actionable for health care providers and systems. Patient reports of timely access to specialty care and ease of getting tests and results were strongly associated with shorter diagnostic intervals.

Our results demonstrate that patient-reported measures can provide important information about diagnosis experiences that may not be captured by other data sources. For example, among respondents who reported barriers to accessing usual or specialty care, long wait times for appointments were by far the most frequently reported barrier. Patient ratings of timeliness have been shown to correlate with actual appointment wait times [37], and long wait times are potentially actionable by health care systems [38], [39].

Most respondents (90 %) were able to report the dates of both their first visit/test and their diagnosis to at least the month and year. Previous work has found concordance of patient reports of this information with administrative data [40]. Wide variation was reported in the number of visits during the diagnostic interval; about half of respondents reported three or more visits. Previous work found that only 23 % of UK cancer patients experienced three or more consultations before referral for cancer [41], though the contextual differences of the UK’s general practitioner gatekeeper model make these numbers hard to compare. About 28 % of patients believed their cancer could have been diagnosed much sooner, which is similar to previously reported rates as assessed by clinicians (24 %–34 %) [42], [43].

This work has several limitations. This study was conducted among patients receiving cancer care in a single, large integrated health system that is highly ranked for health care quality and serves patients with high levels of education and insurance coverage. To ensure sufficient sample size for analysis of patient experience measures, we analyzed all cancer types together, even though they may have different acceptable diagnostic intervals. The survey materials were in English only and most respondents primarily spoke English, which limits our ability to understand the role of language in timeliness of cancer diagnosis [44]. About one-quarter of the sample responded to the survey, a rate comparable to that of other patient experience surveys in broad national use [45]. However, respondents still represent a minority of potential respondents, and our sample was small overall. These issues may reduce the generalizability of both the results and measure performance.

We were also unable to compare self-reported survey responses with EHR data, so we are unable to assess accuracy of patient self-reports of cancer type, cancer stage at diagnosis, and dates of first visit/test and diagnosis used to calculate the diagnostic interval. Patients who reported their cancer was diagnosed through symptomatic presentations or at later stages tended to report worse care experiences than patients whose cancer was detected through screening or incidental findings, or at earlier stages of disease, aligning with previous work [46]. These reported differences in care experiences by presentation and cancer stage may be due, in part, to factors associated with presentation and stage, such as socioeconomic status. The survey also does not account for possible hindsight or outcome bias on the part of the respondents. We chose not to risk-adjust the measures when stratifying by respondent subgroups due to our small sample size.

Lastly, this survey's purview is restricted to the period between when the patient is first seen by a health care professional and the final diagnosis (the diagnostic interval). Important delays may also occur during the appraisal interval (when patients are deciding whether to seek care) and the help-seeking interval (when patients have decided to seek care but have not yet received it). We plan to explore these intervals in future surveys.

Conclusions

Timely and accurate diagnosis of cancer remains an important target for quality measurement and quality improvement. In our study, most respondents reported easy access to, and excellent communication with, both usual care providers and specialists, as well as easy access to tests and results, and they rated overall care highly, in line with previous work [47]. However, the results also indicated numerous areas with substantial room for improvement.

Our study establishes internal consistency, reliability, and construct validity of patient-reported measures assessing aspects of timely diagnosis of cancer. These measures can be used to identify targets for quality improvement and complement other measures assessing timely diagnosis, such as current claims- and EHR-based measures [48], [49], as well as emerging approaches, such as the use of artificial intelligence to rapidly analyze clinical notes, conversations between clinicians and patients, and stories on social media. In the pursuit of diagnostic excellence for cancer in the US, patient-reported measures allow us to learn about processes and perspectives that would otherwise be unobservable.

Funding source: National Center for Advancing Translational Sciences

Award Identifier / Grant number: UL1TR001881

Funding source: Gordon and Betty Moore Foundation

Award Identifier / Grant number: GBMF11504

Acknowledgments

We would like to thank Johan Carrascoza-Bolanos and Joshua Wolf for their assistance administering the survey; Sangeeta Ahluwalia, Lucy Schulson, and Carrie Farmer for their advice on study design and project management; the subject matter experts we interviewed during the environmental scan; our Technical Expert/Clinical User/Patient Panel (TECUPP); our Technical Assistance team at Battelle; and our program officers at the Gordon and Betty Moore Foundation for their advice and feedback.

Research ethics: This study has been approved by the RAND Human Subjects Protection Committee (study number 2022-N0293) on 9/28/2022 and Cedars-Sinai Institutional Review Board (study number 2323) on 5/22/2023. This study was conducted in accordance with the Declaration of Helsinki (as revised in 2013).

Informed consent: Informed consent was obtained from all individuals included in this study.

Author contributions: All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

Use of Large Language Models, AI and Machine Learning Tools: None declared.

Conflict of interest: The authors state no conflict of interest.

Research funding: This research was funded by the Gordon and Betty Moore Foundation through Grant GBMF11504. This research was also supported by the NIH National Center for Advancing Translational Sciences (NCATS) through UCLA CTSI Grant Number UL1TR001881.

Data availability: The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

1. Ilbawi, A, Varghese, C, Loring, B, Ginsburg, O, Corbex, M. Guide to cancer early diagnosis. WHO; 2017:1–38 pp.

2. Williams, KE, Sansoni, J, Morris, D, Thompson, C. A Delphi study to develop indicators of cancer patient experience for quality improvement. Support Care Cancer 2018;26:129–38. https://doi.org/10.1007/s00520-017-3823-4.

3. Yang, D, Fineberg, HV, Cosby, K. Diagnostic excellence. JAMA 2021;326:1905–6. https://doi.org/10.1001/jama.2021.19493.

4. Neal, R, Tharmanathan, P, France, B, Din, N, Cotton, S, Fallon-Ferguson, J, et al. Is increased time to diagnosis and treatment in symptomatic cancer associated with poorer outcomes? Systematic review. Br J Cancer 2015;112:S92–107. https://doi.org/10.1038/bjc.2015.48.

5. Lyratzopoulos, G, Vedsted, P, Singh, H. Understanding missed opportunities for more timely diagnosis of cancer in symptomatic patients after presentation. Br J Cancer 2015;112:S84–91. https://doi.org/10.1038/bjc.2015.47.

6. Lyratzopoulos, G. Markers and measures of timeliness of cancer diagnosis after symptom onset: a conceptual framework and its implications. Cancer Epidemiol 2014;38:211–3. https://doi.org/10.1016/j.canep.2014.03.009.

7. Giardina, TD, Hunte, H, Hill, MA, Heimlich, SL, Singh, H, Smith, KM. Defining diagnostic error: a scoping review to assess the impact of the national academies' report improving diagnosis in health care. J Patient Saf 2022;18:770–8. https://doi.org/10.1097/pts.0000000000000999.

8. Gleason, KT, Peterson, S, Dennison Himmelfarb, CR, Villanueva, M, Wynn, T, Bondal, P, et al. Feasibility of patient-reported diagnostic errors following emergency department discharge: a pilot study. Diagnosis 2021;8:187–92. https://doi.org/10.1515/dx-2020-0014.

9. Giardina, TD, Haskell, H, Menon, S, Hallisy, J, Southwick, FS, Sarkar, U, et al. Learning from patients' experiences related to diagnostic errors is essential for progress in patient safety. Health Aff 2018;37:1821–7. https://doi.org/10.1377/hlthaff.2018.0698.

10. McDonald, KM, Bryce, CL, Graber, ML. The patient is in: patient involvement strategies for diagnostic error mitigation. BMJ Qual Saf 2013;22(2 Suppl):ii33–9. https://doi.org/10.1136/bmjqs-2012-001623.

11. Schlesinger, MGR, Gleason, K, Yuan, C, Haskell, H, Giardina, T, McDonald, K. Patient experience as a source for understanding the origins, impact, and remediation of diagnostic errors. Volume 1: why patient narratives matter. Rockville, MD: Agency for Healthcare Research and Quality; 2023. Contract No.: AHRQ Publication No. 23-0040-2-EF.

12. Anhang, PR, Elliott, MN, Zaslavsky, AM, Hays, RD, Lehrman, WG, Rybowski, L, et al. Examining the role of patient experience surveys in measuring health care quality. Med Care Res Rev 2014;71:522–54. https://doi.org/10.1177/1077558714541480.

13. Butler, J, Foot, C, Bomb, M, Hiom, S, Coleman, M, Bryant, H, et al. The international cancer benchmarking partnership: an international collaboration to inform cancer policy in Australia, Canada, Denmark, Norway, Sweden and the United Kingdom. Health Pol 2013;112:148–55. https://doi.org/10.1016/j.healthpol.2013.03.021.

14. Weller, D, Vedsted, P, Anandan, C, Zalounina, A, Fourkala, EO, Desai, R, et al. An investigation of routes to cancer diagnosis in 10 international jurisdictions, as part of the international cancer benchmarking Partnership: survey development and implementation. BMJ Open 2016;6:e009641. https://doi.org/10.1136/bmjopen-2015-009641.

15. Jensen, H, Nissen, A, Vedsted, P. Quality deviations in cancer diagnosis: prevalence and time to diagnosis in general practice. Br J Gen Pract 2014;64:e92–8. https://doi.org/10.3399/bjgp14x677149.

16. Allgar, VL, Neal, RD. Delays in the diagnosis of six cancers: analysis of data from the national survey of NHS patients: cancer. Br J Cancer 2005;92:1959–70. https://doi.org/10.1038/sj.bjc.6602587.

17. Abel, GA, Gomez-Cano, M, Pham, TM, Lyratzopoulos, G. Reliability of hospital scores for the cancer patient experience survey: analysis of publicly reported patient survey data. BMJ Open 2019;9:e029037. https://doi.org/10.1136/bmjopen-2019-029037.

18. Mendonca, SC, Abel, GA, Saunders, CL, Wardle, J, Lyratzopoulos, G. Pre-referral general practitioner consultations and subsequent experience of cancer care: evidence from the English cancer patient experience survey. EJC 2016;25:478–90. https://doi.org/10.1111/ecc.12353.

19. Wiseman, T, Lucas, G, Sangha, A, Randolph, A, Stapleton, S, Pattison, N, et al. Insights into the experiences of patients with cancer in London: framework analysis of free-text data from the national cancer patient experience survey 2012/2013 from the two London integrated cancer systems. BMJ Open 2015;5:e007792. https://doi.org/10.1136/bmjopen-2015-007792.

20. Lerro, CC, Stein, KD, Smith, T, Virgo, KS. A systematic review of large-scale surveys of cancer survivors conducted in North America, 2000–2011. J Cancer Surviv 2012;6:115–45. https://doi.org/10.1007/s11764-012-0214-1.

21. Goldstein, E, Farquhar, M, Crofton, C, Darby, C, Garfinkel, S. Measuring hospital care from the patients' perspective: an overview of the CAHPS hospital survey development process. Health Serv Res 2005;40:1977–95. https://doi.org/10.1111/j.1475-6773.2005.00477.x.

22. Bell, SK, Bourgeois, F, DesRoches, CM, Dong, J, Harcourt, K, Liu, SK, et al. Filling a gap in safety metrics: development of a patient-centred framework to identify and categorise patient-reported breakdowns related to the diagnostic process in ambulatory care. BMJ Qual Saf 2022;31:526–40. https://doi.org/10.1136/bmjqs-2021-013672.

23. NHS England and NHS Improvement. National cancer patient experience survey [Online]. Available from: https://www.ncpes.co.uk/.

24. CAHPS patient experience surveys and guidance. Rockville, MD: Agency for Healthcare Research and Quality; 2022 [Online]. Available from: https://www.ahrq.gov/cahps/surveys-guidance/index.html.

25. Victoria Department of Health. Patient experience. Updated July 19, 2022 [Online]. Available from: https://www.health.vic.gov.au/health-strategies/victorian-cancer-patient-experience-survey-tool-project.

26. Robert Wood Johnson Foundation. How to report results of the CAHPS clinician & group survey. Aligning forces for quality: improving health & health care in communities across America; 2010. Available from: https://www.ahrq.gov/sites/default/files/wysiwyg/cahps/surveys-guidance/cg/cgkit/HowtoReportResultsofCGCAHPS080610FINAL.pdf.

27. Brown, TA. Confirmatory factor analysis for applied research. New York: Guilford Publications; 2015.

28. Hu, L, Bentler, PM. Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct Equ Model 1999;6:1–55. https://doi.org/10.1080/10705519909540118.

29. DiStefano, C, Liu, J, Jiang, N, Shi, D. Examination of the weighted root mean square residual: evidence for trustworthiness? Struct Equ Model 2018;25:453–66. https://doi.org/10.1080/10705511.2017.1390394.

30. Yang, Y, Green, SB. A note on structural equation modeling estimates of reliability. Struct Equ Model 2010;17:66–81. https://doi.org/10.1080/10705510903438963.

31. McDonald, RP. Test theory: a unified treatment, 1st ed. New York: Psychology Press; 1999.

32. Yang, Y, Green, SB. Evaluation of structural equation modeling estimates of reliability for scales with ordered categorical items. Methodology 2015;11:23–34. https://doi.org/10.1027/1614-2241/a000087.

33. Flora, DB. Your coefficient alpha is probably wrong, but which coefficient omega is right? A tutorial on using R to obtain better reliability estimates. AMPPS 2020;3:484–501. https://doi.org/10.1177/2515245920951747.

34. Deng, L, Chan, W. Testing the difference between reliability coefficients alpha and omega. Educ Psychol Meas 2017;77:185–203. https://doi.org/10.1177/0013164416658325.

35. National Health Service United Kingdom. Faster diagnosis framework and the faster diagnostic standard [Online]. Available from: https://www.england.nhs.uk/cancer/faster-diagnosis/.

36. National Cancer Institute. Cancer stat facts: common cancer sites [Online]. Available from: https://seer.cancer.gov/statfacts/html/common.html.

37. Mayo-Smith, M, Radwin, LE, Abdulkerim, H, Mohr, DC. Factors associated with patient ratings of timeliness of primary care appointments. J Patient Exp 2020;7:1203–10. https://doi.org/10.1177/2374373520968979.

38. Ansell, D, Crispo, JAG, Simard, B, Bjerre, LM. Interventions to reduce wait times for primary care appointments: a systematic review. BMC Health Serv Res 2017;17:295. https://doi.org/10.1186/s12913-017-2219-y.

39. Amigoni, F, Lega, F, Maggioni, E. Insights into how universal, tax-funded, single payer health systems manage their waiting lists: a review of the literature. Health Serv Manag Res 2023:09514848231186773. https://doi.org/10.1177/09514848231186773.

40. Larsen, MB, Hansen, RP, Sokolowski, I, Vedsted, P. Agreement between patient-reported and doctor-reported patient intervals and date of first symptom presentation in cancer diagnosis - a population-based questionnaire study. Cancer Epidemiol 2014;38:100–5. https://doi.org/10.1016/j.canep.2013.10.006.

41. Lyratzopoulos, G, Neal, RD, Barbiere, JM, Rubin, GP, Abel, GA. Variation in number of general practitioner consultations before hospital referral for cancer: findings from the 2010 national cancer patient experience survey in England. Lancet Oncol 2012;13:353–65. https://doi.org/10.1016/s1470-2045(12)70041-4.

42. Swann, R, Lyratzopoulos, G, Rubin, G, Pickworth, E, McPhail, S. The frequency, nature and impact of GP-assessed avoidable delays in a population-based cohort of cancer patients. Cancer Epidemiol 2020;64:101617. https://doi.org/10.1016/j.canep.2019.101617.

43. Singh, H, Khan, R, Giardina, TD, Paul, LW, Daci, K, Gould, M, et al. Postreferral colonoscopy delays in diagnosis of colorectal cancer: a mixed-methods analysis. QMHC 2012;21:252–61. https://doi.org/10.1097/qmh.0b013e31826d1f28.

44. Bell, SK, Dong, J, Ngo, L, McGaffigan, P, Thomas, EJ, Bourgeois, F. Diagnostic error experiences of patients and families with limited English-language health literacy or disadvantaged socioeconomic position in a cross-sectional US population-based survey. BMJ Qual Saf 2023;32:644–54. https://doi.org/10.1136/bmjqs-2021-013937.

45. Godden, E, Paseka, A, Gnida, J, Inguanzo, J. The impact of response rate on hospital consumer assessment of healthcare providers and system (HCAHPS) dimension scores. PXJ 2019;6:105–14. https://doi.org/10.35680/2372-0247.1357.

46. Salika, T, Abel, GA, Mendonca, SC, von Wagner, C, Renzi, C, Herbert, A, et al. Associations between diagnostic pathways and care experience in colorectal cancer: evidence from patient-reported data. Frontline Gastroenterol 2018;9:241–8. https://doi.org/10.1136/flgastro-2017-100926.

47. Corner, J, Wagland, R, Glaser, A, Richards, SM. Qualitative analysis of patients' feedback from a PROMs survey of cancer patients in England. BMJ Open 2013;3. https://doi.org/10.1136/bmjopen-2012-002316.

48. Murphy, DR, Thomas, EJ, Meyer, AND, Singh, H. Development and validation of electronic health record–based triggers to detect delays in follow-up of abnormal lung imaging findings. Radiology 2015;277:81–7. https://doi.org/10.1148/radiol.2015142530.

49. Kapadia, P, Zimolzak, AJ, Upadhyay, DK, Korukonda, S, Murugaesh Rekha, R, Mushtaq, U, et al. Development and implementation of a digital quality measure of emergency cancer diagnosis. J Clin Oncol 2024;42:2506–15. https://doi.org/10.1200/jco.23.01523.

Supplementary Material

This article contains supplementary material (https://doi.org/10.1515/dx-2024-0203).

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.