Abstract

The last two decades have witnessed growing interest in developing and incorporating tasks in technology-mediated settings. Correspondingly, technology-mediated task-based language teaching (technology-mediated TBLT) has established itself as a distinct domain of TBLT research, exploring how technology can promote task performance and the outcomes of task-based learning. This research synthesis contributes to this growing line of work by focusing on one type of technology: immersive virtual reality (VR). We conducted a systematic search and reviewed 33 primary studies (2014–2024) that used VR technology to create tasks and assessed the learning outcomes of those tasks. In addition to reviewing study features to identify general trends (e.g., target population, language, and knowledge/skill area), we evaluated the VR-based instructional tasks against the common characteristics of pedagogical tasks established in the TBLT literature. Results showed that most VR tasks are meaning-focused and authentic, but they are not sufficiently designed to produce non-linguistic outcomes (outcomes beyond the mere display of linguistic knowledge and skills).

1 Introduction

Over the last two decades, there has been increasing interest in exploring the intersection between task-based language teaching (TBLT) and technology-enhanced language learning (TELL), resulting in a series of seminal publications – position papers, research syntheses, and books (Canals and Mor 2023; Chong and Reinders 2020; Doughty and Long 2003; González-Lloret 2017; González-Lloret and Ortega 2014; Kim and Namkung 2024; Lai and Li 2011; Smith and González-Lloret 2021; Thomas and Reinders 2010; Ziegler 2016). As these publications emphasize, this interest stems from the complementary roles that TBLT and TELL play in advancing language teaching. On the TBLT side, integrating technology into task design and implementation helps create tasks that generate meaningful language use, rich interaction, and opportunities for learning by doing, all of which are basic tenets of pedagogical tasks. On the TELL side, TBLT provides a framework for a more theoretically grounded approach to researching the role of technology in language teaching.

The call for TBLT-TELL synergy has spurred researchers and practitioners to implement technology-enhanced TBLT and document its learning outcomes (gains in linguistic knowledge and skills). Dozens of empirical studies have reported positive results. When engaging in tasks using technologies (e.g., blogging, texting, video conferencing), learners showed greater motivation and lower anxiety (e.g., Chen 2012; Lai et al. 2011); produced large amounts of output and interaction features (Cantó et al. 2014; Yanguas 2012); and improved their language abilities (e.g., Nielson 2014; Sauro 2014; Tsai 2011) as well as abilities beyond linguistic areas (e.g., intercultural literacy; Abrams 2016).

Although the TBLT-TELL intersection has received some empirical support, the current literature lacks a systematic examination of the intersection between TBLT and virtual reality (VR) platforms. Of the technological tools examined in the TBLT literature, VR has received the least attention, probably because it is relatively new compared to others. In fact, a cursory Google Scholar search using the terms “virtual reality” and “TBLT” returned only two publications (Baralt et al. 2023; Smith and McCurrach 2021). This scant attention suggests that a research synthesis exploring the connection could encourage applications of VR to TBLT, especially because VR has received considerable attention in the TELL literature (Chun et al. 2022; Sadler and Thrasher 2023). This synthesis is an effort in this direction. We synthesize existing VR-based studies to evaluate their instructional tasks from a TBLT perspective. Specifically, we apply common criteria of pedagogical tasks established in the TBLT literature (focus on meaning, authenticity, and non-linguistic outcomes; Ellis 2009; Long 2016) to examine whether current VR-based instructional activities are sufficiently task-based.

2 Background

2.1 Task-Based Language Teaching (TBLT)

With a mounting body of research demonstrating the effectiveness of tasks in promoting language learning, TBLT has established itself as a prominent pedagogical approach. TBLT is a process-oriented approach that places communicative language teaching at the core of lesson planning, syllabus design, and curriculum goals (Nunan 2004; Richards 2005). Drawing on educational theories that underscore the relationship between experience and learning, TBLT capitalizes on “learning for use through participation” (Samuda and Bygate 2008, p. 69). Experiential learning, or learning by doing (Dewey 1997), is at the core of TBLT: acquisition of a language is a byproduct of engaging in its use, and tasks serve as a vehicle for that use.

TBLT contends that learners develop their language knowledge and skills while engaging in communicative tasks; tasks should therefore form the basic units of an instructional cycle and a curriculum. As such, defining a pedagogical task and its characteristics is a critical component of TBLT. Long (2016) defines tasks as “the real-world communicative uses to which learners will put the L2 beyond the classroom – the things they will do in and through the L2” (p. 6). Although TBLT scholars differ somewhat in how they conceptualize tasks, they agree on a set of essential task characteristics (e.g., Ellis 2003, 2009; Long 1985, 2016; Nunan 2004; Skehan 1998; Van den Branden 2006). First, tasks are meaning-oriented: they engage learners in meaningful language use rather than practice with isolated linguistic forms. Second, tasks are authentic: there is a close correspondence between tasks and real-life activities. Third, tasks have a non-linguistic outcome: by completing a task, learners achieve an outcome (or goal) beyond the mere display of language use (e.g., planning a trip, designing a poster, organizing an event, writing a report).

These characteristics – focus-on-meaning, authenticity, and non-linguistic outcome – have served as guiding principles for task design. Adopting these principles, a number of studies have examined how tasks can facilitate language learning. These studies fall into two groups. One group has focused on educational goals of tasks, exploring how teachers use tasks in the classroom and how TBLT is perceived by teachers and learners (Van den Branden 2016). Pedagogy-focused topics such as needs analyses (Chaudron et al. 2005), task design and implementation (Kim 2015), task-based curriculum development (Van den Branden 2006), teacher training (East 2017), and program evaluation (Norris 2015) have been addressed in this group of studies. For example, in technology-mediated contexts, online language courseware has been designed following the TBLT framework, generating positive reactions among learners and instructors (Nielson 2014).

Another group of studies is more SLA-oriented, exploring how manipulating certain task features or task conditions affects language use and learning outcomes. Studies have analyzed learners’ output during task performance for linguistic quality (e.g., complexity, accuracy, fluency) and interaction patterns (e.g., turn-taking, alignment), and linked those analyses to learners’ linguistic development. For example, research showed that increasing the cognitive demands of a task generated more interaction among learners, leading to greater learning of targeted linguistic features (Kim 2015). However, task complexity had no effect on interaction or learning outcomes in technology-mediated contexts (CMC in Baralt 2013; a 3D immersive environment in Collentine 2013). Other studies revealed that task planning positively affected task performance. Sauro and Smith (2010) found that L2 German learners who used online planning time in task-based text chat interactions exhibited greater syntactic complexity and lexical diversity in their utterances. Task repetition has also been a common topic of investigation. Liao and Fu (2014) found that task repetition improved the syntactic and lexical complexity of learners’ production in written text chat.

In summary, TBLT has greatly informed researchers and practitioners about the pedagogical, empirical, and theoretical value of tasks in language teaching. Tasks can promote learners’ language use, interaction, and learning, and research on these processes in turn informs effective pedagogy and SLA theory. Incorporating technology into TBLT has merit because it expands the options for task design and implementation, as well as the environments in which TBLT can be practiced.

2.2 Intersection of TBLT and TELL

An early call for the TBLT-TELL intersection was made by Doughty and Long (2003), who articulated their reciprocal relationship: technology presents a venue for realizing TBLT principles, while TBLT offers a framework for selecting and using technology in language teaching. Following this, Ortega (2009) clarified the nature of this relationship, arguing that TBLT and technology have a common emphasis on experiential learning and share certain pedagogical principles such as authenticity in language use, learner autonomy, and community-based learning. Thomas and Reinders (2010) also noted that TBLT and technology-assisted learning share certain conceptual frameworks such as “project-based, content-based, and experiential learning, as well as constructivist and social constructivist thought” (p. 5).

Scholars have underscored the need for a new conceptualization of technology-mediated TBLT, leading to a renewed definition of tasks using technologies (González-Lloret 2017; González-Lloret and Ortega 2014; Smith and González-Lloret 2021). González-Lloret and Ortega (2014) proposed a set of design criteria for technology-mediated tasks:

Tasks have a primary focus on meaning over forms.

Tasks are goal-oriented with a communicative purpose.

Tasks are learner-centered, designed based on learners’ needs.

Tasks are authentic and have relevance to real-life processes and language use.

Tasks bring reflection to the learning process.

Tasks help learners develop their digital literacy and real-life technology skills, together with their linguistic abilities.

While the first five criteria are comparable to the common characteristics of pedagogical tasks in TBLT (see the previous section), the last criterion, involving digital literacy, is unique to technology-mediated tasks. This criterion adds value because learners are expected to develop their digital, multimodal, and informational literacies (Warschauer 2007) along with their language skills. Learners’ technological proficiency contributes to successful task completion as much as their language proficiency does.

Canals and Mor (2023) support these design criteria of technology-mediated tasks. Using the Delphi technique, they identified the most agreed-upon principles of technology-mediated TBLT among 21 experts. Although common task features of focus-on-meaning and authenticity were present in those principles, what was notable was the explicit mention of technology-specific terms. The experts agreed that tasks “represent and promote language use as a holistic, multimodal entity (including non-verbal communication and symbols), trying not to separate language domains, grammar from lexis” (p. 7). They also agreed that tasks “promote learning by doing and using language (often mediated by multimodal artifacts/technology) to produce meaningful outputs” (p. 8). These principles show that multimodal cues and artifacts, which are inherent in digital environments, are essential resources for meaning-making. The experts also agreed that digital literacy is critical, while maintaining that learners should critically evaluate the functions of technology (e.g., as a tutoring system, as a communication medium).

Drawing on these pedagogical principles, existing studies have documented the unique affordances of various types of technology in task-based environments (e.g., online courseware, teleconferencing, social media, mobile technologies, digital games). Chong and Reinders (2020) synthesized 16 technology-mediated TBLT studies published between 2002 and 2017. They found that technology-mediated tasks are characterized by authenticity and real-life language use (e.g., writing and uploading blogs based on interviews; Chen 2012). Tasks facilitated interaction among learners through a range of technological tools such as SMS and social networking (Park and Slater 2014). Tasks also supported autonomous learning via an online tutoring system (Tsai 2011). However, Chong and Reinders also identified challenges. Learners need digital literacy and computer skills to complete tasks, while teachers need sufficient training and preparation to guide instruction. Researchers, for their part, have to adopt new research methods and tools to analyze real-time learner process data (e.g., screen capture of learner behavior, eye-tracking of learner navigation; see also Lai and Li 2011; Smith and González-Lloret 2021).

In summary, responding to the call for technology-mediated TBLT, existing studies have used TBLT as a framework for implementing technology in language teaching. More research is needed to understand how the various affordances of technology can best be used within the task-based framework. When considering technological affordances, it is critical to discuss those specific to particular technologies. As Smith and González-Lloret (2021) contend, technology is never neutral: we need to consider the nature, appropriateness, and use of a specific technology for a given technology-mediated task, in relation to learners’ needs and their ability to use the technology. Hence, a research synthesis focusing on a specific technology is timely, particularly because the existing literature uses technology as an umbrella term, exploring its relation to TBLT as a whole. To this end, this synthesis offers a focused review of VR-assisted language learning from a TBLT perspective.

2.3 Virtual Reality for Language Teaching

VR is a computer-generated simulation of a real-life environment that users can explore and interact with on their own. VR systems often use head-mounted displays (HMDs), body trackers, and hand controllers, which give users an immersive feel of a virtual world. VR comes in two types: high- and low-immersion (Kaplan-Rakowski and Gruber 2019). The former involves a computer-generated virtual space with a 360° view displayed in a cubical CAVE (Cave Automatic Virtual Environment) or through high-fidelity HMDs. The latter refers to a virtual space “experienced through standard audio-visual equipment, such as a desktop computer with a two-dimensional monitor” (Kaplan-Rakowski and Gruber 2019, p. 552).

With the increasing affordability and accessibility of VR technology in everyday life, VR has been widely adopted in education. The VR market in the education industry reached 25 billion dollars in 2024 and is expected to grow to 67 billion within five years (https://www.mordorintelligence.com/). This trend is also seen in language education. A number of VR platforms and apps for language learning have emerged in the last decade (e.g., Mondly, VirtualSpeech). These platforms provide diverse resources, ranging from vocabulary exercises to conversation practice with built-in avatars and chatbots. In parallel, curriculum-level applications of VR have emerged (e.g., ImmerseMe), providing interactive language modules across a wide range of communicative situations and cultural contexts.

VR has several unique affordances that can promote L2 learning (Chun et al. 2022; Godwin-Jones 2023; Lan 2020; Sadler and Thrasher 2023). First, VR can fully immerse learners in a virtual space in which they experience telepresence, the feeling that they are actually part of the world they are experiencing (Slater 2018). This sense of presence enhances real-life simulations, making language practice engaging, contextualized, and realistic. Second, VR can promote interaction: learners can interact with the environment and with other users via text or voice chat. Third, VR promotes learner agency. Because learners have to determine their course of action while navigating the VR space, they are encouraged to plan, monitor, and assess their own actions. This decision-making power and agency can boost learner motivation and engagement (Santos et al. 2016). Finally, VR supports embodied learning (Johnson-Glenberg 2018). Because the mind, body, and environment are intricately connected in a VR space, the physical environment shapes learners’ thinking and, by extension, their learning.

These affordances of VR technology have driven scholars’ interest in developing virtual environments for language teaching. Early studies concentrated on low-immersion VR, ranging from two-dimensional environments with text-based chat systems (e.g., MOOs) to 3D environments that involve multiple communication channels (e.g., text/voice chat) and high-quality visual stimuli (e.g., Second Life). Studies often analyzed the amount and patterns of interaction occurring in these virtual environments (Peterson 2006), as well as language development resulting from VR-based interaction (Cantó et al. 2014).

Studies in the subsequent phase shifted to high-immersion VR but focused more on learners’ perceptions of the VR experience (e.g., enjoyment, anxiety, confidence, motivation) than on their language development. This tendency is evident in research syntheses and meta-analyses from the last few years. In Dhimolea et al.’s (2022) review of 32 studies using high-immersion VR (published between 2015 and 2020), over 60 % of the studies focused on learners’ perceptions. Similarly, in Qiu et al.’s (2023) meta-analysis of 23 studies (published from 2015 to 2021), about half of the studies measured learners’ perceptions and affective factors. These studies revealed learners’ positive perceptions and acceptance of high-immersion VR for language learning. For example, Xie et al. (2019) documented heightened interest and motivation among L2 Chinese learners who used interactive VR tools (Google Expeditions) and presented virtual tours of sightseeing spots in China.

In summary, a surge of studies has examined the efficacy of immersive VR for L2 learning. Yet these studies have largely addressed the affective domain, documenting learners’ perceived VR experience rather than their language development. To advance the field, this synthesis focuses on studies that examined language development as an outcome of VR experiences. This focus on language knowledge and skills aligns with the goal of TBLT, which assesses the efficacy of tasks in promoting language development. We critically review characteristics of the tasks used in VR studies in relation to such learning outcomes. Such a review can contribute to the TBLT-TELL intersection that a number of scholars have promoted over the last two decades (e.g., González-Lloret and Ortega 2014; Kim and Namkung 2024; Smith and González-Lloret 2021; Ziegler 2016). Various affordances of VR technologies – immersion and sense of presence, embodiment, and multimodal input – are likely to contribute to the design characteristics of a pedagogical task, helping us develop tasks that are meaning-focused, contextualized, and authentic. Hence, it is critical to examine the potential of VR-based tasks in relation to TBLT principles. By reviewing current VR studies from a TBLT perspective, we can understand whether they are sufficiently task-based and how VR can be more fully integrated into the framework of technology-mediated TBLT. This synthesis addresses the following research questions:

RQ1: What features (in terms of volume, range, and methods) characterize the studies investigating the efficacy of VR-assisted language teaching?

RQ2: To what extent are the tasks used in the VR studies task-based according to the TBLT principles?

3 Methods

This synthesis is based on a principled and exhaustive literature search that located all relevant empirical studies available at the time of writing (September 2024). We located all studies that used VR as a platform for L2 instruction and coded them for study features. We also coded the instructional tasks in these studies according to the characteristics of a pedagogical task established in the TBLT literature. Note that not all studies were grounded in the TBLT framework; we nonetheless analyzed their instructional tasks against TBLT task principles. The following sections explain the literature search process and coding criteria.

3.1 Retrieval of Studies and Inclusion/Exclusion Criteria

We searched three major electronic databases in linguistics, education, and psychology (LLBA, ERIC, and PsycInfo) using two search terms (second language, virtual reality) and two broad criteria (peer-reviewed articles written in English) to locate primary studies. We set no limit on the publication period. The initial search returned 175 studies in LLBA, 158 in ERIC, and 160 in PsycInfo. We removed duplicates across the databases and then applied the following criteria for further screening:

The study is a data-driven empirical study, not a review article or a position paper.

The study used immersive VR technology (high- or low-immersion VR) to design and implement language learning tasks.

The study assessed learning outcomes (i.e., language knowledge and skills) resulting from the tasks.

The study gave sufficient information about VR environments and tasks used.

The search process yielded 33 primary studies published between 2014 and 2024. Full references of these studies are available in Appendix B.
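The screening steps described above can be sketched in code. The following is a minimal Python illustration of cross-database de-duplication followed by the four inclusion criteria; it is not the authors’ actual procedure. The `Record` fields, the title-plus-year duplicate key, and the toy records are hypothetical assumptions introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One database hit (hypothetical fields, not the authors' export format)."""
    title: str
    year: int
    source_db: str           # e.g., "LLBA", "ERIC", "PsycInfo"
    empirical: bool          # criterion 1: data-driven empirical study
    uses_vr: bool            # criterion 2: high- or low-immersion VR used for tasks
    assesses_outcomes: bool  # criterion 3: language knowledge/skills assessed
    vr_described: bool       # criterion 4: VR environment and tasks described


def normalize(title: str) -> str:
    """Crude duplicate key: lowercase letters and digits only."""
    return "".join(ch for ch in title.lower() if ch.isalnum())


def deduplicate(records: list[Record]) -> list[Record]:
    """Keep the first occurrence of each (normalized title, year) pair."""
    seen: set[tuple[str, int]] = set()
    unique: list[Record] = []
    for r in records:
        key = (normalize(r.title), r.year)
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


def meets_criteria(r: Record) -> bool:
    """All four inclusion criteria must hold."""
    return r.empirical and r.uses_vr and r.assesses_outcomes and r.vr_described


def screen(records: list[Record]) -> list[Record]:
    """De-duplicate first, then apply the inclusion criteria."""
    return [r for r in deduplicate(records) if meets_criteria(r)]


# Toy input: the second record duplicates the first; the third is a review article.
hits = [
    Record("Toy study: VR and L2 vocabulary", 2022, "LLBA", True, True, True, True),
    Record("Toy Study: VR and L2 Vocabulary", 2022, "ERIC", True, True, True, True),
    Record("Toy review of VR in CALL", 2021, "ERIC", False, True, False, True),
]
included = screen(hits)
print(len(included))  # 1
```

Matching on a normalized title and year is a deliberately crude choice; a real de-duplication pass would typically also compare DOIs or author lists and be checked by hand.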

3.2 Coding Procedure

A coding scheme was developed to analyze the methods and results of the 33 studies (see Appendix A). The following coding categories addressed the first research question: study identification (i.e., author, year), school level, instructional context, study length, target language, target area of learning, type of VR used (e.g., high- or low-immersion) and implementation of VR training, study design (e.g., between- or within-group), descriptions of VR tasks, assessment measures, and findings of learning outcomes. These study features were selected to capture trends in VR-assisted language learning studies, following an existing synthesis review (Dhimolea et al. 2022).
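As a sketch of how coded study features can be turned into the frequencies reported in Section 4.1, the Python snippet below stores one record per study and tallies a single coding category. The `StudyCode` class covers only a hypothetical subset of the Appendix A categories, and the three records are invented placeholders, not data from the reviewed studies.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class StudyCode:
    """Hypothetical subset of the coding categories; not the full Appendix A scheme."""
    study_id: str         # placeholder ID standing in for author and year
    school_level: str     # e.g., "university", "primary"
    context: str          # "laboratory" or "classroom"
    target_language: str
    vr_type: str          # "high-immersion" or "low-immersion"
    design: str           # "between-group" or "within-group"


def tally(codes: list[StudyCode], category: str) -> Counter:
    """Number of studies per value of one coding category."""
    return Counter(getattr(c, category) for c in codes)


def percentages(counts: Counter) -> dict[str, float]:
    """Convert counts to percentages of all coded studies, rounded to 0.1."""
    total = sum(counts.values())
    return {value: round(100 * n / total, 1) for value, n in counts.items()}


# Invented placeholder records, not data from the reviewed studies.
codes = [
    StudyCode("S01", "university", "classroom", "English", "high-immersion", "between-group"),
    StudyCode("S02", "university", "laboratory", "Chinese", "high-immersion", "within-group"),
    StudyCode("S03", "primary", "classroom", "English", "low-immersion", "between-group"),
]
lang_counts = tally(codes, "target_language")
print(lang_counts)               # Counter({'English': 2, 'Chinese': 1})
print(percentages(lang_counts))  # {'English': 66.7, 'Chinese': 33.3}
```

Keeping each category as a plain field makes any single-valued feature (school level, context, design) countable with the same helper.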

The second research question, the degree to which the VR tasks are task-based, was addressed by coding each study’s VR tasks for the defining characteristics of a pedagogical task established in the TBLT literature (Ellis 2003; Long 1985; Nunan 2004; Skehan 1998; Van den Branden 2006). VR tasks were assessed for the following features (see also Section 2.1):

Tasks have a primary focus on meaning over form. Tasks focus on meaningful language use rather than isolated linguistic forms.

Tasks are authentic. Tasks represent what a learner is likely to do in real life.

Tasks have a non-linguistic outcome to achieve, rather than just a display of language.

Each task feature was coded categorically, with “1” assigned when the feature was present and “0” when it was absent. Two coders with backgrounds in applied linguistics independently coded 30 % of the studies, reaching a 92 % agreement rate. Discrepancies were resolved through discussion, and the first coder then coded the remaining studies.
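The binary coding and the agreement check can be illustrated as follows. This Python sketch assumes that agreement was computed over every 1/0 decision (each double-coded study on each of the three features), which the text does not specify; it also computes Cohen’s kappa, a chance-corrected index that is not reported here and is included only as a common alternative. The feature names and the two-study example are hypothetical.

```python
FEATURES = ("meaning_focus", "authenticity", "nonlinguistic_outcome")

# Each coded task maps a feature to 1 (present) or 0 (absent).
Codes = list[dict[str, int]]


def decision_pairs(coder_a: Codes, coder_b: Codes) -> list[tuple[int, int]]:
    """Pair the two coders' decisions for every study and feature."""
    return [(a[f], b[f]) for a, b in zip(coder_a, coder_b) for f in FEATURES]


def percent_agreement(coder_a: Codes, coder_b: Codes) -> float:
    """Share of identical decisions, as a percentage."""
    pairs = decision_pairs(coder_a, coder_b)
    return 100 * sum(x == y for x, y in pairs) / len(pairs)


def cohens_kappa(coder_a: Codes, coder_b: Codes) -> float:
    """Chance-corrected agreement for two coders and binary codes."""
    pairs = decision_pairs(coder_a, coder_b)
    n = len(pairs)
    p_observed = sum(x == y for x, y in pairs) / n
    p_a = sum(x for x, _ in pairs) / n  # coder A's rate of coding "1"
    p_b = sum(y for _, y in pairs) / n  # coder B's rate of coding "1"
    p_expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if p_expected == 1:
        return 1.0  # both coders used the same single code throughout
    return (p_observed - p_expected) / (1 - p_expected)


def feature_prevalence(codes: Codes) -> dict[str, float]:
    """Percentage of tasks coded 1 for each feature (an RQ2-style summary)."""
    return {f: 100 * sum(c[f] for c in codes) / len(codes) for f in FEATURES}


# Hypothetical double-coded studies; the coders disagree on one decision.
coder_a = [
    {"meaning_focus": 1, "authenticity": 1, "nonlinguistic_outcome": 0},
    {"meaning_focus": 1, "authenticity": 0, "nonlinguistic_outcome": 0},
]
coder_b = [
    {"meaning_focus": 1, "authenticity": 1, "nonlinguistic_outcome": 0},
    {"meaning_focus": 1, "authenticity": 1, "nonlinguistic_outcome": 0},
]
print(round(percent_agreement(coder_a, coder_b), 1))  # 83.3
print(round(cohens_kappa(coder_a, coder_b), 2))       # 0.67
print(feature_prevalence(coder_a))  # {'meaning_focus': 100.0, 'authenticity': 50.0, 'nonlinguistic_outcome': 0.0}
```

Percent agreement is easy to interpret but does not correct for agreement expected by chance, which matters when one code (for example, “0” for non-linguistic outcome) dominates.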

Notably, of the 33 primary studies, only 4 were published before 2020 (i.e., 2014–2019). The remaining 29 appeared in 2020 or later, indicating that empirical investigations of immersive VR for L2 learning are fairly recent. This is particularly true of studies focusing on language development, since existing research syntheses (Dhimolea et al. 2022; Qiu et al. 2023) found that most studies examined learners’ perceptions of the VR experience rather than actual language gains.

4 Results

4.1 Study Features and Trends

This section presents results for the first research question, highlighting study features in terms of their volume, range, and methods.

4.1.1 School Level and Learning Context

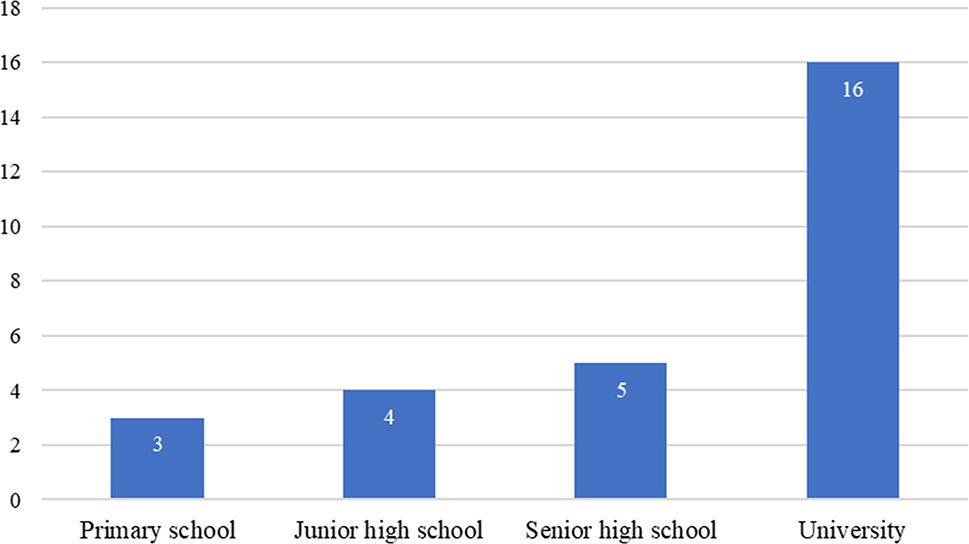

Almost half of the studies (n = 16) involved university students, making them the most represented population in the examined studies (Figure 1). In contrast, primary school was the least represented level (n = 3), while junior and senior high school had similar representation, with four and five studies, respectively.

Figure 1. School level.

Regarding learning context, nine studies were conducted in a laboratory setting and 24 in a classroom setting.

4.1.2 Types of VR Hardware and Software Used

Figure 2 displays the VR hardware used in the studies. Only five studies used low-immersion VR (desktop computers), whereas 26 studies adopted high-immersion VR using devices such as HTC VIVE, Oculus, Google Cardboard, and Samsung headsets. This trend is noteworthy because Parmaxi’s (2023) synthesis of 26 studies (published from 2015 to 2018) found no high-immersion studies. The current findings indicate that researchers have only recently started using high-immersion VR for L2 teaching.

Figure 2. VR hardware. Note. HMD makers in the studies include HTC VIVE, Oculus, Samsung, Pico, DPVR, and VRBOX. Google Cardboard is the only cardboard viewer found in the studies.

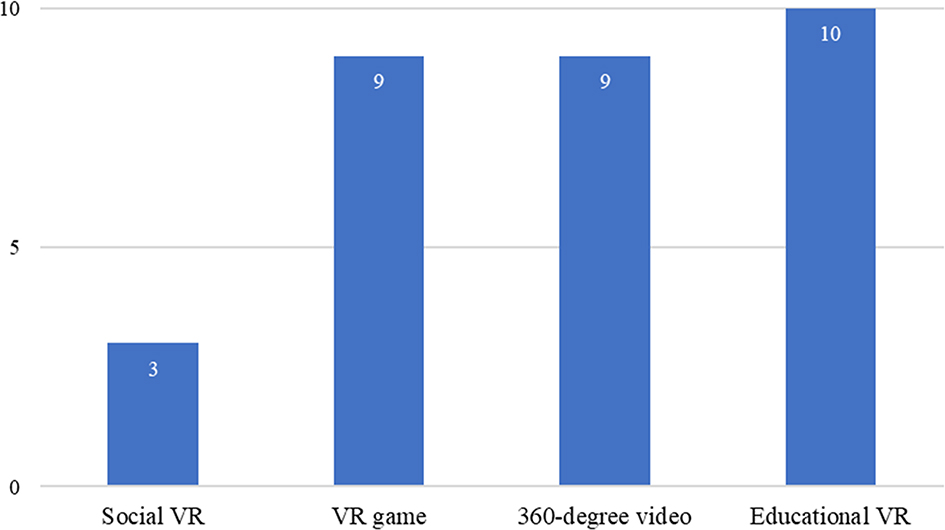

We coded VR software into four categories: social VR, educational VR, VR games, and 360-degree video (Figure 3). Social VR refers to software designed for social interaction, in which users can communicate with others in a virtual environment (e.g., Second Life, vTime XR). Educational VR is created for language learning and teaching purposes and includes applications such as Mondly, Virtual Reality Life English, and Meet-Me. VR games are VR applications or development software involving gameplay that engages players in interactive, simulated experiences (e.g., The Line, Unity). 360-degree video platforms provide a panoramic view and allow learners to explore virtual surroundings (e.g., Google Expeditions, Google Earth VR). As Figure 3 shows, educational VR was the most used software category (n = 10), followed closely by VR games (n = 9) and 360-degree videos (n = 9). Social VR was the least used, appearing in only three studies.

Figure 3. VR software. Note. Social VR includes Second Life and vTime XR. Educational VR includes Mondly, Virtual Reality Life English, Meet-Me, Edmersiv, and NeuroVr2. VR games include The Line, Unity, and Angels and Demigods. 360-degree video platforms include Google Expeditions, Google Earth VR, EduVenture VR, YouTube 360, Aparat 360, and CoSpaces.

Among specific programs, Unity was the most widely used VR software; researchers used it to develop 3D language-learning games. Macedonia et al. (2023) designed 18 three-dimensional images displaying various objects (e.g., a phone), each accompanied by the corresponding vocabulary item in written and audio form. The group that could virtually hold the objects learned the vocabulary better than the groups that only saw it. In Peixoto et al. (2023), two virtual environments were designed using Unity: a passive VR condition in which participants sat next to avatars and listened to dialogues, and an interactive VR condition in which participants moved between locations and interacted with avatars. Google Expeditions was also common, usually paired with Google Cardboard as the hardware to provide panoramic views. For example, both Ebadi and Ebadijalal (2022) and Xie et al. (2021) incorporated role-plays in which participants acted as tour guides, while in Hoang et al. (2023), participants presented tours they had designed in a virtual space.

Regarding training on VR tools, 25 of the 33 studies reported providing some form of VR training to participants before instruction. Training involved both instructor- or researcher-guided sessions and opportunities for participants to familiarize themselves with the VR tools. Only three studies reported the length of training, which ranged from 10 minutes to 1.5 hours. No studies assessed the impact of training on task performance or learning outcomes, and none addressed teacher training for using VR in language instruction.

4.1.3 Target Language

The target language in the primary studies was overwhelmingly English (22 studies). Chinese ranked second (5 studies), followed by Finnish, Italian, Czech, French, Thai, and Japanese (one study each), as shown in Figure 4.

Figure 4. Target language.

4.1.4 Target Area of Learning

Target areas of learning varied. Vocabulary was the most popular area, taught in 12 studies (Figure 5). VR technology creates immersive and authentic environments where learners can interact with scenario-specific vocabulary in context. Feng and Ng (2024) incorporated 90 vocabulary items representing household items and furniture in a virtual room. Learners could click on an item in the room to access the spelling and pronunciation of the corresponding English word. In Li et al.’s (2022) study, geography majors were immersed in a virtual museum where they experienced the processes of the hydrologic cycle. Voice and text input was provided to facilitate their learning of the target vocabulary.

Figure 5. Target area of learning.

Speaking was the second most popular area (seven studies). Two trends emerged: (1) using VR as an input provider for structured speaking practice and (2) using VR to create a social, interactive space for spontaneous speaking practice. The first trend is often seen in studies where learners were situated in VR environments (e.g., museums), received input, and practiced speaking, for instance by role-playing a museum tour guide (e.g., Ebadi and Ebadijalal 2022; Hoang et al. 2023; Lin et al. 2023; Wu and Hung 2022; Xie et al. 2021). The second trend is seen in studies where learners engaged in conversational activities using a social VR application (e.g., vTime XR), interacting either with a built-in avatar or with other users in a virtual setting (e.g., Ou Yang et al. 2020; Thrasher 2022).

The length of instruction in these areas also varied, ranging from 20 minutes to one semester (10 sessions), with most studies lasting a single session.

4.1.5 Study Design

Twenty-one studies adopted a between-group design, while 12 implemented a within-group design. Among the 21 between-group studies, two major trends emerged: (a) studies comparing an experimental group that received VR-based instruction with a control group that did not, and (b) studies comparing learning outcomes between groups under different VR conditions. Among the studies in (a), 17 showed that the VR condition was more effective than the control condition. The studies in (b) mainly evaluated interactivity and scaffolding in the VR condition. For example, both Macedonia et al. (2023) and Peixoto et al. (2023) compared a conventional VR environment, in which learners merely observed the VR space and received input, with a more interactive VR environment, in which learners engaged with the stimuli through sensorimotor action (i.e., grasping the objects) or linguistically (i.e., interacting with avatars for language practice). Wang et al. (2021) and Zhao and Yang (2023) investigated the role of scaffolding provided by the VR environment. In Wang et al. (2021), one group received linguistic support (vocabulary information) when watching a VR video, and the other group did not. In Zhao and Yang (2023), one group of learners was given a mind map that helped them organize the vocabulary and sentences they had learned.

In both study designs (between- and within-group), VR was used to provide instruction in a variety of ways. The most common use of VR was to contextualize language practice. For example, target vocabulary items associated with particular domains (e.g., police station, car garage, hydrologic cycle) were presented alongside the corresponding objects situated in those domains. Participants interacted with the objects by clicking on them, picking them up, and moving them around, and through these kinesthetic activities they learned the vocabulary referring to the objects. Another common way of contextualizing practice involved role-plays and simulations. Taking an assigned role, participants conversed with a built-in avatar that spoke with pre-recorded speech (e.g., ordering a drink, making a hotel reservation, purchasing a toy). Besides contextualized practice, VR was used to provide authentic, multimodal input. For example, participants watched a 360-degree video to learn about a specific place (e.g., a subway station in Tokyo), read an interactive storybook, or listened to someone talking (e.g., a tour guide explaining the surroundings). These input-based activities were often followed by productive activities such as writing a description of a place or giving a virtual tour of it.

4.1.6 Assessment Measures Used and Learning Outcome Findings

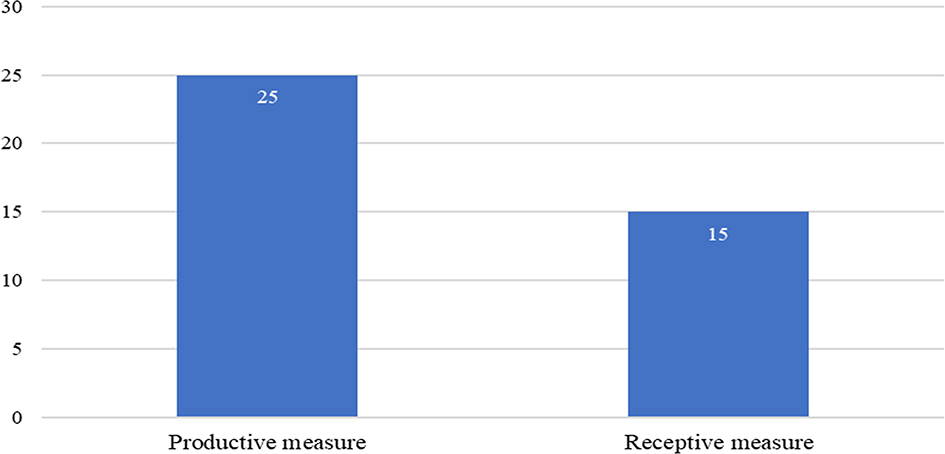

Twenty-five studies used productive measures and 15 used receptive measures, with some studies using both (Figure 6). Productive measures tended to be less controlled, allowing participants flexibility in expressing ideas. They included essay writing (e.g., Chen et al. 2020; Dolgunsöz et al. 2018; Khodabandeh 2022) and monologic/dialogic speaking tasks (e.g., Ebadi and Ebadijalal 2022; Lin et al. 2023; Ou Yang et al. 2020). When assessing vocabulary learning, however, productive measures were generally more controlled, such as meaning-and-form recall tests (e.g., Fuhrman et al. 2021; Lai and Chen 2023; Macedonia et al. 2023) and fill-in-the-blank tests (e.g., Feng and Ng 2024; Tai et al. 2022).

Figure 6: Assessment measures.

Receptive measures mostly assessed participants’ comprehension (reading and listening) and vocabulary. Comprehension tests were the only instrument used to assess reading in the primary studies (e.g., Kaplan-Rakowski and Gruber 2024; Wang et al. 2021). In contrast, listening skills were assessed using more diverse measures, including self-reported scales (e.g., Peixoto et al. 2023), multiple-choice questions (e.g., Rahimi and Aghabarari 2024; Ye and Kaplan-Rakowski 2024), and information sequencing tasks (e.g., Ou Yang et al. 2020). Vocabulary knowledge was also assessed using different receptive measures, including multiple-choice questions (e.g., Chang et al. 2024), form-meaning matching tests (e.g., Fuhrman et al. 2021), self-reported scales (e.g., Li et al. 2022), and recognition tasks (e.g., Lai and Chen 2023; Repetto et al. 2015).

In terms of learning outcomes, 17 of the 21 between-group studies found that the experimental group (EG) using VR made significantly greater gains than the non-VR control group (CG). For example, after learning workplace English for auto mechanics with 360-degree VR images, EG participants in Chen and Liao (2022) achieved higher scores than the CG, who received traditional instruction. Kaplan-Rakowski and Gruber (2024) reported significantly more accurate reading comprehension responses in the EG, who learned about a story in an interactive VR environment, than in a video-only condition.

Within-group studies also showed positive effects of VR on L2 learning, with 8 of 12 reporting significant improvements from pretest to posttest. Chen et al.’s (2020) participants achieved significantly higher essay scores after receiving writing instruction assisted by Google Earth VR. In Yamazaki’s (2018) study using “Meet-Me,” a Japanese 3D virtual world in which learners of Japanese engaged in authentic activities (e.g., giving directions), learners made significant gains in vocabulary and kanji pronunciation.

While the majority of studies reviewed demonstrated positive effects of VR on learning outcomes, some did not report statistically significant findings. Four of these either did not conduct statistical analyses or did not report p-values, making it difficult to draw conclusions about the effectiveness of VR use. Specifically, Thrasher (2022) found higher speaking performance ratings in the EG than in the CG, with small-to-medium effect sizes, but did not report p-values. Chiang et al. (2014), Watthanapas et al. (2023), and Yang et al. (2022) reported positive trends in vocabulary gains, retention rates, and speaking improvement, but did not compare pretest and posttest scores using statistical tests.

Among the studies that used statistical tests but found no significant effects, several explanations were offered. Dolgunsöz et al. (2018) found lower essay-writing performance in the EG than in the CG and attributed this result to participants’ full control when navigating the VR space. Because the participants were not directed to attend to specific details, they may have missed critical information to include in their essays, leading to lower ratings for essay content. However, the authors also noted a slightly higher information retention rate in the VR group, suggesting possible long-term memory benefits in the VR context. Nicolaidou et al. (2023) found that VR was more effective for ill-structured knowledge, that is, knowledge that does not follow a fixed pattern and varies with the situation (e.g., discourse coherence) (Spiro et al. 1994), but not for well-structured knowledge, which can often be memorized and applied consistently (e.g., vocabulary). The effect of VR, therefore, does not extend uniformly across all areas of linguistic knowledge. Similarly, Wang et al. (2021) reported that, although VR with visual prompts was more effective than VR without them, the prompted condition did not outperform the CG in all areas of learning, indicating that VR’s effectiveness may be task-dependent. In other words, the benefits of VR may vary with the specific learning task, as some tasks align more naturally with VR’s interactive and immersive affordances. Taken together, these findings suggest that, while VR-assisted TBLT has potential, its effectiveness varies with task design, learning outcome assessment, and target knowledge area.

4.2 Task Characteristics

This section presents results for the second research question: the extent to which the VR tasks used in the studies are task-based. We coded each study’s task for three defining characteristics of a task: focus-on-meaning, authenticity, and presence of non-linguistic outcomes (see Section 3.2 Coding procedures). Since several studies involved more than one VR condition, a total of 39 VR tasks were coded. Figure 7 displays the findings.

Figure 7: Task characteristics.

Focus on meaning was common: 31 tasks emphasized meaningful language use over isolated linguistic forms. Authenticity was also a notable characteristic, with 21 tasks designed to represent real-world scenarios. By contrast, non-linguistic outcomes were observed in only 13 tasks.
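The percentages used when interpreting these results follow directly from the counts above. The minimal Python sketch below makes that arithmetic explicit; it uses only the figures stated in this section (39 coded tasks; 31, 21, and 13 tasks per characteristic), and the names `counts` and `proportions` are illustrative, not part of the coding procedure.

```python
# Counts of coded VR tasks per task characteristic, as reported in Section 4.2.
TOTAL_TASKS = 39
counts = {
    "focus on meaning": 31,
    "authenticity": 21,
    "non-linguistic outcome": 13,
}


def proportions(counts: dict[str, int], total: int) -> dict[str, float]:
    """Return each characteristic's share of all coded tasks, as a percentage."""
    return {name: 100 * n / total for name, n in counts.items()}


for name, pct in proportions(counts, TOTAL_TASKS).items():
    print(f"{name}: {pct:.1f} %")
```

Running the sketch prints 79.5 %, 53.8 %, and 33.3 %, that is, roughly four fifths, just over half, and one third of the coded tasks.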

Some tasks incorporated none of these characteristics (Feng and Ng 2024; Repetto et al. 2015; Watthanapas et al. 2023; Zhao and Yang 2023). In these studies, VR environments served primarily as spaces for isolated language practice. In Feng and Ng (2024), participants navigated a virtual room filled with labeled objects, and the task was limited to vocabulary recall. Similarly, Watthanapas et al. (2023) used a task in which L2 Thai learners reordered words to form grammatically correct sentences. These tasks illustrate a common limitation: the potential of VR environments to create context-rich, lifelike experiences, which lie at the core of the TBLT approach, was not fully realized.

In contrast to the tasks summarized above, 11 tasks focused on meaning over form but lacked authenticity and non-linguistic outcomes (e.g., Alemi and Khatoony 2022; Chang et al. 2024; Chen and Liao 2022; Dolgunsöz et al. 2018; Kaplan-Rakowski and Gruber 2024; Lan and Tam 2023; Macedonia et al. 2023; Nicolaidou et al. 2023; Tai et al. 2022). For example, Alemi and Khatoony (2022) designed a VR game-based task for pronunciation learning in which young learners watched 10 animations (e.g., ordering food) and then interacted with a robot through quizzes. Although the task provided a meaningful context, it remained controlled, and neither its interactions nor its goals extended beyond linguistic gains. In Dolgunsöz et al. (2018), participants watched a VR video and wrote a paragraph based on its content. This task also focused on meaning but did not engage learners with lifelike materials or goals.

Nine other tasks emphasized both meaning and authenticity but lacked non-linguistic outcomes (e.g., Acar and Çavaş 2020; Chen et al. 2020; Ebadi and Ebadijalal 2022; Peixoto et al. 2023; Wang et al. 2021; Ye and Kaplan-Rakowski 2024). The task in Acar and Çavaş (2020) situated participants in a spaceship, where they could interact with surrounding objects and read introductions to different planets. However, the only outcome of this task was completing worksheets with information gained in the VR environment, making the goal highly language-based. Similarly, in Ebadi and Ebadijalal’s (2022) study using Google Expeditions, participants explored a museum and role-played as museum guides. This authentic task facilitated target language use in a meaningful setting, but its outcomes focused solely on language.

Finally, 12 tasks included all three task characteristics (e.g., Chiang et al. 2014; Hoang et al. 2023; Khodabandeh 2022; Li et al. 2022; Lin et al. 2023; Thrasher 2022; Xie et al. 2021; Yamazaki 2018; Yang et al. 2022). In Chiang et al. (2014), participants entered a virtual supermarket in Second Life, where they discussed their purchase decisions with classmates and eventually bought virtual merchandise. The task was meaning-focused and authentic, as it required learners to articulate their opinions in an everyday consumer activity. It also included a non-linguistic outcome by targeting decision-making skills. Similarly, Thrasher (2022), Yamazaki (2018), and Yang et al. (2022) used VR platforms where learners interacted with other users and lifelike surroundings. These studies used problem-solving tasks that emphasized a goal beyond language practice.

In Khodabandeh’s (2022) study, participants made a banana cake following instructions given in a virtual kitchen. Authenticity and meaning were inherent in this lifelike cooking scenario, and the task also targeted a non-linguistic outcome: successfully making the cake. In another study, Lai and Chen (2023) used a VR game that engaged learners in a sci-fi story in which they needed to comprehend the text, choose the correct response, and survive a battle. Meaning focus and authenticity were achieved by placing learners in an interactive environment that simulated realistic decision-making in a virtual, fictional world. Meanwhile, the task required participants to make accurate decisions and survive the game, making its ultimate goal broader than linguistic performance alone.
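The profiles described in this section (no characteristic, meaning only, meaning and authenticity, all three) amount to a classification over three binary codes per task. The Python sketch below illustrates that logic under stated assumptions: the `CodedTask` record and `profile` function are hypothetical helpers, not the authors' coding instrument, and the four example codings are taken only from the studies discussed above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedTask:
    """Hypothetical record: one VR task coded for the three task characteristics."""
    study: str
    meaning: bool
    authenticity: bool
    non_linguistic_outcome: bool


def profile(task: CodedTask) -> str:
    """Name the combination of characteristics present in a task ("none" if empty)."""
    present = [
        label
        for label, flag in (
            ("meaning", task.meaning),
            ("authenticity", task.authenticity),
            ("non-linguistic outcome", task.non_linguistic_outcome),
        )
        if flag
    ]
    return " + ".join(present) if present else "none"


# Illustrative codings of tasks discussed in this section.
tasks = [
    CodedTask("Feng and Ng 2024", False, False, False),
    CodedTask("Alemi and Khatoony 2022", True, False, False),
    CodedTask("Acar and Çavaş 2020", True, True, False),
    CodedTask("Khodabandeh 2022", True, True, True),
]

for task in tasks:
    print(f"{task.study}: {profile(task)}")

# Tally how many tasks fall into each profile.
print(Counter(profile(t) for t in tasks))
```

Because `profile` handles any combination of the three codes, it would also cover tasks whose profile does not match one of the four groups illustrated here.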

Overall, this synthesis revealed a range of approaches to VR task design with respect to focus on meaning, authenticity, and non-linguistic outcomes. While some tasks did not leverage VR’s full potential for creating lifelike scenarios and orienting learners toward goal-driven experiences, others integrated all three features successfully. This range calls for more attention to thoughtful task design in VR-assisted language learning studies.

5 Discussion

This synthesis aimed to contribute to the growing partnership between TBLT and TELL (González-Lloret 2017; González-Lloret and Ortega 2014; Lai and Li 2011; Smith and González-Lloret 2021; Ziegler 2016), focusing on the potential of VR technology to advance the practice of TBLT. A total of 33 primary studies were analyzed for study features to capture general trends (Research Question 1). The VR tasks used in those studies were then analyzed for characteristics of pedagogical tasks established in the TBLT literature (Ellis 2003; Long 1985; Nunan 2004; Skehan 1998; Van den Branden 2006) (Research Question 2).

5.1 Interpretations of the Study Features

Several notable trends emerged in the review. First, most studies were published after 2020, which aligns with the trend identified in Dhimolea et al.’s (2022) synthesis. The COVID-19 pandemic might have boosted interest in VR-assisted language learning as researchers and practitioners explored opportunities for remote and hybrid learning models (Dhimolea et al. 2022). Another notable trend was the predominance of university students as participants, consistent with the reviews by Dhimolea et al. (2022) and Qiu et al. (2023). This reflects a general tendency in SLA research to rely on convenience samples drawn from university student populations (Godfroid and Andringa 2023). In addition, the underrepresentation of younger participants may suggest that using VR in primary and secondary education is challenging due to limited budgets and infrastructure.

The frequent use of high-immersion VR devices (as opposed to low-immersion ones) reflects a preference for the more immersive experiences provided by more advanced VR equipment. This preference probably stems from the distinct advantages of high-immersion VR (using a head-mounted display, or HMD, with hand controllers) over low-immersion VR (using a desktop computer with a keyboard and mouse). As Makransky and Petersen (2021) noted, high-immersion VR has two distinct advantages: an enhanced sense of presence and greater learner agency. Rich sensory information in the high-immersion context helps learners experience a greater degree of representational fidelity. Learners also have greater control over their actions, which in turn promotes their sense of agency. Given these advantages, along with the increasing accessibility and affordability of VR devices, more scholars appear to have adopted high-immersion VR in recent years.

In terms of VR software, educational VR designed specifically to facilitate L2 learning was the most widely used type in the studies. This contrasts with Dhimolea et al.’s (2022) results, which found only six studies using VR applications created for educational purposes. This shift likely indicates the field’s interest in designing VR content with specific pedagogical goals and might also reflect closer collaboration among educators, VR developers, and researchers.

Vocabulary was the most investigated learning area in the primary studies, a pattern also found in the syntheses by Dhimolea et al. (2022) and Qiu et al. (2023). VR’s affordances for contextualizing vocabulary can facilitate the building and retention of form-meaning connections. However, vocabulary tasks are relatively straightforward to design, so their predominance leaves other skill domains comparatively underexplored, and more research is needed in those domains. Regarding learning outcomes, a majority of the studies found significant learning gains through the use of VR, consistent with Qiu et al.’s (2023) findings and suggesting VR’s considerable potential to enhance L2 learning. However, the effectiveness of VR-assisted learning was not uniform across studies, with some reporting non-significant results attributed to factors such as task design, learning outcome assessment, and target knowledge area. Future research should investigate which areas of linguistic and cognitive knowledge are most effectively enhanced through VR. Researchers should also optimize task design to balance learner autonomy and structured instruction, ensuring that the learning outcomes assessed are consistent with the interactions learners experience in a VR space.

5.2 Interpretations of the VR Task Characteristics

Turning to the analysis of task characteristics from the TBLT perspective, the synthesis results showed that focus on meaning was the characteristic most represented in the VR tasks: about 80 % of the tasks had this quality. This is not surprising, because the primary affordances of VR involve immersion and multimodal input, which help generate a strong sense of presence. Situated in a 3D panoramic animation viewed from a first-person perspective, learners feel embodied in the virtual space. Taking advantage of these affordances, existing VR tasks are often designed to promote lifelike simulations and contextualized practice in which meaning is the primary focus of language use; they rarely present grammar or vocabulary exercises targeting isolated linguistic forms.

While not as common as focus on meaning, authenticity was also a noticeable feature, found in just over half of the VR tasks (21 of 39, about 54 %). By adopting VR technology, researchers and teachers can develop tasks that closely correspond to real-life activities. VR can provide first-hand experience with events and activities that are likely to occur in real-world settings (e.g., guiding tourists through cultural sights). A sense of physical presence, realistic sensory experience, multimodal communication, and a 3D view of the environment all help enhance the authenticity of the simulations. Other affordances of VR, such as interactivity and its capacity to promote autonomy, also contribute to task authenticity. Just as in real-life settings, learners can explore the VR space freely and at their own pace, deciding where to go, what to look at, and which objects to touch. These decision-making routines, along with learners’ ability to interact with the environment directly, add to the authenticity of VR tasks.

These findings about the first two task characteristics suggest that VR technologies have considerable potential to reinforce the principles of TBLT by providing simulations of real-world environments in which rich interaction among learners takes place. The immersive, multisensory interface of a VR space offers experiences that learners perceive as realistic and meaningful, in keeping with TBLT.

Unlike the first two task characteristics, non-linguistic outcomes were less common, appearing in fewer than 40 % of the VR tasks (13 of 39). Even in tasks that were highly meaning-driven and authentic, outcomes were either unspecified, leaving the task without a clear goal, or limited to the display of linguistic knowledge and skills. For example, in Chen et al.’s (2020) study using Google Earth, learners of English took a virtual trip to their favorite city, where they walked through the streets and explored the treasures they found. They also used various VR resources (e.g., placemarks, the historical imagery function) to learn about the history and location of the city. While these activities were meaning-focused and authentic, the task outcome was limited to a linguistic display: an essay about the city, scored on language-related criteria (e.g., language use, organization).

In summary, VR tasks are naturally meaning-focused and authentic because these characteristics are inherent in VR’s affordances. VR’s capacity to provide full immersion, embodiment, diverse interactive modes, and autonomy typically generates opportunities for meaningful, contextualized language use; in other words, these affordances do not lend themselves readily to form-focused drills and exercises. However, non-linguistic outcomes were under-represented because this feature is not integral to VR technology or its affordances. Unless researchers and teachers deliberately attend to this feature and design tasks that bring out non-linguistic outcomes, task completion tends to end in a mere display of language use, just as in tasks designed outside the TBLT framework. Under TBLT, language abilities are expected to develop as a byproduct of task completion (e.g., Ellis 2009; Samuda and Bygate 2008; Van den Branden 2006). Hence, pedagogical tasks should by design have explicitly stated goals, and these goals should reflect what learners accomplish in real-life settings (i.e., non-linguistic outcomes). The findings of this synthesis indicate that this task characteristic, which depends on researchers’ and teachers’ design choices rather than on the technology itself, requires more explicit attention in VR-assisted language learning studies if VR tasks are to qualify as pedagogical tasks within the TBLT framework.

In conclusion, the current synthesis findings contribute to the ongoing call for a TBLT-TELL partnership (González-Lloret 2017; Smith and González-Lloret 2021; Thomas and Reinders 2010; Ziegler 2016). As González-Lloret and Ortega (2014) argued more than a decade ago, and as Smith and González-Lloret (2021) later reiterated, realizing the full potential of technology-mediated TBLT requires researchers and teachers to attend to “the specific affordances of a technology tool and the environment it inhabits, and specific tasks or task types that promote language learning, as well as how these elements interact” (Smith and González-Lloret 2021, p. 519). The current findings reinforce these claims, showing that certain affordances of immersive VR transfer more naturally to task design than others, which require conscious implementation. Specifically, affordances such as sense of presence, embodiment, and multimodal input can generate opportunities for meaningful, contextualized language use. As a result, tasks using VR technology are naturally meaning-oriented and reflective of real-life activities. However, these affordances do not automatically push learners toward achieving real-life, non-linguistic outcomes; teachers and researchers have to consciously build everyday goals (e.g., planning a trip, organizing a party, writing emails) into task outcomes. Hence, it is critical to closely study the affordances of specific technologies in given environments to understand what such technologies can offer for language learning and how they need to be complemented to align more fully with TBLT principles. Such thinking can promote mutual benefits for the TBLT and TELL fields in their practice of technology-mediated TBLT.

6 Implications and Future Directions

6.1 Limitations of the Current Study

The current paper synthesized existing VR-based studies to evaluate their instructional tasks from the TBLT perspective. Although we conducted a systematic literature search, it was limited to peer-reviewed journal articles; future reviews could expand the search to include books and book chapters. Another limitation is that the current study was a narrative review describing general trends and characteristics of primary studies. Future studies could use a different method, such as meta-analysis, to compare the effects of VR-assisted language instruction across studies, combined with analyses of moderator variables (e.g., instructional context, instructional length, types of outcome measures used, target knowledge and skill areas).

6.2 Implications for Teaching

Because only a few studies have integrated VR technology into TBLT practice and curricula (Baralt et al. 2023; Smith and McCurrach 2021), several issues remain to be addressed before VR-based TBLT can be fully implemented. First and foremost, existing VR tasks can be redesigned with particular attention to promoting non-linguistic outcomes. For example, in Chen et al.’s (2020) study using Google Earth and virtual tours, students wrote essays about the tours, which were assessed from a linguistic perspective (see Section 5.2). This task outcome could be modified to produce non-linguistic outcomes, for instance by having students publish the tour in a travel magazine or write an email inviting people on the tour. When designing a task with a non-linguistic outcome, it is critical to consider learners’ real-world needs and the relevance of the VR tasks to their lives, so that successful task completion promotes real-life language use.

Another future direction relates to technological competence (Chong and Reinders 2020; Smith and González-Lloret 2021). Learners need technological competence to engage in tasks, and teachers need sufficient training to guide learners through task completion. Both need to learn about the affordances of immersive VR and the resources available in VR tools: what they are and how to use them. Therefore, comprehensive VR training prior to the main task is essential. Training should familiarize participants with both the technical aspects of VR hardware and the specific interactions required by the task. Such training can help minimize the cognitive load of navigating VR technology and reduce the novelty effect (Dhimolea et al. 2022). Although the majority of studies in this synthesis (25 of 33) provided some form of VR training to participants, transparency about the content of this training is important when reporting study findings. We encourage researchers to provide detailed descriptions of their training protocols, which would help readers evaluate the extent to which training might have influenced the results and would support replication in future research. We also emphasize that future research should assess the impact of VR training on learning outcomes and address issues related to teacher training for VR use.

6.3 Implications for Research

Because VR-based TBLT is still emerging, core TBLT research topics have received little attention in this context. One such line of research concerns the impact of task types (e.g., cognitively complex vs. simple tasks) and task conditions (e.g., availability of planning time) on learners’ output (e.g., quality of linguistic forms) and learning outcomes (e.g., acquisition of linguistic forms). These topics have been widely researched in TBLT and deserve attention in future VR-TBLT studies. For example, learners’ task performance could be compared with and without planning time to see whether sufficient planning leads to better performance. Task complexity could be operationalized in terms of the number of objects, artifacts, and people that learners need to interact with while completing the task in a VR space: a complex task could involve more people in a wider space (e.g., more rooms to visit), while a simple task could take place in a more constrained space. As these examples show, if task types and task conditions are operationalized according to the affordances of VR (e.g., degree of interactivity with the environment), future research can expand the options for task design and implementation, contributing to current TBLT practice.
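As a purely hypothetical illustration of the complexity operationalization suggested above, the Python sketch below describes two versions of a VR task by the number of objects and artifacts, people, and rooms learners must engage with, and sums them into a single index. The `VRTaskVersion` record, the `complexity_index` function, the unweighted sum, and the example counts are all assumptions made for illustration; none of them comes from the reviewed studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VRTaskVersion:
    """Hypothetical description of one version of a VR task."""
    name: str
    objects: int  # objects and artifacts learners must interact with
    people: int   # avatars or co-present users learners must interact with
    rooms: int    # distinct spaces learners must visit


def complexity_index(task: VRTaskVersion) -> int:
    """Assumed, illustrative metric: an unweighted sum of interaction targets."""
    return task.objects + task.people + task.rooms


# Invented example values for a simple and a complex version of the same task.
simple = VRTaskVersion("simple", objects=4, people=1, rooms=1)
complex_ = VRTaskVersion("complex", objects=10, people=4, rooms=3)

for version in (simple, complex_):
    print(version.name, complexity_index(version))
```

An unweighted sum is only the simplest choice; a study could instead weight interactions with people more heavily than interactions with objects, or treat each dimension as a separate factor.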

Acknowledgments

We would like to thank two anonymous reviewers for their constructive feedback. We are responsible for all the errors that may remain in the manuscript.

- Research ethics: Not applicable.
- Informed consent: Not applicable.
- Author contributions: All authors have accepted responsibility for the entire content of this manuscript and approved its submission.
- Use of Large Language Models, AI and Machine Learning Tools: None declared.
- Conflict of interest: All authors state no conflict of interest.
- Research funding: None declared.
- Data availability: Not applicable.

Appendix A: Coding Framework

| Variable | Operationalization |
|---|---|
| Study identification | Open; including author(s) and year of publication |
| School level | Primary school; junior high school; senior high school; university |
| Learning context | Classroom; lab |
| VR training | No; yes, including length |
| VR hardware used | HMD; cell phone with VR cardboard; computer desktop |
| VR software used | Social VR; VR game; 360-degree video; educational VR |
| Target language | Open; the target language learned by participants |
| Target area of learning | Reading; listening; writing; speaking; vocabulary; grammar; pronunciation; professional English; conversation strategies |
| Study design | Between-groups design; within-groups design |
| Assessment measures | Productive measure; receptive measure |
| Learning outcomes | Statistically significant learning gains; statistically non-significant learning gains |
| Task characteristics | Focus on meaning; authenticity; non-linguistic outcome |

Appendix B: Primary Studies Coded

Acar, A., and Çavaş, B. 2020. The Effect of Virtual Reality Enhanced Learning Environments on the 7th-Grade Students’ Reading and Writing Skills in English. Malaysian Online Journal of Educational Sciences 8(4): 22–33.Search in Google Scholar

Alemi, M., and Khatoony, S. 2022. “Virtual Reality Assisted Pronunciation Training (VRAPT) for Young EFL Learners.” Teaching English with Technology 20(4): 59–81.Search in Google Scholar

Chang, H., J. Park, and J. Suh. 2024. “Virtual Reality as a Pedagogical Tool: An Experimental Study of English Learner in Lower Elementary Grades.” Education and Information Technologies 29: 4809–42, https://doi.org/10.1007/s10639-023-11988-y.Search in Google Scholar

Chen, H.-L., and Y.-C. Liao. 2022. “Effects of Panoramic Image Virtual Reality on the Workplace English Learning Performance of Vocational High School Students.” Journal of Educational Computing Research 59 (8): 1601–1622. https://doi.org/10.1177/0735633121999851.Search in Google Scholar

Chen, Y., T. J. Smith, C. S. York, and H. J. Mayall. 2020. “Google Earth Virtual Reality and Expository Writing for Young English Learners from a Funds of Knowledge Perspective.” Computer Assisted Language Learning 33 (1–2): 1–25. https://doi.org/10.1080/09588221.2018.1544151.Search in Google Scholar

Chiang, T. H. C., S. J. H. Yang, C. S. J. Huang, and H.-H. Liou. 2014. “Student Motivation and Achievement in Learning English as a Second Language Using Second Life.” Knowledge Management & E-Learning 6 (1): 1–17.10.34105/j.kmel.2014.06.001Search in Google Scholar

Dolgunsöz, E., G. Yıldırım, and S. Yıldırım. 2018. “The Effect of Virtual Reality on EFL Writing Performance.” Journal of Language and Linguistic Studies 14 (1): 278–292.Search in Google Scholar

Ebadi, S., and M. Ebadijalal. 2022. “The Effect of Google Expeditions Virtual Reality on EFL Learners’ Willingness to Communicate and Oral Proficiency.” Computer Assisted Language Learning 35 (8): 1975–2000, https://doi.org/10.1080/09588221.2020.1854311.Search in Google Scholar

Feng, B., and L.-L. Ng. 2024. “The Spatial Influence on Vocabulary Acquisition in an Immersive Virtual Reality-Mediated Learning Environment.” International Journal of Computer-Assisted Language Learning and Teaching 14 (1): 1–17. https://doi.org/10.4018/IJCALLT.339903.Search in Google Scholar

Fuhrman, O., A. Eckerling, N. Friedmann, R. Tarrasch, and G. Raz. 2021. “The Moving Learner: Object Manipulation in Virtual Reality Improves Vocabulary Learning.” Journal of Computer Assisted Learning 37 (3): 672–683. https://doi.org/10.1111/jcal.12515.Search in Google Scholar

Hoang, D. T. N., M. McAlinden, and N. F. Johnson. 2023. “Extending a Learning Ecology with Virtual Reality Mobile Technology: Oral Proficiency Outcomes and Students’ Perceptions.” Innovation in Language Learning and Teaching 17 (3): 491–504, https://doi.org/10.1080/17501229.2022.2070626.Search in Google Scholar

Kaplan-Rakowski, R., and A. Gruber. 2024. “An Experimental Study on Reading in High-Immersion Virtual Reality.” British Journal of Educational Technology 55 (2): 541–559. https://doi.org/10.1111/bjet.13392.

Khodabandeh, F. 2022. “Exploring the Applicability of Virtual Reality-Enhanced Education on Extrovert and Introvert EFL Learners’ Paragraph Writing.” International Journal of Educational Technology in Higher Education 19: 1–21. https://doi.org/10.1186/s41239-022-00334-w.

Lai, K.-W. K., and H.-J. H. Chen. 2023. “A Comparative Study on the Effects of a VR and PC Visual Novel Game on Vocabulary Learning.” Computer Assisted Language Learning 36 (3): 312–345. https://doi.org/10.1080/09588221.2021.1928226.

Lan, Y.-J., and V. T. T. Tam. 2023. “The Impact of 360° Videos on Basic Chinese Writing: A Preliminary Exploration.” Educational Technology Research and Development 71: 539–562. https://doi.org/10.1007/s11423-022-10162-4.

Li, Y., S. Ying, Q. Chen, and J. Guan. 2022. “An Experiential Learning-Based Virtual Reality Approach to Foster Students’ Vocabulary Acquisition and Learning Engagement in English for Geography.” Sustainability 14 (22): 15359. https://doi.org/10.3390/su142215359.

Lin, V., N. E. Barrett, G.-Z. Liu, N.-S. Chen, and M. S.-Y. Jong. 2023. “Supporting Dyadic Learning of English for Tourism Purposes with Scenery-Based Virtual Reality.” Computer Assisted Language Learning 36 (5–6): 906–942. https://doi.org/10.1080/09588221.2021.1954663.

Macedonia, M., B. Mathias, A. E. Lehner, S. M. Reiterer, and C. Repetto. 2023. “Grasping Virtual Objects Benefits Lower Aptitude Learners’ Acquisition of Foreign Language Vocabulary.” Educational Psychology Review 35. https://doi.org/10.1007/s10648-023-09835-0.

Nicolaidou, I., P. Pissas, and D. Boglou. 2023. “Comparing Immersive Virtual Reality to Mobile Applications in Foreign Language Learning in Higher Education: A Quasi-Experiment.” Interactive Learning Environments 31 (4): 2001–2015. https://doi.org/10.1080/10494820.2020.1870504.

Ou Yang, F.-C., F.-Y. R. Lo, J. Chen Hsieh, and W.-C. V. Wu. 2020. “Facilitating Communicative Ability of EFL Learners via High-Immersion Virtual Reality.” Educational Technology & Society 23 (1): 30–49.

Peixoto, B., L. C. P. Bessa, G. Gonçalves, M. Bessa, and M. Melo. 2023. “Teaching EFL with Immersive Virtual Reality Technologies: A Comparison with the Conventional Listening Method.” IEEE Access 11: 21498–21507. https://doi.org/10.1109/ACCESS.2023.3249578.

Rahimi, M., and M. Aghabarari. 2024. “The Impact of Virtual Reality Assisted Listening Instruction on English as a Foreign Language Learners’ Comprehension and Perceptions.” International Journal of Technology in Education 7 (2): 239–258. https://doi.org/10.46328/ijte.741.

Repetto, C., B. Colombo, and G. Riva. 2015. “Is Motor Simulation Involved During Foreign Language Learning? A Virtual Reality Experiment.” SAGE Open 5 (4). https://doi.org/10.1177/2158244015609964.

Tai, T.-Y., H. H.-J. Chen, and G. Todd. 2022. “The Impact of a Virtual Reality App on Adolescent EFL Learners’ Vocabulary Learning.” Computer Assisted Language Learning 35 (4): 892–917. https://doi.org/10.1080/09588221.2020.1752735.

Thrasher, T. 2022. “The Impact of Virtual Reality on L2 French Learners’ Language Anxiety and Oral Comprehensibility: An Exploratory Study.” CALICO Journal 39 (2): 219–238. https://doi.org/10.1558/cj.42198.

Wang, Z., Y. Guo, Y. Wang, Y.-F. Tu, and C. Liu. 2021. “Technological Solutions for Sustainable Development: Effects of a Visual Prompt Scaffolding-Based Virtual Reality Approach on EFL Learners’ Reading Comprehension, Learning Attitude, Motivation, and Anxiety.” Sustainability 13 (24): 13977. https://doi.org/10.3390/su132413977.

Watthanapas, N., Y.-W. Hao, J.-H. Ye, J.-C. Hong, and J.-N. Ye. 2023. “The Effects of Using Virtual Reality on Thai Word Order Learning.” Brain Sciences 13 (3): 517. https://doi.org/10.3390/brainsci13030517.

Wu, Y.-H. S., and S.-T. A. Hung. 2022. “The Effects of Virtual Reality Infused Instruction on Elementary School Students’ English-Speaking Performance, Willingness to Communicate, and Learning Autonomy.” Journal of Educational Computing Research 60 (6): 1558–1587. https://doi.org/10.1177/07356331211068207.

Xie, Y., Y. Chen, and L. H. Ryder. 2021. “Effects of Using Mobile-Based Virtual Reality on Chinese L2 Students’ Oral Proficiency.” Computer Assisted Language Learning 34 (3): 225–245. https://doi.org/10.1080/09588221.2019.1604551.

Yamazaki, K. 2018. “Computer-Assisted Learning of Communication (CALC): A Case Study of Japanese Learning in a 3D Virtual World.” ReCALL 30 (2): 214–231. https://doi.org/10.1017/S0958344017000350.

Yang, H., L. Tsung, and L. Cao. 2022. “The Use of Communication Strategies by Second Language Learners of Chinese in a Virtual Reality Learning Environment.” SAGE Open 12 (4): 21582440221141877. https://doi.org/10.1177/21582440221141877.

Ye, Y., and R. Kaplan-Rakowski. 2024. “An Exploratory Study on Practising Listening Comprehension Skills in High-Immersion Virtual Reality.” British Journal of Educational Technology 55 (4): 1651–1672. https://doi.org/10.1111/bjet.13481.

Zhao, J.-H., and Q.-F. Yang. 2023. “Promoting International High-School Students’ Chinese Language Learning Achievements and Perceptions: A Mind Mapping-Based Spherical Video-Based Virtual Reality Learning System in Chinese Language Courses.” Journal of Computer Assisted Learning 39 (3): 1002–1016. https://doi.org/10.1111/jcal.12782.

References

Abrams, Z. I. 2016. “Possibilities and Challenges of Learning German in a Multimodal Environment: A Case Study.” ReCALL 28 (3): 343–363. https://doi.org/10.1017/s0958344016000082.

Baralt, M. 2013. “The Impact of Cognitive Complexity on Feedback Efficacy During Online Versus Face-to-Face Interactive Tasks.” Studies in Second Language Acquisition 35: 689–725. https://doi.org/10.1017/s0272263113000429.

Baralt, M., S. Doscher, L. Boukerrou, B. Bogosian, W. Elmeligi, Y. Hdouch, J. Istifan, A. Nemouch, T. Khachatryan, N. Elsakka, F. Arana, G. Perez, J. Cobos-Solis, S.-E. Mouchane, and S. Vassigh. 2023. “Virtual Tabadul: Creating Language-Learning Community Through Virtual Reality.” Journal of International Students 12 (3): 168–188. https://doi.org/10.32674/jis.v12is3.4638.

Canals, L., and Y. Mor. 2023. “Towards a Signature Pedagogy for Technology-Enhanced Task-Based Language Teaching: Defining Its Design Principles.” ReCALL 35 (1): 4–18. https://doi.org/10.1017/S0958344022000118.

Cantó, S., R. Graff, and K. Jauregi. 2014. “Collaborative Tasks for Negotiation of Intercultural Meaning in Virtual Worlds and Video-Web Communication.” In Technology-Mediated TBLT: Researching Technology and Tasks, edited by M. González-Lloret, and L. Ortega, 183–212. Amsterdam: John Benjamins. https://doi.org/10.1075/tblt.6.07can.

Chaudron, C., C. J. Doughty, Y. Kim, D. Kong, J. Lee, Y. Lee, M. Long, R. Rivers, and K. Urano. 2005. “A Task-Based Needs Analysis of a Tertiary Korean as a Foreign Language Program.” In Second Language Needs Analysis, edited by M. Long, 225–261. Cambridge: Cambridge University Press. https://doi.org/10.1017/CBO9780511667299.009.

Chen, J. C. C. 2012. “Designing a Computer-Mediated, Task-Based Syllabus: A Case Study in a Taiwanese EFL Tertiary Class.” The Asian EFL Journal Quarterly 14 (3): 63–98.

Chong, S. W., and H. Reinders. 2020. “Technology-Mediated Task-Based Language Teaching: A Qualitative Research Synthesis.” Language Learning & Technology 24 (3): 70–86. https://hdl.handle.net/10125/44739.

Chun, D., H. Karimi, and D. J. Sanosa. 2022. “Traveling by Headset: Immersive VR for Language Learning.” CALICO Journal 39: 129–149. https://doi.org/10.1558/cj.21306.

Collentine, K. 2013. “Using Tracking Technologies to Study the Effects of Linguistic Complexity in CALL Input and SCMC Output.” CALICO Journal 30: 46–65. https://doi.org/10.1558/cj.v30i0.46-65.

Dewey, J. 1997. Experience and Education. New York: Simon & Schuster.

Dhimolea, T. K., R. Kaplan-Rakowski, and L. Lin. 2022. “A Systematic Review of Research on High-Immersion Virtual Reality for Language Learning.” TechTrends. https://doi.org/10.1007/s11528-022-00717-w.

Doughty, C. J., and M. H. Long, eds. 2003. The Handbook of Second Language Acquisition. Oxford, UK: Blackwell Publishing. https://doi.org/10.1002/9780470756492.

East, M. 2017. “Research into Practice: The Task-Based Approach to Instructed Second Language Acquisition.” Language Teaching 50: 412–424. https://doi.org/10.1017/s026144481700009x.

Ellis, R. 2003. Task-Based Language Learning and Teaching. Oxford: Oxford University Press.

Ellis, R. 2009. “Task-Based Language Teaching: Sorting Out the Misunderstandings.” International Journal of Applied Linguistics 19: 221–246. https://doi.org/10.1111/j.1473-4192.2009.00231.x.

Godwin-Jones, R. 2023. “Presence and Agency in Real and Virtual Spaces: The Promise of Extended Reality for Language Learning.” Language Learning & Technology 27 (3): 6–26.

Godfroid, A., and S. Andringa. 2023. “Uncovering Sampling Biases, Advancing Inclusivity, and Rethinking Theoretical Accounts in Second Language Acquisition: Introduction to the Special Issue SLA for All?” Language Learning 73 (4): 981–1002. https://doi.org/10.1111/lang.12620.

González-Lloret, M. 2017. “Technology and Task-Based Language Teaching.” In Language and Technology. Encyclopedia of Language and Education, edited by S. Thorne, and S. May, 1–13. Cham, Switzerland: Springer. https://doi.org/10.1007/978-3-319-02237-6_16.

González-Lloret, M., and L. Ortega, eds. 2014. Technology-Mediated TBLT: Researching Technology and Tasks. Philadelphia, PA: John Benjamins. https://doi.org/10.1075/tblt.6.

Johnson-Glenberg, M. C. 2018. “Immersive VR and Education: Embodied Design Principles That Include Gesture and Hand Controls.” Frontiers in Robotics and AI 5: 1–19. https://doi.org/10.3389/frobt.2018.00081.

Kaplan-Rakowski, R., and A. Gruber. 2019. “Low-Immersion Versus High-Immersion Virtual Reality: Definitions, Classification, and Examples with a Foreign Language Focus.” In Proceedings of the Innovation in Language Learning International Conference 2019. Pixel.

Kim, Y. 2015. “The Role of Tasks as Vehicles for Learning in Classroom Interaction.” In Handbook of Classroom Discourse and Interaction, edited by N. Markee, 163–181. Malden, MA: Wiley-Blackwell. https://doi.org/10.1002/9781118531242.ch10.

Kim, Y., and Y. Namkung. 2024. “Methodological Characteristics in Technology-Mediated Task-Based Language Teaching Research: Current Practices and Future Directions.” Annual Review of Applied Linguistics. https://doi.org/10.1017/s0267190524000096.

Lai, C., and G. Li. 2011. “Technology and Task-Based Language Teaching: A Critical Review.” CALICO 28 (2): 498–521. https://doi.org/10.11139/cj.28.2.498-521.

Lai, C., Y. Zhao, and J. Wang. 2011. “Task-Based Language Teaching in Online Ab Initio Foreign Language Classrooms.” The Modern Language Journal 95 (Supp. 1): 81–103. https://doi.org/10.1111/j.1540-4781.2011.01271.x.

Lan, Y.-J. 2020. “Immersion, Interaction, and Experience-Oriented Learning: Bringing Virtual Reality into FL Learning.” Language Learning & Technology 24: 1–5.

Liao, P. L., and K. Fu. 2014. “Effects of Task Repetition on L2 Oral (in Written Form) Production in Computer-Mediated Communication.” International Journal of Humanities and Arts Computing 8: 221–236. https://doi.org/10.3366/ijhac.2014.0109.

Long, M. H. 1985. “A Role for Instruction in Second Language Acquisition: Task-Based Language Teaching.” In Modelling and Assessing Second Language Acquisition, edited by K. Hyltenstam, and M. Pienemann, 77–99. Bristol, UK: Multilingual Matters.

Long, M. H. 2016. “In Defense of Tasks and TBLT: Non-issues and Real Issues.” Annual Review of Applied Linguistics 36: 5–33. https://doi.org/10.1017/s0267190515000057.

Makransky, G., and G. B. Petersen. 2021. “The Cognitive Affective Model of Immersive Learning (CAMIL): A Theoretical Research-Based Model of Learning in Immersive Virtual Reality.” Educational Psychology Review 33 (3): 937–958. https://doi.org/10.1007/s10648-020-09586-2.

Nielson, K. B. 2014. “Evaluation of an Online, Task-Based Chinese Course.” In Technology-Mediated TBLT: Researching Technology and Tasks, edited by M. González-Lloret, and L. Ortega, 295–322. Amsterdam, The Netherlands: John Benjamins. https://doi.org/10.1075/tblt.6.11nie.

Norris, J. 2015. “Thinking and Acting Programmatically in Task-Based Language Teaching: Essential Roles for Program Evaluation.” In Domains and Directions in the Development of TBLT, edited by M. Bygate, 27–57. Amsterdam: John Benjamins. https://doi.org/10.1075/tblt.8.02nor.

Nunan, D. 2004. Task-Based Language Teaching. Cambridge: Cambridge University Press. https://doi.org/10.1017/CBO9780511667336.

Ortega, L. 2009. “Interaction and Attention to Form in L2 Text-Based Computer-Mediated Communication.” In Multiple Perspectives on Interaction, edited by A. Mackey, and C. Polio, 226–253. New York, NY: Routledge.

Park, M., and T. Slater. 2014. “A Typology of Tasks for Mobile-Assisted Language Learning: Recommendations from a Small-Scale Needs Analysis.” TESL Canada Journal 31 (8): 93–115. https://doi.org/10.18806/tesl.v31i0.1188.

Parmaxi, A. 2023. “Virtual Reality in Language Learning: A Systematic Review and Implications for Research and Practice.” Interactive Learning Environments 31 (1): 172–184.

Peterson, M. 2006. “Learner Interaction Management in an Avatar and Chat-Based Virtual World.” Computer Assisted Language Learning 19: 79–103. https://doi.org/10.1080/09588220600804087.

Qiu, X. B., C. Shan, J. Yao, and Q. K. Fu. 2023. “The Effects of Virtual Reality on EFL Learning: A Meta-Analysis.” Education and Information Technologies 19 (4): 1–27. https://doi.org/10.1007/s10639-023-11738-0.