Abstract

The rise of conspiracy theories and misinformation in digital media has sparked intense debates among scholars, journalists, and policymakers about the challenges posed by these phenomena and potential responses. However, these discussions tend to remain narrowly focused on specific issues, stakeholders, or individual-level strategies, with limited attention paid to anticipatory impact assessment. To address these shortcomings, we conducted an integrative, three-wave Delphi study involving an expert panel of 47 scholars and practitioners from 13 countries to identify current challenges, anticipate problematic trends, and develop actionable interventions. The challenges, trends, and interventions discussed span ten thematic areas, including governance of and by platforms, platform design, journalism and news media ecosystems, research and science communication ecosystems, societal dynamics, socio-political institutions, and individual behavior. The paper concludes with methodological reflections, discussing the possibilities and limitations of Delphi methods in addressing complex, interdisciplinary issues.

1 Introduction

Recent socio-political upheavals, humanitarian crises, and public health emergencies have fueled the proliferation of deceptive content such as conspiracy theories and misinformation in digital communication environments, amplifying public concerns about its volume, diversity, and pervasiveness (Newman et al., 2022). While evidence of an increase in deceptive content in the digital age remains scarce – largely because these social phenomena were difficult to observe and measure in the pre-digital era (Altay, Berriche, and Acerbi, 2023) – digital platforms have substantially lowered the barriers to producing, distributing, and encountering such content. This has arguably resulted in heightened visibility and accelerated distribution of deceptive communication.

Although digital manifestations of conspiracy theories and misinformation do not automatically translate into increased acceptance and support (Uscinski, Enders, Klofstad, Seelig, et al., 2022), their consequences for a well-informed citizenry and healthy democracy can be significant. Potential detrimental effects include eroded trust in political institutions (Boulianne and Humprecht, 2023), political cynicism (Lee and Jones-Jang, 2024), delegitimization of the press (Ognyanova et al., 2020), increased societal stigmatization (Lantian et al., 2020) and prejudice (Jolley et al., 2020), violent extremism (Rottweiler and Gill, 2022), as well as reluctance to adopt preventive health measures (Bierwiaczonek et al., 2022)[1].

Correspondingly, recent years have witnessed a lively multidisciplinary debate about the challenges posed by conspiracy theories and misinformation (cf. reviews by Swire-Thompson and Lazer, 2020; Uscinski, Enders, Klofstad, and Stoler, 2022; Wang et al., 2019) and the interventions aimed at curbing their circulation (cf. overviews by Lazić and Žeželj, 2021; van der Linden, 2022; Ziemer and Rothmund, 2024). While this growing body of research provides valuable insights, it has three limitations that impede a more comprehensive understanding and the development of effective responses. First, research tends to disproportionately focus on specific issues (e.g., climate change or health), particular stakeholders (e.g., journalists or technology companies), and individual-level strategies (e.g., digital literacy or inoculation), often reflecting the constraints of disciplinary silos and overlooking the interconnected dynamics that span topical domains, stakeholder groups, and societal levels. Second, there is a notable lack of anticipatory impact assessments that go beyond the current status quo, leaving scholars and practitioners ill-equipped to take proactive measures. Third, discussions of interventions sometimes seem disconnected from the specific challenges they seek to address, resulting in measures that risk being contextually misaligned.

Effectively addressing these shortcomings requires an integrative and multi-perspectival approach across academic and disciplinary boundaries, which we aim to provide in this article. Based on a three-wave Delphi study, which is an expert-driven method for anticipatory impact assessment and policy development (Linstone and Turoff, 1975), 47 scholars and practitioners from various fields and geographic regions (1) identified the most pressing contemporary challenges related to conspiracy theories and misinformation in digital media, (2) anticipated potentially problematic trends, and (3) subsequently developed targeted intervention strategies. This approach integrates the perspectives of civil society, journalism, platforms, politics, and science and research, while considering dynamics at the macro, meso, and micro levels.

2 Literature review

Defining conspiracy theories and misinformation

While the facets and forms of deceptive or “polluted” content (Wardle and Derakhshan, 2017, p. 4) circulating in today’s digital environments are diverse, conspiracy theories and misinformation are arguably the most pervasive phenomena in this context. However, their definitions are as diverse as the phenomena themselves (Zeng and Brennen, 2023). To avoid a Western-centric perspective that may overlook unique political power dynamics in (non-)democratic contexts, we deliberately adopt a minimal definition of both phenomena. Accordingly, misinformation refers to false, inaccurate, incomplete, or misleading information (Vraga and Bode, 2020) that is spread either intentionally or unintentionally. Hence, we do not distinguish between misinformation and disinformation based on the criterion of intentionality (Wardle and Derakhshan, 2017), as the reasons for and intent behind sharing content vary and are rarely transparent (Appelman et al., 2022). Conspiracy theories, on the other hand, are defined as a “theory which posits a conspiracy” (Pigden, 2006, p. 23), typically claiming to reveal hidden truths and the secretive actions of malevolent actors and secret societies (Mahl et al., 2022).

Although conspiracy theories and misinformation are based on different epistemologies, they are closely interconnected. Both tend to align with individuals’ preconceived beliefs, thrive on distrust of institutional authorities, and rely on sensational or emotionally charged narratives (Martel et al., 2020). By filling in the gaps of partial or distorted facts with speculative explanations, conspiracy theories can gain traction, and adherents of conspiracy theories may in turn share misinformation to strengthen their narrative, creating a symbiotic relationship in which each reinforces the other. It is thus crucial to examine the challenges, trends, and interventions of both phenomena in tandem.

Diagnosing key challenges

Previous research has highlighted several challenges posed by conspiracy theories and misinformation online, including concerns related to the design of digital platforms, such as unclear algorithmic curation (Southwell et al., 2019), researchers’ limited access to platform data (Ciampaglia et al., 2018), and the difficulty of detecting misinformation across platforms (Al-Rawi and Fakida, 2023). Beyond this, scholars have discussed challenges related to journalism, democratic citizenship, and intergroup relations, such as the disconnect between fact-checking efforts and the spheres where misinformation is most likely to spread (Fernandez and Alani, 2018), the increase in non-normative political actions such as violence against political leaders (Imhoff et al., 2021), and the societal exclusion of immigrants (Jolley et al., 2020).

As this illustrates, scholarship has provided valuable perspectives on the contemporary challenges associated with conspiracy theories and misinformation in digital media. Nevertheless, these challenges are often examined in isolation, focusing on specific domains, stakeholders, or forms of deceptive communication. Moreover, the rapid expansion of this multidisciplinary field complicates efforts to pinpoint the most pressing issues that merit prioritized attention. Herein lies the key strength of a Delphi study, in which both academic experts and practitioners provide systematic guidance. This leads to our first research question (RQ), which we posed to the experts:

RQ1: What are currently the most important challenges related to conspiracy theories and misinformation in digital media?

Anticipating problematic trends

The digital media landscape is constantly evolving, with technological advancements reshaping how information is created, shared, and consumed. Anticipating future challenges is essential for developing proactive strategies to address emerging issues before they escalate. However, prognostic perspectives on the challenges posed by conspiracy theories and misinformation in digital media remain limited – likely due to the inherent unpredictability of technological developments and societal changes, which complicate efforts to anticipate specific problematic trends.

A notable exception in this area is a survey conducted by the Pew Research Center and Elon University’s Imagining the Internet Center (Anderson and Rainie, 2017), which sought input from scholars, practitioners, strategic thinkers, and other experts on the future of truth and misinformation online. Participants were asked whether changes aimed at curbing the spread of lies and misinformation would improve the information environment. Of the respondents, 51 % were pessimistic, while 49 % predicted an improvement in the information environment. Similarly, Altay, Berriche, Heuer, and colleagues (2023) surveyed academic experts about the future of misinformation scholarship, highlighting the need for research beyond the United States, interdisciplinary collaboration, and attention to offline misinformation. Building on these valuable insights and aiming to help researchers and practitioners navigate the uncertainties of future developments, we wanted to know from experts:

RQ2: What will be the most problematic trends in the next five to ten years regarding conspiracy theories and misinformation in digital media?

Developing tailored interventions

In recent years, scholars, journalists, policymakers, and platform companies have proposed and tested various interventions to curb the spread and consumption of conspiracy theories and misinformation. Among the most prominent interventions are prebunking, debunking, nudging, and educational or regulatory interventions. Prebunking builds preemptive resilience to misinformation by forewarning people, which is intended to trigger a motivation to resist manipulation, and by equipping them with techniques to refute misinformed arguments (Compton et al., 2021); debunking, by contrast, aims to correct false or misleading information after people have been exposed to it (Chan et al., 2017). Nudging refers to small-scale interventions in the design of platforms aimed at minimizing the likelihood that users will spread misinformation and conspiracy theories further, for example, by prompting them to verify the accuracy of content before sharing it (Pennycook et al., 2020). Educational interventions target people’s digital media literacy (Guess et al., 2020) to reduce their susceptibility to conspiracy theories and misinformation prior to exposure. Regulatory strategies include both interventions implemented by platforms themselves, such as limiting the reach of content (Gillespie, 2022), and regulation of platforms[2] imposed by policymakers, like the Digital Services Act (European Commission, 2022).

Taken together, there is a substantial body of research – particularly in cognitive science (Pennycook and Rand, 2021), psychology (van Bavel et al., 2021), and educational research (Osborne and Pimentel, 2022) – that explores interventions designed to immunize people against conspiracy theories and misinformation. Given the inherent focus of these disciplines on micro-level factors, strategies often target individuals (Aghajari et al., 2023) while neglecting the interrelated dynamics at the macro, meso, and micro levels. At the same time, similar to the analysis of contemporary challenges, proposed interventions are often discussed in isolation – addressing specific issues, such as the COVID-19 pandemic (Tandoc et al., 2024), or focusing on particular stakeholders, like technology platforms (Ng et al., 2021). In response to these limitations, we sought expert insight into the following question:

RQ3: What should be done in the future to counter conspiracy theories and misinformation in digital media?

3 Method

The Delphi method

The Delphi method is a well-recognized approach for gathering expert insights to evaluate the impact of socio-technological advances, identify areas of consensus, develop guidelines, prioritize actions, and anticipate future scenarios (Linstone and Turoff, 1975). It is particularly valuable in dynamic or complex fields where empirical evidence remains limited or uncertain. For this reason, the Delphi method was deemed the most suitable approach for a systematic anticipatory impact assessment and policy development.

The fundamental premise of Delphi studies is the progressive aggregation of anonymous expert assessments through multiple rounds of surveys or interviews, with each iteration building upon the collective insights from the previous one. This iterative process allows participants to refine their views in light of others’ perspectives, while anonymity ensures equal consideration of all expert contributions, reducing biases from dominant voices. It is this reflective and iterative component that distinguishes the Delphi method from other expert-based or group survey techniques. In communication science, Delphi studies have been utilized to explore topics such as the impact of large language models on the science system (Fecher et al., 2023) and strategies for enhancing the quality of digital science communication (Fähnrich et al., 2023).

The research design and analysis

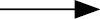

In contrast to consensus-oriented Delphi studies, where the number of rounds is determined by predefined agreement thresholds or response stability criteria (Diamond et al., 2014), we opted for an open and exploratory three-wave Delphi study, which was conducted between May and September 2022. As shown in Figure 1, the first two waves followed a Classic Delphi design, using consecutive anonymous online surveys. The first wave adopted an exploratory approach with open-ended questions, asking experts to assess contemporary challenges (RQ1), anticipate problematic trends (RQ2), and develop intervention strategies (RQ3). The second wave aimed to refine and prioritize the findings from the initial wave, inviting experts to provide feedback and rate the importance of proactively addressing the identified challenges and implementing the proposed interventions on a 4-point Likert scale (1 = “not important” to 4 = “very important”; see Appendix A Table A1 for the questionnaires from both waves).

To enable in-depth discussions on the development and implementation of intervention strategies in the context of national specificities – such as political, media, and economic environments – the third wave focused on the DACH region (Germany, Austria, Switzerland), an understudied area in the field (Mahl et al., 2022). Following a Group Delphi design, we conducted a two-day on-site workshop with experts knowledgeable about the region. The workshop revisited insights from the previous waves and specified strategies for the DACH region. Experts, divided into rotating focus groups moderated by the authors, discussed interventions across five areas identified in the preceding waves: civil society, journalism, platforms, politics and public administration, and science and research. Each expert contributed to every area, and the results were recorded by the experts themselves to minimize moderator bias, with additional notes taken by the moderators.

Following the three-wave Delphi design, in which each wave’s findings inform the design of the subsequent one, the respective results were analyzed after each wave. The qualitative analysis of the responses to the open-ended survey questions and the experts’ documented notes from the workshop discussions was conducted using an inductive-iterative thematic analysis (Schreier, 2014) performed in MAXQDA. During multiple coding sessions, the first three authors systematically reviewed the verbatim responses, consolidating and rephrasing similar items for clarity while preserving the original meaning. Overarching thematic areas were then identified inductively and labeled through an iterative process of repeated reading and cross-checking of responses to organize the challenges, trends, and interventions. The rest of the research team provided critical feedback on the initial findings, which was discussed until consensus was reached. This process was repeated in the second and third waves. Quantitative analysis of the experts’ ratings of the importance of the challenges and interventions identified in the second wave was conducted through descriptive analysis in R.

Figure 1. The Delphi research design.
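The second-wave ratings were summarized descriptively (the authors report doing this in R). As a rough illustration of what such a summary can involve for 4-point importance ratings, the Python sketch below computes, per item, the number of valid ratings, the mean, the standard deviation, and the share of ratings of 3 or 4. The function name `summarize_ratings`, the item labels, the "top-two" share, and the example ratings are hypothetical and are not taken from the study's data or analysis script.

```python
from statistics import mean, stdev
from typing import Dict, List, Optional


def summarize_ratings(ratings: Dict[str, List[Optional[int]]]) -> List[dict]:
    """Descriptive summary of 4-point ratings (1 = "not important" ... 4 = "very important").

    Hypothetical helper for illustration only; missing answers are passed as None.
    """
    rows = []
    for item, values in ratings.items():
        # Keep only valid scale points; None or out-of-range answers are dropped
        valid = [v for v in values if v in (1, 2, 3, 4)]
        if not valid:
            continue  # an item nobody rated cannot be summarized
        rows.append({
            "item": item,
            "n": len(valid),
            "mean": round(mean(valid), 2),
            # Sample standard deviation needs at least two ratings
            "sd": round(stdev(valid), 2) if len(valid) > 1 else 0.0,
            # Share of ratings in the upper half of the scale (3 or 4)
            "top_two_share": round(sum(v >= 3 for v in valid) / len(valid), 2),
        })
    # Rank items from highest to lowest mean importance
    return sorted(rows, key=lambda r: r["mean"], reverse=True)


rows = summarize_ratings({"item_a": [4, 4, 3, 2, None], "item_b": [2, 3, 1, 2]})
for row in rows:
    print(row)
```

Ranking by the mean is only one choice; with a short ordinal scale, reporting the top-two share or the median alongside it is a common way to avoid over-interpreting small differences in means.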

The expert panel

To assemble an international, multi- and transdisciplinary expert panel, we employed a multi-stage sampling strategy, combining purposive snowball sampling (Noy, 2008) with maximum variation sampling (Suri, 2011). Experts were defined as individuals with in-depth specialized knowledge, recognized expertise, and practical experience in addressing conspiracy theories and misinformation in digital media and developing intervention strategies. Based on these criteria, we selected two categories of experts: (1) scholars with eminent positions in the field, recent publications in high-impact journals, and/or participation in renowned (inter)national research projects; and (2) practitioners with substantial experience working for fact-checking agencies, media organizations, government bodies, or think tanks, and/or notable contributions to intervention programs.

In total, 120 experts were invited to participate in the study, with 47 completing the first wave – 38 scholars and 9 practitioners. Of these, 26 participated in the second wave. For the third wave, we invited all experts from the DACH region, with 9 attending the workshop. The expert panel was characterized by a high degree of geographical and disciplinary diversity. In total, experts represented 13 countries from the Global South and North with different political, media, and economic environments, namely Australia, Austria, Belgium, Brazil, China, Colombia, Germany, Hong Kong, the Netherlands, Switzerland, Taiwan, the United Kingdom, and the United States. The scholars included full, associate, and assistant professors, as well as early-career researchers, from the fields of communication science, cultural and media studies, history, information science, philosophy, political science, and psychology; practitioners were journalists, fact-checkers, professional communicators from think tanks and government agencies, activists, and educators. Of the 47 experts, only 25.5 % had previously collaborated with others from the panel, which points to the diversity of viewpoints resulting from their different backgrounds and perspectives. Regarding their expertise, most participants reported high levels of experience and knowledge about the topic under investigation (see Appendix B Figure B1).

4 Findings

The experts’ assessment of current challenges (RQ1), problematic trends (RQ2), and intervention strategies (RQ3) revealed a strong consensus and spanned a diverse range of topics across ten thematic areas: the governance of platforms, governance by platforms, platform design, journalism and news media ecosystems, research and science communication ecosystems, societal dynamics, socio-political institutions, individual behavior, prebunking and literacy, and debunking and fact-checking. The following sections discuss challenges, trends, and interventions together for each thematic area (see Table 1 for an overview), focusing primarily on the assessment provided by the full panel of experts during the first two waves; strategies that emerged during the focus group discussions among the experts from the DACH region are presented only selectively to complement the findings.

Before doing so, however, it is important to highlight three overarching findings that fundamentally frame the rest of the results. First, when asked to define the terms “conspiracy theory” and “misinformation”, experts emphasized that the conceptual core and boundaries of both are “very slippery” (E43) and that attempts to systematize existing terminology are “a mess” (E30). Others suggested replacing the term “conspiracy theory” with more value-neutral terms, such as “conspiracy myth” (E46), and noted that “misinformation” is often defined too broadly to be applied effectively. Experts also recognized that the two phenomena are often intertwined, which may explain why most made little distinction between them in terms of specific challenges, trends, and interventions, as the following quote illustrates: “I regard their challenges for society as very similar and not easy to separate” (E38). This may indicate that ongoing debates over defining various forms of deceptive communication and developing narrow typologies may divert attention from addressing more significant research priorities (Weeks and Gil de Zúñiga, 2021). Second, while some experts noted that national factors such as political or media systems might affect the prevalence of and adherence to conspiracy theories and misinformation, most made little distinction between geographic regions when evaluating challenges, trends, or interventions. Third, some experts disagreed on the severity of the issue. For instance, while one participant emphasized that “we are witnessing a veritable conspiracy theory panic” (E23), another argued that “we still underestimate how dangerous this development is and are not taking adequate action” (E34). When asked how the current situation regarding conspiracy theories and misinformation in digital media will develop, 47 % (n = 22) believed it will get worse, while only 11 % (n = 5) expected it to improve. The remaining 43 % (n = 20) thought it will stay the same, which aligns with the finding that several challenges society is currently facing will likely remain relevant in the future.
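The reported shares follow directly from the counts among the 47 first-wave experts. The short Python sketch below recomputes them; the category labels are our own shorthand, not the survey's answer wording. It also shows why the three rounded percentages add up to 101 rather than 100: each share is rounded to a whole number independently.

```python
def outlook_shares(counts: dict) -> dict:
    """Convert response counts into whole-number percentages of all respondents."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


# Counts as reported for the first wave (labels are illustrative shorthand)
shares = outlook_shares({"worse": 22, "same": 20, "better": 5})
print(shares)                 # {'worse': 47, 'same': 43, 'better': 11}
print(sum(shares.values()))   # 101, a rounding artifact (46.8 + 42.6 + 10.6 = 100)
```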

Governance of platforms

The vast majority of both scholars and practitioners expressed concern about the lack of effective regulations to curb conspiracy theories and misinformation on platforms – regulations that take into account regional and cultural differences while avoiding censorship. The experts emphasized that this is currently one of the greatest challenges. However, especially scholars from countries where democracy is threatened by populist parties, such as Brazil, warned that giving states too much regulatory power can be risky, as it could enable policymakers to strategically (de)legitimize certain views and “give governments a platform for their propaganda” (E11). Some experts also pointed out that “private-by-design platforms” (E17) such as WhatsApp pose a particular challenge for regulation – and for verifying its effectiveness – and stressed that this is likely to remain a challenge in the future.

To adequately address these challenges and trends, experts called for platforms to be “held accountable” for the content they host – “not through censorship, but through smart regulation that counters the anti-democratic actions of platform algorithms” (E45), as exemplified by the Digital Services Act in the European Union. Accordingly, a majority of experts considered evidence-based, democratic, and transparent forms of regulation a key intervention. In addition, some experts emphasized the need for governments to invest in “transnational cooperation”, as, so far, “rather anachronistic forms of social and political organizations are pitted against globally acting digital platforms” (E36). In the course of the focus group discussions, experts proposed that governmental organizations should establish alert systems to detect, expose, and label conspiracy theories and misinformation, and to coordinate (inter)national regulatory responses to their spread.

Governance by platforms

With respect to the rules platforms use to police the content and users they host, the vast majority of experts – scholars and practitioners alike – pointed to the lack of accountable, effective, and transparent content moderation practices that “balance freedom of speech with the need for regulation” (E44). According to the experts, this particularly applies to platforms with lax content moderation policies that espouse a “twisted version of free speech” (E17). The delicate balancing act between the need for regulation and the risk of censorship was seen as a key challenge. In addition, some experts warned about the lack of appropriate mechanisms for algorithmic or human detection of conspiracy theories and misinformation on platforms and expressed concern about embedding the governance of globally operating platforms and technology companies in widely varying national laws. Looking to the future, the majority of experts predicted that platforms’ adherence to “U.S.-specific definitions of ‘free speech’” (E16) is likely to remain one of the biggest challenges. Some scholars further warned about the continued lack of strict content moderation practices, which were seen as often conflicting with platforms’ business interests, the continued power of platforms to self-regulate, and the increasing use of strategies to circumvent content moderation, such as subcultural, coded language.

To improve platforms’ governance and their efforts to minimize the distribution of conspiracy theories and misinformation, the majority of experts called for the implementation of more effective strategies to moderate false or harmful content. During the workshop, the experts identified the establishment of an independent monitoring body as one of the most promising measures in this regard. Modeled on Meta’s Oversight Board, such a body would bring together stakeholders from different sectors of society to ensure that platforms communicate their moderation practices transparently. In addition, some experts highlighted that there is “an urgent need for more human content moderators” (E3). However, this would also require platforms to do a better job of safeguarding the moderators’ well-being. One scholar also warned that moderation practices, such as attaching fact-checking labels to content, “could draw more attention to the content than it already had (creating a Streisand effect)” (E17). Finally, during the focus group discussions, a fact-checker based in Switzerland, where the four national languages are spoken in many dialects, stressed the need for platforms to develop algorithmic detection that accounts for dialect-specific language.

Platform design

Most experts emphasized that a better understanding of “how platforms shape communication and user cultures” (E15) is critical to minimizing the spread of conspiracy theories and misinformation in digital media. In this sense, scholars and practitioners agreed that the key challenge related to the design of platforms is the negative impact of opaque algorithmic content curation practices on the quality of public debates, as they “often favour emotive and outrageous content” (E25). However, one scholar cautioned that “we need to make sure that we don’t give in to a moral panic about the power of social media (and its recommendation algorithms) to brainwash users” (E29). In addition, some experts pointed out that the connectivity of the platform ecosystem, in particular, facilitates the rapid and global spread of conspiracy theories and misinformation. Looking at potential problematic trends in the future, some experts – academics and journalists alike – expressed concern about the impact of highly convincing deep fakes, which are extremely difficult to detect and expose. In addition, “burner” accounts, i.e., temporary or anonymous online profiles created to hide a user’s real identity, were seen as a significant challenge for the future.

In response to these challenges and trends, the majority of experts suggested rethinking the technological design of platforms to improve the quality of information and the well-being of users. More specifically, recalibrating algorithms to crack down on the spread of conspiracy theories and misinformation was seen as a key intervention. This could help to “interrupt escalating rabbit holes” (E44). Some experts suggested creating “human-in-the-loop artificial intelligence that is sensitive to content” (E12), while others called for platforms to allow independent auditing of user experience and algorithmic curation. During the focus group discussions, experts emphasized that platforms need to design, test, and implement various forms of friction to reduce the spread of false or harmful content.

Journalism and news media ecosystems

According to a majority of experts, the most significant current and future challenge related to journalism is posed by clickbait business models that “prioritize clicks over quality” (E1). In addition, some experts warned against journalists’ “problematic persistence of a disinterested, ‘objective’ stance even in the face of fundamental struggles to retain a democratic system” (E17), shrinking resources for science journalism, the blurring of boundaries between information and fiction in commercial news media, and the impact of highly fragmented news environments with multiple channels and a perceived information overload. The latter, together with the increasing influence of citizen journalism, crowdsourcing, and content farms – also referred to as the “Uberization” of journalism (E17) – that do not adhere to professional journalistic standards, were highlighted as major challenges for the future.

According to a majority of experts, key interventions to address these challenges include disincentivizing clickbait journalism while improving journalistic standards through critical reporting of false or harmful content, weight-of-evidence coverage, and transparency of sources. As one scholar added, this should be achieved “both from within the profession and through the creation of better regulations against poor journalism that actively damages society” (E23). In addition, most of the journalists on the panel suggested that quality newspapers and public broadcasters need to increase their activities on social media to disseminate trustworthy information as an antidote to conspiracy theories and misinformation. Finally, during the focus group discussions, scholars and practitioners emphasized that journalists, along with academics, professional communicators, policymakers, and others, need to build knowledge-sharing networks (e.g., modelled on The Conversation) to keep pace with the rapid and global spread of deceptive content.

Research and science communication ecosystems

Challenges related to science and research were raised mainly by the scholars on the panel. A majority of them stressed that limited access to platform data and the lack of effective tools to track the flow of conspiracy theories and misinformation in digital media are the main challenges. In addition, experts identified a number of research and knowledge gaps, including the lack of research on cross-national and cross-cultural differences, the long-term effects of interventions, and the limited understanding of the language and culture of conspiracy theories. Others cautioned that research has disproportionately focused on the amplification of extreme content through recommender systems, ignoring that deceptive discourses proliferate on platforms because there is a demand for them among users, and platform affordances allow that demand to be met. Some scholars also pointed to epistemological challenges, such as the “problem of deciding whether something is/can be true” (E43). Finally, some scholars noted that the study of closed or semi-public platforms poses both methodological and ethical challenges. Looking ahead, more than half of the experts considered the growing distrust in scientists, scientific institutions, and processes, alongside the rise of pseudoscience, to be the most important future challenge related to science and research.

A majority of scholars and practitioners agreed that transparent and targeted science communication, together with continuous exchange between researchers, practitioners, and the public, could be a key intervention for stemming the tide of deceptive communication in digital media. According to the expert group, the aim should be to foster an understanding of scientific processes and to communicate complex scientific findings in a comprehensible way tailored to specific target groups. As the workshop participants discussed, this could be achieved by building a “network of well-connected science communicators, expert groups, and civil society actors” (E31) across fields.

Societal dynamics

Societal challenges were identified at various levels. At the macro level, the vast majority of experts identified the risk of societal destabilization, polarization, low resilience, and social conditions that increase vulnerability, such as loss of control or inequality, as among the most pronounced challenges. Some also stressed that conspiracy theories and misinformation can undermine democratic systems and standards of deliberation. The most frequently cited future challenge for society was the rise of democracy-threatening trends such as populism and radicalization, which experts saw as both consequences and drivers of conspiracy theories and misinformation. Beyond this, some experts expressed concern about a growing “epistemic divide” (E43) and “intensified battles over truth” (E40) in society.

According to a majority of experts, key interventions to address the deeper social roots of conspiracy theories and misinformation include resolving the long-standing cultural, social, and political problems that underlie their popularity and help explain why people adhere to them. Against this backdrop, one expert argued that “the undermining of deliberative standards, which is the formal glue that keeps society together” (E4) must be overcome.

Socio-political institutions

At the meso level, a majority of experts identified the erosion of trust in socio-political institutions (e.g., scientific institutions, political parties, media organizations) and their representatives (e.g., scientists, politicians, journalists) as the key societal challenge of both the present and the future. In addition, some experts, particularly scholars from countries where national politics are dominated by populist or authoritarian parties (e.g., Brazil, China, Italy), warned of the increasing instrumentalization of conspiracy theories and misinformation by authoritarian politicians to censor public opinion and persecute dissenters: “In many Asian countries, the government is the one that often tries to benefit from political conspiracy theories and misinformation (e.g., China, Cambodia, India, Myanmar, the Philippines, Vietnam) while accusing the opposition of making false statements” (E18). Experts also pointed to the negative consequences of granting powerful organizations or individuals the authority to determine the veracity of information.

To address current challenges and future trends at the meso level of society, a majority of scholars and practitioners suggested evidence-based, transparent, and targeted communication as a key intervention. Experts argued that the forces undermining public trust in institutions need to be tackled, and that rebuilding trust could ultimately help address the root causes of conspiracy theories and misinformation. Moreover, some experts argued that it is necessary to “rethink the whole political culture and political communication in terms of which communication styles should be accepted and used in society – and which should be rejected” (E25).

Individual behavior

At the micro level, the expert group expressed concern about a broad spectrum of challenges related to the impact of conspiracy theories and misinformation on individuals, emphasizing the multifaceted nature of these challenges rather than a single central problem. Challenges identified by most of the experts included the contribution of deceptive communication to radicalization and extremism, its impact on users’ offline behavior, the lack of effective strategies to immunize and empower citizens, and the lack of “support structures for dropouts to reintegrate them into society” (E8). Looking to the future, scholars and practitioners again pointed to several issues, including rising hatred against minorities and vulnerable populations, the transformation of “small, scattered groups into large, radical movements that threaten democratic systems” (E24), especially in authoritarian regimes and highly polarized societies, and a growing apathy and tendency toward “ill-informed cynicism and nihilism” (E18).

In order to effectively address these challenges and trends, experts at the workshop emphasized the need to “hold platform users accountable” (E44) for their actions. Accordingly, communication on digital platforms should not be perceived as taking place in a legal vacuum. To this end, experts considered it necessary to define what forms of communication are acceptable and justifiable. In addition, some experts stressed the need to establish counseling programs for individuals with strong conspiracy beliefs, while at the same time providing effective support for their families and friends. Others pointed to the importance of actively engaging with people who adhere to conspiracy theories or spread misinformation, rather than pathologizing their beliefs, worldviews, and motivational underpinnings: “Getting these people back should be the goal but this won’t happen by just debunking stuff and telling them how wrong they are” (E9).

Prebunking and literacy

The experts also identified several challenges with respect to already well-established interventions. Regarding strategies employed before people are exposed to deceptive content, the majority of scholars and practitioners emphasized that the lack of initiatives to strengthen people’s digital literacy, news (media) literacy, and critical thinking skills, and the lack of resources to fund such programs, are key challenges. At the same time, some scholars warned that “media literacy concepts are twisted, exploited, and used as a weapon by non-partisan fringe groups to make their followers immediately distrust the ‘established’ media and other social actors” (E17). No specific problematic trends were mentioned for this thematic area.

To address the identified lack of initiatives and resources to improve people’s digital media literacy, news (media) literacy, and critical thinking skills, a majority of scholars and practitioners suggested integrating these skills into school curricula and teacher training, but also targeting adults with appropriate programs, for example, through courses offered at community colleges. This was seen as the most important intervention in the context of prebunking and literacy measures. In addition, professional communicators should be trained in these skills so that they can serve as role models. This requires financial resources and the commitment of civil society actors and, above all, awareness of the need for and added value of improved literacy skills. Some experts, however, cautioned that these interventions assume that people support conspiracy theories or spread misinformation because they lack critical thinking or other skills. This assumption is problematic because supporters of conspiracy theories often encourage others to construct knowledge from the bottom up, and people’s motivations for spreading misinformation are varied.

Debunking and fact-checking

Regarding the challenges associated with debunking and fact-checking strategies, journalists and fact-checkers, in particular, emphasized that limited resources and low return on investment for fact-checking initiatives are key challenges. Some experts – practitioners and scholars alike – emphasized that debunking messages often do not reach their intended audiences, as conspiracy theories or misinformation are disseminated through (semi-)public or private platforms, while evidence refuting false information is either distributed through other channels or inaccessible due to paywalls. In addition, some experts warned that actors who compose debunking messages often lack subcultural knowledge. No particularly problematic trends were anticipated for this thematic area.

According to some experts, these challenges could be effectively addressed partly through long-term funding of initiatives and programs, but more importantly by training various actors in established fact-checking methods. A majority of journalists also emphasized the need to raise awareness, especially within media organizations, that fact-checking is a core component of journalistic practice. During the workshop discussion, it was also noted that there is a need for more independent fact-checking organizations, such as PolitiFact (in the United States) or Correctiv (in Germany).

Table 1. Overview of challenges, trends, and interventions.

| Thematic area | Current challenges | Problematic trends | Intervention strategies |
|---|---|---|---|
| Governance of platforms | • lack of effective regulation that takes into account national specificities but avoids censorship (*) • monitoring of (semi-)closed platforms | | • evidence-based, transparent regulation (*) • transnational cooperation • alert systems |
| Governance by platforms | • balance between freedom of expression and regulation (*) • lack of sufficient detection mechanisms • orientation to specific national laws and regulations | • balance between moderation and business interests • platform self-regulation • circumvention of content moderation | • independent monitoring board (*) • (well-being of) human moderators • vernacular detection |
| Platform design | • opaque algorithmic curation (*) • cross-platform distribution | • deep fakes • ‘burner’ accounts | • algorithm recalibration (*) • user experience and algorithm audit • friction and nudges |
| Journalism and news media ecosystems | • clickbait journalism (*) • ‘objective’ attitudes toward harmful content • shrinking resources • blurred lines between information and fiction • fragmented news environments | • ‘Uberization’ of journalism | • disincentivizing clickbait (*) • critical reporting, weight-of-evidence, source transparency (*) • strengthening professional journalism • building communication networks |
| Research and science communication ecosystems | • limited access to platform data (*) • lack of sufficient detection tools (*) • research and knowledge gaps • study of (semi-)closed platforms | • distrust of science (*) • amplification of pseudoscience | • strengthening science communication and dialog with stakeholders and the public (*) • building networks of science communicators |
| Societal dynamics | • polarization, destabilization, low resilience, erosion of deliberative norms (*) | • democracy-threatening developments (*) • epistemic divides | • resolving long-standing political, economic, cultural problems (*) • restoring common ground in society |
| Socio-political institutions | • erosion of trust in socio-political institutions (*) • instrumentalization by political opponents | | • evidence-based, transparent, targeted communication (*) |
| Individual behavior | • radicalization and extremism • lack of strategies to empower citizens • lack of support for dropouts | • hatred of minorities • transformation of scattered groups into radical movements • uninformed cynicism and nihilism | • user accountability • reintegration of dropouts • support structures for family and friends |
| Prebunking and literacy | • lack of funding and programs to improve people’s literacy (*) | | • funding and delivery of literacy programs for various segments of society (*) |
| Debunking and fact-checking | • lack of resources and low profitability for fact-checking (*) • difficulty in reaching target audience • lack of skills to create debunking or fact-checking messages | | • funding fact-checking initiatives • raising awareness of the need for fact-checking • independent, certified fact-checking organizations • changing formats of fact-checking |

Note. (*) indicates key challenges, trends, or interventions that the majority of experts identified as particularly important; arrows indicate that current challenges were also identified as problematic trends.

Overall, when evaluating the intervention strategies developed to curb the spread and harmful effects of conspiracy theories and misinformation online, experts stressed that “there exists no silver bullet to resolve these issues” (E47) and that conspiracy theories and misinformation “can only be countered through the interaction of different actors at a national and international level” (E14). This perspective is reflected in the experts’ ratings: most measures were deemed moderately important, with minimal variation between them (means ranging from 3.04 to 3.50 on a 4-point scale; see Table 2).

Table 2. Importance of interventions.

| Importance of interventions regarding … | M | SD |
|---|---|---|
| governance of platforms | 3.50 | 0.71 |
| journalism and news media ecosystems | 3.50 | 0.76 |
| societal dynamics | 3.38 | 1.10 |
| socio-political institutions | 3.19 | 1.02 |
| individual behavior | 3.19 | 0.85 |
| governance by platforms | 3.19 | 0.94 |
| platform design | 3.19 | 0.94 |
| prebunking and literacy | 3.15 | 0.92 |
| research and science communication ecosystem | 3.12 | 0.83 |
| debunking and fact-checking | 3.04 | 0.87 |

Note. N = 26 experts; importance was measured on a 4-point Likert scale (1 = “not important” to 4 = “very important”).

5 The road ahead: Empirical and methodological reflections

In recent years, debates about the challenges posed by conspiracy theories and misinformation in digital media – and interventions to address them – have intensified (Altay, Berriche, Heuer, et al., 2023; Lazić and Žeželj, 2021; Ziemer and Rothmund, 2024). However, much of the existing research focuses disproportionately on specific issues, stakeholders, and strategies at the individual level, potentially overlooking dynamics that cut across issues, domains, and societal levels. Addressing this requires integrative, multi-perspectival impact assessments that bridge academic and disciplinary boundaries. This is where our study makes its contribution, building on the strengths of the Delphi method: In three iterative rounds, a panel of 47 experts from different disciplines, fields of expertise, and geographic regions identified current challenges associated with conspiracy theories and misinformation (RQ1), anticipated problematic trends (RQ2), and developed intervention strategies (RQ3).

Empirical reflections

The experts’ assessment of challenges, trends, and interventions was characterized by a high degree of consensus and covered a broad thematic spectrum, linking the fields of civil society, journalism, platforms, politics, and science while considering aspects at the macro, meso, and micro levels. First, the results of our Delphi study are consistent with previous diagnoses of contemporary challenges, such as opaque algorithmic curation (Southwell et al., 2019) or growing distrust in science (Lee et al., 2024), as well as with proposed interventions, such as digital media literacy (Guess et al., 2020). Beyond this, the Delphi method allows for a meta-perspective that complements existing reviews (Ziemer and Rothmund, 2024) and guidelines (Lewandowsky et al., 2020) by highlighting underexplored aspects. These include user strategies to circumvent content moderation, the “Uberization” of journalism, the shift from misinformed citizens to uninformed cynics and nihilists, and the critical need to better understand the demand for deceptive content. Because effective responses to such multifaceted challenges are likely to emerge only over the long term, the expert panel expects many of the identified challenges to remain relevant in the future.

Second, the experts recommended targeted interventions such as establishing an independent monitoring board, recalibrating algorithms, discouraging clickbait journalism, and promoting evidence-based, transparent, and audience-specific communication. Among the proposed strategies, those focusing on the governance of platforms, journalism practices, and the broader news media ecosystem were deemed slightly more important than approaches such as prebunking, literacy initiatives, debunking, and fact-checking. This is consistent with recent evidence suggesting that prebunking (Roozenbeek and van der Linden, 2019), media literacy (Guess et al., 2020), and fact-checking (Walter et al., 2020) can reduce misperceptions, but that the effects are rather small and often short-lived. It is also important to recognize that individuals who subscribe to conspiracy theories or spread misinformation often harbor deep distrust of institutional authorities, such as journalists or scientists, making them less receptive to counterarguments from these sources. In this context, Altay (2022) advocates promoting reliable information and mitigating the partisan animosity that fuels the consumption of deceptive content. Overall, the expert panel did not consider any individual measure to be a panacea, favoring instead a combination of measures aligned with the key contemporary and future challenges.

Methodological reflections

To advance expert-driven approaches to anticipatory impact assessment, such as the Delphi method, two key aspects require critical consideration. The first is the selection of experts, which forms the foundation of any Delphi study. Unlike approaches aimed at representativeness, the goal here is to assemble a diverse expert panel that spans a wide disciplinary and thematic spectrum (Linstone and Turoff, 1975). This diversity is critical to the robustness and validity of the findings but depends heavily on effective recruitment strategies and the willingness of experts to participate. Given the time-intensive nature of Delphi studies, high attrition rates are a common challenge. In this study, scholars specializing in the social, psychological, political, regulatory, and technological dimensions of the field were overrepresented. In contrast, we were unable to recruit participants from key stakeholder groups such as politicians, lawyers, or representatives of technology companies. Consequently, the findings may not fully capture insights from these perspectives. Additionally, the panel comprised experts from 13 countries, primarily in Europe and Asia, which may limit the generalizability of our findings. At the same time, while diversity within expert panels enhances the depth of analysis, it also poses challenges. Integrating diverse or even conflicting viewpoints – whether across international and regional contexts, between academia and industry, or among different scientific disciplines – can complicate the aggregation and interpretation of responses.

The second aspect relates to the research design. The present study combined elements of the Classic and Group Delphi, with two anonymous online surveys followed by focus group discussions. While this approach offered several advantages, it also presented some challenges. The anonymous online surveys facilitated broad international participation and allowed experts to share their individual assessments freely. However, this anonymity may have made participants feel less responsible for their judgments and less inclined to reflect critically on their statements. The iterative feedback rounds were particularly beneficial, not because they led to significant shifts in the experts’ initial views, but because they enriched and refined these views by exposing participants to new insights from their peers’ assessments. The Group Delphi component enabled deeper discussions and more nuanced deliberation among experts. Yet its focus on a smaller group and a specific regional context introduced the risk of excluding valuable perspectives. Furthermore, the study’s emphasis on anticipating problematic trends added a further layer of complexity. Predicting developments in a highly dynamic and multifaceted field over a five- to ten-year horizon is inherently challenging, even for experts who have been observing the field for years. This is evidenced by the fact that the rise of generative artificial intelligence and its potential impact on the proliferation of deceptive communication was (perhaps unsurprisingly) not anticipated (for a critical discussion of AI’s potential implications for misinformation, see Simon et al., 2023; for reflections on the impact of AI on the broader information ecosystem, see Kessler et al., 2025).

In conclusion, curbing the spread of conspiracy theories and misinformation in digital media remains a complex global challenge. While our Delphi study offers a comprehensive set of measures, further research is essential to identify effective combinations of individual strategies, for instance by using complementary methods such as computational modeling approaches (Bak-Coleman et al., 2022), and to foster collaboration among stakeholders to transform these measures into actionable, context-sensitive guidelines. Ultimately, sustainable solutions to global challenges require long-term commitment and collective effort. The rapid advancement of generative artificial intelligence presents new challenges as well as opportunities, underscoring the need to continually adapt mitigation strategies to evolving technological landscapes.

Acknowledgements

We would like to thank the members of the Delphi expert panel for their valuable time and insightful contributions (listed alphabetically; 12 experts chose to remain anonymous): Afonso de Albuquerque, Alexandre Bovet, Axel Bruns, Michael Butter, Niki Cheong, M R. X. Dentith, Karen Douglas, Nadine Andrea Felber, Lena Frischlich, Sebastian Galyga, Javier C. Guerrero, Jaron Harambam, Annett Heft, Amélie Heldt, Lukas Hess, Edward Hurcombe, Roland Imhoff, Masato Kajimoto, Bernd Kerschner, Peter Knight, Marko Ković, Steffen Kutzner, Stephan Lewandowsky, Corine S. Meppelink, Franziska Oehmer-Pedrazzi, Thaiane Moreira de Oliveira, Tobias Rothmund, Sara Rubinelli, Kai Sassenberg, Tim Schatto-Eckrodt, Philipp Schmid, Daniel Vogler, Kevin Winter, Carolin-Theresa Ziemer, and Katrin Zöfel. We also extend our gratitude to Birte Fähnrich for her valuable support in the design of the study.

Funding: This work was supported by the Swiss National Science Foundation (SNSF) as part of the project “Science-related conspiracy theories online: Mapping their characteristics, prevalence and distribution internationally and developing contextualized counter-strategies” (Grant: IZBRZ1_186296) and the Swiss Young Academy (SYA) as part of the project “What can we learn from COVID-19 fake news about the spread of scientific misinformation in general?”

References

Aghajari, Z., Baumer, E. P. S., & DiFranzo, D. (2023). Reviewing interventions to address misinformation: The need to expand our vision beyond an individualistic focus. Proceedings of the ACM on Human-Computer Interaction, 7(CSCW1), 1–34. https://doi.org/10.1145/3579520

Al-Rawi, A., & Fakida, A. (2023). The methodological challenges of studying “fake news.” Journalism Practice, 17(6), 1178–1197. https://doi.org/10.1080/17512786.2021.1981147

Altay, S. (2022). How effective are interventions against misinformation? PsyArXiv. https://doi.org/10.31234/osf.io/sm3vk

Altay, S., Berriche, M., & Acerbi, A. (2023). Misinformation on misinformation: Conceptual and methodological challenges. Social Media + Society, 9(1), 1–13. https://doi.org/10.1177/20563051221150412

Altay, S., Berriche, M., Heuer, H., Farkas, J., & Rathje, S. (2023). A survey of expert views on misinformation: Definitions, determinants, solutions, and future of the field. Harvard Kennedy School Misinformation Review. Advance online publication. https://doi.org/10.37016/mr-2020-119

Anderson, J., & Rainie, L. (2017). The future of truth and misinformation online. Pew Research Center. https://www.pewresearch.org/internet/2017/10/19/the-future-of-truth-and-misinformation-online/

Appelman, N., Dreyer, S., Bidare, P. M., & Potthast, K. C. (2022). Truth, intention and harm: Conceptual challenges for disinformation-targeted governance. Internet Policy Review. https://policyreview.info/articles/news/truth-intention-and-harm-conceptual-challenges-disinformation-targeted-governance/1668

Bak-Coleman, J. B., Kennedy, I., Wack, M., Beers, A., Schafer, J. S., Spiro, E. S., Starbird, K., & West, J. D. (2022). Combining interventions to reduce the spread of viral misinformation. Nature Human Behaviour, 6(10), 1372–1380. https://doi.org/10.1038/s41562-022-01388-6

Bierwiaczonek, K., Gundersen, A. B., & Kunst, J. R. (2022). The role of conspiracy beliefs for COVID-19 health responses: A meta-analysis. Current Opinion in Psychology, 46, 101346. https://doi.org/10.1016/j.copsyc.2022.101346

Boulianne, S., & Humprecht, E. (2023). Perceived exposure to misinformation and trust in institutions in four countries before and during a pandemic. International Journal of Communication, 17(0), 24. https://ijoc.org/index.php/ijoc/article/view/20110

Chan, M.-P. S., Jones, C. R., Hall Jamieson, K., & Albarracín, D. (2017). Debunking: A meta-analysis of the psychological efficacy of messages countering misinformation. Psychological Science, 28(11), 1531–1546. https://doi.org/10.1177/0956797617714579

Ciampaglia, G. L., Mantzarlis, A., Maus, G., & Menczer, F. (2018). Research challenges of digital misinformation: Toward a trustworthy web. AI Magazine, 39(1), 65–74. https://doi.org/10.1609/aimag.v39i1.2783

Compton, J., van der Linden, S., Cook, J., & Basol, M. (2021). Inoculation theory in the post-truth era: Extant findings and new frontiers for contested science, misinformation, and conspiracy theories. Social and Personality Psychology Compass, 15(6), 1–16. https://doi.org/10.1111/spc3.12602

Diamond, I. R., Grant, R. C., Feldman, B. M., Pencharz, P. B., Ling, S. C., Moore, A. M., Wales, P, W. (2014). Defining consensus: a systematic review recommends methodologic criteria for reporting of Delphi studies. Journal of Clinical Epidemiology, 67(4), 401–409. https://doi.org/10.1016/j.jclinepi.2013.12.002.10.1016/j.jclinepi.2013.12.002Search in Google Scholar

Enders, A. M., Uscinski, J., Klofstad, C., & Stoler, J. (2022). On the relationship between conspiracy theory beliefs, misinformation, and vaccine hesitancy. PLOS ONE, 17(10), e0276082. https://doi.org/10.1371/journal.pone.027608210.1371/journal.pone.0276082Search in Google Scholar

European Commission. (2022). Digital Services Act. https://ec.europa.eu/info/strategy/priorities-2019-2024/europe-fit-digital-age/digital-services-act-ensuring-safe-and-accountable-online-environment_en#what-are-the-key-goals-of-the-digital-services-actSearch in Google Scholar

Fähnrich, B., Weitkamp, E., & Kupper, J. F. (2023). Exploring ‘quality’ in science communication online: Expert thoughts on how to assess and promote science communication quality in digital media contexts. Public Understanding of Science, 32(5), 605–621. https://doi.org/10.1177/0963662522114805410.1177/09636625221148054Search in Google Scholar

Fecher, B., Hebing, M., Laufer, M., Pohle, J., & Sofsky, F. (2023). Friend or foe? Exploring the implications of large language models on the science system. AI & SOCIETY, Online First, 1–13. https://doi.org/10.1007/s00146-023-01791-110.1007/s00146-023-01791-1Search in Google Scholar

Fernandez, M., & Alani, H. (2018). Online misinformation: Challenges and future directions. WWW ‘18: Companion Proceedings of the Web Conference 2018, 595–602. https://doi.org/10.1145/3184558.318873010.1145/3184558.3188730Search in Google Scholar

Gillespie, T. (2018). Regulation of and by platforms. In J. Burgess, A. E. Marwick, & T. Poell (Eds.), The Sage handbook of social media (pp. 254–278). Sage. https://doi.org/10.4135/9781473984066.n1510.4135/9781473984066.n15Search in Google Scholar

Gillespie, T. (2022). Do not recommend? Reduction as a form of content moderation. Social Media + Society, 8(3), 1–13. https://doi.org/10.1177/2056305122111755210.1177/20563051221117552Search in Google Scholar

Guess, A., Lerner, M., Lyons, B., Montgomery, J. M., Nyhan, B., Reifler, J., & Sircar, N. (2020). A digital media literacy intervention increases discernment between mainstream and false news in the United States and India. Proceedings of the National Academy of Sciences, 117(27), 15536–15545. https://doi.org/10.1073/pnas.192049811710.1073/pnas.1920498117Search in Google Scholar

Imhoff, R., Dieterle, L., & Lamberty, P. (2021). Resolving the puzzle of conspiracy worldview and political activism: Belief in secret plots decreases normative but increases nonnormative political engagement. Social Psychological and Personality Science, 12(1), 71–79. https://doi.org/10.1177/194855061989649110.1177/1948550619896491Search in Google Scholar

Jolley, D., Meleady, R., & Douglas, K. M. (2020). Exposure to intergroup conspiracy theories promotes prejudice which spreads across groups. British Journal of Psychology, 111(1), 17–35. https://doi.org/10.1111/bjop.1238510.1111/bjop.12385Search in Google Scholar

Kessler, S. H., Mahl, D., Schäfer, M. S., & Volk, S. C. (2025). Science communication in the age of artificial intelligence. Journal of Science Communication, 24(2), 1–8. https://jcom.sissa.it/article/pubid/JCOM_2402_2025_E/10.22323/2.24020501Search in Google Scholar

Lantian, A., Wood, M., & Gjoneska, B. (2020). Personality traits, cognitive styles and worldviews associated with beliefs in conspiracy theories. In M. Butter & P. Knight (Eds.), Routledge Handbook of conspiracy theories (pp. 155–167). Routledge.10.4324/9780429452734-2_1Search in Google Scholar

Lazić, A., & Žeželj, I. (2021). A systematic review of narrative interventions: Lessons for countering anti-vaccination conspiracy theories and misinformation. Public Understanding of Science (Bristol, England), 30(6), 644–670. https://doi.org/10.1177/0963662521101188110.1177/09636625211011881Search in Google Scholar

Lee, S., & Jones-Jang, S. M. (2024). Cynical nonpartisans: The role of misinformation in political cynicism during the 2020 U. S. Presidential Election. New Media & Society, 26(7), 4255–4276. https://doi.org/10.1177/1461444822111603610.1177/14614448221116036Search in Google Scholar

Lee, S., Jones-Jang, S. M., Chung, M., Lee, E. W. J., & Diehl, T. (2024). Examining the role of distrust in science and social media use: Effects on susceptibility to COVID Misperceptions with panel data. Mass Communication and Society, 27(4), 653–678. https://doi.org/10.1080/15205436.2023.226805310.1080/15205436.2023.2268053Search in Google Scholar

Lewandowsky, S., Cook, J., Ecker, U., Albarracín, D., Amazeen, M. A., Kendeou, P., Lombardi, D., Newman, E. J., Pennycook, G., Porter, E., Rand, D. G., Rapp, D. N., Reifler, J., Roozenbeek, J., Schmid, P., Seifert, C. M., Sinatra, G. M., Swire-Thompson, B., van der Linden, S., Vraga, E. K., Wood, T. J. & Zaragoza, M. S. (2020). The debunking handbook 2020. https://sks.to/db2020Search in Google Scholar

Linstone, H. A., & Turoff, M. (1975). The Delphi method: Techniques and applications. Addison Wesley Publishing Company.Search in Google Scholar

Mahl, D., Schäfer, M. S., & Zeng, J. (2022). Conspiracy theories in digital media environments: An interdisciplinary literature review and agenda for future research. New Media & Society, 25(7), 1501–1823. https://doi.org/10.1177/14614448221075759

Martel, C., Pennycook, G., & Rand, D. G. (2020). Reliance on emotion promotes belief in fake news. Cognitive Research: Principles and Implications, 5(1), 47. https://doi.org/10.1186/s41235-020-00252-3

Matthews, L. J., Nowak, S. A., Gidengil, C. C., Chen, C., Stubbersfield, J. M., Tehrani, J. J., & Parker, A. M. (2022). Belief correlations with parental vaccine hesitancy: Results from a national survey. American Anthropologist, 124(2), 291–306. https://doi.org/10.1111/aman.13714

Newman, N., Fletcher, R., Robertson, C. T., Eddy, K., & Nielsen, R. K. (2022). Reuters Institute Digital News Report 2022. Reuters Institute. https://reutersinstitute.politics.ox.ac.uk/digital-news-report/2022

Ng, K. C., Tang, J., & Lee, D. (2021). The effect of platform intervention policies on fake news dissemination and survival: An empirical examination. Journal of Management Information Systems, 38(4), 898–930. https://doi.org/10.1080/07421222.2021.1990612

Noy, C. (2008). Sampling knowledge: The hermeneutics of snowball sampling in qualitative research. International Journal of Social Research Methodology, 11(4), 327–344. https://doi.org/10.1080/13645570701401305

Ognyanova, K., Lazer, D., Robertson, R. E., & Wilson, C. (2020). Misinformation in action: Fake news exposure is linked to lower trust in media, higher trust in government when your side is in power. Harvard Kennedy School Misinformation Review, 1(4), 1–19. https://doi.org/10.37016/mr-2020-024

Osborne, J., & Pimentel, D. (2022). Science, misinformation, and the role of education. Science, 378(6617), 246–248. https://doi.org/10.1126/science.abq8093

Pennycook, G., McPhetres, J., Zhang, Y., Lu, J. G., & Rand, D. G. (2020). Fighting COVID-19 misinformation on social media: Experimental evidence for a scalable accuracy-nudge intervention. Psychological Science, 31(7), 770–780. https://doi.org/10.1177/0956797620939054

Pennycook, G., & Rand, D. G. (2021). The psychology of fake news. Trends in Cognitive Sciences, 25(5), 388–402. https://doi.org/10.1016/j.tics.2021.02.007

Pigden, C. R. (2006). Complots of mischief. In D. Coady (Ed.), Conspiracy theories: The philosophical debate (pp. 1–33). Routledge.

Roozenbeek, J., & van der Linden, S. (2019). The fake news game: Actively inoculating against the risk of misinformation. Journal of Risk Research, 22(5), 570–580. https://doi.org/10.1080/13669877.2018.1443491

Rottweiler, B., & Gill, P. (2022). Conspiracy beliefs and violent extremist intentions: The contingent effects of self-efficacy, self-control and law-related morality. Terrorism and Political Violence, 34(7), 1485–1504. https://doi.org/10.1080/09546553.2020.1803288

Schreier, M. (2014). Ways of doing qualitative content analysis: Disentangling terms and terminologies. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 15(1). https://doi.org/10.17169/fqs-15.1.2043

Simon, F. M., Altay, S., & Mercier, H. (2023). Misinformation reloaded? Fears about the impact of generative AI on misinformation are overblown. Harvard Kennedy School Misinformation Review, 4(5), 1–11. https://doi.org/10.37016/mr-2020-127

Southwell, B. G., Niederdeppe, J., Cappella, J. N., Gaysynsky, A., Kelley, D. E., Oh, A., Peterson, E. B., & Chou, W.-Y. S. (2019). Misinformation as a misunderstood challenge to public health. American Journal of Preventive Medicine, 57(2), 282–285. https://doi.org/10.1016/j.amepre.2019.03.009

Suri, H. (2011). Purposeful sampling in qualitative research synthesis. Qualitative Research Journal, 11(2), 63–75. https://doi.org/10.3316/QRJ1102063

Swire-Thompson, B., & Lazer, D. (2020). Public health and online misinformation: Challenges and recommendations. Annual Review of Public Health, 41, 433–451. https://doi.org/10.1146/annurev-publhealth-040119-094127

Tandoc, E. C., Lee, J. C. B., Lee, S., & Quek, P. J. (2024). Does length matter? The impact of fact-check length in reducing COVID-19 vaccine misinformation. Mass Communication and Society, 27(4), 679–709. https://doi.org/10.1080/15205436.2022.2155195

Uscinski, J. E., Enders, A. M., Klofstad, C., Seelig, M., Drochon, H., Premaratne, K., & Murthi, M. (2022). Have beliefs in conspiracy theories increased over time? PLOS ONE, 17(7), 1–19. https://doi.org/10.1371/journal.pone.0270429

Uscinski, J. E., Enders, A. M., Klofstad, C., & Stoler, J. (2022). Cause and effect: On the antecedents and consequences of conspiracy theory beliefs. Current Opinion in Psychology, 47, 101364. https://doi.org/10.1016/j.copsyc.2022.101364

van Bavel, J. J., Harris, E. A., Pärnamets, P., Rathje, S., Doell, K. C., & Tucker, J. A. (2021). Political psychology in the digital (mis)information age: A model of news belief and sharing. Social Issues and Policy Review, 15(1), 84–113. https://doi.org/10.1111/sipr.12077

van der Linden, S. (2022). Misinformation: Susceptibility, spread, and interventions to immunize the public. Nature Medicine, 28(3), 460–467. https://doi.org/10.1038/s41591-022-01713-6

Vraga, E. K., & Bode, L. (2020). Defining misinformation and understanding its bounded nature: Using expertise and evidence for describing misinformation. Political Communication, 37(1), 136–144. https://doi.org/10.1080/10584609.2020.1716500

Walter, N., Cohen, J., Holbert, R. L., & Morag, Y. (2020). Fact-checking: A meta-analysis of what works and for whom. Political Communication, 37(3), 350–375. https://doi.org/10.1080/10584609.2019.1668894

Wang, Y., McKee, M., Torbica, A., & Stuckler, D. (2019). Systematic literature review on the spread of health-related misinformation on social media. Social Science & Medicine, 240, 112552. https://doi.org/10.1016/j.socscimed.2019.112552

Wardle, C., & Derakhshan, H. (2017). Information disorder: Toward an interdisciplinary framework for research and policy making (Report DGI(2017)09). Council of Europe. https://edoc.coe.int/en/media/7495-information-disorder-toward-an-interdisciplinary-framework-for-research-and-policy-making.html

Weeks, B. E., & Gil de Zúñiga, H. (2021). What's next? Six observations for the future of political misinformation research. American Behavioral Scientist, 65(2), 277–289. https://doi.org/10.1177/0002764219878236

Zeng, J., & Brennen, S. B. (2023). Misinformation. Internet Policy Review, 12(4), 1–20. https://doi.org/10.14763/2023.4.1725

Ziemer, C.-T., & Rothmund, T. (2024). Psychological underpinnings of misinformation countermeasures. Journal of Media Psychology, 36(6), 397–409. https://doi.org/10.1027/1864-1105/a000407

© 2025 by the authors, published by Walter de Gruyter GmbH, Berlin/Boston

This work is licensed under a Creative Commons Attribution 4.0 International License.