Abstract

Computer-based imaging and analysis techniques are frequently used for the diagnosis and treatment of retinal diseases. Although retinal images are of high resolution, the contrast of the retinal blood vessels is usually very close to that of the image background, which makes low-contrast vessels very difficult to detect. Therefore, developing algorithms that can successfully distinguish retinal blood vessels from the rest of the retinal image has become an important area of research. In this work, clustering based heuristic artificial bee colony, particle swarm optimization, differential evolution, teaching learning based optimization, grey wolf optimization, firefly and harmony search algorithms were applied for accurate segmentation of retinal vessels, and their performances were compared in terms of convergence speed, mean squared error, standard deviation, sensitivity, specificity, accuracy and precision. The simulation results show that the algorithms perform similarly in terms of convergence speed and mean squared error. The statistical analyses show that the algorithms behave stably and that the vessel and background pixels of the retinal image can be clustered successfully by the heuristic algorithms.

Introduction

Retinal vessel segmentation is one of the most important areas of retinal image analysis because some attributes of retinal vessels are important symptoms of diseases [1]. Due to the disadvantages of manual retinal image analysis, automatic analysis of retinal images has become an important area of research. Automated segmentation of retinal vessels is accepted as the first step in the development of computer-aided diagnosis systems for ophthalmic disorders [2]. The abnormalities caused by obesity [3], hypertension [4], glaucoma [5] and diabetic retinopathy [6], [7], [8] can be displayed with higher accuracy by means of automated segmentation of retinal vessels. It also plays an important role in areas such as evaluation of retinopathy of prematurity [9], vessel diameter measurement [10], fovea region detection [11], arteriolar stenosis [12], computer assisted laser surgery [13], treatment of ophthalmologic diseases [14], [15], [16] and optic disk detection [17].

Conventional algorithms are well developed for clustering based retinal image analysis. However, few works in the literature address retinal image analysis with heuristic algorithms. In this work, clustering based heuristic artificial bee colony (ABC), particle swarm optimization (PSO), differential evolution (DE), teaching learning based optimization (TLBO), grey wolf optimization (GWO), firefly (FA) and harmony search (HS) algorithms have been applied to retinal vessel segmentation. The retinal images used in the simulations are taken from the DRIVE and STARE databases. In order to perform a detailed analysis, simulations are carried out for both normal and abnormal retinal images. The normal and abnormal retinal images taken from the DRIVE and STARE databases are given in Figures 1 and 2, respectively.

Figure 1. Retinal images taken from the DRIVE database. (a) Normal retinal image; (b) Abnormal retinal image.

Figure 2. Retinal images taken from the STARE database. (a) Normal retinal image; (b) Abnormal retinal image.

In order to improve the performance of retinal vessel segmentation, some pre-processing operations have to be applied before clustering. The pre-processed retinal images are then evaluated by the algorithms. The pre-processing operations used in this work are described below.

Pre-processing operations

The retinal images analyzed in this work consist of red (R), green (G) and blue (B) color components. When each layer of these images was examined separately, the highest clustering performance was obtained in the green layer. The G layer has higher illuminance and also provides better contrast and brightness levels than the R and B layers. For these reasons, the image analysis was continued on the green layer after this step. This pre-processing operation is referred to as band selection.

As a result, after switching from a three-channel RGB image to a single-channel image by band selection, (i) the image becomes suitable for two-dimensional bottom-hat filtering, and (ii) the pixel differences between the image background and the vessels become slightly more evident.

Even after band selection, the contrast difference between the vessels and the image background was not sufficient for a high clustering performance. For this reason, the next step is to apply a second pre-processing operation called the bottom-hat transformation. This transformation subtracts the original image from its morphological closing. A bottom-hat filter therefore enhances dark details on a brighter background: the closing fills in dark structures that are narrower than the structuring element, so the difference is large exactly where such structures were. The filter increases the contrast between the brightness levels in the retinal image and, as a result, regions with different brightness levels can be distinguished successfully. Eq. (1) represents the bottom-hat transformation,

$$n = (g \bullet B) - g \qquad (1)$$

where g is the retinal image, B is the structuring element to be used (filter structure), $\bullet$ denotes morphological closing, and n is the bottom-hat transformation. In this work, a disk with a radius of r=8 pixels has been used as the structuring element and the bottom-hat transformation is applied with n=8.
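Band selection followed by bottom-hat filtering can be illustrated with a small, dependency-free Python sketch. It is only an illustration under stated assumptions: the helper names (`green_channel`, `disk_offsets`, `bottom_hat`) are hypothetical, the structuring element is a flat disk, neighbours outside the image are ignored, and a radius of 2 is used on a toy image instead of the 8-pixel radius reported above.

```python
def green_channel(rgb_image):
    """Band selection: keep only the G component of each (R, G, B) pixel."""
    return [[pixel[1] for pixel in row] for row in rgb_image]


def disk_offsets(radius):
    """(dy, dx) offsets covered by a flat, disk-shaped structuring element."""
    return [(dy, dx)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)
            if dy * dy + dx * dx <= radius * radius]


def _morph(image, offsets, pick):
    """Flat grayscale dilation (pick=max) or erosion (pick=min).

    Neighbours that fall outside the image are ignored (an assumption).
    """
    rows, cols = len(image), len(image[0])
    return [[pick(image[y + dy][x + dx]
                  for dy, dx in offsets
                  if 0 <= y + dy < rows and 0 <= x + dx < cols)
             for x in range(cols)]
            for y in range(rows)]


def bottom_hat(image, radius):
    """Morphological closing of the image minus the image itself."""
    offsets = disk_offsets(radius)
    closed = _morph(_morph(image, offsets, max), offsets, min)  # dilate, then erode
    return [[c - g for c, g in zip(closed_row, row)]
            for closed_row, row in zip(closed, image)]


# Toy 7x7 image: bright background with a dark, one-pixel-wide "vessel".
rgb = [[(90, 50 if x == 3 else 200, 30) for x in range(7)] for _ in range(7)]
green = green_channel(rgb)
enhanced = bottom_hat(green, radius=2)
print(enhanced[3])  # [0, 0, 0, 150, 0, 0, 0]
```

In the toy output the background becomes zero and only the dark vessel column keeps a large value, which is the contrast gain that the subsequent clustering relies on.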

The last pre-processing operation applied before clustering is brightness correction. During the acquisition of biomedical images, low contrast values or extreme pixel levels may occur due to the device or the environment. In the brightness correction process, these extreme pixel values, which act as noise on the retinal image, are rescaled by using the following equation so that a successful segmentation can be obtained.

In the equation given above, a and b represent the minimum and maximum pixel values of the image, respectively. Also, c and d represent the lower and upper limits of the pixel range to which the image is normalized and in which the noise effects are minimized. As a result of brightness correction, the contrast between the vessels and the image background becomes suitable for clustering.
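A minimal Python sketch of such a correction is given below. It assumes that the correction is a standard linear contrast stretch, which is consistent with the roles of a, b, c and d described above but is not confirmed as the authors' exact formula; the function name `brightness_correction` is hypothetical.

```python
def brightness_correction(image, c, d):
    """Linearly map pixel values from [a, b] to [c, d].

    a and b are the minimum and maximum pixel values of the image;
    c and d are the limits of the target range (assumed linear stretch).
    """
    a = min(min(row) for row in image)
    b = max(max(row) for row in image)
    if a == b:  # flat image: nothing to stretch
        return [[float(c)] * len(row) for row in image]
    scale = (d - c) / (b - a)
    return [[(pixel - a) * scale + c for pixel in row] for row in image]


image = [[50, 100], [150, 75]]
corrected = brightness_correction(image, c=0, d=200)
print(corrected)  # [[0.0, 100.0], [200.0, 50.0]]
```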

Figure 3 (b) and (e) show the green layer images obtained by band selection from the DRIVE retinal images given in Figure 1 (a) and (b). The enhanced retinal images obtained after the subsequent two pre-processing operations are shown in Figure 3 (c) and (f).

Figure 3. The enhanced retinal images obtained after pre-processing operations. (a) and (d) are the normal and abnormal retinal images, respectively, taken from the DRIVE database; (b) and (e) are the green layer images obtained as a result of band selection for the retinal images taken from the DRIVE database given in Figure 1 (a) and (b); (c) and (f) are the enhanced retinal images obtained after the bottom-hat filtering and brightness correction; (g) and (j) are the normal and abnormal retinal images, respectively, taken from the STARE database; (h) and (k) are the green layer images obtained as a result of band selection for the retinal images taken from the STARE database given in Figure 2 (a) and (b); (i) and (l) are the enhanced retinal images obtained after the bottom-hat filtering and brightness correction.

The green layers of the STARE retinal images given in Figure 2 (a) and (b) are shown in Figure 3 (h) and (k). The enhanced retinal images obtained after the bottom-hat and brightness correction operations are given in Figure 3 (i) and (l).

Materials and methods

Artificial bee colony algorithm

Swarm intelligence simulates the collective behaviour of animal societies [18] and provides effective solutions in many areas of engineering. The artificial bee colony algorithm, proposed by Karaboga in 2005 [19], is one of the most effective and widely used swarm based algorithms.

The pseudo-code of a basic ABC algorithm can be given as the following.

Randomly create an initial population consisting of solutions

Calculate the fitness value of each xi solution in the population.

Cycle=1

REPEAT

Produce new solutions vij in the neighbourhood of xij using

Apply the greedy selection between xi and vi and save the selected new solution to memory.

Calculate the probability values pi for the solutions xi by means of their fitness values using the following Eq. (3).

where the pi values are normalized into [0,1]. The fitness value of each solution is calculated by using the following Eq. (4).

Produce the new solutions (new positions) vi from the solutions xi, selected depending on pi, and evaluate them.

Apply the greedy selection between xi and vi then memorize the selected new solution.

Determine the abandoned solution (source), if exists, and replace it with a new randomly produced solution xi using Eq. (5) given below,

Memorize the best food source position (solution) achieved so far

Cycle=Cycle + 1

UNTIL Cycle=Maximum Cycle Number

After an initial population is created randomly, the ABC algorithm performs three steps at each cycle of the search. In the first step, the fitness (quality) values of the initial solutions are calculated and memorized. Then a new possible solution within the neighborhood of the present solution is determined and its fitness value is calculated. If the fitness value of the new solution is higher, the previous solution is forgotten and the new solution is memorized (greedy selection). In the last step, new possible solutions are determined within the neighborhood of the solutions evaluated so far and their fitness values are calculated.

In the ABC algorithm, if a solution cannot be improved within a predetermined number of trials, the associated solution is considered exhausted and is no longer evaluated. The memorized information about this abandoned solution is replaced with that of a randomly produced new solution. The number of trials after which a solution is abandoned is equal to the value of “limit”, which is an important control parameter of the ABC algorithm. These three steps are repeated until the termination criteria are satisfied.

Assume that wi is the ith solution to the problem and

where s is the number of solutions in the population. A new possible solution is selected depending on the calculated probabilities and then a neighbour solution around the chosen one is determined. This process is repeated until all possible solutions have been evaluated. Assume that the selected solution is wi. The neighbour solution of wi can be determined as follows,

where ϕi is a randomly generated number in the interval [−1, +1] and k is a randomly produced index different from i. If

As with the PSO, DE, TLBO, GWO, FA and HS algorithms, retinal vessel segmentation with the basic ABC algorithm searches for the optimal pixel range, defined by optimal lower and upper pixel values. In the basic ABC algorithm, the segmentation process is initialized with a randomly selected pixel position xi. First, the fitness value of each pixel position xi in the retinal image is calculated, and then these position values are updated by using Eq. (5) until the optimum fitness values for the lower and upper bounds are reached. Finally, the retinal vessel segmentation is carried out in the interval between the optimum lower and upper pixel positions obtained.
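The core operations of one ABC cycle can be sketched in Python as follows. The sketch assumes the forms commonly given for the basic ABC algorithm: a fitness of 1/(1 + cost) for non-negative costs, selection probabilities proportional to fitness, and a neighbour obtained by perturbing one parameter towards or away from a second solution. The function names and the toy cost function are hypothetical and are not taken from this work.

```python
def fitness(cost):
    """Map a non-negative cost (e.g. an MSE) to a fitness in (0, 1]."""
    return 1.0 / (1.0 + cost)


def selection_probabilities(costs):
    """Probability of each solution being chosen, proportional to its fitness."""
    fits = [fitness(cost) for cost in costs]
    total = sum(fits)
    return [fit / total for fit in fits]


def neighbour(x_i, x_k, j, phi):
    """Perturb parameter j of x_i using solution x_k and phi in [-1, 1]."""
    v_i = list(x_i)
    v_i[j] = x_i[j] + phi * (x_i[j] - x_k[j])
    return v_i


def greedy_select(x_i, v_i, cost):
    """Keep the candidate only if it has a lower cost than the current solution."""
    return v_i if cost(v_i) < cost(x_i) else x_i


# Toy example: a solution is a [lower, upper] pixel pair and the
# (hypothetical) cost is the squared distance to a known target pair.
target = [30.0, 180.0]
cost = lambda x: sum((a - b) ** 2 for a, b in zip(x, target))

x_i, x_k = [40.0, 180.0], [60.0, 200.0]
v_i = neighbour(x_i, x_k, j=0, phi=0.5)   # 40 + 0.5 * (40 - 60) = 30
kept = greedy_select(x_i, v_i, cost)
probs = selection_probabilities([cost(x_i), cost(x_k)])
print(kept)  # [30.0, 180.0]
```

In a full implementation, phi and the indices j and k would be drawn at random in every trial, and a counter per solution would trigger the replacement of sources that exceed the “limit” parameter.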

Particle swarm optimization algorithm

Particle swarm optimization is a population based stochastic optimization technique developed by Eberhart and Kennedy in 1995, inspired by social behavior of bird flocking or fish schooling [20]. In PSO, a population of particles (solutions) is made to move across D-dimensional space. The particles move across the problem space by getting information from the current optimum particles. The position of a given particle at any cycle represents a possible solution of the problem. Evaluating the objective function at a given position gives the fitness of the corresponding solution. The particles also have velocities which direct particles searching in the space.

PSO is initialized with a population including randomly produced particles and then searches for optimal solutions by updating positions in each generation. In every cycle, each particle is updated by best solution [

Intuitively, the information about good solutions is distributed through the swarm and thus the particles are directed to move to good areas in the search space. At each time step t, the velocity

The update of the velocity from the previous velocity to the new velocity is determined by the following Eq. (9):

where r1 and r2 are uniformly distributed random numbers. The parameter ω is called the inertia weight and controls the magnitude of the old velocity

| The pseudo code of the procedure: |

| Initialize |

| REPEAT |

| Calculate fitness values of all particles |

| Modify the best particles in the swarm |

| Choose the particle with the best fitness value of all the particles |

| Calculate the velocities of particles |

| Update the particle positions |

| UNTIL(maximum generations or minimum error criteria is satisfied) |

If the sum of accelerations would cause the velocity of that parameter to exceed υmax, the velocity on that dimension is limited to υmax.
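The velocity update with inertia weight and velocity limiting can be sketched in Python as follows. The sketch assumes the widely used update form (inertia term plus attraction towards the personal best and the global best); the acceleration coefficients `c1` and `c2`, the default values and the function name `pso_step` are assumptions made for illustration.

```python
def pso_step(x, v, pbest, gbest, r1, r2, w=0.7, c1=2.0, c2=2.0, v_max=10.0):
    """Return the new position and velocity of one particle.

    r1 and r2 are uniform random numbers in [0, 1]; they are passed in
    explicitly here so that the example is reproducible.
    """
    new_x, new_v = [], []
    for xd, vd, pd, gd in zip(x, v, pbest, gbest):
        vel = w * vd + c1 * r1 * (pd - xd) + c2 * r2 * (gd - xd)
        vel = max(-v_max, min(v_max, vel))  # limit the velocity to +/- v_max
        new_v.append(vel)
        new_x.append(xd + vel)
    return new_x, new_v


# Toy particle holding a [lower, upper] pixel pair.
new_x, new_v = pso_step(x=[50.0, 150.0], v=[2.0, -4.0],
                        pbest=[60.0, 150.0], gbest=[40.0, 200.0],
                        r1=0.5, r2=0.25, w=0.5)
print(new_x, new_v)  # [56.0, 160.0] [6.0, 10.0]
```

In the second dimension the unconstrained velocity would be 23, so it is clipped to `v_max` = 10 before the position is updated.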

While performing retinal vessel segmentation by using basic PSO algorithm, firstly, for each xi pixel position value that is randomly selected and constitutes the initial population, fitness values are calculated separately and stored in the

Differential evolution algorithm

The differential evolution algorithm, introduced by Storn and Price [21], is a method with crossover and mutation operations that work directly on continuous-valued vectors. An optimization task consisting of D parameters can be represented by a D-dimensional vector. Therefore, in the DE algorithm a population of NP solution vectors is randomly created at the start. This population is successively improved by applying mutation, crossover and selection operators.

The main steps of a basic DE algorithm are given below:

| Initialization |

| Evaluation |

| REPEAT |

| Mutation |

| Recombination |

| Evaluation |

| Selection |

| UNTIL (maximum generations or minimum error criteria is satisfied) |

In the mutation operator, for each target vector

where

where j=1,2, … , D;

While DE algorithm is applied to retinal vessel segmentation, firstly, an initial population consisting of vectorially defined pixel locations is created. Then, by using the mutation and crossover operations represented in Eqs. (10) and (11), new pixel values are randomly created in the neighbourhood of each pixel value available in the initial population. The fitness values of the previous and the new produced pixel values are compared and the better solutions are stored as the optimal lower and upper pixel values. At each cycle, the optimal pixel values in the memory are updated by mutation and crossover, and as a result of this the most appropriate lower and upper pixel values for retinal vessel segmentation are obtained. Finally, by using the pixel value interval between the lower and upper pixel values obtained optimal retinal vessel segmentation is achieved.
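The mutation, crossover and selection steps can be sketched in Python as follows. The sketch assumes the common DE/rand/1/bin variant, which may differ in detail from the operators used in this work; the names `de_trial_vector` and `select`, the scale factor `F`, the crossover rate `CR` and the toy cost function are illustrative assumptions.

```python
import random


def de_trial_vector(population, i, F=0.5, CR=0.9, rng=random):
    """Build a trial vector for target i (needs at least four vectors)."""
    donors = [n for n in range(len(population)) if n != i]
    r1, r2, r3 = rng.sample(donors, 3)
    dim = len(population[i])
    # Mutation: base vector plus a scaled difference of two other vectors.
    mutant = [population[r1][j] + F * (population[r2][j] - population[r3][j])
              for j in range(dim)]
    # Binomial crossover: j_rand guarantees at least one mutant component.
    j_rand = rng.randrange(dim)
    return [mutant[j] if (rng.random() <= CR or j == j_rand) else population[i][j]
            for j in range(dim)]


def select(target, trial, cost):
    """Keep the trial vector if it is at least as good as the target."""
    return trial if cost(trial) <= cost(target) else target


# Toy population of [lower, upper] pixel pairs. The three possible donors are
# identical, so the mutant is known in advance whatever indices are sampled.
population = [[10.0, 200.0], [30.0, 180.0], [30.0, 180.0], [30.0, 180.0]]
cost = lambda x: abs(x[0] - 30.0) + abs(x[1] - 180.0)  # hypothetical cost

trial = de_trial_vector(population, i=0, CR=1.0, rng=random.Random(1))
survivor = select(population[0], trial, cost)
print(survivor)  # [30.0, 180.0]
```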

Teaching learning based optimization algorithm

TLBO is an approach based on the teaching-learning interaction between teachers and students, developed to solve multi-dimensional, linear and nonlinear problems [22]. The algorithm consists of two main phases: the teacher phase and the learner phase.

In the teacher phase, the teacher transfers knowledge to the students in order to increase the mean knowledge level of the class. Since the teacher is the most experienced and knowledgeable person in a class, he or she represents the best solution of the entire population. Let m be the number of subjects, which corresponds to the number of parameters to be optimized, and let n be the number of learners (the population size), indexed by k=1, 2, … , n. At any cycle i, Mj,i represents the mean result of the learners in a particular subject j=1, 2, … , m. The average difference between the teacher’s knowledge capacity and the learning capacity of the students is given in Eq. (12).

where,

As seen from Eq. (12), the value of

where,

The learner phase aims to increase the knowledge owned by the students by means of interaction with the teacher or between themselves. Let, P and Q be the randomly selected students, such that,

where

| The pseudo code of the TLBO algorithm can be given as following. |

| Randomly create an initial population |

| Calculate fitness values of all individuals |

| Teacher phase: Modify the population based on Eq. (14) and discretize it |

| REPEAT |

| Calculate the fitness values of all individuals |

| Accept each new individual if it gives a better fitness value |

| Learner phase: Modify the population based on Eqs. (15)–(17) and discretize it |

| Calculate the fitness value of all individuals |

| Accept each new individual if it gives a better fitness value |

| UNTIL(maximum cycles or minimum error criteria is satisfied) |

In retinal vessel segmentation based on the TLBO algorithm, each pixel in the retinal image represents a student. The pixel with the highest fitness value among these pixels represents the teacher. At each cycle, new pixel values representing possible solutions are produced by Eq. (14) and the fitness values of these new solutions are calculated. Among these new fitness values, the best two are selected and stored in memory as the best lower and upper pixel values by using Eqs. (15)–(17). By repeating these steps at each cycle, the optimal lower and upper pixel values producing the best fitness values are found and the retinal vessel segmentation is applied according to these optimal solutions.
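The two phases can be sketched in Python as follows. The sketch assumes the update rules commonly given for basic TLBO: in the teacher phase a learner moves by r × (teacher − T_F × mean), and in the learner phase a learner moves away from a worse peer or towards a better one. The function names are hypothetical, a single scalar `r` is used for all subjects for brevity, and lower cost is treated as better.

```python
def teacher_phase(learner, teacher, mean, r, t_f):
    """Shift a learner by r * (teacher - t_f * mean) in every subject.

    r is a random number in [0, 1]; t_f is the teaching factor (1 or 2).
    """
    return [x + r * (t - t_f * m) for x, t, m in zip(learner, teacher, mean)]


def learner_phase(p, q, cost_p, cost_q, r):
    """Let learner P interact with a randomly chosen learner Q."""
    if cost_p < cost_q:   # P is better: move away from Q
        return [xp + r * (xp - xq) for xp, xq in zip(p, q)]
    return [xp + r * (xq - xp) for xp, xq in zip(p, q)]  # move towards Q


# Toy learners holding [lower, upper] pixel pairs.
after_teacher = teacher_phase(learner=[40.0, 160.0], teacher=[30.0, 200.0],
                              mean=[50.0, 180.0], r=0.5, t_f=1)
after_learner = learner_phase(p=after_teacher, q=[50.0, 150.0],
                              cost_p=5.0, cost_q=9.0, r=0.5)
print(after_teacher, after_learner)  # [30.0, 170.0] [20.0, 180.0]
```

As in the pseudo code above, a new individual would be accepted only if it gives a better fitness value than the one it replaces.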

Grey wolf optimization algorithm

GWO is a heuristic optimization algorithm inspired by the collective behaviour of grey wolves in nature and proposed by Mirjalili et al. in 2014 [23], [24], [25]. It simulates a leadership hierarchy consisting of four types of wolves, named alpha (α), beta (β), delta (δ) and omega (ω). In the population, the α wolf represents the best solution for the problem to be optimized, while the β and δ wolves represent the second and third best solutions, respectively. The rest of the population is designated as ω wolves. In the GWO algorithm, an optimization task consists of three phases: encircling, hunting and attacking.

Encircling phase includes the optimization of the positions of gray wolf and prey as given below,

where,

where,

In hunting phase, the position of the prey corresponds to the global solution. At the beginning of the search it is supposed that the α, β and δ have better knowledge about the position of the prey. The fitness values of each position information are calculated and the solution with the best fitness value is defined as α. The solution with second best fitness value is assigned to β and the solution with third best fitness value is defined as δ. At each cycle of the search, the position of the current optimal solution can be updated according to the Eqs. (22)–(24) in order to reach to the global solution.

where,

As seen from Eq. (24), the position updated would be in a random place within a circle which is defined by the positions of α, β and δ in the search space.

Attacking phase can be simulated by reducing the distance between the gray wolf and the prey. This phase can be modeled by decreasing the value of

The pseudo code of a basic GWO algorithm can be given as the following.

| Randomly create an initial population, |

| Determine the initial values of |

| Calculate the fitness value of each initial position ( |

| while(t < Max number of cycles) |

| for each search agent |

| Update the current optimal position by Eqs. (22)–(24). |

| end for |

| Update |

| Calculate the fitness value of current positions |

| Update ( |

| t=t + 1 |

| end |

Return Step 3 for current
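The position update driven by the three leading wolves can be sketched in Python as follows. The sketch assumes the standard GWO formulation (coefficients A = 2a·r1 − a and C = 2·r2, a distance to each leader, and the average of the three resulting estimates), with the control parameter a decreasing linearly from 2 to 0. The function names are hypothetical, and one random pair per leader is reused for all dimensions to keep the example short.

```python
def linear_a(t, max_cycles):
    """Control parameter a, decreasing linearly from 2 to 0 over the run."""
    return 2.0 * (1.0 - t / max_cycles)


def gwo_update(x, leaders, a, rand_pairs):
    """Move wolf x using the (alpha, beta, delta) positions in `leaders`.

    rand_pairs holds one (r1, r2) pair of uniform random numbers per leader.
    """
    new_x = []
    for d in range(len(x)):
        estimates = []
        for leader, (r1, r2) in zip(leaders, rand_pairs):
            A = 2.0 * a * r1 - a
            C = 2.0 * r2
            D = abs(C * leader[d] - x[d])     # distance to this leader
            estimates.append(leader[d] - A * D)
        new_x.append(sum(estimates) / 3.0)    # average of the three estimates
    return new_x


alpha, beta, delta = [40.0, 160.0], [44.0, 156.0], [62.0, 138.0]
new_x = gwo_update(x=[50.0, 150.0], leaders=(alpha, beta, delta),
                   a=linear_a(50, 100), rand_pairs=[(0.75, 0.5)] * 3)
print(new_x[0])  # 44.0
```

With a = 1, r1 = 0.75 and r2 = 0.5 the coefficients are A = 0.5 and C = 1, so each estimate lies halfway between the wolf's reflected distance and the corresponding leader.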

In GWO algorithm, an initial population consisting of

Firefly algorithm

FA, which was proposed by Xin-She Yang, is a novel swarm intelligence based algorithm inspired by the phenomenon of bioluminescent communication behaviour of fireflies [26].

The FA is based on the following rules [26].

Fireflies are unisex and can attract any other firefly.

Attractiveness depends on one's brightness.

The brightness or light intensity of a firefly is influenced by the landscape of fitness/cost function.

The pseudo code of a basic FA can be given as the following.

| BEGIN |

| Initialise max cycle, α, β0, γ |

| Generate initial population |

| Define the Objective function f(x). |

| Determine Intensity (I) at cost (x) of each individual determined by f(xi) |

| while (t<Iter max) |

| for i=1 to n |

| for j=1 to n |

| if (Ij > Ii) |

| Move firefly i towards j in K dimension |

| end if |

| Evaluate new solutions and update light intensity |

| end for j |

| end for i |

| Rank the fireflies and find the current best |

| end while |

| Post process results and visualization |

| END (if end procedure satisfied) |

In retinal vessel segmentation based on the FA, the optimization task begins by creating an initial population of pixel values Xi in an interval limited by the lowest and highest pixel values, and the fitness value of each Xi is calculated. The fitness value of each Xi is then compared with that of a new possible solution produced in the neighbourhood of the relevant Xi by the following Eq. (25).

where β represents the attractiveness parameter, taking values in the interval [0,1], i represents the index of the selected pixel value, j represents the index of the new pixel produced as a possible solution in the neighbourhood of the ith pixel, k represents the cycle number, Xi,k represents the value of the ith pixel at the kth cycle, Xj,k represents the value of the jth pixel at the kth cycle, α represents the randomization parameter, taking values in the interval [0,1], and ε is a randomly produced number in the interval [−0.5,0.5].

Among the pixel values updated at each cycle, the pixel values producing the highest fitness value for lower and upper pixel value bounds are selected and retinal vessel segmentation is applied for this interval.
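The movement of one firefly towards a brighter one can be sketched in Python as follows. The sketch assumes the usual FA move, in which the new position is the old one plus an attraction term β × (Xj − Xi) and a random term α × ε, and it assumes the common distance-dependent attractiveness β0 × exp(−γ r²). The function names are hypothetical, and ε is passed in explicitly to keep the example reproducible.

```python
import math


def attractiveness(beta0, gamma, distance):
    """Attractiveness that decays with the distance between two fireflies."""
    return beta0 * math.exp(-gamma * distance ** 2)


def move_firefly(x_i, x_j, beta, alpha, eps):
    """Move firefly i towards the brighter firefly j.

    eps holds one random number in [-0.5, 0.5] per dimension.
    """
    return [xi + beta * (xj - xi) + alpha * e
            for xi, xj, e in zip(x_i, x_j, eps)]


# Toy fireflies holding [lower, upper] pixel pairs.
moved = move_firefly(x_i=[40.0, 160.0], x_j=[50.0, 200.0],
                     beta=0.5, alpha=0.5, eps=[0.5, -0.5])
print(moved)  # [45.25, 179.75]
```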

Harmony search algorithm

The harmony search (HS) algorithm was developed by Geem et al. by analogy with the music improvisation process, in which players adjust the pitches of their instruments to obtain a better harmony [27].

The steps in the procedure of HS algorithm can be given as the following [28].

Step 1. Initialize the problem and algorithm parameters.

Step 2. Initialize the harmony memory.

Step 3. New harmony improvisation.

Step 4. Update the harmony memory.

Step 5. Check the stopping criterion.

The pseudo code of a basic HS algorithm can be given as the following [28].

| Objective function f (Xi), i=1 to N |

| Define HS parameters: HMS, HMCR, PAR, and BW |

| Generate initial harmonics (for i=1 to HMS) |

| Evaluate f(Xi) |

| while (t<Max number of iterations) |

| Create a new harmony: Xinew, i=1 to N |

| if (U(0,1) > HMCR), |

| Xinew=Xjold, where Xjold is selected at random from the harmony memory, j ∈ {1, … , HMS} |

| else if (U(0,1) > PAR), |

| Xinew=Xjold + BW [(2 × U(0,1)) − 1] |

| else |

| Xinew=XL(i) + U(0,1) × [XU(i) − XL(i)] |

| end if |

| Evaluation f (Xinew) |

| Accept the new harmonics (solutions) if better |

| end while |

Find the current best estimates

When the HS algorithm is applied to retinal vessel segmentation, an initial population of HMS position vectors is first created randomly within an interval limited by the lowest and highest pixel values, and these position vectors, which represent possible solutions, are stored in the harmony memory. After the fitness value of each possible solution has been calculated, new position vectors (pixel values) are created by using the equations given in the pseudo code. The fitness values of the old and the newly produced position vectors are compared; if the new pixel value has the higher fitness, it is transferred to the next generation by replacing the relevant pixel value in the harmony memory. Otherwise, the old pixel value in the harmony memory is preserved. Thus, after a predetermined number of cycles, the algorithm reaches the most appropriate lower and upper limits of the pixel interval in which the optimum retinal vessel segmentation can be performed.
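The improvisation and memory update steps can be sketched in Python as follows. This is a minimal illustration that assumes the commonly described form of HS, in which a value is taken from the harmony memory with probability HMCR, pitch-adjusted with probability PAR, and otherwise drawn uniformly between the bounds; the direction of the comparisons may therefore differ from the listing above. The function names and default parameter values are hypothetical, and lower cost is treated as better.

```python
import random


def improvise(memory, lower, upper, hmcr=0.9, par=0.3, bw=1.0, rng=random):
    """Create one new harmony from the harmony memory."""
    new = []
    for i in range(len(memory[0])):
        if rng.random() < hmcr:                      # memory consideration
            value = rng.choice(memory)[i]
            if rng.random() < par:                   # pitch adjustment
                value += bw * (2.0 * rng.random() - 1.0)
        else:                                        # random selection
            value = lower[i] + rng.random() * (upper[i] - lower[i])
        new.append(min(max(value, lower[i]), upper[i]))  # keep within bounds
    return new


def update_memory(memory, costs, new, new_cost):
    """Replace the worst stored harmony if the new one has a lower cost."""
    worst = max(range(len(memory)), key=costs.__getitem__)
    if new_cost < costs[worst]:
        memory[worst], costs[worst] = new, new_cost


# Toy harmony memory of [lower, upper] pixel pairs with hypothetical costs.
memory = [[10.0, 200.0], [30.0, 180.0]]
costs = [5.0, 9.0]
harmony = improvise(memory, lower=[0.0, 0.0], upper=[255.0, 255.0],
                    hmcr=1.0, par=0.0, rng=random.Random(0))
update_memory(memory, costs, [20.0, 190.0], 7.0)
print(memory, costs)  # [[10.0, 200.0], [20.0, 190.0]] [5.0, 7.0]
```

With `hmcr=1.0` and `par=0.0` every component of the new harmony is copied unchanged from some stored harmony, which makes the memory consideration step easy to inspect in isolation.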

Retinal vessel segmentation

The performances of the algorithms have been tested on the images taken from two public databases. The first one is the STARE (Structured Analysis of the Retina) database which is created by Hoover et al. [29]. STARE database contains 20 raw retinal images for blood vessel segmentation and 10 of these images have pathologies. All images were captured by a TopCon TRV-50 fundus camera at 35° field of view (FOV) and digitized to 700 × 605 pixels with 8 bits per color channel.

The second database used in this work is the DRIVE database, which was established by Alonso-Montes et al. [30]. In the DRIVE database, 20 images are employed for training and 20 images for testing. These images were captured by a Canon CR5 3CCD camera with a 45° FOV, and each image is 565 × 584 pixels with 8 bits per color channel.

The flowchart of the method applied for retinal vessel segmentation is shown in Figure 4.

Figure 4. Flowchart of retinal vessel segmentation.

In the first step, the green layer of the RGB retinal image is extracted because of its higher contrast, and the subsequent analysis is carried out on this layer. Despite its higher contrast, the analyses show that the G layer alone is insufficient for successful clustering. For this reason, in order to separate the vessels from the image background more clearly and thereby improve the clustering performance, two more pre-processing operations were applied to the retinal image. The first is bottom-hat filtering and the second is brightness correction. The retinal images obtained after the pre-processing phase are then segmented by the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms. In the segmentation phase, optimal cluster centers are determined by these algorithms in order to obtain the highest clustering performance, and clustering is carried out according to these centers.

In the clustering process, cluster centers are randomly initialized and every pixel is assigned to its closest cluster center. The quality of each solution is calculated with the Mean-Squared Error (MSE) function, which can be expressed as follows,

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(f_i - y_i\right)^2$$

As seen from the equation given above, MSE error function calculates the quality of a solution based on the distance between each pixel and its related cluster center. In this equation, N represents the total number of pixels in the retinal image. Cluster centers are determined by algorithm and fi represents the value of the cluster center closest to the pixel i. Also, yi represents the pixel value of the ith pixel. By using MSE error function it is aimed to group the pixels around the appropriate cluster centers with the minimum error.
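The evaluation of one candidate solution can be sketched in Python as follows. The sketch assumes that a solution is simply a list of cluster center values and that each pixel is compared with its nearest center; the function name `clustering_mse` and the toy pixel values are hypothetical.

```python
def clustering_mse(pixels, centers):
    """Mean squared distance between each pixel and its nearest center.

    pixels:  flat list of pixel values y_i (N values)
    centers: candidate cluster centers proposed by the optimizer
    """
    total = 0.0
    for y in pixels:
        f = min(centers, key=lambda center: abs(center - y))  # nearest center f_i
        total += (f - y) ** 2
    return total / len(pixels)


# Toy image with dark "background" and bright "vessel" pixels.
pixels = [10, 12, 200, 210]
good = clustering_mse(pixels, centers=[11, 205])
poor = clustering_mse(pixels, centers=[100, 120])
print(good)  # 13.0
```

A heuristic algorithm would call such a function for every candidate set of centers and keep the candidates with the lowest error; here the well-placed centers give a far smaller MSE than the poorly placed ones.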

The control parameter values of the algorithms used in the simulations are given in Table 1.

Table 1. Control parameter values used in the simulations for the ABC, GWO, PSO, FA, DE, HS and TLBO algorithms.

Results

Figure 5 shows the retinal images obtained after segmenting the images in Figure 1 (a) and (b) with the clustering based ABC, PSO, DE, TLBO, GWO, FA and HS algorithms. The figures show that some regions that are not vessels, but whose pixel values are close to those of the vessels, appear to be segmented as vessels. Post-processing methods for eliminating these regions exist in the literature; however, they have not been applied in this work so that the pure performance of the algorithms can be shown. In general, all seven algorithms show a similar performance in terms of clustering. It is also seen that the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms are able to cluster close pixel values with high accuracy.

Figure 5. Retinal images obtained after applying segmentation to the images in Figure 1 (a) and (b) by using the clustering based ABC (a and h), PSO (b and i), DE (c and j), TLBO (d and k), GWO (e and l), FA (f and m) and HS (g and n) algorithms.

The clustering performance of the algorithms for Figure 2 (a) and (b) is given in Figure 6. For these images taken from the STARE database, the performance of the algorithms is lower than for the images taken from the DRIVE database. On the other hand, the results show that the clustering performance of the algorithms is comparable to, and slightly higher than, the results reported in the literature.

Figure 6. Retinal images obtained after applying segmentation to the images in Figure 2 (a) and (b) by using the clustering based ABC (a and h), PSO (b and i), DE (c and j), TLBO (d and k), GWO (e and l), FA (f and m) and HS (g and n) algorithms.

Performance measures

The statistical analysis and performance comparison of the clustering based algorithms are based on sensitivity (Se), specificity (Sp), accuracy (Acc), precision, standard deviation and mean squared error. First, the performance of the algorithms is compared in terms of Se, Sp, Acc and precision. These measures have been computed individually for each image and on average over all test images. The expressions are given below,

$$\mathrm{Se} = \frac{TP}{TP + FN}, \qquad \mathrm{Sp} = \frac{TN}{TN + FP}, \qquad \mathrm{Acc} = \frac{TP + TN}{TP + FN + TN + FP}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}$$

where the true positives (TP) are the pixels that are actually vessels and have been detected as vessels. The false negatives (FN) are the pixels that are actually vessels but have been detected as background. The true negatives (TN) are the pixels classified as non-vessel that really are background pixels. The false positives (FP) are the pixels that are actually background but have been detected as vessels. Thus, sensitivity (Se) is the ratio of correctly classified vessel pixels, specificity (Sp) is the ratio of correctly classified background pixels, and accuracy (Acc) is the ratio of correctly classified pixels over both vessels and background. Finally, precision is the ratio of the pixels detected as vessels that are actually vessels.
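These measures can be computed from a segmented image and its ground truth as in the following Python sketch. It assumes that both images are given as flat sequences of 0/1 labels (1 for vessel) and that each class occurs at least once, so that no denominator is zero; the function name `vessel_metrics` is hypothetical.

```python
def vessel_metrics(predicted, truth):
    """Sensitivity, specificity, accuracy and precision from binary labels."""
    tp = fp = tn = fn = 0
    for p, t in zip(predicted, truth):
        if p and t:
            tp += 1          # vessel detected as vessel
        elif p and not t:
            fp += 1          # background detected as vessel
        elif t:
            fn += 1          # vessel detected as background
        else:
            tn += 1          # background detected as background
    return {
        "Se": tp / (tp + fn),
        "Sp": tn / (tn + fp),
        "Acc": (tp + tn) / (tp + fn + tn + fp),
        "Precision": tp / (tp + fp),
    }


predicted = [1, 1, 0, 0, 1, 0, 0, 0]
truth     = [1, 1, 1, 0, 0, 0, 0, 0]
metrics = vessel_metrics(predicted, truth)
print(metrics["Acc"])  # 0.75
```

In this toy example TP = 2, FN = 1, FP = 1 and TN = 4, so the sensitivity and precision are both 2/3 while the specificity is 0.8.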

Performance analysis for DRIVE and STARE databases

Tables 2–5 show the performance of the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms in terms of sensitivity, specificity, accuracy and precision for 20 retinal images taken from both the DRIVE and STARE databases. These tables also contain the mean values of the results obtained for the 20 retinal images. The Se results show that each algorithm reaches a high ratio of correctly classified vessel pixels. The Sp values indicate that each algorithm can successfully distinguish the vessel pixels from the background pixels. Also, when the Acc values are examined, the results show that the ratio of correctly classified vessel and background pixels is very high for each algorithm. Finally, the precision results show that the heuristic algorithms perform similarly and well in terms of correctly classified vessel pixels.

Table 2. The performance measures of the different images in the DRIVE database in terms of sensitivity (Se), specificity (Sp) and accuracy (Acc).

| Image | Sensitivity | Specificity | Accuracy | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ABC | PSO | DE | TLBO | GWO | FA | HS | ABC | PSO | DE | TLBO | GWO | FA | HS | ABC | PSO | DE | TLBO | GWO | FA | HS | |

| 1 | 0.8913 | 0.8913 | 0.8913 | 0.8913 | 0.8606 | 0.8913 | 0.8913 | 0.9884 | 0.9884 | 0.9884 | 0.9884 | 0.9847 | 0.9884 | 0.9884 | 0.9791 | 0.9791 | 0.9791 | 0.9791 | 0.9724 | 0.9791 | 0.9791 |

| 2 | 0.8302 | 0.7857 | 0.8302 | 0.7857 | 0.8302 | 0.7857 | 0.7857 | 0.9776 | 0.9703 | 0.9776 | 0.9703 | 0.9776 | 0.9703 | 0.9703 | 0.9604 | 0.9479 | 0.9604 | 0.9479 | 0.9604 | 0.9479 | 0.9479 |

| 3 | 0.9408 | 0.8787 | 0.8787 | 0.8787 | 0.5392 | 0.8787 | 0.8787 | 0.9939 | 0.9867 | 0.9867 | 0.9867 | 0.923 | 0.9867 | 0.9867 | 0.9889 | 0.976 | 0.976 | 0.976 | 0.868 | 0.976 | 0.976 |

| 4 | 0.723 | 0.723 | 0.723 | 0.723 | 0.7793 | 0.723 | 0.723 | 0.9645 | 0.9645 | 0.9645 | 0.9645 | 0.9735 | 0.9645 | 0.9645 | 0.9371 | 0.9371 | 0.9371 | 0.9371 | 0.9527 | 0.9371 | 0.9371 |

| 5 | 0.9514 | 0.9514 | 0.9514 | 0.9514 | 0.9514 | 0.9514 | 0.9514 | 0.9955 | 0.9955 | 0.9955 | 0.9955 | 0.9955 | 0.9955 | 0.9955 | 0.9918 | 0.9918 | 0.9918 | 0.9918 | 0.9918 | 0.9918 | 0.9918 |

| 6 | 0.9572 | 0.9572 | 0.9572 | 0.9572 | 0.8605 | 0.9572 | 0.9572 | 0.9954 | 0.9954 | 0.9954 | 0.9954 | 0.9836 | 0.9954 | 0.9954 | 0.9917 | 0.9917 | 0.9917 | 0.9917 | 0.9707 | 0.9917 | 0.9917 |

| 7 | 0.9593 | 0.8874 | 0.8874 | 0.8874 | 0.7331 | 0.8874 | 0.8874 | 0.9967 | 0.9903 | 0.9903 | 0.9903 | 0.9727 | 0.9903 | 0.9903 | 0.994 | 0.9822 | 0.9822 | 0.9822 | 0.9504 | 0.9822 | 0.9822 |

| 8 | 0.5506 | 0.5506 | 0.5506 | 0.5506 | 0.8672 | 0.5506 | 0.5506 | 0.9449 | 0.9449 | 0.9449 | 0.9449 | 0.9892 | 0.9449 | 0.9449 | 0.9019 | 0.9019 | 0.9019 | 0.9019 | 0.98 | 0.9019 | 0.9019 |

| 9 | 0.7818 | 0.7818 | 0.7818 | 0.7818 | 0.8251 | 0.7818 | 0.7818 | 0.9761 | 0.9761 | 0.9761 | 0.9761 | 0.9817 | 0.9761 | 0.9761 | 0.9568 | 0.9568 | 0.9568 | 0.9568 | 0.9669 | 0.9568 | 0.9568 |

| 10 | 0.8932 | 0.8932 | 0.718 | 0.8932 | 0.8932 | 0.8932 | 0.8932 | 0.9908 | 0.9908 | 0.9705 | 0.9908 | 0.9908 | 0.9908 | 0.9908 | 0.9831 | 0.9831 | 0.9467 | 0.9831 | 0.9831 | 0.9831 | 0.9831 |

| 11 | 0.787 | 0.787 | 0.787 | 0.787 | 0.9879 | 0.787 | 0.787 | 0.976 | 0.976 | 0.976 | 0.976 | 0.9989 | 0.976 | 0.976 | 0.9569 | 0.9569 | 0.9569 | 0.9569 | 0.998 | 0.9569 | 0.9569 |

| 12 | 0.6624 | 0.6624 | 0.6624 | 0.6624 | 0.6824 | 0.6624 | 0.8609 | 0.9574 | 0.9574 | 0.9574 | 0.9574 | 0.9609 | 0.9574 | 0.9861 | 0.9243 | 0.9243 | 0.9243 | 0.9243 | 0.9305 | 0.9243 | 0.9747 |

| 13 | 0.7407 | 0.7992 | 0.7992 | 0.7992 | 0.6489 | 0.7992 | 0.7992 | 0.9621 | 0.9725 | 0.9725 | 0.9725 | 0.9426 | 0.9725 | 0.9725 | 0.9338 | 0.9516 | 0.9516 | 0.9516 | 0.9013 | 0.9516 | 0.9516 |

| 14 | 0.7439 | 0.7439 | 0.7439 | 0.7439 | 0.9292 | 0.7439 | 0.7439 | 0.973 | 0.973 | 0.973 | 0.973 | 0.9939 | 0.973 | 0.973 | 0.9511 | 0.9511 | 0.9511 | 0.9511 | 0.9887 | 0.9511 | 0.9511 |

| 15 | 0.8966 | 0.9444 | 0.9444 | 0.9444 | 0.5551 | 0.9444 | 0.9444 | 0.9908 | 0.9953 | 0.9953 | 0.9953 | 0.9393 | 0.9953 | 0.9953 | 0.9831 | 0.9913 | 0.9913 | 0.9913 | 0.8932 | 0.9913 | 0.9913 |

| 16 | 0.7784 | 0.8387 | 0.8387 | 0.8387 | 0.7084 | 0.8387 | 0.8387 | 0.9742 | 0.9824 | 0.9824 | 0.9824 | 0.9631 | 0.9824 | 0.9824 | 0.9538 | 0.9683 | 0.9683 | 0.9683 | 0.9345 | 0.9683 | 0.9683 |

| 17 | 0.7017 | 0.7017 | 0.7017 | 0.7017 | 0.7017 | 0.874 | 0.7017 | 0.9672 | 0.9672 | 0.9672 | 0.9672 | 0.9672 | 0.9886 | 0.9672 | 0.9409 | 0.9409 | 0.9409 | 0.9409 | 0.9409 | 0.9791 | 0.9409 |

| 18 | 0.8972 | 0.8972 | 0.8972 | 0.8972 | 0.7438 | 0.8972 | 0.8972 | 0.9885 | 0.9885 | 0.9885 | 0.9885 | 0.9663 | 0.9885 | 0.9885 | 0.9794 | 0.9794 | 0.9794 | 0.9794 | 0.9405 | 0.9794 | 0.9794 |

| 19 | 0.8161 | 0.8161 | 0.8161 | 0.8161 | 0.5084 | 0.8161 | 0.8161 | 0.9758 | 0.9758 | 0.9578 | 0.9758 | 0.9037 | 0.9758 | 0.9758 | 0.9572 | 0.9572 | 0.9572 | 0.9572 | 0.839 | 0.9572 | 0.9572 |

| 20 | 0.9646 | 0.7477 | 0.9646 | 0.7477 | 0.7464 | 0.9646 | 0.7477 | 0.9963 | 0.9967 | 0.9963 | 0.9667 | 0.9665 | 0.9963 | 0.9667 | 0.9933 | 0.9412 | 0.9933 | 0.9412 | 0.9408 | 0.9933 | 0.9412 |

| Mean | 0.82323 | 0.81193 | 0.81624 | 0.81193 | 0.7676 | 0.83139 | 0.82185 | 0.979255 | 0.979385 | 0.977815 | 0.977885 | 0.968735 | 0.98043 | 0.97932 | 0.96293 | 0.96049 | 0.9619 | 0.96049 | 0.94519 | 0.96505 | 0.96301 |

Table 3: The performance measures of the different images in the DRIVE database in terms of precision. All values are precision values for the named algorithm.

| Image | ABC | PSO | DE | TLBO | GWO | FA | HS |

|---|---|---|---|---|---|---|---|

| 1 | 0.7354 | 0.7354 | 0.7354 | 0.7354 | 0.8257 | 0.7354 | 0.7354 |

| 2 | 0.7241 | 0.6826 | 0.7241 | 0.6826 | 0.7241 | 0.6826 | 0.6826 |

| 3 | 0.6842 | 0.7529 | 0.7529 | 0.7529 | 0.8642 | 0.7529 | 0.7529 |

| 4 | 0.5838 | 0.5838 | 0.5838 | 0.5838 | 0.8177 | 0.5838 | 0.5838 |

| 5 | 0.7465 | 0.7465 | 0.7465 | 0.7465 | 0.7465 | 0.7465 | 0.7465 |

| 6 | 0.7269 | 0.7269 | 0.7269 | 0.7269 | 0.6644 | 0.7269 | 0.7269 |

| 7 | 0.7066 | 0.7539 | 0.7539 | 0.7539 | 0.7988 | 0.7539 | 0.7539 |

| 8 | 0.8265 | 0.8265 | 0.8265 | 0.8265 | 0.6491 | 0.8265 | 0.8265 |

| 9 | 0.7479 | 0.7479 | 0.7479 | 0.7479 | 0.618 | 0.7479 | 0.6841 |

| 10 | 0.7121 | 0.7121 | 0.8178 | 0.7121 | 0.7121 | 0.7121 | 0.7121 |

| 11 | 0.6414 | 0.6414 | 0.6414 | 0.6414 | 0.7592 | 0.6414 | 0.6414 |

| 12 | 0.8271 | 0.8271 | 0.8271 | 0.8271 | 0.5045 | 0.8271 | 0.774 |

| 13 | 0.582 | 0.6335 | 0.6335 | 0.6335 | 0.4420 | 0.6335 | 0.6335 |

| 14 | 0.8275 | 0.8275 | 0.8275 | 0.8275 | 0.7332 | 0.8275 | 0.8275 |

| 15 | 0.7283 | 0.683 | 0.683 | 0.683 | 0.8159 | 0.683 | 0.683 |

| 16 | 0.6618 | 0.7089 | 0.7089 | 0.7089 | 0.8496 | 0.7089 | 0.7089 |

| 17 | 0.8125 | 0.8125 | 0.8125 | 0.8125 | 0.8125 | 0.7613 | 0.8125 |

| 18 | 0.7106 | 0.7106 | 0.7106 | 0.7106 | 0.8183 | 0.7106 | 0.7106 |

| 19 | 0.6825 | 0.6825 | 0.6825 | 0.6825 | 0.8852 | 0.6825 | 0.6825 |

| 20 | 0.7213 | 0.7827 | 0.7213 | 0.7827 | 0.5856 | 0.7213 | 0.7827 |

| Mean | 0.71945 | 0.72891 | 0.7332 | 0.72891 | 0.73133 | 0.72328 | 0.723065 |

Table 4: The performance measures of the different images in the STARE database in terms of sensitivity (Se), specificity (Sp) and accuracy (Acc).

| Image | Se ABC | Se PSO | Se DE | Se TLBO | Se GWO | Se FA | Se HS | Sp ABC | Sp PSO | Sp DE | Sp TLBO | Sp GWO | Sp FA | Sp HS | Acc ABC | Acc PSO | Acc DE | Acc TLBO | Acc GWO | Acc FA | Acc HS |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 0.7205 | 0.7205 | 0.7205 | 0.7205 | 0.9611 | 0.7498 | 0.7498 | 0.9702 | 0.9702 | 0.9702 | 0.9702 | 0.9968 | 0.9742 | 0.9742 | 0.9461 | 0.9461 | 0.9461 | 0.9461 | 0.9941 | 0.9533 | 0.9533 |

| 2 | 0.6876 | 0.6876 | 0.6876 | 0.6876 | 0.8461 | 0.6876 | 0.6876 | 0.9701 | 0.9701 | 0.9701 | 0.9701 | 0.9878 | 0.9701 | 0.9701 | 0.9454 | 0.9454 | 0.9454 | 0.9454 | 0.9774 | 0.9454 | 0.9454 |

| 3 | 0.6423 | 0.6423 | 0.6423 | 0.6423 | 0.9761 | 0.6423 | 0.6423 | 0.9568 | 0.9568 | 0.9568 | 0.9568 | 0.998 | 0.9568 | 0.9568 | 0.9229 | 0.9229 | 0.9229 | 0.9229 | 0.9963 | 0.9229 | 0.9229 |

| 4 | 0.3513 | 0.6634 | 0.3513 | 0.3513 | 0.6634 | 0.3513 | 0.3513 | 0.8508 | 0.954 | 0.8508 | 0.8508 | 0.954 | 0.8508 | 0.8508 | 0.7575 | 0.9191 | 0.7575 | 0.7575 | 0.9191 | 0.7575 | 0.7575 |

| 5 | 0.8407 | 0.8407 | 0.8407 | 0.8407 | 0.7572 | 0.8407 | 0.8407 | 0.9825 | 0.9825 | 0.9825 | 0.9825 | 0.9708 | 0.9825 | 0.9825 | 0.9685 | 0.9685 | 0.9685 | 0.9685 | 0.9479 | 0.9685 | 0.9685 |

| 6 | 0.8211 | 0.8211 | 0.8789 | 0.8211 | 0.7104 | 0.8221 | 0.8221 | 0.981 | 0.981 | 0.9879 | 0.981 | 0.9649 | 0.981 | 0.981 | 0.9656 | 0.9656 | 0.9779 | 0.9656 | 0.9374 | 0.9656 | 0.9656 |

| 7 | 0.9324 | 0.9945 | 0.9945 | 0.9945 | 0.7999 | 0.9945 | 0.9324 | 0.9937 | 0.9995 | 0.9995 | 0.9995 | 0.9785 | 0.9995 | 0.9937 | 0.9884 | 0.9991 | 0.9991 | 0.9991 | 0.9612 | 0.9991 | 0.9884 |

| 8 | 0.8955 | 0.8955 | 0.8955 | 0.8955 | 0.7947 | 0.8955 | 0.8955 | 0.9906 | 0.9906 | 0.9906 | 0.9906 | 0.9795 | 0.9906 | 0.9906 | 0.9828 | 0.9828 | 0.9828 | 0.9828 | 0.9627 | 0.9828 | 0.9828 |

| 9 | 0.9962 | 0.9281 | 0.9281 | 0.9281 | 0.9281 | 0.9281 | 0.9281 | 0.9997 | 0.9932 | 0.9932 | 0.9932 | 0.9932 | 0.9932 | 0.9932 | 0.9994 | 0.9876 | 0.9876 | 0.9876 | 0.9876 | 0.9876 | 0.9876 |

| 10 | 0.8143 | 0.8143 | 0.8143 | 0.8143 | 0.925 | 0.8143 | 0.8143 | 0.9796 | 0.9796 | 0.9796 | 0.9796 | 0.9927 | 0.9796 | 0.9796 | 0.9633 | 0.9633 | 0.9633 | 0.9633 | 0.9866 | 0.9633 | 0.9633 |

| 11 | 0.9377 | 0.9377 | 0.9377 | 0.9377 | 0.7654 | 0.9377 | 0.9377 | 0.995 | 0.995 | 0.995 | 0.995 | 0.9772 | 0.995 | 0.995 | 0.9907 | 0.9907 | 0.9907 | 0.9907 | 0.9584 | 0.9907 | 0.9907 |

| 12 | 0.8163 | 0.8163 | 0.7862 | 0.8163 | 0.8163 | 0.8163 | 0.8163 | 0.9785 | 0.9785 | 0.9742 | 0.9785 | 0.9785 | 0.9785 | 0.9785 | 0.9615 | 0.9615 | 0.9539 | 0.9615 | 0.9615 | 0.9615 | 0.9615 |

| 13 | 0.8813 | 0.8813 | 0.8813 | 0.8813 | 0.7034 | 0.8813 | 0.8813 | 0.9883 | 0.9883 | 0.9883 | 0.9883 | 0.9643 | 0.9883 | 0.9883 | 0.9787 | 0.9787 | 0.9787 | 0.9787 | 0.9362 | 0.9787 | 0.9787 |

| 14 | 0.9822 | 0.9822 | 0.8918 | 0.9822 | 0.7102 | 0.9822 | 0.8918 | 0.9986 | 0.9986 | 0.9905 | 0.9986 | 0.9687 | 0.9986 | 0.9905 | 0.9973 | 0.9973 | 0.9825 | 0.9973 | 0.9434 | 0.9973 | 0.9825 |

| 15 | 0.7968 | 0.7968 | 0.7968 | 0.7968 | 0.9393 | 0.7968 | 0.7968 | 0.9794 | 0.9794 | 0.9794 | 0.9794 | 0.9947 | 0.9794 | 0.9794 | 0.9625 | 0.9625 | 0.9625 | 0.9625 | 0.9902 | 0.9625 | 0.9625 |

| 16 | 0.9095 | 0.9905 | 0.9095 | 0.9095 | 0.9095 | 0.9095 | 0.9095 | 0.9913 | 0.9913 | 0.9913 | 0.9913 | 0.9913 | 0.9913 | 0.9913 | 0.9841 | 0.9841 | 0.9841 | 0.9841 | 0.9841 | 0.9841 | 0.9841 |

| 17 | 0.9612 | 0.9449 | 0.9449 | 0.9449 | 0.9612 | 0.9449 | 0.9449 | 0.9969 | 0.9955 | 0.9955 | 0.9955 | 0.9969 | 0.9955 | 0.9916 | 0.9942 | 0.9916 | 0.9916 | 0.9916 | 0.9942 | 0.9916 | 0.9916 |

| 18 | 0.7593 | 0.7593 | 0.7593 | 0.7593 | 0.7593 | 0.9547 | 0.7593 | 0.9748 | 0.9748 | 0.9748 | 0.9748 | 0.9748 | 0.9961 | 0.9748 | 0.9544 | 0.9544 | 0.9544 | 0.9544 | 0.9544 | 0.9929 | 0.9544 |

| 19 | 0.9055 | 0.9095 | 0.9095 | 0.9095 | 0.9055 | 0.9055 | 0.9055 | 0.9896 | 0.9896 | 0.9896 | 0.9896 | 0.9896 | 0.9896 | 0.9896 | 0.9813 | 0.9813 | 0.9813 | 0.9813 | 0.9813 | 0.9813 | 0.9813 |

| 20 | 0.8817 | 0.8817 | 0.7621 | 0.8817 | 0.6438 | 0.9014 | 0.9014 | 0.9869 | 0.9869 | 0.9701 | 0.9869 | 0.9482 | 0.9893 | 0.9893 | 0.9764 | 0.9764 | 0.9468 | 0.9764 | 0.9095 | 0.9807 | 0.9807 |

| Mean | 0.82667 | 0.84541 | 0.81664 | 0.825755 | 0.823795 | 0.837825 | 0.82043 | 0.977715 | 0.98277 | 0.976495 | 0.97761 | 0.98002 | 0.978995 | 0.97704 | 0.96105 | 0.968945 | 0.95888 | 0.960865 | 0.964175 | 0.963365 | 0.960165 |

Table 5: The performance measures of the different images in the STARE database in terms of precision. All values are precision values for the named algorithm.

| Image | ABC | PSO | DE | TLBO | GWO | FA | HS |

|---|---|---|---|---|---|---|---|

| 1 | 0.6887 | 0.6887 | 0.6887 | 0.6887 | 0.6524 | 0.6886 | 0.6887 |

| 2 | 0.7652 | 0.7652 | 0.7652 | 0.7652 | 0.7035 | 0.7651 | 0.7652 |

| 3 | 0.7087 | 0.7087 | 0.7087 | 0.7087 | 0.7087 | 0.7087 | 0.7087 |

| 4 | 0.7684 | 0.6234 | 0.7684 | 0.7684 | 0.7684 | 0.7684 | 0.7684 |

| 5 | 0.7834 | 0.7834 | 0.7834 | 0.7834 | 0.7169 | 0.7833 | 0.7834 |

| 6 | 0.6797 | 0.6797 | 0.6177 | 0.6797 | 0.6797 | 0.6796 | 0.6797 |

| 7 | 0.6771 | 0.7037 | 0.7037 | 0.7037 | 0.6771 | 0.7037 | 0.6771 |

| 8 | 0.743 | 0.743 | 0.743 | 0.743 | 0.7837 | 0.743 | 0.743 |

| 9 | 0.73 | 0.7014 | 0.7014 | 0.7014 | 0.73 | 0.7014 | 0.7014 |

| 10 | 0.6955 | 0.6955 | 0.6955 | 0.6955 | 0.5877 | 0.6955 | 0.6955 |

| 11 | 0.6905 | 0.6905 | 0.6905 | 0.6905 | 0.6662 | 0.6905 | 0.695 |

| 12 | 0.7122 | 0.7122 | 0.6821 | 0.7112 | 0.7122 | 0.7122 | 0.7112 |

| 13 | 0.6739 | 0.6739 | 0.6739 | 0.6739 | 0.6739 | 0.6739 | 0.6739 |

| 14 | 0.6927 | 0.7293 | 0.7293 | 0.6927 | 0.82 | 0.6927 | 0.7293 |

| 15 | 0.7362 | 0.7362 | 0.7362 | 0.7362 | 0.6891 | 0.7362 | 0.7362 |

| 16 | 0.7134 | 0.7134 | 0.7134 | 0.7134 | 0.7134 | 0.7134 | 0.7134 |

| 17 | 0.7224 | 0.6855 | 0.6855 | 0.6855 | 0.7224 | 0.6855 | 0.6855 |

| 18 | 0.6497 | 0.6497 | 0.6497 | 0.6497 | 0.6497 | 0.6313 | 0.6497 |

| 19 | 0.6641 | 0.6641 | 0.6641 | 0.6641 | 0.6641 | 0.6641 | 0.6641 |

| 20 | 0.7042 | 0.7042 | 0.7248 | 0.7042 | 0.7042 | 0.6904 | 0.6904 |

| Mean | 0.70995 | 0.702585 | 0.70626 | 0.707955 | 0.701165 | 0.706375 | 0.70799 |

In order to compare the performances of the algorithms, the mean values obtained are combined in Table 6 and Table 7. When the mean Se, Sp and Acc values obtained for the DRIVE database are compared, all the algorithms produce similar results, with FA giving a slightly better classification (Se 0.83139, Sp 0.98043, Acc 0.96505). In terms of precision for the DRIVE database, DE is only marginally better than the other algorithms (0.7332). For the retinal images taken from the STARE database, the PSO algorithm gives the best results in terms of Se, Sp and Acc. The highest mean precision for the STARE database in Table 7 is obtained by ABC (0.70995), with HS (0.70799) and TLBO (0.707955) very close behind. In general, the clustering performances of the algorithms are successful and very close to each other.
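The ranking of the mean values can be checked with a few lines of Python. The sketch below copies the DRIVE means reported in Table 6 and returns, for each measure, the algorithm with the highest mean; the dictionary layout and the helper name `best_per_metric` are illustrative assumptions rather than part of this work.

```python
# Mean values for the DRIVE database, copied from Table 6.
drive_means = {
    "ABC":  {"Se": 0.823237, "Sp": 0.979255, "Acc": 0.96293, "Precision": 0.71945},
    "PSO":  {"Se": 0.81193,  "Sp": 0.979385, "Acc": 0.96049, "Precision": 0.72891},
    "DE":   {"Se": 0.81624,  "Sp": 0.977815, "Acc": 0.9619,  "Precision": 0.7332},
    "TLBO": {"Se": 0.81193,  "Sp": 0.977885, "Acc": 0.96049, "Precision": 0.72891},
    "GWO":  {"Se": 0.7676,   "Sp": 0.968735, "Acc": 0.94519, "Precision": 0.73133},
    "FA":   {"Se": 0.83139,  "Sp": 0.98043,  "Acc": 0.96505, "Precision": 0.72328},
    "HS":   {"Se": 0.82185,  "Sp": 0.97932,  "Acc": 0.96301, "Precision": 0.723065},
}


def best_per_metric(table):
    """Return, for each metric, the algorithm with the highest mean value."""
    metric_names = list(next(iter(table.values())).keys())
    return {
        metric: max(table, key=lambda algorithm: table[algorithm][metric])
        for metric in metric_names
    }


best = best_per_metric(drive_means)
print(best)
```

For the Table 6 values the helper returns FA for Se, Sp and Acc, and DE for precision.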

Table 6: The mean statistical performances of the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms for the retinal images taken from the DRIVE database.

| Method | Sensitivity | Specificity | Accuracy | Precision |

|---|---|---|---|---|

| ABC | 0.823237 | 0.979255 | 0.96293 | 0.71945 |

| PSO | 0.81193 | 0.979385 | 0.96049 | 0.72891 |

| DE | 0.81624 | 0.977815 | 0.9619 | 0.7332 |

| TLBO | 0.81193 | 0.977885 | 0.96049 | 0.72891 |

| GWO | 0.7676 | 0.968735 | 0.94519 | 0.73133 |

| FA | 0.83139 | 0.98043 | 0.96505 | 0.72328 |

| HS | 0.82185 | 0.97932 | 0.96301 | 0.723065 |

Table 7: The mean statistical performances of the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms for the retinal images taken from the STARE database.

| Method | Sensitivity | Specificity | Accuracy | Precision |

|---|---|---|---|---|

| ABC | 0.82667 | 0.977715 | 0.96105 | 0.70995 |

| PSO | 0.84541 | 0.98277 | 0.968945 | 0.702585 |

| DE | 0.81664 | 0.976495 | 0.95888 | 0.70626 |

| TLBO | 0.825755 | 0.97761 | 0.960865 | 0.707955 |

| GWO | 0.823795 | 0.98002 | 0.964175 | 0.701165 |

| FA | 0.837825 | 0.978995 | 0.963365 | 0.706375 |

| HS | 0.82043 | 0.97704 | 0.960165 | 0.70799 |

A detailed statistical performance comparison has been made between the heuristic approaches analyzed in this work and the studies published in the literature. Table 8 and Table 9 contain the results obtained for the DRIVE and STARE databases, respectively. As seen from Table 8, the sensitivity of the heuristic algorithms is close to that of [33] and higher than that of the other approaches. For the STARE database, the sensitivity values produced by the heuristic algorithms are similar to those of [33] and [49] and higher than those of the other approaches. In other words, the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms considerably improve the sensitivity of the clustering process for both the DRIVE and STARE databases. Furthermore, the specificity of the heuristic algorithms is similar to that of the methods published in the literature for both databases. Finally, the accuracy values produced by the heuristic algorithms show that the pixel classification performance of the heuristic methods is higher than or similar to that of the other methods in the literature, with the exception of [33], which reports a higher accuracy on both databases.

Table 8: Performance comparison of the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms and other methods for the DRIVE database.

| Methods | Sensitivity | Specificity | Accuracy |

|---|---|---|---|

| You et al. [31] | 0.7410 | 0.9751 | 0.9434 |

| Roychowdhury et al. [32] | 0.725 | 0.983 | 0.952 |

| Wang et al. [33] | 0.8173 | 0.9733 | 0.9767 |

| Mendonca et al. [34] | 0.7344 | 0.9764 | 0.9452 |

| Azzopardi et al. [35] | 0.765 | 0.970 | 0.944 |

| Fraz et al. [36] | 0.7406 | 0.9807 | 0.9480 |

| Odstrcilik et al. [37] | 0.706 | 0.9693 | 0.934 |

| Marin et al. [38] | 0.7068 | 0.9801 | 0.9452 |

| Kaba et al. [39] | 0.7466 | 0.968 | 0.941 |

| Dash et al. [40] | 0.719 | 0.976 | 0.955 |

| Argüello et al. [41] | 0.7209 | 0.9758 | 0.9431 |

| Asad et al. [42] | 0.7388 | 0.9288 | 0.9028 |

| Zhao et al. [43] | 0.735 | 0.978 | 0.947 |

| Zhang et al. [44] | 0.7120 | 0.9724 | 0.9382 |

| Imani et al. [45] | 0.7524 | 0.9753 | 0.9523 |

| ABC (heuristic algorithm) | 0.823237 | 0.979255 | 0.96293 |

| PSO (heuristic algorithm) | 0.81193 | 0.979385 | 0.96049 |

| DE (heuristic algorithm) | 0.81624 | 0.977815 | 0.9619 |

| TLBO (heuristic algorithm) | 0.81193 | 0.977885 | 0.96049 |

| GWO (heuristic algorithm) | 0.7676 | 0.968735 | 0.94519 |

| FA (heuristic algorithm) | 0.83139 | 0.98043 | 0.96505 |

| HS (heuristic algorithm) | 0.82185 | 0.97932 | 0.96301 |

Table 9: Performance comparison of the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms and other methods for the STARE database.

| Methods | Sensitivity | Specificity | Accuracy |

|---|---|---|---|

| You et al. [31] | 0.726 | 0.976 | 0.950 |

| Roychowdhury et al. [32] | 0.772 | 0.973 | 0.952 |

| Wang et al. [33] | 0.8104 | 0.9791 | 0.9813 |

| Odstrcilik et al. [37] | 0.7847 | 0.9512 | 0.9341 |

| Kaba et al. [39] | 0.7619 | 0.967 | 0.9456 |

| Argüello et al. [41] | 0.7305 | 0.9688 | 0.9448 |

| Zhao et al. [43] | 0.719 | 0.977 | 0.951 |

| Zhang et al. [44] | 0.718 | 0.975 | 0.948 |

| Imani et al. [45] | 0.7502 | 0.9745 | 0.959 |

| Mendonca et al. [46] | 0.718 | 0.973 | 0.946 |

| Xiao et al. [47] | 0.715 | 0.974 | 0.948 |

| Fraz et al. [48] | 0.755 | 0.976 | 0.953 |

| Yin et al. [49] | 0.854 | 0.942 | 0.933 |

| Lázár et al. [50] | 0.725 | 0.975 | 0.949 |

| Strisciuglio et al. [51] | 0.801 | 0.972 | 0.954 |

| ABC (heuristic algorithm) | 0.82667 | 0.977715 | 0.96105 |

| PSO (heuristic algorithm) | 0.84541 | 0.98277 | 0.968945 |

| DE (heuristic algorithm) | 0.81664 | 0.976495 | 0.95888 |

| TLBO (heuristic algorithm) | 0.825755 | 0.97761 | 0.960865 |

| GWO (heuristic algorithm) | 0.823795 | 0.98002 | 0.964175 |

| FA (heuristic algorithm) | 0.837825 | 0.978995 | 0.963365 |

| HS (heuristic algorithm) | 0.82043 | 0.97704 | 0.960165 |

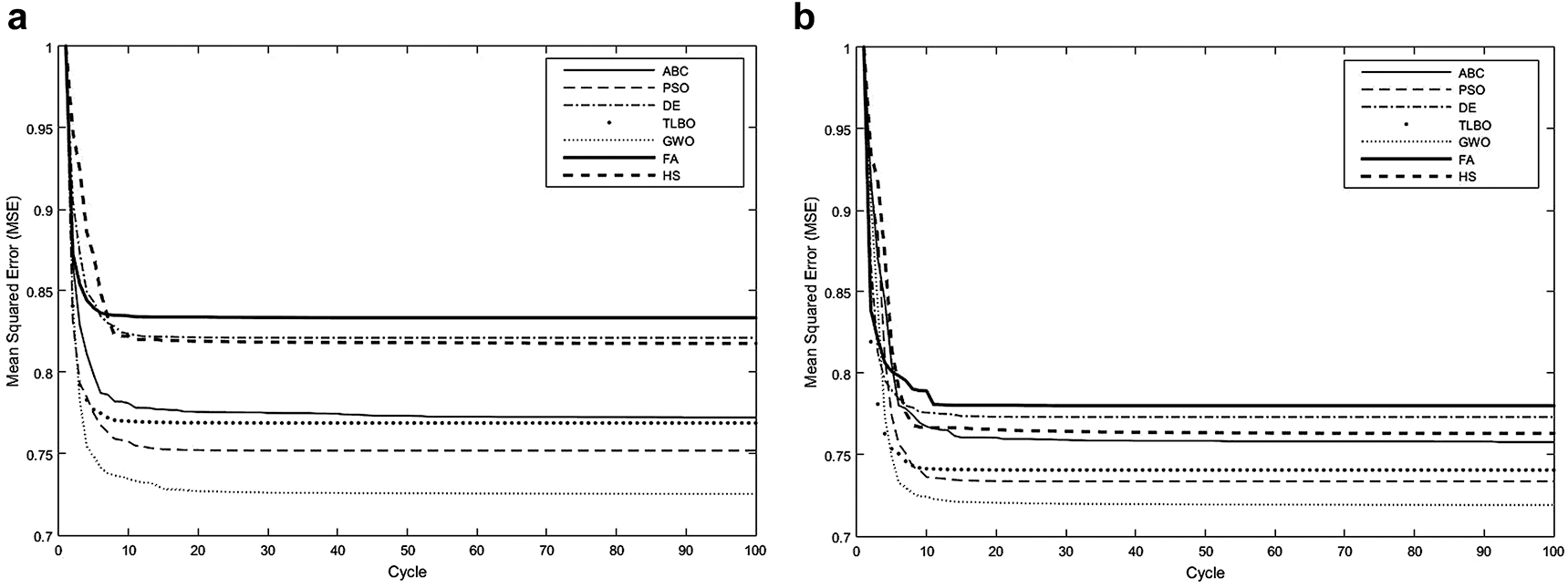

Figure 7 shows the convergence speeds of the algorithms for the retinal images given in Figure 1 (b) and Figure 2 (a). For a fair and detailed comparison, the convergence speeds are given for one retinal image from the DRIVE database and one from the STARE database, covering both a normal and an abnormal case. The evolution of the best solutions has been obtained based on MSE. As seen from Figure 7 (a), which shows the convergence speeds obtained for the normal retinal image taken from the DRIVE database, each algorithm needs approximately 10 cycles to converge to the optimal solution, and the MSE performance of the GWO algorithm is slightly better than that of the other algorithms. Figure 7 (b) shows the convergence speeds of the algorithms for the abnormal retinal image taken from the STARE database. Here each algorithm reaches the global solution at about 15 cycles, and the MSE performance of GWO is again slightly better than that of the other algorithms. According to the simulation results, the convergence speeds and MSE performances of the algorithms in terms of clustering are close to each other.
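One simple way to read a convergence cycle off a best-so-far MSE curve is to take the first cycle whose value is already within a small tolerance of the final value. The sketch below implements this criterion in Python; the criterion itself, the function name `convergence_cycle`, the tolerance and the example curve are all assumptions for illustration and are not the procedure or data used to produce Figure 7.

```python
def convergence_cycle(best_mse_history, tolerance=1e-6):
    """Return the 1-based cycle at which the best-so-far MSE has converged.

    `best_mse_history` holds the best MSE found up to each cycle, so it is
    expected to be non-increasing. Convergence is declared (by assumption)
    at the first cycle whose value lies within `tolerance` of the final one.
    """
    if not best_mse_history:
        raise ValueError("the MSE history must contain at least one cycle")
    final_value = best_mse_history[-1]
    for cycle, value in enumerate(best_mse_history, start=1):
        if value - final_value <= tolerance:
            return cycle


# Hypothetical best-so-far curve (illustrative numbers, not measured results).
history = [1.20, 0.95, 0.80, 0.74, 0.7253, 0.7253, 0.7253]
cycle = convergence_cycle(history)
print(cycle)
```

For this made-up curve the function reports cycle 5, the first cycle at which the value equals the final one.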

Another important performance criterion for heuristic algorithms is the standard deviation, which indicates the stability of the algorithm. A low standard deviation indicates that the algorithm reaches similar error values at each random run. Table 10 and Table 11 contain the standard deviations obtained after 20 random runs for the retinal images taken from the DRIVE and STARE databases. In addition to the standard deviation, the minimum MSE values reached by the algorithms are shown in the tables. When the minimum MSE values reached by the algorithms are examined, all the algorithms produce similar MSE values; in other words, their clustering performances are very close to each other. On the other hand, owing to their lower standard deviation values for the image taken from the DRIVE database, the PSO and HS algorithms appear slightly more stable in terms of clustering than the other algorithms. Similarly, the PSO, TLBO and HS algorithms appear slightly more stable than the other algorithms for the image taken from the STARE database.
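The two quantities reported per algorithm can be computed from the final MSE of each run as sketched below. The sample standard deviation (`statistics.stdev`) is used here as an assumption, since the text does not state whether the sample or the population form was applied; the function name `run_statistics` and the run values are hypothetical.

```python
import statistics


def run_statistics(final_mse_values):
    """Summarize repeated runs by their minimum MSE and standard deviation."""
    if len(final_mse_values) < 2:
        raise ValueError("at least two runs are needed for a standard deviation")
    return {
        "min_mse": min(final_mse_values),
        # Sample standard deviation; an assumed choice, see the text above.
        "std": statistics.stdev(final_mse_values),
    }


# Hypothetical final MSE values from five independent runs (not from the paper).
stable_runs = [0.7520, 0.7520, 0.7521, 0.7520, 0.7520]
scattered_runs = [0.7520, 0.7610, 0.7455, 0.7702, 0.7533]

stable = run_statistics(stable_runs)
scattered = run_statistics(scattered_runs)
print(stable)
print(scattered)
```

An algorithm whose runs resemble `stable_runs` would be called more stable than one whose runs resemble `scattered_runs`, even if the minimum MSE values were close.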

Table 10: Performance comparison of the heuristic algorithms for the DRIVE database.

| Algorithm | Minimum MSE | Standard deviation |

|---|---|---|

| ABC | 0.7722 | 7.18762e-05 |

| PSO | 0.752 | 2.58125e-08 |

| DE | 0.8212 | 2.15721e-05 |

| TLBO | 0.7689 | 5.46974e-05 |

| GWO | 0.7253 | 5.45881e-06 |

| FA | 0.8334 | 4.93834e-05 |

| HS | 0.8123 | 1.94538e-08 |

Table 11: Performance comparison of the heuristic algorithms for the STARE database.

| Algorithm | Minimum MSE | Standard deviation |

|---|---|---|

| ABC | 0.7578 | 6.76833e-05 |

| PSO | 0.7337 | 3.06854e-08 |

| DE | 0.7732 | 3.41578e-05 |

| TLBO | 0.74 | 2.9575e-08 |

| GWO | 0.719 | 9.51344e-06 |

| FA | 0.7823 | 4.87728e-05 |

| HS | 0.764 | 5.53854e-07 |

Discussion

In retinal image analysis it is important to develop algorithms that are flexible and capable of accurate vessel segmentation. The results obtained in this work show that the clustering based heuristic algorithms provide satisfactory performance in retinal vessel segmentation.

In this work, the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms, which are among the most commonly used algorithms in the literature for engineering problems, are applied to retinal vessel segmentation. The analyses have been carried out for both normal and abnormal retinal images taken from the DRIVE and STARE databases. The simulation results show that all the algorithms successfully performed the retinal vessel segmentation. They produced similar MSE values and were able to converge to the global solutions. The standard deviation values reached by the algorithms show that each algorithm behaves stably. In addition, the statistical results obtained for sensitivity, specificity, accuracy and precision show that pixels with values very close to each other can be successfully distinguished by the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms.

Conventional gradient based algorithms often get stuck in local minima, and their strong dependence on parameter values is another disadvantage. Their inflexible structures also reduce their optimization performance. Because of these disadvantages of conventional gradient based algorithms, more effective methods are needed for image analysis. In this work, it has been shown that heuristic approaches can be used effectively in image analysis.

Conclusion

In this work, novel approaches based on the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms are described for clustering based retinal vessel segmentation in fundus fluorescein angiography retinal images. The simulation results show that the algorithms converge to the global solutions in a similar number of cycles and that the final MSE values reached by the algorithms are very close to each other. The statistical analyses based on sensitivity, specificity, accuracy and precision show that the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms can successfully be used in the analysis of retinal images. Moreover, since the standard deviation values of the PSO, TLBO and HS algorithms are similar to each other and somewhat lower than those of the ABC, DE, GWO and FA algorithms, it can be concluded that the PSO, TLBO and HS algorithms are slightly more stable in terms of clustering based retinal vessel segmentation. Overall, the simulation results show that the clustering performances of the ABC, PSO, DE, TLBO, GWO, FA and HS algorithms are very similar and that they can successfully be used for retinal vessel segmentation.

Research funding: No financial support was received from any person or organization in this work.

Author contributions: All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

Competing interests: The authors do not have financial and personal relationships with other people or organizations that could inappropriately influence their work.

References

1. Fong, DS, Aiello, L, Gardner, TW. Retinopathy in diabetes. Diabetes Care 2004;27:84–7. https://doi.org/10.2337/diacare.27.2007.s84.Search in Google Scholar

2. Fraz, MM, Remagnino, P, Hoppe, A, Uyyanonvara, B, Rudnicka, AR, Owen, CG, et al.. Blood vessel segmentation methodologies in retinal images-a survey. Comput Methods Progr Biomed 2012;108:407–33. https://doi.org/10.1016/j.cmpb.2012.03.009.Search in Google Scholar

3. Wang, JJ, Taylor, B, Wong, TY, Chua, B, Rochtchina, E, Klein, R, et al.. Retinal vessel diameters and obesity: a population-based study in older persons. Obesity 2006;14:206–14. https://doi.org/10.1038/oby.2006.27.Search in Google Scholar

4. Foracchia, M, Grisan, E, Ruggeri, A. Extraction and quantitative description of vessel features in hypertensive retinopathy fundus images. In: 2th Int workshop on comput assisted fundus image anal (CAFIA-2). Copenhagen, Denmark; 2001:5–7 pp.Search in Google Scholar

5. Mitchell, P, Leung, H, Wang, JJ, Rochtchina, E, Lee, AJ, Wong, TY, et al.. Retinal vessel diameter and open-angle glaucoma: the blue mountains eye study. Ophthalmology 2005;112:245–50. https://doi.org/10.1016/j.ophtha.2004.08.015.Search in Google Scholar

6. Goatman, K, Charnley, A, Webster, L, Nussey, S. Assessment of automated disease detection in diabetic retinopathy screening using two-field photography. PloS One 2011;6:275–84. https://doi.org/10.1371/journal.pone.0027524.Search in Google Scholar

7. Asad, AH, Elamry, E, Hassanien, AE, Tolba, MF. New global update mechanism of ant colony system for retinal vessel segmentation. In: 13th IEEE Int conf hybrid intell syst (HIS13) Tunisia; 2013:222–8 pp.10.1109/HIS.2013.6920486Search in Google Scholar

8. Verma, K, Deep, P, Ramakrishnan, AG. Detection and classification of diabetic retinopathy using retinal images flight. In: IEEE annu India conf (INDICON). Hyderabad, India; 2011:1–6 pp.10.1109/INDCON.2011.6139346Search in Google Scholar

9. Heneghan, C, Flynn, J, OKeefe, M, Cahill, M. Characterization of changes in blood vessel width and tortuosity in retinopathy of prematurity using image analysis. Med Image Anal 2002;6:407–29. https://doi.org/10.1016/s1361-8415(02)00058-0.Search in Google Scholar

10. Lowell, J, Hunter, A, Steel, D, Basu, A, Ryder, R, Kennedy, RL. Measurement of retinal vessel widths from fundus images based on 2D modeling. IEEE Trans Med Imag 2004;23:1196–204. https://doi.org/10.1109/tmi.2004.830524.Search in Google Scholar

11. Haddouche, A, Adel, M, Rasigni, M, Conrath, J, Bourennane, S. Detection of the foveal avascular zone on retinal angiograms using Markov random fields. Digit Signal Process 2010;20:149–54. https://doi.org/10.1016/j.dsp.2009.06.005.Search in Google Scholar

12. Grisan, E, Ruggeri, A. A divide etimpera strategy for automatic classification of retinal vessels into arteries and veins. Engineering in medicine and biology society. In: 25th IEEE annu Int conf. Cancun, Mexico; 2003:890–93 pp.Search in Google Scholar

13. Kanski, JJ. Clinical ophthalmology, 6th ed. London: Elsevier Health Sciences; 2007.Search in Google Scholar

14. Archer, DB. Diabetic retinopathy: some cellular. molecular and therapeutic considerations. Eye 1999;13:497–523. https://doi.org/10.1038/eye.1999.130.Search in Google Scholar

15. Antal, B, Hajdu, A. An ensemble-based system for microaneurysm detection and diabetic retinopathy grading. IEEE Trans Biomed Eng 2012;59:1720–6. https://doi.org/10.1109/tbme.2012.2193126.Search in Google Scholar

16. Abramoff, MD, Folk, JC, Han, DP. Automated analysis of retinal images for detection of referable diabetic retinopathy. JAMA Ophthalmol 2013;131:351–7. https://doi.org/10.1001/jamaophthalmol.2013.1743.Search in Google Scholar

17. Foracchia, M, Grisan, E, Ruggeri, A. Detection of optic disc in retinal images by means of a geometrical model of vessel structure. IEEE Trans Med Imag 2004;23:1189–95. https://doi.org/10.1109/tmi.2004.829331.Search in Google Scholar

18. Engelbrecht, AP. Fundamentals of computational swarm intelligence. Chichester, UK: John Wiley & Sons Publication; 2005.Search in Google Scholar

19. Karaboga, D. An idea based on honey bee swarm for numerical optimization. Technical Report-TR06. Kayseri, Turkey: Erciyes University, Engineering Faculty, Computer Engineering Department; 2005.Search in Google Scholar

20. Eberhart, RC, Kennedy, J. A new optimizer using particle swarm theory. In: 6th IEEE Int symp micromachine hum sci. Nagoya, Japan; 1995:39–43 pp.10.1109/MHS.1995.494215Search in Google Scholar

21. Storn, R, Price, K. Differential evolution- a simple and efficient adaptive scheme for global optimization over continuous spaces. Technical Report TR-95-012. Berkeley, California USA; 1995.Search in Google Scholar

22. Rao, RV, Patel, V. An elitist teaching-learning-based optimization algorithm for solving complex constrained optimization problems. Int J Ind Syst Eng Comput 2012;3:535–60. https://doi.org/10.5267/j.ijiec.2012.03.007.Search in Google Scholar

23. Mirjalili, SM, Lewis, A. Grey wolf optimizer. Adv Eng Software 2014;69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007.Search in Google Scholar

24. Song, X, Tang, L, Zhao, S, Zhang, X, Li, L, Huang, J, et al.. Grey wolf optimizer for parameter estimation in surface waves. Soil Dynam Earthq Eng 2015;75:147–57. https://doi.org/10.1016/j.soildyn.2015.04.004.Search in Google Scholar

25. Mirjalili, S. How effective is the grey wolf optimizer in training multi-layer perceptrons. Appl Intell 2015;43:150–61. https://doi.org/10.1007/s10489-014-0645-7.Search in Google Scholar

26. Yang, XS. Firefly algorithms for multimodal optimization in stochastic algorithms: foundations and applications. Lect Notes Eng Comput Sci 2009;5792:169–78. https://doi.org/10.1007/978-3-642-04944-6_14.Search in Google Scholar

27. Lee, KS, Geem, ZW. A new meta-heuristic algorithm for continuous engineering optimization: harmony search theory and practice. Comput Methods Appl Mech Eng 2005;194:3902–33. https://doi.org/10.1016/j.cma.2004.09.007.Search in Google Scholar

28. Mahdavi, M, Fesanghary, M, Damangir, E. An improved harmony search algorithm for solving optimization problems. Appl Math Comput 2007;188:1567–79. https://doi.org/10.1016/j.amc.2006.11.033.Search in Google Scholar

29. Hoover, A, Kouznetsova, V, Goldbaum, M. Locating blood vessels in retinal images by piece wise threshold probing of a matched filter response. IEEE Trans Med Imag 2000;19:203–10. https://doi.org/10.1109/42.845178.Search in Google Scholar

30. Alonso-Montes, C, Vilariño, DL, Dudek, P, Penedo, MG. Fast retinal vessel tree extraction: a pixel parallel approach. Int J Circ Theor Appl 2008;36:641–51. https://doi.org/10.1002/cta.512.Search in Google Scholar

31. You, X, Peng, Q, Yaun, Y, Cheng, Y, Lei, J. Segmentation of retinal blood vessels using the radial projection and semi-supervised approach. Pattern Recogn 2011;44:2314–24. https://doi.org/10.1016/j.patcog.2011.01.007.Search in Google Scholar

32. Roychowdhury, S, Koozekanani, DD, Parhi, KK. Blood vessel segmentation of fundus images by major vessel extraction and subimage classification. IEEE J Biomed Health Inf 2015;19:1118–28.10.1109/JBHI.2014.2335617Search in Google Scholar

33. Wang, S, Yin, Y, Cao, G, Wei, B, Zheng, Y, Yang, G. Hierarchical retinal blood vessel segmentation based on feature and ensemble learning. Neurocomputing 2015;149:708–17. https://doi.org/10.1016/j.neucom.2014.07.059.Search in Google Scholar

34. Mendonca, AM, Campilho, A. Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Trans Med Imag 2006;25:1200–13. https://doi.org/10.1109/tmi.2006.879955.

35. Azzopardi, G, Strisciuglio, N, Vento, M, Petkov, N. Trainable COSFIRE filters for vessel delineation with application to retinal images. Med Image Anal 2015;19:46–57. https://doi.org/10.1016/j.media.2014.08.002.

36. Fraz, MM, Remagnino, P, Hoppe, A, Uyyanonvara, B, Rudnicka, AR, Owen, CG, et al. An ensemble classification-based approach applied to retinal blood vessel segmentation. IEEE Trans Biomed Eng 2012;59:2538–48. https://doi.org/10.1109/tbme.2012.2205687.

37. Odstrcilik, J, Kolar, R, Budai, A, Hornegger, J, Jan, J, Gazarek, J, et al. Retinal vessel segmentation by improved matched filtering: evaluation on a new high-resolution fundus image database. IET Image Process 2013;7:373–83. https://doi.org/10.1049/iet-ipr.2012.0455.

38. Marín, D, Aquino, A, Gegúndez-Arias, ME, Bravo, JM. A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans Med Imag 2011;30:146–58. https://doi.org/10.1109/tmi.2010.2064333.

39. Kaba, D, Wang, C, Li, Y, Salazar-Gonzalez, A, Liu, X, Serag, A. Retinal blood vessels extraction using probabilistic modelling. Health Inf Sci Syst 2014;27:2. https://doi.org/10.1186/2047-2501-2-2.

40. Dash, J, Bhoi, N. A thresholding based technique to extract retinal blood vessels from fundus images. Future Comp Inf J 2017;2:103–9. https://doi.org/10.1016/j.fcij.2017.10.001.

41. Argüello, F, Vilariño, DL, Heras, DB, Nieto, A. GPU-based segmentation of retinal blood vessels. J Real-Time Image Process 2014;14:1–10. https://doi.org/10.1007/s11554-014-0469-z.

42. Asad, AH, Azar, AT, Hassanien, AE. Ant colony-based system for retinal blood vessels segmentation. In: 7th Int conf bio-inspired comput theor and appl (BIC-TA 2012). Gwalior, India; 2012:441–52 pp. https://doi.org/10.1007/978-81-322-1038-2_37.

43. Zhao, YQ, Wang, XH, Wang, XF, Shih, FY. Retinal vessels segmentation based on level set and region growing. Pattern Recogn 2014;47:2437–46. https://doi.org/10.1016/j.patcog.2014.01.006.

44. Zhang, B, Zhang, L, Karray, F. Retinal vessel extraction by matched filter with first-order derivative of Gaussian. Comput Biol Med 2010;40:438–45. https://doi.org/10.1016/j.compbiomed.2010.02.008.

45. Imani, E, Javidi, M, Pourreza, HR. Improvement of retinal blood vessel detection using morphological component analysis. Comput Methods Progr Biomed 2015;118:263–79. https://doi.org/10.1016/j.cmpb.2015.01.004.

46. Mendonca, A, Dashtbozorg, B, Campilho, A. Segmentation of the vascular network of the retina. Image Anal Model Ophthalmol 2014:85–109. https://doi.org/10.1201/b16510-6.

47. Xiao, Z, Adel, M, Bourennane, S. Bayesian method with spatial constraint for retinal vessel segmentation. Comput Math Methods Med 2013. https://doi.org/10.1109/icassp.2013.6637899.

48. Fraz, MM, Remagnino, P, Hoppe, A, Uyyanonvara, B, Rudnicka, AR, Owen, CG, et al. An ensemble classification-based approach applied to retinal blood vessel segmentation. IEEE Trans Biomed Eng 2012;59:2538–48. https://doi.org/10.1109/tbme.2012.2205687.

49. Yin, B, Li, H, Sheng, B, Hou, X, Chen, Y, Wu, W, et al. Vessel extraction from non-fluorescein fundus images using orientation-aware detector. Med Image Anal 2015;26:232–42. https://doi.org/10.1016/j.media.2015.09.002.

50. Lázár, I, Hajdu, A. Segmentation of retinal vessels by means of directional response vector similarity and region growing. Comput Biol Med 2015;66:209–21. https://doi.org/10.1016/j.compbiomed.2015.09.008.

51. Strisciuglio, N, Azzopardi, G, Vento, M, Petkov, N. Multiscale blood vessel delineation using B-COSFIRE filters. In: Springer Int conf comput anal images and patterns. Valletta, Malta; 2015:300–12 pp. https://doi.org/10.1007/978-3-319-23117-4_26.

© 2020 Mehmet Bahadır Çetinkaya and Hakan Duran, published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.

Articles in the same Issue

- Frontmatter

- Research Articles

- Nonlinear analysis of scalp EEGs from normal and brain tumour subjects

- Dimensionality reduction for EEG-based sleep stage detection: comparison of autoencoders, principal component analysis and factor analysis

- EEG signal classification based on SVM with improved squirrel search algorithm

- The effect of attentional focusing strategies on EMG-based classification

- Identification of dental pain sensation based on cardiorespiratory signals

- ScatT-LOOP: scattering tetrolet-LOOP descriptor and optimized NN for iris recognition at-a-distance

- A detailed and comparative work for retinal vessel segmentation based on the most effective heuristic approaches

- Visual enhancement of brain cancer MRI using multiscale dyadic filter and Hilbert transformation

- Raspberry Pi implemented with MATLAB simulation and communication of physiological signal-based fast chaff point (RPSC) generation algorithm for WBAN systems

- Short Communication

- How yarn orientation limits fibrotic tissue ingrowth in a woven polyester heart valve scaffold: a case report
