Abstract

Among the approaches to three-dimensional (3D) single molecule localization microscopy, several use point spread function (PSF) engineering, in which the depth information of molecules is encoded in 2D images. Usually, the molecules are excited sparsely in each raw image, so temporal resolution has to be sacrificed. To improve temporal resolution while maintaining localization accuracy, we propose a method, SH-CS, which combines light needle excitation, a detection system with a single helix point spread function (SH-PSF), and compressed sensing (CS). Although SH-CS is still limited in the molecule density it can handle, it is suited to relatively dense molecules. For each light needle scanning position, the SH image of the excited molecules is processed with a CS algorithm to decode their axial information. Simulations demonstrated that, for 1–15 randomly distributed molecules in a depth range of 4 μm, the axial localization accuracy is 12.1–73.5 nm. The feasibility of this method is validated with a designed 3D sample composed of fluorescent beads.

1 Introduction

In recent years, several approaches have been put forward to break the diffraction limit of resolution. Some of them take advantage of fluorophore blinking, such as super-resolution optical fluctuation imaging (SOFI) [1], [2] and single molecule localization microscopy (SMLM) [3], [4]. As one of the most common of these methods, 2D SMLM offers outstanding spatial resolution, usually better than 20 nm. To study 3D samples, 3D SMLM was developed by introducing techniques with axial localization ability into SMLM, including astigmatic localization [5], PSF engineering [6], [7], bifocal plane detection [8], and fluorescence interferometry [9]. However, because all SMLM approaches rely on the premise that the excited molecules in each raw image are sparse, they depend heavily on the photoswitching characteristics of fluorescent dyes [10]. Furthermore, the temporal resolution of these methods is quite limited, because many sparse raw images must be accumulated.

In contrast to SMLM, where molecules are excited sparsely by taking advantage of photoswitchable fluorescent dyes, another strategy for reducing the density of effectively excited molecules is to reduce the full width at half maximum (FWHM) of the effective excitation PSF. A reduced FWHM also means better spatial resolution. STED is a representative method of this second strategy. By overlapping a donut-shaped depletion beam on a Gaussian-shaped excitation beam, excited fluorescent molecules outside the central region return to the ground state through stimulated emission with a certain probability, thus reducing the effective fluorescence emission PSF. Because a higher depletion intensity yields a smaller effective PSF, the lateral resolution of STED can even reach several nanometers [11], [12]. To maintain good lateral resolution at a large imaging depth, Gauss–Bessel STED (GB-STED) microscopy was subsequently proposed, in which a Gaussian beam and a higher-order Bessel beam are used as the excitation and depletion beams, respectively [13]. However, because GB-STED adopts confocal detection, time-consuming 3D scanning is required to obtain the 3D information of samples. Researchers then proposed that both the excitation beam and the depletion beam could be nondiffracting Bessel beams (BB-STED), so that samples can be excited with a light needle whose FWHM can be tens of nanometers and whose depth of field can be several microns [14], [15]. Although BB-STED effectively reduces the lateral density of excited molecules, the axial density remains the same. If the axial information of the excited molecules in the light needle can be recovered, volumetric images of the sample can be obtained with only 2D scanning. To achieve this, a method is needed that can simultaneously localize axially dense molecules within a light needle.

In fact, a variety of algorithms exist for localizing dense molecules, and they mainly fall into two categories. Algorithms in the first category were developed from sparse molecule localization algorithms; representative examples are DAOSTORM (Dominion Astrophysical Observatory Stochastic Optical Reconstruction Microscopy) [16] and SSM_BIC (Structured Sparse Model_Bayesian Information Criterion) [17]. Algorithms in the second category estimate the molecular density distribution with the maximum probability; representative examples are compressed sensing (CS) [18], [19] and 3B (Bayesian analysis of Blinking and Bleaching) [20]. As the most representative algorithm of the first category, DAOSTORM achieved a lateral localization accuracy of 20 nm when the lateral projection density was below 1 μm−2; however, when the density exceeded 1 μm−2, the localization accuracy decreased significantly. SSM_BIC uses a structured sparse model to estimate the initial multimolecule model and then selects the optimal fitting model using the Bayesian information criterion. It can therefore achieve high localization accuracy and recall rate at a low signal-to-noise ratio (SNR), but it executes very slowly. Compared with the first category, algorithms in the second category can handle higher molecule densities. For example, when CS was introduced into STORM, the lateral localization accuracy still reached 10–60 nm for images with lateral densities up to 12 μm−2. CS has also been used to localize dense molecules in three dimensions [21], [22]. Later, Anthony Barsic proposed using a double-helix PSF (DH-PSF) combined with the matching pursuit (MP) algorithm for rough localization, followed by a convex optimization (CO) algorithm for accurate localization. The density limit of this method reached 1.5 μm−2 with an axial localization accuracy of ∼75 nm, but the depth of field of the system was only 2 μm, and the computation took a long time because of the complicated procedure.

Therefore, we propose a method, abbreviated as SH-CS, which combines SH-PSF and CS to localize axially dense molecules under light needle excitation. The double helix PSF (DH-PSF) and SH-PSF are similar 3D localization methods [23], [24]. As mentioned above, there are several axial localization techniques; among them, a spiral PSF is preferable here because it maps emitters at different depths to spots at different azimuths. DH-PSF and SH-PSF are both spiral PSFs with this property. SH-PSF is used here instead of DH-PSF because its energy is more concentrated, its SNR is better, and its depth of field is greater. After axially dense molecules are imaged with a detection system with SH-PSF, the highly overlapping spots in the image are localized using CS. Simulation results demonstrated that for up to 15 emitters in a depth range of 4 μm, the axial localization accuracy is 12.1–73.5 nm. In combination with scanning by a 50 nm light needle, the 3D structure of the sample is well reconstructed with 3D super-resolution. The performance of SH-CS is also validated with a designed 3D sample composed of fluorescent beads.

2 Methods

The principle of SH-CS is shown in Figure 1. Under light needle excitation, the excited emitters at different depths are detected by a detection system with SH-PSF, and their depth information is reconstructed using a CS algorithm.

The principle of SH-CS.

A nondiffracting light nanoneedle can be realized by BB-STED, in which concentric 0th-order and 1st-order Bessel beams are used as the excitation and depletion beams, respectively [14], [15]. By adjusting their powers, nondiffracting light nanoneedle excitation of the sample can be realized. The 3D distribution of fluorescence emission is

The lateral coordinates of the emitters in the reconstruction result are taken as the center of the light needle, and their axial coordinates are recovered by the CS algorithm. The strategy of using CS in SH-CS is similar to that in the CS-STORM method [18]. The camera image is transformed into a one-dimensional vector, b, by concatenating its rows. Mathematically, each measured camera frame b is linearly related to the axial distribution of the emitters, a, as

$$\mathbf{b} = \mathbf{A}\mathbf{a}, \tag{1}$$

where A is the measurement matrix, which can be built from the 3D PSF of the system.

The construction of the measurement matrix A is shown in Figure 2. First, the effective axial range is divided equally into s layers (Figure 2a). Next, as shown in Figure 2b, the matrix M_i (m × m), which is the SH-PSF image at the depth of the i-th layer, is transformed into the i-th column of A by concatenating the columns of M_i end to end. The result is a measurement matrix A with m² rows and s columns. The optimal solution a of Equation (1) is obtained under the constraint condition.

where ε is an empirical value, typically about 0.22–0.3. The solution vector a is the depth distribution reconstructed from the acquired image b.
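The two steps above, building A and recovering a, can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the function names are hypothetical and the PSF stack is assumed to be an array of shape (s, m, m). Instead of the constrained formulation with ε used in the text, the sketch swaps in the penalized (LASSO-type) form with a nonnegativity constraint, solved by projected ISTA; its weight lam plays a role similar to ε, but the two are not interchangeable. The image b and every column of A must be flattened in the same pixel order; the sketch uses column-major order (columns concatenated end to end) for both.

```python
import numpy as np

def build_measurement_matrix(psf_stack):
    """Turn a PSF stack of shape (s, m, m) into an (m*m) x s measurement matrix.

    Column i is the SH-PSF image of depth layer i, flattened column by column
    (order="F"), i.e. the columns of M_i are concatenated end to end.
    """
    stack = np.asarray(psf_stack, dtype=float)
    s, m, m2 = stack.shape
    if m != m2:
        raise ValueError("each PSF slice must be square (m x m)")
    return np.stack([stack[i].flatten(order="F") for i in range(s)], axis=1)

def vectorize_image(image):
    """Flatten a camera frame in the same pixel order as the columns of A."""
    return np.asarray(image, dtype=float).flatten(order="F")

def nonneg_ista(A, b, lam, n_iter=2000):
    """Approximately solve min_a 0.5*||A a - b||^2 + lam*||a||_1 with a >= 0.

    Projected ISTA: a gradient step on the quadratic term, then the proximal
    step of lam*||a||_1 restricted to a >= 0, which is max(a - t*lam, 0).
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    # Step size 1/L, where L (largest singular value squared) bounds the gradient's Lipschitz constant
    t = 1.0 / np.linalg.norm(A, 2) ** 2
    a = np.zeros(A.shape[1])
    for _ in range(n_iter):
        a = np.maximum(a - t * (A.T @ (A @ a - b)) - t * lam, 0.0)
    return a
```

With s = 201 layers and a 20 × 20 pixel image, A has 400 rows and 201 columns, so the problem is small enough for a simple first-order solver like this one.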

Schematic of CS in SH-CS. (a) The principle of axial division of measurement matrix A in SH-CS; (b) the different columns of measurement matrix A are constructed from SH-PSF for different depths.

The final 3D structure is obtained by scanning the light needle across the xoy plane.
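The scan-and-assign step can be outlined as below. This is a hypothetical sketch, not the authors' code: raw images are assumed to be stored as an array of shape (ny, nx, m, m), one SH image per needle position, and solve_axial stands for any per-image axial solver that returns a length-s axial profile. Each profile is placed at the lateral grid position of the needle center.

```python
import numpy as np

def assemble_volume(raw_images, solve_axial):
    """Build a (ny, nx, s) volume from a 2D light-needle scan.

    raw_images: array of shape (ny, nx, m, m), one SH image per needle position.
    solve_axial: hypothetical callable mapping an m x m image to a length-s profile.
    """
    raw_images = np.asarray(raw_images, dtype=float)
    ny, nx = raw_images.shape[:2]
    # The recovered axial profile is assigned to the needle centre (iy, ix).
    profiles = [[np.asarray(solve_axial(raw_images[iy, ix]), dtype=float)
                 for ix in range(nx)]
                for iy in range(ny)]
    return np.asarray(profiles)
```

Because each needle position is processed independently, the per-position solves could in principle be distributed across processes.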

3 Simulation

First, the axial localization capability of SH-CS is tested through simulation. The simulated detection optical path is shown in Figure 3a. The fluorescence signal (wavelength 560 nm) from the excited emitters is collected by an objective lens (NA 1.4, 100×), imaged by a tube lens (focal length 180 mm) onto the first image plane, and then passes through a 4f relay system. The PSF of the detection system is modulated into an SH-PSF by placing a specially designed phase pattern at the Fourier plane of the relay. The phase pattern is designed according to [23]. The modulated PSF of the system is shown in Figure 3c: emitters at different axial positions produce spots centered at different azimuths in the 2D image plane.

Setup and PSFs of SH-CS. (a) Setup of the detection optical path; (b) the relationship between azimuth angle and z-axis position; (c) z-axis projection of PSF with different L. Scale bar: 400 nm.

According to the method introduced in [23], the number of Fresnel zones, L, determines the modulation depth of the SH-PSF. As shown in Figure 3b, more Fresnel zones (larger L) mean a larger modulation depth. In theory, L can be made arbitrarily large. However, as L increases, the effective size of the modulated PSF image also increases (Figure 3c), which lengthens the execution time of the CS algorithm. Therefore, L = 9 is adopted in what follows to balance modulation depth and execution time. In addition, because azimuths of 0° and 360° are practically indistinguishable, the effective azimuth range of the phase with L = 9 is set to 300°, corresponding to an effective depth range of 4 μm. A larger imaging depth can be achieved with a larger L, but a larger depth also means worse localization accuracy, and it may introduce variations in refraction and scattering along the optical path, which could reduce the SH-PSF focus precision and lower the signal-to-noise ratio. The axial density of emitters is represented by the number of spots in the 300° azimuth range, i.e., the number of emitter depths in the 4 μm depth range, denoted by n. The detector, with an effective pixel size of 80 nm, is placed at the rear focal plane of Lens2. Gaussian noise (mean 0, variance 0.01) and Poisson noise are added to each raw image.
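As a worked example of the depth-to-azimuth encoding, the sketch below maps depth to spot azimuth, assuming, purely for illustration, that the relation in Figure 3b is linear over the 300° / 4 μm effective range (0.075° per nanometer). The function names are hypothetical, and in practice the curve would be taken from the calibrated SH-PSF.

```python
def azimuth_from_depth(z_nm, depth_range_nm=4000.0, angle_range_deg=300.0):
    """Spot azimuth (degrees) for depth z measured from one end of the range.

    Assumes a linear depth-azimuth relation, for illustration only.
    """
    if not 0.0 <= z_nm <= depth_range_nm:
        raise ValueError("depth outside the effective range")
    return z_nm * angle_range_deg / depth_range_nm

def depth_from_azimuth(phi_deg, depth_range_nm=4000.0, angle_range_deg=300.0):
    """Inverse of azimuth_from_depth under the same linear assumption."""
    if not 0.0 <= phi_deg <= angle_range_deg:
        raise ValueError("azimuth outside the effective range")
    return phi_deg * depth_range_nm / angle_range_deg
```

Under this assumption, azimuth_from_depth(1000.0) returns 75.0, depth_from_azimuth(150.0) returns 2000.0, and a 20 nm depth step rotates the spot by only 1.5°.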

First, the localization performance of SH-CS is analyzed with simulated samples in which emitters are located at different axial positions but a fixed lateral position. The lateral position is fixed because the accuracy of SH-CS varies with the size of the light needle; when analyzing the accuracy of the SH-CS method itself, we therefore minimized other influencing factors by fixing the lateral position of the emitters. For a given axial density n, 2000 random samples were generated, each consisting of n emitters at n random depths, and 2000 corresponding raw images were simulated. We take n = 8 as an example to illustrate the analysis process. Figure 4a shows one of the raw images. As mentioned in Section 2, the measurement matrix A is crucial for the final localization accuracy, and its number of columns, s, determines the theoretical upper limit of the localization accuracy along the z-axis. The raw image in Figure 4a was therefore analyzed with s set to 41, 81, 161, and 201. The localization results (Figure 4b) show that the depths of the 8 emitters are reconstructed for every s, but with certain deviations from the ground truth (GT) positions. Next, following the method of Hugelier [25], we measured the localization bias with a predefined tolerance of ±200 nm. As shown in Figure 4c, if a GT emitter exists within d ± 200 nm of the depth d of an identified emitter, the identified emitter and the GT emitter are considered successfully matched. If two identified emitters correspond to the same GT emitter, their midpoint is taken as one virtual emitter, and this virtual emitter is considered to match the GT emitter.

Figure 4e shows the relationship between the number of excited emitters, n, and the number of identified emitters, p, i.e., how many emitters are matched by the CS algorithm. For n = 8 and s = 201, 7.8 emitters were matched on average (Figure 4e). In addition, the axial deviation ZD between each matched identified molecule and its GT molecule is compiled into a histogram (Figure 4d), and its standard deviation ("ZD Stdev") represents the axial localization accuracy at an excited number of 8 in Figure 4f. The average execution time per image for different n is shown in Figure 4g. Note that, in general, a smaller tolerance yields a lower recall rate but higher accuracy, so the performance of a method should be evaluated based on both parameters.
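The matching criterion can be sketched as follows. This is a simplified, hypothetical implementation, not the authors' code or Hugelier's reference implementation [25]: each identified emitter is assigned to its nearest GT emitter if that GT emitter lies within the ±200 nm tolerance, and when two or more identified emitters land on one GT emitter, the midpoint of the two closest is used as the virtual emitter. The recall rate is then the number of matched GT emitters divided by n, and ZD Stdev is the standard deviation of the returned deviations.

```python
import numpy as np

def match_emitters(identified, ground_truth, tol=200.0):
    """Match identified depths to ground-truth (GT) depths within +/- tol (nm).

    Returns (number of matched GT emitters, list of axial deviations ZD).
    """
    gt = np.asarray(ground_truth, dtype=float)
    groups = {}  # GT index -> identified depths assigned to it
    for d in identified:
        k = int(np.argmin(np.abs(gt - d)))  # nearest GT emitter
        if abs(gt[k] - d) <= tol:
            groups.setdefault(k, []).append(float(d))
    deviations = []
    for k, ds in groups.items():
        ds = sorted(ds, key=lambda d: abs(d - gt[k]))
        # One identified emitter: use it; two or more: midpoint of the two closest
        z = ds[0] if len(ds) == 1 else 0.5 * (ds[0] + ds[1])
        deviations.append(z - gt[k])
    return len(groups), deviations
```

For example, with GT depths [0, 1000, 2000] nm and identified depths [50, 980, 1040, 3000] nm, two GT emitters are matched (recall 2/3) with deviations of 50 nm and 10 nm; the emitter at 3000 nm falls outside the tolerance.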

Evaluation of localization performance of SH-CS. (a) Original image of SH-CS at n = 8, scale bar: 240 nm; (b) localizing performance under different s with n = 8; (c) measurement criteria for axial localizing; (d) statistical histogram of axial deviation with n = 8; (e) relationship between identification density and n; (f) relationship between localizing accuracy and n.

As shown in Figure 4e, the number of matches increases with n, but the recall rate, calculated as identified number/n, gradually decreases. Even at n = 15, the recall rate remains 61.2% (s = 201). In addition, for the same n, the identified number increases monotonically with s. Taking n = 10 as an example, when s increases from 41 to 81, the identified number increases from 7.8 to 8.1, i.e., by 3.8%, whereas for the same increment of 40 at larger s, from 161 to 201, it increases by only 2.4%, from 8.3 to 8.5. These results show that for larger s, increasing s becomes less and less effective at improving the identified number. Figure 4f shows the relationship between localization accuracy and n. As n increases, the localization accuracy gradually degrades, but for n larger than 7 it appears to improve anomalously. This seemingly counterintuitive behavior arises because, when n is high, a GT molecule is easily found near any identified molecule, so the two are counted as a match. Figure 4f also shows that the localization accuracy tends to improve as s increases. Taking n = 6 as an example, the localization accuracy improves from 73.2 to 71.4 nm, i.e., by 2.5%, when s increases from 41 to 81. For larger s, the same increment of 40 layers, from 161 to 201, brings an improvement of only 1.1%, from 70.2 to 69.4 nm. Thus, further increasing s has an increasingly limited effect on localization accuracy. For s = 201, the axial localization accuracy is within 12.1–73.5 nm.
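The percentages above can be checked with a few lines of Python. The layer-spacing helper additionally assumes, as an illustration not stated in the text, that the s layers are evenly spaced with both ends of the 4 μm range included, so adjacent layers are 4000/(s − 1) nm apart; the helper names are hypothetical.

```python
def layer_spacing_nm(s, depth_range_nm=4000.0):
    """Axial spacing of s evenly spaced layers including both range ends (assumed)."""
    return depth_range_nm / (s - 1)

def relative_change_pct(before, after):
    """Magnitude of the change in percent, relative to the starting value."""
    return abs(after - before) / before * 100.0
```

Under this assumption, s = 41, 81, 161, and 201 give spacings of 100, 50, 25, and 20 nm, so the last step only refines the grid from 25 to 20 nm. Rounded to one decimal, relative_change_pct(7.8, 8.1) gives 3.8 and relative_change_pct(73.2, 71.4) gives 2.5, matching the reported values.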

Because SH-CS uses CS, the execution time of the algorithm is a concern. Note that because SH-CS adopts light needle excitation, the excited area is very confined, and each raw image to be processed is only 20 × 20 pixels. We calculated the average execution time per frame and found that it does not increase significantly with n. A larger s means a longer execution time; even so, for s = 201, the average execution time for a raw image is only 1.1 s (n = 15). Based on the above analysis, s is set to 201 in all subsequent data processing to maximize reconstruction performance.

Next, a sample of size 2 μm × 2 μm × 600 nm was simulated, consisting of five letter-shaped structures, "H", "I", "S", "Z", and "U". The five letters lie at five different depths, d_i (i = 1–5), with a gap of 100 nm between adjacent layers. The sample's 3D structure is shown in Figure 5a; to show the structure clearly, the lateral and axial axes use different scales. The structures of the five layers are shown in Figure 5b. The sample was scanned in the xoy plane by a light needle with a 2D Gaussian profile (FWHM = 50 nm) at a step size of 20 nm. The SH raw image acquired at each light needle position is analyzed with the CS method described above, and the recovered axial structure is assigned to the lateral coordinates of the light needle center. The reconstructed structures within depth ranges of d_i ± 50 nm are extracted and shown in Figure 5c. A 100 nm thick slab is used instead of the exact depths d_i to match the axial localization accuracy of SH-CS: as shown in Figure 4f, the axial localization accuracy varies with axial density between 12.1 nm and 73.5 nm, so the midpoint, 42.8 nm, is chosen, and 100 nm is approximately the FWHM of a Gaussian function with a standard deviation of 42.8 nm. As shown in Figure 5c, the five letter-shaped layers are reconstructed at their original depths. However, there are still a few imperfections in the reconstructed images. Because the layers are very close to each other, parts of "I" and "Z" (indicated by the two red arrows) are missing, and a spurious structure appears in "S".
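The slab thickness follows from the standard relation between the FWHM and the standard deviation σ of a Gaussian, taking σ as the midpoint of the reported accuracy range:

```latex
\sigma = \frac{12.1\,\text{nm} + 73.5\,\text{nm}}{2} = 42.8\,\text{nm},
\qquad
\mathrm{FWHM} = 2\sqrt{2\ln 2}\,\sigma \approx 2.355 \times 42.8\,\text{nm} \approx 100.8\,\text{nm} \approx 100\,\text{nm}.
```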

SH-CS performance with simulated sample. (a) 3D structure of simulated sample; (b) z-axis projection of the five-letter structure; (c) reconstructed z-axis projection of the five-letter structure; (d) intensity distribution along the yellow line in the second subimage in (c) and its Gaussian fit; (e) yoz projection of the white cuboid in (c); (f) intensity distribution along the yellow line in (e) and its Gaussian fit; (g) intensity distribution along the green line in (e) and its Gaussian fit. In (b) and (c), color bar represents the axial depth corresponding to the pseudo-color. Scale bar: 100 nm.

Next, we analyzed the intensity distribution along the yellow line in the second subimage of Figure 5c; the results are shown in Figure 5d. A Gaussian fit of the distribution gives an FWHM of 54 nm, consistent with the expectation that the lateral resolution is determined by the size of the light needle (50 nm FWHM). To analyze the axial resolution, the reconstructed 3D structure within the interval x = [520, 560] nm was extracted and projected onto the yoz plane, as shown in Figure 5e. The region corresponding to the selected interval is highlighted by the white cuboid in Figure 5c. The intensity distributions along the yellow and green lines are shown in Figure 5f and g, respectively. Gaussian functions fitted to these distributions have standard deviations of about 25–35 nm.
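The FWHM and standard deviation values above come from 1D Gaussian fits to line profiles. A minimal SciPy sketch of such a fit is shown below; the Gaussian-plus-constant-background model and the helper names are assumptions, since the exact fitting model is not specified.

```python
import numpy as np
from scipy.optimize import curve_fit

def gaussian(x, amp, mu, sigma, offset):
    """1D Gaussian with a constant background offset."""
    return amp * np.exp(-0.5 * ((x - mu) / sigma) ** 2) + offset

def fit_fwhm(x, y):
    """Fit a Gaussian to a line profile; return (FWHM, sigma) in the units of x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Initial guesses: peak height, peak position, a broad width, and the minimum as background
    p0 = [y.max() - y.min(), x[np.argmax(y)], (x[-1] - x[0]) / 6.0, y.min()]
    popt, _ = curve_fit(gaussian, x, y, p0=p0)
    sigma = abs(popt[2])
    return 2.0 * np.sqrt(2.0 * np.log(2.0)) * sigma, sigma
```

On a noiseless synthetic profile with σ = 23 nm, the fit returns σ ≈ 23 nm and an FWHM of about 54 nm; real profiles would also carry noise and pixelation.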

Note that in SH-CS, an off-axis emitter produces a lateral displacement of its PSF in the image, and this displacement may be misidentified as a change in z coordinate. This is why a light needle of confined size is necessary for SH-CS: because only emitters within the light needle are excited, the maximum lateral displacement is limited. Furthermore, because the excitation intensity of the light needle follows a Gaussian distribution, an emitter farther off axis has a larger lateral displacement but also a lower fluorescence intensity, so the effect of such displacements is limited. As shown in Figure 5 (and Figure 6 in the next section), the effect of the lateral displacement is acceptable for a light needle of 50 nm (FWHM). If the width of the light needle can be reduced further, the effect of such lateral displacement will be reduced as well.
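To make the intensity argument concrete, the sketch below evaluates the relative excitation of an emitter at lateral offset r from the needle axis, assuming a 2D Gaussian needle profile with the stated FWHM, I(r)/I(0) = exp(−4 ln 2 · r²/FWHM²). The function name is hypothetical.

```python
import math

def relative_excitation(r_nm, fwhm_nm=50.0):
    """Excitation at lateral offset r relative to the needle axis.

    Assumes a 2D Gaussian intensity profile with the given FWHM.
    """
    return math.exp(-4.0 * math.log(2.0) * r_nm ** 2 / fwhm_nm ** 2)
```

For a 50 nm needle, an emitter 25 nm off axis is excited at half the on-axis intensity and one 50 nm off axis at 1/16. Halving the FWHM to 25 nm reduces the excitation at a 25 nm offset to 1/16 as well, which illustrates why a narrower needle suppresses off-axis contributions.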

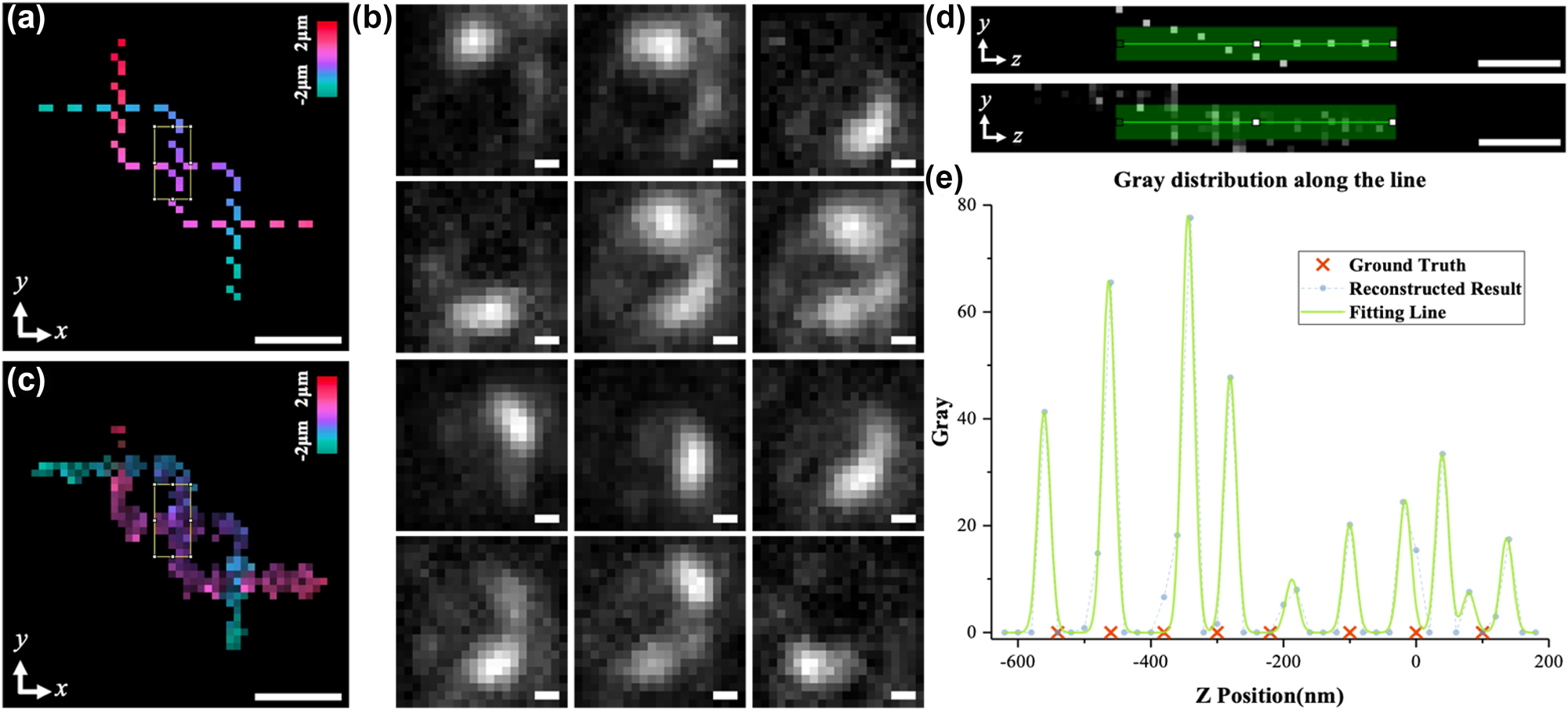

Using SH-CS to process experimental data. (a) z-axis projection of the 3D experimental sample designed from experimental data; (b) several raw images obtained by light needle scanning; (c) z-axis projection of the reconstructed 3D sample structure; (d) the structures in the rectangles in (a) and (c) projected onto the yoz plane, shown in the upper and lower subfigures, respectively; (e) intensity distribution along the green stripe in (d). Color bars in (a) and (c) represent the axial depth corresponding to the pseudo-color. Scale bar: 240 nm.

4 Validation with a designed 3D sample

To analyze the performance of SH-CS in processing experimental data, a 3D sample composed of fluorescent beads was designed (Figure 6a). The FWHM of the excitation light needle is set to 50 nm and the scanning step size to 20 nm. Figure 6b shows several raw images as examples; the complete series of raw images is provided in the Supplementary material (Visualization 1). Note that these raw images are not acquired directly by a detector in an implemented light needle system; instead, they are generated by weighted superposition of experimental images of multiple single beads at different positions (see Supplementary material for details). All raw images are analyzed with the CS algorithm introduced in Section 2, using a measurement matrix built from experimental SH-PSF images of a fluorescent bead at 201 depths over a depth range of 4 μm. The 3D structure reconstructed by SH-CS is shown in Figure 6c. As shown in Figure 6c and Visualization 2 in the Supplementary material, the 3D structure of the sample is well recovered. To show the axial localization ability, we analyzed the densest part of the sample and its reconstruction, highlighted by the two rectangles in Figure 6a and c, respectively. The structures in these rectangles are projected onto the yoz plane and shown as the upper and lower subfigures in Figure 6d, respectively. The intensity distribution along the green stripe in the lower subfigure is shown as dots in Figure 6e; each peak was fitted with a Gaussian function, shown as a green solid line. For comparison, the axial positions of the beads in the corresponding part of the sample are shown as red crosses in Figure 6e. As shown in Figure 6e, the axial positions of some beads, such as those of the second and sixth peaks, are recovered very well and coincide closely with their red crosses. However, other peaks show varying degrees of offset. Because the local density is quite high here, with 8 beads within a depth range of less than 650 nm, we consider this result reasonable.
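The weighted-superposition idea can be illustrated with a short sketch. It is hypothetical and does not reproduce the procedure in the Supplementary material: the function name is invented, and the weights are assumed to follow a 2D Gaussian needle profile evaluated at each bead's lateral distance from the needle center.

```python
import numpy as np

def synthesize_raw_image(bead_images, bead_xy_nm, needle_xy_nm, fwhm_nm=50.0):
    """Build one raw frame as a weighted sum of single-bead SH images.

    bead_images: array (k, m, m), one experimental SH image per bead.
    bead_xy_nm: array (k, 2), lateral bead positions; needle_xy_nm: needle centre.
    """
    offsets = np.asarray(bead_xy_nm, dtype=float) - np.asarray(needle_xy_nm, dtype=float)
    d2 = np.sum(offsets ** 2, axis=1)  # squared lateral distance of each bead
    # Assumed Gaussian needle profile: weight 1 on axis, 0.5 at half the FWHM
    weights = np.exp(-4.0 * np.log(2.0) * d2 / fwhm_nm ** 2)
    # Contract the bead axis: sum_k weights[k] * bead_images[k]
    return np.tensordot(weights, np.asarray(bead_images, dtype=float), axes=1)
```

Repeating this for every needle position on the 20 nm scan grid would yield a series of synthetic raw frames similar in spirit to those in Figure 6b.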

It should also be noted that some factors may affect performance when working with images acquired directly by a detector in an implemented light needle system. On the one hand, with the strategy used here to generate raw images, field-dependent aberrations may degrade the performance of SH-CS; this should not be a problem for directly acquired raw images, because a specific measurement matrix can be built for each light needle position, which helps avoid field-dependent variation. On the other hand, directly acquired raw images may suffer from an imperfect light needle, which might weaken the performance of SH-CS.

5 Conclusions

To realize 3D nanoimaging over a large depth of field, we have put forward a new method, abbreviated as SH-CS, for recovering the axial information of axially dense emitters within a confined lateral area, based on SH-PSF detection and a CS algorithm. With SH-PSF detection, axial position is encoded as azimuth angle, so axially dense emitters at different depths are imaged simultaneously at different azimuths. This axial information is then decoded using a CS algorithm. Simulation data show that SH-CS can distinguish 1–15 emitters randomly distributed within a depth range of 4 μm with an axial localization accuracy of 12.1–73.5 nm and an execution time of about 1.1 s per image. Combined with light needle excitation, which can be achieved with BB-STED [14], [15], SH-CS was validated with simulated samples and a designed 3D sample composed of fluorescent beads. The performance of SH-CS may be improved in the future by optimizing the CS measurement matrix to have a better restricted isometry property (RIP) [19]. Note that the lateral resolution is limited by the size of the light needle generated by BB-STED, set here to 50 nm (FWHM). If this size is further reduced by using a higher depletion intensity, not only will the lateral resolution improve directly, but the axial resolution will also benefit, because a smaller light needle means a lower excited density and a smaller possible lateral displacement of off-axis molecules. SH-CS achieves volumetric imaging with only 2D scanning. Generally, the larger the imaging area, the longer the imaging time, but this drawback can be mitigated by multi-light-needle parallel excitation, a strategy already used in pSTED [26], [27] and pRESOLFT [28].
For example, pRESOLFT uses 100,000 near-ring spots arranged in an array as off-switching light and achieves super-resolution imaging in living cells with a temporal resolution of about 1 s [26]. However, pRESOLFT adopts a confocal detection strategy with an axial resolution of only 580 nm, only one layer can be recovered at a time, and the volumetric imaging speed is therefore still limited. SH-CS could thus become a complementary tool to pRESOLFT and pSTED. As for reconstruction, SH-CS currently needs ∼1 s to process a single image, but parallel processing of multiple acquired images could reduce the total processing time in the future.

Funding source: Shenzhen Science and Technology Planning Project

Award Identifier / Grant number: JCYJ20200109105411133

Award Identifier / Grant number: JCYJ20210324093209024

Award Identifier / Grant number: JCYJ20210324094200001

Funding source: National Natural Science Foundation of China

Award Identifier / Grant number: 11774242

Award Identifier / Grant number: 61335001

Award Identifier / Grant number: 62175166

Award Identifier / Grant number: 62475168

Funding source: National Key Research and Development Program of China

Award Identifier / Grant number: 2022YFF0712500

Funding source: Shenzhen Key Laboratory of Photonics and Biophotonics

Award Identifier / Grant number: ZDSYS20210623092006020


Research funding: The authors express their gratitude to Prof. Tao Cheng for his insightful discussions regarding the technical details of the SH-CS method. This work was supported by National Natural Science Foundation of China (62475168), National Key Research and Development Program of China (2022YFF0712500), National Natural Science Foundation of China (Grant Nos. 11774242, 62175166, 61335001), Shenzhen Science and Technology Planning Project (Grant No. JCYJ20210324094200001, JCYJ20210324093209024, JCYJ20200109105411133), Shenzhen Key Laboratory of Photonics and Biophotonics (ZDSYS20210623092006020).


Author contributions: HW: methodology, software, data analysis, and writing. DC: conceptualization, methodology, and writing. YJ: investigation, data curation. GX: resources. YN: resources, writing review & editing. HL: resources. BY: writing review & editing. JQ: writing review & editing. All authors have accepted responsibility for the entire content of this manuscript and approved its submission.


Conflict of interest: Authors state no conflicts of interest.


Ethical approval: The conducted research is not related to either human or animal use.


Data availability: Data and code underlying the results presented in this paper can be obtained from the authors upon reasonable request.


Supplemental document: Supplementary material, Visualization 1, and Visualization 2.

References

[1] T. Dertinger, R. Colyer, G. Iyer, S. Weiss, and J. Enderlein, “Fast, background-free, 3D super-resolution optical fluctuation imaging (SOFI),” Proc. Natl. Acad. Sci. U. S. A., vol. 106, no. 52, pp. 22287–22292, 2009.10.1073/pnas.0907866106Search in Google Scholar PubMed PubMed Central

[2] H. Huang, et al.., “SOFFLFM: Super-resolution optical fluctuation Fourier light-field microscopy,” J. Innovat. Opt. Health Sci., vol. 16, no. 3, p. 2244007, 2023, https://doi.org/10.1142/s1793545822440072.Search in Google Scholar

[3] M. Rust, M. Bates, and X. Zhuang, “Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM),” Nat. Methods, vol. 3, no. 10, pp. 793–796, 2006. https://doi.org/10.1038/nmeth929.Search in Google Scholar PubMed PubMed Central

[4] E. Betzig, et al.., “Imaging intracellular fluorescent proteins at nanometer resolution,” Science, vol. 313, no. 5793, pp. 1642–1645, 2006. https://doi.org/10.1126/science.1127344.Search in Google Scholar PubMed

[5] B. Huang, W. Q. Wang, M. Bates, and X. Zhuang, “Three-dimensional super-resolution imaging by stochastic optical reconstruction microscopy,” Science, vol. 319, no. 5864, pp. 810–813, 2008. https://doi.org/10.1126/science.1153529.Search in Google Scholar PubMed PubMed Central

[6] S. R. P. Pavani, “Three-dimensional, single-molecule fluorescence imaging beyond the diffraction limit by using a double-helix point spread function,” Proc. Natl. Acad. Sci. U. S. A., vol. 106, no. 9, pp. 2995–2999, 2009. https://doi.org/10.1073/pnas.09002451.Search in Google Scholar

[7] D. Chen, et al.., “Approach to multiparticle parallel tracking in thick samples with three-dimensional nanoresolution,” Opt. Lett., vol. 38, no. 19, pp. 3712–3715, 2013, https://doi.org/10.1364/ol.38.003712.Search in Google Scholar

[8] S. Ram, P. Prabhat, E. S. Ward, and R. J. Ober, “Improved single particle localization accuracy with dual objective multifocal plane microscopy,” Opt. Express, vol. 17, no. 8, pp. 6881–6898, 2009. https://doi.org/10.1364/oe.17.006881.Search in Google Scholar PubMed PubMed Central

[9] G. Shtengel, et al.., “Interferometric fluorescent super-resolution microscopy resolves 3D cellular ultrastructure,” Proc. Natl. Acad. Sci. U. S. A., vol. 106, no. 9, pp. 3125–3130, 2009.10.1073/pnas.0813131106Search in Google Scholar PubMed PubMed Central

[10] M. J. Rust, et al., “Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM),” Nat. Methods, vol. 3, no. 10, pp. 793–796, 2006. https://doi.org/10.1038/nmeth929.

[11] S. W. Hell and J. Wichmann, “Breaking the diffraction resolution limit by stimulated emission: Stimulated-emission-depletion fluorescence microscopy,” Opt. Lett., vol. 19, no. 11, pp. 780–782, 1994. https://doi.org/10.1364/ol.19.000780.

[12] T. A. Klar and S. W. Hell, “Subdiffraction resolution in far-field fluorescence microscopy,” Opt. Lett., vol. 24, no. 14, pp. 954–956, 1999. https://doi.org/10.1364/ol.24.000954.

[13] Y. Wentao, et al., “Super-resolution deep imaging with hollow Bessel beam STED microscopy,” Laser Photon. Rev., vol. 10, no. 1, pp. 147–152, 2017. https://doi.org/10.1002/lpor.201500151.

[14] C. Gohn-Kreuz and A. Rohrbach, “Light needles in scattering media using self-reconstructing beams and the STED principle,” Optica, vol. 4, no. 9, pp. 1134–1142, 2017. https://doi.org/10.1364/optica.4.001134.

[15] C. Gohn-Kreuz and A. Rohrbach, “Light-sheet generation in inhomogeneous media using self-reconstructing beams and the STED-principle,” Opt. Express, vol. 24, no. 6, pp. 5855–5865, 2016. https://doi.org/10.1364/oe.24.005855.

[16] S. J. Holden, S. Uphoff, and A. N. Kapanidis, “DAOSTORM: An algorithm for high-density super-resolution microscopy,” Nat. Methods, vol. 8, no. 4, pp. 279–280, 2011. https://doi.org/10.1038/nmeth0411-279.

[17] T. Quan, et al., “High-density localization of active molecules using structured sparse model and Bayesian information criterion,” Opt. Express, vol. 19, no. 18, pp. 16963–16974, 2011. https://doi.org/10.1364/oe.19.016963.

[18] L. Zhu, W. Zhang, D. Elnatan, and B. Huang, “Faster STORM using compressed sensing,” Nat. Methods, vol. 9, no. 7, pp. 721–723, 2012. https://doi.org/10.1038/nmeth.1978.

[19] T. Cheng, D. Chen, B. Yu, and H. Niu, “Reconstruction of super-resolution STORM images using compressed sensing based on low-resolution raw images and interpolation,” Biomed. Opt. Express, vol. 8, no. 5, pp. 2445–2457, 2017. https://doi.org/10.1364/boe.8.002445.

[20] S. Cox, et al., “Bayesian localization microscopy reveals nanoscale podosome dynamics,” Nat. Methods, vol. 9, no. 2, pp. 195–200, 2012. https://doi.org/10.1038/nmeth.1812.

[21] A. Barsic, G. Grover, and R. Piestun, “Three-dimensional super-resolution and localization of dense clusters of single molecules,” Sci. Rep., vol. 4, p. 5388, 2014. https://doi.org/10.1038/srep05388.

[22] S. Zhang, D. Chen, and H. Niu, “3D localization of high particle density images using sparse recovery,” Appl. Opt., vol. 54, no. 26, pp. 7859–7864, 2015. https://doi.org/10.1364/ao.54.007859.

[23] S. Prasad, “Rotating point spread function via pupil-phase engineering,” Opt. Lett., vol. 38, no. 4, pp. 585–587, 2013. https://doi.org/10.1364/ol.38.000585.

[24] D. Chen, H. Li, B. Yu, and J. Qu, “Four-dimensional multi-particle tracking in living cells based on lifetime imaging,” Nanophotonics, vol. 11, no. 8, pp. 1537–1547, 2022. https://doi.org/10.1515/nanoph-2021-0681.

[25] S. Hugelier, et al., “Sparse deconvolution of high-density super-resolution images,” Sci. Rep., vol. 6, p. 21413, 2016. https://doi.org/10.1038/srep21413.

[26] F. Bergermann, L. Alber, J. S. Steffen, J. Engelhardt, and S. W. Hell, “2000-fold parallelized dual-color STED fluorescence nanoscopy,” Opt. Express, vol. 23, no. 1, pp. 211–223, 2015. https://doi.org/10.1364/oe.23.000211.

[27] G. Vicidomini, P. Bianchini, and A. Diaspro, “STED super-resolved microscopy,” Nat. Methods, vol. 15, no. 3, pp. 173–182, 2018. https://doi.org/10.1038/nmeth.4593.

[28] A. Chmyrov, et al., “Nanoscopy with more than 100,000 ‘doughnuts’,” Nat. Methods, vol. 10, no. 8, pp. 737–740, 2013. https://doi.org/10.1038/nmeth.2556.

Supplementary Material

This article contains supplementary material (https://doi.org/10.1515/nanoph-2024-0516).

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.
