Abstract

This paper presents a user study on users’ preferences and perceptions of data protection (e.g., intervenability and transparency) in a privacy assistant application. The tool aims to help users maintain a higher level of protection for their personal data with minimal effort. The study involved 20 participants and employed a mixed-methods approach, including qualitative analysis to assess user perceptions and usability. The study pursued two goals: (1) to understand user requirements and attitudes towards data protection and privacy and (2) to evaluate whether the privacy assistant application meets these requirements in terms of transparency, intervenability, user experience, and usability. The results indicate that participants had varying attitudes toward privacy and diverse levels of knowledge regarding the GDPR. The high-fidelity prototype demonstrated excellent usability and received positive user experience evaluations across various dimensions. The results shed light on both the strengths of the privacy assistant prototype and areas for improvement in its design and implementation.

1 Introduction

In a world where the volume of produced data is skyrocketing, 1 and data protection debates are gaining traction, 2 an interesting trend is emerging: more individuals are willingly sharing their data as they embrace self-measurement technology. 3 Using devices like smartwatches, people collect digital data about their bodies to analyze their athletic performance, sleep, and overall health. They often share this data on social media or within self-measurement communities, striving for, among other things, recognition and a sense of belonging. 4 However, concerns have arisen about the long-term implications of data sharing and potential inaccuracies in profiling. 5 Despite these risks, the benefits of self-measurement technology are clear, especially for athletes and those interested in tracking their daily lives. 6

Meanwhile, mobile applications such as privacy assistants are gaining attention, not only in the domain of mobile health (mHealth), 7 but also in the Internet of Things more generally. 8 Privacy assistants aim to enhance transparency and enable intervenability while simplifying complex privacy choices and adapting to user preferences. 7 Research also highlights the interdependence of these aspects: to intervene effectively, users need to understand privacy processes and need transparent procedures. 9

This paper presents a privacy assistant solution specifically designed for the self-tracking domain, developed as part of the “Tester” project (full title translated: “Designing Digital Self-Measurement in a Self-Determined Way”), 10 funded by the German Federal Ministry of Education and Research. 11 The paper first elaborates on the theoretical background on which the human-centered research was based. It then describes the methodology used to assess the usability and user experience of the application and highlights the application’s impact on perceived transparency and intervenability. Lastly, it discusses key takeaways, lessons learned, and possible future work.

2 Theoretical background

This chapter outlines the theoretical foundations of the study’s goals and specifies the research questions to be examined. It opens with an introduction to the human-centered design process and established user testing methods for usability, user experience (UX), and user preferences. Subsequently, the employed user testing approaches are discussed in the context of the study questions. The chapter then lays the theoretical groundwork for the role of transparency and intervenability in data protection and privacy, with an emphasis on the user perspective, to give the work as broad a framework as feasible. The study explores how the user requirements identified in the early project work 10 and users’ attitudes toward data protection and privacy in self-tracking applications manifest in practice. Furthermore, it examines to what extent the privacy assistant meets these requirements in terms of transparency, intervenability, user experience, and usability.

2.1 Human-centered design

Human-centered design is an approach that focuses on users, their needs, capabilities, and limitations during the design of products and systems. 12 To gain a comprehensive understanding, a combination of qualitative and quantitative methods is recommended for usability studies. 13 Usability and UX aspects are often assessed using quantitative methods such as the System Usability Scale (SUS) 14 and the User Experience Questionnaire (UEQ). 15 However, a substantial part of the mixed-methods approach lies in incorporating qualitative methods to gain a comprehensive understanding of users and their interactions with a system. 13 At the same time, a strict dichotomy between qualitative and quantitative research methods has been criticized as lacking foundation and purpose. 16 Consequently, qualitative content analysis often involves quantifying techniques such as frequency analysis, which counts how often each code is assigned. Other effective analysis techniques include valence and intensity analyses, as well as contingency analysis. 16
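As an illustration of the frequency analysis described above, the following Python sketch counts how often each code is assigned across transcript segments. The `CodedSegment` structure, the code labels, and the example segments are hypothetical and do not reproduce the study’s coding system or data.

```python
from collections import Counter
from typing import NamedTuple


class CodedSegment(NamedTuple):
    """A transcript segment with the code assigned to it (hypothetical structure)."""
    participant: str
    code: str


def code_frequencies(segments: list[CodedSegment]) -> Counter:
    """Frequency analysis: count how often each code was assigned."""
    return Counter(segment.code for segment in segments)


# Illustrative segments only; not data from the study.
segments = [
    CodedSegment("P01", "usability/structure"),
    CodedSegment("P02", "usability/structure"),
    CodedSegment("P02", "terminology/labels"),
    CodedSegment("P03", "terminology/labels"),
    CodedSegment("P03", "usability/structure"),
]

freq = code_frequencies(segments)
for code, count in freq.most_common():
    print(f"{code}: {count}")
```

Counting distinct participants per code, rather than raw occurrences, would be a simple variant if a single participant repeats the same point several times.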

Proper human-centered design of a privacy assistant builds on strong requirements research. Designing according to the needs and preferences of the stakeholders helps to produce a usable system that supports people in achieving their goals. One has to understand not only the goals of the users, but also the context in which they are trying to reach them. This context comprises their capabilities and skills, their knowledge, their attitudes towards aspects of privacy and data protection, and their limitations. Including the human perspective in a privacy assistant’s design helps to implement the goals and intentions stated in the General Data Protection Regulation (GDPR). The regulation is designed to empower individuals to make informed decisions about how their data is used and to give them the option to intervene if they choose.

2.2 Data protection

Among the most common use cases of self-measurement technology is sharing one’s self-measurement data within one’s social network, but also with certain service providers. 17 People collect digital data about their bodies through technical means, such as smartwatches, to analyze and monitor their athletic performance, sleep, or health. 4 By sharing this kind of data through social networks, people achieve a sense of recognition and social belonging. 18 When people share their data with service providers, by contrast, the incentive is usually different: it is already common practice to share private data with health insurance companies or insurance agencies in exchange for discounts or other financial incentives. 17 However, research shows that users are often unaware of the extent of data being collected about them, particularly when third parties aggregate this information without their explicit knowledge. 19 Besides these benefits and incentives, self-tracking and data sharing carry potential risks, including identity theft, profiling, locating or stalking of users, embarrassment and extortion, and corporate use and misuse. 20 Although some users are aware of these risks, most are not, and they tend to assign little to no importance to the matter. 21 Another study found that its participants lacked the motivation to protect their personal information from external threats or exploitation. 22 Conversely, user attitudes shift when transparency tools reveal the extent of personal data exposure over time: when users were shown a longitudinal view of their online tracking, they became more concerned about privacy risks and were more likely to take protective actions, such as limiting third-party data access. 23 Similarly, other sources report a growing demand for protecting one’s private data, which makes it crucial to explore ways of safeguarding personal information. 24 , 25

According to the protection goals of Hansen and colleagues, transparency is critical for users to intervene in a targeted manner. 26 However, users are usually not sufficiently educated about privacy legislation and the hazards involved. 9 The criteria for successful privacy protection can therefore be described as comprehension of data processing (transparency) and the ability to act against it (intervenability). Both criteria are core principles of the GDPR, 27 which became applicable in the European Union member states in 2018. Implementing these principles, however, poses challenges for companies and service providers. Businesses in data-driven markets often struggle to balance protecting user privacy with creating value, yet they increasingly recognize transparency and intervenability as crucial for building trust and ensuring GDPR compliance. 28 This underlines why privacy assistants aim to promote transparency and enable intervenability. Finally, to be truly effective, privacy-enhancing technologies must evolve beyond basic transparency toward user-tailored, actionable interventions, ensuring that individuals are not only informed about risks but also empowered to mitigate them. 29

2.3 Related usability research

Self-tracking applications exhibit significant usability and UX limitations in areas such as transparency, data control, and privacy management. Recent research highlights several critical gaps where these applications fail to deliver a seamless, human-centered privacy experience. 7 , 30 , 31 , 32 First, accessible privacy controls are lacking: many self-tracking apps provide limited, hard-to-navigate settings, making it difficult for users to control what personal data is collected, shared, or retained. This undermines user trust and often leads to confusion and frustration, as users struggle to manage their privacy preferences effectively. 7

Self-tracking apps frequently lack real-time privacy notifications that would inform users about active data processing, resulting in a lack of awareness about ongoing data collection. Without these notifications, users may assume their data is handled securely by default, missing essential information about data sharing or third-party involvement, which ultimately reduces transparency. 30 In line with that, there is a lack of ex-post transparency options in self-tracking applications. Ex-post transparency tools, which offer visibility into data processing after the fact, such as historical access logs or data usage reports, are rarely integrated. Without these retrospective tools, users cannot review or intervene in data handling practices based on historical data usage, which limits their ability to modify permissions and hold the app accountable. 30 , 31

Finally, another key issue is that privacy policies in self-tracking apps are often complex, lengthy, and written at a high literacy level, making it difficult for the average user to understand how their data is being used or protected. This complexity can lead users to skip these policies altogether or feel distrustful, creating a gap in informed consent. 32

In summary, self-tracking apps often fall short of delivering a human-centered privacy experience, with key issues including inaccessible privacy settings, absence of real-time data notifications, overly complex privacy policies, missing transparency tools, and limited retrospective data visibility. These gaps underscore the need for a more intuitive and accessible approach to privacy management, which would promote greater user autonomy, satisfaction, and trust in these applications. Our proposed prototype addresses these gaps with a suite of features designed to enhance user autonomy and engagement with privacy management. Key functionalities include easy personalization of privacy settings, allowing users to effortlessly control what personal data is collected, shared, and retained. Additionally, real-time notifications inform users whenever data processing contradicts their established preferences, enhancing awareness and transparency. The prototype also assists users in intervening in undesired data processing, making it easier to exercise their data subject rights. Furthermore, adaptable data protection content enables users to tailor privacy information to their own knowledge level, helping them better understand and manage how their data is handled. Collectively, these features create a more intuitive, accessible privacy experience that supports user control, informed consent, and trust in self-tracking applications.

3 Methodology

To evaluate the privacy assistant prototype, this study follows a mixed-methods approach. The following subsections describe the choice of research method, the study concept, the user test tasks, the study procedure, and the specific methods used for data collection and analysis, and they give a brief overview of the application features.

3.1 Process overview

The application prototype was created based on extensive previous requirements research and under close supervision of usability and UX experts, who provided feedback to revise the prototype iteratively. The study aimed to obtain representative results for a diverse user population matching the context-of-use analysis; to this end, 20 participants were recruited for the in-person study. The sample consisted of eight female and twelve male participants aged 18 to 45 years (six aged 18–25, eight aged 26–35, and six aged 36–45). Although recruitment did not exclude people above 45, no individuals in that age group took part. The test sessions were audio-recorded to facilitate later evaluation and categorization of user feedback.

3.2 Privacy assistant prototype

The high-fidelity prototype was created from various design solutions gathered on the basis of specific usage requirements, framework conditions, and project demands. These solutions were then evaluated using wireframes and sketches. The iterative process involved expert feedback to refine the solutions and resulted in three primary features, all centered around user flow and functionality. The primary goal of the application is to educate users about privacy and provide insights into the data processing involved in using self-measurement technology. It empowers users to intervene by exercising their data subject rights or adjusting the privacy settings of their self-measurement devices. Figure 1 shows the prototype’s main functions. The first main feature shows instances of data processing that conflict with the user’s preset data protection preferences. This is intended to increase transparency about the use of personal data and to offer easy-to-use options for intervention. The second feature, named “Helpdesk”, assists users in adjusting their data protection preferences, provides data subject right templates for resolving conflicts, and offers device tutorials. The third feature is an information center that provides easy-to-process content on topics related to the application’s features.

Prototype main pages: privacy dashboard (two tabs: “Found Conflicts” and “Devices & Data”), helpdesk (three tiles: “Data Protection Preferences”, “Data Subject Right Templates” and “Device Tutorials”) and information center (headers: “DSGVO (GDPR)”, “Important Terminology” and “Data Protection in Everyday Life”).

When users first start the application, they are guided through an onboarding process in which they can add their self-measurement devices to the application. The assistant then analyzes the types of data being used, shared, or collected and presents them in a structured, device-specific overview integrated into the app’s dashboard. During setup, the application prompts users to define their data protection and privacy preferences. Based on these preferences, the application alerts users whenever data they opted to protect is being collected, shared, or processed. To simplify adjusting the settings, three default intensity levels for privacy settings are suggested in line with the project’s privacy personas: 33 (1) lenient privacy, (2) balanced privacy, and (3) strict privacy. Additionally, the setup allows users to personalize the application’s text content according to their familiarity with the topic and their desired level of detail. To do so, users select their personal data protection skill level from three descriptions (Figure 2).

Setting your personal data protection skill level (translation: “Personalize according to your skill level”; Beginner: “I have little experience with data protection regulations. I prefer clear and simple explanations.” Intermediate: “I am somewhat familiar with data protection topics. I prefer a balance between details and clarity.” Expert: “I am well-informed about data protection topics. Detailed articles and in-depth content are not a problem for me.”).
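The alerting logic described for the setup can be thought of as a set-membership check: a flow is flagged when its data type belongs to the set the user chose to protect. In the following Python sketch, the data types, the contents of the three presets, and the `DataFlow` structure are hypothetical; the text does not specify which data types each privacy level protects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    """One observed data flow of a connected device (hypothetical structure)."""
    device: str
    data_type: str
    action: str  # "collected", "shared" or "processed"


# Hypothetical data types and preset contents for the three default levels.
PRESETS = {
    "lenient": frozenset({"location"}),
    "balanced": frozenset({"location", "heart_rate", "weight"}),
    "strict": frozenset({"location", "heart_rate", "weight", "sleep", "steps"}),
}


def detect_conflicts(flows, protected):
    """Return every flow that touches a data type the user opted to protect."""
    return [flow for flow in flows if flow.data_type in protected]


flows = [
    DataFlow("smartwatch", "heart_rate", "shared"),
    DataFlow("smartwatch", "steps", "collected"),
    DataFlow("scale", "weight", "processed"),
]

for conflict in detect_conflicts(flows, PRESETS["balanced"]):
    print(f"Conflict: {conflict.device} {conflict.action} {conflict.data_type}")
```

Starting from a preset, individual adjustments during setup could be modeled by adding data types to, or removing them from, the protected set.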

After setup, users are forwarded to the privacy dashboard, which also serves as the landing page. This page consists of two tabs: “Data Protection Conflicts found” and “Devices & Data.” A “Data Protection Conflict” is a warning or notification from the application that certain devices are using or collecting data types inconsistent with the user’s privacy preferences. These conflicts are categorized by relevance and can be resolved by exercising data subject rights, adjusting device settings, or allowing the data to be used, which overrides the privacy preferences for that particular type of data. The Privacy Helpdesk is accessible via the second item in the bottom navigation bar. Here, users can modify their privacy preferences or access GDPR templates. Additionally, the Privacy Helpdesk offers tutorials for the settings of self-measurement devices. The final main feature page is the Information Center, which contains articles, introductions, and explanations related to privacy topics. Users can explore GDPR-related content in an engaging format or broaden their knowledge by reading current articles on data protection.
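The relevance-based ordering and the three resolution options could be modeled as follows. This Python sketch assumes a numeric relevance score and assumes that “allowing” a conflict removes the data type from the user’s protected set; neither detail is specified in the text, and all names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Resolution(Enum):
    """The three ways of resolving a conflict described for the prototype."""
    EXERCISE_RIGHT = "exercise a data subject right"
    ADJUST_SETTINGS = "adjust device settings"
    ALLOW = "allow data use"


@dataclass(frozen=True)
class Conflict:
    device: str
    data_type: str
    relevance: int  # hypothetical score: higher means more relevant


def by_relevance(conflicts):
    """Order conflicts so that the most relevant ones are shown first."""
    return sorted(conflicts, key=lambda c: c.relevance, reverse=True)


def resolve(conflict, resolution, protected):
    """Record a resolution; ALLOW overrides the preference for this data type."""
    if resolution is Resolution.ALLOW:
        protected.discard(conflict.data_type)
    return f"{conflict.device}/{conflict.data_type}: {resolution.value}"


protected = {"heart_rate", "location", "weight"}
conflicts = [
    Conflict("smartwatch", "heart_rate", relevance=2),
    Conflict("phone", "location", relevance=3),
    Conflict("scale", "weight", relevance=1),
]

ordered = by_relevance(conflicts)
print([c.data_type for c in ordered])  # ['location', 'heart_rate', 'weight']
print(resolve(ordered[1], Resolution.ALLOW, protected))
print(sorted(protected))  # ['location', 'weight']
```

Whether an override should apply to one device or to the data type across all devices is a design decision the sketch does not settle; a per-device variant would store `(device, data_type)` pairs instead.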

3.3 Comprehensive user test

The user test was divided into six sections, which are described below in the order in which they appeared: a pre-questionnaire, prototype-based user test tasks, quantitative and qualitative questionnaires, A/B test pairs, cooldown questions in the form of a semi-structured interview, and adapted questionnaires on privacy attitudes and GDPR knowledge.

First, participants filled out a preliminary questionnaire assessing their demographics and their relationship with self-measurement technology. The latter was assessed by having participants identify with short forms of the project’s defined user personas. 33 After the pre-questionnaire, participants were instructed to carry out a set of user tasks with the prototype. To identify the most crucial features, a red route importance ranking was conducted based on the proportion of users and the frequency of use. After each task, participants answered post-task questions that encouraged them to report perceived sources of problems, preferences, and needs.
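A red route ranking of this kind can be sketched as the product of two ordinal ratings, so that features used by many users and used often rise to the top. The rating scales, the multiplicative score, and the example features below are assumptions for illustration; the text does not report the scales used or the resulting ranking.

```python
# Hypothetical ordinal ratings for the two red route criteria.
USER_PROPORTION = {"few": 1, "some": 2, "most": 3, "all": 4}
FREQUENCY_OF_USE = {"rarely": 1, "sometimes": 2, "often": 3, "very often": 4}

# Hypothetical feature ratings: (proportion of users, frequency of use).
features = {
    "resolve a data protection conflict": ("all", "often"),
    "set privacy preferences": ("all", "rarely"),
    "read an information center article": ("some", "sometimes"),
    "download a data subject right template": ("most", "sometimes"),
}


def red_route_score(proportion: str, frequency: str) -> int:
    """Combine both criteria; features used by many users, often, score highest."""
    return USER_PROPORTION[proportion] * FREQUENCY_OF_USE[frequency]


# sorted() is stable, so features with equal scores keep their listed order.
ranking = sorted(features, key=lambda name: red_route_score(*features[name]), reverse=True)
for rank, name in enumerate(ranking, start=1):
    print(rank, name, red_route_score(*features[name]))
```

A multiplicative score is only one option; a weighted sum or a simple two-dimensional grid would serve the same purpose.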

In the next parts of the user test, two standardized usability and UX questionnaires were employed. The SUS and the UEQ were combined because of their complementary strengths and weaknesses. While the SUS provides a straightforward way to assess the perceived usability of a product, it does not cover users’ perception of security, which has been shown to be critical for identity management and data protection. 34 The UEQ was therefore used to extend the range of user-centered aspects that could be assessed.
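The SUS score follows a fixed rule: each of the ten items is answered on a five-point scale, odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to yield a score between 0 and 100. A minimal Python sketch of this standard scoring rule:

```python
def sus_score(responses: list[int]) -> float:
    """Compute the System Usability Scale score (0-100) from ten 1-5 responses."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS responses must lie between 1 and 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```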

Another study goal was to assess the application’s impact on users’ perceived transparency and intervenability regarding data privacy. For this purpose, two A/B test pairs of relevant application pages were created. Participants were encouraged to familiarize themselves with both versions of a pair before describing which version they preferred and why. The differences between the A and B versions related to privacy transparency and the extent of intervenability. For example, version A’s screen presented substantially more information than version B, representing a higher level of transparency. The first A/B pair depicted the application’s dashboard; its key difference was how the significance of a discovered “Data Conflict” was portrayed. Version A presented it as comprehensive continuous text, whereas version B assigned a significance value to the data conflict using a traffic light system. Similarly, the second A/B pair varied the extent of descriptive text and contrasted the use of icons. Differences in the level of intervenability were designed based on how similar intervention options were clustered and presented: one version offered direct links to specific data subject right templates, while the other only suggested that the conflict could be resolved through the helpdesk functionalities. Participants’ remarks on the respective versions were categorized and assessed based on the interview transcripts.
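Participants’ stated preferences for each A/B pair can be summarized as shares of all responses. A minimal Python sketch; the response labels (including `"none"` for no clear preference) and the example choices are hypothetical and not the study’s data.

```python
from collections import Counter


def preference_shares(choices: list[str]) -> dict[str, float]:
    """Return the share of responses for each option (e.g. "A", "B", "none")."""
    counts = Counter(choices)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {option: count / total for option, count in counts.items()}


# Hypothetical choices for one A/B pair; "none" marks no clear preference.
pair_dashboard = ["B", "B", "A", "B", "none", "A", "B", "B"]
shares = preference_shares(pair_dashboard)
print({option: round(share, 3) for option, share in shares.items()})
```

With 20 participants, such shares are descriptive only; the qualitative reasons given for each choice carry most of the information.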

At the end of the user test, participants were asked a series of cooldown questions to capture their reflections on the prototype as a whole. These included a holistic rating of the application on a five-point Likert scale (“How did you like the application overall?”). Participants were then asked about positive and negative aspects of the application (“What about the application did you like specifically?”, “What about the application did you not like?”), reasons that would prevent them from using it (“What would be things that would hinder you from using the application?”), and wishes for its future state (“What are your wishes for a future state of the application?”). The last questions addressed participants’ preferences regarding the frequency and type of application notifications (“Would you like to receive notifications from this application (e.g. about data protection conflicts found or changes to the status of the sanity check)? If yes, how often?”), their assessment of whether using the application would influence their perceived transparency and intervenability regarding data protection, and the situations in which they would use such an application (“Imagine you were to use such an application in your everyday life. What would change in terms of your transparency and ability to intervene on the subject of data protection? In which situations would you like to use it?”).

To further assess participants’ privacy attitudes (PRIV questionnaire) and their knowledge of GDPR contents (GDPR questionnaire), two short questionnaires with four items each were used; their items are reported in Table 1. Items of the PRIV questionnaire used a seven-point Likert scale ranging from “completely disagree” to “completely agree”. They asked about participants’ sensitivity to how companies manage personal information, the importance of maintaining privacy with online companies, the relevance of personal privacy compared to other issues, and the level of concern about threats to one’s privacy. The GDPR questionnaire also used a seven-point Likert scale, the difference being that each item had its own scale labels. Participants were asked about their familiarity with the GDPR and their level of knowledge about its contents. Finally, they were asked to rate their knowledge compared to the rest of the population and to indicate how clearly they understood which features of the GDPR are important to them.

Privacy Attitude (PRIV) 35 and General Data Protection Regulation Knowledge (GDPR) 36 questionnaires.

| Item | Question | Response scale |
|---|---|---|
| priv_item1 | “I am sensitive to the way companies manage my personal information” | (1) Completely disagree – (7) completely agree |
| priv_item2 | “It is important that my privacy is maintained with online companies” | (1) Completely disagree – (7) completely agree |
| priv_item3 | “Personal privacy is particularly important compared to other issues” | (1) Completely disagree – (7) completely agree |
| priv_item4 | “I am concerned about threats to my personal privacy” | (1) Completely disagree – (7) completely agree |
| gdpr_item1 | “How familiar are you with the General Data Protection Regulation (GDPR)?” | (1) Completely unfamiliar – (7) completely familiar |
| gdpr_item2 | “How much do you know about the GDPR?” | (1) Very little – (7) very much |
| gdpr_item3 | “How would you rate your knowledge of the GDPR compared to the rest of the population?” | (1) I am among the least knowledgeable – (7) I am among the most knowledgeable |
| gdpr_item4 | “How clear is your understanding of what features of the GDPR are important to provide you with maximum satisfaction?” | (1) Not clear at all – (7) very clear |
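Because the PRIV and GDPR items are ordinal seven-point ratings, per-item medians and response distributions are a natural way to summarize them. The following Python sketch shows one such summary; the example responses are invented for illustration, and the choice of summary statistics is an assumption rather than the study’s documented procedure.

```python
from collections import Counter
from statistics import median


def summarize_item(responses: list[int], scale_max: int = 7) -> dict:
    """Median and frequency distribution of one Likert item (1..scale_max)."""
    if any(not 1 <= r <= scale_max for r in responses):
        raise ValueError(f"responses must lie between 1 and {scale_max}")
    counts = Counter(responses)
    return {
        "median": median(responses),
        # Number of responses for each scale point, from 1 to scale_max.
        "distribution": [counts[point] for point in range(1, scale_max + 1)],
    }


# Invented responses for illustration only.
priv_item4 = [2, 4, 5, 6, 7, 3]
print(summarize_item(priv_item4))
```

A wide distribution, as reported for priv_item4, is easier to see in the frequency counts than in the median alone.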

3.4 Qualitative data analysis

Lastly, a qualitative valence analysis of the transcribed user test audio was conducted using deductive and inductive approaches. Following the recommendations of Mayring, 16 a set of categories was first established based on initial assumptions about correlations. A comprehensive coding system was then created through systematic observation and iterative refinement. This coding system covered aspects of usability, UX, sources of errors, participants’ attitudes toward privacy issues, and their perceptions of the transparency and privacy intervention options provided by the interactive system. The collected textual items were carefully examined and classified as positive or negative statements based on their context, allowing for a more nuanced analysis of participant feedback. Figure 3 presents an overview of the coding system.

Qualitative coding system.
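The valence classification lends itself to a simple tally of positive and negative statements per category. The sketch below rebuilds coded statements from counts reported in Section 4.3 for structure and flow (82 of 92 positive), effectiveness (25 of 25), and efficiency (52 of 55); the category labels and data layout are hypothetical.

```python
from collections import defaultdict

VALENCES = ("positive", "negative")


def valence_tally(coded: list[tuple[str, str]]) -> dict[str, dict[str, int]]:
    """Count positive and negative statements for each category."""
    tally = defaultdict(lambda: {v: 0 for v in VALENCES})
    for category, valence in coded:
        if valence not in VALENCES:
            raise ValueError(f"unknown valence: {valence}")
        tally[category][valence] += 1
    return dict(tally)


def positive_share(counts: dict[str, int]) -> float:
    """Share of positive statements among all statements of a category."""
    return counts["positive"] / (counts["positive"] + counts["negative"])


# Coded statements rebuilt from the counts reported in Section 4.3.
coded = (
    [("structure and flow", "positive")] * 82
    + [("structure and flow", "negative")] * 10
    + [("effectiveness", "positive")] * 25
    + [("efficiency", "positive")] * 52
    + [("efficiency", "negative")] * 3
)

for category, counts in valence_tally(coded).items():
    print(f"{category}: {positive_share(counts):.1%} positive")
```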

4 Results

The following section summarizes the findings from the 20 moderated user tests conducted. Presented in the results are findings regarding participants’ attitudes towards privacy, their knowledge of the GDPR, the usability of the high-fidelity prototype, the overall UX, as well as comments expressed by participants about the individual features of the prototype and their influence on perceived transparency and intervenability.

4.1 Privacy and data protection

Regarding privacy attitudes, participants predominantly described themselves as sensitive to how companies manage their data (priv_item1). They placed particular importance on maintaining their privacy with online companies (priv_item2). Furthermore, personal privacy emerged as a significant concern compared to other topics (priv_item3). At the same time, responses to priv_item4 were more widely distributed, indicating varying levels of concern about threats to their privacy.

Most participants reported a moderate understanding of the GDPR, with responses falling in the middle of the seven-point Likert scale for gdpr_item1, gdpr_item2, and gdpr_item4, and a slight tendency towards lower knowledge levels when comparing themselves to the rest of the population (gdpr_item3). The qualitative data analysis reflected participants’ interest in data protection and privacy issues and their assessment of the usability of opt-out options within the prototype. Almost every participant reported at least a basic understanding of the GDPR, indicating a certain level of familiarity with the regulation. However, their interest in data protection issues varied considerably, with attitudes ranging from considering data protection the “most important aspect” to regarding it as “obsolete”.

4.2 Usability and user experience

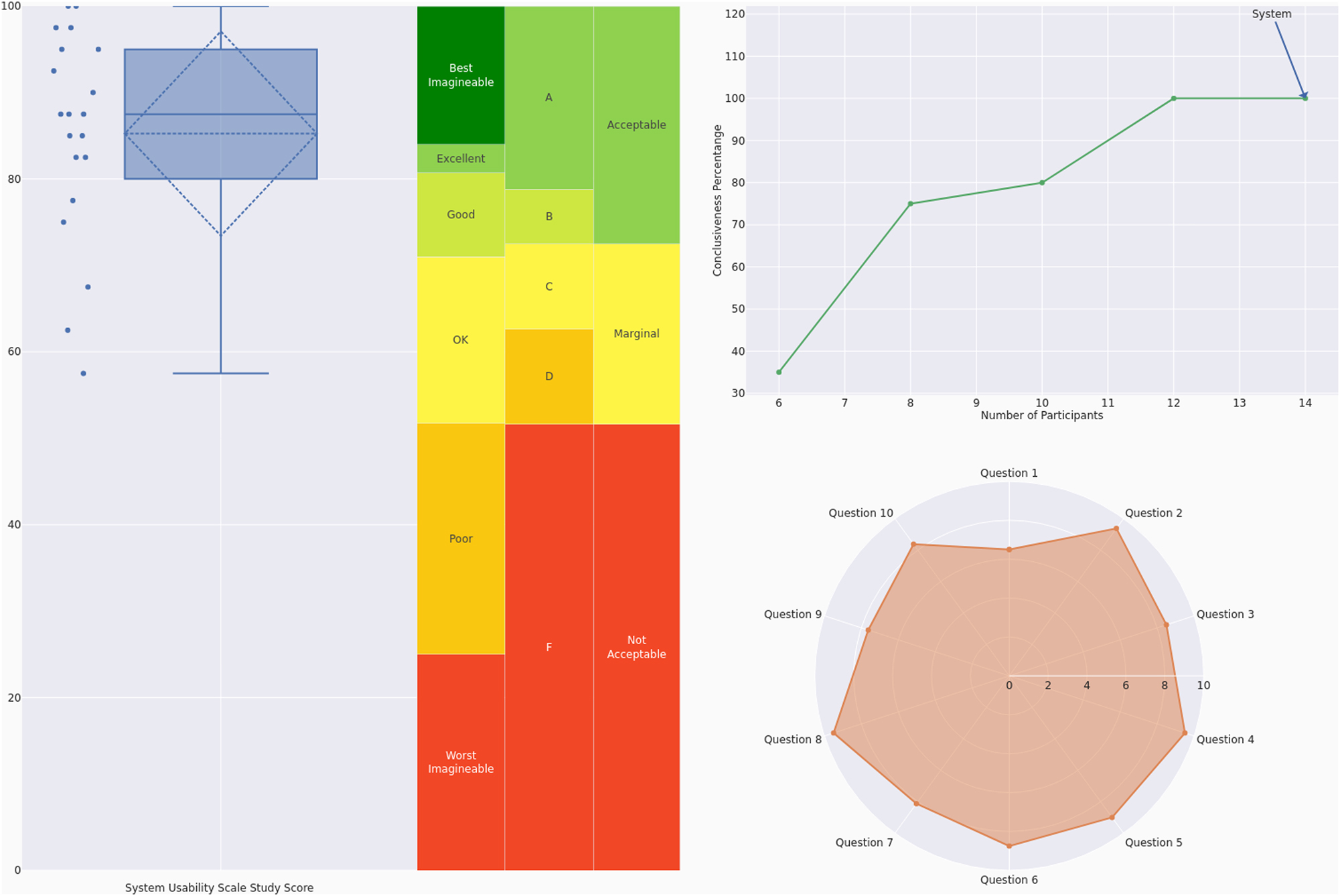

The overall usability of the high-fidelity prototype was assessed using the SUS. Participants gave the prototype a “best imaginable” SUS rating (Md = 87.5, M = 85.25, SD = 12.14) according to the adjective rating system. 37 The standard deviation of 12.14 indicates some variation in participant responses, but overall the scores cluster relatively closely around the high mean, suggesting consistent usability ratings. The radar chart in Figure 4 shows which SUS items performed particularly well (items 2, 4, and 8) and which fell below the average (items 1 and 9).

System usability scale results (adjective-, grade- and acceptability-scale, conclusiveness, radar chart). 38
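The per-item values behind a SUS radar chart and the reported median, mean, and standard deviation can be derived from raw responses as sketched below. The three response sets are invented for illustration, and the sketch assumes the sample standard deviation (n - 1), which the text does not state explicitly.

```python
from statistics import mean, median, stdev


def item_contributions(responses: list[int]) -> list[int]:
    """Per-item SUS contributions (0-4): odd items r - 1, even items 5 - r."""
    # enumerate starts at 0, so an even index corresponds to an odd-numbered item.
    return [(r - 1) if i % 2 == 0 else (5 - r) for i, r in enumerate(responses)]


def sus_summary(all_responses: list[list[int]]) -> dict:
    """Median, mean, and SD of SUS scores plus mean contribution per item."""
    contributions = [item_contributions(r) for r in all_responses]
    scores = [sum(c) * 2.5 for c in contributions]
    return {
        "Md": median(scores),
        "M": mean(scores),
        "SD": stdev(scores),  # sample standard deviation (n - 1)
        # Mean contribution per item across participants, e.g. for a radar chart.
        "per_item": [mean(column) for column in zip(*contributions)],
    }


# Invented responses from three participants, for illustration only.
responses = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
print(sus_summary(responses))
```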

Furthermore, the UEQ was used to assess the overall UX of the high-fidelity prototype. The sample showed positive mean values on all UEQ scales. The scales attractiveness (M = 1.93, SD = 0.65), transparency (the UEQ scale also known as perspicuity; M = 2.24, SD = 0.76), efficiency (M = 1.95, SD = 0.86), dependability (M = 1.78, SD = 0.73), and stimulation (M = 1.51, SD = 0.89) all received positive evaluations according to the benchmarking criteria, with mean scores well above +0.8. 39 While still rated positively, the novelty scale (M = 1.38, SD = 1.06) fell within the “good” rather than the “excellent” category, indicating room for improvement in terms of innovative aspects (Figure 5). 39

User experience questionnaire results on benchmark. 39
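UEQ answers are recorded on a seven-point scale whose positive term is on the left for some items and on the right for others, so raw values are first transformed to a range from -3 to +3; a scale value is then the mean of its transformed items, and means above +0.8 are conventionally read as a positive evaluation (below -0.8 as negative). The following Python sketch shows these steps for one participant; item polarities and answers are hypothetical, and the benchmark categories (“excellent”, “good”, and so on) rely on published per-scale thresholds that are not reproduced here.

```python
from statistics import mean


def transform(raw: int, positive_on_right: bool) -> int:
    """Map a raw 1-7 answer to -3..+3 so that +3 is always the positive pole."""
    if not 1 <= raw <= 7:
        raise ValueError("UEQ answers must lie between 1 and 7")
    return raw - 4 if positive_on_right else 4 - raw


def scale_mean(items: list[tuple[int, bool]]) -> float:
    """Mean of the transformed items belonging to one UEQ scale."""
    return mean(transform(raw, positive_on_right) for raw, positive_on_right in items)


def evaluate(scale_value: float) -> str:
    """Conventional reading of UEQ scale means around the +/-0.8 thresholds."""
    if scale_value > 0.8:
        return "positive"
    if scale_value < -0.8:
        return "negative"
    return "neutral"


# Hypothetical answers of one participant to four items of one scale:
# (raw answer, whether the positive term is on the right-hand side).
novelty_items = [(6, True), (2, False), (5, True), (4, True)]
value = scale_mean(novelty_items)
print(value, evaluate(value))  # 1.25 positive
```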

4.3 User preferences and impressions

This section explores user preferences and impressions based on qualitative feedback from the transcripts, analyzed with a structured coding system. A total of 1,167 codes were assigned, capturing both positive and negative mentions across various categories. Organizing the feedback by topic highlights the key themes. All quotations in this section are taken directly from participant responses.

Usability Strengths: In general, the prototype received favorable comments and was often described as “intuitive” and “goal-oriented.” Participants appreciated the “lean design,” which appeared “modern and refreshing,” as well as the application’s ease of learning. With a few exceptions, the information architecture was characterized as “suitable” and “understandable” or in similar terms (about 75 % of coded comments). Participants rated the structure and flow of the UX as good (82 out of 92 coded comments). According to participants, the aspects that contributed most to the usability of the application were the “straightforwardness of processes,” support structures such as pre-filled text fields or activation codes, “recommendation features” that assisted decision-making, and the personalization of the application’s content through the definable privacy skill level.

Terminology Challenges: The UX was negatively affected by “the length of the setup” and by the terminology used for the application’s functions and for the labels of some individual interaction fields (22 out of the 26 coded comments). The most frequently criticized labels were those of the main functions “Privacy Helpdesk” and “Information Center”. Many participants had a firm idea of what a “Helpdesk” is, which did not match the actual functions of the prototype: they expected the Helpdesk to be the place to get assistance with application features. Shorter versions of “Data Protection Administration” could be more suitable labels; according to the participants, however, “almost any name that does not evoke fixed expectations” would be suitable. For the Information Center, it was not so much the name itself that was criticized, but rather the abbreviation “Info” used in the prototype, which participants also expected to have a different function. In line with the recommendation for the Helpdesk, showing the complete title (“Information Center”) together with a distinguishable icon could improve the user experience and prevent confusion.

User Expectations and Data Sharing: Of the 26 coded comments about expectations before, during, and after the interaction, 18 were favorable toward the prototype and indicated that participants’ expectations were mostly met. In particular, the presentation of the user interface, the interactive behavior, and the functionalities of the prototype were in line with the user group’s anticipations. This suggests that the application was suitably designed for the given sample and context of use. Participants’ opinions were split on whether they wanted to view the raw data from their self-measurement technology in the privacy assistant: some desired such connectivity, while others strictly rejected sharing self-measurement data with a third-party application. However, only six of the 20 participants commented on this topic.

Effectiveness and Efficiency: Besides user satisfaction, usability comprises two further aspects: perceived effectiveness and perceived efficiency. Coded comments on effectiveness were 100 % positive (25 out of 25 coded comments), and comments on efficiency were almost entirely positive (52 out of 55). Participants’ comments on effectiveness and efficiency emphasized the ease and speed with which they completed their planned actions, thanks to the “intuitive structure” and “flow of the system.” According to participants, presetting data protection preferences during setup and the fast navigation structures contributed substantially to efficiency. The only factor limiting efficiency arose when filling out the templates for data subject rights: participants criticized that the template is only downloaded rather than sent directly from the application to the responsible party. This finding contributed to a better understanding of users’ goals.

A/B Test Findings: The A/B tests revealed that about 75 % of participants preferred visual cues and icons over text when trying to solve a given task. Each A/B test presented one version with high information content and one with lower information content, thereby varying the level of transparency and intervenability. Preferences regarding the level of transparency differed: some participants preferred the lower reading effort and described the reduced information content as “sufficient,” while others wanted a concise text that could be expanded on demand. According to participants, the “grouping of similar functions” improved perceived intervenability by conveying how the different options belong together. Versions with icons were also preferred over versions without, as icons would “assist with recognition of the functions and reduce reading effort.” This preference for less information, however, was conditional on key information remaining transparent to the user.

Privacy Settings Clarity: Regarding the clarity of the user interface, the breakdown of the privacy preference settings drew negative feedback. Participants criticized the “mental effort required to find the changes” between the setting levels. The breakdown currently lists both the “unprotected” and the “protected” data types. An improved presentation could use an overview table that shows the preferences next to the data types, together with a staggered presentation of the changes between levels. By contrast, one aspect was rated positively without exception: the color design of interaction fields, warning messages, and related interface elements in general (41 out of 41 coded comments). The design of the warning messages for irreversible actions in particular was perceived as “effective.”

Accessibility and Personalization: Both the post-task question about participants’ suggestions for improvement and the cooldown question about wishes for the future state of the application elicited a particularly large number of requests, totaling 97 coded comments. It is important to note that these comments were participant-specific and were therefore usually not repeated across the sample. However, several requests were named multiple times. Regarding accessibility, participants asked for text input by speech and for the output of text content via text-to-speech systems. These would reduce barriers for people with physical impairments and allow this user group to use the application fully. Also in the area of accessibility, participants requested a “senior mode” characterized by “large font sizes and simpler labels.” Another request was a tutorial for exploring the functions and contents of the application on first use; such help structures should, however, be “repeatable even after longer use of the application, yet not mandatory to start with.” A further set of requests concerned the personalizability of the application. For example, participants discussed extending the “Information Center Skill-Level” to all application content, so that users could choose between a detailed “expert mode” and a less extensive “fast mode.” According to participants, one personalization that could have a significant positive impact on the user experience is the “smart customization” of recommendations and ratings: after a privacy conflict is resolved, the application would ask the user whether its rating was appropriate or should be changed. Over time, this could achieve a “near-perfect fit” to user preferences.
Some participants also envisioned the same adaptation for the contents of the Information Center, where the application would adjust its article selection to the user’s fields of interest based on feedback. To speed up this process, some participants stated that they would answer a short sequence of assessment questions if this resulted in noticeably different recommendations. A final personalization request concerned notifications: participants wanted not only to turn notifications on or off by topic but also to receive them at defined times or at set intervals. In addition, participants requested a function for storing information, which they compared to a “digital wallet.” It would contain all the information needed to fill out a data subject rights template (e.g., the user’s own address, the addresses of the responsible parties, and identifiers of various manufacturers).

Transparency and Intervenability: In a final assessment of perceived transparency, 17 of the 20 participants confirmed that using the tested application would have a positive effect on perceived transparency. They considered the application a good “interface for data protection” that presents information in a user-friendly way. Those who felt that the application would not affect their perceived transparency cited either their extensive prior knowledge or their limited exposure to privacy issues as reasons. In the final evaluation of perceived intervenability, an equal number of participants, though not the same individuals, reported that using the application would have a positive impact. The opportunities for intervention appeared “meaningful, effective, and usable” to them. Participants who saw no influence on their intervenability gave the same reasons as those cited for perceived transparency.

5 Discussion

This study presented results on how users perceive, understand, and experience a privacy assistant tool in the self-tracking domain. The user requirements identified in the project were translated into design solutions and evaluated iteratively based on expert feedback, followed by a concluding evaluation. The test covered participants’ subjective assessments of the interactive system’s usability, their UX when using the application, and their assessment of the application’s impact on transparency and intervenability in data protection. Furthermore, an extensive qualitative content analysis was used to investigate the preferences of the representative user group regarding presentation and information structures.

The findings for the perceived usability of the interactive prototype fall within an exceptionally good range, and the sample group responded favorably to the privacy assistant in terms of UX. Participants’ answers to questions about their privacy attitudes and GDPR knowledge were consistently mirrored in how they carried out the user test tasks. The evaluation of the PRIV-Questionnaire revealed that participants typically regarded privacy as important to particularly important. During use of the privacy assistant, participants took no actions that matched the description of a “privacy paradox” as described by Hoffmann et al. 40 Based on this, the presentation and functionality of the various privacy preferences and additional intervention options in the privacy assistant seem appropriate for enabling users to act in accordance with their goals if they choose to do so. A similar conclusion can be drawn from the results of the GDPR questionnaire. Within the setup process of the privacy assistant, users are prompted to indicate their “Privacy Skill Level” by choosing one of three descriptive texts. This three-level self-assessment can be compared with the responses on the seven-point questionnaire scale on GDPR knowledge. In the final questionnaire, participants rated their GDPR knowledge toward the lower end of this scale, which contrasts with the privacy skill level selected in the privacy assistant, which leaned toward higher proficiency. This shift in self-assessment may be attributed to the illusion of explanatory depth, a phenomenon whereby people assume they are well-informed about a topic or how a system works until they are asked to explain it. 41 Thus, participants may have initially perceived themselves as more knowledgeable until they encountered new information on data protection or unfamiliar GDPR articles while completing the tasks.

The strength of the study lies in its comprehensive qualitative content analysis and its extensive analysis of perceived usability and UX. This made it possible to explore, understand, and summarize user preferences regarding presentation formats, information structures, and data protection and privacy elements. In particular, the data protection and privacy elements of the study provide key insights into how users value and interpret these aspects, as well as into their overall interest in the topic. The results of the content analysis emphasize the positive influence of the privacy assistant on user-perceived transparency and control over personal data in the context of self-measurement.

The development of our prototype represents significant progress toward a more human-centered experience in self-tracking privacy assistants. By addressing critical usability gaps identified in recent research, such as inaccessible privacy settings, a lack of real-time data notifications, complex privacy policies, missing transparency tools, and limited retrospective data visibility, the prototype meets essential usage requirements for a transparent and intuitive privacy management solution. Key features such as customizable privacy settings, real-time notifications about contradictory data processing (“data protection conflicts”), and user assistance for intervening in undesired data handling processes effectively support users in exercising their data rights. Adaptable data protection content, which lets users tailor information to their own level of knowledge, appears to further increase understanding and engagement. By fulfilling these usage requirements, our prototype shows promise as a suitable and effective tool for promoting transparency and intervenability in the form of a privacy assistant.

6 Limitations and future work

It should be emphasized that the study’s sample is young compared to the user group described in the usage context description. As a result, while representative conclusions can be drawn for the younger part of the user group, they cannot be transferred to the older part without further study. It should also be noted that the prototype’s functionality was only simulated through design components and micro-interactions, so users may assess and perceive a “real,” technically integrated system differently. Furthermore, long-term use of the tool is a crucial factor in the context of self-measurement technology. Since our study relies on self-reported behavior, participants’ stated intentions may differ from their actual long-term engagement with the privacy assistant. A longitudinal study of sustained user behavior and adoption over time could provide deeper insights into the real-world effectiveness of the tool.

Future work will also focus on evaluating the technical implementation of the privacy assistant in a realistic setting. This involves testing the fully functional system to identify differences between the prototype’s design-based interactions and the capabilities of a technically integrated solution. Comparing the actual implementation with the prototype can reveal the adjustments needed to align functionality with user expectations. Key areas of investigation will include the system’s impact on perceived transparency and intervenability, as well as overall acceptance, usability, and user experience. Given the younger sample of the current study, further research is essential to determine whether these results hold for older user demographics, as outlined in the usage context description. Such a broader study would allow us to assess the system’s usability and effectiveness across the full target audience. Because the transition from prototype to technical implementation may change how users perceive and interact with the system, observing engagement under real conditions is crucial. This next phase will provide a solid basis for refining the system to meet diverse user needs and to ensure that it delivers an intuitive, transparent, and effective privacy management experience.

7 Conclusions

This work studied the perceived usability and UX of the privacy assistant application, as well as users’ preferences regarding information structure and the extent of transparency and intervenability in a data protection context. Overall, the results indicate that participants had varying attitudes toward privacy and diverse levels of GDPR knowledge. The high-fidelity prototype demonstrated excellent usability and received positive UX evaluations across various dimensions. The findings highlighted both strengths and areas for improvement in the design and implementation of the prototype. They indicate that a well-designed privacy assistant can substantially improve perceived transparency and intervenability, key objectives aligned with GDPR principles. User feedback showed that the prototype, with its intuitive interface and structured features, helped users better understand data handling processes and empowered them to exercise control over their data.

Funding source: Bundesministerium für Bildung und Forschung (Federal Ministry of Education and Research)

Award Identifier / Grant number: KIS6AGSE022

Research ethics: Not applicable.

Informed consent: Informed consent was obtained from all individuals included in this study, or their legal guardians or wards.

Author contributions: All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

Use of Large Language Models, AI and Machine Learning Tools: None declared.

Conflict of interest: The authors state no conflict of interest.

Research funding: Funding Agency: BMBF; Grant number: KIS6AGSE022.

Data availability: Not applicable.

References

1. Big Data. Statista. https://de.statista.com/statistik/studie/id/16989/dokument/big-data--statista-dossier/ (accessed 2024-11-12).

2. Ruohonen, J. A Text Mining Analysis of Data Protection Politics: The Case of Plenary Sessions of the European Parliament. ArXiv 2023, abs/2302.09939. https://doi.org/10.48550/arXiv.2302.09939.

3. Global: Fitness/activity Tracking Wristwear Number of Users 2020–2029. Statista. https://www.statista.com/forecasts/1314613/worldwide-fitness-or-activity-tracking-wrist-wear-users (accessed 2024-11-12).

4. 5 Reasons to Quantify Yourself. WITHINGS BLOG. https://blog.withings.com/2012/08/07/5-reasons-to-quantify-yourself-2/ (accessed 2024-11-12).

5. Rosenblat, A.; Wikelius, K.; Boyd, D.; Gangadharan, S. P.; Yu, C. Data & Civil Rights: Health Primer. In Data & Civil Rights Conference, October 2014. https://doi.org/10.2139/ssrn.2541509.

6. Kim, J. Analysis of Health Consumers’ Behavior Using Self-Tracker for Activity, Sleep, and Diet. Telemed. E-Health 2014, 20 (6), 552–558. https://doi.org/10.1089/tmj.2013.0282.

7. Hutton, L.; Price, B. A.; Kelly, R.; McCormick, C.; Bandara, A. K.; Hatzakis, T.; Meadows, M.; Nuseibeh, B. Assessing the Privacy of mHealth Apps for Self-Tracking: Heuristic Evaluation Approach. JMIR MHealth UHealth 2018, 6 (10), e9217. https://doi.org/10.2196/mhealth.9217.

8. Das, A.; Degeling, M.; Smullen, D.; Sadeh, N. Personalized Privacy Assistants for the Internet of Things: Providing Users with Notice and Choice. IEEE Pervasive Comput 2018, 17 (3), 35–46. https://doi.org/10.1109/MPRV.2018.03367733.

9. Ajana, B. Personal Metrics: Users’ Experiences and Perceptions of Self-Tracking Practices and Data. Soc. Sci. Inf. 2020, 59 (4), 654–678. https://doi.org/10.1177/0539018420959522.

10. TESTER Projektwebsite – Digitale Selbstvermessung Selbstbestimmt Gestalten. https://www.tester-projekt.de/ (accessed 2024-11-14).

11. TESTER – Vernetzung und Sicherheit digitaler Systeme. https://www.forschung-it-sicherheit-kommunikationssysteme.de/projekte/tester (accessed 2024-11-14).

12. DIN EN ISO 9241-110:2020-10, Ergonomie der Mensch-System-Interaktion – Teil 110: Interaktionsprinzipien (ISO 9241-110:2020); Deutsche Fassung EN ISO 9241-110:2020; Beuth Verlag GmbH.

13. Hass, C. A Practical Guide to Usability Testing. Consum. Inform. Digit. Health Solut. Health Health Care 2019, 107–124. https://doi.org/10.1007/978-3-319-96906-0_6.

14. Brooke, J. SUS: A Quick and Dirty Usability Scale. Usability Eval Ind 1995, 189.

15. Laugwitz, B.; Held, T.; Schrepp, M. Construction and Evaluation of a User Experience Questionnaire. In HCI and Usability for Education and Work (USAB 2008); Lecture Notes in Computer Science, Vol. 5298; Springer: Berlin, Heidelberg, 2008; pp 63–76. https://doi.org/10.1007/978-3-540-89350-9_6.

16. Mayring, P. Qualitative Inhaltsanalyse: Grundlagen und Techniken, 12th rev. ed.; Beltz Verlag: Weinheim, 2015.

17. Henkel, M.; Heck, T.; Göretz, J. Rewarding Fitness Tracking—The Communication and Promotion of Health Insurers’ Bonus Programs and the Use of Self-Tracking Data. In Social Computing and Social Media. Technologies and Analytics: 10th International Conference, SCSM 2018, Held as Part of HCI International 2018, Las Vegas, NV, USA, July 15–20, 2018, Proceedings, Part II 10; Springer, 2018; pp 28–49. https://doi.org/10.1007/978-3-319-91485-5_3.

18. John, N. A. The Age of Sharing; John Wiley & Sons: Cambridge, 2017.

19. Arias-Cabarcos, P.; Khalili, S.; Strufe, T. “Surprised, Shocked, Worried”: User Reactions to Facebook Data Collection from Third Parties. arXiv:2209.08048 [cs.CR], 2022. https://doi.org/10.48550/arXiv.2209.08048.

20. Barcena, M. B.; Wueest, C.; Lau, H. How Safe Is Your Quantified Self. Symantec: Mountain View, CA, USA, 2014, 16.

21. Zimmer, M.; Kumar, P.; Vitak, J.; Liao, Y.; Chamberlain Kritikos, K. ‘There’s Nothing Really They Can Do with This Information’: Unpacking How Users Manage Privacy Boundaries for Personal Fitness Information. Inf. Commun. Soc. 2020, 23 (7), 1020–1037. https://doi.org/10.1080/1369118x.2018.1543442.

22. Gabriele, S.; Chiasson, S. Understanding Fitness Tracker Users’ Security and Privacy Knowledge, Attitudes and Behaviours. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, 2020; pp 1–12. https://doi.org/10.1145/3313831.3376651.

23. Weinshel, B.; Wei, M.; Mondal, M.; Choi, E.; Shan, S.; Dolin, C.; Mazurek, M. L.; Ur, B. Oh the Places You’ve Been! User Reactions to Longitudinal Transparency about Third-Party Web Tracking and Inferencing. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security; ACM: London, United Kingdom, 2019; pp 149–166. https://doi.org/10.1145/3319535.3363200.

24. Hoffman, D.; Rimo, P. A. It Takes Data to Protect Data. 2017. https://ssrn.com/abstract=2973280; https://doi.org/10.2139/ssrn.2973280.

25. Brimblecombe, F.; Fenwick, H. Protecting Private Information in the Digital Era: North. Irel. Leg. Q 2022, 73, 26–73. https://doi.org/10.53386/nilq.v73iad1.937.

26. Hansen, M.; Jensen, M.; Rost, M. Protection Goals for Privacy Engineering. In 2015 IEEE Security and Privacy Workshops; IEEE: San Jose, CA, 2015; pp 159–166. https://doi.org/10.1109/SPW.2015.13.

27. General Data Protection Regulation (GDPR) – Legal Text. General Data Protection Regulation (GDPR). https://gdpr-info.eu/ (accessed 2024-11-11).

28. Astfalk, S.; Schunck, C. H. Balancing Privacy and Value Creation in the Platform Economy: The Role of Transparency and Intervenability; Gesellschaft für Informatik e.V: Bonn, 2023; pp 135–140.

29. Reuter, C.; Iacono, L. L.; Benlian, A. A Quarter Century of Usable Security and Privacy Research: Transparency, Tailorability, and the Road Ahead. Behav. Inf. Technol. 2022, 41 (10), 2035–2048. https://doi.org/10.1080/0144929X.2022.2080908.

30. Murmann, P. Eliciting Design Guidelines for Privacy Notifications in mHealth Environments. Int J Mob Hum Comput Interact 2019, 11, 66–83. https://doi.org/10.4018/ijmhci.2019100106.

31. Murmann, P.; Fischer-Hübner, S. Tools for Achieving Usable Ex Post Transparency: A Survey. IEEE Access 2017, 5, 22965–22991. https://doi.org/10.1109/ACCESS.2017.2765539.

32. Jantraporn, R.; Kian, A.; Collins, M.; Baden, M.; Mendez, E.; Wehlage, J.; Wolf, E.; Austin, R. R. Evaluation of Mobile Health Cycle Tracking Applications Privacy, Security, and Data Sharing Practices. CIN Comput. Inform. Nurs. 2023, 41, 629–634. https://doi.org/10.1097/CIN.0000000000001045.

33. Böning, F.; Astfalk, S.; Sellung, R.; Laufs, U. Informiertheit und Transparenz im Kontext digitaler Selbstvermessung. In Daten-Fairness in einer globalisierten Welt; Friedewald, M., Roßnagel, A., Neuburger, R., Bieker, F., Hornung, G., Eds.; Nomos Verlagsgesellschaft mbH & Co. KG: Baden-Baden, 2023; pp 247–274. https://doi.org/10.5771/9783748938743-247.

34. Cameron, K. The Laws of Identity; Kim Cameron’s Identity Weblog. https://www.identityblog.com/stories/2005/05/13/TheLawsOfIdentity.pdf.

35. Martin, K. D.; Borah, A.; Palmatier, R. W. Data Privacy: Effects on Customer and Firm Performance. J. Mark. 2017, 81 (1), 36–58. https://doi.org/10.1509/jm.15.0497.

36. Kelting, K.; Duhachek, A.; Whitler, K. Can Copycat Private Labels Improve the Consumer’s Shopping Experience? A Fluency Explanation. J. Acad. Mark. Sci. 2017, 45 (4), 569–585. https://doi.org/10.1007/s11747-017-0520-2.

37. Bangor, A.; Kortum, P. T.; Miller, J. Determining What Individual SUS Scores Mean: Adding an Adjective Rating Scale. J. Usability Stud. 2009, 4 (3), 114–123.

38. Blattgerste, J.; Behrends, J.; Pfeiffer, T. A Web-Based Analysis Toolkit for the System Usability Scale. In Proceedings of the 15th International Conference on PErvasive Technologies Related to Assistive Environments; PETRA ’22; Association for Computing Machinery: New York, NY, USA, 2022; pp 237–246. https://doi.org/10.1145/3529190.3529216.

39. Schrepp, M.; Hinderks, A.; Thomaschewski, J. Construction of a Benchmark for the User Experience Questionnaire (UEQ). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 40–44. https://doi.org/10.9781/ijimai.2017.445.

40. Hoffmann, C. P.; Lutz, C.; Ranzini, G. Privacy Cynicism: A New Approach to the Privacy Paradox. Cyberpsychology J. Psychosoc. Res. Cyberspace 2016, 10 (4). https://doi.org/10.5817/cp2016-4-7.

41. Mills, C. M.; Keil, F. C. Knowing the Limits of One’s Understanding: The Development of an Awareness of an Illusion of Explanatory Depth. J. Exp. Child Psychol. 2004, 87 (1), 1–32. https://doi.org/10.1016/j.jecp.2003.09.003.

© 2025 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.

