Abstract

Based on the spectral analysis of the 4 × 4 matrix spectral problem, we construct a 4 × 4 matrix Riemann–Hilbert problem to solve the initial value problem for the Hermitian symmetric space derivative nonlinear Schrödinger equation. The nonlinear steepest descent method is extended to the 4 × 4 matrix Riemann–Hilbert problem, and the Deift–Zhou contour deformations are described together with the motivation behind them. Through suitable transformations between the corresponding Riemann–Hilbert problems, the basic Riemann–Hilbert problem is reduced to a model Riemann–Hilbert problem. From it, with the help of the asymptotic expansion of the parabolic cylinder function and strict error estimates, we obtain the long-time asymptotic behavior of the solution of the initial value problem for the Hermitian symmetric space derivative nonlinear Schrödinger equation.

1 Introduction

The derivative nonlinear Schrödinger (DNLS) equation

is one of the basic models in integrable systems. This equation describes the propagation of nonlinear pulses in nonlinear fiber optics [1], [2] and arises as a model for Alfvén waves propagating parallel to the ambient magnetic field in plasma physics [3]. Equation (1.1) also has several interesting mathematical properties. In Ref. [4], Kaup and Newell studied the DNLS Equation (1.1) by means of the inverse scattering transformation and derived an infinite number of conservation laws. The N-soliton solution of the DNLS Equation (1.1) was obtained by means of the Darboux transformation [5]. Global existence and uniqueness of solutions of the DNLS Equation (1.1) were proved under an explicit smallness condition on the initial data in Sobolev spaces and in the Schwartz class [6]. Explicit quasi-periodic solutions of the coupled DNLS hierarchy were obtained with the help of the algebro-geometric method [7]. The long-time asymptotics of the solutions of the initial value problem and the initial-boundary value problem for the DNLS Equation (1.1) have been studied on the basis of the nonlinear steepest descent analysis of the associated Riemann–Hilbert problem [8], [9], [10]. Moreover, the integrability and exact solutions of multi-coupled versions of the nonlinear Schrödinger equation and the derivative nonlinear Schrödinger equation have also been discussed extensively (see Ref. [11]), by means of the Darboux transformation, the Riccati equation, and the Baker–Akhiezer function [12], [13], [14], [15], [16], [17], [18], [19], [20].

The nonlinear steepest descent method was first introduced by Deift and Zhou in Ref. [21]. This method provides a powerful tool for reducing the original 2 × 2 matrix Riemann–Hilbert problem to a canonical model Riemann–Hilbert problem whose solution can be expressed explicitly in terms of parabolic cylinder functions. In this way, the long-time asymptotics of the initial value problems for many integrable nonlinear evolution equations associated with 2 × 2 matrix spectral problems have been obtained (see, e.g., [8], [9], [10], [21], [22], [23], [24], [25], [26], [27], [28], [29], [30]). However, there is little literature on the long-time asymptotic behavior of solutions of integrable nonlinear evolution equations associated with 3 × 3 matrix spectral problems, because the 3 × 3 case is considerably more difficult and complicated. In the 2 × 2 case, the auxiliary function used to remove the diagonal factor of the jump matrix satisfies a scalar Riemann–Hilbert problem, whereas in the 3 × 3 case it satisfies a matrix Riemann–Hilbert problem. In general, the solution of a matrix Riemann–Hilbert problem cannot be given in explicit form, while a scalar Riemann–Hilbert problem can be solved by the Plemelj formula. Recently, the nonlinear steepest descent method has been successfully generalized to study the long-time asymptotics of the initial value problems for nonlinear evolution equations related to higher-order matrix spectral problems, for example, the coupled nonlinear Schrödinger equation, the Sasa–Satsuma equation, the Degasperis–Procesi equation and so on [31], [32], [33], [34], [35], [36].

In this paper, the nonlinear steepest descent method is extended to study the long-time asymptotic behavior of solutions of the initial value problem for the Hermitian symmetric space derivative nonlinear Schrödinger (HSS-DNLS) equation associated with a 4 × 4 matrix spectral problem

with the initial data

where the asterisk denotes complex conjugation, and the potentials $q_{\pm1}$ and $q_0$ are three complex-valued functions of the two real independent variables $x$ and $t$. The initial data $q_{j,0}(x)$ lie in the Schwartz space

The contour

Carrying out the spectral analysis and the inverse scattering transformation is difficult here because the associated spectral problem is of high order, namely a 4 × 4 matrix spectral problem. The Hermitian symmetric space derivative nonlinear Schrödinger equation corresponds to a 4 × 4 matrix Riemann–Hilbert problem, whose analysis leads to two 2 × 2 matrix Riemann–Hilbert problems. Since these two problems cannot be solved explicitly, handling them is the main challenge. The main result of this paper is as follows:

Theorem 1.1.

Let $q_j(x, t)$ be the solution of the initial value problem for the HSS-DNLS Equation (1.2) with the initial data

where

Remark 1.

For convenience, we introduce some basic notation: (1) for any matrix $A = (a_{ij})$ (not necessarily square), the norm of $A$ is defined as

where $A_{11}$, $A_{12}$, $A_{21}$ and $A_{22}$ are all 2 × 2 matrices; (4) for two quantities $A$ and $B$, we write $A \lesssim B$ if there exists a constant $C > 0$ such that $|A| \le CB$. In particular, if $C$ depends on a parameter $\alpha$, we write $A \lesssim_{\alpha} B$.

The outline of this paper is as follows. In Section 2, based on the spectral analysis and the inverse scattering transformation, we show how to transform the solution of the initial value problem for the HSS-DNLS equation into the solution of a 4 × 4 matrix Riemann–Hilbert problem. In Section 3, by using the nonlinear steepest descent method, we reduce the original 4 × 4 matrix Riemann–Hilbert problem to a model Riemann–Hilbert problem whose solution can be expressed in terms of parabolic cylinder functions, and we finally obtain the long-time asymptotics of the solution of the initial value problem for the HSS-DNLS equation. The detailed proofs of Theorems 3.1, 3.4, and 3.5 are given in Appendix A.

2 Riemann–Hilbert problem

2.1 Spectral analysis

The HSS-DNLS Equation (1.2) is the compatibility condition of the Lax pair equations

where

We introduce the transformation

where

which can be written in differential form

where

In order to obtain the original Riemann–Hilbert problem related to the initial value problem for the HSS-DNLS Equation (1.2), the solutions of the spectral problem must approach the identity matrix $I_{4\times4}$. The solutions of Equation (2.6) do not have this property. Hence, our next step is to transform Equation (2.6) for ϕ(λ; x, t) into an equation with the desired asymptotic behavior. For this purpose, we define

where W(x, t) is a 2 × 2 matrix valued function and satisfies

with the asymptotic condition $W(x, t) \to I_{2\times2}$ as $x \to -\infty$, $t = 0$. It is easy to see that $W^{-1}(x, t) = W^{\dagger}(x, t)$, and $W(x, t)$ satisfies the integral equation

Again introducing a transformation

we deduce from (2.4) and (2.5) that the Lax pair for μ(λ; x, t) reads

where

The Lax pair of μ(λ; x, t) can be written in differential form

From (2.13), the 4 × 4 matrix U has the block form

We define two matrix Jost solutions $\mu_\pm = \mu_\pm(\lambda; x, t)$ of Equation (2.11) by the Volterra integral equations

with the asymptotic conditions

2.2 Analyticity and the symmetry relations

Let

where $\mu_{\pm L}$ and $\mu_{\pm R}$ denote the first two columns and the last two columns of $\mu_\pm$, respectively. The existence and uniqueness of the Jost solutions $\mu_\pm$ of the integral Equation (2.16) can be proved by standard procedures [38]. A direct inspection of the integral Equations (2.16) shows that $\mu_{-L}$ and $\mu_{+R}$ are continuous in

From (2.3) and (2.10), we know that

Since the matrix U in (2.15) is traceless, $\det\mu_\pm$ is independent of the variable x. Furthermore, by (2.16), $\det\mu_\pm = 1$ at $x = \pm\infty$; hence $\det\mu_\pm(\lambda; x, t) = 1$.
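Two structural facts are used in this section: a traceless coefficient matrix keeps the determinant constant in x (Liouville's formula d/dx det μ = tr(U) det μ), and a generator of the form iH with H Hermitian keeps W unitary, which is the mechanism behind W⁻¹ = W†. Both can be checked numerically. The sketch below is only an illustration under stated assumptions: the coefficient matrices are hypothetical random smooth matrices, not the U of (2.15) or the equation for W, and the integrator is a plain fourth-order Runge–Kutta scheme written for this example.

```python
import numpy as np


def rk4_flow(generator, x0, x1, n_steps, dim):
    """Integrate Y' = generator(x) @ Y with Y(x0) = I by classical RK4; return Y(x1)."""
    h = (x1 - x0) / n_steps
    Y = np.eye(dim, dtype=complex)
    x = x0
    for _ in range(n_steps):
        k1 = generator(x) @ Y
        k2 = generator(x + h / 2) @ (Y + h / 2 * k1)
        k3 = generator(x + h / 2) @ (Y + h / 2 * k2)
        k4 = generator(x + h) @ (Y + h * k3)
        Y = Y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        x += h
    return Y


rng = np.random.default_rng(0)
# Hypothetical coefficient data (NOT the U of (2.15)); scaled to keep growth moderate.
B0 = 0.25 * (rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4)))
B1 = 0.25 * (rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4)))


def traceless_generator(x):
    """Smooth 4x4 matrix function with its trace removed, so tr U(x) = 0."""
    B = B0 * np.exp(-x**2) + B1 * np.sin(x)
    return B - np.trace(B) / 4 * np.eye(4)


def skew_hermitian_generator(x):
    """Smooth 2x2 generator iH(x) with H(x) Hermitian (hypothetical)."""
    A = B0[:2, :2] * np.cos(x)
    H = (A + A.conj().T) / 2
    return 1j * H


mu = rk4_flow(traceless_generator, -1.0, 1.0, 2000, 4)
W = rk4_flow(skew_hermitian_generator, -1.0, 1.0, 2000, 2)

det_error = abs(np.linalg.det(mu) - 1)                      # Liouville: det stays 1
unitary_error = np.linalg.norm(W.conj().T @ W - np.eye(2))  # W^dagger W stays I
print(f"|det mu - 1| = {det_error:.2e}, ||W^dagger W - I|| = {unitary_error:.2e}")
```

Replacing `traceless_generator` by a matrix with nonzero trace makes det μ drift by the factor exp(∫ tr U dx), which is exactly what the tracelessness of U rules out.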

Combining this with (2.19), we find that det s(λ) = 1. The matrix U in (2.15) satisfies the following two symmetry relations

It follows from (2.11) that

Therefore, we obtain by (2.19) that

The 4 × 4 scattering matrix s(λ) can be rewritten in a block form

We suppose that $s_{12}(\lambda)$ and $s_{22}(\lambda)$ are invertible in the domain $D_-$. By (2.23), it is easy to verify that

Taking t = 0 and x → +∞ in (2.19), we infer that the scattering matrix s(λ) satisfies the integral equation

which implies

2.3 The Riemann–Hilbert problem

Define

In fact, by using formula (2.19) and definition (2.30), we find that the matrix M(λ; x, t) is analytic in

where the left and the right boundary values along the oriented contour on

The oriented contour on

The 2 × 2 matrix-valued function γ(λ) is the reflection coefficient corresponding to the initial data $q_{j,0}(x)$. It lies in the Schwartz space

Since the jump matrix J(λ; x, t) is positive definite, the existence and uniqueness of the solution of the Riemann–Hilbert problem (2.31) are guaranteed by the Vanishing Lemma [39].

Theorem 2.1.

The solution of the initial value problem for the HSS-DNLS Equation (1.2) can be expressed as

where

3 Long-time asymptotic behavior

In this section, by using the nonlinear steepest descent method, we reduce the original Riemann–Hilbert problem (2.31), after several appropriate transformations, to a model Riemann–Hilbert problem with constant coefficients. We then obtain the leading-order long-time asymptotics of the solution of the HSS-DNLS Equation (1.2).

3.1 Transformation of the Riemann–Hilbert problem

The key step of the method is to transform the original Riemann–Hilbert problem according to the signature table for the phase function θ in the jump matrix J defined in (2.32). It follows that there are three stationary points

For a better understanding, we write $\lambda = \lambda_R + i\lambda_I$, where

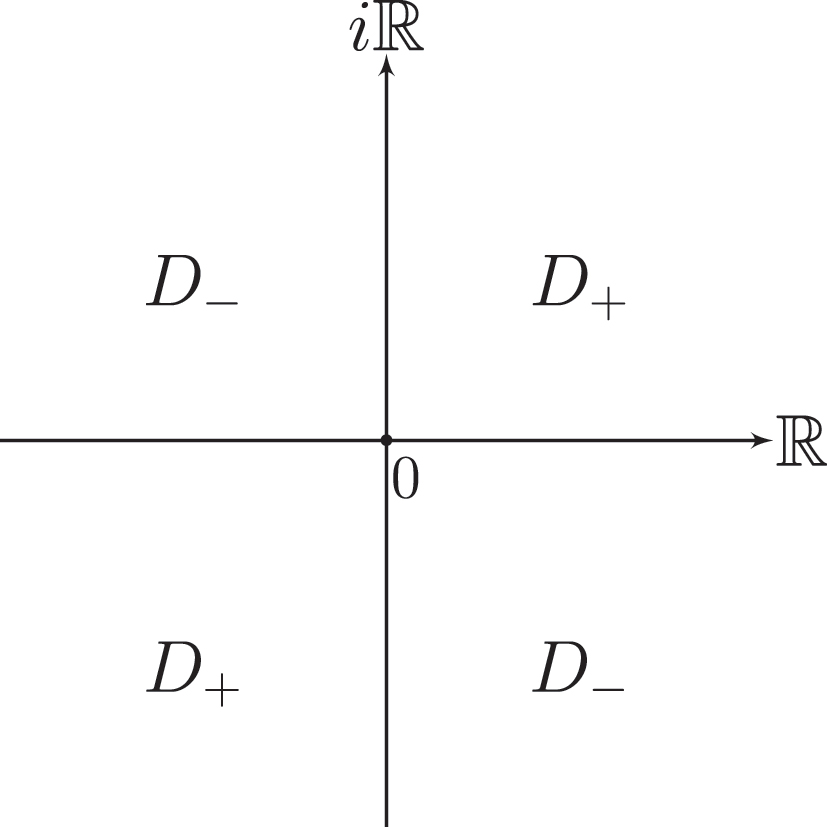

Therefore, the signature table for the real part of iθ is as depicted in Figure 3.

The signature table for Re(iθ) in the complex λ-plane.
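The phase θ of (2.32) is not reproduced here, so the following sketch uses a hypothetical quartic phase θ(λ) = 4λ⁴ − 8λ₀²λ², chosen only because, like the phase in the text, it has three real stationary points, 0 and ±λ₀. It locates the stationary points numerically and evaluates the sign of Re(iθ) = −Im θ at λ = λ_R + iλ_I. For this particular phase, Re(iθ) = −16λ_Rλ_I(λ_R² − λ_I² − λ₀²), so the zero set (the two axes and the hyperbola λ_R² − λ_I² = λ₀²) plays the role of the boundary curves in a signature table such as Figure 3.

```python
import numpy as np

LAM0 = 1.0  # hypothetical stationary point lambda_0 > 0


def theta(lam, lam0=LAM0):
    """Hypothetical quartic phase with stationary points 0 and +/- lam0."""
    return 4 * lam**4 - 8 * lam0**2 * lam**2


def re_i_theta(lam_r, lam_i, lam0=LAM0):
    """Re(i*theta) = -Im(theta) evaluated at lam = lam_r + i*lam_i."""
    return -np.imag(theta(lam_r + 1j * lam_i, lam0))


def re_i_theta_closed_form(lam_r, lam_i, lam0=LAM0):
    """Closed form of Re(i*theta) for this particular phase."""
    return -16 * lam_r * lam_i * (lam_r**2 - lam_i**2 - lam0**2)


# theta'(lam) = 16 lam^3 - 16 lam0^2 lam; its roots are the stationary points
stationary = np.sort(np.real(np.roots([16.0, 0.0, -16.0 * LAM0**2, 0.0])))

# Coarse sign table: rows indexed by lam_i (top row = largest), columns by lam_r
grid = np.linspace(-2.0, 2.0, 9)
signs = np.sign(re_i_theta(grid[None, :], grid[::-1, None])).astype(int)
print("stationary points:", stationary)
print(signs)
```

With λ₀ = 1, for example, Re(iθ) is negative at λ = 2 + 0.5i and positive at λ = 0.5 + 0.5i, in agreement with the closed form.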

Now we introduce two 2 × 2 matrix-valued functions $\delta_1(\lambda)$ and $\delta_2(\lambda)$, which satisfy, respectively, the Riemann–Hilbert problems

with

Since the jump matrices $T_1$ and $T_2$ are positive definite, by uniqueness we have

Inserting (3.5) into (3.3) and (3.4), we find

Hence, by the maximum principle, we have

which implies by the relation (3.5) that

Taking the determinant of both sides of the Riemann–Hilbert problems (3.3) and (3.4), respectively, we obtain the same scalar Riemann–Hilbert problem

where $\det\delta(\lambda) = \det\delta_1(\lambda) = \det\delta_2(\lambda)$. The function $\det\delta(\lambda)$ is uniquely given by the Plemelj formula [39]

where
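The Plemelj formula solves a scalar Riemann–Hilbert problem of the form δ₊ = δ₋v on an interval, with δ → 1 at infinity, by δ(λ) = exp((2πi)⁻¹∫ log v(s)/(s − λ) ds). Because the jump of the scalar problem for det δ(λ) is not reproduced here, the sketch below assumes a constant jump v > 0 on a single interval [a, b]. In that case the Cauchy integral is the principal logarithm Log((b − λ)/(a − λ)), whose branch cut is exactly [a, b], and the code checks numerically that the ratio of the boundary values from above and below equals v on (a, b) and equals 1 off the interval. The resulting function has the form exp(iν Log((b − λ)/(a − λ))) with ν = −log v/(2π), the kind of power-type local behavior that, in the standard Deift–Zhou analysis, enters the parabolic cylinder model problem.

```python
import cmath
import math


def delta(lam, v, a, b):
    """Solution of delta_+ = delta_- * v on (a, b), delta -> 1 at infinity (constant v > 0).

    Uses (1/(2*pi*i)) * log(v) * int_a^b ds/(s - lam), where the integral equals the
    principal logarithm Log((b - lam)/(a - lam)); its branch cut is exactly [a, b].
    """
    chi = math.log(v) / (2j * math.pi) * cmath.log((b - lam) / (a - lam))
    return cmath.exp(chi)


def jump_ratio(x, v, a, b, eps=1e-12):
    """delta(x + i*eps) / delta(x - i*eps): ratio of the boundary values at real x."""
    return delta(x + 1j * eps, v, a, b) / delta(x - 1j * eps, v, a, b)


v, a, b = 0.6, -1.0, 2.0            # hypothetical jump value and interval
inside = jump_ratio(0.3, v, a, b)   # close to v
outside = jump_ratio(3.0, v, a, b)  # close to 1
far = delta(1e8, v, a, b)           # normalization at infinity
print(inside, outside, far)
```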

It is easy to see that the jump matrix J(λ; x, t) in (2.32) has an upper/lower triangular factorization

and a lower/diagonal/upper factorization
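The role of the two factorizations can be illustrated with a generic 4 × 4 matrix built from 2 × 2 blocks. The sketch below assumes, for illustration only, a jump of the block form J = [[I + ba, b], [a, I]] with b = a†, so that both diagonal blocks are positive definite; the actual blocks of (2.32), which involve γ(λ) and the exponential factors, are not reproduced. It verifies the upper/lower factorization, the lower/diagonal/upper factorization through the Schur complement, the push-through identity I − a(I + ba)⁻¹b = (I + ab)⁻¹, and Sylvester's identity det(I + ba) = det(I + ab). An identity of the last type is what allows two different 2 × 2 diagonal blocks to have the same determinant, as in the scalar problem for det δ(λ).

```python
import numpy as np

rng = np.random.default_rng(1)
I2, Z2 = np.eye(2), np.zeros((2, 2))
a = rng.normal(size=(2, 2)) + 1j * rng.normal(size=(2, 2))  # hypothetical lower-left block
b = a.conj().T                                              # hypothetical upper-right block

J = np.block([[I2 + b @ a, b], [a, I2]])

# Upper/lower triangular factorization: J = [[I, b], [0, I]] [[I, 0], [a, I]]
upper = np.block([[I2, b], [Z2, I2]])
lower = np.block([[I2, Z2], [a, I2]])
err_ul = np.linalg.norm(J - upper @ lower)

# Lower/diagonal/upper factorization via the Schur complement of the (1,1) block
T1 = I2 + b @ a                   # positive definite because b = a^dagger
T1_inv = np.linalg.inv(T1)
schur = I2 - a @ T1_inv @ b       # push-through identity: equals inv(I + a b)
L_fac = np.block([[I2, Z2], [a @ T1_inv, I2]])
D_fac = np.block([[T1, Z2], [Z2, schur]])
U_fac = np.block([[I2, T1_inv @ b], [Z2, I2]])
err_ldu = np.linalg.norm(J - L_fac @ D_fac @ U_fac)

T2 = I2 + a @ b
err_schur = np.linalg.norm(schur - np.linalg.inv(T2))
err_det = abs(np.linalg.det(T1) - np.linalg.det(T2))  # Sylvester: det(I+ba) = det(I+ab)
print(err_ul, err_ldu, err_schur, err_det)
```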

To remove the diagonal matrix in the factorization (3.11), we introduce the transformation

where

and reverse the orientation for $\lambda \in (-\infty, -\lambda_0) \cup (\lambda_0, +\infty)$, as shown in Figure 4.

The reoriented contour on

Then one verifies that $M^{\Delta}(\lambda; x, t)$ solves the Riemann–Hilbert problem

on the reoriented contour depicted in Figure 4. The jump matrix $J^{\Delta}(\lambda; x, t)$ has the lower/upper triangular decomposition

with the help of the 2 × 2 matrix-valued spectral function

3.2 Second transformation: contour deformation

In this subsection, the main purpose is to transform the Riemann–Hilbert problem (3.13) on

where $L = L_0 \cup L_1$, and

The augmented jump contour Σ.

Moreover, we denote

Theorem 3.1.

The 2 × 2 matrix valued spectral function ρ(λ) has a decomposition

where $R(\lambda)$ is piecewise rational and $h_1(\lambda)$ is analytic on

Taking the Hermitian conjugate

leads to the same estimates for

Proof.

See Appendix A. □

According to (3.14) and the above decomposition of ρ(λ), we can rewrite the matrices $b_\pm$ as

Then we introduce a matrix valued function

Lemma 3.1.

The matrix-valued function $M^{\sharp}(\lambda; x, t)$ defined by (3.22) satisfies the Riemann–Hilbert problem

where

Proof.

A direct calculation using (3.13) and the definition of $M^{\sharp}$ in (3.22) shows that the Riemann–Hilbert problem (3.23) holds. The canonical normalization condition for $M^{\sharp}$ follows from the fact that

Then we find that

The next step is to construct an integral equation for the solution $M^{\sharp}$ of the Riemann–Hilbert problem (3.23) (see [21], p. 322, and [40]). Set

and define the Cauchy operators $C_\pm$ on Σ by

where $\lambda_\pm$ denote the left (right) boundary values for λ on the oriented contour Σ. As is well known, the operators $C_\pm$ are bounded from

for a 4 × 4 matrix valued function f and

Then

solves the Riemann–Hilbert problem (3.23). By formula (3.26) and Equation (3.29), we see that

which implies that
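The Cauchy operators C± and the identity C₊ − C₋ = 1 behind this integral equation can be illustrated on the simplest contour, the real line; this choice is an assumption made only for the sketch (Σ in the text is a union of rays). On the real line, C±f = ±f/2 + (i/2)Hf, where Hf(x) = π⁻¹ p.v.∫ f(s)/(x − s) ds is the Hilbert transform, applied below through its Fourier multiplier −i sgn(k) on a large periodic grid. For f(s) = 1/(1 + s²) the boundary values are known in closed form, C₊f(x) = i/(2(x + i)) and C₋f(x) = i/(2(x − i)), so the discretization can be checked against them.

```python
import numpy as np


def hilbert_fft(f_vals):
    """Hilbert transform (1/pi) p.v. int f(s)/(x - s) ds via the multiplier -i*sgn(k).

    Treats the samples as one period of a periodic function, so it is only accurate
    for data that has decayed well inside the window.
    """
    k = np.fft.fftfreq(f_vals.size)
    return np.real(np.fft.ifft(-1j * np.sign(k) * np.fft.fft(f_vals)))


def cauchy_boundary_values(f_vals):
    """Return (C_+ f, C_- f) on the real line: +/- f/2 + (i/2) H f."""
    Hf = hilbert_fft(f_vals)
    return 0.5 * (f_vals + 1j * Hf), 0.5 * (-f_vals + 1j * Hf)


x = np.arange(-200.0, 200.0, 0.05)
f = 1.0 / (1.0 + x**2)
c_plus, c_minus = cauchy_boundary_values(f)

window = np.abs(x) < 5.0  # compare away from the artificial period ends
err_plus = np.max(np.abs(c_plus - 0.5j / (x + 1j))[window])
err_minus = np.max(np.abs(c_minus - 0.5j / (x - 1j))[window])
jump_err = np.max(np.abs(c_plus - c_minus - f))  # C_+ - C_- = 1 holds by construction here
print(err_plus, err_minus, jump_err)
```

The closed-form comparison is the meaningful check; the jump identity holds identically for this discretization because both boundary values share the same Hilbert-transform term.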

Theorem 3.2.

The 2 × 2 matrix valued function

3.3 Contour truncation

In this subsection, we first define the reduced contour

as shown in Figure 6. Then we shall reduce the Riemann–Hilbert problem (3.23) on Σ to the Riemann–Hilbert problem on Σ′. Moreover, we transform the integral expression for

The oriented contour Σ′.

Set $\omega_e = \omega_a + \omega_b + \omega_c$, where

Lemma 3.2.

For $\lambda_0 > C$, we have the following estimates

Proof.

From (3.6), (3.7) and Theorem 3.1, we find that

which implies that estimate (3.35) holds. The estimates (3.36) and (3.37) follow from a similar calculation. Next we consider ω′. By the definition of R(λ) in (A.3), we see that

on the contour

Similarly, we have on the contour

from which the estimates (3.38) follow by simple computations. □

Lemma 3.3.

For $\lambda_0 > C$, as t → ∞,

Proof.

It follows from Proposition 2.23 and Corollary 2.25 in Ref. [21]. □

Theorem 3.3.

For any positive integer l, as t → ∞, we have

Proof.

A direct calculation shows that

By Lemmas 3.2 and 3.3, we obtain

Finally, inserting the above estimates into (3.43) yields (3.42). □

Note. Since ω′(λ) = 0 for λ ∈ Σ∖Σ′, we let

Lemma 3.4.

As t → ∞, the 2 × 2 matrix valued function

Our next step is to construct the corresponding Riemann–Hilbert problem on Σ′.

Define

Then, it follows that

solves the Riemann–Hilbert problem

where

3.4 Separating out the contributions of the three disjoint crosses

In this subsection, we show how to separate out the leading-order contributions of the three disjoint crosses in Σ′ to the 2 × 2 matrix-valued function

Split Σ′ into a union of three disjoint crosses,

Set

where

Lemma 3.5.

Define the operators

for α ≠ β ∈ {A, B, C}.

Theorem 3.4.

As t → ∞, the 2 × 2 matrix-valued function

Proof.

See Appendix A. □

3.5 Rescaling and further reduction of the Riemann–Hilbert problems

In this subsection, we first extend the contours

and define

Let $\Sigma_A$, $\Sigma_B$ and $\Sigma_C$ (see Figure 7) denote the contours

The oriented contours $\Sigma_A$, $\Sigma_B$, and $\Sigma_C$.
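The effect of rescaling on the exponential term can be seen on the hypothetical quartic phase θ(λ) = 4λ⁴ − 8λ₀²λ², which is again not the phase of (2.32). Near the stationary point λ₀, θ(λ) ≈ θ(λ₀) + θ''(λ₀)(λ − λ₀)²/2, so the substitution λ = λ₀ + ζ/√(tθ''(λ₀)) turns t(θ(λ) − θ(λ₀)) into ζ²/2 up to an error of order t^(−1/2). The exact normalization of the scaling operators used in the text may differ; the sketch only evaluates this error for increasing t.

```python
import math

LAM0 = 1.0  # hypothetical stationary point


def theta(lam, lam0=LAM0):
    """Hypothetical quartic phase with stationary points 0 and +/- lam0."""
    return 4 * lam**4 - 8 * lam0**2 * lam**2


def theta_second_derivative(lam, lam0=LAM0):
    return 48 * lam**2 - 16 * lam0**2


def rescaled_phase(zeta, t, lam0=LAM0):
    """t * (theta(lam) - theta(lam0)) with lam = lam0 + zeta / sqrt(t * theta''(lam0))."""
    lam = lam0 + zeta / math.sqrt(t * theta_second_derivative(lam0, lam0))
    return t * (theta(lam, lam0) - theta(lam0, lam0))


zeta = 1.0
errors = [abs(rescaled_phase(zeta, t) - zeta**2 / 2) for t in (1e4, 1e6, 1e8)]
print(errors)  # decreases roughly like t**(-1/2)
```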

Letting the scaling operators act on the exponential term and on det δ(λ), one can derive

where

Define

Lemma 3.6.

For $\lambda \in L_A$, $L_B$ and $L_C$, we have

where

Note: For

Set

Introducing the operators

a simple change of variables argument shows that

where

Furthermore, we first consider the case of A. It follows that

on $L_A$ and

on

Theorem 3.5.

As t → ∞ and $\lambda \in L_A$, we have

where

Proof.

See Appendix A. □

Note. As t → ∞ and

where

Now we construct

From Lemma 3.6 and Theorem 3.5, one infers that

There are similar consequences for the cases of B and C.

Set

For $\lambda \in \Sigma_C$, using the symmetry property of γ(λ) in (2.34) yields

which implies that γ(0) = 0 and γ(i0) = 0. Hence, we have

where

Theorem 3.6.

As t → ∞, the matrix valued function

Proof.

Note that

By the triangle inequality and the error estimate in (3.70), we conclude that

According to (3.61), we see that

The other two cases follow from similar computations. From (3.48), we finally obtain (3.75). □

In the following, we construct the corresponding Riemann–Hilbert problems on the contours $\Sigma_A$, $\Sigma_B$ and $\Sigma_C$ and determine the relations between their solutions. For

Then

where

which implies

For

Then

where

which implies

From (3.74), we can conclude that the coefficient matrix

Using the matrices (3.68), (3.69), (3.71) and (3.72), we have the symmetry relation

By the uniqueness of the solution of the Riemann–Hilbert problem, we arrive at

Using the expansions (3.78) and (3.82), we find that

Finally, we obtain from (3.75) and (3.79) that

3.6 Explicit solution of the model problem

In this subsection, we show how to find explicit formulations in terms of parabolic cylinder functions for

Combining with the Riemann–Hilbert problem (3.77), one infers that

It follows by differentiation that

Together with (3.86), we obtain

Note that $\det v(-\lambda_0) = 1$, which, by Liouville’s theorem, implies that $\det\Psi = 1$. Hence, $\Psi^{-1}$ exists and is bounded. Furthermore, $(d\Psi/d\lambda - i\lambda\sigma_4\Psi)\Psi^{-1}$ has no jump discontinuity along any ray of $\Sigma_A$ and is therefore entire. Consequently,

It follows by Liouville’s theorem that

where

In particular, we have

Using the Riemann–Hilbert problem (3.77), one can verify

which implies that

The 4 × 4 matrix-valued spectral function Ψ(λ) can be written in the block form

From (3.89) and its derivative, we obtain

Using the definition of β in (3.90), we find that $\beta_{12}$ and $\beta_{21}$ are constant 2 × 2 matrices, independent of λ. Set

By the relation in (3.93), we see that

We first consider $\Psi_{11}(\lambda)$. It follows from (3.94) that $\Psi_{11}$ satisfies the following equations:

Let the constant s satisfy

Together with (3.100) and (3.101), one finds that

Consider Weber’s equation

which has the solution

where $c_1$, $c_2$ are constants and $D_a(\zeta)$ denotes the standard parabolic cylinder function, which satisfies

From [41], pp. 347–349, we know that, as ζ → ∞,

where Γ(⋅) is the Gamma function. Setting
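Weber’s equation D'' + (a + 1/2 − ζ²/4)D = 0 and the leading asymptotics D_a(ζ) ≈ ζ^a e^(−ζ²/4) for large positive ζ (the real-axis case of the expansion quoted from [41]) can be checked numerically with SciPy’s `scipy.special.pbdv`, which returns D_v(x) and its derivative for real order and real argument. The order a = 0.7 below is an arbitrary real test value. In the model problem the order is typically complex, which `pbdv` does not cover, so this is only a sanity check of the real-order formulas, not a computation of the model solution.

```python
import numpy as np
from scipy.special import pbdv

ORDER = 0.7  # arbitrary real test order (the model problem needs complex orders)


def weber_residual(zeta, order=ORDER, h=1e-3):
    """D'' + (order + 1/2 - zeta^2/4) D, with D'' from a central difference of D'."""
    d0, _ = pbdv(order, zeta)
    _, dp_right = pbdv(order, zeta + h)
    _, dp_left = pbdv(order, zeta - h)
    d2 = (dp_right - dp_left) / (2 * h)
    return d2 + (order + 0.5 - zeta**2 / 4) * d0


def asymptotic_ratio(zeta, order=ORDER):
    """D_order(zeta) divided by its leading term zeta**order * exp(-zeta**2 / 4)."""
    d, _ = pbdv(order, zeta)
    return d / (zeta**order * np.exp(-zeta**2 / 4))


ratio = asymptotic_ratio(10.0)
two_term = 1 - ORDER * (ORDER - 1) / (2 * 10.0**2)  # next term of the expansion
print(weber_residual(1.3), ratio, two_term)
```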

Lemma 3.7.

The 2 × 2 matrices $\Psi_{11}(\lambda)$, $\Psi_{22}(\lambda)$ and $\beta_{12}\beta_{21}$ are all diagonal and have the following forms:

Proof.

We first assume that

which implies

Set

Note that

which contradicts the assumptions. To sum up, we obtain

which implies that

and deduce equations analogous to those in (3.108) for

Combining this with the asymptotic expansion (3.107), we arrive at

Finally, one can derive

From (3.94) and (3.96), we obtain

where

so that

Using (3.95) and (3.105), we arrive at

For

so that

Again by (3.95) and (3.105), we deduce

Along the ray

which implies that

and hence

By (3.106), we write

It follows from (2.35) that

For convenience, we denote

By (2.9) and (3.123), a direct computation shows that

We finally obtain the main result in Theorem 1.1 from (2.35), (3.84), (3.91), (3.122) and (3.127).

Funding source: National Natural Science Foundation of China

Award Identifier / Grant number: 12201572

Award Identifier / Grant number: 11931017

Award Identifier / Grant number: 12171439

- Research ethics: Not applicable.

- Author contributions: The authors have accepted responsibility for the entire content of this manuscript and approved its submission.

- Competing interests: The authors state no conflict of interest.

- Research funding: The research of all authors is supported by National Natural Science Foundation of China (Grant Nos. 12201572, 11931017, 12171439).

- Data availability: Not applicable.

Appendix A

Proof of Theorem 3.1.

We first consider the case $\lambda \ge \lambda_0$; the other cases are similar. In this case, we have

By Taylor’s formula, we have

and define

Then, we see that

For convenience, we assume that m is of the form

Here we set

rescale the phase

and utilizing the formula

obtain

Writing λ as $\lambda = \lambda_R + i\lambda_I$, where

The signature table for

By the Fourier inversion theorem, we have that

where

It follows from (A.2), (A.4) and (A.6) that

where

from which we find that

Then, we obtain

By the Plancherel formula [42],

Splitting

we can verify that

On the other hand, for λ on the line

Noting

we have

Let l be an arbitrary positive integer, and let m = 4n + 1 be so large that (3n + 2)/4 − 1/2 and n/2 are both greater than l. This completes the proof in the case $\lambda \ge \lambda_0$.
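The choice of m in the last step is simple arithmetic: since (3n + 2)/4 − 1/2 = 3n/4, the binding condition is n/2 > l, so the smallest admissible n is 2l + 1 and the corresponding m = 4n + 1 is 8l + 5. The brute-force check below confirms this for small l with exact rational arithmetic; it only illustrates the bookkeeping and is not part of the original argument (the function names are hypothetical).

```python
from fractions import Fraction


def smallest_admissible_n(l):
    """Smallest n >= 1 with (3n + 2)/4 - 1/2 > l and n/2 > l (exact rational arithmetic)."""
    n = 1
    while not (Fraction(3 * n + 2, 4) - Fraction(1, 2) > l and Fraction(n, 2) > l):
        n += 1
    return n


def smallest_admissible_m(l):
    """Corresponding m = 4n + 1."""
    return 4 * smallest_admissible_n(l) + 1


print([smallest_admissible_m(l) for l in range(1, 6)])  # [13, 21, 29, 37, 45]
```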

The signature table for Re

Proof of Theorem 3.4.

Using the identity

where

and $\delta_{\alpha\beta}$ denotes the Kronecker delta, one can verify that

Hence, we have

Now we consider the second integral expression. By the triangle inequality, we have

Observe that

Finally, note that

which implies that

Then, we consider the first integral expression

To estimate

Observe that

and

The other cases can be similarly proved. Therefore, we arrive at

Combining this with Lemma 3.4 completes the proof.

Proof of Theorem 3.5.

We first consider

where

where

By the definition of ρ(λ) in (3.15), we have

where adj γ†(λ*) denotes the adjugate (classical adjoint) of the matrix γ†(λ*). By an analysis similar to that in the proof of Theorem 3.1, we split

Furthermore, f(λ) can be separated into

where $f_1(\lambda)$ is composed of terms of the type $h_1(\lambda)$ and

It is worth mentioning that

and a direct calculation shows that

By Cauchy’s theorem, we can compute $|A_3|$ along the contour $L_t$ instead of the interval

Assembling the above estimates, we finally obtain

The oriented contour $L_t$.

References

[1] G. P. Agrawal, Nonlinear Fiber Optics, San Diego, Academic Press, 2002.

[2] Y. Kodama, “Optical solitons in a monomode fiber,” J. Stat. Phys., vol. 39, no. 5–6, pp. 597–614, 1985. https://doi.org/10.1007/bf01008354.

[3] E. Mjølhus, “On the modulational instability of hydromagnetic waves parallel to the magnetic field,” J. Plasma Phys., vol. 16, no. 3, pp. 321–334, 1976. https://doi.org/10.1017/s0022377800020249.

[4] D. J. Kaup and A. C. Newell, “An exact solution for a derivative nonlinear Schrödinger equation,” J. Math. Phys., vol. 19, no. 28, pp. 798–801, 1978. https://doi.org/10.1063/1.523737.

[5] N. N. Huang and Z. Y. Chen, “Alfvén solitons,” J. Phys. A, vol. 23, no. 4, pp. 439–453, 1990. https://doi.org/10.1088/0305-4470/23/4/014.

[6] N. Hayashi and T. Ozawa, “On the derivative nonlinear Schrödinger equation,” Phys. D, vol. 55, no. 1–2, pp. 14–36, 1992. https://doi.org/10.1016/0167-2789(92)90185-p.

[7] X. G. Geng, Z. Li, B. Xue, and L. Guan, “Explicit quasi-periodic solutions of the Kaup–Newell hierarchy,” J. Math. Anal. Appl., vol. 425, no. 2, pp. 1097–1112, 2015. https://doi.org/10.1016/j.jmaa.2015.01.021.

[8] L. K. Arruda and J. Lenells, “Long-time asymptotics for the derivative nonlinear Schrödinger equation on the half-line,” Nonlinearity, vol. 30, no. 11, pp. 4141–4172, 2017. https://doi.org/10.1088/1361-6544/aa84c6.

[9] A. V. Kitaev and A. H. Vartanian, “Leading-order temporal asymptotics of the modified nonlinear Schrödinger equation: solitonless sector,” Inverse Probl., vol. 13, no. 5, pp. 1311–1339, 1997. https://doi.org/10.1088/0266-5611/13/5/014.

[10] J. Xu, E. G. Fan, and Y. Chen, “Long-time asymptotic for the derivative nonlinear Schrödinger equation with step-like initial value,” Math. Phys. Anal. Geom., vol. 16, no. 3, pp. 253–288, 2013. https://doi.org/10.1007/s11040-013-9132-3.

[11] T. Kanna and K. Sakkaravarthi, “Multicomponent coherently coupled and incoherently coupled solitons and their collisions,” J. Phys. A, vol. 44, no. 28, p. 285211, 2011. https://doi.org/10.1088/1751-8113/44/28/285211.

[12] X. G. Geng, R. M. Li, and B. Xue, “A vector general nonlinear Schrödinger equation with (m + n) components,” J. Nonlinear Sci., vol. 30, no. 3, pp. 991–1013, 2020. https://doi.org/10.1007/s00332-019-09599-4.

[13] X. G. Geng, Y. Y. Zhai, and H. H. Dai, “Algebro-geometric solutions of the coupled modified Korteweg–de Vries hierarchy,” Adv. Math., vol. 263, pp. 123–153, 2014. https://doi.org/10.1016/j.aim.2014.06.013.

[14] X. G. Geng and B. Xue, “An extension of integrable peakon equations with cubic nonlinearity,” Nonlinearity, vol. 22, no. 8, pp. 1847–1856, 2009. https://doi.org/10.1088/0951-7715/22/8/004.

[15] X. G. Geng and B. Xue, “A three-component generalization of Camassa–Holm equation with N-peakon solutions,” Adv. Math., vol. 226, no. 1, pp. 827–839, 2011. https://doi.org/10.1016/j.aim.2010.07.009.

[16] M. X. Jia, X. G. Geng, and J. Wei, “Algebro-geometric quasi-periodic solutions to the Bogoyavlensky lattice 2(3) equations,” J. Nonlinear Sci., vol. 32, no. 6, p. 98, 2022. https://doi.org/10.1007/s00332-022-09858-x.

[17] R. M. Li and X. G. Geng, “Rogue periodic waves of the sine-Gordon equation,” Appl. Math. Lett., vol. 102, p. 106147, 2020. https://doi.org/10.1016/j.aml.2019.106147.

[18] R. M. Li and X. G. Geng, “On a vector long wave-short wave-type model,” Stud. Appl. Math., vol. 144, no. 2, pp. 164–184, 2020. https://doi.org/10.1111/sapm.12293.

[19] X. G. Geng, R. M. Li, and B. Xue, “A vector Geng-Li model: new nonlinear phenomena and breathers on periodic background waves,” Phys. D, vol. 434, p. 133270, 2022. https://doi.org/10.1016/j.physd.2022.133270.

[20] J. Wei, X. G. Geng, and X. Zeng, “The Riemann theta function solutions for the hierarchy of Bogoyavlensky lattices,” Trans. Am. Math. Soc., vol. 371, no. 2, pp. 1483–1507, 2019. https://doi.org/10.1090/tran/7349.

[21] P. A. Deift and X. Zhou, “A steepest descent method for oscillatory Riemann–Hilbert problems. Asymptotics for the MKdV equation,” Ann. Math., vol. 137, no. 2, pp. 295–368, 1993. https://doi.org/10.2307/2946540.

[22] A. B. de Monvel, A. Kostenko, D. Shepelsky, and G. Teschl, “Long-time asymptotics for the Camassa–Holm equation,” SIAM J. Math. Anal., vol. 41, no. 4, pp. 1559–1588, 2009. https://doi.org/10.1137/090748500.

[23] A. B. de Monvel, A. Its, and V. Kotlyarov, “Long-time asymptotics for the focusing NLS equation with time-periodic boundary condition on the half-line,” Commun. Math. Phys., vol. 290, no. 1, pp. 479–522, 2009. https://doi.org/10.1007/s00220-009-0848-7.

[24] P. J. Cheng, S. Venakides, and X. Zhou, “Long-time asymptotics for the pure radiation solution of the sine-Gordon equation,” Commun. Part. Differ. Equ., vol. 24, no. 7–8, pp. 1195–1262, 1999. https://doi.org/10.1080/03605309908821464.

[25] P. Deift, A. R. Its, and X. Zhou, Long-Time Asymptotics for Integrable Nonlinear Wave Equations, Berlin, Springer, 1993. https://doi.org/10.1007/978-3-642-58045-1_10.

[26] P. A. Deift and J. Park, “Long-time asymptotics for solutions of the NLS equation with a delta potential and even initial data,” Int. Math. Res. Not., vol. 2011, no. 24, pp. 5505–5624, 2011. https://doi.org/10.1093/imrn/rnq282.

[27] K. Grunert and G. Teschl, “Long-time asymptotics for the Korteweg–de Vries equation via nonlinear steepest descent,” Math. Phys. Anal. Geom., vol. 12, no. 2, pp. 287–324, 2009. https://doi.org/10.1007/s11040-009-9062-2.

[28] A. V. Kitaev and A. H. Vartanian, “Asymptotics of solutions to the modified nonlinear Schrödinger equation: solution on a nonvanishing continuous background,” SIAM J. Math. Anal., vol. 30, no. 4, pp. 787–832, 1999. https://doi.org/10.1137/s0036141098332019.

[29] A. H. Vartanian, “Higher order asymptotics of the modified non-linear Schrödinger equation,” Commun. Part. Differ. Equ., vol. 25, no. 5–6, pp. 1043–1098, 2000. https://doi.org/10.1080/03605300008821541.

[30] H. Yamane, “Long-time asymptotics for the defocusing integrable discrete nonlinear Schrödinger equation,” J. Math. Soc. Jpn, vol. 66, no. 3, pp. 765–803, 2014. https://doi.org/10.2969/jmsj/06630765.

[31] A. B. de Monvel, J. Lenells, and D. Shepelsky, “Long-time asymptotics for the Degasperis–Procesi equation on the half-line,” Ann. Inst. Fourier, vol. 69, no. 1, pp. 171–230, 2019. https://doi.org/10.5802/aif.3241.

[32] X. G. Geng and H. Liu, “The nonlinear steepest descent method to long-time asymptotics of the coupled nonlinear Schrödinger equation,” J. Nonlinear Sci., vol. 28, no. 2, pp. 739–763, 2018. https://doi.org/10.1007/s00332-017-9426-x.

[33] X. G. Geng, M. M. Chen, and K. D. Wang, “Long-time asymptotics of the coupled modified Korteweg–de Vries equation,” J. Geom. Phys., vol. 142, pp. 151–167, 2019. https://doi.org/10.1016/j.geomphys.2019.04.009.

[34] X. G. Geng, K. D. Wang, and M. M. Chen, “Long-time asymptotics for the spin-1 Gross–Pitaevskii equation,” Commun. Math. Phys., vol. 382, no. 1, pp. 585–611, 2021. https://doi.org/10.1007/s00220-021-03945-y.

[35] L. Huang and J. Lenells, “Asymptotics for the Sasa–Satsuma equation in terms of a modified Painlevé II transcendent,” J. Differ. Equ., vol. 268, no. 12, pp. 7480–7504, 2020. https://doi.org/10.1016/j.jde.2019.11.062.

[36] H. Liu, X. G. Geng, and B. Xue, “The Deift–Zhou steepest descent method to long-time asymptotics for the Sasa–Satsuma equation,” J. Differ. Equ., vol. 265, no. 11, pp. 5984–6008, 2018. https://doi.org/10.1016/j.jde.2018.07.026.

[37] J. Shen, X. G. Geng, and B. Xue, “Modulation instability and dynamics for the Hermitian symmetric space derivative nonlinear Schrödinger equation,” Commun. Nonlinear Sci. Numer. Simul., vol. 78, p. 104877, 2019. https://doi.org/10.1016/j.cnsns.2019.104877.

[38] M. J. Ablowitz and P. A. Clarkson, Solitons, Nonlinear Evolution Equations and Inverse Scattering, Cambridge, Cambridge University Press, 1991. https://doi.org/10.1017/CBO9780511623998.

[39] M. J. Ablowitz and A. S. Fokas, Complex Variables: Introduction and Applications, Cambridge, Cambridge University Press, 2003. https://doi.org/10.1017/CBO9780511791246.

[40] R. Beals and R. R. Coifman, “Scattering and inverse scattering for first order systems,” Commun. Pure Appl. Math., vol. 37, no. 1, pp. 39–90, 1984. https://doi.org/10.1002/cpa.3160370105.

[41] E. T. Whittaker and G. N. Watson, A Course of Modern Analysis, Cambridge, Cambridge University Press, 1927.

[42] W. Rudin, Functional Analysis, New York, McGraw-Hill, 1973.

© 2024 the author(s), published by De Gruyter, Berlin/Boston

This work is licensed under the Creative Commons Attribution 4.0 International License.

